CHAPTER 5

Virtualization Components

In this chapter, you will learn about

• Hypervisor

• Virtualization host

• Virtual machine

• Virtualized infrastructure service elements

Virtualization technologies have grown substantially over the years. In the past, many major software vendors would not support their applications if they were run in a virtualized environment. Now virtualization is the standard when it comes to creating an efficient data center, and almost all application vendors support running their applications in a virtualized environment. Virtualization allows a cloud provider to deliver resources to a cloud consumer on demand rather than wasting time or losing opportunities because IT could not keep up with demand. Resource flexibility and scalability are key elements of cloud computing and some of the primary reasons for its rapid adoption.

The IT world has shifted from a one-to-one application-to-hardware model to a many-to-one model. Virtualization uses one physical computer to run multiple virtual servers, each with its own independent operating system and applications. Virtualization has made the IT industry more efficient. Virtualization results in better utilization of existing computing assets and technology cost savings.

Virtualization plays a key role in cloud computing by empowering cloud providers to deliver lower-cost hosting environments to cloud consumers. With virtualization, an organization can do more with less physical hardware and can deliver applications to its users faster than ever.

Virtualization makes the most of the physical hardware by running multiple virtual servers on one physical server. This consolidates infrastructure and reduces the total cost of ownership (TCO) by cutting data center space, power consumption, and cooling costs.

One key piece of software that has allowed the shift to virtualization is the hypervisor. Know this term for the exam. This chapter begins by looking at the various types of hypervisors and how they operate.

Hypervisor

A hypervisor is software that creates and manages the virtual infrastructure, including virtual switches (vSwitch), virtual CPUs (vCPU), virtual memory, virtual disks, and virtual machines.

The hypervisor is the entity that allows multiple operating systems to run on a single physical machine. The computer running the hypervisor is defined as the “host” computer. The virtual machines that are running on the host are called “guest” machines. The hypervisor is responsible for managing the guest operating system resources, including memory, CPU, and other resources that the guest operating system might need.

There are currently two distinct types of hypervisors: type 1 and type 2. Understanding the two types of hypervisors is critical to creating a successful virtualization environment and integrating that environment with the cloud computing models discussed in Chapter 1.

Type 1

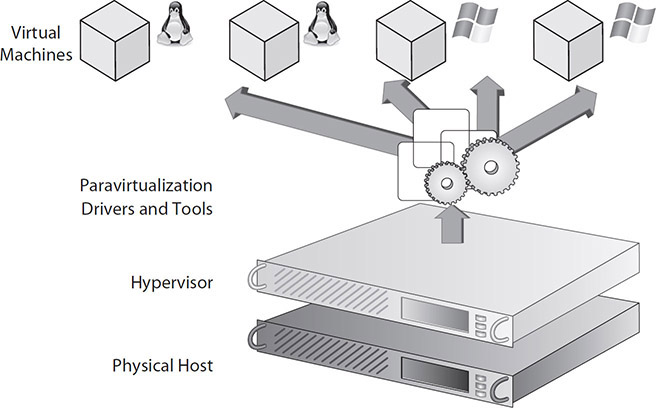

A type 1 hypervisor is created and deployed on a bare-metal installation. The first thing installed on a type 1 hypervisor host is the hypervisor itself; it acts as the operating system for the bare-metal machine.

Type 1 hypervisor software communicates directly with the physical server hardware and boots before any guest operating system (OS). Almost all of the major virtualization vendors, including VMware, Microsoft, Citrix, Oracle, and Red Hat, currently use type 1 hypervisors. Figure 5-1 shows an example of what a type 1 hypervisor looks like. The image is meant to give you a graphical representation of the layered design, with the hypervisor layer building on top of the hardware layer.

Figure 5-1 The layered design of a type 1 hypervisor

Type 2

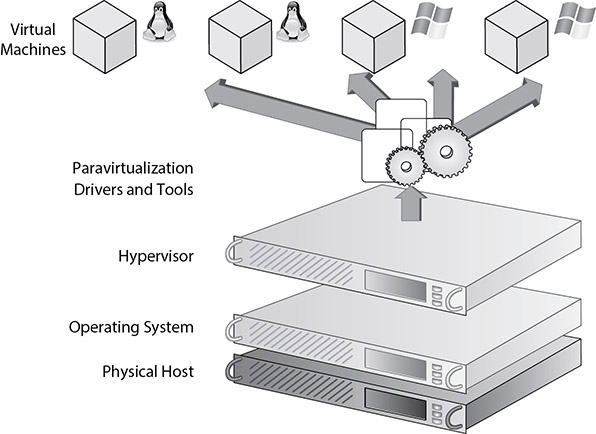

Unlike a type 1 hypervisor that is loaded on a bare-metal server, a type 2 hypervisor is loaded on top of an already existing operating system installation. For example, a system running Microsoft Windows 10 might have VMware Workstation installed on top of that operating system.

Type 2 hypervisors add a layer that resource requests must traverse on their way to and from the guest virtual machines, because the host operating system sits between the hypervisor and the hardware. A type 2 hypervisor relies on the operating system and cannot start until the operating system is loaded and operational. Since type 2 relies heavily on the underlying operating system, if that operating system crashes or doesn't boot, all of the guest virtual machines are affected. This extra layer and dependency make type 2 hypervisors much less efficient than type 1 hypervisors.

Type 1 hypervisors are the best choice for high performance, scalability, and reliability, since they operate directly on top of the host hardware and expose hardware resources to virtual machines. This results in less overhead and less complexity.

Type 2 hypervisors sit on top of the operating system, making the virtualized environment less scalable and more complex to manage. Figure 5-2 gives a graphical representation of a type 2 hypervisor. Notice the difference in layering as compared to the type 1 hypervisor.

Figure 5-2 Image of a type 2 hypervisor

Proprietary

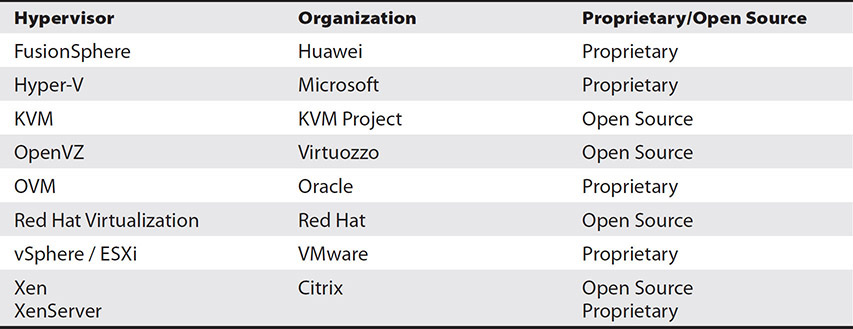

When a company is choosing which type of hypervisor to use, it needs to understand the difference between proprietary and open-source hypervisors. A proprietary hypervisor is developed and licensed under an exclusive legal right of the copyright holder. Proprietary hypervisors are created and distributed under a license agreement to the customer. Hyper-V, vSphere, OVM, and FusionSphere are examples of proprietary hypervisors.

Open Source

Some say that the open-source market is growing and advancing faster than the proprietary product market. It can also be argued that the open-source hypervisors are more secure than the proprietary hypervisors because of the underlying operating system running the hypervisor.

An open-source hypervisor is provided at no cost, yet it delivers the same basic functionality as a proprietary hypervisor to run multiple guest virtual machines on a single host. Some examples of open-source hypervisors are Citrix Xen, kernel-based virtual machine (KVM), and OpenVZ. However, many differences arise when evaluating advanced feature sets, migration capabilities, performance, scalability, and integration with other virtualization environments.

Choosing between proprietary and open-source hypervisors can be a difficult decision. Some of the factors that need to be considered are security, the reliability of the vendor, and the operating systems that are supported by the hypervisor. Some organizations also choose not to use an open-source hypervisor because their IT staff is not familiar with the interface. For example, an organization may choose Microsoft Hyper-V over Citrix Xen because its IT staff is already familiar with the Microsoft product line. This shortens the learning curve for managing the system compared with adopting an unfamiliar open-source hypervisor.

Table 5-1 shows some of the most popular hypervisors, the companies or groups behind them, and whether they are proprietary or open source. The list is arranged alphabetically.

Table 5-1 Popular Hypervisors

Consumer vs. Enterprise

Hypervisors are available in many flavors to meet the varied needs of everyone from end users, to developers, to enterprise data centers. Many of the current desktop operating systems come with a virtualization option already built in. These operating systems offer a consumer hypervisor that allows users to create virtual machines on a standard PC. For example, Microsoft Windows 8 and later versions come packaged with Hyper-V, allowing desktop-level hardware to run a virtual environment. Similarly, KVM can be added to Linux by pulling down a few packages.

However, not all hypervisors are the same. Consumer and enterprise use cases differ significantly. Thus, when selecting a hypervisor, it is essential to consider the user’s or group’s specific virtualization goals.

The enterprise virtualization goal is to host the required virtual servers and their associated data and applications on the least amount of hardware to provide acceptable performance and redundancy. Enterprises want to run many virtual machines on each physical machine. Those virtual machines, running independent operating systems, must support many concurrent users and a variety of applications and workloads. This is the job for a type 1 hypervisor because it is built to effectively handle this type of use case and workload.

In comparison, the average consumer’s goal is to configure a virtual environment on a desktop or laptop machine that was not designed primarily for the purpose of virtualization. The device may serve other purposes such as gaming, word processing, or Internet browsing in addition to functioning as a hypervisor. Consumers are typically not looking to support a large number of users.

Desktop virtualization is popular with developers and IT professionals. Developers often test out applications locally in virtual machines without the complexity of managing a dedicated hypervisor. IT professionals may want to test new operating systems or applications in an isolated environment separate from the operating system running on their desktop. Similarly, Linux or Mac users may use virtualization such as KVM or Parallels to run another operating system so that they can run OS-specific applications or games.

The type 2 hypervisor is more likely to fit consumers’ needs because type 2 hypervisors allow the desktop to continue to run the original operating system and its applications side by side with virtualized operating systems and applications.

Virtualization Host

Now that you understand what a hypervisor is and how it interacts with a computer, you need to understand the virtualization host that runs the hypervisor software. The virtualization host is the system that is installed first and then hosts or contains the guest virtual machines. The host server provides all of the underlying hardware and compute resources for the guest virtual machines, including memory, CPU, hard disk, and network I/O. Since the host machine provides the resources for the guest, it must contain at least enough hardware resources to meet the minimum requirements for its guest virtual machines.

A virtualization host computer allows different operating systems to coexist on the same host computer. For example, you could have a virtual machine running Microsoft Windows Server 2016 and another virtual machine running your favorite Linux distro. The first step in configuring a virtualization host is to confirm that your system meets the virtualization host’s requirements. Hardware requirements include BIOS configuration, sufficient memory, CPU, and at least one NIC. Figure 5-3 shows an example of a virtualization host computer.

Figure 5-3 A graphical representation of a virtualization host

Hardware-Assisted Virtualization

Hardware-assisted virtualization enables efficient full virtualization, which is used to simulate a complete hardware environment, or virtual machine. Rather than relying on software alone, it uses architectural support built into the host computer's hardware, primarily its processor, to run guest virtual machines more efficiently. Both AMD and Intel support hardware-assisted virtualization.

If an organization wants to find out whether its hardware supports hardware-assisted virtualization, an excellent place to start is with the AMD and Intel websites, which list the processors that support it. Most processors manufactured in recent years have hardware-assisted virtualization built in, although some older and lower-end models do not, so it is worth checking the specific model.

If an organization has already purchased the hardware or wants to repurpose older hardware as a virtualization host, it can download and run free software tools to check whether the hardware supports hardware-assisted virtualization. For example, if a company wants to use an older server as a Microsoft Hyper-V host, it can use a free Microsoft tool to determine whether that server supports hardware-assisted virtualization and Hyper-V.
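On a Linux host, one quick check is to look for the CPU feature flags the kernel reports in /proc/cpuinfo: `vmx` indicates Intel VT-x and `svm` indicates AMD-V. The Python sketch below assumes an x86 Linux system, and the function name `detect_hw_virtualization` is hypothetical. A missing flag can mean the processor lacks the feature or, on some kernels, that it has been disabled in firmware, so treat the result as a starting point rather than a definitive answer.

```python
from pathlib import Path
from typing import Set

# x86 CPU flags that indicate hardware-assisted virtualization
VIRT_FLAGS = {"vmx": "Intel VT-x", "svm": "AMD-V"}


def detect_hw_virtualization(cpuinfo_text: str) -> Set[str]:
    """Return the virtualization extensions listed on 'flags' lines of cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        # Lines look like "flags\t\t: fpu vme de ... vmx ..."
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            for flag in value.split():
                if flag in VIRT_FLAGS:
                    found.add(VIRT_FLAGS[flag])
    return found


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")  # only present on Linux
    if path.exists():
        result = detect_hw_virtualization(path.read_text())
        print(result or "No VT-x/AMD-V flag found (unsupported or disabled)")
    else:
        print("/proc/cpuinfo is not available on this system")
```

Parsing text rather than reading the file inside the function keeps the check easy to test against saved cpuinfo output from other hosts.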

Single Root Input/Output Virtualization

Single root input/output virtualization (SR-IOV) allows a single PCI Express device to be shared by multiple virtual machines. Devices plugged into the PCI Express bus, such as a graphics card or network card, expose physical functions (PFs) that are managed by the virtualization host. Virtual machines map to virtual functions (VFs), which share the underlying hardware of a PF.

SR-IOV provides a PCI Express Requester ID for each PF and VF. This allows different access requests to be identified so that access can be managed for the resource. SR-IOV provides for the sharing of PCI Express resources without having to go through the virtualization stack. This dramatically improves performance and reduces overhead.
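A PCI Express Requester ID is a 16-bit value made of an 8-bit bus number, a 5-bit device number, and a 3-bit function number. Under the SR-IOV specification, each VF's ID is derived from its PF's ID plus a First VF Offset and a VF Stride that the device advertises. The Python sketch below shows only that arithmetic; the helper names and the offset and stride values in the example are hypothetical, and it ignores refinements such as Alternative Routing-ID Interpretation (ARI).

```python
def bdf_to_rid(bus: int, device: int, function: int) -> int:
    """Pack bus (8 bits), device (5 bits), and function (3 bits) into a 16-bit ID."""
    return (bus << 8) | (device << 3) | function


def rid_to_bdf(rid: int) -> str:
    """Format a 16-bit Requester ID in the familiar bus:device.function form."""
    bus = rid >> 8
    device = (rid >> 3) & 0x1F
    function = rid & 0x7
    return f"{bus:02x}:{device:02x}.{function:x}"


def vf_rid(pf_rid: int, vf_number: int, first_vf_offset: int, vf_stride: int) -> int:
    """Requester ID of VF number vf_number (VFs are numbered from 1)."""
    if vf_number < 1:
        raise ValueError("VF numbers start at 1")
    return pf_rid + first_vf_offset + (vf_number - 1) * vf_stride


if __name__ == "__main__":
    pf = bdf_to_rid(0x03, 0x00, 0)  # PF at 03:00.0
    # Hypothetical capability values; real ones are read from the device.
    for n in (1, 2, 3):
        print(f"VF{n}:", rid_to_bdf(vf_rid(pf, n, first_vf_offset=0x80, vf_stride=2)))
```

With these example values, the three VFs appear as 03:10.0, 03:10.2, and 03:10.4, each with its own ID that the platform can use to tell their requests apart.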

BIOS

The Basic Input/Output System (BIOS) is software residing on a ROM chip or a flash memory chip installed by the manufacturer. The system mainboard or motherboard has a system BIOS, and some advanced components such as RAID controllers and HBAs have their own BIOSs as well.

The system BIOS determines which features a computer supports without having to access any additional software that is loaded on the computer. For example, the system BIOS can contain the software needed to control the keyboard, the display settings, disk drives, USB settings, power options, and multiple other options. The system BIOS allows a computer to boot itself and is available even if the hard disks in the computer fail or are corrupted, because it is self-contained on the chip.

So what does the BIOS have to do with virtualization? The system BIOS plays a key role when enabling virtualization on a host computer. For a modern computer to act as a host for guest virtual machines, the operating system relies on the system BIOS to support and enable hardware-assisted virtualization. Some older computers do not have this feature available in the system BIOS. Other computers might need a firmware update for the system BIOS before the feature can be enabled. However, most newer servers and desktop computers from mainstream manufacturers support this feature.

With the advancement in virtualization and desktop computers, it is no longer a requirement to have a host machine running server-class hardware. Much of the desktop hardware now natively supports hardware-assisted virtualization.

A BIOS has several limitations that necessitated its eventual replacement with UEFI, discussed next. A BIOS is limited to 16-bit processing and 1MB of addressable memory. The BIOS can only boot to drives that are 2.2TB or smaller due to limitations in the master boot record (MBR).

UEFI

The Unified Extensible Firmware Interface (UEFI) is a replacement for BIOS that was introduced in 2007. UEFI is supported on Windows Vista SP1 and newer Windows operating systems, as well as most Linux versions, including Ubuntu, Fedora, Red Hat Enterprise, CentOS, and OpenSUSE.

UEFI addresses the limitations in BIOS by allowing both 32-bit and 64-bit processing, which can significantly improve system boot times. Because it does not run in 16-bit real mode, UEFI can also address more than 1MB of memory during the boot process. UEFI supports the GUID Partition Table (GPT) in place of the MBR, which allows it to boot from drives larger than 2.2TB. UEFI also supports secure boot, a technology that performs OS integrity checking. This helps prevent rootkits from starting up a modified version of the OS.
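The BIOS limits described above come from address widths. BIOS code runs in 16-bit real mode with a 20-bit address space, and MBR partition entries store sector numbers as 32-bit values, while GPT uses 64-bit sector addresses. The Python snippet below just does that arithmetic, assuming the traditional 512-byte sector size; the function names are illustrative.

```python
SECTOR_BYTES = 512  # traditional logical sector size (assumption)


def address_space_bytes(address_bits: int) -> int:
    """Bytes reachable with a given number of address bits."""
    return 2 ** address_bits


def max_disk_bytes(lba_bits: int, sector_bytes: int = SECTOR_BYTES) -> int:
    """Largest disk addressable when sector numbers are lba_bits wide."""
    return (2 ** lba_bits) * sector_bytes


if __name__ == "__main__":
    print(address_space_bytes(20))  # 1048576 bytes: the 1MB real-mode limit
    print(max_disk_bytes(32))       # 2199023255552 bytes: about 2.2TB for MBR
    print(max_disk_bytes(64))       # GPT's 64-bit sector addresses raise the ceiling enormously
```

Drives with 4,096-byte logical sectors raise the MBR ceiling proportionally, which is why the sector size is a parameter rather than a constant baked into the formula.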

Firmware Configurations

Firmware is a set of instructions that are programmed for a specific hardware device. The firmware tells the hardware device how to communicate with the computer system. Firmware upgrades can be performed on many devices, including motherboards, network cards, and hard drives. Firmware upgrades are generally carried out so that the hardware can support new features and functionality. For example, you might do a firmware upgrade on a network card so that the card is supported in a new operating system.

In some cases, it might be necessary to do a firmware upgrade to a computer’s BIOS for it to support hardware-assisted virtualization. This would generally be done on older hardware, as most new hardware purchased today already supports hardware-assisted virtualization. Motherboard manufacturers place firmware updates and the software needed to update the BIOS firmware on their websites for customers to download.

Hyperconverged Systems and Infrastructure

Hyperconverged infrastructure (HCI) is a virtualization effort to reduce complexity, improve flexibility, and improve the manageability of systems. HCI combines compute, storage, graphics, and networking hardware into a single system. By contrast, converged infrastructure virtualizes systems that still use dedicated hardware for compute, networking, or storage functions. HCI reduces complexity because converged infrastructure can be challenging to configure. Converged infrastructure components are sometimes only compatible with equipment from a select few vendors. Upgrading such equipment often involves upgrading several other connected systems as well. All too often, a single system can hold back an entire upgrade because newer technologies on the compute or storage side are not supported by other parts of the infrastructure.

HCI can reduce the need for multiple teams to support the virtualization solution, reducing the number of people necessary to manage the system, and improving communication because team members are working from the same frame of reference. Converged infrastructure may require the efforts of a storage team to manage the SAN, a network infrastructure team to manage network connectivity, a server team to manage the server hardware, and a virtualization team to handle the host and VMs. Conversely, HCI can be managed by a more generalist team, rather than multiple teams of specialists.

HCI can operate on more generic hardware because complex hardware configurations are instead performed within software. This software also allows resources to be shared more easily across the HCI. Virtualization software in HCI combines the underlying hardware resources such as storage, compute, networking, or graphics hardware into pools that can be easily allocated to virtual machines.
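To make the pooling idea concrete, here is a deliberately simplified Python model in which each HCI node contributes its CPU cores, memory, and storage to one shared pool, and VM requests are checked against the pool's remaining capacity. The class and field names are hypothetical, and real HCI software also decides which node runs each VM and how storage is replicated, which this sketch ignores.

```python
from dataclasses import dataclass, field
from typing import Dict, List

RESOURCES = ("cpu_cores", "memory_gb", "storage_gb")


@dataclass
class Node:
    """One HCI node contributing its local hardware to the cluster."""
    name: str
    cpu_cores: int
    memory_gb: int
    storage_gb: int


@dataclass
class ResourcePool:
    """Aggregates every node's resources into one pool that VMs draw from."""
    nodes: List[Node] = field(default_factory=list)
    used: Dict[str, int] = field(default_factory=lambda: {r: 0 for r in RESOURCES})

    def total(self) -> Dict[str, int]:
        return {r: sum(getattr(node, r) for node in self.nodes) for r in RESOURCES}

    def free(self) -> Dict[str, int]:
        total = self.total()
        return {r: total[r] - self.used[r] for r in RESOURCES}

    def allocate(self, **request: int) -> bool:
        """Reserve resources for a VM if the pool has room; otherwise change nothing."""
        unknown = set(request) - set(RESOURCES)
        if unknown:
            raise ValueError(f"unknown resources: {sorted(unknown)}")
        free = self.free()
        if any(request.get(r, 0) > free[r] for r in RESOURCES):
            return False
        for r in RESOURCES:
            self.used[r] += request.get(r, 0)
        return True


if __name__ == "__main__":
    pool = ResourcePool([Node("node-1", 16, 128, 2000), Node("node-2", 16, 128, 2000)])
    print(pool.total())  # {'cpu_cores': 32, 'memory_gb': 256, 'storage_gb': 4000}
    print(pool.allocate(cpu_cores=8, memory_gb=64, storage_gb=500))  # True
    print(pool.free())   # {'cpu_cores': 24, 'memory_gb': 192, 'storage_gb': 3500}
```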

VM Sizing Considerations

Now that you understand the prerequisites to creating a host machine, you need to know how to properly size a host machine. Host machines may be physical servers located in an organization’s data center, or they could be cloud hypervisors that the organization rents from a cloud vendor. Ensuring that the host machine can support at least the minimum number of guest virtual machines that the organization is trying to run is a critical step in creating a successful virtualization environment.

One of the many benefits of virtualization is the ability to provision virtual machines on the fly as the organization’s demands grow, making the purchase of additional hardware unnecessary. Suppose the host computer is not sized correctly. In that case, however, it is not possible to add virtual machines without adding compute resources to the host computer or purchasing additional resources from a cloud vendor.
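A first-pass sizing check comes down to one division per resource: for a standard VM profile, the number of guests a host can hold is limited by whichever resource runs out first. This Python sketch assumes one vCPU per physical core (no oversubscription, which is covered later in this chapter) and a hypothetical amount of memory reserved for the hypervisor itself; the function name and numbers are illustrative.

```python
from typing import Dict, Optional


def max_guests(
    host: Dict[str, int],
    vm_profile: Dict[str, int],
    reserved: Optional[Dict[str, int]] = None,
) -> int:
    """Number of identical VMs that fit on a host, limited by the scarcest resource."""
    reserved = reserved or {}
    limits = []
    for resource, per_vm in vm_profile.items():
        if per_vm <= 0:
            continue  # a resource the VM does not consume imposes no limit
        available = max(host.get(resource, 0) - reserved.get(resource, 0), 0)
        limits.append(available // per_vm)
    if not limits:
        raise ValueError("vm_profile must require a positive amount of some resource")
    return min(limits)


if __name__ == "__main__":
    host = {"cores": 32, "memory_gb": 256}
    profile = {"cores": 2, "memory_gb": 16}
    # Hypothetical 16GB held back for the hypervisor
    print(max_guests(host, profile, reserved={"memory_gb": 16}))  # 15: memory is the limit
```

In this example the cores would allow 16 guests but memory allows only 15, which is exactly the kind of imbalance a sizing exercise is meant to reveal before purchase.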

In this section, we will look at some of the primary resources assigned to virtual machines. Storage was already covered in earlier chapters, but this section covers the CPU, GPU, memory, and NIC.

CPU

The central processing unit (CPU) is the device in a computer system that performs the mathematical operations specified in computer code. When you break down computer operations to their most basic form, it is just math. The CPU, sometimes just referred to as the processor, is designed to perform such math quickly and efficiently. The first step to sizing the CPU on a host machine is purchasing the correct type and number of CPUs. Both AMD (AMD-V) and Intel (Intel VT) support virtualization, so the manufacturer is not as critical as the number of CPU cores and the CPUs’ speed. A multicore processor is a single physical CPU with two or more independent CPUs called cores.

Once the organization has defined the host computer processor, it needs to evaluate how to assign those CPU resources to the guest virtual machines. Not surprisingly, virtual machines use virtual CPUs (vCPUs), which can be added to a virtual machine when it is created. The number of vCPUs that the company should add is dependent on a number of factors, but it is possible to assign multiple vCPUs to a single virtual machine.

Simultaneous Multithreading

Simultaneous multithreading (SMT) is a technology that allows for a processor core to work on multiple independent threads at the same time. These threads do not have to be related to one another. Processors with SMT are capable of fetching instructions from multiple threads in a single CPU cycle. The CPU accomplishes SMT by creating multiple logical cores from its physical cores.

SMT allows a CPU to make better use of its resources and reduce overall power consumption compared with CPUs that do not support SMT. SMT reached mainstream x86 processors in 2002 with Intel's Pentium 4, and today many modern processors are equipped with SMT.

SMT increases the system's ability to run parallel processes, but whether performance actually increases with SMT depends on the application's ability to execute multiple steps on processors in parallel. SMT is not the same as having multiple cores in a CPU, because SMT threads share the core's pipeline, cache, and system bus interface instead of having dedicated cache and interfaces as distinct cores do.

SMT is beneficial in virtualization, allowing for higher oversubscription ratios of vCPUs to physical CPUs, discussed later in this chapter. Hypervisors with only a few processor cores will see the most significant advantage from SMT because they are likely running out of available processors to allocate to virtual machines.

Hyperthreading

Hyperthreading is a proprietary SMT technology for Intel CPUs. Hyperthreading creates two logical CPU cores for each physical CPU core that supports hyperthreading. It was introduced in 2002 with the Xeon server and Pentium 4 desktop processors. Hyperthreading requires operating system support for symmetric multiprocessing (SMP), a feature that all current operating systems support.
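Because hyperthreading presents two logical cores per physical core, the processor count an operating system or hypervisor reports is typically the physical core count multiplied by two. The small Python helper below (a hypothetical name, used only for illustration) shows the arithmetic; Python's standard `os.cpu_count()` returns the number of logical CPUs on the machine it runs on, which is why it usually reports twice the physical core count on a hyperthreaded system.

```python
import os


def logical_cpus(sockets: int, cores_per_socket: int, threads_per_core: int = 2) -> int:
    """Logical processors the OS sees: physical cores times hardware threads per core."""
    return sockets * cores_per_socket * threads_per_core


if __name__ == "__main__":
    # Two 8-core sockets with hyperthreading enabled (2 threads per core)
    print(logical_cpus(2, 8))  # 32
    # Logical CPUs on the current machine (may be None if undeterminable)
    print(os.cpu_count())
```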

Clock Speed

Clock speed, also known as clock rate, is the measurement of how many cycles per second the CPU operates at. Clock speed is measured in hertz (Hz), with modern processors running in the billions of cycles per second, measured in gigahertz (GHz). The computer motherboard has a front-side bus (FSB) speed that is also measured in Hz. The processor, memory, and other components on the motherboard run at some multiple of this speed, so the CPU clock speed is the FSB speed times a multiplier. In Figure 5-4, you can see the results of the CPU-Z tool for an AMD Ryzen 7 3800X. CPU-Z labels the FSB speed simply as bus speed, and here it is operating at 99.19 MHz. The CPU is running at 41 times the FSB speed, so its clock speed is 4066.76 MHz, or about 4.07 GHz.
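The relationship in Figure 5-4 is a single multiplication. The Python snippet below reproduces it from the two values CPU-Z displays. Multiplying 99.19 MHz by 41 gives about 4066.79 MHz; the small gap from CPU-Z's 4066.76 MHz is presumably because the bus speed shown on screen is rounded to two decimal places.

```python
def core_clock_mhz(bus_mhz: float, multiplier: float) -> float:
    """CPU core clock = bus clock x multiplier."""
    return bus_mhz * multiplier


if __name__ == "__main__":
    clock = core_clock_mhz(99.19, 41)
    print(f"{clock:.2f} MHz = {clock / 1000:.2f} GHz")  # 4066.79 MHz = 4.07 GHz
```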

Figure 5-4 Processor specifications as shown in CPU-Z

Clock speed is important because it determines how much work the processor can perform in a given amount of time. The CPU performs one or more instructions in each clock cycle. Some operations will be more efficient than others, depending on how capable the processor is at breaking the operations down into steps and performing them with its available resources. Processors use instruction pipelines to streamline this process by defining common processor workflows.

Clock Speed vs. Cores

As a general virtualization rule, more cores are usually better than higher processor clock speeds. It is typically better to invest in more cores with more cache than faster CPU speed because this will allow computing resources to be distributed more effectively.

For example, imagine that you are setting up a new virtualization host and trying to choose the best processor for the workload between two alternatives. The first option is to put two 6-core processors running at 2.6 GHz into the host. The second option is to equip it with two 4-core processors running at 3.2 GHz. Between these two choices, the system with two 6-core processors is the better choice. This is because, with virtualization, the company can spread the virtual machine load across more CPU cores, which translates into faster and more consistent virtual machine performance. Also, if you do the math, two 6-core processors equal 12 cores running at 2.6 GHz, equaling 31.2 GHz of total processing power. In comparison, two 4-core processors equal 8 cores running at 3.2 GHz, equaling 25.6 GHz. However, even if the math favored the higher speed over cores, the best choice is still additional cores.
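The comparison above can be reproduced in a few lines of Python. Treat the aggregate-GHz figure as a rough rule of thumb only: it ignores cache size, instructions per clock, and turbo behavior, and, as the text notes, the extra cores are the stronger argument for a virtualization host. The function name is illustrative.

```python
def aggregate_ghz(sockets: int, cores_per_socket: int, ghz: float) -> float:
    """Naive total clock capacity: every core counted at its base speed."""
    return sockets * cores_per_socket * ghz


if __name__ == "__main__":
    options = {
        "2 x 6-core @ 2.6 GHz": (2, 6, 2.6),
        "2 x 4-core @ 3.2 GHz": (2, 4, 3.2),
    }
    for name, (sockets, cores, ghz) in options.items():
        total_cores = sockets * cores
        print(f"{name}: {total_cores} cores, {aggregate_ghz(sockets, cores, ghz):.1f} GHz total")
```

This prints 12 cores and 31.2 GHz for the first option and 8 cores and 25.6 GHz for the second.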

VT-x

VT-x is Intel's set of virtualization instructions built into the CPU; AMD's equivalent is AMD-V. VT-x decreases the complexity of hypervisors that run on supported VT-x hardware. In fact, VT-x (or AMD-V on AMD processors) is a requirement of most modern hypervisors. VT-x also improves the speed of hypervisor functions because they can be performed in hardware rather than software.

CPU Oversubscription Ratio

It is possible to assign more vCPUs to virtual machines than available physical CPU cores in the hypervisor in a process known as oversubscription or overcommitment. However, assigning more vCPUs than CPU cores must be done with care. Before undertaking such a move, the technicians should evaluate all the virtual machines’ workload on the server and whether or not that workload is processor intensive.

Virtual machines often require more CPU when starting up or when loading processes for the first time. CPU usage then usually decreases significantly, with occasional spikes as workload demands rise. Oversubscription can allow the hypervisor to host more virtual machines than otherwise possible and make better use of the available processors. The hypervisor manages CPU requests, providing each virtual machine with the CPU resources it needs, up to its configured maximum.

However, oversubscription can result in contention for CPU resources when multiple machines attempt to utilize all their vCPUs at the same time. This results in reduced performance for the virtual machines and the applications that run on them. For this reason, it is important to understand what a reasonable oversubscription ratio is.

It is generally safe to maintain an oversubscription ratio of 5:1, with five vCPUs for each physical CPU core, so a server with four physical CPU cores could assign up to 20 vCPUs. You may be able to increase this, especially for hosts with a large number of rarely used virtual machines. Monitor resources closely when using an oversubscription ratio of 6:1 to 7:1 because it is easy to produce a situation where virtual machines are waiting for available CPU cycles.

Most of the time, it is unsafe to assign more than seven vCPUs for every CPU core on the server. However, this number may vary based on virtual machine vCPU utilization, so evaluating the environment and the goal of that environment is key.
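The rule-of-thumb bands above translate directly into a small check. This Python sketch uses the 5:1 and 7:1 thresholds from the text; ratios between 5:1 and 6:1, which the text leaves to judgment, are placed in the "monitor closely" band here. The function names are hypothetical, and the thresholds are guidelines rather than hard limits, so adjust them to the workloads being hosted.

```python
def max_vcpus(physical_cores: int, ratio: float = 5.0) -> int:
    """Most vCPUs to assign across a host at a given vCPU:core ratio."""
    return int(physical_cores * ratio)


def assess_ratio(assigned_vcpus: int, physical_cores: int) -> str:
    """Place a host's vCPU:core ratio into the rule-of-thumb bands from the text."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    ratio = assigned_vcpus / physical_cores
    if ratio <= 5:
        return "generally safe"   # up to 5:1
    if ratio <= 7:
        return "monitor closely"  # above 5:1, up to 7:1
    return "likely unsafe"        # beyond 7:1


if __name__ == "__main__":
    cores = 4
    print(max_vcpus(cores))  # 20, matching the four-core example
    for vcpus in (16, 26, 32):
        print(f"{vcpus} vCPUs on {cores} cores ({vcpus / cores:.1f}:1): {assess_ratio(vcpus, cores)}")
```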

Another important consideration is that you should not allocate more vCPUs to an individual virtual machine than you have physical cores in the hypervisor. This is because the virtual machine may try to use all assigned vCPUs. If the VM tries to use more cores than are available at once, some processes that were meant to run in parallel will be serialized because there will never be enough CPUs available to satisfy the demand. This will result in less-than-ideal performance. For example, if a hypervisor has four CPUs with four cores each, it has 16 cores. Assign no more than 16 vCPUs to an individual virtual machine on the host, even if the host's overall oversubscription ratio is below the recommended 5:1.
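The per-VM limit is a simple comparison against the host's physical core count, independent of the host-wide ratio. This hypothetical Python helper counts physical cores only (not hyperthreaded logical cores), matching the example in the text.

```python
def vm_vcpus_ok(vm_vcpus: int, sockets: int, cores_per_socket: int) -> bool:
    """True if a single VM's vCPU count does not exceed the host's physical cores."""
    return vm_vcpus <= sockets * cores_per_socket


if __name__ == "__main__":
    # Host from the example: four CPUs with four cores each = 16 physical cores
    for request in (8, 16, 20):
        print(request, "vCPUs:", "ok" if vm_vcpus_ok(request, 4, 4) else "too many")
```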

It is best to allocate one vCPU to a machine and then monitor performance, adding additional vCPUs as needed. When a virtual machine attempts to use a vCPU, the hypervisor must wait for the physical CPU associated with that vCPU to become available. The virtual machine believes that vCPU to be idle and will attempt to spread the load around if the application is configured for multiprocessing, but this can have an adverse impact on virtual machine performance if the physical CPU has a large number of processes in the queue. Furthermore, even idle processors place some load on the hypervisor from host management processes, so it is best to not provision more than will be necessary.

Monitor hypervisor metrics to determine if oversubscription bottlenecks are occurring. The most important metric to watch is the CPU-ready metric. CPU ready measures the amount of time a virtual machine has to wait for physical CPU cycles to become available. It is also important to monitor CPU utilization within each virtual machine and on the host. High CPU utilization might indicate the need for additional vCPUs to spread the load. High host CPU utilization could indicate that virtual machines are not properly balanced across hosts. If one host has high CPU utilization and others have available resources, it may be best to move one or more virtual machines to another host to relieve the burden on the overtaxed host. Host resources could also be expanded for physical hosts or requested for those provisioned in the cloud.
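Some hypervisors report CPU ready as a summation: the total milliseconds a VM spent waiting during a sampling interval. VMware, for example, documents converting that value to a percentage by dividing by the interval length, and its real-time charts use a 20-second interval. The Python sketch below assumes such a summation counter; the function name is hypothetical, and dividing by the number of vCPUs gives an average per-vCPU figure that is easier to compare across VMs of different sizes.

```python
def cpu_ready_percent(ready_ms: float, interval_s: float = 20.0, vcpus: int = 1) -> float:
    """Convert a CPU-ready summation (ms waited in one interval) to a per-vCPU percentage."""
    if interval_s <= 0 or vcpus <= 0:
        raise ValueError("interval_s and vcpus must be positive")
    return ready_ms * 100 / (interval_s * 1000 * vcpus)


if __name__ == "__main__":
    # A 4-vCPU VM that accumulated 4,000 ms of ready time in a 20-second sample
    print(f"{cpu_ready_percent(4000, 20, 4):.1f}% per vCPU")  # 5.0% per vCPU
```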

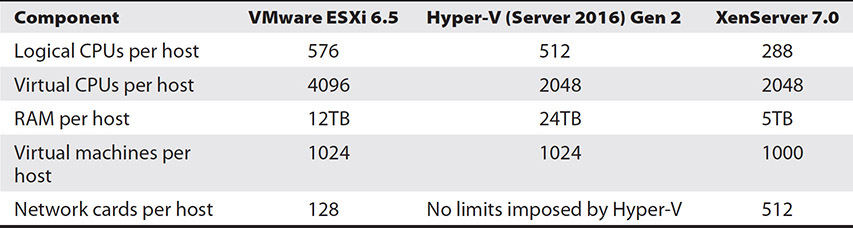

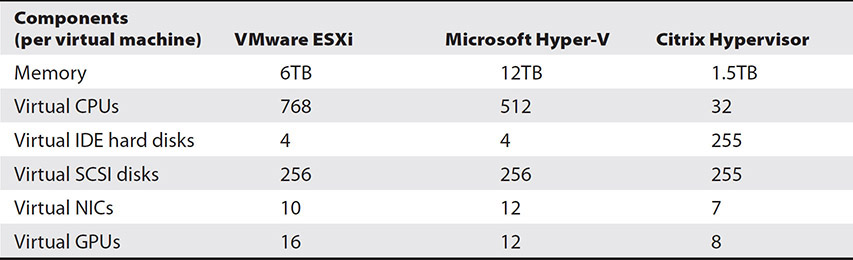

For example, a heavily used Microsoft SQL Server virtual machine will be very processor intensive. In that scenario, an organization would want a one-to-one CPU-to-vCPU assignment. VMware, Hyper-V, and Citrix all have calculators available to help determine exactly how to distribute vCPUs based on best practices for that particular virtualization product. Table 5-2 displays the maximum number of logical CPUs and virtual CPUs for some of the virtualization products currently available.

Table 5-2 Virtualization Host Maximum Resources

GPU

The graphics processing unit (GPU) is the device in a computer system that performs the mathematical operations associated with displaying content on the screen. GPUs process the pixels that fill your screen, but they also do many other computations to determine what should be displayed on the screen. These computations include determining texture mappings, rendering shapes, and determining the physics for games and other applications. The GPU may be bundled with a motherboard, or it can exist on a dedicated graphics card. Multiple graphics cards may be linked together so that the combined power of multiple GPUs can be applied to workloads.

GPUs started out as something only needed to play video games, but they have evolved to become a far more essential part of the modern computing platform. In the beginning, GPUs were just CPUs that were put onto a video card to improve video performance. However, the specific requirements for graphics processing led to a divergence in GPU architecture. GPUs need to break down problems, not into a couple or a dozen parallel tasks, but rather into thousands or millions of tasks. For this reason, GPUs are designed with hundreds or thousands of cores and have pipelines optimized for such processing. This makes them much more efficient at completing such tasks.

The GPU role was greatly expanded with the development of NVIDIA's Compute Unified Device Architecture (CUDA), which allows developers to create applications that take advantage of the GPU architecture to perform specialized tasks that would be overly burdensome for a CPU. Some workloads that utilize CUDA include blockchain, cryptocurrency mining, deep learning, cryptography, genomics research, artificial intelligence, climate modeling, data analytics, scientific visualization, physics, neuroscience, seismic prediction, augmented reality, and virtual reality. Therefore, GPU selection for the host should be based on the type of workloads that will be performed on the VMs it will house. It is also essential to know which operating systems the VMs on the host will be running. Some GPUs may support Linux VMs, but not Windows, and vice versa.

Once the technician has defined the GPUs for the host computer, he or she needs to evaluate how to assign those GPU resources to the guest virtual machines. There are three options for allocating GPU resources, as follows:

• Sharing the GPU among multiple VMs

• Dedicating the GPU to a single VM

• Dedicating multiple GPUs to a single VM

Virtual/Shared

The GPU can be virtualized so that it can be shared among multiple virtual machines on the host. The physical GPU is divided into multiple logical GPUs, called virtual GPUs (vGPUs), which can be added to a virtual machine. vGPUs are commonly used when provisioning virtual desktops. Standard enterprise desktops do not need a lot of processing power, but it is still more efficient to allocate a portion of the GPU to the virtual desktop than to have graphics tasks processed by the vCPU.

When a VM using a vGPU starts up, the host starts a corresponding process for it on the host system. This process communicates with the graphics driver running within the VM and then sends the commands to the host’s coordinating service to be delivered to the GPU. The coordinating service may juggle requests from multiple VMs concurrently, so some requests may have to wait for available GPU cycles. Assigning more vGPUs than the number of GPUs in a machine is called GPU oversubscription.

GPU Oversubscription Ratio

Virtualization allows more vGPUs to be assigned to virtual machines than there are physical GPU cores on the host, a practice known as oversubscription. Oversubscription can enable the hypervisor to host more virtual machines than would otherwise be possible and make better use of the available graphics processors. The hypervisor manages GPU requests, providing each virtual machine with the GPU resources it needs, up to its configured maximum.

Assigning more vGPUs than GPU cores must be done with care. Too much oversubscription can result in contention for GPU resources. This results in reduced performance for the virtual machines and the applications that run on them. For this reason, it is crucial to understand what a reasonable oversubscription ratio is.

There is no standard oversubscription ratio for GPU. The ratio depends on the GPU model, so you will need to determine the maximum vGPUs supported for the GPUs you are considering. For example, the Nvidia Tesla M60 can support 8 vGPUs; however, the Nvidia GRID K100 supports 32 vGPUs.
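The capacity check implied above can be sketched in Python. This is a minimal illustration that assumes the limits quoted in the text apply per physical GPU device. The dictionary and function names are hypothetical, and real limits should come from the vendor's vGPU documentation for the profile you plan to use.

```python
# Hypothetical table of maximum vGPUs per physical GPU device,
# using the example figures quoted in the text.
MAX_VGPUS_PER_DEVICE = {
    "Tesla M60": 8,
    "GRID K100": 32,
}


def vgpus_supported(model: str, devices: int) -> int:
    """Total vGPUs a host can offer with `devices` cards of one model."""
    if model not in MAX_VGPUS_PER_DEVICE:
        raise KeyError(f"no vGPU limit recorded for {model!r}")
    return MAX_VGPUS_PER_DEVICE[model] * devices


def can_assign(model: str, devices: int, requested_vgpus: int) -> bool:
    """True if the requested vGPUs stay within the supported maximum."""
    return requested_vgpus <= vgpus_supported(model, devices)


def oversubscription_ratio(assigned_vgpus: int, physical_gpus: int) -> float:
    """vGPUs assigned per physical GPU, e.g. 16 vGPUs on 2 GPUs -> 8.0."""
    return assigned_vgpus / physical_gpus
```

For example, under these assumptions a host with one Tesla M60 could not accept a ninth vGPU, while a GRID K100 would still have room.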

Pass-through

GPU pass-through is used when one or more GPUs are dedicated to a single VM. Pass-through allows the VM to address the GPU directly without having to go through the hypervisor stack. This significantly improves performance for the application. Pass-through is used for very GPU-intensive workloads such as deep learning, artificial intelligence, and data analytics.

Memory

After a company has determined how many and what type of CPUs and GPUs it will purchase for the virtualization host, the next step is to plan the amount of random-access memory (RAM) that the host machine will need. Planning the amount of memory required on a host machine is quite different from planning the number of CPUs. Planning for memory is critical. The more RAM and the faster the RAM speed, the better for a virtualization host.

Hypervisors have a virtual allocation table (VAT) that uses methods such as nested page tables or shadow page tables to map virtual memory to that of the host. Some virtualization platforms allow for adjusting virtual machine memory on the fly, essentially allowing one virtual machine to borrow memory from another virtual machine without shutting down the system. Each of the virtualization products supports virtual machine memory allocation differently, but the one consistent thing is that more memory on the host machine is always better. The IT administrator's job is to maximize the cost savings of virtualization and the value it brings to the organization.
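To make the two mapping methods concrete, here is a toy Python model of address translation with nested page tables and with shadow page tables. It assumes 4 KB pages and single-level tables stored as dictionaries. Real hardware walks multilevel tables in the MMU, so treat this only as an illustration of which mappings exist and who maintains them.

```python
PAGE_SIZE = 4096  # assumed 4 KB pages


def translate_nested(guest_va, guest_table, nested_table):
    """Two-stage lookup, as with nested page tables.

    guest_table:  guest virtual page  -> guest physical page (maintained by the guest OS)
    nested_table: guest physical page -> host physical page (maintained by the hypervisor)
    """
    page, offset = divmod(guest_va, PAGE_SIZE)
    guest_physical_page = guest_table[page]
    host_physical_page = nested_table[guest_physical_page]
    return host_physical_page * PAGE_SIZE + offset


def build_shadow_table(guest_table, nested_table):
    """Shadow page table: the hypervisor precomputes guest virtual -> host
    physical, so each lookup is a single step, but the table has to be kept
    in sync whenever the guest changes its own page table."""
    return {vpage: nested_table[gpage] for vpage, gpage in guest_table.items()}


def translate_shadow(guest_va, shadow_table):
    """One-step lookup through the precomputed shadow table."""
    page, offset = divmod(guest_va, PAGE_SIZE)
    return shadow_table[page] * PAGE_SIZE + offset
```

Both methods yield the same host address. The difference lies in whether the combined mapping is computed on each access (nested) or maintained ahead of time by the hypervisor (shadow).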

Careful planning is required to provide enough memory on the host machine to dynamically provision virtual machines as the organization’s needs grow and, at the same time, to make the most cost-efficient choices. Table 5-2, shown earlier, includes the maximum amount of memory allowed on a host machine for some of the virtualization products currently available.

Memory Ballooning

Virtual machines often require more memory when starting up or when loading processes for the first time. Memory usage often then decreases significantly, with occasional increases due to utilization. However, when oversubscription ratios are high, there might not be enough memory to start new machines.

Memory ballooning comes into play when there are not enough resources available to handle new memory requests from virtual machines. Ballooning requests memory resources from other virtual machines. These virtual machines decide which processes they can swap out to free up space, and then they loan those memory pages to the hypervisor. The hypervisor places all the memory pages lent to it into a balloon that is temporarily allocated to a machine that urgently needs it.

The beauty of ballooning is that the hypervisor does not need to seize the memory, and the virtual machine can make an intelligent decision about which memory to swap out to have the least impact on the virtual machine. The ballooning process runs as a standard Windows process in the VM guest. As it requests memory, Windows allocates pages to it as it would any other process. The hypervisor then takes those pages and makes them available to others. The virtual machine believes the memory is in use by the ballooning process until the ballooning process releases it back to the virtual machine.
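The following Python sketch models the reclaim step described above. It is deliberately simplified. It assumes each guest gives up only memory it is not currently using, although a real guest may also page some of its own memory out. The largest-idle-first donor order is also an illustrative policy, not the way any particular hypervisor chooses donors. Class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GuestVM:
    name: str
    assigned_mb: int  # memory currently backed by the hypervisor
    in_use_mb: int    # memory the guest actually needs right now

    @property
    def reclaimable_mb(self) -> int:
        return max(self.assigned_mb - self.in_use_mb, 0)


def inflate_balloons(donors, needed_mb):
    """Reclaim needed_mb from donor VMs by inflating their balloons.

    Builds a plan first and applies it only if enough memory is available,
    so donors are left untouched on failure. Returns {vm name: MB reclaimed}.
    """
    plan = {}
    remaining = needed_mb
    # Illustrative policy: ask the VMs with the most idle memory first.
    for vm in sorted(donors, key=lambda v: v.reclaimable_mb, reverse=True):
        if remaining <= 0:
            break
        take = min(vm.reclaimable_mb, remaining)
        if take > 0:
            plan[vm.name] = (vm, take)
            remaining -= take
    if remaining > 0:
        raise MemoryError(f"only {needed_mb - remaining} of {needed_mb} MB reclaimable")
    for vm, take in plan.values():
        # Balloon inflates inside the guest; the hypervisor reuses the backing memory.
        vm.assigned_mb -= take
    return {name: take for name, (_, take) in plan.items()}
```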

Memory Bursting

Virtual machines can be configured with a minimum and a maximum memory size in a technique known as dynamic memory. The machine can request up to the max amount of memory, and the hypervisor will allocate pages to the virtual machine. The burst memory is the maximum amount of memory that the virtual machine can utilize. When configuring burst values, consider how much the machine will use at peak levels and then add a buffer to that value for the burst/max memory.
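The sizing rule in the last sentence can be written as a small Python helper. The 20 percent default buffer and the 256 MB rounding granularity are assumptions chosen for illustration, not values from any vendor's guidance.

```python
import math


def burst_memory_mb(peak_mb: float, buffer_fraction: float = 0.20,
                    granularity_mb: int = 256) -> int:
    """Burst (maximum) memory: observed peak usage plus a safety buffer,
    rounded up to a whole multiple of granularity_mb."""
    if peak_mb <= 0 or buffer_fraction < 0:
        raise ValueError("peak must be positive and buffer non-negative")
    target = peak_mb * (1 + buffer_fraction)
    return math.ceil(target / granularity_mb) * granularity_mb
```

With these assumed defaults, a VM that peaks at 6,000 MB would get a 7,424 MB burst value (7,200 MB rounded up to the next 256 MB step).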

Transparent Page Sharing

Transparent page sharing is a technology that deduplicates hypervisor memory allocated to virtual machines. Several virtual machines may load the same data into memory, especially when running the same application. In a virtual desktop infrastructure (VDI), this is even more prevalent, with users commonly running office productivity, web browsing, and other apps on their virtual desktops. Operating systems also load many processes into memory that may be deduplicated.

Transparent page sharing maps duplicate pages to a single page or fixed block of memory. When transparent page sharing finds a duplicate, the memory references for the pages assigned to virtual machines are mapped to a single page on the hypervisor so that only one copy is retained.
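A minimal Python sketch of the deduplication idea follows. It uses the page contents themselves as dictionary keys, so two pages are shared only after a full byte-for-byte match. The function name and data layout are hypothetical. A real hypervisor would also mark shared pages copy-on-write so that a VM writing to one receives its own private copy again.

```python
def share_pages(vm_pages):
    """Deduplicate identical memory pages across VMs.

    vm_pages: dict mapping VM name -> list of page contents (bytes).
    Returns (frames, mapping):
      frames:  page contents -> shared frame number (one copy per unique page)
      mapping: (vm name, guest page index) -> shared frame number
    """
    frames = {}
    mapping = {}
    for vm, pages in vm_pages.items():
        for index, content in enumerate(pages):
            if content not in frames:
                # First time this content is seen: keep one physical copy.
                frames[content] = len(frames)
            # Every VM page with identical content points at the same frame.
            mapping[(vm, index)] = frames[content]
    return frames, mapping
```

If two VMs each load a zeroed page, a common library page, and one unique page, the six guest pages would be backed by four physical frames.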

Memory Compression

When memory is entirely consumed, operating systems are configured to dump data from memory into a page file. The page file is located on disk and is much slower to access than memory. Hypervisors can be configured to compress memory when available memory is low rather than page that memory. This consumes CPU resources to perform the compression and decompression, but it reduces disk read and write I/O, since the data does not have to be written to or read from disk.
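The trade-off can be illustrated with Python's standard zlib module. The sketch assumes a policy in which a page stays compressed in memory only if it shrinks to half its size or less and is otherwise paged to disk. That threshold and the helper names are illustrative assumptions, not a specific hypervisor's rule.

```python
import os
import zlib

PAGE_SIZE = 4096  # assumed 4 KB page


def try_compress_page(page: bytes, max_ratio: float = 0.5):
    """Return the compressed page if it fits within max_ratio of PAGE_SIZE,
    otherwise None (meaning the page would be paged out to disk instead)."""
    compressed = zlib.compress(page, 1)  # level 1 favors CPU time over ratio
    if len(compressed) <= PAGE_SIZE * max_ratio:
        return compressed
    return None


def restore_page(compressed: bytes) -> bytes:
    """Decompressing costs CPU time but avoids a disk read."""
    return zlib.decompress(compressed)


if __name__ == "__main__":
    text_page = (b"hello world " * 342)[:PAGE_SIZE]  # highly repetitive content
    random_page = os.urandom(PAGE_SIZE)              # essentially incompressible
    for label, page in (("text", text_page), ("random", random_page)):
        result = try_compress_page(page)
        print(label, "kept compressed" if result else "paged to disk")
```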

Memory Oversubscription Ratio

Virtual machines can be configured with a minimum and a maximum memory size. The machine can request up to the max amount of memory, and the hypervisor will allocate pages to the virtual machine. When the memory is not needed anymore, the hypervisor reclaims it for use on other virtual machines. In this way, the total maximum amount of memory configured for virtual machines can exceed the available physical memory, known as memory oversubscription. This works as long as the actual consumption remains lower than physical memory. Other technologies, such as memory compression and transparent page sharing, can further reduce memory consumption, allowing for more oversubscription.

Oversubscription can allow the hypervisor to host more virtual machines than otherwise possible and make better use of the available memory. However, oversubscription ratios are much lower for memory than they are for CPU.

It is generally safe to maintain an oversubscription ratio of 1.25:1, with 125 percent of physical memory allocated to virtual machines. Thus, a server with 256GB of memory could assign up to 320GB of memory to virtual machines. You may be able to increase this comfortably to 1.5:1, in particular for hosts that have a significant number of similar virtual machines, as transparent page sharing will reduce actual memory consumption. A 1.5:1 oversubscription ratio would allow for 384GB of memory to be allocated to virtual machines in a host that has 256GB of physical memory. Monitor resources closely when using an oversubscription ratio higher than 1.25:1. In such cases, it can be easy to produce a situation where virtual machines consume all available physical memory and the host is forced to page memory to disk.

Most of the time, it is unsafe to operate at an oversubscription ratio above 1.5:1. Be extremely careful if you operate at this level and ensure that memory metrics are configured with alerts so that administrators are aware when memory thresholds are reached. Set alerting thresholds below the level where paging will occur to avoid performance issues, and adjust virtual machine memory settings accordingly.
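The arithmetic and guidance above can be captured in a few Python helpers. The band labels mirror the text's guidance. The 10 percent alert headroom below physical memory is an assumed example value, since the right threshold depends on your environment.

```python
def allocatable_gb(physical_gb: float, ratio: float) -> float:
    """Memory that may be assigned to VMs at a given oversubscription ratio."""
    return physical_gb * ratio


def ratio_guidance(allocated_gb: float, physical_gb: float) -> str:
    """Classify an allocation using the bands described in the text."""
    ratio = allocated_gb / physical_gb
    if ratio <= 1.25:
        return "generally safe"
    if ratio <= 1.5:
        return "monitor closely"
    return "unsafe in most cases"


def alert_threshold_gb(physical_gb: float, headroom: float = 0.10) -> float:
    """Alert when actual consumption reaches this level, leaving headroom
    before the host would have to page memory to disk (assumed 10%)."""
    return physical_gb * (1 - headroom)
```

For the 256GB host in the text, allocating 320GB falls in the first band and 384GB in the second.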

NIC

In addition to choosing CPU, GPU, and memory, choosing the type of network interface card (NIC) to use is just as important when planning the hardware for a virtualization host. Choosing the correct network configuration and type of card is critical to a virtual environment's success because, without reliable network connectivity, VMs will not be accessible or might be less available. Network latency can diminish the speed of a virtual environment, so the organization needs to plan carefully which features the host's network cards must support.

The first step when planning the NICs for the host computer is to understand the physical aspects of the network. This includes how the host will be connected to other resources and how users will connect to it. Next, one should consider which features will provide the required network performance for the desired workloads.

Performance Considerations

Server-class NICs provide the best possible network performance for virtualization. It is also necessary to verify that the source and destination NIC infrastructure does not introduce a bottleneck. For example, if the organization is using a 10 Gbps NIC to connect to a 10 Gbps port on a switch, it must make sure that all the patch cables support 10 Gbps speeds and that the switch is configured to use 10 Gbps and is not hardcoded to use 1 Gbps speeds. The network can only be as fast as the slowest link, so having a misconfigured switch or a bad cable can cause a bottleneck and slower performance.
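The slowest-link rule can be expressed as a short Python check over the components in a path. The component names and speeds below are hypothetical examples modeled on the scenario in the text.

```python
def path_speed_gbps(links):
    """End-to-end speed is limited by the slowest link in the path.

    links: list of (component name, rated or negotiated speed in Gbps).
    """
    return min(speed for _, speed in links)


def bottlenecks(links, expected_gbps):
    """Names of components running below the expected speed."""
    return [name for name, speed in links if speed < expected_gbps]


# Hypothetical path from the example: a 10 Gbps NIC and cable,
# but a switch port hardcoded to 1 Gbps.
path = [
    ("host NIC", 10),
    ("patch cable", 10),
    ("switch port hardcoded to 1 Gbps", 1),
]
```

Here the whole path runs at 1 Gbps, and the bottleneck check points straight at the misconfigured switch port.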

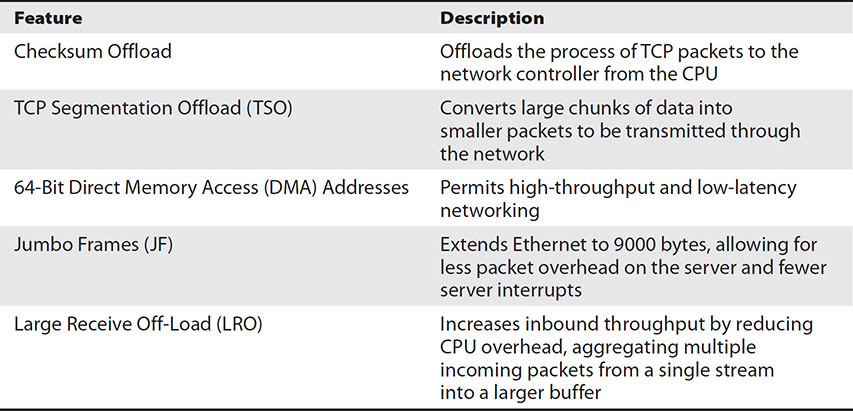

There are some other key features to consider when purchasing NICs for the virtualization host computer. Table 5-3 lists those features and gives a brief description of each.

Table 5-3 NIC Hardware Features

NIC Oversubscription Ratio

Multiple virtual machines can share the same NIC in a similar fashion to the sharing of other resources, such as GPU, CPU, or memory. Assigning more virtual network resources than there are physical network resources available is called NIC oversubscription.

As with other resources, it is important to understand the workload of the systems that you will be attaching to each physical NIC. Some types of systems generate more network traffic than others. For example, a file server or e-mail server might consume a significant portion of a NIC's bandwidth. In contrast, a certificate server might use only a small percentage.

Network oversubscription ratios are usually relatively high because systems typically use a smaller share of their network resources than of their CPU, GPU, or memory resources. It is generally safe to maintain an oversubscription ratio of 10:1, but as with other resources, pay attention to network utilization metrics and lower the ratio if utilization stays consistently high so that you can avoid contention.
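The following Python sketch shows one way to compute a NIC oversubscription ratio and flag sustained high utilization. It assumes the ratio is measured as the total bandwidth configured on vNICs divided by the physical NIC's bandwidth. The 80 percent threshold is an illustrative value, not a recommendation from the text.

```python
def nic_oversubscription_ratio(vnic_gbps, physical_gbps):
    """Total vNIC bandwidth assigned relative to the physical NIC."""
    return sum(vnic_gbps) / physical_gbps


def consistently_high(utilization_pct, threshold_pct=80.0):
    """True if every recent sample exceeds the threshold, suggesting the
    ratio should be lowered to avoid contention."""
    return bool(utilization_pct) and all(u > threshold_pct for u in utilization_pct)
```

Under this assumption, ten VMs with 10 Gbps vNICs sharing one 10 Gbps physical NIC would sit at the 10:1 ratio mentioned above.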

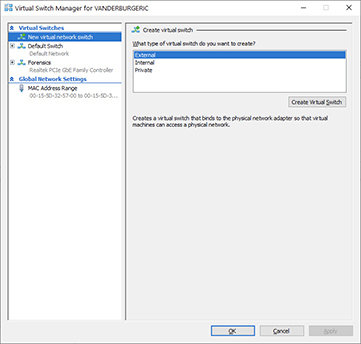

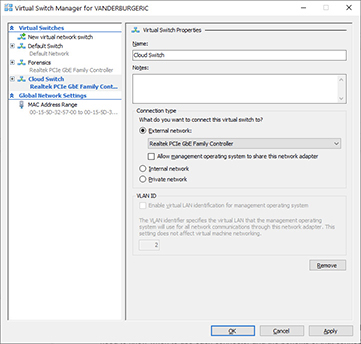

Exercise 5-1: Creating a Virtual Switch in Hyper-V

In this exercise, we will create a virtual switch from a physical NIC to be used for Hyper-V on Windows 10 Enterprise, Pro, or Education edition. To follow these steps, you must have Hyper-V installed. You can install it by opening PowerShell as administrator and then running the following command:

Enable-WindowsOptionalFeature -Online -FeatureName Microsoft-Hyper-V -All

Follow along with these steps to create a new virtual switch in Hyper-V.

1. Open the Hyper-V Manager tool.

2. Click Virtual Switch Manager in the Actions pane on the right side of the Hyper-V Manager.

3. Select External under Create Virtual Switch in the right pane of the window and then click Create Virtual Switch. This will create a virtual switch that is mapped to a physical adapter on the host, allowing the host to communicate with the network outside of the host. This is shown in the following illustration. The internal or private virtual switches can be used for traffic that will not leave the host, such as traffic between VMs on the host. The internal switch allows VMs on the host to communicate, and the host can communicate with them. The private switch is the same as the internal switch, except that the host cannot communicate with the VMs on the private switch.

4. Give the switch a name. In this example, we will call it Cloud Switch.

5. Next, map this virtual switch to a physical NIC in the host. In this example, a Realtek PCIe GbE Family Controller NIC was selected.

6. If this is the only NIC in your machine, leave “Allow management operating system to share this network adapter” checked. However, uncheck this if you will dedicate this NIC to virtual machines. The following illustration shows the cloud switch on a dedicated NIC, so the option is unchecked. Click OK when you have finished setting these options, and the virtual switch will be created.

Virtual Machine

After the virtualization host computer has been carefully planned and designed, it is ready to support guest virtual machines. However, there is just as much planning, if not more, that needs to go into configuring the virtual machines. With virtualization comes the ability to maximize the physical server and no longer have "unused" resources. While this is a considerable advantage and cost savings to an organization, it also requires more planning than the one-to-one model used before virtualization.

Before virtualization, IT administrators were confined to the physical resources available on the server running a particular application. With virtualization, an IT administrator can now add compute resources to a virtual machine without purchasing additional hardware, as long as the virtualization host computer has been designed with this in mind.

The concept of a virtual machine is sometimes tricky to grasp for those new to the term. Think of a virtual machine in the same way you think of a physical server hosting an application. A virtual machine emulates a physical computer, with the only difference being that its resources are managed by a hypervisor that translates resource requests to the underlying physical hardware. You can think of a virtual machine as a portable file that can be moved, copied, and reassigned to a different virtualization host with minimal administration.

Virtualization separates the physical hardware from the virtual hardware running on a virtual machine, so virtual machines can be moved to another hardware platform easily. Physical servers are typically on a refresh cycle of several years. Every few years, the IT team would have to build a new server, configure the applications, and migrate software. With virtualization, the underlying hardware can be upgraded while the virtual machines stay the same. This reduces the IT maintenance burden.

With full virtualization, guest operating systems are unaware that they are running in a virtual environment. This differs from paravirtualization, a virtualization method that presents a more customized virtual interface to host system hardware. Applications and software can be installed on a virtual machine as if it were a physical server. Isolation of applications is just one of the many advantages of running a virtual environment. Applications can be installed on separate virtual machines, which provides complete isolation from other applications running on the host computer or another virtual machine. This is a great way to test new applications without interfering with existing applications or to create a development environment that is segmented from the production environment. Isolation can also be performed through containers rather than allocating an entire VM. Containers are discussed in Chapter 7.

Virtualization is used for both servers and desktops. Organizations will virtualize desktops so that end users can log into their desktop remotely. Desktops are maintained centrally in a cloud or data center environment, where they can be more effectively managed and secured. Desktop virtualization is known as virtual desktop infrastructure (VDI).

The remainder of this section explains the compute resources that make up a virtual machine and how to manage and plan for those resources in a virtual environment.

Virtual Disks

Just like a physical server, a virtual machine needs to have a place to install an operating system and applications and to store files and folders. Simply put, a virtual disk is a file that represents a physical disk drive to the virtual machine. VMware virtual machine disks (VMDKs) have an extension of .vmdk, while Hyper-V virtual hard disks use .vhd for the original VHD format and .vhdx for the newer VHDX format.

A virtual disk file resides on the host computer and is accessed by the guest virtual machine. It contains the same properties and features as a physical drive, including disk partitions, a file system, and files and folders.

When creating a virtual disk, you need to make a few decisions, including the type of disk, the name and location of the disk, and the disk's size. Each of the major virtualization vendors uses different terminology to describe virtual disk configurations. For example, if you are using Microsoft Hyper-V, you would have the option of making a dynamically expanding virtual disk, a fixed virtual disk, or a differencing virtual disk. If you are creating a fixed-size disk, you will specify the disk's size when it is created. If you create a dynamically expanding virtual disk, the disk starts at the minimum size required to hold the data and adds storage as needed.

Differencing virtual disks are used in parent-child disk configurations, where a parent virtual disk holds files inherited by its children. For example, five Windows 10 machines could all share a parent virtual disk for the operating system, while user profile directories and applications would reside on the child disks. This results in lower disk consumption and easier updating.
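Parent-child differencing disks behave like copy-on-write layers: reads fall through to the parent, and writes land in the child. A toy Python model of that behavior follows. The class name, the per-block dictionary, and the 512-byte zero-filled default for unwritten blocks are assumptions for illustration. None of this reflects the actual VHD or VHDX on-disk format.

```python
BLOCK_SIZE = 512  # assumed block size for this toy model


class VirtualDisk:
    def __init__(self, parent=None):
        self.parent = parent
        self.blocks = {}        # block number -> data stored in this disk file
        self.read_only = False
        if parent is not None:
            # A parent shared by children must not change underneath them.
            parent.read_only = True

    def write(self, block: int, data: bytes) -> None:
        if self.read_only:
            raise PermissionError("parent disk is read-only once it has children")
        self.blocks[block] = data  # writes always land in this disk

    def read(self, block: int) -> bytes:
        disk = self
        while disk is not None:    # walk the child -> parent chain
            if block in disk.blocks:
                return disk.blocks[block]
            disk = disk.parent
        return bytes(BLOCK_SIZE)   # never written anywhere: zeros
```

In the five-desktop example, the operating system blocks would live once in the parent, and each child file would hold only that desktop's own changes.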

On the other hand, if you are creating a virtual disk in VMware ESXi, you have the option of creating a thick disk or a thin disk. A thick disk is similar to a fixed disk in Microsoft Hyper-V in that the size is specified and allocated during the creation of the virtual disk. A thin disk is similar to a dynamically expanding disk in Microsoft Hyper-V in that the disk starts out small and adds space as required by the virtual machine.

While the different virtualization vendors use different terms to define their virtual disks, the concepts are similar. Whether you are using Hyper-V, ESXi, or XenServer, you still need to decide which type of disk to use for which application. If you are concerned about disk space, then using a thin disk or dynamically expanding disk would be the best option. If disk size is not a concern, then you could use a fixed-size or thick disk.
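The difference between the two allocation styles can be sketched in Python. The model is simplified and assumes whole-gigabyte accounting with no metadata overhead. It also assumes that a dynamically expanding or thin file does not shrink on its own when the guest deletes data, because reclaiming that space usually requires a separate compaction step. The class name is hypothetical.

```python
class VirtualDiskFile:
    """Host-side file backing a virtual disk (toy model)."""

    def __init__(self, size_gb: int, kind: str):
        if kind not in ("fixed", "dynamic"):  # fixed ~ thick, dynamic ~ thin
            raise ValueError("kind must be 'fixed' or 'dynamic'")
        self.size_gb = size_gb
        self.kind = kind
        # Fixed/thick disks claim their full size on the host at creation time.
        self.allocated_gb = size_gb if kind == "fixed" else 0

    def guest_uses(self, used_gb: int) -> None:
        """Record how much data the guest currently has on the disk."""
        if used_gb > self.size_gb:
            raise ValueError("guest cannot use more than the disk's size")
        if self.kind == "dynamic":
            # Grow to fit the data; this model never shrinks automatically.
            self.allocated_gb = max(self.allocated_gb, used_gb)
```

A 100GB fixed disk consumes 100GB of host storage immediately. A 100GB dynamic disk holding 30GB of data consumes roughly 30GB, which is why it suits environments where disk space is a concern.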

Virtual disks also use instruction sets and queueing techniques just like physical disks, and virtual disks must be created using a specific virtual disk interface such as IDE or SCSI. Some virtual machine types can only boot from a virtual IDE drive, while others, such as those requiring secure boot, require a SCSI boot drive. Table 5-4 shows the maximum number of virtual IDE and SCSI disks available for various types of virtual machines. Please note that XenServer does not emulate SCSI or IDE and uses a unique disk format with a maximum of 16 disks.

Table 5-4 Virtual Machine Limits

vNIC

Configuring and planning the virtual network interface cards is just as important as planning the virtual disk configuration. The network interface card in a computer is a device that allows a physical computer to interact with other computers and devices on the network. Likewise, a virtual NIC (vNIC) is associated with a physical NIC and allows a virtual machine to communicate on the network. Proper configuration of the vNIC and network settings is crucial to minimizing bottlenecks in the virtual environment.

A vNIC does not have any physical components; it is a software component made up of software drivers that mimic a physical NIC. A vNIC allows an organization to change some of the properties of the vNIC, including MAC address settings, network connections, and VLAN ID. This allows for greater control over the vNIC from within the hypervisor software. Once the settings are configured and the vNIC is installed on the virtual machine, it functions like a physical NIC installed on a physical server.

After attaching a vNIC to a virtual machine, you can add the vNIC to a virtual network. A virtual network is a group of network devices configured to access local or external network resources and consists of virtual network links. In effect, a virtual network is a network where traffic between the virtual servers is routed using virtual switches (vSwitches) and virtual routers.

A virtual router is a software-based router that allows a virtualization host to act as a hardware router over the network. This is required if you wish to enable inter-VLAN communication without a hardware router. A virtual network allows the virtual machine to interact with the rest of the LAN.

In addition to configuring a vSwitch, you may configure bridged networking, which allows the virtual machine to communicate with the outside world using the physical NIC so it can appear as a normal host to the rest of the network.

You need to consider some options when configuring a virtual machine to communicate with the rest of the LAN. Sometimes an organization may want to prevent a virtual machine from communicating with devices on the LAN, in which case you can isolate the virtual machine on a private network so that it can communicate only with other virtual machines on the same host. Virtual machine isolation with a private network is common when setting up a test lab from cloned virtual machines. The cloned machines would conflict with existing production machines if they could talk on the network, so they are isolated to only talk among themselves on a private network. Also, clustered virtual machines use private networks for heartbeat connections.

In a different scenario, an organization might want to bridge the connection between its virtual machine and the LAN used by the host computer so that the virtual machine can communicate with devices that are external to the host computer. Determining how the vNIC and virtual machine use virtual networks is an essential piece of virtualization. Remember, one of the many benefits of virtualization is the ability to isolate applications for testing and deployment, but that is only possible if the virtual network and vNIC are correctly configured.

After the virtual machine's operating system recognizes and installs the vNIC, it can be configured just like a physical NIC. It is possible to set the IP address, DNS servers, default gateway, subnet mask, and link speed. The actual network configuration of the vNIC is identical to that of a physical network adapter, so the virtual machine connects to the network in the same manner as a physical device with the same IP address and subnet mask configuration. A virtual machine can be configured to use one or more virtual Ethernet adapters, allowing each adapter to have its own MAC and IP address. Table 5-4, shown earlier, includes the maximum number of vNICs available on various types of virtual machines.

Virtual Switches

Once the organization has created and added a vNIC to its virtual machine, the next step in the process is to assign a vSwitch to the machine so that it can communicate with other network devices. Similar to a physical switch, a vSwitch makes it possible to connect other network devices together.

A vSwitch controls how the network traffic flows between the virtual machines and the host computer, as well as how network traffic flows between the virtual machine and other network devices in the organization. Virtual switches also allow the organization to isolate network traffic to its virtual machines. A vSwitch can provide some of the same security features that a physical switch provides, including policy enforcement, isolation, traffic shaping, and simplified troubleshooting.

A vSwitch can support VLANs and is compatible with standard VLAN implementations. However, a vSwitch cannot be attached to another vSwitch; instead, more ports can be added to the existing switch.

An organization can create different types of vSwitches to control network connectivity to a virtual machine. An external vSwitch allows the virtual machine to communicate with other virtual machines on the same host and with other network devices located outside the host computer. An internal vSwitch allows the virtual machines and the host to communicate with each other, but the virtual machine is unable to communicate with network devices located outside the host computer.
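The reachability rules for the switch types in this chapter can be summarized in a small Python function. It covers the external and internal switches described here plus the private switch from Exercise 5-1. The endpoint labels and the host_shares_adapter flag, which stands in for the Hyper-V option that lets the management operating system share the adapter, are assumptions of this sketch.

```python
# Which endpoints can exchange traffic directly through each switch type.
REACHABLE = {
    "external": {"vm", "host", "external"},
    "internal": {"vm", "host"},
    "private": {"vm"},
}


def can_communicate(switch_type: str, a: str, b: str,
                    host_shares_adapter: bool = True) -> bool:
    """a and b are 'vm', 'host', or 'external' (a device outside the host)."""
    allowed = set(REACHABLE[switch_type])
    if switch_type == "external" and not host_shares_adapter:
        # NIC dedicated to VMs: the host OS is not attached to this switch.
        allowed.discard("host")
    return a in allowed and b in allowed
```

For instance, a VM on an internal switch can reach the host but not the wider network, and a VM on a private switch can reach only other VMs on that switch.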

Planning the vSwitch configuration is extremely important to an organization’s virtualization design. It is equally important for the organization to make sure the vSwitch that the virtual machine uses to communicate is configured correctly. Proper design of the vSwitch environment is critical to the virtual machine being able to communicate to the correct part of the network.

Memory

Managing memory on a virtual machine is different than managing memory on a physical server. When dealing with a physical server, an organization has to decide at the time of purchase how much memory a server needs to have. When building or deploying a virtual machine, the organization can change the memory on the fly as needed. Also, a virtual machine only consumes memory if that virtual machine is running, so memory can be freed up by shutting down less important virtual machines if necessary.

Managing virtual machine memory is easier and allows the organization to maximize its resources for that virtual machine. It can set the initial size of the virtual machine’s memory and change that setting after the virtual machine has been created and is operational. For example, the organization may have a virtual machine running file and print services and may be uncertain what the memory requirements ultimately will be. In this instance, it can configure a low amount of memory to start and then monitor the virtual machine to determine its memory utilization. If the virtual machine reaches 90 to 100 percent utilization, the organization can easily increase the amount of memory without having to purchase additional hardware.

Keep in mind, however, that increasing virtual machine memory is only possible if there is additional memory available on the virtualization host computer. The host computer must also have enough physical memory available to start the virtual machine; if there is not enough available physical memory, the virtual machine will not be allowed to start. Earlier in this chapter, you learned how to plan memory allocation on the virtualization host; now you can see why planning the host computer resources is so important.

There are three things that you should consider when provisioning a virtual machine and assigning memory to it:

• Operating system requirements Ensure that the amount of memory meets the minimum recommendations for the operating system that the virtual machine will be running.

• Application requirements Consider the applications the virtual machine will be running. Applications that require a lot of memory on a physical server may also need a similar setup on a virtual machine.

• Virtual machine neighbors Consider what other virtual machines are running on the host computer that will be competing with this virtual machine for memory resources and whether other applications are going to be running on the host computer that might need resources as well.

Resource contention is not typically a significant factor in a type 1 hypervisor, since best practice is not to run any additional software on the host computer. However, if additional applications are running on the host computer besides the hypervisor, you should consider their impact on resource contention with virtual machines when planning memory size. On a type 2 hypervisor, other applications would be running on the host computer. These applications require memory, so the memory necessary for those applications will need to be factored in when determining memory size for the virtual machine.

Memory can be assigned to a virtual machine in a couple of ways. One option is to configure a static amount of memory assigned to the virtual machine at all times. Static memory is a predefined amount of memory that is allocated to the virtual machine. If an organization uses this setting for all the virtual machines on a host computer, then the host computer must have at least enough physical memory to support those virtual machines.

A second option is to use dynamic memory, which allows a company to assign a minimum and maximum amount of memory to a virtual machine. With dynamic memory, a virtual machine consumes memory based on its current workload. Dynamic memory also allows for overcommitting the host computer’s physical memory so that more virtual machines can be run on that host computer.

Dynamic memory can be enabled on a per-virtual-machine basis, targeting only those virtual machines that can benefit from it. One way for a company to determine if it should use static or dynamic memory is by considering the application the virtual machine will be running. For example, if the company has a virtual machine running an application that uses a fixed amount of memory, the better option is to use static memory and allocate precisely the amount of memory that the virtual machine needs. Managing virtual machine memory is a crucial component of the performance of the virtualization environment and thus needs to be carefully planned and executed. Table 5-4, shown earlier, lists the maximum amount of memory available for various types of virtual machines.
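The static-versus-dynamic trade-off can be sketched as a host-level check in Python. This simplified model is assumed for illustration. A static VM is one whose minimum equals its maximum. Every VM must have its minimum backed by physical memory. The sum of maximums shows how far dynamic memory overcommits the host. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VMMemory:
    name: str
    minimum_mb: int
    maximum_mb: int  # equal to minimum_mb for static memory

    @property
    def is_static(self) -> bool:
        return self.minimum_mb == self.maximum_mb


def plan_host(vms, physical_mb):
    """Return (fits, overcommit_ratio) for a set of VMs on one host."""
    reserved = sum(vm.minimum_mb for vm in vms)  # must always be backed
    ceiling = sum(vm.maximum_mb for vm in vms)   # worst case if all VMs peak
    return reserved <= physical_mb, ceiling / physical_mb
```

For example, one static 16GB VM plus two dynamic VMs ranging from 2GB to 12GB would fit on a 32GB host, at a 1.25:1 overcommit ratio if every VM reached its maximum.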

Storage Virtualization

Planning where to store the virtual disks and configuration files for the virtual machine is something that needs careful consideration. Storage virtualization groups multiple network storage devices into a single storage unit that can be managed from a central console and used by a virtual machine or host computer.

Storage virtualization usually occurs in a storage area network (SAN), where a high-speed collection of shared storage devices can be used. Managing storage devices can be a complex and tedious task for an administrator. Storage virtualization simplifies the administration of common storage tasks, such as archiving, recovery, backups, and storage configuration.

A virtualized storage environment has some distinct advantages over nonvirtualized storage. In a nonvirtualized storage environment, each host computer connects directly to storage that is either internal to the host or in an external array. In this scenario, the server takes complete ownership of the physical storage, with an entire disk tied to a single server.

Virtualized storage enables the use of shared storage devices. Virtualized storage addresses the challenge of a single server owning storage by allowing multiple host servers and virtual machines to simultaneously access storage. Shared storage can present storage to a host computer, and the host computer, in turn, can present the storage to the virtual machine. Multiple host computers can access the shared storage simultaneously, which allows the virtual machines to migrate between host computers. Virtualization software supports all the common storage interconnects for block-based storage, including Fibre Channel, iSCSI, Fibre Channel over Ethernet (FCoE), and direct-attached storage. The virtualization software provides an interface to simplify how the virtual machine accesses the storage. It also presents SCSI and IDE controllers to the virtual machines so that the operating system can recognize the storage. The virtual machine sees only a simple physical disk attached via the IDE or SCSI controller provided by the virtualization software.

Virtual storage offers the following advantages to a virtual machine:

• Ease of management

• Improved efficiency

• Support for a range of storage types that the native operating system might not support

• Flexible placement and migration

If an organization uses Fibre Channel to connect to shared storage, it can take advantage of N_Port ID Virtualization (NPIV), a technology that allows multiple virtual ports to share a single physical Fibre Channel port, or N_Port. NPIV allows a single host bus adapter to register multiple worldwide names (WWNs) and N_Port identification numbers. Using NPIV, each host server or virtual machine can present a different WWN to the shared storage device, allowing each one to see its own storage.
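A rough way to picture NPIV is one physical port carrying several port identities, with the storage array deciding which LUNs each identity may see. That storage-side control, LUN masking, is an assumption added here to make the "own storage" point concrete. The Python below is a toy model: the WWPNs, the `PhysicalNPort` class, and the `LUN_MASKING` table are hypothetical, and in practice the HBA, the fabric switch, and the array handle the logins and masking.

```python
# Illustrative model only: every WWPN below is made up, and real NPIV logins
# are handled by the HBA firmware and the Fibre Channel fabric.
PHYSICAL_WWPN = "20:00:00:00:00:00:00:aa"
VM1_WWPN = "c0:00:00:00:00:00:00:01"
VM2_WWPN = "c0:00:00:00:00:00:00:02"


class PhysicalNPort:
    """One physical Fibre Channel port that can carry extra (virtual) port identities."""

    def __init__(self, wwpn: str):
        self.wwpn = wwpn
        self.virtual_wwpns: list[str] = []

    def add_virtual_port(self, wwpn: str) -> None:
        # Each identity on the shared port must be unique.
        if wwpn == self.wwpn or wwpn in self.virtual_wwpns:
            raise ValueError(f"duplicate WWPN {wwpn}")
        self.virtual_wwpns.append(wwpn)

    def identities(self) -> list[str]:
        return [self.wwpn, *self.virtual_wwpns]


# Storage-side LUN masking: which LUNs each initiator WWPN may see (hypothetical).
LUN_MASKING = {
    VM1_WWPN: {1},
    VM2_WWPN: {2, 3},
}


def visible_luns(wwpn: str) -> list[int]:
    return sorted(LUN_MASKING.get(wwpn, set()))


port = PhysicalNPort(PHYSICAL_WWPN)
port.add_virtual_port(VM1_WWPN)
port.add_virtual_port(VM2_WWPN)
for wwpn in port.identities():
    print(wwpn, visible_luns(wwpn))
```

Because each virtual machine logs in with its own WWPN, the array can zone and mask storage per VM even though all traffic leaves through one physical port.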

In addition to storage virtualization, an organization might use clustered storage to provide increased performance, capacity, and reliability for the storage environment that the virtual machines access. Clustered storage combines multiple storage devices to distribute the workload among them and to provide access to the virtual machine files regardless of where those files are physically located.

Guest Tools

Guest tools are software components installed in a virtual machine after its operating system has been installed. They enhance the virtual machine’s performance and improve the interaction between the virtual machine and the host computer. Guest tools also make a virtual machine easier to manage by providing enhanced features, such as faster graphics performance, time synchronization between host and guest, increased network performance, and the ability to copy files between the virtual machine and the host computer. The guest tools are also responsible for integrating the drivers into the guest operating system.

A guest operating system can run without guest tools, but it loses important functionality and ease of administration. Guest tools are easy to install on all mainstream virtualization platforms, and they are sometimes built into the operating system. For example, a Windows Server 2019 virtual machine created in Microsoft Hyper-V already has the Hyper-V integration services loaded. Most operating systems, including Microsoft Windows, Linux, Oracle Solaris, FreeBSD, and macOS, support the installation of guest tools.

Exercise 5-2: Creating a Virtual Machine in Hyper-V

In this exercise, you will create a new virtual machine using Hyper-V. Follow along with these steps.

1. Open the Hyper-V Manager tool.

2. Click New | Virtual Machine in the Actions pane on the right, or right-click your computer name on the left and select New | Virtual Machine.

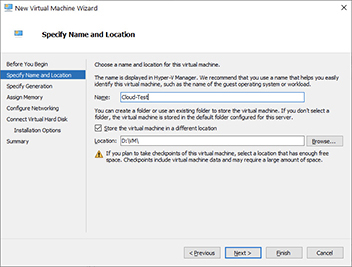

3. Click Next.

4. Give the virtual machine a name. In this example, the machine is named Cloud-Test.

5. Check the box labeled “Store the virtual machine in a different location,” and then select a folder on your disk that has enough space. In this example, the location is on the D drive in a folder called VM so that it does not contend with the programs running on the C system drive. Click Next when you are finished.

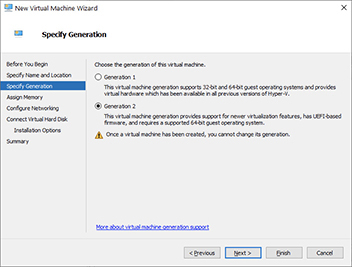

6. On the screen shown next, choose Generation 2, and click Next. The main reason to select Generation 1 is that you are installing a 32-bit operating system or one that cannot boot with UEFI firmware. Generation 1 machines are much more limited: they boot from IDE-based virtual hard disks and do not allow for some of the more advanced virtualization features.

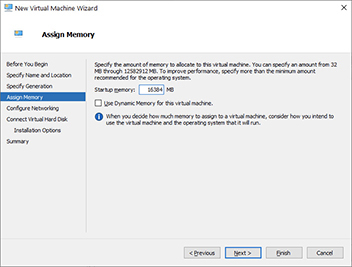

7. Assign the virtual machine memory. Hyper-V specifies the memory in MB, so if you want 16GB of memory, you will allocate 16,384MB.

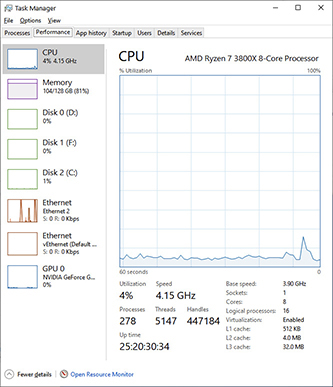

Depending on your machine’s available memory, you may need to assign less memory to this machine. If you are unsure how much memory is available, right-click the taskbar, select Task Manager, and then click the Performance tab to see how much memory is in use. The system shown next is using 104GB out of 128GB of memory, which leaves 24GB. Do not assign all of the available memory to the virtual machine, because you will want to leave a buffer for increased use on the host. Clear the dynamic memory check box so that this virtual machine uses static memory. (Dynamic memory allows Hyper-V to grow and shrink the amount of memory assigned to the machine according to demand.) Click Next when you are done.

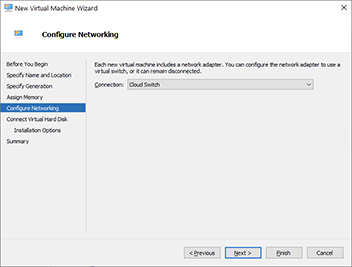

8. Choose a virtual switch to attach this machine to. If this were a physical server, it would have a NIC, and an Ethernet cable would connect it to a switch for network access. As a VM, the machine is given a vNIC associated with a virtual switch that is mapped to a physical NIC in the host. In this example, we chose the switch “Cloud Switch,” which was created in this chapter’s earlier exercise, as shown next. After you have set these options, click Next.

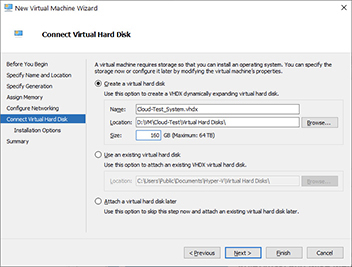

9. The next step is to give the virtual machine a virtual hard disk. You will need to select a name, location, and maximum size for the virtual disk. Alternatively, if you already have a virtual disk, you can use it instead; you might choose this option if you were creating a machine from a virtual hard disk template. The last option is to attach a disk later. However, if you choose this option, your VM will not have a hard disk. You might choose this if you were going to run a live VM from an ISO, such as a live Kali Linux VM. In this exercise, we will give the virtual hard disk the name Cloud-Test_System.vhdx, as shown next. Hyper-V names the hard disk after the virtual machine by default, but it is good practice to add a bit more information to the name. VMs often have more than one hard disk, so in this example, we appended “_System” to the end of the name to indicate that this is the system drive, the one where the operating system and default applications are installed. Give the hard disk a maximum size of 160GB. The disk consumes only the space actually used by the VM, but it can grow to this size. Finally, click Next.

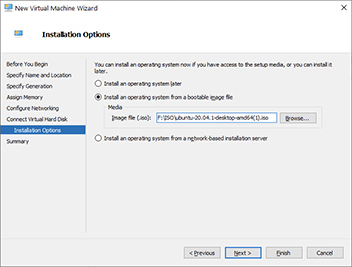

10. The next screen allows you to mount an ISO to install an operating system. You can also point the system to a server to install an OS or choose to install an operating system later. For this example, we will create an Ubuntu VM. Download the Ubuntu install ISO from https://ubuntu.com/download/desktop and then choose “Install an operating system from a bootable image file” and click the Browse button. Next, navigate to the location where you downloaded the Ubuntu ISO, select the ISO, and then click Open. You will be returned to the installation options screen, which should look like the following illustration, except that your ISO file’s location will be wherever you placed it on your system. Click Next when you are done.

11. You are now shown a summary of the settings you selected. Click Finish to complete the process.

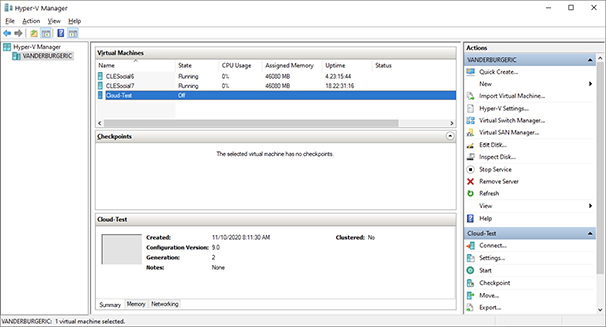

12. The virtual machine now appears in Hyper-V Manager with a state of Off, as shown here.

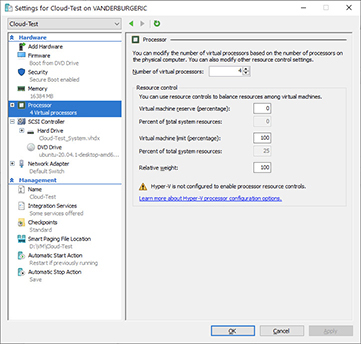

13. You might have noticed that we did not choose how many vCPUs to assign to the VM. Hyper-V does not include this in the wizard. Instead, it assigns just one vCPU, but this can be changed once the machine is created. Please note that the machine must be in an Off state to modify the vCPU settings. Right-click on the Cloud-Test VM and select Settings.

14. Select Processor on the left. On the right side of the window, you can adjust how many vCPUs are assigned to the VM by typing a number in the box labeled Number Of Virtual Processors or clicking on the up arrow next to the box, as shown next. In this example, four vCPUs were assigned to the VM. Once you set this value to your preferred number of vCPUs, click OK to save the settings.
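The same VM can also be created from a script. The following Python sketch first repeats the memory check from step 7, using an assumed 4GB host buffer, and then builds the PowerShell commands that correspond to the wizard choices. `New-VM`, `Set-VMMemory`, `Add-VMDvdDrive`, and `Set-VMProcessor` are cmdlets in the Hyper-V PowerShell module, but the helper functions and every path shown are hypothetical. The sketch does not set the firmware boot order or Secure Boot options, which you may need to adjust before the VM boots from the ISO, and it only prints the commands so you can review them before running them on the Hyper-V host.

```python
GB = 1024 ** 3    # Hyper-V byte parameters use binary units: 1GB = 1,073,741,824 bytes
MB_PER_GB = 1024  # Hyper-V Manager asks for memory in MB (16GB = 16,384MB)


def max_static_memory_mb(total_gb: int, used_gb: int, buffer_gb: int) -> int:
    """Largest static allocation in MB that still leaves buffer_gb free on the host."""
    return max(0, (total_gb - used_gb - buffer_gb) * MB_PER_GB)


def ps_quote(value: str) -> str:
    """Wrap a value in a literal (single-quoted) PowerShell string."""
    return "'" + value.replace("'", "''") + "'"


def new_vm_commands(name: str, vm_folder: str, vhd_path: str, switch: str,
                    memory_mb: int, disk_gb: int, iso_path: str, vcpus: int) -> list[str]:
    """PowerShell lines mirroring steps 4-14 of the exercise (hypothetical helper)."""
    vm = ps_quote(name)
    return [
        # Steps 4-9: name, location, Generation 2, startup memory, switch, new VHDX
        f"New-VM -Name {vm} -Path {ps_quote(vm_folder)} -Generation 2 "
        f"-MemoryStartupBytes {memory_mb * 1024 * 1024} -SwitchName {ps_quote(switch)} "
        f"-NewVHDPath {ps_quote(vhd_path)} -NewVHDSizeBytes {disk_gb * GB}",
        # Step 7: static memory (dynamic memory off)
        f"Set-VMMemory -VMName {vm} -DynamicMemoryEnabled $false",
        # Step 10: attach the installation ISO
        f"Add-VMDvdDrive -VMName {vm} -Path {ps_quote(iso_path)}",
        # Steps 13-14: vCPU count, changed while the VM is off
        f"Set-VMProcessor -VMName {vm} -Count {vcpus}",
    ]


headroom_mb = max_static_memory_mb(total_gb=128, used_gb=104, buffer_gb=4)
memory_mb = min(16 * MB_PER_GB, headroom_mb)  # 16,384MB fits within the 20,480MB headroom
commands = new_vm_commands(
    name="Cloud-Test",
    vm_folder=r"D:\VM",
    vhd_path=r"D:\VM\Cloud-Test\Virtual Hard Disks\Cloud-Test_System.vhdx",
    switch="Cloud Switch",
    memory_mb=memory_mb,
    disk_gb=160,
    iso_path=r"D:\ISO\ubuntu-desktop.iso",
    vcpus=4,
)
for line in commands:
    print(line)
```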

Virtualized Infrastructure Service Elements

Experts used to debate which workloads were suitable for virtualization. Today, however, most workloads are safe to run in a virtual machine. Virtualization is not just for testing or development environments; enterprise-grade production systems run in highly virtualized environments. Of course, the purpose of virtual machines is to run services or applications, and this section presents some of the types of services you should be familiar with as you build out a virtualized infrastructure in a cloud solution.

DNS

Domain Name System (DNS) is the backbone of network communication because it provides name resolution for devices on the local network, the domain, or the Internet. DNS servers respond to name resolution queries for hosts that reside within their particular namespace, called a zone. Name resolution is the process whereby a name such as cloudplus.comptia.com is translated into its associated IP address. Each machine requires a unique IP address to communicate on a network, and on the Internet, those addresses are assigned and distributed to companies. DNS servers maintain records of which names are associated with those addresses so that users and applications can access the services on those hosts.

Computers that are configured with IP usually point to one or more DNS servers. These are typically the DNS servers closest to the computer, such as those of an organization’s domain or a user’s or company’s Internet service provider (ISP). On a local domain network, computers can be referenced by just their name. For example, a user on a workstation in the cloudplus.com domain who wants to connect to a server called server1 can type the hostname, server1, and the workstation will query the local DNS server for the address associated with server1.cloudplus.com. However, to connect to a resource outside its own domain, a computer must use a fully qualified domain name (FQDN), which includes both the host name and the domain. For example, the FQDN of server1 is server1.cloudplus.com.
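The short-name lookup in this example can be sketched as a function that appends the local domain to a single-label name. This is a deliberate simplification that assumes a single DNS suffix: real resolvers apply configurable suffix search lists and other rules, and `qualify` is a hypothetical helper.

```python
def qualify(name: str, local_domain: str) -> str:
    """Turn a host name into the FQDN the resolver will look up (simplified)."""
    if name.endswith("."):
        return name[:-1]              # trailing dot: the name is already absolute
    if "." in name:
        return name                   # already contains a domain part
    return f"{name}.{local_domain}"  # single label: append the local domain suffix


print(qualify("server1", "cloudplus.com"))
print(qualify("www.comptia.org", "cloudplus.com"))
```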

DNS servers can be configured to be authoritative for a zone. Authoritative servers are associated with the domain owner, and they provide the most accurate results. However, computers often receive nonauthoritative answers to DNS queries. That is because their local DNS server issues queries on their behalf for domains it is not authoritative for. The local server retains a cache of those answers and can respond from that cache until each entry expires. The authoritative server sets how long a record may be cached through its time-to-live (TTL) value so that stale records are not provided to users.
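Caching by TTL can be illustrated with a small in-memory cache. The sketch below is a hypothetical `DnsCache` class, not a real resolver: it stores an address with an expiry time computed from the record’s TTL and stops answering from cache once that time passes. The clock is injectable so that expiry can be demonstrated without waiting, and the addresses come from the 192.0.2.0/24 documentation range.

```python
import time
from typing import Callable, Optional


class DnsCache:
    """Toy cache of name -> address answers that honors each record's TTL."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._entries: dict[str, tuple[str, float]] = {}  # name -> (address, expires_at)

    def put(self, name: str, address: str, ttl_seconds: int) -> None:
        # DNS names are case-insensitive, so normalize the key.
        self._entries[name.lower()] = (address, self._clock() + ttl_seconds)

    def get(self, name: str) -> Optional[str]:
        entry = self._entries.get(name.lower())
        if entry is None:
            return None                # miss: the server would query upstream
        address, expires_at = entry
        if self._clock() >= expires_at:
            del self._entries[name.lower()]
            return None                # expired: discard rather than serve stale data
        return address


now = [0.0]                            # fake clock for the demonstration
cache = DnsCache(clock=lambda: now[0])
cache.put("server1.cloudplus.com", "192.0.2.10", ttl_seconds=300)
print(cache.get("SERVER1.cloudplus.com"))  # answered from cache
now[0] = 300.0
print(cache.get("server1.cloudplus.com"))  # TTL elapsed, so nothing is returned
```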

DHCP

Each machine on an IP network needs a unique address to communicate. These addresses can be assigned manually or automatically. Dynamic Host Configuration Protocol (DHCP) is a service that hands out IP addresses to machines upon request. It is most often used for end-user workstations or for devices that connect to an ISP. DHCP servers are configured with one or more scopes, and each scope hands out IP addresses from a specific range. Within that range, some addresses can be reserved for a particular machine by mapping the IP address to the MAC address of the desired node in the DHCP configuration. A MAC address is a unique identifier that the manufacturer assigns to a network interface.
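A DHCP scope with a reservation can be sketched as follows. The `DhcpScope` class is a hypothetical in-memory model: it hands out the next free address in its range, always returns the reserved address for a reserved MAC address, and ignores lease durations, renewals, and the actual DHCP message exchange. The addresses and MAC addresses are made up.

```python
import ipaddress
from typing import Dict, Optional


class DhcpScope:
    """Hands out addresses from first..last, honoring MAC-based reservations."""

    def __init__(self, first: str, last: str, reservations: Optional[Dict[str, str]] = None):
        self.first = ipaddress.IPv4Address(first)
        self.last = ipaddress.IPv4Address(last)
        # Reservations map a MAC address to a fixed IP address.
        self.reservations = {
            mac.lower(): ipaddress.IPv4Address(ip) for mac, ip in (reservations or {}).items()
        }
        self.leases: Dict[str, ipaddress.IPv4Address] = {}  # MAC -> leased address

    def request(self, mac: str) -> ipaddress.IPv4Address:
        mac = mac.lower()
        if mac in self.reservations:
            return self.reservations[mac]  # reserved node always gets its address
        if mac in self.leases:
            return self.leases[mac]        # existing client keeps its lease
        taken = set(self.leases.values()) | set(self.reservations.values())
        candidate = self.first
        while candidate <= self.last:
            if candidate not in taken:
                self.leases[mac] = candidate
                return candidate
            candidate += 1
        raise RuntimeError("scope exhausted: no free addresses")


scope = DhcpScope("192.168.10.100", "192.168.10.102",
                  reservations={"00:15:5D:00:00:01": "192.168.10.100"})
print(scope.request("00:15:5d:00:00:02"))  # first free, unreserved address
print(scope.request("00:15:5D:00:00:01"))  # the reserved address
```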

DHCP servers usually hand out other information in addition to the IP address, including the DNS server, default gateway, local time server, and other options, such as where server boot images can be found. (DNS servers were discussed in the previous section.) The default gateway is the device to which computers send traffic whose destination does not reside on the local network, VLAN, or VXLAN.
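The default-gateway rule can be expressed as a subnet membership test. In this Python sketch, `next_hop` is a hypothetical helper, and the interface address, prefix length, gateway, and DNS server stand in for values a DHCP server might hand out. It ignores VLAN and VXLAN specifics and any additional routes a host may have.

```python
import ipaddress


def next_hop(destination: str, interface: str, default_gateway: str):
    """Send directly if the destination is on the local subnet, otherwise to the gateway."""
    local_net = ipaddress.ip_interface(interface).network  # e.g. 192.168.10.0/24
    dest = ipaddress.ip_address(destination)
    return dest if dest in local_net else ipaddress.ip_address(default_gateway)


# Values a DHCP server might supply (hypothetical)
lease = {"address": "192.168.10.101/24", "router": "192.168.10.1", "dns": "192.168.10.53"}

print(next_hop("192.168.10.50", lease["address"], lease["router"]))  # same subnet
print(next_hop("203.0.113.7", lease["address"], lease["router"]))    # via the gateway
```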

Certificate Services

Certificates are cryptographic entities that can encrypt data in a public key infrastructure (PKI) or verify the authenticity of devices, program code, or websites. Certificates are cryptographic because they rely on an asymmetric encryption algorithm, which uses a public and private key pair to sign and validate data sent between certificate holders. Data encrypted with a public key can be decrypted only by the associated private key. Similarly, data encrypted with the private key can be decrypted only by the associated public key. Data signed with the private key can prove authenticity because the signature can be decrypted with the public key, proving that the signer has the private key. This is called a digital signature. Signing data with a private key does not encrypt the data to protect its confidentiality; it is used only to validate authenticity.
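The key-pair relationships described above can be checked with textbook RSA on deliberately tiny numbers (the primes 61 and 53, a common classroom example). This is a toy for illustration only: RSA is one asymmetric algorithm among several, real keys are thousands of bits long, and real systems use padding schemes and vetted libraries rather than raw modular exponentiation. The function names are hypothetical.

```python
import hashlib

# Toy RSA key pair built from p = 61 and q = 53. Insecure: for illustration only.
P, Q = 61, 53
N = P * Q        # 3233: the modulus, shared by both keys
E = 17           # public exponent: the public key is (E, N)
D = 2753         # private exponent: the private key is (D, N)
assert (E * D) % ((P - 1) * (Q - 1)) == 1  # D is E's inverse, so the keys undo each other


def encrypt_with_public(m: int) -> int:
    return pow(m, E, N)  # anyone with the public key can encrypt


def decrypt_with_private(c: int) -> int:
    return pow(c, D, N)  # only the private-key holder can decrypt


def digest(message: bytes) -> int:
    # Hash the message, then reduce it into the toy modulus so it can be signed.
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    return pow(digest(message), D, N)  # requires the private key


def verify(message: bytes, signature: int) -> bool:
    return pow(signature, E, N) == digest(message)  # needs only the public key


ciphertext = encrypt_with_public(65)
print(ciphertext, decrypt_with_private(ciphertext))
signature = sign(b"cloudplus.com certificate request")
print(verify(b"cloudplus.com certificate request", signature))
```

Note that `sign` does not hide the message: the message still travels in the clear, and the signature only proves that the holder of the private key produced it.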