CHAPTER 15

Troubleshooting

In this chapter, you will learn about

• Troubleshooting methodology

• Troubleshooting steps

• Documentation and analysis

• Troubleshooting tools

Service and maintenance availability must be a priority when choosing a cloud provider. Having the ability to test and troubleshoot the cloud environment is a critical step in providing the service availability an organization requires. This chapter introduces you to troubleshooting tools, discusses documentation and its importance to company and cloud operations, and presents a troubleshooting methodology with various sample scenarios and issues that you might face in your career and on the CompTIA Cloud+ exam.

Troubleshooting Methodology

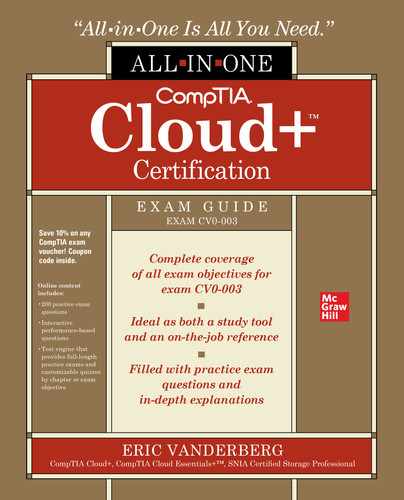

CompTIA has established a troubleshooting methodology consisting of six steps, as shown in Figure 15-1. They are as follows: First, identify the problem. This is followed by establishing a theory of probable causes. Next, you test the theory to determine the cause. Fourth, establish a plan of action to resolve the problem and implement the solution. Fifth, verify full system functionality and, if applicable, implement preventative measures. Last, document your findings, actions, and outcomes.

Figure 15-1 CompTIA troubleshooting methodology

Step 1: Identify the problem. The first step in the CompTIA troubleshooting methodology is to identify the problem. There are four parts to this, as follows:

1. Interview the user

2. Reproduce the issue

3. Preserve the state

4. Identify changes precipitating the issue

Interview the User Talk with the user experiencing the issue to understand it. Identify the problem’s scope, including which machines and network devices, subnets, sites, or domains are affected. Identifying the scope may involve interviewing others in the department or company. You will also need to find out when the issue first began. Did this occur last week, earlier in the day, or is this a chronic issue that they are finally reporting? Try to get as precise a time as possible because this will make it easier to isolate changes and conditions that occurred surrounding that time.

Since both of these steps require asking the user or others questions, it is important to be courteous and respectful. Show the user that you are concerned about the problem by actively listening to what they have to say. All too often, IT professionals want to run off as soon as a problem is mentioned so that they can begin fixing it. However, in a rush to fix the problem, they may not truly understand the problem and give the user the impression that their situation is not important.

Reproduce the Issue A critical step in identifying the problem is to ask the user to demonstrate what is not working for them. If they say the Internet is not working on their cloud virtual desktop, ask them to demonstrate. In this way, both you and the user can better understand the scope of the problem, and the user might be able to do more while you troubleshoot.

Sometimes a user will describe a scenario that you cannot reproduce. For example, they may have trouble visiting a site, and you can get there fine until they show you and you see they are using a different, out-of-date browser. Similarly, they may show you that a particular site does not come up, but when you ask them to go to another site, it loads correctly.

It can be frustrating if you cannot reproduce the error with the user. If this happens, be patient and try several more times. If you still cannot reproduce it, ask them to notify you when it happens again and to note other things that happen around that time. It could be that the issue only occurs when certain conditions are met, such as a high load on the network, a specific time of day, or when other applications are open.

Preserve the State Before moving to further steps, ensure that a backup is taken of the system. You want to preserve the state the machine is in so that you can get back to this state later, if necessary. This allows you to revert back if changes make the problem worse as you are troubleshooting.

Identify Changes Precipitating the Issue Lastly, determine what has changed recently. Evaluate whether those changes could have contributed to the issue. Some things to consider include

• New software installations.

• Applying patches or updates.

• New users logging on.

• The machine was moved to a new location.

• Connections to new Wi-Fi networks.

• Operating system changes were made.

• New hardware was added, or hardware was removed. This could include something as simple as plugging in a portable hard drive or flash drive or removing a webcam.

• Connections to new network resources, such as network shares or printers.

• The system was damaged in some way, or the user noticed signs of damage.

• The user noticed signs of tampering or tampering alarms were triggered.

Step 2: Establish a theory of probable causes. The second step in the CompTIA troubleshooting methodology is to establish a theory of probable causes. There are three parts to this, as follows:

1. List and prioritize possible causes

2. Question the obvious

3. Conduct research based on symptoms

List and Prioritize Possible Causes The information gathered in step 1 should help in generating possible causes. Ask yourself what is common between devices that are experiencing the issue. Do they share a common connection or resource?

Question the Obvious Be sure to question the obvious (the simple things). Sometimes, problems can be solved by something relatively simple such as plugging in an Ethernet cable, verifying that users are typing a URL or UNC correctly, or verifying that target systems are turned on. Don’t spend your time working out potential complex solutions until you have eliminated the simple solutions.

Conduct Research Based on Symptoms Next, research the symptoms the user is experiencing. Documentation comes in very handy when performing this research. Be sure to review records on how systems should be configured and compare these with the actual configuration.

Depending on the issue, you may need to review vendor manuals as well. For example, if this is an issue with a software application, check the vendor manual for that application. It is essential to know where to find this information. For this reason, keep manuals and other documentation in a central location, typically on a network share or a shared cloud folder that can be easily updated and accessed.

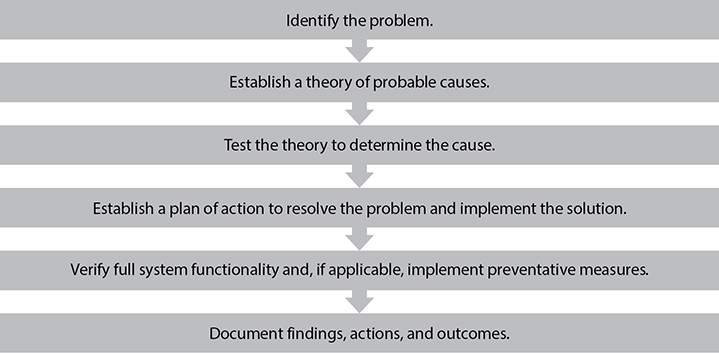

Step 3: Test the theory to determine the cause. At this point, you will likely have multiple theories on what might be the problem. Only one of those theories will be correct, so you will need to test the theories to identify which one it is. Systematically go through the theories, testing them to either confirm or reject them. Figure 15-2 shows the process.

Figure 15-2 Testing the theory

Start by testing the simplest theories before thinking through the complex ones. As mentioned in the previous step, most issues are caused by simple things, and simple things are easier to test. Ensure that the system you use for testing is similar enough to the one experiencing issues, and ensure that you can replicate the issue on the test system before attempting a fix. Some IT professionals have worked hard to deploy a solution to a system that was not experiencing the problem. They then falsely believe they fixed the issue when they later test. For each theory, determine if you can confirm or reject it. If you reject the theory, move on to the next one. If you confirm it, move on to step 4.

If you need to have the user test some things, be sure to ask politely. Phrase your request so as not to cause the user to think that you are blaming them for the problem, even if you believe it is a user error. If you are incorrect and it is not a user error, you will look foolish, and the user might be offended.

If you end up rejecting all the theories, you will need to establish a new theory. Go back to step 2 and consider the situation from angles that you did not consider before. If you are unable to think of new theories, solicit help from others.

Step 4: Establish a plan of action to resolve the problem and implement the solution. Document the steps that you want to take to resolve the problem. Ensure that you can demonstrate that your tests confirmed a nonworking condition and then a working condition following the proposed actions’ implementation. Review the plan with others and ensure that change controls are followed. The change management process was discussed in Chapter 14. Lastly, after approval has been given to implement the change, perform the outlined steps to fix the problem.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. Check with all users who were experiencing the issue to ensure that they are no longer experiencing the problem. Also, check with others around them to ensure that you have not created other issues by implementing the fix. Lastly, implement restrictions or additional controls to prevent the problem from occurring in the future. This could involve retraining the user or placing technical controls on the system to prevent such actions from happening again. In some cases, permissions may need to be changed or system configurations updated. System changes should follow the same change control process as the troubleshooting change did.

Step 6: Document findings, actions, and outcomes. This last step is critical to ensure that you or others at your company do not continue to solve the same problems from scratch over and over. If you are anything like us, you will need to write down what you did so that you can remember it again later. IT professionals lead busy lives, and there never seems to be time to document. However, if you do not document, you will find that you spend time performing the same research when you could have simply consulted your documentation.

The CompTIA troubleshooting steps provided here will be demonstrated in the scenarios that follow to help you understand better how the troubleshooting methodology is applied to real-world problems.

In the course of your career, you will run into a wide variety of issues that you will need to troubleshoot. No book could be comprehensive enough to cover all of them, so I have selected a few problems that you are likely to see. The issues are also ones that you are likely to see on the CompTIA Cloud+ exam.

Troubleshooting Steps

Troubleshooting is a broad subject because there are so many things that can go wrong. This is complicated with the many complex systems in use today. No matter the issue, remember to try to break the problem down to the smallest parts so that you can work on just those. This is why it is crucial to identify the issue as precisely as possible.

This section discusses some of the potential issues you might face in the following main areas:

• Security

• Deployment

• Connectivity

• Performance

• Capacity

• Automation and orchestration

Security Issues

Security issues can cause significant problems for system availability and data confidentiality or integrity. Some security issues you should be aware of are as follows:

• Federations, domain trusts, and single sign-on Federations, domain trusts, and single sign-on (SSO) are each technologies that extend authentication and authorization functions across multiple interdependent systems.

• External attacks External attacks can be minimized using firewalls, intrusion detection systems, hardening, and other concepts discussed in Chapter 11.

• Internal attacks Separation of duties and least privilege can help reduce the likelihood of internal attacks.

• Privilege escalation System vulnerabilities, incorrectly configured roles, or software bugs can result in situations where malicious code or an attacker can escalate their privileges to gain access to resources that they are unauthorized to access.

• External role change Role change policies should extend out to procedures and practices employed to change authorizations for users to match changes in job roles for employees.

• Incorrect hardening settings Hardening, discussed in Chapter 11, reduces the risk to devices.

• Weak or obsolete security technologies Security technologies age quickly. Those technologies that are out of support may not receive vendor patches and will be unsafe to use in the protection of corporate assets.

This section explores three common security topics in more detail, along with scenarios that utilize CompTIA’s troubleshooting methodology to help you become familiar with the process in practice. These topics include authorization and authentication issues, malware, and certificate issues. Lastly, certificates are used to secure communication between devices and verify the identity of communication partners. When certificates or the systems around them fail, communication failures are sure to follow, significantly affecting business operations.

Insufficient Security Controls and Processes

Security controls are technologies, procedures, or services that reduce security risk. As you can imagine, there are a wide variety of security controls. They are generally divided into three types as follows:

• Preventative controls

• Detective controls

• Corrective controls

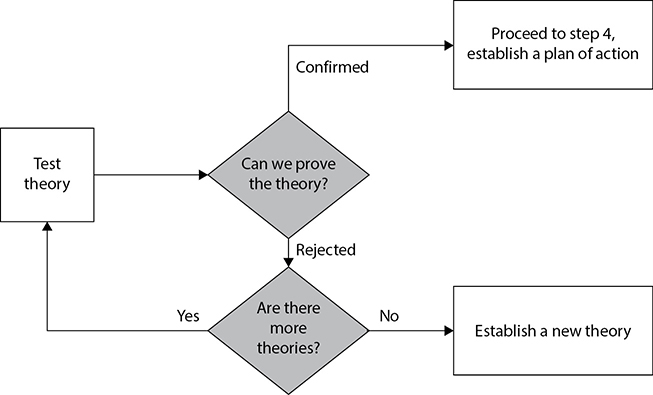

Preventative Controls Preventative controls are designed to stop harmful activity from occurring. Some examples include firewalls that block malicious traffic from entering the network, encryption that prevents other parties from viewing data or a communication stream, hardening that closes unused ports and disables unused services, or patch management that keeps systems up-to-date with fixes for discovered vulnerabilities in software.

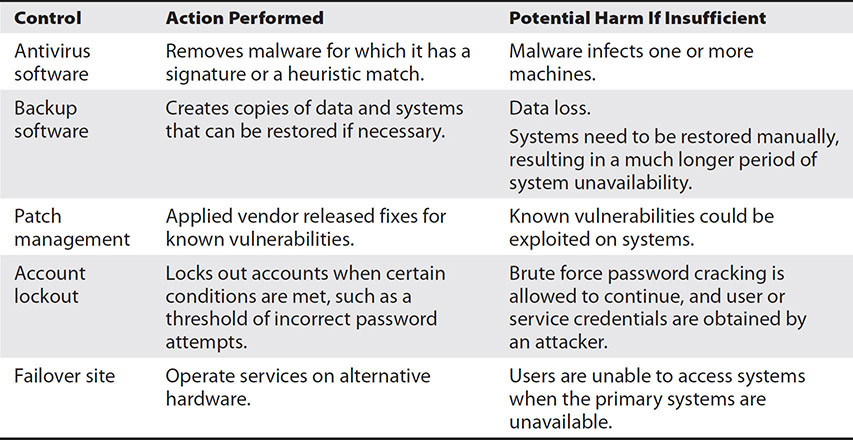

Insufficient preventative controls increase the likelihood that an attacker will exploit the area the control is designed to protect against. Table 15-1 shows the example preventative controls, action they perform, and potential results of insufficient controls. Some controls perform actions that fit into multiple categories, such as antimalware software that both detects and corrects malware by identifying it and then removing it.

Table 15-1 Preventative Controls and the Harm They Prevent

Detective Controls Detective controls are designed to identify and alert on harmful activity. For example, DLP would alert on activity that violates policies. It could also be configured to block such activity, so it would fall into a preventative control as well. Similarly, Internet filtering can detect attempts to access restricted Internet content that could contain harmful material, but it can also be configured to block that access. The mode that each of these runs in can be audit only or audit and protect.

Insufficient preventative controls result in the company being unaware of ongoing attacks or misuse of company resources. Table 15-2 shows the example preventative controls, the action they perform, and the potential results of insufficient controls.

Table 15-2 Detective Controls and the Harm They Prevent

Corrective Controls Corrective controls are designed to bring systems or data back to a normal operating state following a security event. Some corrective controls include antivirus software that removes malware for which it has a signature, backup software that restores data or systems, and patch management that applies fixes for known vulnerabilities.

Insufficient corrective controls increase the harm of a security event, including loss of data, extended system outages, or more expensive recovery. Table 15-3 shows the example corrective controls, the action they perform, and the potential results of insufficient controls.

Table 15-3 Corrective Controls and the Harm They Prevent

Privilege Issues

Privileges are the rights to perform actions on an information system. Privileges can be assigned to users or other entities, such as service accounts. These privileges may allow users to access files, run programs, or use services.

Privilege issues can sometimes arise when employees change positions and their privileges are not correctly updated. It could also happen when files are restored and older permissions are restored with them, or permissions are not carried along with the restored files. Whatever the cause, permission issues result in a lack of access to resources that affect authorized user or system activities.

Privilege issues may reveal themselves when a user or service attempts to perform an action that they are authorized to perform, but they cannot do so. For example, a user may try to access a share that they are supposed to be able to access, but they are given an access denied error. Service accounts can also cause issues. Service accounts are created for very specific uses, and their permissions are usually granularly defined. However, as needs change, so must the permissions. These privileges would need to be updated to grant the permissions to resources a service account can access and what the service can do with that resource.

Let’s demonstrate the CompTIA troubleshooting methodology with a scenario: A service account is used to log into a database server. It issues queries to three databases. The service can add data to the tables of one database but cannot modify the table structure. This account works fine for operating the application, but upgrading the application results in an error stating that tables could not be updated.

Step 1: Identify the problem. The application upgrade fails when updating tables.

Step 2: Establish a theory of probable causes. You theorize that this could be due to a permissions issue with the person running the upgrade or with the service account. You run a trace on the database as the application is upgraded. You identify the account used to perform the upgrade and the queries that fail. The queries are related to adding new fields.

Step 3: Test the theory to determine the cause. You review the permissions for the account and find that it does not have permission to modify the table structure, and adding new fields is a change to the structure.

Step 4: Establish a plan of action to resolve the problem and implement the solution. ou recommend that an account with permission to modify the table structure should be used to install the application. Management agrees, and you put in a service ticket to have an account created with the appropriate permissions and roles. Once the account is created, you provide the credentials to the application team.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. The application team reports that the application installs correctly with the new credentials. You confirm that the application upgrade is complete and then submit a ticket to have the account disabled until the application team needs it again.

Step 6: Document findings, actions, and outcomes. You document the account that needs to be used for application updates and the process that must be followed to enable the account.

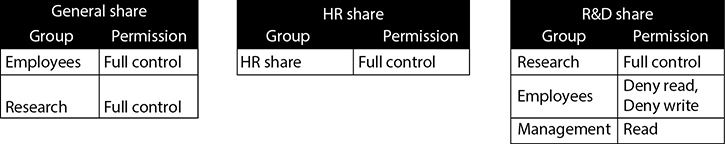

Security Groups Permissions are typically assigned to a group associated with a role rather than giving permissions directly to a user account. This makes it easier to add others to a role because they can just be added to the group, rather than copying all the permissions assigned to another user. Similarly, making changes to the permissions can be done in one place, rather than for each user that has access. However, security groups can become complex to troubleshoot when users are members of many groups and those groups have overlapping permissions.

In most systems, permissions are cumulative unless a deny permission is applied. Let’s look at an example. You will likely find that as systems grow over time, some convoluted data organization methods can evolve that result in some cumbersome privileges. Consider a situation where Todd is unable to access the R&D share. Todd is a member of the employees and management groups. Figure 15-3 shows the folder structure for the company data.

Figure 15-3 Shared folder structure

This data is stored within OneDrive, and permissions to the folders are assigned to groups. The folder permissions for each group are shown in Figure 15-4.

Figure 15-4 Folder permissions

For this scenario, consider the troubleshooting methodology and walk through it on your own. Think through each step. You may need to make some assumptions as you move through the process since this is a sample scenario.

Step 1. Identify the problem.

Step 2. Establish a theory of probable causes.

Step 3. Test the theory to determine the cause.

Step 4. Establish a plan of action to resolve the problem and implement the solution.

Step 5. Verify full system functionality and, if applicable, implement preventative measures.

Step 6. Document findings, actions, and outcomes.

Todd is a member of the employees and management groups, but he would not be able to access the R&D share because the deny read and write permission from his membership in the employees group would override his read permission as a member of the management group. You could resolve this by removing Todd from the employees group, but this could cause issues with other resources he needs to access. The best thing to do would be to move the R&D folder out from under the general share. The employees group could then be removed from the permissions on the R&D folder so that a deny permission would not be required there.

Authorization and Authentication Issues

Authentication is the process of validating an identity, and authorization validates that the identity has the required privileges to perform the requested action. Authorization and authentication issues include scenarios such as systems that are deployed without proper service accounts or account lockouts.

Authentication issues can be as simple as users locking their accounts by entering their credentials incorrectly several times consecutively. The user’s account will need to be unlocked before they can access network resources. If many users report permission problems, check services like DNS and Active Directory, or LDAP on Linux servers, to verify that they are functioning. Problems with these services can prevent users from authenticating to domain services.

Consider a scenario where a user reports that they are unable to log into their Office 365 mailbox and their OneDrive but they can access other resources.

For this scenario, consider the troubleshooting methodology and walk through it on your own. Think through each step. You may need to make some assumptions as you move through the process since this is a sample scenario.

Step 1. Identify the problem.

Step 2. Establish a theory of probable causes.

Step 3. Test the theory to determine the cause.

Step 4. Establish a plan of action to resolve the problem and implement the solution.

Step 5. Verify full system functionality and, if applicable, implement preventative measures.

Step 6. Document findings, actions, and outcomes.

Malware

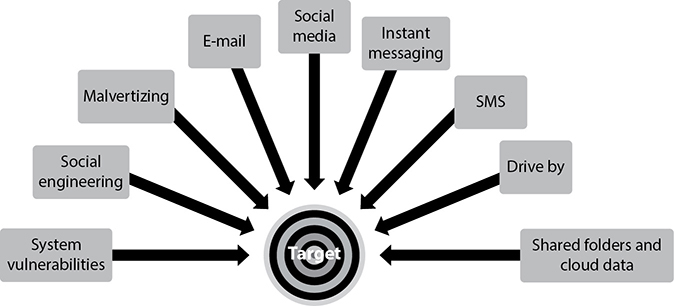

Another security issue you might face is the presence of malware. Malware impact can range from low, such as malware that slows a machine, to high-risk malware that results in a data breach. Malware infects machines through infected media that is plugged into a computer or other device, through website downloads or drive-by malware that executes from infected websites, or malicious ads known as malvertizing. Malware is also distributed through a variety of methods, as shown in Figure 15-5.

Figure 15-5 Malware distribution methods

Computers infected with malware might run slowly or encounter regular problems. Ransomware, a particularly troublesome form of malware, encrypts data on the user’s machine and on network drives the machine has write access to.

Let’s demonstrate the CompTIA troubleshooting methodology with a scenario: Aimee, a cloud security engineer, receives reports that user files are being encrypted on the network.

Step 1: Identify the problem. Files are being encrypted on the company NAS. Access logs from the NAS around the time of the encryption show connections from a computer called LAB1014. LAB1014 has many encrypted files on its local drive. No other users report encrypted files on their machines, and a spot check by another administrator confirms no encrypted files on a sample of other devices.

Step 2: Establish a theory of probable causes. This could be due to a rogue script or ransomware running on LAB1014.

Step 3: Test the theory to determine the cause. Both theories have the same response. LAB1014 needs to be quarantined immediately so that the problem does not spread and continue. If it is the cause of a rogue script, the activity will cease after LAB1014 is quarantined. If it is the result of ransomware, the LAB1014 will continue encrypting files on its local drive, but uninfected machines on the network and the NAS will continue operating normally.

Step 4: Establish a plan of action to resolve the problem and implement the solution. The first step is to isolate LAB1014 from the network so that it cannot infect any other machines. Next, check other computers, starting with devices that were connected to the infected machine, such as file servers or departmental servers and surrounding workstations. Isolate all machines that have malware on them.

Next, make a forensic copy of LAB1014 in case an investigation is required. Once the forensic image is verified, you can begin identifying the malware through virus scanning and removing the malware using virus scanning tools or specific malware removal tools. It is best to scan the LAB1014 computer with installed antivirus tools and with bootable media that can scan the machine from outside the context of the installed operating system. Sometimes malware tricks the operating system into thinking parts of its code are legitimate. It might even tell the operating system that its files do not exist. Virus scanning tools installed on the operating system rely on the operating system to provide them with accurate information, but this is not always the case. Bootable antivirus tools work independently from the operating system, so they do not suffer from these potential limitations.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. Verify that the ransomware has been removed from LAB1014 and any other machines that may have been identified as containing ransomware in the course of troubleshooting and that new devices are not being infected. Next, restore data to the machines where data was encrypted.

Step 6: Document findings, actions, and outcomes. Create a report of the impact and actions taken.

Key and Certificate Issues

Certificates are used to encrypt and decrypt data, as well as to digitally sign and verify the integrity of data. Each certificate contains a unique, mathematically related public and private key pair. During the standard authentication process to a website, a client is presented with a certificate from a website. It then verifies that the certificate is in its trusted root store, thus trusting the certificate was signed by a trusted certificate authority. Afterward, the client confirms that the certificate is coming from the correct web server.

When the certificate is issued, it has an expiration date; certificates must be renewed before the expiration date. Otherwise, they are not usable. Expired certificates or certificates that are misconfigured can make sites unavailable or available with errors for end users.

Misconfigured certificates include sites that have a different name from their certificate, such as a site with the URL www.example.com configured with a certificate, for example.com. The missing “www” in the certificate name would result in certificate errors for site visitors.

Consider a scenario with a certificate issue and how the CompTIA troubleshooting methodology could be applied to resolve the issue: Users report that the company website shows security errors and customers are afraid to go to the website. Some customers on Twitter are saying that the company site has been hacked.

Step 1: Identify the problem. You open the site and see that the site is displaying a certificate error.

Step 2: Establish a theory of probable causes. The certificate either is expired or has been revoked.

Step 3: Test the theory to determine the cause. View the certificate on the web server to see if it is expired. If it is not expired, check the certificate revocation list (CRL) to see if it has been revoked. In this case, the certificate expired.

Step 4: Establish a plan of action to resolve the problem and implement the solution. Discuss renewal of the certificate and receive approval to perform the renewal and a purchase order to purchase the certificate renewal. Complete the renewal of the server certificate.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. Log onto the site to confirm that certificate errors are no longer displayed.

Step 6: Document findings, actions, and outcomes. Identify all certificates in use at the company and when they expire. Discuss which ones are still required and establish a process to review certificates needed at least annually. Next, create a schedule with alerts so that certificates are renewed before they expire. Share the schedule with management so that they can budget for the certificate renewal cost.

Misconfigured or Misapplied Policies

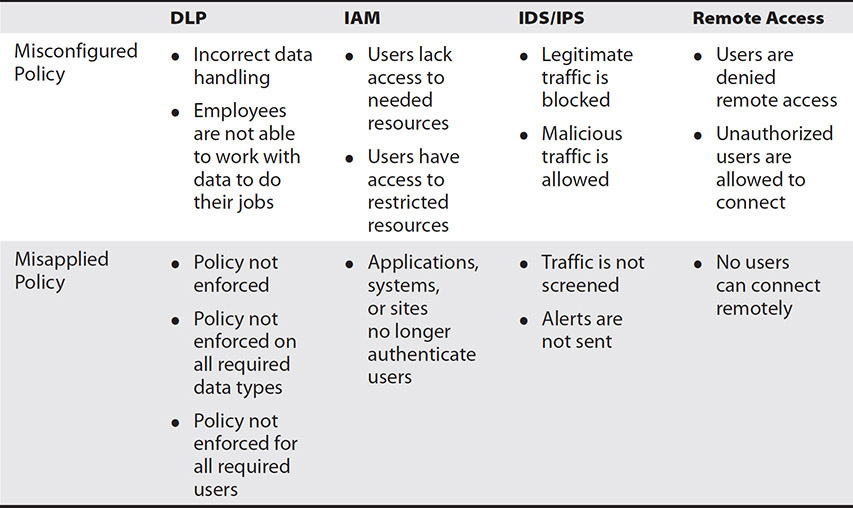

In this context, policies do not refer to documents, but to rules given to security systems. Some of these include

• DLP Data loss prevention (DLP) uses policies to define acceptable and unacceptable ways of working with data.

• IAM Identity access management (IAM) systems have policies that define how resources can be accessed.

• IDS/IPS IDS/IPS systems have policies that define the actions taken when traffic matches a signature.

• Remote access VPN and other remote access technologies, such as Microsoft’s Remote Desktop Gateway, have policies that define conditions that allow access, such as group membership, location, or time.

Table 15-4 shows each of these systems and some of the issues that could arise with misconfiguration or misapplication of policies.

Table 15-4 Misconfigured or Misapplied Policies

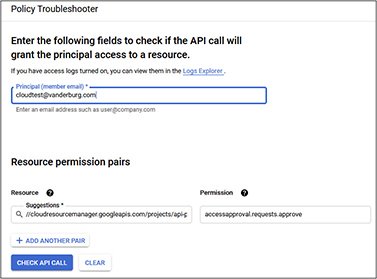

Exercise 15-1: Using the Google Cloud Policy Troubleshooter

In this exercise, we will create the Google Cloud Policy Troubleshooter to determine if an account has the required permissions for a resource under the current IAM policies. The tool will show whether the user can perform the selected action and which policies give them that right. If they do not have permission, it will state that access is denied and then specify the required role the user would need to be able to perform the action.

1. Log in to your Google Cloud Platform and then click the menu button in the upper left. Select IAM & Admin and then Policy Troubleshooter.

2. The Policy Troubleshooter screen will load. Type in a user account to test and then select the resource and the desired permission. This will test to see if the user account entered can perform that action on the resource. For this example, I have selected a sample account [email protected]. We will see if the account has the ability to approve requests through the cloud resource manager API. To do this, we entered the e-mail shown and then selected the cloud resource manager API and the accessapproval.requests.approve permission.

3. Click Check API Call. The troubleshooter will then assess whether that account has the selected access. In this example, the account does not have access. It shows that the role required for that permission is the owner role and that there is currently only one member of that role. The member has been blurred to protect privacy, but it is the value following “user:,” shown here.

If we were having difficulty with this account performing approvals using the API, one solution would be to add them to the owner’s role. However, this role likely contains permissions that the user does not need, so the best solution would be to create a new role and then assign the permissions to that role and grant the user that role.

Data Security Issues

A major component of security is protecting the data on systems from compromise, including unauthorized access, deletion, or corruption. Data security issues are those that result in some compromise of the data. Some data security issues you should be aware of are as follows:

• Unencrypted data Sensitive data transmitted or stored in an unencrypted format could potentially be exposed to unauthorized parties. For example, a backup tape containing sensitive information is lost, and the tape is not encrypted.

• Data breach When data is stolen from a company, it is known as a data breach. Data breaches require an investigation to determine the scope and impact of the breach and root causes so that those can be remediated. For example, a customer service rep opens a phishing e-mail containing a malicious attachment that provides access to an attacker. The attacker then uses the customer service rep’s credentials to retrieve the personal information on customers from the company database.

• Misclassification Data classifications are used to properly handle data. However, if the data is misclassified, incorrect handling rules and procedures will be applied to it. For example, controlled unclassified information (CUI) is received and misclassified as normal data. DLP policies based on the data classification tags are then not applied to it.

• Unencrypted protocols We have covered a wide variety of protocols used for communication over the Internet, on a local network, over VPNs, and back-end storage networks. Some of these protocols used encryption to protect the data in transit, while others were unencrypted. A data security issue could arise when an unencrypted protocol is used to send sensitive data over a public Internet link or a shared medium.

• Insecure ciphers A cipher is a mathematical formula used to convert plaintext (unencrypted) data into ciphertext (encrypted data). As ciphers age, weaknesses in their implementation are sometimes discovered that render them unsafe to use. In other cases, the power of computing renders them obsolete because faster computers may break the encryption using brute force methods.

Exposed Endpoints

Endpoints can be exposed to security threats if they are misconfigured, lack security updates, or lack essential security software such as host-based firewalls and antivirus protection. An exposed endpoint can lead to the compromise of the credentials of users on the machine or data that resides on the endpoint.

Misconfigured or Failed Security Appliances

Security appliances are hardware or virtual appliances that are used to secure the network infrastructure and systems. Misconfigured devices may not provide the level of security needed, or they could prevent legitimate services from operating correctly. This section discusses issues that could be seen with IDS/IPS, NAC, and WAF.

• IDS/IPS A misconfigured intrusion prevention system (IPS) or intrusion detection system (IDS) could result in blocked traffic or false alarms on traffic.

• NAC Misconfigured network access control (NAC) could result in machines that do not meet the NAC policy to connect, or it may block legitimate connections that do meet the NAC policy.

• WAF Web application firewalls (WAFs) screen traffic destined for web applications. A misconfigured WAF could block legitimate traffic or allow unauthorized traffic to the site. For example, suppose an administrator wants to remove encrypted HTTP access for a website used to accept customer orders. They log into the firewall and remove the access control list (ACL) that allows HTTPS traffic rather than the one for HTTP.

Deployment Issues

Application deployment issues are relatively commonplace. Most applications will be deployed without issue, but you will deploy so many apps that deployment issues will be something that you see quite often.

Missing or Incorrect Tags

Tags can be used to represent resources in the cloud. Policy rules can be defined on the tags to more efficiently manage the resources. For example, you could tag each IP that represents a web server. Then, you could deploy a new version of Apache to each system with the web server tag. However, you can run into a real mess if tags are missing or if the wrong devices are tagged.

Imagine deploying the wrong software or code to machines. You could have servers with completely different roles running software that serves no purpose. This creates an attack surface on those machines that would likely not be protected by appropriate controls. The machines that really do need the software would not have it, resulting in services that would not work properly.

Troubleshooting this would require verifying tags against system documentation to ensure that the correct machines are tagged. You would also want to check the change management system to see which changes were made recently to the tags and cross-reference this with the logs. The system might show that 192.168.1.60 was added to the web servers, but the logs show that 192.168.1.50 was added. You could then remove 50 and add 60 to correct the problem.

Application Container Issues

When deploying containers, there are a wide range of issues you might encounter. One issue you might face is incompatible host and container images. The base image version of a container must match the OS of the system you are deploying it to. If the versions do not match, the deployment will fail or will not operate correctly. Failed deployments will have the following error code: 0xc0370101.

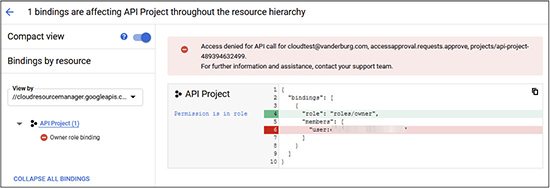

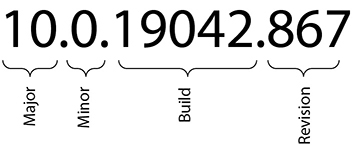

Host and container image versions must match because the host and the container share a single kernel. On Windows, the versions must match at the build level. You can check the version of the container and the host to compare. You can query the version of a system with the ver command at the command prompt, as shown in Figure 15-6.

Figure 15-6 Querying the Windows version

Windows build numbers are divided into four sections, as shown in Figure 15-7. The first three sections must match. These are the major, minor, and build numbers. The revision numbers can differ.

Figure 15-7 Windows version breakdown

Another issue you might see is attempting to deploy a container from a missing or mistyped image. If you encounter this, verify that the specified image exists at the location specified and that you typed the name correctly.

You will need to have enough resources available to deploy the image. If you lack sufficient CPU or memory, the container deployment will fail. If you encounter errors related to resource availability, try one of the following:

• Deploy the container to a different host

• Reduce the CPU or memory specified for the container

• Reduce the CPU or memory of other containers on the host

• Migrate other containers to a different host

Incompatible or Missing Dependencies

Missing or incompatible dependencies can make an application fail to install or not work correctly. They can also cause software upgrades to fail. For example, you may want to upgrade the version of WordPress running on your hosted site, but the upgrade fails because it requires an upgrade to PHP and MySQL first.

When deploying a web application, ensure that programming libraries are installed first. Windows applications written in a .NET programming language such as C# will require a particular version of .NET on the machine. Other applications may require PHP or Java to be installed. Read through deployment documentation carefully to ensure that you meet all the requirements. Of course, you will also need Internet Information Services (IIS) and any other operating system roles and features. Ensure that all this is in place before application installation.

The Java Runtime Environment (JRE) can be particularly troublesome when running multiple Java-based applications on the same machine because they might not all support the same version. For example, three applications are installed on the server, and you upgrade the first one. You read through the documentation before upgrading and find that you need to update the Java version first. The Java upgrade completes successfully, and then you deploy the new version of the application. Testing confirms that the new app works fine, but a short time later, users report that the other two applications are no longer working. Upon troubleshooting, you find that they do not support the new version of Java that was deployed.

The most straightforward fix to this issue is to deploy dedicated VMs for each application. You can also use application containers to host each application so that dependencies can be handled individually for each container. Containers are more lightweight, quicker to deploy (less disk space, since OS is generally not in the container), and start up much more quickly than VMs.

Now that you understand the potential problem, let’s try a scenario: You have been asked to set up a new website for your company. You purchase a hosted cloud solution and create a host record in your company’s hosted DNS server to point to the hosted cloud server’s IP address. You test the URL and see the default setup page. You then use the cloud marketplace to install some website applications and themes. However, when you navigate to your website, you now receive the following error message:

Warning: Creating default object from empty value in customizer.php

Step 1: Identify the problem. New applications and themes were installed since the site last came up correctly, so the error is most likely related to the latest software and themes.

Step 2: Establish a theory of probable causes. You research the error online and see issues relating to missing PHP files. You theorize that PHP is installed incorrectly or that the PHP dependency is missing.

Step 3: Test the theory to determine the cause. To test these theories, you can reinstall PHP on the server or install it if it is missing. You first identify the required level of PHP from the software that you installed earlier. Then you log onto the cloud server and check the PHP version. You find that PHP is not installed, so it seems like installing the required PHP version will solve the problem.

Step 4: Establish a plan of action to resolve the problem and implement the solution. You log into the cloud management portal and go to the marketplace. After locating the PHP version required by the software, you review the release notes to determine if it is compatible with your other software and system. You find that your cloud vendor maintains a database of compatible applications and software, and it has already queried your systems and noted that this version of PHP is compatible with your cloud installation.

You place a change request to install PHP and include relevant documentation on why the software is needed. Once the change request is approved, you proceed to install the software from the marketplace and then verify that the software installs correctly.

Note that because this is a new installation, no users are accessing the site. If this were a production site, installing a significant dependency like PHP would take the site down, so you would need to perform the install in a downtime.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. You open a web browser, navigate to the company website URL, and verify that you can access the site. The installation of the PHP dependency solved the problem. Additionally, you find that you can enable the system to automatically install dependencies in the future so that you can avoid such a situation. You create another change request to enable this feature and wait for the approval. Once approval is provided, you enable the feature.

Step 6: Document findings, actions, and outcomes. You update both change request tickets to indicate that the work was completed successfully and that no other changes were required. Additionally, you send a memo to the other team members noting the issue and what was done to resolve it and that dependencies will be installed automatically moving forward.

Integration Issues with Different Cloud Platforms

Cloud applications typically do not reside on their own. They are often integrated with other cloud systems with APIs. A vendor will create an API for its application and then release documentation so that developers and integrators know how to utilize that API. For example, Office 365, a cloud-based productivity suite that includes an e-mail application, has an API for importing and exporting contacts. Salesforce, a cloud-based customer relationship management (CRM) application, could integrate with Office 365 through that API so that contacts could be updated based on interactions in the CRM tool. However, APIs must be implemented correctly, or the integration will not work.

Let’s try the CompTIA troubleshooting methodology with a scenario: You receive an e-mail from Microsoft informing you of a new API that works with Salesforce. You log into Salesforce and configure Salesforce to talk to Office 365. You educate users on the new integration and that contacts created in Salesforce will be added to Office 365 and that tasks in Salesforce will be synchronized with Office 365 tasks. However, a user reports that their contacts are not being updated and that tasks are not being created. You also find when opening your tasks that there are hundreds of new tasks that should belong to other users.

For this scenario, consider the troubleshooting methodology and walk through it on your own. Think through each step. You may need to make some assumptions as you move through the process since this is a sample scenario.

Step 1. Identify the problem.

Step 2. Establish a theory of probable causes.

Step 3. Test the theory to determine the cause.

Step 4. Establish a plan of action to resolve the problem and implement the solution.

Step 5. Verify full system functionality and, if applicable, implement preventative measures.

Step 6. Document findings, actions, and outcomes.

Script Misconfiguration

Deployment scripts need to be absolutely correct, or they will deploy the wrong thing or fail altogether. Scripts can be developed for a wide variety of applications, but it is a best practice to build in checks so that changes can be validated as the script executes. If a step in the process fails, the script should be able to roll back the changes and send notifications to appropriate personnel to investigate. Developing scripts like this takes much more time, but it can help avoid many headaches in the future and provide additional details when troubleshooting. Ensure the following to help avoid or prevent script misconfiguration issues:

• Referencing the right objects Ensure that object names are correctly spelled and that they exist.

• Using accounts with the necessary privileges The script will need to use accounts that have sufficient privileges to perform the operation.

• Referencing the right resources Ensure that the resource names are correctly spelled, that they exist, and that there are sufficient resources for the operation. Some resources include storage locations, network security groups, or virtual networks.

• Correct networking Ensure that the script configures the correct networking settings for the desired deployment location. This is easier if you are using DHCP, but in many cases, servers will not use DHCP so you will need to ensure the IP address, subnet mask, and default gateway work on the deployment network.

• Deploying resources in the correct order Ensure that you create each resource so that dependencies are met.

If your script fails, check your logs to see which step in the process failed. For example, you may find that a step for creating the database fails, so you would then check the logs on the database. If those logs show that the database drive is full, you could correct that issue and then try the script again.

Consider a scenario where you attempt to run a deployment script but encounter an AuthorizationFailed error. For this scenario, use the troubleshooting methodology and walk through it on your own. Think through each step. You may need to make some assumptions as you move through the process since this is a sample scenario.

Step 1. Identify the problem.

Step 2. Establish a theory of probable causes.

Step 3. Test the theory to determine the cause.

Step 4. Establish a plan of action to resolve the problem and implement the solution.

Step 5. Verify full system functionality and, if applicable, implement preventative measures.

Step 6. Document findings, actions, and outcomes.

Incorrect Configuration

Computer programs need to be configured perfectly for them to run. There is really no margin for error. An extra character in a UNC path or a mistyped password is all that is required for the program to crash and burn. It is important to double-check all configuration values to ensure that they are correct. If you run into issues, go back to the configuration and recheck it, maybe with another person who can offer some objectivity. Compare configuration values to software documentation and ensure that the required services on each server supporting the system are running.

Let’s look at this in a scenario and consider how the CompTIA troubleshooting methodology would help in solving a configuration issue: Your company is consolidating servers from two cloud environments into one for easier manageability. The transition team is responsible for moving the servers and the shares. The transition team successfully moves the servers to the new location and consolidates the shares onto a single server. A web application retrieves files from one of the shares, but users of the site report that they can no longer access files within the system. You are part of the troubleshooting team, and you are assigned the trouble ticket.

Step 1: Identify the problem. The problem is that users cannot access files in the application. You send a message to the user base informing them of the problem and that you are actively working to resolve it.

Step 2: Establish a theory of probable causes. Several changes were made when the servers were moved over from one cloud to another. The servers were exported into files and then imported into the new system. Each server was tested, and they worked following the migration. You check the testing notes and verify that the website was working correctly following the migration. The shares were consolidated after that. However, you do not see testing validation following the share consolidation. It is possible that the application is pointing to a share that no longer exists.

Step 3: Test the theory to determine the cause. You log into the server hosting the application and review the configuration. The configuration for the files points to a UNC path. You attempt to contact the UNC path but receive an error. You then message the transition team, asking them if the UNC referenced in the application still exists or if it changed. They send you a message stating that the UNC path has changed, and they provide you with the new path.

Step 4: Establish a plan of action to resolve the problem and implement the solution. You plan to change the application configuration to point to the new path. You put in a change request to modify the application configuration, and the change request is approved. You then adjust the application settings, replacing the old UNC path with the new one.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. You log into the site and verify that files are accessible through the application. You then reach out to several users and request they test as well. Each user reports that they can access the files successfully. Finally, you message the users and let them know that the issue has been resolved.

Step 6: Document findings, actions, and outcomes. You update the change request ticket to indicate that the work was completed successfully and that no other changes were required. Additionally, you send a memo to the transition team members noting the issue and what was done to resolve it. Management then creates a checklist for application transitions that includes a line item for updating the UNC path in the application if the back-end share path changes.

Template Misconfiguration

When an organization is migrating its environment to the cloud, it requires a standardized installation policy or profile for its virtual servers. The VMs need to have a similar base installation of the operating system, so all the devices have the same security patches, service packs, and base applications installed.

VM templates provide a streamlined approach to deploying a fully configured base server image or even an entirely configured application server. VM templates help decrease the installation and configuration costs when deploying VMs and lower ongoing maintenance costs, allowing for faster deploy times and lower operational costs. However, incorrectly configuring templates can result in a large number of computers that all have the same flaw.

Now that you understand the potential problems, let’s try a scenario: Karen is creating VM templates for common server roles, including a web server with network load balancing (NLB), a database server, an application server, and a terminal server. Each server will be running Windows Server 2016 Standard. She installs the operating system on a VM, assigns the machine a license key, and then installs updates to the device in offline mode.

Karen applies the standard security configuration to the machine, including locking down the local administrator account, adding local certificates to the trusted store, and configuring default firewall rules for remote administration. She then shuts down the VM and makes three copies of it using built-in tools in her cloud portal. She renames the machines and starts each up.

She then installs the server roles for web services and NLB on the web server, SQL Server 2016 on the database server along with Microsoft Message Queuing (MSMQ), SharePoint on the application server, and Remote Desktop Session Host services on the terminal server. She applies application updates to each machine and then saves the virtual hard disks to be used as a template.

A month later, Karen is asked to set up an environment consisting of a database server and a web server. She uses the built-in tools in her cloud portal to make copies of her database and web server templates. She gives the new machines new names and starts them up. She then assigns IP addresses to them. Both are joined to the company domain under their assigned names. However, server administrators report that the servers are receiving a large number of authentication errors.

Step 1: Identify the problem. The servers are receiving a large number of authentication errors.

Step 2: Establish a theory of probable causes. Karen theorizes that the authentication errors could be caused by incorrect licensing on the machines or by duplicate security identifiers.

Step 3: Test the theory to determine the cause. Karen issues unique license keys to both machines and activates them. However, the authentication errors still continue. She then clones another web server and runs Sysprep on it. She adds it to the domain and observes its behavior. The new machine does not exhibit the authentication errors.

Step 4: Establish a plan of action to resolve the problem and implement the solution. Karen proposes to remove faulty machines from the domain, run Sysprep on the defective machines to regenerate their security identifiers, and then add them back in. She puts change requests in for each activity and waits for approval. Upon receiving authorization, Karen implements the proposed changes.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. Server administrators confirm that the authentication errors have ceased after the changes were made.

Step 6: Document findings, actions, and outcomes. Karen updates the change management requests and creates a process document outlining how to create templates with the Sysprep step included.

CSP/ISP Outage

An outage with a cloud service provider (CSP) or an Internet service provider (ISP) may result in some of your systems being unavailable to users. Check the website of the CSP or ISP to see if they are experiencing an outage. Sometimes downed limbs in bad weather or construction mistakes can break buried or hanging network cabling. Similarly, power outages at your ISP could prevent them from operating. If you do not see a notice, create a trouble ticket with them, describing the outage and any other details you have, such as subscriber ID or site ID.

If you have a business continuity plan, this is the time to enact it. You may need to fail resources over to a different region or availability zone. If you have redundant services running on multiple clouds, switch to an alternative cloud to continue service. Internal users may need to use another method for accessing the Internet, such as hotspots. Enact these measures while staying in contact with the CSP or ISP so that you can switch back to normal operations once service has been restored.

Vendor Issues

You could have issues that arise from vendor-supported systems. Not all issues are under your control. The issues could be related to a configuration you have applied, or they could be the result of some issue on the provider’s systems. If so, you will need to be able to accurately describe the issues to the vendor so that they can fix them. It is also helpful to have documentation on what responses your application expects from standard input. Companies will routinely provide testing teams with sheets that show what results should be expected from test searches or transactions involving specific items or users. You may have automated this testing process. If so, check your workflow to identify which parts are failing to provide this information to the vendor.

Some vendor issues you might experience include vendor or platform misconfiguration, integration issues, API request limits, or cost or billing issues.

Vendor or Platform Misconfiguration Vendor or platform misconfigurations can be a big headache for teams deploying new systems or software. If you experience this, start by analyzing log files and monitoring systems to determine where the problem lies. If you suspect that the configuration may be at fault, go back to vendor documentation to verify that you have the system configured properly. Vendors often supply best practice guides for different implementation types, so consider these when deploying and troubleshooting systems. If you do not find your solution on the documentation, consider the following additional resources:

• Reviewing the vendor’s FAQ.

• Checking forums on the vendor or platform. Vendors sometimes have their own official forums, or you can seek out forums where other professionals discuss their systems, problems, and resolutions.

• Create a trouble ticket.

Vendor or Platform Integration Issues Cloud services increasingly are part of a much larger enterprise ecosystem, consisting of multiple clouds and on-premise equipment. This requires a high degree of interoperability between the systems. An upgrade of one cloud or component can sometimes break the integration between these systems.

Stay on top of release notes and updates from vendors on the changes they are making and those they plan to make so that you can ensure that updates are made to other systems that integrate with them. It is also a good idea to try to stick to vendor best practices when configuring the integration. If the vendor has gone through the process of developing a best practice document for the solution, they are invested enough to keep you informed on how those best practices change with updates and additions to their service offering. It can also be much easier to troubleshoot down the road.

Ensure that you are using the same standard on both ends of a connection between clouds. CSPs often support many protocols for communication, but you will need to choose the same one on both ends. Also, make sure that you keep keys and secrets up to date. Expired keys or secrets will not work for encrypting the communication between systems, so those communication sessions will fail. Effective secret management can help keep these up to date with no or minimal effort on your part.

API Request Limits Your cloud provider may set limits on the number of application programming interface (API) requests that will be serviced over time, such as 100 per second or 10 million per month. Requests that exceed these limits will not be processed.

If you are experiencing an API issue, a reasonable place to look is at the request capacity to see if you have hit the limit. You should increase the limit if this is something that you expect to happen again. Alternatively, you could balance requests across multiple APIs. It is a good practice to monitor API usage and capacity to avoid these issues before they happen.

Cost or Billing Issues It is an unfortunate fact that you can have a fine-running solution come to a crashing halt with something as simple as an expired credit card. Make sure you keep billing information up to date in cloud systems so that you can avoid such issues. CSPs often process payments shortly before the renewal date and will notify you of issues or retry payment methods. However, if you do not address the issue before the renewal period, these services may become unavailable.

System Clock Differences

Networked computer systems rely on time synchronization to communicate. When computers have different times, some may not be able to authenticate to network resources, they may not trust one another, or they may reject data sent to them.

Using the CompTIA troubleshooting methodology, let’s consider a scenario: Eddie is a cloud administrator managing over 40 servers in a hosted cloud. His monitoring system frequently sends out alerts that servers are unavailable. He restarts the machines, and the problem goes away, but the problem comes back a few days later. He scripts restarts for each of the servers but realizes that this is a short-term fix at best.

Step 1: Identify the problem. Servers lose connectivity periodically.

Step 2: Establish a theory of probable causes. Eddie theorizes that there could be connectivity issues on the cloud backend. There also could be an issue with the template that each of the machines was produced from. Lastly, the machines could be losing time synchronization.

Step 3: Test the theory to determine the cause. Eddie creates a support ticket with the cloud provider and provides the necessary details. The cloud provider runs several tests and reports no issues. Eddie creates another machine from the template and finds that it also exhibits the same problems. However, he is not sure where the problem might lie in the template. Lastly, he configures a scheduled job to run three times a day that sends him the system time for each of the servers.

As he reviews the output from the scheduled job, it becomes clear that the domain controller is getting out of sync with most of the network every few hours. Upon analyzing the configuration of the servers that go out of sync and the others, he finds that some are configured to obtain their time from the cloud provider NTP server, while others are set to obtain their time from a different server.

Step 4: Establish a plan of action to resolve the problem and implement the solution. Eddie proposes to set all servers to the same time server. He creates a change request documenting the proposed change and receives approval to move forward with the change during a scheduled downtime. He makes the change.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. Eddie monitors the output from the scheduled task and confirms that each server remains in sync.

Step 6: Document findings, actions, and outcomes. Eddie documents the NTP server settings on a standard setup configuration document. He mentions the issue in a standup IT meeting the following Friday, and the document is circulated around and placed on the company intranet for reference.

Connectivity Issues

Connectivity issues can create a broad range of problems, since most systems do not operate in isolation. There is a myriad of interdependencies on the modern issues of networking, and connectivity can be a digital monkey wrench that breaks a plethora of systems.

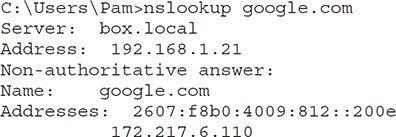

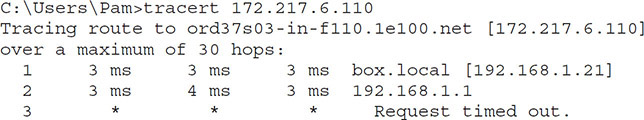

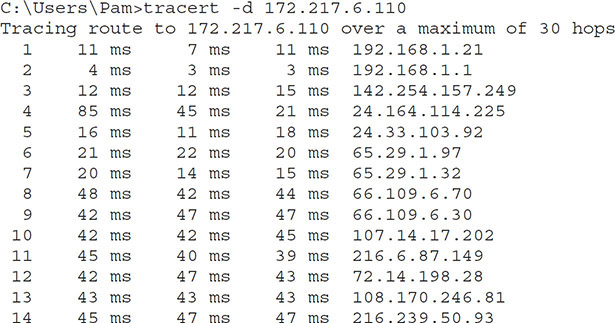

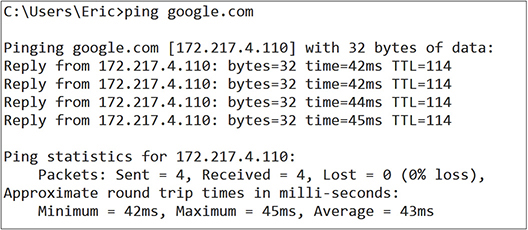

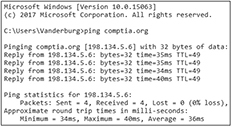

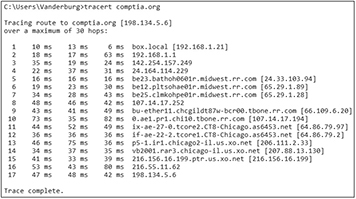

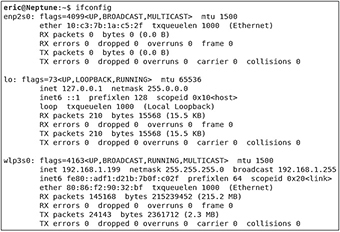

The first indicator that there is a connectivity problem will be the scope of the issue. Because everything is connected, connectivity issues usually affect a large number of devices. Ensure that affected devices have an IP address using the ipconfig (Windows) or ifconfig (Linux) command described later in this chapter. Suppose they do not have an IP address. In that case, it could be a problem with DHCP or with DHCP forwarders or cloud-based virtual network IP address ranges, firewall ACLs, and routing tables.

For example, the DHCP scope could be full, so the administrator might need to expand the scope or reduce the lease interval so that computers do not keep their addresses for as much time. A user may reside in a different subnet from the DHCP server, and no forwarder exists on the subnet to direct DHCP requests to the DHCP server. The connection may be on a different cloud-based virtual network IP address range from the servers it wishes to contact, and there are no rules defined to allow traffic between these ranges. There could be firewall ACLs that need to be defined to allow traffic between two nodes that are not communicating. Lastly, the default gateway or VPN concentrator, if the issue is with a VPN connection, may not have the correct information on the destination network in its routing table.

When identifying the problem, determine the scope by using the ping command described earlier in this chapter. Ping devices on the network starting with your default gateway. If the default gateway pings, try another hop closer to the Internet or to where others are experiencing issues. Try to connect to other devices that report problems as well. If the default gateway will not ping, attempt to ping something else on the same network. If neither will ping, it is likely an issue with the switch that connects both devices. If you can ping the other machine but not the gateway, it might be a problem with the gateway.

Connectivity issues could be part of the CSP’s responsibility, but determining whether it is the CSP’s responsibility or yours requires that you accurately determine the source of the connectivity issue.

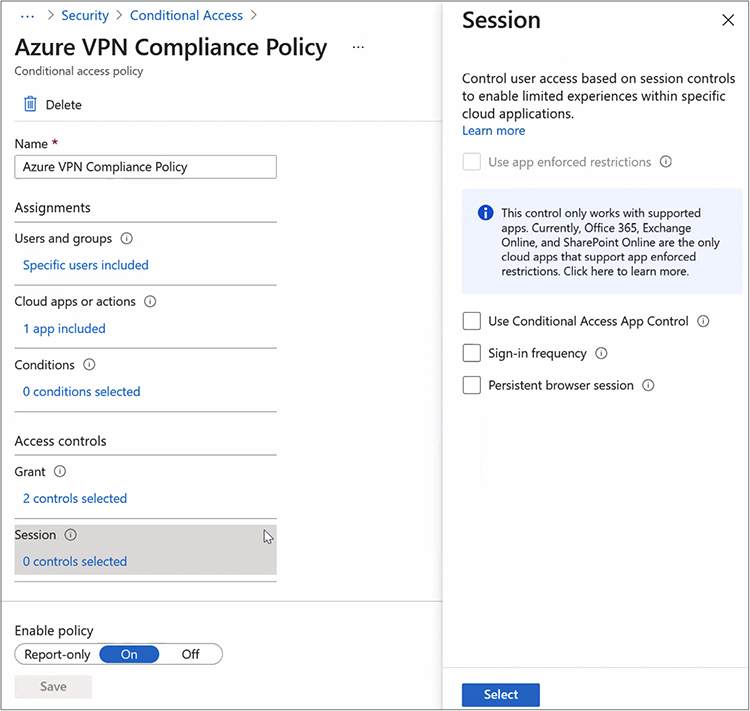

Network Security Group Misconfiguration

Users may not be able to connect to resources if they lack the required membership in security groups. For example, suppose users cannot connect to the Azure VPN. You could check the groups the user belongs to and then check the settings in Azure to see if those groups are allowed access. Figure 15-8 shows the Azure VPN compliance policy.

Figure 15-8 Azure VPN compliance policy

ACL Firewalls screen traffic using ACLs. A misconfigured ACL could create a connectivity issue by blocking legitimate traffic. For example, suppose an administrator sees a large number of new connections coming from an IP address, so they block that address. Later, in an IT staff meeting, they find that the DevOps team deployed a new application that started working and then failed for some reason. Upon investigation, the administrator realizes that the IP they blocked was the new application.

Inheritance Inheritance issues can prevent connectivity to files and folders. Inheritance is when the permissions of a higher-level folder are applied to subfolders or files. Permissions on subfolders are inherited by default until different permissions are assigned to those subfolders. However, the subfolders can be changed to inherit permissions again of higher-level folders and overwrite permissions of subfolders.

Sometimes changes to higher-level folders on a file system will be propagated down to subfolders, overwriting more granular permissions. For example, you might have a marketing folder that is only available to the marketing team under a general shared folder. If someone changes the permissions on the general shared folder and forces those permissions to be applied to all subfolders and files, the custom permissions for the marketing folder would be lost.

Network Configuration Issues

Network configuration issues may cause connectivity loss, slowness, or excessive latency in applications. Some network configuration issues to be aware of include

• Peering Incorrect peering will result in a loss of communication between VPCs.

• Incorrect subnet An incorrect subnet will either result in communication over the wrong network if the nearest routing device has connectivity to that subnet or a lack of communication entirely if there is no connectivity to that subnet.

• Incorrect IP address You may accidentally assign a duplicate IP address where either the newly configured machine or an existing machine will lose connectivity. You might also assign an IP address that does not have the correct mappings. For example, firewall rules or other ACLs may be defined for a specific IP address or IP address range. If you assign a different one, these rules will not be triggered for traffic going to and from that system. The same case would occur if tagging has been applied for one IP address, but you assigned a different one.

• Incorrect IP space You may be managing multiple IP address spaces for different sites and subnets. Some administrators use systems like IP Address Management (IPAM) to help manage these IP address spaces. If you assign an IP address for the wrong IP address space, it will not communicate with its network peers.

• Incorrect routing The most common routing mistake is to assign the wrong default gateway. This will result in the computer being able to communicate with its local peers, but not with any external systems. Another issue is the misconfiguration of routing information on routers or firewalls. This will result in packets not being delivered to certain networks.

Firewall Issues

Some firewall issues to be aware of include

• Incorrectly administered micro-segmentation Micro-segmentation isolates application communication by implementing specific rules governing application traffic. Incorrect micro-segmentation configurations can result in a loss of connectivity to the application or to portions of the application. Review the Syslog for blocked connections, or review the application log on the application server for failed connections to troubleshoot or identify such issues.

• NAT issues Network address translation (NAT) allows one or more public IP addresses to be used to service internal addresses. NAT issues could prevent connectivity between internal systems and the Internet. Test NAT for the internal IP address scope and test for source or destination connectivity issues by verifying communication from the outside in and from the inside out. The firewall should show a hit on the NAT rule when connecting. You may need to enable enhanced logging on the NAT rule in testing to ensure that you get this information.

• VPN Issues with a virtual private network (VPN) can result in a loss of connectivity between cloud sites or between users and a cloud. Review the VPN log or console to identify VPN issues. Verify that users or sites have the correct VPN configuration. Ensure also that secrets or keys are updated on both ends.

Load Balancer Issues

Load balancer issues could be the result of misconfiguration of one of the following components:

• Methods Load balancers use a variety of methods to perform their balancing. Some methods differ based on the way they distribute traffic, and others differ based on the services they can offer. Some methods include DNS, database, round robin, SIP, and link load balancing. You will want to pair the method you use with the resources you are load balancing. For example, round robin is often used for load balancing connections between web servers. SIP load balancing would most likely be used for messaging services, and link load balancing would be used for optimizing traffic between multiple ISP links. Database load balancing would be used for distributing traffic across nodes in a database cluster.

• Headers The IP address headers are changed when packets are processed by the load balancers and then sent on to their destination system. Load balancing modifies the X-Forwarded-For, X-Forwarded-Proto, and X-Forwarded-Port portion of the header. Ensure that your end systems are configured to work with this data.

• Protocols There is a wide range of load balancing protocols. Some protocols include Direct Routing (DR), NAT-based, layer 4 tunneling, Source Network Address Translation (SNAT), or HAProxy. You may experience issues with an application if the wrong load balancing protocol is used. For example, simple DR load balancing would not allow for cookie persistence over HTTPS, so an application relying on cookies would not function properly.

• Encryption If you are using encryption, such as SSL/TLS, ensure that load balancers will be able to process this traffic. Load balancing will fail if the load balancers are not configured to handle the decryption of such traffic. Load balancers will need to be able to decrypt the data to properly direct it. They can then be configured to re-encrypt the data on its way to the destination or send data to the back-end systems unencrypted.

• Backends The load balancers need to be configured to send traffic to a set of systems that will receive and process the information. If this is not configured, the load balancer will not be able to properly direct traffic.

• Frontends Firewalls or WAFs will need to be configured to send traffic to the load balancers, not the end machines. If this is not configured, traffic will not be delivered to the load balancers. Similarly, the load balancers will need to be configured to accept the desired traffic. Otherwise, they will reject the traffic.

DNS Record Issues

Domain Name System (DNS) is used to translate the name of a system to its IP address so that devices can communicate with one another. As IP addresses change, DNS will need to be updated to reflect that. Some systems are configured to automatically update DNS entries when DHCP leases change. Others require manual updates. Also, you may advertise some services through DNS, and these will need to be kept up to date if you make changes to the IP addresses of those services.

Another issue you could face is the resolution of stale entries. Stale entries are records for systems that no longer exist. Automated processes can be enabled to remove stale entries from DNS to avoid this issue.

VLAN or VXLAN Misconfiguration

VLANs and VXLANs were discussed back in Chapter 4. Both the VLAN and VXLAN partition a network to create logical separation between subnetworks. Connectivity problems can appear when VLANs or VXLANs are misconfigured. For example, machines must be on the same VLAN or have inter-VLAN routing configured for the two machines to communicate. It is common to configure virtual networks with specific VLANs or to add VLAN tagging to virtual networks. Incorrectly setting these values could allow devices to talk to machines they are not supposed to talk to, and they would be unable to communicate with others. Subnets are usually assigned per VLAN, so if the IP address is configured manually for one subnet on the machine and it is placed on the wrong VLAN, it will not be able to communicate with any of its neighbors.

Let’s demonstrate the CompTIA troubleshooting methodology with a scenario: Geoff configures three VLANs named VLAN1, VLAN2, and VLAN3. He has four servers that are running on a virtual network, and he plans on cloning those servers several times and then assigning the servers to each of the VLANs for use. He performs the clones and then assigns the machines to the appropriate VLANs but finds that they are unable to communicate with one another.

Step 1: Identify the problem. The cloned servers cannot communicate with each other.

Step 2: Establish a theory of probable causes. Geoff determines that the VLANs could be misconfigured, the tagging could be incorrectly set, the virtual switches could be misconfigured, or the IP addresses could be incorrectly assigned.

Step 3: Test the theory to determine the cause. Geoff tries to ping a single server called VM-DC1 from each of the other machines. None of the computers can communicate with the server. Geoff then creates a testing strategy where he will rotate VLANs and test. He explains the strategy to his manager and receives approval to proceed. Geoff then rotates the VLAN that is assigned to VM_DC1 and tries the tests again. He is unable to connect to the machine on any of the three VLANs. Geoff then removes VLAN tagging from the virtual switch configuration on VM_DC1 and receives an IP address conflict on the main VM_DC1 computer. Geoff suddenly realizes that the IP addresses are hard-coded into each of the machines and that they do not correspond to their assigned VLAN.

Step 4: Establish a plan of action to resolve the problem and implement the solution. Geoff documents IP addresses to assign to each of the machines in each VLAN. He then creates a change request to modify the IP addresses for each of the computers and explains why the change needs to be made. Once approval is given, Geoff modifies the IP addresses on each machine as planned.

Step 5: Verify full system functionality and, if applicable, implement preventative measures. Geoff verifies that each machine can talk to other devices on the same VLAN and that computers cannot talk to those on other VLANs.