![]()

Plan Stability

One of the most frustrating things about Oracle’s Cost-Based Optimizer (CBO) is its tendency to change plans for statements at seemingly random intervals. Of course, these changes are not random at all. But, because the optimizer code is so complex, it’s often difficult to determine why a plan changes. Oracle recognized this issue years ago and has been working to improve the situation for at least a decade. It has provided many tools for identifying when plans change and why they change. Oracle has also provided numerous tools that allow you to exert varying degrees of control over the execution plans the optimizer chooses, but let’s save that discussion for the next chapter.

This chapter’s focus is plan instability, and it is concerned with issues that cause you not to experience the stability you expect. You’ll discover how to identify when and why plans changed, and how to locate plan changes that create a significant performance impact, and gain some insight into common causes of plan instability issues. I use a number of scripts in this chapter, but for readability purposes, in most cases, I do not show the source of these scripts in the listings. The scripts can be found in the example download for this book.

Plan Instability: Understanding the Problem

Oracle’s CBO is an extremely complex piece of software. Basically, its job is to work out the fastest way to retrieve a given set of data as specified by a SQL statement. Generally speaking, it must do this in an extremely short period of time using precalculated statistical information about the objects involved (tables, indexes, partitions, and so forth). The optimizer usually doesn’t have the time to verify any of the information. The tight time constraints are imposed because parsing is a serialized operation. Therefore, the database needs to retrieve the data as quickly as possible and as infrequently as possible; otherwise, parsing becomes a severe bottleneck to scalability. I should note here that my comments are aimed at what I would typically call an OLTP-type environment—an environment with many users executing lots of relatively quick SQL statements. Of course, in environments with relatively few but long-running statements, it’s much more important to get the correct plan than to get a decent plan quickly. These types of systems, though, don’t suffer from plan stability issues nearly as often (in part because they tend to use literals as opposed to bind variables, but I talk about that more later).

So why do plans change? Well there are three main inputs to the CBO:

- Statistics: associated with the objects that are referenced by the SQL statement

- Environment: optimizer-related parameter settings, for example

- SQL: the statement itself (including bind variable usage)

So, unless one of these three things changes, the plan should not change. Period. I believe frustration with plan instability arises primarily from the belief that “nothing has changed,” when in fact something has changed. I can’t even count the number of times I have heard that phrase. The story usually goes something like this:

- Them: Everything was working fine and then, all of a sudden, the system just started crawling.

- Me: When did this happen?

- Them: 12:00 noon on Thursday

- Me: What changed around that time?

- Them: Nothing changed!

Of course, they are not intentionally lying to me. What they really mean is, “Nothing has changed that I think could have anything to do with this particular issue.” But, regardless of whether someone thinks an event is relevant, or if he or she even knows about it, there was a change that precipitated the issue.

So, the first thing I want you to get out of this chapter is that performance doesn’t just magically get worse (or better). If a SQL statement gets a new plan, there is a reason. Something changed!

Let’s take a brief look at the possibilities for why a plan can change.

Changes to Statistics

Changes to statistics is a rather obvious place to look for changes that can cause new plans to be generated. Object-level statistics are gathered frequently on most systems. By default, Oracle versions 10g and above have a job that runs on a nightly basis to calculate new statistics. If these jobs are running on your system, it means that, every day, you have an opportunity to get a new plan. Although a thorough discussion of statistics gathering is outside the scope of this chapter, be aware of the mechanisms in play in your environment. Also know that you can check quickly to determine when stats were last gathered on an object, and you can restore a previous version of an object’s statistics in a matter of seconds. Last, be aware that, by default, the standard stats-gathering jobs in 10g and above allow statements to stay in the shared pool for some period of time after new stats have been gathered. This feature is called rolling invalidation. By default, the dbms_stats procedures set the no_invalidate parameter to dbms_stats.auto_invalidate. This means that cursors are not invalidated automatically when statistics are gathered. Existing cursors are invalidated at some random time during the next five hours. This is a feature designed to prevent parsing storms, which can occur if all statements referencing a specific object are invalidated at the same time. In general, this feature is a good thing, but be aware that a plan change can be the result of a statistics change, even though the statistics change occurred several hours before the new plan showed up. Listing 16-1 presents an example of checking the last statistics-gathering event for a table and for restoring a previous version (all scripts are in the example download for the book).

Listing 16-1. Table Statistics Setting and Restoring

SQL> exec dbms_stats.set_table_stats( -

ownname => user, tabname => 'SKEW', -

numrows => 1234, numblks => 12, -

avgrlen => 123, no_invalidate => false);

PL/SQL procedure successfully completed.

SQL> @set_col_stats

Enter value for owner: KRM

Enter value for table_name: SKEW

Enter value for col_name: PK_COL

Enter value for ndv: 1234

Enter value for density: 1/1234

Enter value for nullcnt: 0

PL/SQL procedure successfully completed.

SQL> @dba_tables

Enter value for owner: KRM

Enter value for table_name: SKEW

OWNER TABLE_NAME STATUS LAST_ANAL NUM_ROWS BLOCKS

---------- ------------------------- -------- --------- ---------- ----------

KRM SKEW VALID 12-AUG-13 1234 12

SQL> @col_stats

Enter value for owner: KRM

Enter value for table_name: SKEW

Enter value for column_name:

COLUMN_NM DATA_TYPE DENSITY NDV HISTOGRAM BKTS LAST_ANAL

---------- ---------- ---------- ---------- ---------- ---- ---------

PK_COL NUMBER .000810373 1,234 NONE 1 12-AUG-13

COL1 NUMBER .000002568 902,848 HEIGHT BAL 75 02-AUG-13

COL2 VARCHAR2 .500000000 2 NONE 1 03-AUG-13

COL3 DATE .000002581 1,000,512 HEIGHT BAL 75 02-AUG-13

COL4 VARCHAR2 .000000016 3 FREQUENCY 2 02-AUG-13

SQL> @tab_stats_history

Enter value for owner: KRM

Enter value for table_name: SKEW

OWNER TABLE_NAME STATS_UPDATE_TIME

------- ------------ -----------------------------------

KRM SKEW 31-JUL-13 09.06.42.785067 PM -05:00

KRM SKEW 02-AUG-13 07.14.04.486871 PM -05:00

KRM SKEW 02-AUG-13 09.29.48.761056 PM -05:00

KRM SKEW 02-AUG-13 09.31.11.788522 PM -05:00

KRM SKEW 02-AUG-13 09.38.00.524266 PM -05:00

KRM SKEW 12-AUG-13 08.27.17.497396 PM -05:00

6 rows selected.

SQL> @restore_table_stats.sql

Note: No_Invalidate=false - means invalidate all cursors now (stupid triple negatives)

Enter value for owner: KRM

Enter value for table_name: SKEW

Enter value for as_of_date: 03-aug-13

Enter value for no_invalidate: false

PL/SQL procedure successfully completed.

SQL> @dba_tables

Enter value for owner: KRM

Enter value for table_name: SKEW

OWNER TABLE_NAME STATUS LAST_ANAL NUM_ROWS BLOCKS

---------- ------------------------- -------- --------- ---------- ----------

KRM SKEW VALID 02-AUG-13 32000004 162294

SQL> @col_stats

Enter value for owner: KRM

Enter value for table_name: SKEW

Enter value for column_name:

COLUMN_NM DATA_TYPE DENSITY NDV HISTOGRAM BKTS LAST_ANAL

---------- ---------- ----------- ----------- ---------- ---- ---------

PK_COL NUMBER .000000032 32,000,004 HEIGHT BAL 75 02-AUG-13

COL1 NUMBER .000002568 902,848 HEIGHT BAL 75 02-AUG-13

COL2 VARCHAR2 .000000016 2 FREQUENCY 1 02-AUG-13

COL3 DATE .000002581 1,000,512 HEIGHT BAL 75 02-AUG-13

COL4 VARCHAR2 .000000016 3 FREQUENCY 2 02-AUG-13

Changes to the Environment

There are many parameters that affect the optimizer’s calculations. Some of the optimizer parameters have values that are calculated automatically based on the values of other parameters or the physical characteristics of the machine on which the database is running, such as the number of CPUs. If any of these environmental values change, the optimizer may come up with a new plan. This is also one of the reasons that it is sometimes difficult to get the plans in development and test environments to match the plans that are generated in production.

The settings in effect when a statement is parsed can be obtained by enabling a 10053 (CBO) trace. Oracle also keeps track of the settings for each of the optimizer-related parameters in an X$ table called X$KQLFSQCE. This is the structure that underlies the V$SQL_OPTIMZER_ENV view, which (much like V$PARAMETER) doesn’t display the hidden parameters (unless they have been altered). The optim_parms.sql script shows all the parameters, including the so-called hidden parameters that start with an underscore (this is the complete list of parameters that affect the optimizer’s calculations and the same ones that are dumped in a 10053 trace file). Listing 16-2 contains the optimizer parameter values for SQL statement 9du01uy8z1k04 in a 12.1.0.1 instance. Note that these are the values that were set when the statement was parsed.

Listing 16-2. Optimizer Parameter Values

SQL> @optim_parms

Enter value for sql_id: 9du01uy8z1k04

Enter value for isdefault:

NAME VALUE DFLT?

---------------------------------------- ------------------- -----

...

cpu_count 24 YES

cursor_sharing exact YES

db_file_multiblock_read_count 128 YES

...

optimizer_adaptive_features true YES

optimizer_adaptive_reporting_only false YES

optimizer_capture_sql_plan_baselines false YES

optimizer_dynamic_sampling 2 YES

optimizer_features_enable 12.1.0.1 YES

optimizer_features_hinted 0.0.0 YES

optimizer_index_caching 0 YES

optimizer_index_cost_adj 100 YES

optimizer_mode all_rows YES

optimizer_mode_hinted false YES

optimizer_secure_view_merging true YES

optimizer_use_invisible_indexes true NO

optimizer_use_pending_statistics false YES

optimizer_use_sql_plan_baselines true YES

parallel_autodop 0 YES

...

parallel_degree_limit 65535 YES

parallel_degree_policy manual YES

parallel_dml_forced_dop 0 YES

...

sqlstat_enabled true NO

star_transformation_enabled false YES

statistics_level all NO

total_cpu_count 24 YES

total_processor_group_count 1 YES

transaction_isolation_level read_commited YES

workarea_size_policy auto YES

...

_always_anti_join choose YES

_always_semi_join choose YES

_always_star_transformation false YES

...

_complex_view_merging true YES

_connect_by_use_union_all true YES

_convert_set_to_join false YES

_cost_equality_semi_join true YES

_cpu_to_io 0 YES

...

_db_file_optimizer_read_count 8 YES

_optim_adjust_for_part_skews true YES

_optim_enhance_nnull_detection true YES

_optimizer_adaptive_cursor_sharing true YES

_optimizer_adaptive_plans true YES

_optimizer_adjust_for_nulls true YES

...

_use_column_stats_for_function true YES

_use_hidden_partitions false YES

_virtual_column_overload_allowed true YES

_with_subquery OPTIMIZER YES

_zonemap_control 0 YES

_zonemap_use_enabled true YES

415 rows selected.

Changes to the SQL

Changes to the SQL may not make much sense at first glance. How can the SQL statement change? When I talk about plan instability, I am talking about the optimizer coming up with different plans for a single statement (in other words, the same SQL text and therefore the same sql_id). However, there are a few reasons that the text of a statement (and its sql_id or hash_value) remains fixed, but the actual SQL statement that the optimizer evaluates may change. These reasons are as follows:

- If a statement references views and then an underlying view changes, the statement has changed.

- If a statement uses bind variables and then the values passed via the variables change, the statement has changed.

- If the optimizer uses statistics feedback (known as cardinality feedback prior to 12c), the statement has changed.

The first situation is easy to understand and is rarely a point of confusion. The third situation is a way the optimizer attempts to adjust the plan automatically to improve it to produce a better performing plan. It is intended to work for good by supplementing missing or stale information with better, updated information. The second situation, though, can be confusing. We have been trained over the years to use variables in our SQL statements so that Oracle can reuse the statements without having to reparse them. So instead of writing a statement like this:

select avg(col1) from skew where col1 > 1;

We typically write it like this:

select avg(col1) from skew where col1 > :X;

In this way, we can pass any value we want to our program via variable X, and Oracle does not have to reparse the statement. This is a very good thing when it comes to scalability, particularly for systems in which many users execute many statements concurrently. However, unless the bind variables always contain the same data, the optimizer is basically evaluating a different SQL statement every time it undergoes a hard parse. This is because Oracle introduced a feature in 9i that allows the optimizer to “peek” at the values of bind variables during the part of the parsing process where the execution plan is determined. This is the infamous bind variable peeking that you’ve probably already heard about, and it is one of the major contributors to plan stability issues.

Bind Variable Peeking

When Oracle introduced histograms in 8i, they provided a mechanism for the optimizer to recognize that the values in a column were not distributed evenly. That is, in a table with 100 rows and ten distinct values, the default assumption the optimizer makes, in the absence of a histogram, is that no matter which value you pick, you always get 100 ÷ 10, or ten rows back. Histograms let the optimizer know whether this isn’t the case. A classic example is 100 records with two distinct values, where value Y occurs 99 times and value N occurs only once. Without a histogram, the optimizer always assumes that, regardless of whether you request records with a Y or an N, you get half the records back (100 ÷ 2 = 50). Therefore, always do a full table scan as opposed to using an index on the column. A histogram, assuming it is accurate (and I come back this topic later), lets the optimizer know the distribution is not normal—in other words, not spread out evenly (also commonly called skewed), and that a Y basically gets the whole table, whereas an N gets only 1 percent. This allows the optimizer to pick an appropriate plan regardless of which value is specified in the Where clause.

So let’s consider the implications of this. Would this improve the response time for the query when the value is Y? The answer is no. In this simple case, the default costing algorithm is close enough and produces the same plan that the histogram produces. The full table scan takes just as long regardless of whether the optimizer thinks it’s getting 50 rows or 99 rows. But, what about the case when you specify the value N? In this case, with a histogram, you pick up the index on that column and presumably get a much better response time than the plan with the full table scan. This is an important point. In general, it is only for the outliers—the exceptional cases, if you will—that the histogram really makes a difference.

So, at first glance, the histogram looks like a pretty good idea; but, there is a fly in the ointment. You have to use literals in your SQL statements for the optimizer to be able use the histograms. So you have to write your statements like this:

SELECT XYZ FROM TABLE1 WHERE COLUMN1 = 'Y';

SELECT XYZ FROM TABLE1 WHERE COLUMN1 = 'N';

This is not a problem in this simple example because there are only two possibilities. But, consider a statement with two or three skewed columns, each with a couple hundred distinct values. The possible combinations could grow quickly into the millions—not a good thing for the shared pool or scalability of your system.

Enter the star: bind variable peeking . This feature was introduced in 9i to allow the optimizer to peek at the value of bind variables and then use a histogram to pick an appropriate plan, just like it does with literals. The problem with this feature is that it only looks at the variables once, when the statement is parsed. So let’s make our simple example a little more realistic by assuming you have a table with 10 million rows in which 99 percent have a value of Y and 1 percent has a value of N. In this example, if the first time the statement is executed it passes a Y, the full table scan plan is locked in and used until the statement has to be reparsed, even if the value N is passed to it in subsequent executions.

Let’s consider the implication of this. When you get the full table scan plan (because you passed a Y the first time), it behaves the same way no matter which value you pass subsequently. Oracle always performs a full table scan, always does the same amount of work, and usually results in the same basic elapsed time. From a user standpoint, this seems reasonable. The performance is consistent. (This is the way it works without a histogram, by the way.) On the other hand, if the index plan gets picked because the first execution that caused the parse occurs with a value of N, the executions where the value is N are almost certainly faster than they were before (and maybe considerably faster), but the execution with a value of Y is incredibly slow. This is because using an index to read virtually every row in a table is incredibly slow, which is not at all what the users expect. They expect the response time to be about the same every time they execute a piece of code. And this is the problem with bind variable peeking. It’s basically just Russian roulette. It depends on which value you happen to pass the statement when it’s parsed (which could be any execution, by the way).

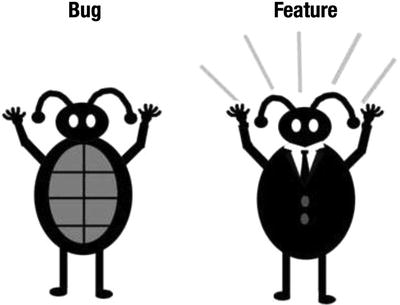

So is bind variable peeking a feature or a bug? Figure 16-1 illustrates how that can sometimes be a tricky question to answer.

Figure 16-1. Feature or bug? (Figure by Noah Osborne)

Well, technically, it’s not a bug because it works the way it’s designed to work. I just happen to believe that it is not a good decision to implement it in this way, but what other choices did the optimizer development group members have?

- They could have evaluated the bind variables and reparsed for every execution of every statement using bind variables. This would eliminate the advantage of having bind variables in the first place and would never work for high-transaction systems. So, this is not an option.

- They could have just said no, and made us use literals to get the benefit of histograms. This is probably not a bad option, in retrospect. The fact that they added _optim_peek_user_binds, which allows us to turn off bind variable peeking altogether, probably means they decided later to give us this option via setting this hidden parameter.

- They could have implemented a system in which they could identify statements that might benefit from different plans based on the values of bind variables, and then peek at those variables for every execution of those “bind-sensitive” statements (Sound familiar? This is what they finally did in 11g with Adaptive Cursor Sharing).

So why is this such a pervasive problem? (And I do believe it is a pervasive problem, with 10g in particular.)

- We’ve been taught always to use bind variables. It’s a “best practice,” which allows SQL statements to be shared, thus eliminating a great deal of work/contention. Using bind variables is an absolute necessity when building scalable high-transaction rate systems. Of course, just because it’s a best practice doesn’t mean you have to follow it blindly. There are situations when literals work better.

- In 10g, the default stats-gathering method was changed to gather histograms automatically. So in a typical 10g database, there are a huge number of histograms, many of them inappropriate (in other words, on columns that don’t have significantly skewed distributions) and many of them are created with very small sample sizes, which causes the histograms to be less than accurate. Note that 11g does a better job on both counts. That is to say, 11g seems to create fewer inappropriate histograms and appears to create many more accurate histograms, even with relatively small sample sizes. And in 12c, we now have two new types of histograms that help the accuracy of the histograms that do get created: top-frequency and hybrid histograms.

- In my humble opinion, bind variable peeking is not that well understood. When I talk to people about it, they usually have heard of it and have a basic idea what the problem is, but their behavior (in terms of the code they write and how they manage their databases) indicates bind variable peeking is something they don’t really have a good handle on.

So, what’s the best way to deal with this issue? Well, recognizing that you have a problem is the first step to recovery. In other words, being able to identify that you have a problem with plan stability is an appropriate first step. Direct queries against the Statspack or AWR tables are probably the best way to identify the issue. You’re looking for statements that flip-flop back and forth between two or more plans. Note that there are other reasons for statements to change plans, but bind variable peeking is high on the list of usual suspects.

Adaptive Cursor Sharing

Adaptive cursor sharing was a new feature added in 11g that aimed to fix performance issues resulting from bind variable peeking. The basic idea is to try to recognize automatically when a statement might benefit from multiple plans. If a statement is found that the optimizer thinks is a candidate, it is marked as bind aware. Subsequent executions peek at the bind variables, and new cursors with new plans may result. The feature does work, although it has a few quirks. The two biggest drawbacks are that it must execute a statement badly before it notices that an additional plan is needed, and that the information regarding bind sensitivity is not persistent (in other words, if the statement gets flushed from the shared pool, all information about bind sensitivity is lost). As a result, bind variable peeking issues continue in 11g.

The example in Listing 16-3 walks through how adaptive cursor sharing works.

Listing 16-3. Adaptive Cursor Sharing

SQL>@desc bigemp

Name Null? Type

--------- -------- -------------

EMPNO NUMBER

ENAME VARCHAR2(20)

PHONE VARCHAR2(20)

DEPTNO NUMBER

SQL>select column_name, histogram from user_tab_cols

2 where table_name = 'BIGEMP';

COLUMN_NAME HISTOGRAM

------------------------------ ---------------

EMPNO NONE

ENAME NONE

PHONE NONE

DEPTNO FREQUENCY

SQL>select deptno, count(*) ct from bigemp

2 group by deptno ;

DEPTNO CT

--------------- ---------------

1 10

6 10

2 10

4 10

5 10

8 10

3 10

7 10

10 99900

9 10

0 10

As you can see, the deptno column has skew such that when the value of deptno is 10, the query returns 99.9999 percent of that table’s rows, and the execution plan choice that is likely the best is a full table scan. However, any other nonpopular value is best served by using an index scan. Listing 16-4 shows the execution plan choice for a query against a nonpopular value.

Listing 16-4. Execution Plan with a Nonpopular Value

SQL>variable v_dept number

SQL>exec :v_dept := 1

SQL>

SQL>select /* acs-ex-01 */ count(*), max(empno)

2 from bigemp

3 where deptno = :v_dept ;

COUNT(*) MAX(EMPNO)

--------------- ---------------

10 91

SQL>@dcplan

Enter value for sql_id: 6xpk12dpa41ga

Enter value for child_no: 0

PLAN_TABLE_OUTPUT

-----------------------------------------------------

SQL_ID 6xpk12dpa41ga, child number 0

-------------------------------------

select /* acs-ex-01 */ count(*), max(empno)

from bigemp where deptno = :v_dept

Plan hash value: 2854685483

--------------------------------------------------

| Id | Operation | Name |

--------------------------------------------------

| 0 | SELECT STATEMENT | |

| 1 | SORT AGGREGATE | |

| 2 | TABLE ACCESS BY INDEX ROWID| BIGEMP |

| 3 | INDEX RANGE SCAN | BIGEMP_I1 |

--------------------------------------------------

As expected, the index scan was chosen. Now take a look at the information associated with this query.

SQL>col is_bind_aware for a10 heading "BIND_AWARE"

SQL>col is_bind_sensitive for a15 heading "BIND_SENSITIVE"

SQL>

SQL>select executions, buffer_gets, is_bind_sensitive, is_bind_aware

2 from v$sql

3 where sql_id = '6xpk12dpa41ga' ;

EXECUTIONS BUFFER_GETS BIND_SENSITIVE BIND_AWARE

--------------- --------------- --------------- ----------

1 53 Y N

Note that the statement is marked as bind sensitive. This is an indication that the optimizer thinks the best plan choice depends on the value of the bind variable, and it is marked this way because the deptno column has a histogram that is used to compute the predicate selectivity. Because a histogram is present, this means that the column contains skewed data and therefore different bind variable values may need to have different plans to execute optimally.

Listing 16-5 shows what happens if we change the bind variable to 10 (our popular value).

Listing 16-5. Execution Plan with a Popular Value

SQL>exec :v_dept := 10

SQL>

SQL>select /* acs-ex-01 */ count(*), max(empno)

2 from bigemp

3 where deptno = :v_dept ;

COUNT(*) MAX(EMPNO)

--------------- ---------------

99900 100000

SQL>@dcplan

Enter value for sql_id: 6xpk12dpa41ga

Enter value for child_no: 0

PLAN_TABLE_OUTPUT

-----------------------------------------------------

SQL_ID 6xpk12dpa41ga, child number 0

-------------------------------------

select /* acs-ex-01 */ count(*), max(empno)

from bigemp where deptno = :v_dept

Plan hash value: 2854685483

--------------------------------------------------

| Id | Operation | Name |

--------------------------------------------------

| 0 | SELECT STATEMENT | |

| 1 | SORT AGGREGATE | |

| 2 | TABLE ACCESS BY INDEX ROWID| BIGEMP |

| 3 | INDEX RANGE SCAN | BIGEMP_I1 |

--------------------------------------------------

SQL>select executions, buffer_gets, is_bind_sensitive, is_bind_aware

2 from v$sql where sql_id = '6xpk12dpa41ga' ;

EXECUTIONS BUFFER_GETS BIND_SENSITIVE BIND_AWARE

--------------- --------------- --------------- ----------

2 957 Y N

The plan still uses an index range scan, but (as you can see by the execution statistics) there is a big jump in the number of buffer gets, which increase from 53 to 957. Also note that the plan is still marked only as bind sensitive. Listing 16-6 shows what happens if we execute the query a third time and keep the bind variable value at 10.

Listing 16-6. Execution Plan for Second Execution with a Popular Value

SQL>select /* acs-ex-01 */ count(*), max(empno)

2 from bigemp

3 where deptno = :v_dept ;

COUNT(*) MAX(EMPNO)

--------------- ---------------

99900 100000

SQL>select child_number, executions, buffer_gets, is_bind_sensitive, is_bind_aware

2 from v$sql where sql_id = '6xpk12dpa41ga' ;

CHILD_NUMBER EXECUTIONS BUFFER_GETS BIND_SENSITIVE BIND_AWARE

--------------- --------------- --------------- --------------- ----------

0 2 957 Y N

1 1 818 Y Y

SQL>@dcplan

Enter value for sql_id: 6xpk12dpa41ga

Enter value for child_no: 1

PLAN_TABLE_OUTPUT

-----------------------------------------------------

SQL_ID 6xpk12dpa41ga, child number 1

-------------------------------------

select /* acs-ex-01 */ count(*), max(empno)

from bigemp where deptno = :v_dept

Plan hash value: 870989070

---------------------------------------------

| Id | Operation | Name |

---------------------------------------------

| 0 | SELECT STATEMENT | |

| 1 | SORT AGGREGATE | |

| 2 | TABLE ACCESS STORAGE FULL| BIGEMP |

---------------------------------------------

Finally, we have the proper plan—a full table scan—and a new child cursor has been created. So, we have two plans now: one for the nonpopular bind variable values and one for the popular values. Notice that our new cursor is marked as both bind sensitive and bind aware. From now on, the proper plan should be chosen based on the bind variable value. But, why doesn’t the first cursor show that it is bind aware? Let’s take a look at Listing 16-7.

Listing 16-7. Bind Aware Cursor Identification

SQL>exec :v_dept := 3

SQL>

SQL>select /* acs-ex-01 */ count(*), max(empno)

2 from bigemp

3 where deptno = :v_dept ;

COUNT(*) MAX(EMPNO)

--------------- ---------------

10 93

SQL>col is_shareable for a9 heading "SHAREABLE"

SQL>select child_number, executions, buffer_gets,

2 is_bind_sensitive, is_bind_aware, is_shareable

3 from v$sql where sql_id = '6xpk12dpa41ga' ;

CHILD_NUMBER EXECUTIONS BUFFER_GETS BIND_SENSITIVE BIND_AWARE SHAREABLE

------------ ----------- ------------ --------------- ---------- ---------

0 2 957 Y N N

1 1 818 Y Y Y

2 1 3 Y Y Y

SQL>@dcplan

Enter value for sql_id: 6xpk12dpa41ga

Enter value for child_no: 2

PLAN_TABLE_OUTPUT

-----------------------------------------------------

SQL_ID 6xpk12dpa41ga, child number 2

-------------------------------------

select /* acs-ex-01 */ count(*), max(empno)

from bigemp where deptno = :v_dept

Plan hash value: 2854685483

--------------------------------------------------

| Id | Operation | Name |

--------------------------------------------------

| 0 | SELECT STATEMENT | |

| 1 | SORT AGGREGATE | |

| 2 | TABLE ACCESS BY INDEX ROWID| BIGEMP |

| 3 | INDEX RANGE SCAN | BIGEMP_I1 |

--------------------------------------------------

Ah! Did you see what happened? A new child cursor was created (child_number 2). Note the additional column called is_shareable included in the query against v$sql. The first cursor (child_number 0), in which a nonpopular bind value is used, is marked as not shareable, but the new child cursor is shareable. What happened was that the original cursor was discarded when the cursor switched to bind aware. The original cursor is now basically marked to be aged out of the cursor cache because it is no longer shareable, and the two remaining cursors (1 and 2), which are shareable and bind aware, can be used for future executions of the statement.

Note that it is possible for additional cursors to get created. If the bind variable value’s selectivity matches one of the aware and shareable plans, it is used. But, if it doesn’t, then a new plan is created (just like we saw in this example) and the process repeats.

Statistics feedback, known as cardinality feedback prior to Oracle 12c, is a mechanism used by the optimizer to improve automatically plans’ repeated query executions that have cardinality misestimates. The first time a SQL statement executes, the optimizer determines the execution plan and marks the plan to enable statistics feedback monitoring if.

- Any of the tables in the statement have missing statistics

- There are ANDed or ORed filter predicates on a table

- Any predicates contain complex operators for which the optimizer cannot compute cardinality estimates accurately

After a completed statement execution, the optimizer then compares the original cardinality estimates with the actual cardinalities. If there are any significant differences, it stores the correct estimates to use during the next execution of that statement to reoptimize (reparse) it. Prior to Oracle 12c, this is as far as things went. But, in 12c, the optimizer also stores a SQL plan directive. A SQL plan directive contains additional information and instructions the optimizer can use to generate a better plan the next time the statement is executed; but, it isn’t pertinent just to a single cursor. It is pertinent to any cursor that uses similar expressions.

About SQL Plan Directives

SQL plan directives are not tied to a specific SQL statement, but instead are defined on query expressions. By defining them on query expressions, the optimizer is able to use them on numerous SQL statements that use similar patterns. These directives are stored in the shared pool and are written periodically to the SYSAUX table space every 15 minutes. This is what makes the Oracle 12c statistics feedback different (and better) than its predecessor, cardinality feedback.

The presence of SQL plan directives alerts the optimizer that there may be missing or inadequate statistics such as histograms or extended statistics. The is_reoptimizable column in v$sql is marked with a Y to indicate that a hard parse should be performed on the next execution. Because these bits of information are recorded, not only can the optimizer use them to reparse statements to achieve better plans, but also even subsequent statistics collections using DBMS_STATS are alerted to create the proper statistics as well. Listing 16-8 shows an example of how SQL plan directives are used.

Listing 16-8. SQL Plan Directives

SQL>select /* sql-plan-dir */ * from sh.customers

2 where cust_state_province = 'FL' and country_id = 52790 ;

2438 rows selected.

SQL>@dcplan

Enter value for sql_id: 94ykht2aac5xg

Enter value for child_no: 0

Enter value for format: ALLSTATS LAST

PLAN_TABLE_OUTPUT

----------------------------------------------------------------------------

SQL_ID 94ykht2aac5xg, child number 0

-------------------------------------

select /* sql-plan-dir */ * from sh.customers where cust_state_province

= 'FL' and country_id = 52790

Plan hash value: 2008213504

----------------------------------------------------------------------------

| Id | Operation | Name | Starts | E-Rows | A-Rows | Buffers |

----------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | | 2438 | 1677 |

|* 1 | TABLE ACCESS FULL| CUSTOMERS | 1 | 128| 2438| 1677 |

----------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter(("CUST_STATE_PROVINCE"='FL' AND "COUNTRY_ID"=52790))

SQL>select is_reoptimizable

2 from v$sql

3 where sql_id = '94ykht2aac5xg' ;

IS_REOPTIMIZABLE

----------------

Y

The execution of the query reveals what appears to be a problem with the statistics. Note how the estimated rows estimate (E-Rows) of 128 is several orders of magnitude underestimated compared with the actual cardinality (A-Rows). The optimizer recognizes the issue and marks the cursor for reoptimization on the next execution. To see the SQL plan directives that are created, we can query the DBA_SQL_PLAN_DIRECTIVES view as shown in Listing 16-9.

Listing 16-9. Using the DBA_SQL_PLAN_DIRECTIVES View

SQL>exec dbms_spd.flush_sql_plan_directive;

SQL>select o.subobject_name col_name,

2 o.object_type, d.type, d.state, d.reason

3 from dba_sql_plan_directives d, dba_sql_plan_dir_objects o

4 where d.directive_id=o.directive_id

5 and o.owner = 'SH'

6 and o.object_name = 'CUSTOMERS'

7 order by 1,2,3,4,5;

COL_NAME TYPE DIRECTIVE TYPE STATE REASON

---------- ------- ---------------- ------ ------------------------

COUNTRY_ID COLUMN DYNAMIC_SAMPLING NEW SINGLE TABLE CARDINALITY

MISESTIMATE

CUST_STATE COLUMN DYNAMIC_SAMPLING NEW SINGLE TABLE CARDINALITY

MISESTIMATE

PROVINCE TABLE DYNAMIC_SAMPLING NEW SINGLE TABLE CARDINALITY

MISESTIMATE

Note there are three directives stored. The columns referenced are the two columns used in our example query predicate. The underestimation is noted and directives are now in place to make adjustments during the next execution of the query. As shown in Listing 16-10, we run the query again, check the plan, and see that it has changed (note that new child cursor number). Also note statistics feedback used for this statement, and the fact that the new child cursor is now marked as not reoptimizable, which indicates it will be used for all future executions of this cursor.

Listing 16-10. Statistics Feedback in Use

SQL>@dcplan

Enter value for sql_id: 94ykht2aac5xg

Enter value for child_no: 1

Enter value for format: ALLSTATS LAST

PLAN_TABLE_OUTPUT

----------------------------------------------------------------------------

SQL_ID 94ykht2aac5xg, child number 1

-------------------------------------

select /* sql-plan-dir */ * from sh.customers where cust_state_province

= 'FL' and country_id = 52790

Plan hash value: 2008213504

----------------------------------------------------------------------------

| Id | Operation | Name | Starts | E-Rows | A-Rows | Buffers |

----------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | | 2438 | 1677 |

|* 1 | TABLE ACCESS FULL| CUSTOMERS | 1 | 2438 | 2438 | 1677 |

----------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

1 - filter(("CUST_STATE_PROVINCE"='FL' AND "COUNTRY_ID"=52790))

Note

-----

- statistics feedback used for this statement

SQL>select child_number, is_reoptimizable

2 from v$sql

3 where sql_id = '94ykht2aac5xg' ;

CHILD_NUMBER IS_REOPTIMIZABLE

------------ ----------------

0 Y

1 N

We can now collect statistics to determine whether the SQL plan directives will be used to update the statistics to avoid this problem in the future as shown in Listing 16-11.

Listing 16-11. SQL Plan Directives Utilized During Statistics Collection

SQL>exec dbms_stats.gather_table_stats('SH','CUSTOMERS')

SQL>select table_name, extension_name, extension

2 from dba_stat_extensions

3 where owner='SH'

4 and table_name='CUSTOMERS';

TABLE_NM EXTENSION_NAME EXTENSION

--------- ------------------------------ -----------------------------------

CUSTOMERS SYS_STS#S#WF25Z#QAHIHE#MOFFMM_ ("CUST_STATE_PROVINCE","COUNTRY_ID")

Yes! They were! We now have an extended statistic for the column group of cust_state_province and country_id. This means that the optimizer now has the correct information to produce the proper cardinality estimates for any query using this column group in the future. Let’s verify as shown in Listing 16-12.

Listing 16-12. Verifying Cardinality Estimates after Creation of Extended Statistics

SQL>select /* sql-plan-dirs */ distinct cust_postal_code from sh.customers

2 where cust_state_province = 'LA' and country_id = 52790 ;

SQL>@dcplan

Enter value for sql_id: 789cx77mh8ftm

Enter value for child_no: 0

Enter value for format: ALLSTATS LAST

PLAN_TABLE_OUTPUT

-----------------------------------------------------------------------------

SQL_ID 789cx77mh8ftm, child number 0

-------------------------------------

select /* sql-plan-dirs */ distinct cust_postal_code from sh.customers

where cust_state_province = 'LA' and country_id = 52790

Plan hash value: 2048334152

-----------------------------------------------------------------------------

| Id | Operation | Name | Starts | E-Rows | A-Rows | Buffers |

-----------------------------------------------------------------------------

| 0 | SELECT STATEMENT | | 1 | | 2 | 1522 |

| 1 | HASH UNIQUE | | 1 | 52 | 2 | 1522 |

|* 2 | TABLE ACCESS FULL| CUSTOMERS | 1 | 54 | 54 | 1522 |

-----------------------------------------------------------------------------

Predicate Information (identified by operation id):

---------------------------------------------------

2 - filter(("CUST_STATE_PROVINCE"='LA' AND "COUNTRY_ID"=52790))

As you can see, statistics feedback and SQL plan directives can accomplish some pretty great things. So, even though they may cause plans to change, it’s very likely they’ll change for the better. But, like any “automatic” feature, always be aware of what it might and might not do. Don’t get lazy and fail to identify issues and fix them on your own when possible.

Identifying Plan Instability

Sometimes it’s painfully obvious when a plan has changed for the worse. A quick look at Enterprise Manager or a query against gv$session can show dozens of sessions executing the same statement. Other times, the problem is not as obvious. One of the best ways to identify the problem is to look for statements with multiple plans that have very different performance characteristics depending on which plan they use. AWR is extremely handy for this because it keeps copies of execution statistics as well as plans used for the most “important” statements. Note that not all statements are captured by AWR. Those that rank high in number of executions, logical IO, physical IO, elapsed time, CPU time, or parses are there, but if you have a statement that is very efficient that later becomes very inefficient, the very efficient version may not be captured by AWR. Regardless of the fact that AWR does not represent a complete record of every statement executed, it does provide data on most statements that are of interest.

Capturing Data on Currently Running Queries

One of my most used diagnostic scripts queries ¥ for all sessions that have a status of Active. This can be used to see what is actually running at any given point in time. It is not perfect, because really fast statements may not show up very often, even if they are dominating the workload. So, I often use Tanel Poder’s snapper script for this same purpose. His script has many advantages, but one of the most useful is that it runs many times in a tight loop and aggregates the data so that very fast statements still show up in the output. Listing 16-13 shows both scripts in action.

Listing 16-13. Two Diagnostic Scripts: as.sql and Session Snapper

SQL> @as

SID PROG SQL_ID CHILD PLAN_HASH_VALUE EXECS AVG_ETIME

---- --------- ------------- ----- --------------- ----- ---------

24 sqlplus@h gf5nnx0pyfqq2 0 4072605661 55 86.18

42 sqlplus@h gf5nnx0pyfqq2 0 4072605661 55 86.18

100 sqlplus@h gf5nnx0pyfqq2 0 4072605661 55 86.18

83 sqlplus@h gf5nnx0pyfqq2 0 4072605661 55 86.18

61 sqlplus@h gf5nnx0pyfqq2 0 4072605661 55 86.18

SQL> @snapper ash=sid+event+wait_class,ash1=sql_id 5 1 all

Sampling with interval 5 seconds, 1 times...

-- Session Snapper v3.11 by Tanel Poder @ E2SN (http://tech.e2sn.com)

--------------------------------------------------------------

Active% | SID | EVENT | WAIT_CLASS

--------------------------------------------------------------

100% | 83 | ON CPU | ON CPU

98% | 42 | ON CPU | ON CPU

98% | 61 | ON CPU | ON CPU

93% | 24 | ON CPU | ON CPU

88% | 100 | ON CPU | ON CPU

12% | 100 | direct path read temp | User I/O

7% | 24 | direct path read temp | User I/O

5% | 248 | control file parallel wri | System I/O

2% | 42 | direct path read temp | User I/O

2% | 61 | direct path read temp | User I/O

-------------------------

Active% | SQL_ID

-------------------------

500% | gf5nnx0pyfqq2

5% |

-- End of ASH snap 1, end=2013-08-12 22:23:17, seconds=5, samples_taken=42

The scripts have very different output formats. My as.sql script has one line per session and shows the sql_id being executed by the session, along with the average elapsed time for that statement. Keep in mind that this represents a single instant in time. So, you should run it several times in succession to get a feel for what is actually happening. Tanel’s snapper, on the other hand, doesn’t require repeated running. Just give it a length of time to run and it automatically samples repeatedly for that length of time. It is also considerably more flexible than my simple script. The format is quite different, too. The top section shows the activity percentage by session ID, or SID, and Event. Notice that the same SID may have multiple entries if it spends significant time on more than one thing during the sample period. The second section shows a breakdown of the work by SQL statement.

In the case shown, both snapper and my as.sql scripts show different views of the same situation. There are five sessions all running the same statement. Any time you are asked to look at a system and you see that many sessions are all running the same long-running SQL statement, you have a pretty good idea where to start your investigation into the plan stability problem.

Reviewing the History of a Statement’s Performance

When a problem is obvious (as in the previous example, in which several sessions were all running the same long-running query), it is often instructive to view the performance of the statement of interest over time. This can be done easily by querying the AWR or Statspack tables directly. Listing 16-14 shows an example.

Listing 16-14. The awr_plan_change.sql Script

SQL> @awr_plan_change

Enter value for sql_id: 3dhwvfmkjzwtv

SNAP_ID NODE BEGIN_INTERVAL_TIME PHV EXECS AVG_ETIME AVG_LIO

------- ---- ------------------- ---------- ----- --------- ------------

1785 3 24-APR-13 05.00 PM 1093407144 6 1.102 2,872.7

1786 2 24-APR-13 06.00 PM 158 0.024 2,873.0

1786 3 24-APR-13 06.00 PM 223 0.023 2,873.0

1787 2 24-APR-13 07.00 PM 749 0.020 2,873.0

1787 3 24-APR-13 07.00 PM 873 0.019 2,873.0

1788 2 24-APR-13 08.00 PM 726 0.020 2,873.9

1788 3 24-APR-13 08.00 PM 871 0.020 2,873.9

1789 2 24-APR-13 09.00 PM 373 0.016 2,874.0

1789 3 24-APR-13 09.00 PM 566 0.016 2,874.0

1892 2 29-APR-13 04.00 AM 1 2.613 3,811.0

1897 2 29-APR-13 09.00 AM 2 8.179 8,529.0

1918 3 30-APR-13 06.00 AM 2 0.421 485.5

1919 2 30-APR-13 07.00 AM 1 1.152 1,242.0

1920 2 30-APR-13 08.00 AM 4 3.273 3,200.3

1920 3 30-APR-13 08.00 AM 12 2.491 3,314.2

1921 2 30-APR-13 09.00 AM 5 3.947 3,333.4

1921 3 30-APR-13 09.00 AM 2 2.416 1,769.5

1922 3 30-APR-13 10.00 AM 4076066623 2 54.237 2,291,432.5

1923 2 30-APR-13 11.00 AM 1093407144 2 0.812 975.0

1923 3 30-APR-13 11.00 AM 4076066623 3 134.031 933,124.3

1924 3 30-APR-13 12.00 PM 3 227.009 6,987,169.3

1926 2 30-APR-13 02.00 PM 1093407144 8 0.818 1,574.5

1926 3 30-APR-13 02.00 PM 4076066623 2 175.709 8,963,417.0

1927 2 30-APR-13 03.00 PM 1093407144 4 1.344 1,068.8

1927 3 30-APR-13 03.00 PM 4076066623 5 156.378 10,059,992.0

1928 2 30-APR-13 04.00 PM 1093407144 6 0.923 1,225.8

1928 3 30-APR-13 04.00 PM 4076066623 1 180.488 2,150,190.0

1930 3 30-APR-13 06.00 PM 2 180.371 8,255,881.5

1934 3 30-APR-13 10.00 PM 1 180.491 3,002,577.0

1939 2 01-MAY-13 03.00 AM 1093407144 21 0.825 1,041.8

1939 3 01-MAY-13 03.00 AM 4 0.575 1,211.8

1944 3 01-MAY-13 08.00 AM 6 1.328 1,788.3

1946 2 01-MAY-13 10.00 AM 1 1.170 2,411.0

1946 3 01-MAY-13 10.00 AM 4 2.041 2,414.3

1947 3 01-MAY-13 11.00 AM 10 1.725 2,937.1

1948 3 01-MAY-13 12.00 PM 3 2.232 3,415.7

1987 2 03-MAY-13 03.00 AM 7 1.029 901.0

1990 3 03-MAY-13 06.00 AM 3 1.225 1,465.7

1991 3 03-MAY-13 07.00 AM 26 0.370 710.5

1992 2 03-MAY-13 08.00 AM 6 0.213 685.7

1992 3 03-MAY-13 08.00 AM 3 0.658 883.0

1993 2 03-MAY-13 09.00 AM 8 0.769 950.9

1996 2 03-MAY-13 12.00 PM 2 0.101 861.5

2015 3 04-MAY-13 07.00 AM 4 0.376 854.5

2016 3 04-MAY-13 08.00 AM 6 0.143 571.0

2019 2 04-MAY-13 11.00 AM 12 0.937 1,352.1

2019 3 04-MAY-13 11.00 AM 10 1.612 1,341.9

2019 3 04-MAY-13 11.00 AM 4076066623 1 41.592 3,942,672.0

2020 2 04-MAY-13 12.00 PM 1093407144 15 1.037 1,734.6

2020 3 04-MAY-13 12.00 PM 4076066623 1 181.044 1,764,007.0

2022 2 04-MAY-13 02.00 PM 1093407144 2 2.214 2,780.5

The awr_plan_change.sql script simply queries DBA_HIST_SQLSTAT for a list of snapshots that contains information about the statement in question (based on its sql_id), and then prints out the relevant statistical information. In this output, I show the plan_hash_value, the average logical IO, and the average elapsed time. (This sort of a report can also be generated from data collected by Statspack, by the way.) One of the most interesting features of this kind of a historical view of a statement is the history of the plan or plans being used. The output in the example shows a classic case of plan instability. As you can see, the plan changes fairly often (note that the script uses the SQL*Plus break feature on the plan_hash_value column, so if the value does not change from row to row, the value is not printed). This is not a situation in which something changed in the environment that caused a plan to change; rather, the plans are in a constant state of flux. This is classic plan instability. If you note a single plan being used for many days and then an abrupt change to another plan, you should look for a change to statistics or some other environmental change, such as an optimizer setting.

You can also see clearly that the performance characteristics are wildly different between the two plans. In the sample output, you can see that plan 1093407144 does only a couple of thousand logical IOs, whereas plan 4076066623 does a few million. Consequently, the average elapsed time is several minutes for the “bad” plan and a couple of seconds for the “good” plan. This is another characteristic of classic plan instability. There is often a single plan that, although not the absolute best performance you can get for any combination of bind variables, is good enough to be acceptable and provides the desired stability.

Aggregating Statistics by Plan

In general, we don’t care much about the optimizer changing its mind and picking a different plan unless the execution times vary widely from one plan to the other. When there are a lot of snapshots or a lot of plans, it’s often helpful to aggregate the statistics by plan. The awr_plan_stats.sql script shown in Listing 16-15 does just that (note that I’ve cut some of the rows from the previous output so the averages don’t match exactly, but they are close enough to get the point across):

Listing 16-15. The awr_plan_stats.sql Script

SQL> @awr_plan_stats

Enter value for sql_id: 3dhwvfmkjzwtv

SQL_ID PHV EXECS ETIME AVG_ETIME AVG_LIO

------------- ---------- ----- ------- --------- -----------

3dhwvfmkjzwtv 1093407144 207 100.0 .935 2,512.5

3dhwvfmkjzwtv 4076066623 22 1,236.5 154.559 4,072,416.3

The output from the awr_plan_stats.sql script clearly shows that, in this example, there are two plans with very different performance characteristics. This is a fairly normal situation (although there are often more than two plans). However, it is, nevertheless, a common occurrence that one plan is often consistent with a reasonable amount of work and a reasonable average elapsed time, but be aware that averages can hide a lot of important details (such as a few very fast executions with the plan that has the horrible averages). But again, the goal is to get stability at a reasonable performance level. So, finding a plan that you can stick with is often all you’re after. (I talk about how you get the optimizer to stick with a single plan in a bit.)

![]() Note The default retention period for both AWR and Statspack is woefully inadequate for this type of diagnosis. The default is seven days for AWR. I’ve been involved in many cases when the necessary data have scrolled out of the retention window and been purged before a proper diagnosis was done. AWR (and Statspack) data do not take up that much space, so I routinely set the retention to several months (and I know of sites that retain multiple years of AWR data). There is even a supplied script that allows you to estimate the storage requirements for AWR based on the workload in your system: $ORACLE_HOME/rdbms/admin/utlsyxsz.sql.

Note The default retention period for both AWR and Statspack is woefully inadequate for this type of diagnosis. The default is seven days for AWR. I’ve been involved in many cases when the necessary data have scrolled out of the retention window and been purged before a proper diagnosis was done. AWR (and Statspack) data do not take up that much space, so I routinely set the retention to several months (and I know of sites that retain multiple years of AWR data). There is even a supplied script that allows you to estimate the storage requirements for AWR based on the workload in your system: $ORACLE_HOME/rdbms/admin/utlsyxsz.sql.

Looking for Statistical Variance by Plan

When a problem is not obvious, but you suspect plan instability is an issue, it’s often helpful to look for statements that have run with more than one plan that have a large statistical variance in their execution times or the amount of work they do (logical IOs, for example). Listing 16-16 shows the execution of a script (unstable_plans.sql) that can help identify statements that are suffering from plan instability.

Listing 16-16. The unstable_plans.sql Script

SQL> @unstable_plans

Enter value for min_stddev:

Enter value for min_etime:

SQL_ID SUM(EXECS) MIN_ETIME MAX_ETIME NORM_STDDEV

------------- ---------- ----------- ----------- -------------

4fc7tprp1x3uj 43212 .18 .84 2.0222

47s01ypztkmn6 6 54.46 210.28 2.0230

3rx5cnvua3myj 8126 .03 .12 2.0728

80tb4vmrsag5j 29544 .78 3.16 2.1433

cahnk07yj55st 17 26.35 113.09 2.3272

2azfw6wnqn01s 388 1.39 6.20 2.4522

a31u2rn7zup46 4 30.38 183.82 2.5271

607twnwf0ym10 30 146.50 728.15 2.8075

7y3w2mnqnp8jn 65 .56 3.05 3.1227

82rq0xvp6u1t2 34 12.34 119.20 3.4625

9cp3tujh0z4mt 42455 .02 .15 3.5998

6ykn5wq4jmu91 58584 .01 .21 3.7001

cvfj7g4fub5kn 116 .43 3.76 5.4863

26nrsfgurpgpm 427450 .07 1.08 5.5286

brntkgfqa9u1u 2 261.26 2,376.86 5.7258

d9ddsn04krw9d 99 .43 5.66 5.9018

fnwxd5kmnp6x9 2227 .47 4.46 6.0031

96567x1dqvzw1 23 27.02 311.04 7.4330

5wwnfumndntbq 10 98.58 1,481.40 7.7765

dm4hyyyxyay5t 1368 .03 .36 7.8945

5ub7xd1pn57by 1118281 .04 1.23 10.8031

870uasttnradg 441864 .12 2.07 11.3099

2p86vc476bvht 34 14.66 297.76 13.6548

2gz0c5c3vw59b 30 53.45 1,197.24 15.1320

4g22whjx1ycnu 818 .55 22.02 15.3194

48k8mu4mt68pw 1578 13.58 2,002.27 81.6759

1ct9u20mx6nnf 25782 .00 .93 287.5165

27 rows selected.

The output in Listing 16-16 shows there are several SQL statements in this system that are most likely suffering from plan instability issues. The script pulls the information from the AWR tables and displays the total executions, the average elapsed time for the fastest plan, the average elapsed time for the slowest plan, and a calculated normalized standard deviation. As you can see, statement 1ct9u20mx6nnf is the worst offender. However, it may not be all that noticeable to the users because the delta between the slowest plan and the fastest plan is still less than a second. If it is executed many times in succession, the users almost certainly suffer; otherwise, they may not notice. On the other hand, sql_id 48k8mu4mt68pw varies from 14 seconds to more than 30 minutes. Anyone that runs this statement will certainly notice the difference. And seeing that the statement is executed more than 1500 times makes this one appear to be a significant contributor to perceived inconsistency.

Last, after identifying the suspects, use awr_plan_stats.sql and awr_plan_change.sql to get a better idea of what’s going with specific statements.

Checking for Variations around a Point in Time

The other thing I do occasionally to check for plan instability is to look for variations around a point in time. The whats_changed.sql script computes a normalized variance for elapsed time around a specific point in time. In fact, it computes the average elapsed time before a reference time and the average elapsed time after the reference time for every statement in the AWR tables. It then displays all statements with average elapsed times that are significantly different (two times, by default). It’s similar to the unstable_plans.sql script, but it looks for variance around a point in time as opposed to variance among plans. It is most useful when new code is rolled out or a new index is created or, basically, whenever you want to see which statements have been affected either for better or for worse. Listing 16-17 shows an example of its use.

Listing 16-17. The whats_changed.sql Script

SQL> @whats_changed

Enter Days ago: 30

Enter value for min_stddev:

Enter value for min_etime:

Enter value for faster_slower:

SQL_ID EXECS AVG_ETIME_BEFORE AVG_ETIME_AFTER NORM_STDDEV RESULT

------------- --------- ---------------- --------------- ----------- ------

5ub7xd1pn57by 1,118,281 0.18 0.05 2.0827 Faster

03rhvyrhjxgg9 3,838 0.10 0.38 2.0925 Slower

cahnk07yj55st 17 113.09 26.35 2.3272 Faster

4bf2kzg2h1sd0 148 0.60 0.13 2.6403 Faster

9cp3tujh0z4mt 42,455 0.12 0.02 2.7272 Faster

fnwxd5kmnp6x9 2,227 0.92 4.47 2.7283 Slower

607twnwf0ym10 30 146.50 728.15 2.8075 Slower

akm80a52q4qs9 649 6.16 1.21 2.9014 Faster

4g22whjx1ycnu 818 0.48 2.44 2.9272 Slower

14mxyzzjzjvpq 1,537 33.08 191.20 3.3800 Slower

6zncujjc43gsm 1,554 22.53 168.79 4.5894 Slower

6zt6cu6upnm8y 3,340 0.62 0.08 4.8153 Faster

870uasttnradg 441,864 0.98 0.12 4.9936 Faster

d9ddsn04krw9d 99 5.66 0.68 5.1708 Faster

cvfj7g4fub5kn 116 3.76 0.43 5.4863 Faster

2p86vc476bvht 34 14.66 297.76 13.6548 Slower

2gz0c5c3vw59b 30 53.45 1,197.24 15.1320 Slower

17 rows selected.

Summary

There are several things that contribute to plan instability. If you get one thing out of this chapter, I hope it is that you realize that plans do not change without a reason. Plans remain static unless something else changes in the system. Bind variable peeking and changes in statistics are the most likely causes of plan instability. Oddly enough, failure of statistics to keep up with changing data is another common cause of instability. Of these three issues, though, bind variable peeking is probably the most prevalent and the most frustrating to deal with. Although most shops are understandably reluctant to turn off the bind variable peeking “feature,” turning it off altogether is a viable option. There are many production systems that have taken this approach. Part of the standard configuration of SAP, for example, is to set the _optim_peek_user_binds parameter to FALSE. This can prevent the optimizer from choosing the absolute best plan available for a certain set of queries, but the tradeoff is a more consistent environment. Short of turning off bind variable peeking altogether, using literals appropriately with columns that need histograms to deal with skewed data distributions is really the only effective way to deal with the issue while still providing the optimizer the ability to choose the absolute best execution plans. However, if circumstances prevent this approach, there are other techniques that can be applied. In the next chapter, we look at how to improve the situation.