If we assume equilibrium, we place a very strong filter on what we can see in the economy. Under equilibrium by definition there is no scope for improvement or further adjustment, no scope for exploration, no scope for creation, no scope for transitory phenomena, so anything in the economy that takes adjustment - adaptation, innovation, structural change, history itself - must be bypassed or dropped from theory. The result is a beautiful structure, but it is one that lacks authenticity, aliveness and creation. -W.B. Arthur, ‘Complexity and the Economy’, (2014).

Towards the end of 2008, Queen Elizabeth paid a visit to the Economics Department of the London School of Economics. During her visit, she asked the economists present a few simple and straightforward questions: “Why did no one see it [the crisis] coming?”; “If these things were so large how come everyone missed it?” (Pierce, 2008). While the responses varied in depth and breadth, two facts were clear. Firstly, an unflinching and misplaced faith in the doctrine that markets are the best handlers of the financial system had led to increased financialization and an unsupportable build-up of debt. Secondly, this belief in the ability of markets was based on the intransigent orthodoxies of academic economics and policy making. Not only had these theories failed to see the coming crisis, they had also weakened the financial system to the point that governments were forced to bail out the banks that were the cause of the problem in the first place. Sadly, although some steps are being taken to address these flaws, the underlying theories being taught and practised at institutions have yet to be thoroughly questioned, let alone completely modified.

Emulating the directness of the Queen’s questions, the previous three chapters reveal three straightforward facts. Firstly, the increasing debt and financialisation of economies cause systemic risk and are currently among the biggest challenges that national economies face. Secondly, financial markets are constantly in a state of flux and the accelerating profusion of new technologies is poised to increase the turbulence of this changing state. Thirdly, the current framework that governs markets, regulations and policies is ill-equipped to identify the symptoms of bubbles and crashes. In essence, the dominant strains in economic thinking, namely the Efficient Markets Hypothesis (EMH) and the Rational Expectations Theory (RET), are becoming increasingly ill-suited to gauging and understanding financial and macroeconomic stability.

As technology continues to make the financial system more complex, these problems are poised to become more frequent. Hence, rather than focus on ways to maintain the status quo, what is required is a fundamental rethinking of how we study economics and how we understand and measure capitalism. Without adopting a new mindset, we will be forced to acknowledge the threat of a never-ending series of booms, busts and crises. Simply put, we cannot expect to solve the problems of today, let alone tomorrow, with an intransigent belief in the tools and theories of yesteryear.

Ironically, it is by studying the history of technology and its sequential metamorphic impact on capitalism that solutions can be found, as every time we are faced with new technological trends, we come face to face with a reoccurring ironic dilemma. On one hand technology makes industries and services more efficient and more capable of addressing our needs. But on the other, it forces us to reframe the kind of jobs and tasks that need to be performed and how the education and skillset of the workforce needs to be adapted to function in the new, updated economy. It would seem that the task of rethinking economics is analogous to a macabre quandary - not only does technology force us to change our capitalistic mindsets, but we are also required to be very expedient in our efforts if we are to keep up with the speed of technological change. Thus, to begin the final chapter on the redefinition of capitalism, we need to understand the galloping tendencies of technological changes in the context of static economic theories.

Technology and Invention: A Combinatorial Process

Ray Kurzweil, the noted inventor and futurist, once said that the exponential growth curves exhibited by current technological trends are based on the tendency of technology to ‘feed off’ technology. In light of the pace of change that we currently witness, this can be accepted as a fair statement if we accept that every technology that has been invented, is being invented and will be invented follows the very same formula: each is a combination of technologies that already existed and does not come out of sheer inspiration alone.

This might seem like a common-sense statement to some and in the past, philosophers, historians, sociologists and economists, such as Lewis Mumford, George Basalla, Joel Mokyr and Paul Romer, have come up with anecdotal theories that state this as a concept. But if we are to respect the Popperian scientific method to making a claim, then we need to consult the research that pertains to this subject to prove our hypothesis. More importantly, if we are to state that technology is created by combining bits and pieces of previous technological inventions, then we also need to extend this hypothesis to the process of invention as it is invention that generates new technologies.

Studies done by researchers from the Santa Fe Institute and the Oxford Martin School have found that invention, and thus almost any technology by proxy, exhibits this combinatory phenomenon. In a 2015 paper published by the Royal Society,1 titled ‘Invention as a combinatorial process: evidence from US patents’, the researchers studied US patent records from 1790 to 2010 in an attempt to conceptualize invention, which is how we get new technologies. Patents were considered as the ‘carriers’ of technology or as the ‘footprints’ of invention, as they leave behind a documentary trail of the way technologies evolve. Through this study, the researchers were able to show that invention was the manner in which technologies (either very new or previously in use) were brought together and combined in ways not previously seen (Youn et al., 2015). As per this study, and others2 that have begun investigating the concept of the combinatorial evolution3 of technology, invention can be conceptualised as combinatorial possibilities. In other words, invention is simply the novel combination of existing technological capabilities and is evolutionary4 in nature.

This tendency of technology to build itself on previous or existing technologies is very similar to biological evolution. Kevin Kelly makes the analogy between biological evolution and technological evolution in a more succinct manner. As per his research, the long-term co-evolutionary trends seen in natural and technological paradigms share five common salient features: Specialisation, Diversity, Ubiquity, Socialization and Complexity. These five features are exhibited by any technology. As FinTech is one of the protagonists in this book and in modern capitalism, let’s analyse the evolution of this technology:

Financial technology finds its roots in the history of computing (refer Notes: A brief history of computing). Initially computers were made for very specific or specialised operations. For example, early computers such as the Differential Analyser, invented by Vannevar Bush in the mid-1930s, were analog computation machines5 that were created to solve ordinary differential equations to help calculate the trajectories of shells. As World War Two broke out, these advances in computing were adopted and developed by various militaries to communicate sensitive information by integrating the techniques of cryptography - a kind of natural selection. To combat this, pioneers such as Alan Turing and his mentor Max Newman set about designing and building automated machines (Turing Machines) that could decrypt these camouflaged communiqués. This effectively changed the use of the computer and increased the diversity of the kinds of computers.

After the war, advances by notable inventors such as John Mauchly, Presper Eckert and John von Neumann (a veritable polymath) led to the creation of the EDVAC (Electronic Discrete Variable Automatic Computer), the first binary computer. With binary computers coming of age, there was an increasing need to develop software to give instructions to computers. Punch cards were soon replaced by logic gates (from Boolean algebra), and languages such as COBOL and FORTRAN (FORmula TRANslation) helped in the creation of early operating systems. As software design began to evolve so did the functionality of computers. Programming languages and systems such as BASIC, LISP, SIMULA, C, C++, UML, Unix, Linux, etc., helped in the construction of distributed communication networks, the internet and ultimately the World Wide Web. As the cost of transistors began to drop (Moore’s Law), more tasks got computerized, leading to the ubiquity of computers in almost all functions of business and life.

This ubiquitousness gradually entered the sector of trade and thus finance. As trade is a fundamental social interaction, the socialization of computers for communication and value exchange was a natural evolutionary technological development. Increased socialization via digital channels over the past two decades has led to more interconnections between different nodes and to a complex interwoven structure, where there is no central point that holds the entire edifice in place. As the developmental process of computing continues to become increasingly distributed, the future of computing (and Fintech by extension) is bound to increase in complexity. Selection, diversity, incremental variation and temporal progression (Wagner & Rosen, 2014) will be the hallmarks of tomorrow’s technology and capitalism.

It is the final stage of complexity that poses the greatest intellectual challenge to our understanding of modern day capitalism. As the previous chapters have shown, the increased complexity that has arisen with the socialization and financialisation of banking and commerce has created a system that is opaque and difficult to regulate. The entry of new technologies in the financial system, such as the Blockchain, will help us gain more transparency but will also further add complexity to the system, as every participating node will become a point of both communication and value exchange. If regulators face difficulties identifying points of systemic risk and malicious players in an economy today, the problem is bound to get increasingly complicated in a more inclusive and complex cashless system.

Secondly, we also need to consider the accelerating consilience of technology. This concept needs to be highlighted as it sets the stage for understanding why there is a disconnect between the way economics and technology are studied and analysed. This disconnect is especially important as the pace of technological evolution is quickening and the intervals between its disruptive impacts on the economy are getting shorter, as seen in Figure 4-1.

Figure 4-1. The quickening pace of Kondratiev waves. Source: The Economist, ‘Innovation in Industry - Catch the Wave’, 1999.6

As technology continues to accelerate, it has a profound impact on the economy, as improved technological performance reduces production costs. Wright’s Law (1936) and Moore’s Law (1965) both show that as technological performance increases, the cost of production falls (Figure 4-2). Theodore Wright (who created Wright’s Law) predicted in 1936 that costs would decrease as a power law of cumulative production, whereas Moore’s Law, in its generalised form, describes technologies improving exponentially with time. Research from MIT and the Santa Fe Institute shows that a combination of an exponential decrease in cost and an exponential increase in production would make Moore’s Law and Wright’s Law indistinguishable (as originally pointed out by Sahal) (Nagy et al., 2013).

Figure 4-2. Technology curves and their economic effect on the cost of production. Left image: Wright’s Law (1936); Right image: Moore’s Law (1965) Sources: Left image - Wikipedia; Right image - http://dx.doi.org/10.1371/journal.pone.0052669
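
The equivalence attributed to Sahal can be checked numerically. The sketch below is only an illustration: the function names, starting cost and growth rates are assumptions chosen for the example and do not come from Nagy et al. It assumes cost falls exponentially with time at rate m and cumulative production grows exponentially at rate g; under those assumptions a power law of cumulative production with exponent w = m / g traces exactly the same cost path.

```python
import math

def moore_cost(t, c0=100.0, m=0.2):
    """Moore-style law: unit cost falls exponentially with time t."""
    return c0 * math.exp(-m * t)

def cumulative_production(t, x0=1.0, g=0.4):
    """Assumption for this sketch: cumulative production grows exponentially."""
    return x0 * math.exp(g * t)

def wright_cost(x, c0=100.0, x0=1.0, w=0.5):
    """Wright-style law: unit cost is a power law of cumulative production x."""
    return c0 * (x / x0) ** (-w)

# Illustrative rates only; with w = m / g the two laws coincide.
m, g = 0.2, 0.4
w = m / g

for t in (0, 5, 10):
    x = cumulative_production(t, g=g)
    print(t, round(moore_cost(t, m=m), 4), round(wright_cost(x, w=w), 4))
```

If production did not grow exponentially, the two curves would diverge, which is why the two laws can only be told apart in data where production growth departs from that pattern.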

This link between the combinatorial evolution of technology and the effect it has on a networked economy is key to understanding not just the economic impact of technological progress, but also in understanding a key tenet of modern day capitalism - technology and the economy follow the same patterns of evolution as seen in ecological systems, and in doing so, increase the complexity of the system.

The Blockchain (which itself is a combination of cryptography, computer science, game theory and monetary economics) is just one element that is increasing the complexity of economics. Other technologies, which have also been discussed in this book, show that most new businesses being created today do not depend on a single technology to propose value to clients. It is because of this accelerating consilience that new businesses are able to scale faster than older incumbents did.

If the economy were to be looked at as a network of production agents where each node is an industry, then owing to the consilience of technology, the goods produced by one industry are used as inputs to another industry. As technology from one industry begins to combine with that of another, innovations occur which lead to production cost reductions and the emergence of new technologies. As the inputs get cheaper or better in terms of efficiency, new goods are created. As new products become ubiquitous, improved social connections (including management styles) lead to better distribution of the technology and the associated economies of scale. The greater the connection between the industries, i.e., the more networked the structure of the economy, the faster this phenomenon repeats itself, leading to exponential changes in technological evolution and production cost reductions (see also Farmer and Lafond, 2015).
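
The mechanism described above can be made concrete with a toy simulation. This is a hypothetical sketch, not the model used by Farmer and Lafond: the industries, the base rate of improvement and the spillover coefficient are all invented for illustration. The assumed rule is that an industry's rate of cost reduction in a period equals its own base rate plus a small spillover from the rate achieved by each of its suppliers in the previous period.

```python
def simulate_costs(suppliers, base_rate=0.02, spillover=0.1,
                   periods=20, start_cost=100.0):
    """Return the final unit cost of each industry in a toy production network.

    suppliers maps each industry to the industries whose output it uses.
    Assumed rule (illustrative only): rate of cost reduction this period =
    base_rate + spillover * sum of the suppliers' rates in the previous period.
    """
    cost = {name: start_cost for name in suppliers}
    rate = {name: base_rate for name in suppliers}
    for _ in range(periods):
        new_rate = {
            name: base_rate + spillover * sum(rate[s] for s in links)
            for name, links in suppliers.items()
        }
        cost = {name: cost[name] * (1 - new_rate[name]) for name in suppliers}
        rate = new_rate
    return cost

# Three industries with no links versus the same three fully linked.
isolated = {"steel": [], "machines": [], "software": []}
networked = {
    "steel": ["machines", "software"],
    "machines": ["steel", "software"],
    "software": ["steel", "machines"],
}

print(simulate_costs(isolated))
print(simulate_costs(networked))
```

Under these assumptions the linked industries end the simulation with lower unit costs than the isolated ones, because each industry's improvement feeds into the others. The size of the effect depends entirely on the assumed spillover coefficient.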

We can infer from the above statement, that technology and investment decisions are in a constant state of change and are rarely static. But while the endogenous role of technology in the economy has gained increased traction in academic circles, the study of economics has yet to make the transition towards treating change in the economy as an entropic system immersed in an ecological environment. Even one of the most highly cited papers on this subject, ‘Endogenous Technological Change’ (1990), by Paul M. Romer, the current Chief Economist of the World Bank, is modelled around finding the state of equilibrium in light of technological change.7

As these physical flows of technology are accompanied by monetary flows, technological change and its economic impact are two sides of the same coin. As technological complexity continues to increase, the networked economy of modern day capitalism is bound to get more complex as well. But in spite of this increasing complexity and the accompanying entropy that is tied to it, the economic models that are used today are still based on states of equilibrium.

Therefore, if we are to fix the disconnected views we have about technology and economics, we need to rethink economic theory. As a system gets more complex, new bonds are formed, old bonds are destroyed and the repercussions of these creative-destructive movements create a state of constant change or entropy. So why is it that when we learn about economics, we are taught theories of equilibrium and rational expectations, when the changes occurring are entropic and mostly unforeseen, i.e. not rationally expected? If technology creates complexity, why is it that the theories of economics are based on states of equilibrium in spite of the fact that the changing nature of technology is highly endogenous to economics and capitalism? A primary reason for this mode of thinking lies in how we cognitively interpret the world and why we are constantly trying to predict the future. Sidebar 4-1 offers some neuroscience insights.

Economic Entropy versus Economic Equilibrium

Technology is generally studied as per the scientific method since science is the creative engine of technology. The scientific method was best described by the philosopher Karl Popper, who stated that any science should be scrutinized by decisive experimentation to determine a scientific law. As per this method, also known as Popperian falsifiability, the empirical truth of any scientific law cannot be verified beyond a shadow of a doubt and cannot be known with absolute certainty. Laws can only be falsified by testing: even a single failed test is enough to falsify, and no number of conforming instances is sufficient to verify. Scientific laws, and thus the study of technology, are hypothetical in character and their validity remains subject to testing.

While the scientific method largely applies to the natural sciences, its rigors ensure the establishment of facts. But while we hold the natural sciences to such exacting standards, we do not extend the same discipline of analysis to the social sciences, especially to economics. Indeed, for the past several decades, mainstream economics has been less concerned with describing reality and has preferred to base its theories on an idealised version of the world. The reason for this could possibly be traced back to the neuroscience findings discussed in Sidebar 4-1. Conventional economics, after all, is searching for an answer to a difficult question that is central to human society: it wishes to know how agents in the economy (banks, firms, investors and consumers) might react to the general pattern of the economy they are creating together. Linear models that are easier to digest offer a delicious simplicity.

As a result, to answer this question, contemporary economics has preferred to dogmatically adhere to theories based on what it considers to be reasonable axioms. The most eminent of these theories is the Efficient Market Hypothesis (EMH), which sees the economy as a perfect, rational, mechanistic entity, where asset prices fully reflect all available information. Agents thus logically behave (perform actions, create strategies, make forecasts) in aggregate patterns that would represent all the information at their disposal. As per RET, all agents therefore have a single optimum view of the future, and over time all market participants converge around that view. If one agent were to execute an action that was contrary to this view, another agent would behave in a way to leverage that action to his or her interest and offset the discrepant action. The result is an economy that is static in origin and always trying to achieve equilibrium.

This theory of economics, although very eloquent, is quite absurd. It is completely divorced from actual data and any kind of scientific method. While EMH states that agents are ‘utility maximising’ and make decisions based on RET, almost no agent has the time or the resources to go through the optimisation process and calculate the best course of action. Even if agents had all the information, the complexity of the decision-making process at hand (especially in light of the information overload provided by today’s technologies) means that computation of such a task is bound to be restricted to ‘good enough’ rather than the ‘best one under all circumstances’. Moreover, agents can make choices for moral or superstitious reasons that might have nothing to do with rationality. But neither the EMH, the RET nor any mathematical framework of economics takes these conditions into consideration. Not only are they devoid of good scientific practices, they are detached from human interests and societal reality.

Economics is a social science because it involves people and social interactions that are demonstrated in acts of trade and social allegiances. Although every participant is working towards his or her individual benefit, their view of the world is always incomplete and their actions are always biased. Owing to this, they will not take the appropriate action as stipulated by EMH and RET. George Soros defines this incomplete view of the world as the ‘principle of fallibility’ and the biased decision making process as the ‘principle of reflexivity’ in his ‘Theory of Reflexivity’.

Soros first published his theory of Reflexivity in his book, ‘The Alchemy of Finance’, in 1987. The book was a big success as fund managers and investors clamoured to discover what his investment secrets were. But very little attention was paid to his philosophy of Reflexivity. In a lecture8 he gave following the publication of his book, Soros himself said, “the philosophical arguments [in his book] did not make much of an impression. They were largely dismissed as the conceit of a man who has been successful in business and fancied himself as a philosopher”. However, the theory is interesting because of the principal role uncertainty plays in it.

Uncertainty is something that is normally reserved for risk assessment. EMH and RET do not stipulate exacting codes of conduct or modes of operation to encompass the concept of uncertainty. However, uncertainty needs to be addressed at a fundamental level, as the economic decisions made by agents are steeped in uncertainty. Agents making decisions (be it buying, selling, producing, strategizing, or forecasting) also have to anticipate the future decisions that other agents are going to make. Market prices, after all, are the agents’ weighted-average view of what is going to happen in the future. As the future is contingent on accurately anticipating the decisions of others, uncertainty plays a central role in logical economic decision making.

RET circumvents this problem by postulating that in every market there is a single optimal set of expectations and that agents’ views will converge around it. But this hypothesis bears no resemblance to reality. In practice, every agent has different interests and since these interests cannot be known, agents have to make their decisions in conditions of uncertainty as there is no other choice. Their decisions are bound to be based on information asymmetries and personal biases. This is seen in the form of asset price distortions, fluctuating stock prices and currency volatility. Soros called this phenomenon of volatility and distortion owing to personal decision making the ‘human uncertainty principle’.

The human uncertainty principle, and the volatility that it brings along with it, are the fundamental reasons why the economy is never in a state of equilibrium. Individual decisions made by agents cause price distortions which set in motion boom-bust phenomena, leading to the creation of bubbles, crises and recessions. The past four decades have seen 8 major crises,9 or a crisis every five years on average. If there is anything that is constant in the economy, it is the fact that it is in a constant state of entropy.

Thus, the first point to consider when deciding how to rethink the study of economics is to acknowledge that agents in an economy react to the outcomes that have been created by others as well as themselves. As these decisions lead to volatility, an economy should not be looked at as a machine-like system operating at equilibrium, but more like an ecology where actions, strategies, and beliefs compete simultaneously, creating new behaviours in the process. In other words, an economy is always forming and evolving, and not necessarily in equilibrium.

Secondly, we need to consider the relationship between technology and the economy, for while the economy creates technology, it is also created by technology, as has been explained earlier. The impact of technology on the economy has also been discussed in a number of recent works such as Brynjolfsson and McAfee’s Race Against the Machine (2011). The economy is therefore not just a container of technology but also an expression of it (Arthur, 2014). As technology changes, markets, regulations and policies need to change as well. In essence, technological change restructures the economy.

Owing to the constant change that is predicated by uncertain agent decisions and technological evolution, the economy is in fact usually in disequilibrium and behaves more like an organic entity in a vibrant ecology, where structures are constructed and reconstructed, and where openness to change is emphasised. Just as technology is endogenous to the economy, so is disequilibrium. Equilibrium could exist, but it is a temporary state of existence. Non-equilibrium is the natural state of affairs.

If the economy is seen in this light, then the interactions of technology with agents and the resulting assemblages and adaptations that are seen in an economy are reflective of the study of complex systems, an academic discipline that observes the deep laws of complexity and emergence in any system. Complexity theory was born in the 1970s (Wilson, 1998) and was originally inspired by 19th century physics, specifically the fields of classical mechanics, statistical non-equilibrium physics and thermodynamics (Helbing and Kirman, 2014). The main tenets of complexity theory borrow their conceptions from chaos theory, self-organized criticality and adaptive landscapes, to bring into focus the way complex systems grow, persist and collapse.

The first scholars of complexity theory began their formulations at the Santa Fe Institute, and based their study of complex systems on abstract non-linear transformative computer simulations. They attempted to recreate the same phenomena seen in complex systems, be it rain forests or collisions of protons in the Large Hadron Collider (LHC10), in massive computer-aided simulations. By adopting this approach, they attempted to achieve a higher level of understanding of the complex systems that exist in the real world. In the past, this group of scientists were largely ignored by mainstream academia as traditional academics found their conclusions too vague and metaphoric.

This was due to two reasons: a lack of data and slow computing power, which together left them with insufficient evidence to support their theories. But over the past two decades this has changed. Increasing amounts of data and exponential rises in computing power now allow practitioners of this discipline to recreate and study complex operations, such as simulating the remnants left behind after proton bombardments. As the study of complexity gained traction, a few of the members of the Santa Fe Institute began to ponder whether the methods and tools at their disposal would allow them to study the interactions that were occurring in a complex economic system. This thought experiment led to the creation of complexity economics in the early 1990s.11

Complexity economics does not attempt to sidestep the complexity and entropy of a dynamic, vibrant and ever changing economic ecosystem. On the contrary, the very basis of complexity economics is to use tools and methods that incorporate non-equilibrium phenomena. The mathematics of complexity science is based on non-linearly interacting components (agents), where the system spends long periods of time far from equilibrium. The system may exhibit multiple states of equilibrium, but these states are unstable and temporary as feedback and emergent properties (such as new technology) produce counter-intuitive behavioural traits. The system is studied as a highly connected network with no top-down control parameters, and where agents are unable to behave as they prefer since they cannot act independently.

All these features are observed in our current economic and financial markets, especially when considering the emergence and growing popularity of distributed value exchange networks. But in spite of this, owing to our dependence and attachment to out-dated dogmas of economic thought, we continue to delude ourselves in thinking that the economy functions in a state of equilibrium, and study the subject based on this convenient view.

It is surprising that this mode of thinking is the dominant view not only in academia, but also in economic governance. One might think that if commercial banks were investing millions of dollars in Blockchain technology, then policy makers would also be updating their methods and models of analysis according to the capabilities of the new systems. Would it not be sensible to prepare for a Blockchain future by developing tools and methods that are capable of leveraging the real-time transactional data between multiple economic agents who are making Knightian decisions12 on a transparent cashless Blockchain? It would, of course, but that is not what is happening.

In spite of the similarities between complex economic systems and our current (and future) economic system, we continue to use models based on general equilibrium economic theory, the most popular of which is the Dynamic Stochastic General Equilibrium (DSGE) model. DSGE-based models have been the dominant tools in macroeconomic modelling, economic forecasting and policy construction since the early 1980s and continue to play this role today. For example, in 2009 the Reserve Bank of New Zealand developed and adopted the KITT (Kiwi Inflation Targeting Technology) DSGE model as its main forecasting and scenario tool (Beneš et al., 2009). Hence, to fully understand why we need to consider the use of complexity-based models in the context of the Blockchain, it is essential for us to first review equilibrium economic models.

The Mathematical Wizardry of Equilibrium Economic Models

Today, most central banks use DSGE models for monetary policy analysis and business cycle forecasting. As we have seen in Chapter 3, it is monetary and fiscal policy that play a determining role in guiding the state of markets and the prosperity of a nation. Thus, owing to their fundamental role in monetary policy decision making, it is important to understand the history, abilities and limitations of these models.

Currently, most central banks, such as the Federal Reserve and the ECB,13 use two kinds of models to study and build forecasts about the economy (Axtell and Farmer, 2015). The first, statistical models, fit current aggregate data on variables such as GDP, interest rates, and unemployment to historical empirical data in order to predict or suggest what the near future holds. The second type of models (which are more widely used) are known as “Dynamic Stochastic General Equilibrium” (DSGE) models. These models are constructed on the basis that the economy would be at rest (i.e., in static equilibrium) if it wasn’t being randomly perturbed by events from outside the economy.
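
The premise that the economy rests at equilibrium unless disturbed from outside can be illustrated with a very small sketch. This is not a DSGE model: it is a single assumed equation in which the gap between output and its steady state decays by a fixed factor each period and moves only when an exogenous shock arrives. The function name, the steady-state level and the persistence value are hypothetical.

```python
def output_path(shocks, steady_state=100.0, persistence=0.8):
    """Output leaves its steady state only through exogenous shocks,
    and every deviation then decays geometrically back towards it."""
    gap = 0.0
    path = []
    for shock in shocks:
        gap = persistence * gap + shock  # old gap fades, new shock is added
        path.append(steady_state + gap)
    return path

# A single negative shock in the third period and nothing afterwards.
shocks = [0.0, 0.0, -5.0] + [0.0] * 20
path = output_path(shocks)

print([round(value, 2) for value in path[:6]])
print(round(path[-1], 2))
```

With no further shocks the series drifts back to its steady-state level, which is the behaviour the equilibrium view builds in by construction. Nothing inside this toy economy can generate a boom or a bust on its own.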

Although DSGE models are dynamic macroeconomic models of business cycle behaviour, their foundations are derived from microeconomics, as they assume that the economy is based on optimising agents who make decisions based on rational expectations to maximize their objectives subject to constraints14 (Slobodyan and Wouters, 2012). The agents in the models are represented as households and firms: households consume goods, provide labour, invest and trade in bonds, and accumulate capital in the form of real estate or liquidity. On the flipside, firms manufacture goods, provide employment and try to maximize profits subject to their constraints. The interactions between firms and households guide the economy through various stages of the business cycle, and the central bank changes the nominal interest rate in response to changes in inflation, output, or other economic conditions. The central bank thus acts as a reactionary entity (in the context of the Taylor rule15) in DSGE models. To account for fiscal shocks, the models contain some portion of ‘non-optimizing’ households which deplete all their earnings. In the language of political correctness, these households are referred to as hand-to-mouth households. Notice the emphasis on business cycles, assumptions and rational expectations.
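
To show what a reactive central bank looks like in practice, the sketch below codes a commonly quoted form of the Taylor rule, following Taylor's 1993 formulation. The 2 percent neutral real rate, the 2 percent inflation target and the two weights of 0.5 are the values usually cited for that formulation; they are assumptions here and may differ from the version referenced in the chapter's notes or used in any particular DSGE model.

```python
def taylor_rate(inflation, output_gap, neutral_real_rate=2.0,
                inflation_target=2.0, inflation_weight=0.5, gap_weight=0.5):
    """Nominal policy rate in percent under a Taylor-type rule.

    inflation and output_gap are in percent; the default parameters are the
    commonly cited 1993 values and are assumptions for this sketch.
    """
    return (neutral_real_rate + inflation
            + inflation_weight * (inflation - inflation_target)
            + gap_weight * output_gap)

# Inflation on target and no output gap: the rule returns the neutral nominal rate.
print(taylor_rate(inflation=2.0, output_gap=0.0))   # 4.0

# Inflation above target with a positive output gap: the rule prescribes a higher rate.
print(taylor_rate(inflation=4.0, output_gap=1.0))   # 7.5
```

The rule only ever responds to deviations that have already appeared in inflation and output, which is the sense in which the central bank in these models is reactive.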

The origins of these models can be traced back to the 1940s, following the publication of Keynes’ General Theory (1936). Following the publication of this seminal work, governmental and academic bodies began constructing large-scale macroeconomic models in this style. Specific or ad-hoc rules were postulated and converted into variables, and equations were created based on Keynesian macroeconomic theory. For close to three decades, these neo-Keynesian models were the mainstay of macroeconomic decision making.

But as the 1960’s ended and the 1970’s rolled in, advances in technology challenged the assumptions on which these models were built. As these models depended on a direct trade-off between inflation and unemployment, they were unable to appropriately consider the labour replacement function of technology. Secondly, they were incapable of integrating variables that represented microeconomic changes such as the elastic substitution of goods, the elasticity of labour supply (especially as technology replaced physical labour making economies more service oriented rather than manufacturing intensive), etc. Finally, they did not recognize that the decision-making rules of economic agents would vary systematically with changes in monetary policy. This final flaw is often referred to as the Lucas Critique.

The Lucas Critique and the introduction of Rational Expectations (following a seminal paper by Muth in 1961) led to the demise of neo-Keynesian models. In their stead, DSGE models came into being. The first DSGE models, known as Real Business Cycle (RBC) models, were introduced in the early 1980s and were based on the concepts detailed by Finn E. Kydland and Edward C. Prescott in 1982. RBC models assumed perfect competition in the goods and labour markets and flexible prices and wages, and increasingly gained traction thanks to their success in matching some business cycle patterns (Slanicay, 2014). These models saw business cycle fluctuations as the efficient response to exogenous changes, which implied that business cycles were created by ‘real’ forces and that productivity shocks were created by technological progress.

But in spite of the theoretical underpinning that technology was the main source of business fluctuations, after a period of use the RBC model began to lose favour with academics and policy makers. A host of empirical studies found that the contribution of technology shocks to business cycle fluctuations was relatively small, and the predictions made by RBC models with regard to labour and productivity in response to technology shocks began to be rejected. A second reason for their rejection was their view on monetary policy - in most RBC models, monetary policy was taken to have no effect on real variables even in the short run. This was at odds with the longstanding belief that monetary policy had the power to influence output and unemployment in the short term (Christiano et al., 1998) (Also see ‘The Trouble With Macroeconomics’, Romer, 2016).

Owing to these differences, the RBC models began to undergo a phase of evolution which led to the creation of another class of DSGE models known as New Keynesian models (NK models). These models were built on top of the existing framework of RBC models, but their construction was also influenced by a new theory that had begun to gain increasing traction in the field of economics at that time - contract theory. This theory might be familiar to most readers, as two of the three16 economists who developed the theory received the Nobel Memorial Prize in Economic Sciences in October 2016.

Contract theory introduced the concepts of price and wage rigidities (See Sidebar 4-2 and ‘Theories of Wage Rigidity’, Stiglitz, 1984) into NK models. The older RBC models were thus enhanced with some Keynesian assumptions, namely competition on goods, labour and rigidities. Once nominal price and wage rigidities were included in the model, changes in the short-term nominal interest rate were no longer offset by identical changes in inflation, which caused the real interest rate to vary over time. As a shift in the real interest rate affects consumption and investment, output and employment levels adjusted in relation to the new level of aggregate demand. Monetary policy was thus no longer neutral in the short term, as it was in the older RBC models.
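The mechanism can be made concrete with a deliberately simple piece of arithmetic. The sketch below assumes the real rate is the nominal rate minus inflation, and uses a hypothetical `inflation_pass_through` parameter (1.0 for fully flexible prices, less than 1.0 for sticky prices) to stand in for the degree of rigidity. It is an illustration of the argument, not a New Keynesian model.

```python
def real_rate_change(nominal_change, inflation_pass_through):
    """Change in the real interest rate after a change in the nominal rate.

    inflation_pass_through is a hypothetical parameter: the share of the
    nominal change that shows up immediately as a change in inflation.
    """
    inflation_change = inflation_pass_through * nominal_change
    return nominal_change - inflation_change


if __name__ == "__main__":
    # Flexible prices: inflation moves one-for-one, the real rate is unchanged
    print(real_rate_change(1.0, 1.0))
    # Sticky prices: inflation moves only partially, so the real rate moves
    print(real_rate_change(1.0, 0.25))
```

With full pass-through the policy move leaves the real rate untouched, which is the neutrality result of the RBC models; with partial pass-through the real rate shifts and can affect consumption and investment.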

The evolution of DSGE models, however, is still far from complete. Although contract theory allows for the introduction of rigidities and allows monetary policy to be an effective tool in the short term, empirical facts have shown that there are gaps in the models’ prognostications. Recently, economists such as Gregory Mankiw, Ricardo Reis and Laurence Ball have shown that the sticky price effects in these models do not explain observed persistent inflation (See Mankiw & Reis, 2001, 2002). This has led to the introduction of a new kind of rigidity - sticky information. Under sticky information, knowledge about macroeconomic conditions disseminates slowly through the economy, which affects the decision-making process of households and firms, and thus affects wages and prices (Reis, 2006). This insight has also led to the creation of SIGE (Sticky Information General Equilibrium) models, a new variant of DSGE models.
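A rough way to picture sticky information is to assume, in the spirit of the Mankiw and Reis setup, that in each period only a fixed fraction of agents updates its information. The update probability of 0.25 per quarter used below is an illustrative assumption, and the function name is hypothetical.

```python
def share_informed(update_probability, periods):
    """Share of agents that have learned about a shock after 1..periods.

    Assumes each agent updates independently with the same probability
    every period, so the uninformed share shrinks geometrically.
    """
    return [1.0 - (1.0 - update_probability) ** k
            for k in range(1, periods + 1)]


if __name__ == "__main__":
    for quarter, share in enumerate(share_informed(0.25, 8), start=1):
        print(f"quarter {quarter}: {share:.1%} of agents informed")
```

Under these assumptions roughly a third of agents are still acting on outdated information a full year after the shock, which is one way a sluggish response of wages and prices can arise.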

All DSGE models share the core assumptions on the behaviour of households and firms and were constructed to represent the self-regulating spirit of markets and economies. However, they are still predicated on a state of equilibrium. While firms adjust to new demand levels and changing interest rates, in the long run, all prices and wages are said to adjust and return the economy to ‘its natural equilibrium’ (Slanicay, 2014). As per these models, in the case of an unanticipated shock, the economy would deviate from its equilibrium, but after a certain amount of time it would revert back to the equilibrium. The length of the adjustment process is influenced by the degree of nominal and real rigidities (Goodfriend and King, 1997) and the graduality of the adjustment process would make room for potential welfare enhancing economic (monetary) policy which would minimize the distortions, and thus stabilize the economy around its equilibrium (Slanicay, 2014).

The extensive use of DSGE models in the past few decades has not been without strife. As the economy has become increasingly interconnected, greater amounts of data are available to agents. Consequently, the rational expectations of agents have begun to fluctuate with greater intensity than before. As the expectations of firms and households are unobservable, older DSGE models cannot distinguish whether changes in activity are a function of altered expectations today or lagged responses to past plans. For example, they cannot determine whether a rise in business capital investment is attributable to revised expectations about sales or is part of a sequence of gradual capital acquisitions related to earlier investment plans (Brayton et al., 1997).

As a result of this, the Federal Reserve began developing and using a new tool for macroeconomic policy analysis in 1996 (it has undergone periodic revisions), which is referred to today as the FRB/US model. As per the description given on the website of the Board of Governors of the Federal Reserve System, ‘One distinctive feature compared to dynamic stochastic general equilibrium (DSGE) models is the ability to switch between alternative assumptions about expectations formation of economic agents. Another is the model’s level of detail: FRB/US contains all major components of the product and income sides of the U.S. national accounts’. (Text in italics taken from an article published on the Board of Governors of the Federal Reserve website in April 2014. See website link below17).

Owing to the scope and scale of the model, the FRB/US model can be considered the most advanced macroeconomic policy tool in use today (although there are various critiques of this model dating back to the 1970s, when the conceptualisation of this model first began). But the FRB/US model is still ‘a large-scale estimated general equilibrium model of the U.S. economy’ (Federal Reserve Board, 2014). The key words to be underlined in the previous phrase are ‘estimated’ and ‘equilibrium’. Almost every model that has existed from the 1960s until today is based on these two terms. Whether we use Traditional structural models, Rational expectations structural models, Equilibrium business-cycle models, or Vector Auto Regression (VAR) models (See Notes ‘Types of Macroeconomic Models’), the base parameters on which these models are built are assumptions, estimations and equilibrium.

Secondly, there is no real inclusion of the financial market. The family of DSGE macroeconomic models, which we have rapidly covered, emerged as a synthesis between the Chicago school of thought and the new Keynesian approach over the period of the Great Moderation (1983-2008). This was a period during which the relative stability of the economy allowed for policy approaches that could rely solely on the use of monetary policy (i.e. the rate of interest). This was because the Chicago-led school of thought considered that all that was needed to face business cycles and/or recessive trends was an active monetary policy. Some thought that not even that was needed, since they believed that free market adjustment would always find the way out (Garcia, 2011). This belief was also shared by the new-neo-Keynesians, who believed that fiscal policy was not needed to deal with business cycles or recessive trends. Hence both schools of thought converged on the idea that all that was needed to avert the risks of business cycles or recessive trends was a clever monetary policy guided by a monetary rule (García, 2010). The result was the gradual crowding out of fiscal policy and even less attention to fiscal policy alternatives. However, the crisis has shown us how ineffective economic policy can be if it is guided only by monetary policy. It is also one of the main reasons for proposing a Blockchain based fiscal policy system. (Also see: ‘The Case for Monetary Finance18 - An Essentially Political Issue’, Turner, 2015).

This widely accepted assumption, that financial markets would function as the best determinants of price because agents make logical decisions and that there was therefore no need to factor in models of financial markets, was directly related to the EMH. If markets effectively reflect the prices of assets, then why bother modelling the financial sector? This rationale was based on two assumptions - firstly, that the financial sector always tends to be in equilibrium, and secondly, that financial markets are complete, i.e. imbalances (defaults, insolvencies, illiquidity, etc.) are balanced out over time. But as we have seen in the previous chapters, this is not reflective of reality. Thus, it is unsurprising to learn that DSGE models are unable to capture the full view of international financial linkages (Tovar, 2008).

The RET premise is another pitfall of DSGE models. There is sufficient scientific evidence, notably from Douglass C. North, winner of the 1993 Nobel Prize for Economics, that under uncertainty, there is no certitude of rational behaviour. In his own words,

“Frank Knight (1933) made a fundamental distinction between risk and uncertainty. In the case of the former, probability distributions of outcomes could be derived with sufficient information and therefore choices [are] made on the basis of that probability distribution (the basis of insurance). But in the case of uncertainty no such probability distribution is possible and in consequence, to quote two of economics most eminent practitioners “no theory can be formulated in this case” (Arrow, 1951 p. 417) and again “In cases of uncertainty, economic reasoning will be of little value” (Lucas, 1981, P 224). But human beings do construct theories all the time in conditions of pure uncertainty-- and indeed act on them … It is the widespread existence of myths, taboos, prejudices and simply half-baked ideas that serve as the basis of decision making. Indeed, most of the fundamental economic and political decisions that shape the direction of polities and economies are made in the face of uncertainty.” (Douglass C. North, ‘Economics and Cognitive Science’, Procedia - Journal of Social and Behavioural Sciences, 2010).

Another Nobel prize winner, Daniel Kahneman,19 stated a similar point in his Prospect Theory. Kahneman was able to prove empirically that decisions under uncertainty did not point towards a ‘rational behaviour of agents’ and that it was risk aversion that dominated behaviour. Albert Bandura, Professor Emeritus of Social Science in Psychology at Stanford University, makes a similar claim in his Social Cognitive Theory, in which he states that,

"Rationality depends on reasoning skills which are not always well developed or used effectively. Even if people know how to reason they make faulty judgments when they base their reasoning on incomplete or erroneous information, or they fail to consider the full consequences of different choices. They often misread events through cognitive biases in ways that give rise to faulty beliefs about themselves and the world around them. When they act on their misconceptions, which appear subjectively rational to them, they are viewed by others as behaving in an unreasonable or foolish manner. Moreover, people often know what they ought to do but are swayed by compelling circumstances or emotional factors to behave otherwise.” (Bandura, ‘Social Cognitive Theory’, 1998).

It is important to emphasise the role of RET in the context of DSGE models, as expectations act as an input variable in these models. As was seen in both the DSGE and the FRB/US models, agent expectations are the main channel through which policy affects the economy (Sbordone et al., 2010). But if the structural parameters are based on wrongly assumed microfoundations, the model is bound to make bad predictions and have errors even though it is technically Lucas-robust.

Finally, it is their attachment to equilibrium that is the bane of these models. DSGE models are based on the absolute belief that market adjustment will always tend to equilibrium. This belief is based on four principles: (i) Under budget constraints, consumers always maximize their individual utility; (ii) Under resource constraints, producers always maximize their profits; (iii) Markets may become turbulent owing to exogenous shocks, but they always return to a state of equilibrium after a few quarters; and (iv) Agents make decisions based on rational expectations. Hence, even if a shock were to move the economy away from steady state growth, within a few quarters the market would go through a dynamic adjustment process and return to its previous state.

DSGE models are therefore based on an assumption of a steady state equilibrium of the economy. They allow for real amounts of time being taken to move towards that steady state and also allow for dynamic interaction between three integrated blocks: supply, demand and monetary policy. Hence the “dynamic” aspect of the DSGE label - in the sense that expectations about the future are a crucial determinant of today’s outcomes (Sbordone et al., 2010). They also allow for a random (i.e. stochastic) element in the path being taken towards that steady state. But the underlying premise is the existence of an omni-present state of equilibrium. Figure 4-4 offers a graphical interpretation:

Figure 4-4. The Basic Structure of DSGE Models. Image source: ‘Policy Analysis Using DSGE Models: An Introduction’, Federal Reserve Bank of New York

The reason these models always tend to equilibrium is that they are built to perform in this way, not that they are accurately interpreting the real economy. Willem Buiter, Chief Economist of Citigroup, points out that one of the main reasons for this mode of construction is that policy decisions tend to create non-linear behaviours. As the interaction of this non-linearity with agent uncertainty creates complex mathematical problems, DSGE modellers removed the non-linear elements and reduced the complex evolution of random variables to a linear system with additive stochastic variations. In an article titled ‘DSGE models and central banks’, Camilo E. Tovar, a senior economist at the IMF, also supports this argument by stating that, ‘there are important concerns related to the degree of misspecification of current DSGE models.... DSGE models are too stylized to be truly able to describe in a useful manner the dynamics of the data’ (Tovar, 2008). In the same article, published by the Bank for International Settlements, he also states that, ‘Possibly the main weaknesses in current DSGEs is the absence of an appropriate way of modelling financial markets’.

The premises on which these models are constructed are, however, representative of a bigger problem. In a short article titled ‘The unfortunate uselessness of most ‘state of the art’ academic monetary economics’, posted on the website of the Centre for Economic Policy Research (CEPR), Willem Buiter explains the consequences of using DSGE models based on linearity and equilibrium,

‘When you linearize a model, and shock it with additive random disturbances, an unfortunate by-product is that the resulting linearized model behaves either in a very strongly stabilising fashion or in a relentlessly explosive manner. There is no ‘bounded instability’ in such models. The dynamic stochastic general equilibrium (DSGE) crowd saw that the economy had not exploded without bound in the past, and concluded from this that it made sense to rule out, in the linearized model, the explosive solution trajectories. What they were left with was something that, following an exogenous random disturbance, would return to the deterministic steady state pretty smartly. No L-shaped recessions. No processes of cumulative causation and bounded but persistent decline or expansion. Just nice V-shaped recessions....

… ‘The practice of removing all non-linearities and most of the interesting aspects of uncertainty from the models that were then let loose on actual numerical policy analysis was a major step backwards. I trust it has been relegated to the dustbin of history by now in those central banks that matter’....

… ‘Most mainstream macroeconomic theoretical innovations since the 1970s … have turned out to be self-referential, inward-looking distractions at best. Research tended to be motivated by the internal logic, intellectual sunk capital and aesthetic puzzles of established research programmes rather than by a powerful desire to understand how the economy works - let alone how the economy works during times of stress and financial instability’…

(Buiter, 2009)
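Buiter’s point about linearized models can be sketched with the simplest possible linear system, in which the deviation from steady state in each period is a fixed multiple of the previous period’s deviation. The persistence values and the function name below are illustrative assumptions; the sketch traces a single one-off shock rather than a full stochastic simulation.

```python
def impulse_response(persistence, shock=1.0, periods=8):
    """Path of the deviation from steady state after a one-off shock.

    Implements x_t = persistence * x_{t-1}, starting from x_0 = shock.
    """
    path = [shock]
    for _ in range(periods):
        path.append(persistence * path[-1])
    return path


if __name__ == "__main__":
    # |persistence| < 1: the shock dies out and the system returns to rest
    print("stabilising:", impulse_response(0.5))
    # |persistence| > 1: the deviation grows without bound
    print("explosive:  ", impulse_response(2.0))
```

A linear system of this kind offers only these two behaviours. Ruling out the explosive branch, as the quotation describes, leaves a model that can only return to its steady state.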

These statements reflect not just a bad ideation of economic theory but also a mathematical ignorance and arrogance that is turning economics into a poster child for the bad application of science. Paul Romer, the current Chief Economist at the World Bank, explored the growth of this tendency over the past seven decades in his paper, ‘Mathiness in the Theory of Economic Growth’ (2015). Mathiness, Romer defines, ‘is [what] lets academic politics masquerade as science. Like mathematical theory, Mathiness uses a mixture of words and symbols, but instead of making tight links, it leaves ample room for slippage between statements in natural versus formal language and between statements with theoretical as opposed to empirical content’. (Romer, 2015) (Also see ‘The Trouble With Macroeconomics’, Romer, 2016).

Romer’s analysis tests the mathematical foundations of a number of seminal articles in the field of economics including - Solow’s 1956 mathematical theory of growth, Gary Becker’s 1962 mathematical theory of wages, McGrattan and Prescott’s 2010 paper on price-taking models of growth and Boldrin and Levine’s 2008 paper on Perfectly Competitive Innovation. He also analyses the work of other prominent economists such as Robert Lucas (Nobel Prize in Economics in 1995) and Thomas Piketty (who wrote Capital in the Twenty-First Century) among others. His analysis shows how Mathiness has been used repeatedly to bend data to fit a model. More disturbingly, these practices have been accepted by the academic community which makes the discipline of economics divergent from Popper’s scientific method of testing. A few extracts from his paper illustrate these statements,

’In addition to using words that do not align with their formal model, Boldrin and Levine (2008) make broad verbal claims that are disconnected from any formal analysis. For example, they claim that the argument based on Euler’s theorem does not apply because price equals marginal cost only in the absence of capacity constraints. Robert Lucas uses the same kind of untethered verbal claim to dismiss any role for books or blueprints in a model of ideas: “Some knowledge can be ‘embodied’ in books, blueprints, machines, and other kinds of physical capital, and we know how to introduce capital into a growth model, but we also know that doing so does not by itself provide an engine of sustained growth.” (Lucas 2009, p.6) ....’

’…the fact that oversight was not picked up at the working paper stage or in the process leading up to publication may tell us something about the new equilibrium in economics. Neither colleagues who read working papers, nor reviewers, nor journal editors, are paying attention to the math.... Perhaps our norms will soon be like those in professional magic; it will be impolite, perhaps even an ethical breach, to reveal how someone’s trick works’ (Romer, 2015).

Romer’s paper was inspired by a paper published in 2014 by Paul Pfleiderer, a professor of finance at Stanford University. In his paper, titled ‘Chameleons: The Misuse of Theoretical Models in Finance and Economics’, Pfleiderer discusses how theoretical models in finance and economics become “chameleons” when they are built on assumptions with doubtful connections to the real world but their conclusions are uncritically (emphasis added) applied to understanding our economy. Pfleiderer shows that most economic models ‘cherry pick’ the data they want to use to support a desired result. Any data that does not perform this function is excluded, and as a result, the conclusions depend not only on what is included but also on what is excluded. In his view, this occurs because models, and specifically their assumptions, are not always subjected to the critical evaluation necessary to see whether and how they apply to the real world (Pfleiderer, 2014).

Pfleiderer provides a few examples of chameleon models in his paper. However, there is one that bears citing and emphasis as it is directly related to the subject of debt which is one of the principal topics in this book. Sidebar 4-3 presents an extract from his paper and provides a fitting conclusion to this critique of equilibrium economic models.

At the very least chameleons add noise and contribute to public misunderstanding and confusion about important issues

The analysis provided by Pfleiderer shows us why a new approach to understanding the economy is urgently required. DSGE models were unable to predict the crisis because they are based on unrealistic assumptions, just like infinite credit, and are incredibly simple caricatures of the real world which fail to consider that the economy is never in equilibrium, but is rather in a continual state of adaptive change.

What we require today is an approach to economic modelling that is based on the complex interactions that take place in real economies which create a state of entropy rather than equilibrium. Fortunately, there is a way to perform this feat using the methods and tools of complexity science and via agent-based modelling (ABM). Using these methods, we can simulate the actions of millions of artificial households, firms, and people in a computer and watch what happens when they are allowed to interact.
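To give a flavour of what such a simulation looks like, the following is a minimal sketch of an agent-based market. Every rule in it is a hypothetical assumption made for illustration: households have fixed budgets, compare the prices of two randomly chosen firms and buy from the cheaper one if they can afford it, and firms nudge their prices up or down by 5% depending on whether their sales exceed an equal share of the market. It is a toy, not a calibrated model, and it requires at least two firms.

```python
import random


def simulate_market(num_households=200, num_firms=10, steps=50, seed=0):
    """Toy agent-based market; returns final prices and average-price history."""
    rng = random.Random(seed)  # seeded so that runs are reproducible
    prices = [1.0] * num_firms
    budgets = [rng.uniform(0.5, 1.5) for _ in range(num_households)]
    equal_share = num_households / num_firms
    history = []

    for _ in range(steps):
        sales = [0] * num_firms
        for budget in budgets:
            # Each household samples two firms and prefers the cheaper one
            first, second = rng.sample(range(num_firms), 2)
            firm = first if prices[first] <= prices[second] else second
            if prices[firm] <= budget:
                sales[firm] += 1
        for firm in range(num_firms):
            # Firms adapt: raise the price when demand is strong, else cut it
            if sales[firm] > equal_share:
                prices[firm] *= 1.05
            else:
                prices[firm] *= 0.95
        history.append(sum(prices) / num_firms)
    return prices, history


if __name__ == "__main__":
    final_prices, average_price = simulate_market()
    print("average price over time:", [round(p, 3) for p in average_price[:10]])
    print("final prices:", [round(p, 3) for p in final_prices])
```

Nothing in the code imposes an equilibrium. Whatever pattern of prices appears is produced by the interaction of the agents, and changing a behavioural rule changes the pattern.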

By adopting such new techniques not only will we make the study of economics more scientific, but we will also be introducing a new way to think about economics. The critique of DSGE models shows us how the study of economics has evolved over the years. However, most of the effort has been directed towards altering bits and pieces of existing theories in relatively minor ways to explain empirical facts. Economists cling to past theories and attempt to rectify them to better understand the changing economy. But this approach has provided little insight to understanding the structural changes that emerge as an economy evolves. For example, the idea that an economic system could develop towards a distributed value exchange system with bottom-up participation and with new patterns of competition, efficiency and growth has received little attention because the concept of participatory decentralised organisation has been regarded as incompatible with efficient economic outcomes (Helbing and Kirman, 2014).

Research in economics today consists of working with modest amounts of data on a personal computer to create and solve abstract, simple models that bear little resemblance to reality (Axtell and Farmer, 2015). Current models distil millions of individuals into one household and thousands of firms into just one firm, and then rationally optimize their risk-adjusted discounted expected returns over an infinite future. And they do all of this with a laptop and some maths. This is surprising, since we create and run models that use the largest computers to crunch petabytes of data to predict global climate change, for oil/gas exploration, for molecular modelling and for weather forecasting. But when it comes to modelling the economy, apparently a laptop and some cherry-picked data will suffice.20 The fact that financial firms on Wall Street use teams of meteorologists running a bank of supercomputers to gain a small edge over others in identifying emerging weather patterns, but either do not use DSGE models or find them quite useless, speaks volumes about their worth. If economists’ DSGE models offered any insight into how economies work, they would be used in the same way (BloombergView, 2014).

The main problem with current mainstream economics is its methodology. The addiction to building models that show the certainty of logical entailment has been detrimental to the development of a relevant and realist economics. Insisting on formalistic (mathematical) modelling forces the economist to give up on realism and substitute axiomatics for real-world relevance (Syll, 2016). However, reality refuses to bend to the desire for theoretical elegance that an economist demands from his model. Modelling itself on mathematics, mainstream economics is primarily deductive and based on axiomatic foundations (Sinha, 2012).

Complexity economics offers us a chance to rethink the way we study economics and leverage the transparency offered by the Blockchain. As we begin to learn about complexity economics, we must remember two points: First, the economy is never in equilibrium, but is rather in a continual state of adaptive change. Second, we shall embrace the complex interactions that take place in real economies through a relatively new computational technology called agent-based modelling (ABM). Let’s see how we can teach an old dog new tricks.

An introduction to Complexity Economics and Agent Based Modelling

“Science is the poetry of reality”, Richard Dawkins

If we are to provide a new perspective on how to look at and measure the economy, then we need to begin by asking why economics has adopted this ontological notion that the economy is an equilibrium system and borrowed the mathematical toolkit that goes with it. The answer to this question lies in the beginnings of economics as a formal branch of study.

In the 1870s, Léon Walras, a French mathematical economist, came across a book titled ‘The Elements of Statics’ (Eléments de statique), by Louis Poinsot, a French mathematician and physicist. The book represented what was at that time the cutting edge in the fields of mathematics and physics, and explored concepts such as the analysis of static equilibrium systems and simultaneous and interdependent equations (Beinhocker, 2007) (Also see Mirowski, ‘More Heat than Light’, 1991). Walras borrowed heavily from this book in coming up with his own theories, which resulted in the formulation of the marginal theory of value and the development of general equilibrium theory. He was not alone in adopting this approach: another economist of the time, William Stanley Jevons, performed the same exercise by borrowing from another renowned physics textbook, the ‘Treatise on Natural Philosophy’ by Lord Kelvin and Peter Guthrie Tait,21 and independently developed the marginal utility theory of value.22

These economists opened a new period in the history of economic thought by making the case that economics is a mathematical science concerned with quantities. Indeed, Walras went so far with Poinsot’s ideas that he reduced economic agents to atoms who functioned on physical laws and which were devoid of learning or adapting new behaviours (Walker, 1996).

What followed from these early days was the gradual progression towards treating economics as a mathematical subject based on equilibrium equations, in which agents behave as per the aggregate tendencies of the market (Refer to the previous note on ‘Mathiness’ by Romer). This legacy can still be seen today in the form of maximization principles (e.g. utility) that mirror the framing of physics in terms of minimization principles (e.g. the principle of least action) (Sinha et al., 2010), and in the form of the ‘flow of money’, which was created by Bill Phillips (of Phillips Curve fame), based on his construction of the MONIAC23 (Figure 4-5). The mapping of macroeconomic movements to the flow of fluids shows that these thinkers looked at the economy as a subject of physical inquiry.

Figure 4-5. Professor A.W.H (Bill) Phillips with the Phillips Machine (MONIAC). Source: ‘The Phillips Machine Project’ by Nicholas Barr, LSE Magazine, June 1988, No 75.

However, the introduction of mathematical game theory in the 1940s by John von Neumann threw a monkey wrench into this link between economics and physics. When game theory was introduced (See ‘Theory of Games and Economic Behaviour’, von Neumann and Morgenstern), economists immediately realised that the maths of this field could be used to study how selfish agents behave in order to get the better of other agents in an economy. But in experiments conducted with actual subjects, the agents showed that ‘irrational’ cooperative action was the norm (Sinha et al., 2010). Economists nonetheless embraced the abstract idea that an economy converges to equilibrium, where the negative actions of one agent are offset by the positive actions of another. It is this fallacy that complexity economics attempts to solve.

What we have seen until now is that while we conduct economic study using deterministic and axiomatized models, economic phenomena are not derived from deterministic, axiomatic, foreseeable or mechanical precepts. The reductionist approach that was adopted by Classical and Neo-Classical economists in the past ignored the dependencies and interconnections between different agents, their influence on each other and the macroeconomic consequences of these interactions. Instead of analysing these interactions, economists reduced the general behaviour of a system to a set of essential rules and then studied these parts individually, in an attempt to gain a picture of the whole. This provides them with an aggregated view of specific economic phenomena, which are then generalized and applied as the rational rules of conduct for the economy. This reductionism excludes the study of unfolding patterns created by agents and simplifies their individual consequence, creating a separation between reality and its formal representation (Bruno et al., 2016).

Complexity economics challenges the fundamental orthodox assumptions of equilibrium, archetypal agents and rational behaviour. It attempts to change the narrative of the study of economics by emphasising the importance of network effects, feedback loops and the heterogeneity of agents. Axiomatisation, linearization and generalization are replaced by a study of the interconnections and relationships among agents, and of the effect they have on their economic environment and vice versa. Heterogeneous interacting agents, who make new decisions based on the new information they receive from their environment and from other agents, replace the traditional ‘rationally’ minded independent agents who make decisions whilst standing still in time (Gallegati and Kirman, 2013).

Time and Information play key roles in this new paradigm of economic thought, as they highlight the importance of the meso-layer, which is the connective tissue between the micro and macro structures of an economy. In complexity economics, abstract and dogmatic theories are replaced by a study of the patterns that form when interacting agents are exposed to new information and make decisions that influence others around them, changing the structure of the economic environment in doing so. As the economy and the decisions made by agents change over time, new structures are formed. Complexity economics is thus about formation - the formation of new structures within an economy, based on exogenous and endogenous changes, and how these formations affect the agents who are causing them (Arthur, 2013).

Information also plays a key role, as changes in decisions and the introduction of new technologies affect the concentration and dispersion of knowledge and knowhow. As information is constantly changing, agents are bound to interact to increase their knowledge of these changes. As interaction is ever-present, it adds to the non-linearity and to the development of shocks, which breaks the direct proportionality between cause and effect: a small shock can lead to a large effect, depending on how the agents interpret the shock. This also means that the standard tools of physics cannot be used sic et simpliciter, owing to the agents’ cognitive ability to process information and make consequential decisions based on new information.

The aspect of information merits highlighting, not just because it is ubiquitous in today’s digital economy, but also because it is directly related to the prosperity of an economy. In his book, ‘Why Information Grows’ (2015), César Hidalgo24 shows that information is a growing entity that has the ability to manifest itself in new products, which are essentially cauldrons of information. The production of new products is based on the accumulation of knowledge and expertise in networks of agents. The higher the number of links between agents, the larger the network and the greater the accumulation of knowledge. This concept is important to understand, as the growth of economies is based on the growth of information. Hidalgo shows that more prosperous countries are those that are better at making information grow, while those regions which produce networks with less porous boundaries hinder the growth of information and are limited in their long-term adaptability to economic changes.

Thus, a crucial part of complexity economics is the flow of information and how this information affects agents. The network of the economy can still produce aggregate patterns, but what is important to note is that firstly, these patterns are evolutive, and secondly, it is the individual decisions of agents that cause a pattern to emerge. The agent is affected by this pattern and interprets it as new information. As new information is introduced, the decisions of the agents change, and a new pattern begins to form. The two are not separated but intrinsically linked. Aggregation is not just the process of summing up the market outcomes of individual agents to obtain an economy-wide total. It is the two-way interdependency between agents and the aggregate properties of the system: interacting elements produce aggregate patterns that those elements in turn react to (Gallegati and Kirman, 2013). The meso-layer thus plays a key role in this study of economics.
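The two-way link between individual decisions and the aggregate pattern can be illustrated with a deliberately small sketch. It is not a model taken from the works cited here: it assumes a threshold rule in which each agent acts once enough other agents are already active, and the function name `run_cascade` and both populations are hypothetical. It also shows how a small change to a single agent can alter the aggregate outcome completely.

```python
def run_cascade(thresholds):
    """Return the number of active agents at each step until it settles.

    Assumed rule (for illustration only): an agent becomes active once
    the number of currently active agents reaches its own threshold.
    """
    active = 0
    history = [active]
    while True:
        # Agents read the aggregate pattern as new information...
        new_active = sum(1 for t in thresholds if t <= active)
        # ...and their decisions in turn reshape that pattern.
        if new_active == active:
            return history
        active = new_active
        history.append(active)


# Hypothetical population of 10 heterogeneous agents: agent i acts once
# at least i agents are already active.
uniform = list(range(10))
# The same population, except one agent's threshold moves from 1 to 2.
perturbed = [0, 2, 2, 3, 4, 5, 6, 7, 8, 9]

full_cascade = run_cascade(uniform)       # every agent ends up active
stalled_cascade = run_cascade(perturbed)  # the pattern never takes off
print(full_cascade[-1], stalled_cascade[-1])
```

Summing the agents' thresholds tells us very little here; the outcome depends on how each decision feeds back into the pattern that the next agent observes.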

In contrast to the Walrasian approach, where agents do not interact at all, complexity economics looks at interactions as the base of economic development, since these interactions not only influence macro patterns, but also create progressively complex networks that allow agents to compensate for having limited information. Seeing the economy as a place where actions and strategies constantly evolve, where time becomes important, where structures constantly form and re-form, where phenomena appear that are not visible to standard equilibrium analysis, and where a meso-layer between the micro and the macro becomes important (Arthur, 2013) is the defining feature of complexity economics.

Complexity economics is thus the study of a history-dependent, living and always evolving system whose properties emerge as a result of the interaction between self-adapting individual agents, who, in adapting to new information, change the system-level behaviour and the overall state of the system. Breaking the system down into individual components thus destroys the system’s properties (Bruno et al., 2016). Hence the main areas of study in complexity economics include self-organization, pattern development and pattern recognition, agent decision-making, network propagation, interdependence of interactions, emergence, learning and memory, endogenous innovation, institutional effects, unpredictable dynamics, heterogeneity, path dependence, topology, change and evolution, holism and synergy (Manson, 2001). This interdisciplinary branch of study combines elements of physics, mathematics, computer science, ecology, engineering and, of course, economics.

It is not my intention to provide a complete education of complexity economics in this chapter. Firstly, I am not qualified to do so, and secondly, there is a growing body of researchers and academics who have done this admirably well in recent times. Table 4-1 lists some of the work I have referred to which could provide an anchor to your own investigative efforts.

Table 4-1. Reference books for Complexity Economics and Agent Based Modeling

| Author(s) | Book |
|---|---|
| W. Brian Arthur | Complexity and the Economy (2014) |
| Dirk Helbing | Quantitative Sociodynamics (2010) |
| Dirk Helbing | Thinking Ahead - Essays on Big Data, Digital Revolution, and Participatory Market Society (2015) |
| César Hidalgo | Why Information Grows: The Evolution of Order, from Atoms to Economies (2015) |
| Sitabhra Sinha, Arnab Chatterjee, Anirban Chakraborti, Bikas K. Chakrabarti | Econophysics: An Introduction (2010) |
| Sitabhra Sinha, Arnab Chatterjee, Anirban Chakraborti, Bikas K. Chakrabarti | Econophysics of income and wealth distributions (2012) |
| Linda F. Dennard, Kurt A. Richardson and Göktuğ Morçöl | Complexity and Policy Analysis (2008) |
| Uri Wilensky and William Rand | An Introduction to Agent-Based Modelling: Modelling Natural, Social, and Engineered Complex Systems with NetLogo (2015) |
| David S. Wilson and Alan Kirman | Complexity and Evolution: Toward a New Synthesis for Economics (2016) |
| Jean-Luc Gaffard and Mauro Napoletano | Agent-based models and economic policy (2012) |

What I hope to provide in the remaining part of this chapter is a summary of the key areas of study that are associated with complexity economics, so that you may identify a branch of study that piques your interest and continue your own research in this domain. The pleasure of studying complexity economics is that as you embark on this voyage, you will be exposed to a plethora of influences from other disciplines, giving you a holistic view of science. With that introduction, let’s look at some of the key topics in this discipline:

Dynamics

Non-Linearity

Power Laws

Networks

Feedback loops

Path Dependence

Emergence

Agents

Dynamics

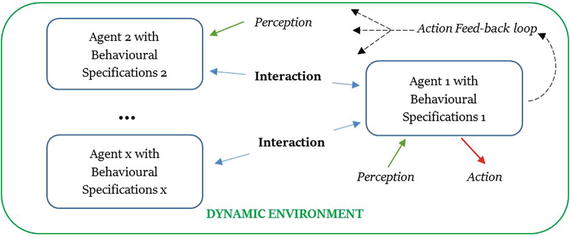

In complexity economics, agents are influenced by others and by the behaviour of the system as a whole, leading to emergent behaviour at the aggregate level. While the Walrasian economy is closed, static, and linear in the sense that it can be understood using the tools of algebraic geometry and manifold theory, the complex economy is open, dynamic, nonlinear, and generally far from equilibrium (Beinhocker, 2007). The dynamic interactions are based on the specifications of the agents - agents try to act as rationally as they can but are influenced by other agents and by the aggregate behaviour of the changing marketplace, causing them to deviate from previously optimal behaviour. Figure 4-6 graphically interprets this statement:

Figure 4-6. Representation of a dynamic environment and agents’ decision-making influences. Reference: Chapter 7, ‘Computational Complexity’, Robert A. Meyers, (2012).

Non-Linearity

An extension of the dynamics seen in a complex economy is non-linearity. A system is linear if one can add any two solutions to the equations that describe it and obtain another, and multiply any solution by any factor and obtain another (Ladyman, Lambert and Wiesner, 2012). This is referred to as the superposition principle (Bruno et al., 2016), as the whole is looked upon as the sum of its parts. As complex systems depend on the interactions between agents, superposition does not work, as the whole is looked upon as something more than the sum of its parts. Thus, small changes effected by one agent can have large effects on the environment and vice versa.
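A minimal numerical sketch can make the superposition test concrete. It is a simplified analogue of the definition above, applied to two hypothetical response functions rather than to the solutions of a system of equations; the function names and coefficients are assumptions chosen purely for illustration.

```python
def linear_response(x):
    # Hypothetical linear system: output is proportional to input.
    return 3.0 * x


def nonlinear_response(x):
    # Hypothetical non-linear system: the squared term stands in for
    # interaction effects, so output is no longer proportional to input.
    return 3.0 * x + 0.5 * x ** 2


def satisfies_superposition(f, x1, x2, factor, tol=1e-9):
    """Check additivity and scaling of f at the given sample points."""
    additive = abs(f(x1 + x2) - (f(x1) + f(x2))) < tol
    scalable = abs(f(factor * x1) - factor * f(x1)) < tol
    return additive and scalable


linear_ok = satisfies_superposition(linear_response, 1.0, 2.0, 10.0)
nonlinear_ok = satisfies_superposition(nonlinear_response, 1.0, 2.0, 10.0)
print(linear_ok, nonlinear_ok)
```

Passing the check at a few sample points does not prove that a system is linear, but a single failure is enough to show that the whole is not simply the sum of its parts.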

Non-linearity can thus be defined as disproportionality. As we have discussed in Box 9, our thinking is attuned to be linear and the tools we use in economics reflect that. In regression analysis, for example, the observations that do not fit our linear models are treated as errors or as extreme variations. Complexity economics treats such variations as intrinsic characteristics of interrelated systems. Non-linearity thus plays a central role in complexity economics.

Power Laws

The effects of agents on a non-linear dynamic system follow power laws. Power laws imply that small occurrences are very common, but large eco-system changes are rare. For example, patterns involving incomes, the growth of cities and firms, the stock market and fluctuations of returns, order flow, volume, liquidity and even natural calamities such as hurricanes and earthquakes all follow power laws. A power law can also be called a scaling law, as it describes a direct relationship between two variables. Mathematically this can be interpreted as,

$$Y = aX^{\beta}$$

where ‘Y’ and ‘X’ are variables of interest, ‘β’ is called the power law exponent, and ‘a’ is typically an unremarkable constant.

So, if X is multiplied by a factor of 10, then Y is multiplied by $10^{\beta}$; i.e. Y ‘scales’ as X to the power β.
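The scaling property can be checked numerically. The sketch below writes the relationship as Y = a·X^β, with β standing for the power law exponent; the values of `a` and `beta` are hypothetical and chosen only for illustration.

```python
def power_law(x, a, beta):
    """Y = a * X**beta, with a the constant and beta the exponent."""
    return a * x ** beta


# Hypothetical parameters, chosen only for illustration.
a, beta = 2.0, 1.5

# Multiply X by 10 at very different starting points and record by how
# much Y is multiplied each time.
ratios = [
    power_law(10 * x, a, beta) / power_law(x, a, beta)
    for x in (1.0, 50.0, 2000.0)
]
expected = 10 ** beta  # the constant 'a' cancels out of the ratio

print(ratios, expected)
```

The ratio is the same whether X starts at 1 or at 2,000, which is what is meant by scale-independent behaviour.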

Power laws or scaling laws are seen in different disciplines of study, particularly physics. A commonly known power law is the Pareto principle (used in marketing studies, for example), also known as the 80/20 rule, which states that, for many events, roughly 80% of the effects come from 20% of the causes. The study of power laws in markets has increasingly been a subject of interest to econophysicists25 (econophysics being a complementary offshoot of complexity economics), as power laws signal the occurrence of scale-independent behaviour that is closely related to phase transitions and critical phenomena. Some reliable examples of power law distributions occur in financial markets (Sinha et al., 2010) (also see ‘Power Laws in Finance’, Chapter 5, ‘Econophysics: An Introduction’, Sinha et al., (2010); ‘Power Laws in Economics: An Introduction’, Xavier Gabaix (2008)).
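The 80/20 rule can be tied to a specific power law exponent. The sketch below assumes that the quantity of interest follows a Pareto distribution with tail index `alpha` greater than 1, an assumption made here for illustration. In that case the share of the total held by the top fraction p is p^(1 - 1/alpha); the function name `top_share` is hypothetical.

```python
import math


def top_share(p, alpha):
    """Share of the total held by the top fraction p.

    Assumes a Pareto distribution with tail index alpha > 1, for which
    the share equals p ** (1 - 1 / alpha).
    """
    if alpha <= 1:
        raise ValueError("the total is only finite for alpha > 1")
    return p ** (1.0 - 1.0 / alpha)


# Tail index at which the top 20% hold exactly 80% of the total.
alpha_80_20 = math.log(5) / math.log(4)

share_top_20 = top_share(0.2, alpha_80_20)
# A thinner tail (larger alpha) gives a far less concentrated outcome.
share_thin_tail = top_share(0.2, 3.0)

print(round(alpha_80_20, 3), round(share_top_20, 3), round(share_thin_tail, 3))
```

The exponent, in other words, is what determines how concentrated the outcomes are.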

Complex systems are more commonly characterised by probability distributions that are better described by power laws than by normal distributions, as these gradually decreasing mathematical functions are better at probabilistically predicting the future states of even highly complex systems (Levy D. L., 2000).
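How much more gradually a power law decreases can be seen by comparing tail probabilities. The sketch below is illustrative only: it compares a standard normal distribution with a hypothetical Pareto tail of exponent 3 and asks how much rarer an event becomes when its size doubles from 5 to 10 units.

```python
import math


def normal_tail(k):
    """P(Z > k) for a standard normal variable."""
    return 0.5 * math.erfc(k / math.sqrt(2.0))


def pareto_tail(x, alpha, x_min=1.0):
    """P(X > x) for a Pareto variable with tail exponent alpha."""
    if x < x_min:
        return 1.0
    return (x_min / x) ** alpha


# How much rarer is an event of size 10 than an event of size 5?
normal_drop = normal_tail(10.0) / normal_tail(5.0)
pareto_drop = pareto_tail(10.0, 3.0) / pareto_tail(5.0, 3.0)

print(normal_drop, pareto_drop)
```

Under the normal distribution the larger event is rarer by many orders of magnitude, whereas under the power law it is only eight times rarer. A model built on normal distributions therefore treats as practically impossible the large events that a power law regards as rare but entirely plausible.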

Networks

The study of network science and its related disciplines is a pillar of complexity economics. Agents in a complex economy participate in interwoven, overlapping networks that allow them to compensate for their limited access to information. In the Walrasian economy, agents do not interact at all. Rather, each agent faces an impersonal price structure (Beinhocker, 2007). However, in complexity economics, the study of networks is conducted with a high level of granularity, as economic exchanges between agents (be it credit-debt relations, changes of ownership of assets, simple monetary transactions or exchanges between banks or nations) do not happen randomly. They are dependent on the position and the reputation of each individual node. A node which has a more important role to play (e.g. a large bank) will have more interconnections to other nodes, making it a hub. There can be correlations between links to a hub, leading to assortativity, or assortative mixing, which is the preference of a node to attach itself to another node that is similar to it in some way. This can also lead to the clustering of connections between nodes that identify themselves as part of a particular sub-group, leading to displays of cliquishness; i.e. nodes associating with each other based on belonging to an exclusive clique. Figure 4-7 provides a visual interpretation.

Figure 4-7. Formation of sub-groups in networks Reference: ‘Introduction to social network methods’, Hanneman and Riddle, 2005.
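The notions of hubs and cliquishness can be made concrete with two simple measures: the degree of a node (its number of links) and its local clustering coefficient (the fraction of its neighbours that are also linked to each other). The sketch below uses a small hypothetical network; the node names and edges are invented for illustration and do not come from the figure above. Assortativity is not computed here.

```python
from itertools import combinations

# Hypothetical network: one hub linked to five nodes, three of which
# (a, b, c) are also linked to each other and so form a clique.
edges = [
    ("hub", "a"), ("hub", "b"), ("hub", "c"), ("hub", "d"), ("hub", "e"),
    ("a", "b"), ("a", "c"), ("b", "c"),
]


def build_adjacency(edge_list):
    """Map each node to the set of its neighbours (undirected links)."""
    adjacency = {}
    for u, v in edge_list:
        adjacency.setdefault(u, set()).add(v)
        adjacency.setdefault(v, set()).add(u)
    return adjacency


def local_clustering(adjacency, node):
    """Fraction of a node's neighbour pairs that are themselves linked."""
    neighbours = adjacency[node]
    k = len(neighbours)
    if k < 2:
        return 0.0
    linked = sum(
        1 for u, v in combinations(neighbours, 2) if v in adjacency[u]
    )
    return linked / (k * (k - 1) / 2)


adjacency = build_adjacency(edges)
degree = {node: len(nbrs) for node, nbrs in adjacency.items()}
hub = max(degree, key=degree.get)  # the most connected node
clustering = {node: local_clustering(adjacency, node) for node in adjacency}

print(hub, degree[hub], clustering["a"], clustering[hub])
```

In this toy network the hub has the most links but a low clustering coefficient, because it bridges nodes that are otherwise unconnected, while the members of the clique have fewer links but are fully interconnected.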