Chapter 6. Performance Optimization

What You Will Learn in This Chapter

In this chapter, we will cover:

Why optimizing performance is a critical web sustainability component.

Techniques for creating better optimized digital products and services.

Workflow tips for assessing performance.

Performance Counts

Can digital products and services find the perfect balance between speed, reliability, and sustainability? In an interview, Chicago-based developer Eric Mikkelsen brought up this point about the dichotomy between them: “Speed and reliability are interesting because sometimes those things are opposed to each other,” he says. “Building a website that takes advantage of techniques for tomorrow yet requires a fallback for yesterday increases the size of our codebase.” So where does the perfect balance lie?

Several years ago, Amazon calculated that a page load slowdown of just one second could potentially cost the online retailer up to $1.6 billion in annual sales.[140] Similarly, Google noted that slowing search results by a mere four-tenths of a second would cut down search volume by eight million queries per day, depriving it of the opportunity to serve as many or more ads and resulting in a direct loss of potential revenue. There are many examples like these, and losses don’t just take the form of customer revenue either. Netflix, for example, saw a 43% decrease in its bandwidth bill after enabling Gzip, a common file compression tool.[141] It is clear that better performance yields better financial results and happier users.

In practice, however, maintaining a commitment to optimized performance can be challenging. The average web page, according to the HTTP Archive, crested at more than 2.4 MB in May 2016. Bloated, rotating image carousels, slow share widgets, and looping video backgrounds have become the norm on websites everywhere, even though there is mounting evidence that these features can adversely affect page performance. Clearly, there’s a disconnect somewhere. How does this happen?

In an article he penned for A List Apart titled Sustainable Web Design, James Christie notes that “No one intentionally sets out to build a 1.4 MB page, but clients often demand eye-catching images, advanced social features, and plenty of design bells and whistles, and that’s pretty much all it takes to get to that size.” (At the time Christie wrote that article in late 2013, the average page size was only 1.4 MB, so you can see how quickly page sizes are rising.)

It is easy to blame clients for bloated websites that perform poorly—they want what they want, after all, and are typically willing to pay for it—but building high-performance products and services is a cause we should all get behind, including clients. Keeping our clients informed and guiding them toward making more sustainable choices, especially when it comes to optimizing performance, shouldn’t be a constant uphill battle. There is compelling evidence that can help them see the light, as we will explore in this chapter.

A Balancing Act

Whereas the website optimization practices discussed in Chapter 5 focus on design tactics, experimentation, and A/B testing to help digital products and services yield better business results, website performance optimization (WPO) focuses solely on getting data to users more quickly. In reality, it’s a combination of the two that yields the most sustainable results: useless data is still useless no matter how fast it loads and renders on your iPhone. Plus, in the service of sustainability (that is, not compromising the needs of the future), web teams must also consider the maintainability of their solutions, as Eric Mikkelsen noted earlier. If it takes an army to make updates, as can be the case with outdated or custom content management systems or those few sites still without a CMS, you’re wasting time and money and frustrating users who expect real-time information in the applications they use. But maintainability has performance overhead ramifications. So let’s discuss in more detail how we balance speed, reliability, and sustainability.

WPO Defined

At the risk of being too obvious but for the sake of clarity, WPO is defined as a “field of knowledge about increasing the speed in which web pages are downloaded and displayed on the user’s browser.”[142]

Long considered the realm solely of developers, early WPO efforts focused mainly on optimizing site code and pushing hardware limitations. The best optimization occurs, however, when designers and developers collaborate to bring speedy, reliable and useful solutions to users. Leading tech VC Fred Wilson noted in his “10 Golden Principles of Successful Web Apps” presentation that, “There is real empirical evidence that substantiates the fact that speed is more than a feature. It’s a requirement.”[143] (He put speed at number one on his list.)

So by this logic, everyone involved with bringing a digital product or service to life—designers, developers, product owners, content strategists, project managers, everyone—should make optimization their number one priority.

Similarly, according to performance pundit Steve Souders, who coined the term web performance optimization in 2004, “80 to 90% of the end-user response time is spent on the frontend. Start there.”[144]

In 2012, Mr. Souders ran performance tests on a range of popular websites and discovered that, across the board, loading and executing frontend components (JavaScript, images, CSS/HTML, and other assets, plus the page rendering tasks a browser must perform) accounted for between 76 and 92% of total page load time. Because design decisions drive so much of what happens on the frontend, designers clearly have a critical role to play in creating optimized solutions. Yet developers are often the ones tasked with optimizing performance.

Optimization and Collaboration

In a workflow scenario in which designers and developers work in isolation from one another, it’s easy for optimization tasks to become lost in the project shuffle. “If a designer creates a page with huge images, no amount of optimization by the developer is going to make that page efficient,” says author and professor Pete Markiewicz. “Web designers often don’t see their job beyond ‘drawing a picture’ of a website, which often results in the developer creating bloatware to support their ideas. However, a designer can think of performance at the onset. In fact, they must if they are actually designing for the Web instead of a piece of electric paper.”

Thankfully, with the rise of Lean/Agile teams and UX as a discipline, we have options for maintaining both performance and user happiness across a project life cycle. Still, optimization remains a legitimate concern as we become caught up in client requests, project scope, and deadline-driven decision making. Collaboration and constant communication, as well as establishing clear performance goals up front and sticking to those goals throughout a project’s life cycle, are key to launching and maintaining more sustainable, optimized solutions.

But you still need to answer the question of whether or not you are building a lightweight site that works fast or a maintainable site that can work for years to come. The most sustainable answer to that question will have inevitable trade-offs.

Performance versus Maintenance

The fastest product is the one with the leanest code and the fewest assets to load. It puts the right information before users at the time they need it, even if, for instance, those users are on slower, older devices or in an area with limited bandwidth. It knows when to serve bare-bones content and when the user agent supports a more robust user experience.

But creating the fastest product possible might also mean not using certain libraries or frameworks, ditching a content management system (CMS), or forgoing customer relationship management (CRM) integration. Many things that clients or administrators might want—those familiar UI patterns that shield them from the codey bits—can undermine optimized performance. Conversely, developers might focus only on optimizing code yet leave UI/UX issues alone. Finally, a web app that can’t be updated without enlisting the help of a development team (even though it might be crazy fast) is not very future-friendly, which makes it less sustainable.

Developer Eric Mikkelsen on this topic:

Libraries and frameworks are like web developer pickup trucks. They are helpful tools that make it super easy for me to do my job, but they take up a ton of gas. No one actually wants to get rid of something that helpful when they’re told their job is to get things done in a timely manner. So we have to reset what people’s jobs are a little. I think this is where a performance budget comes in. Setting a team performance budget tells everyone that it’s okay to take extra time and build something that’s faster and lighter because that’s where our priorities are.

These trade-offs are decisions that must be made between project teams and product owners in collaborative settings designed to help achieve consensus. The answers will be different for every project. Consider focusing these discussions on a goal of creating the most sustainable solutions that achieve balance between performance and maintainability while still staying within project specs.
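To make the idea of a team performance budget concrete, here is a minimal sketch of a page weight check. Everything in it is an assumption made for illustration: the `Asset` structure, the `check_budget` helper, the file names, the sizes, and the 1,000 KB budget are all hypothetical, and a real team would agree on its own numbers.

```python
from dataclasses import dataclass


@dataclass
class Asset:
    path: str
    kind: str       # e.g. "html", "style", "script", "image"
    size_kb: float


def check_budget(assets, budget_kb):
    """Return (total_kb, over_by_kb, totals_by_kind) for one page's assets."""
    totals = {}
    for asset in assets:
        totals[asset.kind] = totals.get(asset.kind, 0.0) + asset.size_kb
    total = sum(totals.values())
    over_by = max(0.0, total - budget_kb)
    return total, over_by, totals


# Hypothetical page: every path and size below is invented for illustration.
page = [
    Asset("index.html", "html", 40),
    Asset("site.css", "style", 60),
    Asset("framework.js", "script", 300),
    Asset("carousel-1.jpg", "image", 450),
    Asset("carousel-2.jpg", "image", 450),
]

total, over_by, by_kind = check_budget(page, budget_kb=1000)
print(f"Total: {total:.0f} KB, over budget by {over_by:.0f} KB")
# List the heaviest asset types first so the team knows where to look.
for kind, kb in sorted(by_kind.items(), key=lambda item: -item[1]):
    print(f"  {kind:<7}{kb:>6.0f} KB")
```

A breakdown by asset type gives the team and the product owner something specific to negotiate over, such as whether a second carousel image is worth going over budget.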

Libraries versus Frameworks

Who controls the system? This question often drives choices between libraries and frameworks. Or, as blogger and developer Tomas Petricek notes, “A framework defines a structure that you have to fill, while [a] library has some structure that you have to build around.”[145] It’s up to you to figure out whether the empty house or the collection of individual rooms is more useful. Also, the nature of their structures makes it easy to use multiple libraries in your digital product or service but difficult to use multiple frameworks.

This is relevant to performance optimization and sustainability because both approaches have overhead and can potentially slow down your application. But both speed up the development process, as well. Let’s take a look at how they do this.

Frameworks

Using a framework as a starting point can save time when developing an application. Web frameworks often alleviate many common programming tasks by providing quick access to commonly used scripts and assets, so developers aren’t burdened with programming common features from the ground up each time they start a project.

When using a framework, your own code sits within it. You insert your code into various places inside the framework so that the framework can call upon your code when needed as the application runs. This is useful and saves time, but what happens if you only need a portion of what the framework has to offer? Too bad.

Though using frameworks can save significant development time up front, your application can take a performance hit due to the overhead of the framework itself.

Libraries

When employing libraries, you make calls to external scripts from within your application. These libraries might sit on your own server or, more likely, on a server meant to house shared resources. Google, for example, houses more than a dozen different commonly used JavaScript libraries. Development teams can call upon these libraries to execute common application functions.

Like frameworks, libraries are helpful and time-saving tools, but they come with the added overhead of increased HTTP requests each time a different library is called. However, when using a common resource like Google’s Hosted Libraries, if caching is enabled, the end user might already have the script cached in the browser from a visit to another site, thus reducing the overhead of said HTTP requests.
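The question of who controls the system can be illustrated with a small sketch. The `TinyFramework` class below is hypothetical and written only for this example; it stands in for any framework that calls your code, whereas `string.Template` from Python's standard library plays the role of a library that your code calls.

```python
import string


# Library style: our code is in charge and calls a helper when it wants to.
def render_with_library(title):
    template = string.Template("<h1>$title</h1>")
    return template.substitute(title=title)


# Framework style: a tiny, hypothetical framework owns the control flow
# and calls back into the code we register with it.
class TinyFramework:
    def __init__(self):
        self._routes = {}

    def route(self, path):
        def register(handler):
            self._routes[path] = handler
            return handler
        return register

    def handle(self, path):
        handler = self._routes.get(path)
        if handler is None:
            return "404 Not Found"
        # The framework wraps every response the same way, needed or not.
        return "<html><body>" + handler() + "</body></html>"


app = TinyFramework()


@app.route("/")
def home():
    # Our code fills a slot the framework defined.
    return render_with_library("Hello")


print(render_with_library("Hello"))
print(app.handle("/"))
print(app.handle("/missing"))
```

Note that the framework decides when `home` runs and what surrounds its output, which is where both the convenience and the unavoidable overhead come from.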

Speed Is Just One Metric

Your application’s speed alone can be measured by using tools like Google’s PageSpeed Insights, Pingdom Tools, and others. But those tools don’t show the complete picture. Developer Dave Rupert addresses this in a blog post titled “The Cost of Frameworks”:[146]

By measuring what can only be measured in terms of page speed means we have no insight to the reasons a framework was employed or how much money it saved the organization. How big is [the] team that built the site? One? Ten? What were past organizational failures that led to adopting a large framework? Did using a framework win out in a lengthy internal Cost-Benefit analysis? Is CSAT up due to swanky animations and autocomplete features that took minutes to build? Was code shipped faster? Was it built by one person over a weekend? Did the abstraction allow the developers to actually have fun building, therefore increasing job satisfaction, therefore reducing organizational churn, therefore reducing cost of the end product for the user? We don’t know.

With this in mind, a smart approach might be to start your projects without libraries or frameworks, given their potential overhead, but consider them carefully as you plan and build out your application. A page weight budget, discussed elsewhere in this book, might help, as could a deeper analysis of the tools you’re using. As noted previously in this chapter (and as is evidenced by the preceding quote), collaboration and communication are key to weighing which approach seems best for your project.

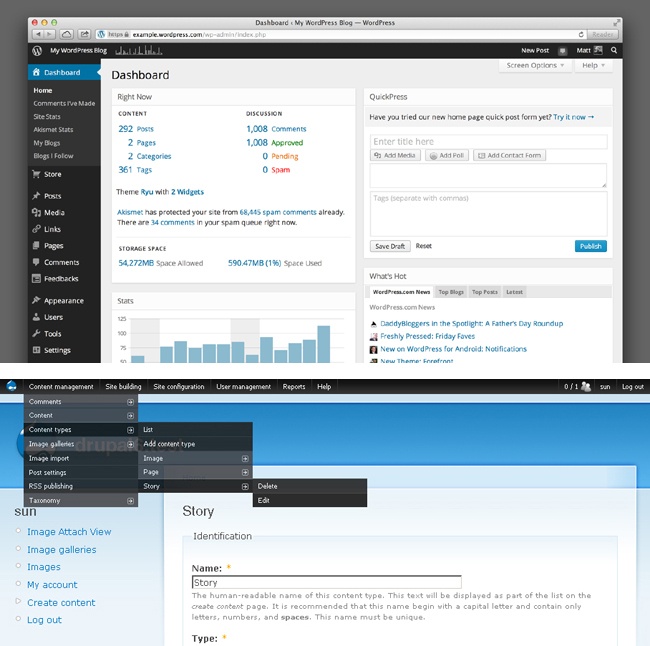

CMS Optimization

Similarly, CMSs bring incredible convenience to the often complicated tasks associated with creating and managing web content. But, if not optimized properly, they can also come with significant overhead. Their extensible architecture brings flexibility to users that frequently comes at the price of performance and reliability. For our purposes here, we will cover WordPress and Drupal, which comprise 52% and 3% of CMS usage, respectively, for the top one million websites.[147]

These systems offer tens of thousands of available plug-ins (WordPress) or modules (Drupal) that handle everything from security to search engine optimization, from commerce to contact forms. Most any web feature you can imagine has likely been turned into a module or plug-in that you can install with just a few clicks. Their simplicity is part of the allure these tools bring to the table. Using them can be very tempting.

But inside these plug-ins and modules lurk some potential pitfalls:

Each plug-in typically comes with multiple CSS and JavaScript files that might be redundant and can add overhead, affecting performance.

Updating the CMS or other modules and plug-ins can potentially break your entire website, or take it offline.

Although the average frontend user might not notice a few extra scripts loading here and there from a single module, every little bit adds up. Install a handful of plug-ins and your site can exhibit performance impediments that will be noticed.

The upgrade risk mentioned earlier can be significantly more detrimental if you’re not careful. It is important to keep your software updated because of security concerns. Because these systems are designed for ease of use, updating them is understandably meant to be a simple process. In the case of WordPress, an alert shows up in your dashboard whenever an update is available. WordPress is known for simple updates that require just a couple of clicks. Because the alert is at the upper-left of your dashboard when you log in, it is the first thing you see, and the temptation to click that update button is palpable. What unwitting content creators often don’t realize is that these upgrades can quickly take your site offline. Module and plug-in updates typically lag behind CMS updates, so it is important to ensure that the tools you already use will work together when updated. Otherwise, what seems like a simple process can put you on the fast track to a downed website.

Here are some methods developers use to streamline these two popular content management systems. The recommendations that follow are specific to each CMS. It should be noted that the standard web performance optimization practices covered later in this chapter can and should also be applied to WordPress or Drupal sites.

WordPress

For the purpose of this section, we will focus specifically on two key areas where WordPress can be optimized: plug-ins and themes. We’ll also talk a bit about how comments can drag down site performance.

Also, this is by no means a comprehensive list; rather, it represents some of the more common WordPress optimization techniques. There are literally hundreds of tasks you can execute to better optimize your website. Designers and developers worth their salt will also follow guidelines for optimizing frontend, user-facing components, such as those outlined in Chapter 5 as well as CMS-agnostic, general web performance optimization techniques covered later in this chapter.

WordPress Plug-ins

The first thing to do with plug-ins is to deactivate any that aren’t being used. Active plug-ins load resources (and make HTTP requests), adding overhead to every page that loads. If a plug-in is not being used, deactivate it.

Keeping in mind that each instance of a plug-in adds its own overhead, here are some specific plug-ins that can help you improve WordPress performance:

- Profile performance

If your site is slow, the P3 Plugin Performance Profiler will tell you which plug-ins are taking longest to load, how much data they use, and other helpful metrics. Begin there.

- Security

As just noted, it’s important to keep your site secure and free from malicious hacks. Plug-ins such as Wordfence, Sucuri Security, and All in One WordPress Security and Firewall can help with this.

- Combine scripts

MinQueue combines all your CSS and JavaScript into a single file, which can speed up load times.

- Caching

A good caching plug-in like W3 Total Cache can speed up page load times by bypassing elements that are already cached in a user’s browser.

- Optimize the database

A plug-in like WP-Optimize, Yoast Optimize DB, or WP DB Manager, can reduce the overhead of your site’s database by optimizing its elements.

- Lazy load images

“Lazy loading” images keeps post images from loading until the user scrolls and they enter a browser’s viewport. This speeds up the time it takes for the page to initially load. Davo Hynds, a developer at Mightybytes, created Lazy Load XT, a WordPress plug-in that balances configurability with lightweight overhead.[148] Other lazy-load plug-ins include Lazy Load and BJ Lazy Load.

- Compress images

Sure, you compress your photos when you export them from your favorite image editor. A plug-in like WP Smushit can add an extra layer of compression by scanning every image you upload to WordPress and stripping hidden, bulky information, reducing file size without losing quality.

- Revision control

Left to its own devices, WordPress will save every draft of every post you publish, creating unnecessary information to store in the database. A plug-in like Revision Control can help you set a specific number of revisions to save. The key is to find a balance between losing your work (with zero revisions saved) and cluttering up your database (with every revision saved).

- Findable content

A plug-in like Yoast SEO can help you create pages that meet SEO standards, allowing your content to be found more quickly.

- Creating mobile pages

PageFrog’s plug-in for Google AMP and Facebook Instant Articles can autogenerate stripped-down versions of your page that load up to 10 times faster than the original.

These are just some of the helpful plug-ins that can help optimize your WordPress site. Just remember, more plug-ins = more potential overhead, so choose wisely.

Your WordPress theme

The concept of progressive enhancement (covered in Chapter 5) applies here. A performance-optimized and more sustainable WordPress theme would embrace this concept—that is, display base content to browsers with few supported features and then layer on additional functionality as a user agent allows. Premium themes such as the Thesis Theme Framework or Lucid are feature-rich and responsive without slowing your site down. But there are many others, as well.

A good theme also uses valid HTML, which loads quickly across browsers and devices. Unfortunately, some themes can contain spam or malware. You can check the validity of the theme you’re using with plug-ins like the WordPress Authenticity Checker or Exploit Scanner. You can also check whether the base code is standards-compliant by using the World Wide Web Consortium (W3C)’s Markup Validation Service.[149]

Next, check the assets your theme uses:

- Number of queries

Can you hardcode static elements into the theme? This will reduce the number of database queries needed to build each page. This could be relevant for static menus, site title, and so on.

- Images

Are there unnecessary images? Are those that are being used properly compressed? Are they in the right format (JPG, GIF, PNG, etc.)?

- Number of files

Can you reduce the number of files needed to display pages—CSS, JavaScript, and so on—by combining them into a single, optimized file that is minified? (This will likely change with wider adoption of HTTP/2.)

Pete Markiewicz also notes: “I think the WordPress JavaScript API will change the game for theme design, allowing pages to be vastly streamlined. If possible, designers might want to consider using themes with the JavaScript API over older PHP-driven themes.”

Of course, every site has different needs and requirements, so what works for one WordPress site might not work for another. Keeping the aforementioned considerations in mind when creating and editing your theme can help reduce overhead and speed up load times.
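The file-combining question above can be sketched in a few lines. This is a deliberately naive illustration, not a substitute for a real minifier: the `minify_css` and `combine` helpers and the two stylesheets are hypothetical, and the regular expressions would mangle CSS that contains quoted strings or selectors that depend on whitespace around a colon.

```python
import re


def minify_css(css):
    """Naive CSS minifier: fine for a demo, not a replacement for a real tool."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)           # trim around punctuation
    return css.replace(";}", "}").strip()                  # drop last semicolons


def combine(stylesheets):
    """Concatenate stylesheets in order, then minify the result."""
    return minify_css("\n".join(stylesheets))


# Hypothetical theme files, invented for illustration.
files = {
    "reset.css": "/* reset */\nbody {\n  margin: 0;\n  padding: 0;\n}\n",
    "theme.css": "/* theme */\nh1 {\n  color: #333;\n  font-size: 2em;\n}\n",
}

bundle = combine(files.values())
before = sum(len(css) for css in files.values())
print(bundle)
print(f"{len(files)} requests, {before} bytes -> 1 request, {len(bundle)} bytes")
```

The savings on a two-rule example are trivial, but the same pattern applied to a theme that loads a dozen stylesheets removes both bytes and round trips.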

Comments, pingbacks, and trackbacks

Disabling pingback and trackback functionality in WordPress can streamline the amount of work your site does tracking who’s mentioned your posts. People can still link to you. Those links just are not added to your WordPress database. Given how much spam is out there, this could potentially make a significant difference in performance. Automated spam comments can begin to eat up your MySQL database. As the folks at SEOChat note, “If you continually ignore this, you might notice that your site is down, or you won’t be able to post because your MySQL disk space has exceeded its quota.”[150]

If your site doesn’t rely heavily on user comments, you might consider disabling commenting functionality altogether. Monitoring, moderating, and interacting with comments (and the spammers who create them) can be a resource-intensive process. We will cover comments in more detail later in this chapter.

Drupal

With more than one million websites (as of this writing), Drupal runs about 3% of the Internet.[151] As with WordPress, the techniques outlined in the following sections are not meant to serve as a comprehensive resource but rather as a place to get started when thinking about optimizing your Drupal site’s performance.

Your Drupal modules

Keep in mind that, as with WordPress plug-ins, unnecessary and unused Drupal modules add overhead to page loads. When considering a module strategy, be sure each one you choose will be used and delete or disable any that won’t.

- Remove unnecessary HTML

The Fences module creates leaner markup and removes extra classes to keep your code lightweight.

- Compress and aggregate files

Modules like Minify, Speedy, and the Advanced CSS/JS Aggregation module will help you compress files and, in the case of the latter, run JavaScript in the footer (to stop page render blocks), and use Google’s shared jQuery library, which will reduce HTTP requests if cached in the user’s browser.

- Serve optimized images

Though you should always compress images before uploading them to the CMS, an added boost from the ImageCache module, which optimizes images after they are uploaded, might help, as well.

- Load lazily

Loading images as the user needs them can help offset a large download up front. The Lazy Loader module will load images as they come into the browser’s viewport.

- Findable content

A module like the SEO Checklist can help you create pages that meet SEO standards, making it possible for your content to be found more quickly.

- Speedier databases

Your site’s database can become bloated and slow down performance. The DB Maintenance module can help optimize database files for speedier delivery of content.

- Enable caching

Drupal can also cache your files using modules like Memcached or Varnish, saving your browser from making repeated server trips for files that are already cached.

- Share resources

By using shared libraries or placing your static content on a content delivery network (CDN), you reduce the amount of time it takes users to get content either through proximity to the server or via user-cached files. The CDN and/or Boost modules can help with this.

- Search on site

Adding search functionality to your own site via Drupal’s core search functionality or the Apache Solr module can help users find what they need more quickly, saving time and energy.

Your Drupal theme

Similarly, here are two things you can do to keep your Drupal theme optimized:

- Start smart

Begin with a solid base theme like Zen or Adaptive Theme, which are built with modern web standards in mind: they are responsive, accessibility-compliant, and use semantic HTML5 and CSS 3.

- Remove that registry

Drupal’s theme registry holds cached theming data which is helpful when adding theme hooks or new modules, but unnecessary when a site is live in production. Disabling the theme registry on a production site can help increase performance.

Performance Rules

Google, Yahoo, and many others have published extensively on guidelines for optimizing website performance. Google’s PageSpeed Insights tool, which grades your website based on speed, publishes a set of rules it has devised for grading criteria. Yahoo’s Exceptional Performance team has created similar recommendations. The following lists outline some common tactics.

Yahoo includes the following rules as part of its “Best Practices for Speeding Up Your Web Site”:[152]

Minimize HTTP requests by reducing the number of objects—scripts, images, stylesheets, and so on—required for a browser to render a page. You can do this by using CSS sprites, image maps, inline images, combining scripts, and so on.

Use a CDN to deploy your content across multiple servers so that each request is answered by a server close to the user, which yields faster page loads. This can potentially be cost-prohibitive for organizations with constrained resources like startups or some nonprofits, but these services can yield dramatic results. Yahoo reported end-user response time improvements of up to 20% with this approach.

Adding an Expires or Cache-Control header could speed up pages for users by caching commonly used scripts, images, or other components. As users navigate from screen to screen, common page elements are already preloaded.

Compress site components with Gzip to reduce their file size by about 70%. HTML, stylesheets, and scripts can all benefit from this server-side compression technology that can reduce page weight and speed up a user’s experience.

Put stylesheets in the document HEAD to render them progressively, which gives the appearance of faster loading.

Put scripts at the bottom of your document to accommodate parallel downloads and load your page faster. Scripts block parallel downloads, so putting them at the bottom allows other page components to start loading first.

Avoid CSS Expressions, which are evaluated by the browser when the page is rendered, resized, scrolled, or when a user moves her mouse. This can significantly slow down page performance.

Make JavaScript and CSS external so that they can be cached by the user’s browser. Even though this initially increases HTTP requests upon first load, after it’s cached those external files don’t need to be downloaded again, which in turn decreases HTTP requests on subsequent pages. Conversely, inline JavaScript and CSS are downloaded every time a page loads, which increases the size of your HTML documents.

Reduce DNS lookups by reducing unique hostnames included in your pages. This includes those used in URLs, images, script files, stylesheets, embedded objects, and so on. Each DNS lookup can add anywhere from 20 to 120 ms in load time to your page.

Minifying JavaScript and CSS will remove excess unnecessary characters, comments, and white spaces from your code to improve load times. Tools such as JSMin and YUI Compressor can take care of this for you.

Avoid redirects when possible because they can slow down the user’s experience. If you must redirect from a page that no longer exists to a new one, use a 301 permanent redirect to avoid any potential SEO penalties. Not including trailing slashes (/) at the end of your URLs forces autoredirects by the server unless you set it up otherwise.

Remove duplicate scripts in any page. Why load the same script twice or more while also increasing HTTP requests from your pages?

Configure Entity Tags (ETags) to validate that components cached in the browser match those on the origin server. ETags work well for sites on the same server, but don’t typically translate from one server to another due to server-specific embedded data, which reduces their usefulness.

Make Ajax cacheable by using the Expires or Cache-Control headers noted earlier to speed up applications that might require lengthy asynchronous JavaScript and XML responses.

Flush the buffer early using PHP’s flush() function so that partially ready HTML documents can begin fetching data without waiting for the backend server to stitch together your pages.

Use GET for Ajax Requests rather than POST when using XMLHttpRequest, as the latter implements a two-step browser process in contrast to GET’s one (unless you have a lot of cookies).

Post-load components that aren’t absolutely required to render the page initially. This could include content that requires scrolling to view, JavaScript animations or drag-and-drop behaviors, hidden content, and so on.

Preload components to take advantage of when the browser is idle to request components (images, styles, scripts, etc.) that will be needed in the future. Subsequent pages will in turn load much faster.

Reduce the number of Document Object Model (DOM) elements in your pages to speed up access by scripting languages like JavaScript. More complex pages can slow down DOM access.

Split components across domains to maximize parallel download opportunities. Just don’t use more than two to four domains, for what you gain in parallel downloads you could lose again in DNS lookup time.

Minimize the number of iframes in your documents to reduce data download costs that occur by using them. iframes can be helpful with slow third-party content like badges and ads, but even a blank iframe can add to your page weight.

Avoid 404 errors, which use HTTP requests while returning no valuable information to users.

Reduce cookie size and eliminate unnecessary cookies to minimize their impact on user response time.

Use cookie-free domains for static components, such as images, that don’t require cookie requests. Static components can be served from a cookie-free subdomain, for example, to minimize unnecessary network traffic.

Minimize DOM access by JavaScript, which can make pages less responsive. Cache references to accessed elements, update nodes “offline” and then add them to the tree, and don’t fix layout issues with JavaScript.

Develop smart event handlers that can delegate events in order to manage how often and when handlers are executed. Handlers that are executed too often can make pages less responsive.

Optimize images to ensure that they preserve best possible image quality while maintaining smallest possible file size. (Chapter 5 covers this in detail.)

Optimize CSS sprites to reduce file size while maintaining image quality. (Also covered in detail in Chapter 5.)

Don’t scale images in HTML; instead, create them at the size they’re needed.

Make favicon.ico small and cacheable because a browser will always request it (and send a cookie in return) whether you want one or not. If possible, make the image 1 KB or smaller and set a lengthy Expires header so it is cached.

Keep components under 25 KB because iPhones don’t cache anything larger. Note this is uncompressed size.

Pack components into a multipart document to reduce HTTP requests.

Avoid empty <img src> tags in HTML and JavaScript because they still make server requests even though they are empty.
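Several of the checks in this list can be automated. The following is a minimal sketch, using only Python's standard library, that counts start tags (a rough proxy for DOM size), iframes, and images with an empty src in a chunk of markup. The class and function names are hypothetical, and a static scan like this will not see elements that JavaScript adds at runtime.

```python
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Tally a few warning signs in one pass over static markup."""

    def __init__(self):
        super().__init__()
        self.element_count = 0  # every start tag seen (rough proxy for DOM size)
        self.iframe_count = 0
        self.empty_img_src = 0  # <img> whose src has no value or is blank

    def handle_starttag(self, tag, attrs):
        self.element_count += 1
        if tag == "iframe":
            self.iframe_count += 1
        if tag == "img":
            attr_map = dict(attrs)
            # src="" parses as an empty string; a bare src parses as None
            if "src" in attr_map and not (attr_map["src"] or "").strip():
                self.empty_img_src += 1


def audit(html):
    """Parse the markup and return the populated PageAudit instance."""
    parser = PageAudit()
    parser.feed(html)
    parser.close()
    return parser


if __name__ == "__main__":
    report = audit('<div><img src=""><img src="a.png"><iframe></iframe></div>')
    print(report.element_count, report.iframe_count, report.empty_img_src)
```

A script like this could run against templates or rendered pages as part of a build, flagging regressions before they reach users.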

In addition to these recommendations, Google’s PageSpeed Insights rules include the following:[153]

Speed:

Improve server response time to less than 200 ms to avoid performance bottlenecks. Slow server times could have numerous potential causes, including slow application logic, slow database queries, CPU resource or memory starvation, and so on. Many automated web application monitoring solutions are available to track performance.

Inline CSS, if the stylesheets are small. This lets the browser render the styles immediately rather than waiting for an entire external stylesheet to load. Just make sure you don’t duplicate styles.

Prioritize visible content to load above-the-fold content first by structuring your HTML and CSS accordingly, and also reducing its size.

Usability:

Avoid plug-ins that can cause crashes, hangs, or security issues. Even though these plug-ins help a browser process special kinds of content, most are not supported on mobile devices.

Configure a viewport to control how a page is rendered on various devices to provide optimal viewing for users.

Size content to fit the viewport, once configured.

Size tap targets appropriately for the devices your content will be viewed on. If buttons or form fields are too close together, they can be difficult for users to accurately use on touchscreen devices.

Use legible font sizes that are appropriate for the devices defined in your viewport.

The preceding list is by no means comprehensive, but reflects common recommendations for speeding up your site. As we will see in the sections that follow, web teams devise all sorts of methodologies for hitting performance goals on a web project. And speed isn’t the only metric.

Speed, Reliability, and Version Control

A common performance quandary arises in providing the best experience for users while also supporting as many devices and platforms as possible. In sustainability parlance, this goes back again to the concept of serving the needs of the present without compromising the needs of the future, but in reverse. Here we’re trying to support the devices of the past while providing the best possible experience for users with the latest browsers, quickly and efficiently, of course. Progressive enhancement and all that.

This approach will provide a more reliable experience that works across a wider array of devices and platforms, but it could come at the cost of speed. To address this conundrum, following are several approaches that Eric Mikkelsen and the rest of the Mightybytes development team have come to rely on.

Autoprefixer

With Autoprefixer, you can write CSS for today while also supporting older browsers. Vendor prefixes allow browsers to support more experimental CSS declarations. You can use some of these declarations to create more efficient and user-friendly CSS layouts. This is great news for users of the latest and greatest browsers, but not so great for those who use older browsers or browsers with a different interpretation of CSS declarations than what you wrote.

With Autoprefixer, developers have the ability to set how many versions back they want to support. It uses information housed in the “Can I use” database to determine which vendor prefixes to add and which to leave out, based on the browsers you have chosen to support. It eases the process of phasing out code to support older browsers, providing a useful experience across browsers and devices.

Similarly, when your page boasts a feature like background gradients or rounded corners, you can rely on Autoprefixer to support those in current browsers while not serving the fancier styles to older browsers. A box with text in it, for example, still reads just fine without rounded corners. But when you have more complex features like Flexbox or Grid Layouts, you need more complex fallbacks than Autoprefixer can offer. When frontend developers manage these fallbacks with variables and mixins in their CSS, they can easily remove them as browser support increases with time, without requiring full overhauls for speed increases.

ShareThis, AddThis, DumpThis

Social media sharing plug-ins, those ubiquitous buttons that beg you to share content across your social channels every time you land on a blog post, can add significant heft to your pages. In 2013, UX designer James Christie ran an experiment to find out just how much these widgets add to a typical page.[154] He discovered that adding four social widgets to a web page also added an additional 64 HTTP requests and caused his page to bloat from 80 KB to 480 KB. The widget-addled page also took more than six seconds to load. Christie took his calculations further to note that if one million people loaded the page it would result in 1,727 hours, or 71 days, of collectively wasted time waiting for data to load. In addition, those 71 days also added up to 379.8 GB of extra data sent and, by his calculations, about 7.41 tons of greenhouse gas emissions, about the same amount as four transatlantic flights.
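The arithmetic behind this kind of estimate is simple to reproduce. The sketch below is a rough illustration rather than Christie's actual method: the function name is hypothetical, the 400 KB figure is the difference between the 480 KB and 80 KB pages, and the six seconds is an assumed per-load wait taken from the "more than six seconds" figure. Because his exact inputs aren't given here, the results land near his numbers without matching them.

```python
def collective_cost(extra_kb_per_load, extra_seconds_per_load, page_loads):
    """Scale a per-page overhead up to a total across many page loads.

    Returns (hours of waiting, gigabytes transferred), using binary units
    (1 GB = 1024 * 1024 KB).
    """
    total_hours = extra_seconds_per_load * page_loads / 3600
    total_gb = extra_kb_per_load * page_loads / (1024 ** 2)
    return total_hours, total_gb


if __name__ == "__main__":
    # Assumed inputs: 400 KB of widget weight, 6 seconds of waiting per load
    hours, gigabytes = collective_cost(400, 6.0, 1_000_000)
    print(f"{hours:.0f} hours, {gigabytes:.1f} GB")  # prints "1667 hours, 381.5 GB"
```

Plugging in your own page's overhead and traffic gives a quick sense of what a seemingly small widget costs in aggregate.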

Still, your marketing department wants people to share your content and they don’t trust people’s abilities to copy and paste a URL to Facebook or Twitter. What are you to do? Some sites have taken to simply adding hypertext links to their preferred social networks on content pages. Rather than making unnecessary additional HTTP requests and automatically loading the widget code and images for each individual network upon page load, this approach puts the decision in a user’s hands. The result is a much lighter page weight that only loads sharing data when a user selects the network with which they want to share content.

Or, you can trust that users know how to copy and paste a URL.
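The plain-link approach described above needs nothing more than a correctly encoded URL. Here is a minimal sketch that builds such links on the server side. The function names are hypothetical, and the two endpoint patterns are the commonly used share URLs for Twitter and Facebook at the time of writing; treat them as assumptions and check each network's current documentation before relying on them.

```python
from html import escape
from urllib.parse import urlencode

# Assumed share endpoints: (base URL, page-URL parameter, title parameter)
SHARE_ENDPOINTS = {
    "twitter": ("https://twitter.com/intent/tweet", "url", "text"),
    "facebook": ("https://www.facebook.com/sharer/sharer.php", "u", None),
}


def share_link(network, page_url, title=""):
    """Return a plain share URL; nothing loads until the user clicks it."""
    base, url_param, title_param = SHARE_ENDPOINTS[network]
    params = {url_param: page_url}
    if title_param and title:
        params[title_param] = title
    return f"{base}?{urlencode(params)}"


def share_anchor(network, page_url, title=""):
    """Wrap the share URL in an ordinary HTML link."""
    href = escape(share_link(network, page_url, title), quote=True)
    return f'<a href="{href}">Share on {network.capitalize()}</a>'


if __name__ == "__main__":
    print(share_anchor("twitter", "https://example.com/post", "Hi there"))
```

Each link is a few dozen bytes of HTML, with no scripts, images, or extra HTTP requests on page load.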

Comments and Page Bloat

A similar situation can arise with blog comments. This can be especially problematic if you use a third-party comments system like Disqus, Livefyre, and so on. In tests we ran on the Mightybytes blog, our comments system added an additional 14 HTTP requests, 34 MySQL queries, and 0.4 MB to each page that used it. This also translated into about a half-second of additional load time, or nearly 69.5 collective hours and 400 additional unnecessary gigabytes if one million people were to download a page from our site that included the comments system. This would be slightly more greenhouse gas emissions than the four transatlantic flights noted previously in James Christie’s calculations.

All this said, comments certainly have their place. I’m not advocating that every blog remove comments to save the planet. Comments give users a way to carry on content-centered conversations, share ideas (and links), and create connections. For some sites or products, this is absolutely necessary. For other blogs, the comments section unwittingly exists as a repository for spam and little else. You have to weigh the choices between the value of comments versus the overhead they add to your site.

HTTP/2 and Performance

Finally, another area where performance could improve significantly is with HTTP/2, which promises to speed up access to content by allowing simultaneous downloads. In early Internet days, when web pages were much simpler, loading them didn’t require as many data requests. Today’s web pages are more resource-intensive with shared libraries, more images, embedded videos, JavaScript, CSS, and so on.

Web teams have used techniques like CSS sprites, shared libraries, and caching to reduce the number of server requests on a page for many years, but these are just workarounds for what is an inherent problem with HTTP/1: it allows only one outstanding request per TCP connection. HTTP/2 is an attempt to modify this rule, and for performance-focused developers, this means a lot of standard practices are about to get thrown out the window.

HTTP/2 supports compressing headers and cookies before sending them to the user. Other improvements, like multiplexing using a single TCP connection and server push, could affect the process by which developers optimize their code. With wider adoption, web teams will no doubt discover new ways to optimize performance, many of which will likely affect developer workflows.
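To see why multiplexing matters, consider a deliberately simplified model that counts only network round trips. This toy sketch assumes six parallel connections per host under HTTP/1.1 (a common browser default), one outstanding request per connection, and a single multiplexed connection under HTTP/2. It ignores bandwidth, connection setup, server processing, and prioritization, so read the output as an illustration of the queuing effect, not a prediction. All function names are hypothetical.

```python
import math


def http1_round_trips(num_requests, connections=6):
    """Round trips when each connection carries one request at a time."""
    if num_requests <= 0:
        return 0
    return math.ceil(num_requests / connections)


def http2_round_trips(num_requests):
    """Round trips when every request is multiplexed on one connection."""
    return 1 if num_requests > 0 else 0


def estimated_wait_ms(round_trips, rtt_ms):
    """Total time spent waiting on the network under this toy model."""
    return round_trips * rtt_ms


if __name__ == "__main__":
    requests, rtt = 60, 100  # 60 resources, 100 ms round-trip time
    print(estimated_wait_ms(http1_round_trips(requests), rtt))  # prints 1000
    print(estimated_wait_ms(http2_round_trips(requests), rtt))  # prints 100
```

The gap widens as the number of resources and the round-trip time grow, which is why high-latency mobile connections stand to benefit the most.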

HTTP/2 requires support on both the server and browser sides to function properly. As of this writing, caniuse.com reports that 70.8% of global browsers fully support (63%) or at least partially support (7.18%) HTTP/2.[155] That includes Chrome, Edge, Firefox, and Opera, with Safari following in 2016 as soon as Safari 9 is released. Adoption is happening quickly on the server side, as well. The HTTP/2 Wiki on GitHub has an up-to-date list of server-side adoptions.[156]

Because HTTP/2 is backward-compatible with HTTP/1.1, you could ostensibly ignore it and everything will continue to work. From a performance perspective, there are, however, a few things you should know.

To update your own website, two important things need to happen first:

Server software will need to be updated to versions that support the protocol.

You will also need a Secure Sockets Layer (SSL) certificate for your site to ensure secure connections. You should do this anyway, as Google identified HTTPS as a ranking signal in August 2014. Secure sites rank higher.

In some instances, workarounds discussed elsewhere in this book for HTTP/1.1 shortcomings will actually become less efficient:

- Concatenating CSS and JavaScript files

HTTP/2’s server push feature will deliver all of your separate CSS files directly to the user without them having to make individual requests for each one. Not only will it figure out which files the user needs, but it can deliver those files faster than a single concatenated script file could compile and deliver them via HTTP/1.1.

- Embedded image data

Base64-encoded images embedded with CSS will reduce HTTP requests, as is discussed in Chapter 5. This workaround will increase the size of your CSS files, however, and visitors will need to download all of this data regardless of whether they visit the pages that use it. Because multiple HTTP requests aren’t a concern with HTTP/2, that trade-off no longer pays off. So, although this workaround can improve performance for sites that use HTTP/1.1, it could slow performance for sites that use HTTP/2.

- Sharding

With HTTP/1.1, browsers restrict the number of open connections to any one domain. Historically, a workaround was to retrieve assets from multiple domains by using a technique called domain sharding. With HTTP/2, you can request as many resources as you need over a single connection, eliminating the need for sharding.

- Sprites

HTTP/2 supports multiplexing, so resources are no longer queued one behind another, a delay that can result in loading lag time. Chapter 5 discusses using CSS sprites as a workaround for this, which is smart for HTTP/1.1 but unnecessary for HTTP/2.
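The size penalty of embedded image data, mentioned in the list above, is easy to measure. Base64 represents every three bytes of binary data as four text characters, so an embedded image is roughly a third larger than the original file before compression. The following sketch uses Python's standard base64 module; the function names are hypothetical, and the zero-filled bytes stand in for a real image file.

```python
import base64


def data_uri(image_bytes, mime_type="image/png"):
    """Build the data URI that would be embedded in a stylesheet."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def inflation(image_bytes):
    """Ratio of Base64-encoded size to original size."""
    return len(base64.b64encode(image_bytes)) / len(image_bytes)


if __name__ == "__main__":
    placeholder = bytes(3000)  # stand-in for a 3,000-byte image
    print(len(data_uri(placeholder)))       # prints 4022
    print(round(inflation(placeholder), 2))  # prints 1.33
```

Under HTTP/1.1 that extra third could be worth paying to save a request. Under HTTP/2 it is overhead carried by every visitor who downloads the stylesheet.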

If you don’t have control over your hosting environment, you will need to wait until servers are updated to support serving pages via HTTP/2. Similarly, when the majority of your users run browsers that support the protocol, you should consider switching, but maybe not before. Analytics data can tell you this. That said, SSL certificates are readily available from many sources. That is something you can, and should, do today.

Finally, for the aforementioned reasons, HTTP/2 will change your regular design and development workflow, so you should assess your own needs as well as those of your team and end users to ascertain a timeline that works for all involved to make the change.

Workflow Tips

We have talked a lot about techniques in this chapter so far, but how are developer workflows affected by a web team that is driven by performance?

Lean/Agile Workflows

We covered Lean/Agile workflows earlier in the book, but they are worth mentioning again in terms of how, specifically, they can address performance issues:

Iterative testing in every sprint will often reveal performance hiccups that can be caught early on rather than in beta, or worse, post-launch.

Agile teams can build performance-specific sprints into their process.

User testing is typically built into Agile workflows, which lets teams identify performance impediments early and fix them within the course of a sprint.

You can set page “budgets” in a sprint planning meeting to set ongoing guidelines for the project that all teams must adhere to.
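A page budget is easiest to enforce when it is checked automatically. The following is a minimal sketch of such a check, assuming you already have a mapping of asset names to sizes in kilobytes, for example from a build step. The function name, file names, and the 500 KB budget are all hypothetical.

```python
def check_budget(asset_sizes_kb, budget_kb):
    """Compare a page's total weight against its agreed budget."""
    total = sum(asset_sizes_kb.values())
    # Sort largest first so the report points at the best places to trim
    heaviest = sorted(asset_sizes_kb.items(), key=lambda item: item[1], reverse=True)
    return {
        "total_kb": total,
        "within_budget": total <= budget_kb,
        "over_by_kb": max(0, total - budget_kb),
        "heaviest": heaviest[:3],
    }


if __name__ == "__main__":
    page = {"index.html": 30, "styles.css": 60, "app.js": 250, "hero.jpg": 400}
    report = check_budget(page, budget_kb=500)
    print(report["within_budget"], report["over_by_kb"])  # prints "False 240"
    print(report["heaviest"][0])  # prints "('hero.jpg', 400)"
```

Running a check like this in each sprint, or as part of continuous integration, turns the budget from a guideline into something the team sees whenever it is exceeded.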

Standards-Based Development

Using web development techniques based on accepted standards put forth by the W3C will always yield more sustainable results. By the time standards are approved, functionality associated with those standards is typically well entrenched within current browser capabilities. Creating applications based on standards will ensure a wider audience. Testing against those standards will ensure your information can be accessed across the widest array of devices and platforms.

The adoption of standards can be a slow process, however. Standards move at a much slower pace than the release of new devices, which can make for many design and development challenges.

By the time HTML5 and CSS 3 were officially approved as web standards—a process that took several years—nearly all modern browsers had already long supported them.

That said, keep in mind that these efforts are for naught if you don’t provide solid value for both businesses and users, or if your content sucks:

To manage in a world of ever-increasing device complexity, we need to focus on what matters most to our customers and businesses. Not by building lowest common-denominator solutions but by creating meaningful content and services. People are also increasingly tired of excessive noise and finding ways to simplify things for themselves. Focus your service before your customers and increasing diversity do it for you.[157]

So ground your work in performance-driven standards but ensure that there is a solid business foundation that includes real value propositions for users, as well.

Validating Your Work

Not sure if your digital product or service is as optimized for performance as it could be? Here are some tools that can help.

Use validators like Google’s PageSpeed Insights or Pingdom Tools. These services will guide you through the optimization process, offering helpful tips and suggestions for maximizing performance.

Similarly, the W3C’s Markup Validation Service can help you create digital products and services that meet the rigorous standards set forth by the W3C.

508 Checker, by the folks at Formstack, can help you get a better handle on how your site meets accessibility standards for people with disabilities.

Finally, a tool like Ecograder or CO2Stats (covered in detail in Chapter 7) can help you to better understand how poor performance can lead to wasted energy and a larger environmental footprint for your application.

Accessibility and Sustainability

Whether it’s energy, water, sanitation, or something else, universal access is a key part of all sustainability frameworks. An accessible website gives people with disabilities, who might use enabling devices such as screen readers, the ability to experience digital products or services in meaningful ways. If we look at accessibility through the lens of sustainability we must take a broader approach. The French Open Web Group states that accessibility is good for the planet:[158]

When working on Web accessibility, one finds frequently that some practices result in reducing the size of pages, or the amount of data transferred to the user. Furthermore, an accessible Web site is generally simpler and therefore faster to view, not only for users with disabilities.

Every small earnings, effortlessly obtained, add to each other, and humbly contribute to the global effort. Much rather like those soda cans you throw in the right recycling bin: this is not what will fundamentally change things; but things will not change unless we do at least our share.

Open Concept Consulting’s Mike Gifford agrees:

In a fast-paced world, having well-structured content that is written in plain language helps people get what they need and act on it. Numbers vary, but a sizable portion of the population has a disability that can affect how they use the Web. The Web Content Accessibility Guidelines (WCAG) push for sites to be perceivable, operable, understandable and robust.

If you look at the entire range of potential user disabilities—low vision, color-blindness, mobility challenges, debilitating injuries, and so on—the numbers could perhaps reach up to 20% of any given population. What is the impact of either excluding these people or simply having to service them in a different way? Thus, following accessibility guidelines just makes sense.

Complicating this is the need to optimize digital products and services for new browsers and devices that appear around every corner while also supporting older or less popular models with a more basic experience. Though it’s not always possible to create the best experience for all users on all devices, we do have a responsibility to build something that’s useful for everyone who interacts with our product or service. This can cause an ever-dizzying array of contingencies that must be planned for, adding overhead not just to the product or service itself but also to the process of its creation.

Brad Frost, in his post “Support vs. Optimization,” states:[159]

The minute we start giving the middle finger to these other platforms, devices, and browsers is the minute where the concept of the Web starts to erode. Because now it’s not about universal access to information, knowledge and interactivity. It’s about catering to the best of breed and leaving everyone else in the cold. All of a sudden, the “native vs web” argument is no longer bullshit. It becomes more an apples-to-apples comparison, where web experiences only work on the platforms that happen to provide thousands of gorgeous native apps.

Potential Barriers and Workarounds

There are some hurdles to reaching a lean, mean Internet. Focus on performance is increasing but still not commonplace. Few web teams, for instance, allot a performance budget. Defining and implementing good performance practices so that they become a rote part of any process will ultimately lead to more accessible and sustainable digital products and services. But this means getting the entire Internet supply chain—product managers, clients, designers, developers, data centers, agencies, and so on—to focus on performance and efficiency. There is still a long way to go.

Then there is the curious case of the DIY website. Like them or not, tools such as Wix and Squarespace make it easy for many people without web design experience to create sites. This leads to just as many poorly designed web solutions. Jevons Paradox at work again. Inexperienced site creators make bad decisions that undermine user experience and decrease performance. Plus, some of these solutions are closed source, which could mean less accessibility, less efficiency, and more wasted energy.

Standard APIs, hosted libraries, and frameworks offer easier access to more features, which makes more-robust applications possible. They can also significantly increase the amount of data transferred from page to server, and in the case of frameworks can contribute to applications that are larger than they need to be. This is one of those situations where the advantage of shared data might outweigh the barrier of slower applications, but tell that to the guy in rural India who’s trying to find directions on your travel app.

And of course, it’s the Internet. Standards always shift and that’s typically a good thing, but shifting standards means those who don’t keep up are potentially creating subpar products and services that could perform poorly.

Conclusion

Here’s what we covered in this chapter:

Why optimizing performance is a critical web sustainability component.

Techniques for creating better optimized digital products and services.

Workflow tips for assessing performance.

Hopefully the processes outlined herein will help you create fast, reliable, performance-optimized solutions that are accessible to as many people as possible.

Action Items

Try these three things:

Run your site, product, or service through the speed, accessibility, and standards validators mentioned in this chapter. Is there room for improvement?

Create a checklist of tasks you can do to make your website or app more accessible, standards-based, and perform better.

Start attacking that list!

[140] Kit Eaton, “How One Second Could Cost Amazon $1.6 Billion In Sales”, Fast Company, March 15, 2012. (http://www.fastcompany.com/1825005/how-one-second-could-cost-amazon-16-billion-sales)

[141] Bill Scott, “Improving Netflix Performance”, O’Reilly Velocity Conference, June 23, 2008. (http://cdn.oreillystatic.com/en/assets/1/event/7/Improving%20Netflix%20Performance%20Presentation.pdf)

[142] Wikipedia, Web performance optimization (WPO). (https://en.wikipedia.org/wiki/Web_performance_optimization)

[143] Fred Wilson, “The 10 Golden Principles of Successful Web Apps”, The Future of Web Apps, Miami 2010. (https://vimeo.com/10510576)

[144] Steve Souders, “The Performance Golden Rule”, SteveSouders.com, February 10, 2012. (http://www.stevesouders.com/blog/2012/02/10/the-performance-golden-rule)

[145] Tomas Petricek, “Library patterns: Why Frameworks Are Evil”, Tomas Petricek’s Blog, March 3, 2015. (http://tomasp.net/blog/2015/library-frameworks)

[146] Dave Rupert, “The Cost of Frameworks”, DaveRupert.com, November 17, 2015. (http://daverupert.com/2015/11/framework-cost)

[147] BuiltWith, “CMS Usage Statistics”. (https://trends.builtwith.com/cms)

[150] Seochat, “Prevent Comment Spam from Damaging Your WordPress Website”. (https://www.seochat.com/c/a/search-engine-optimization-help/prevent-comment-spam-from-damaging-your-wordpress-website)

[151] Mike Gifford, “Tips for a Sustainable Drupal 7 & 8 Website”, OpenConcept Consulting, October 23, 2015. (http://openconcept.ca/blog/mike/tips-sustainable-drupal-7-8-website)

[154] JC UX, “Social Sharing Buttons: Page Weight Experiment”. (http://jcux.co.uk/oldsite/posts/buttons.html)

[155] Can I use, “Can I use... Support tables for HTML5, CSS3, etc”. (http://caniuse.com/#search=http2)

[158] OpenWeb, “Accessibility Is Good for the Planet”. (http://openweb.eu.org/articles/accessibility-is-good-for-the-planet)

[159] Brad Frost, “Support vs. Optimization”. (http://bradfrost.com/blog/mobile/support-vs-optimization)