Chapter 1. Introduction to Weak Supervision

Deep learning is data-hungry. A great amount of data is required for training deep learning models. The growing popularity of deep learning approaches fuels the need for larger, labeled datasets. On the other hand, the trend that we are seeing is that large deep learning models often need more data to work better. Recent key Artificial Intelligence (AI) achievements would not have been possible without labeled data.

The cost of creating, cleaning, and labeling training data often is a significant expense in terms of both time and money. Furthermore, in some cases, privacy is a key requirement, and an “eyes off” approach wherein machine learning practitioners cannot directly inspect the data to provide labels isn’t possible given sensitive and personally identifiable information (PII) in the data.

In this book, you will learn practical techniques that will enable you to leverage weak supervision in your machine learning projects, to address the challenges and high costs of acquiring a high-quality labeled dataset.

Before we do that, let us learn about why weak supervision is important for practical machine learning. In this chapter, we will share practical use cases on how weak supervision can help overcome some of the challenges of implementing ML in the enterprise. We will provide an overview of the different types of weak supervision approaches and introduce Snorkel, a new machine learning framework. Finally, we will cover a new paradigm called data programming which builds on weak supervision and the benefits it provides.

What is Weak Supervision?

Weak supervision is a broad collection of techniques in machine learning where models are trained using sources of information that are easier to provide than hand-labeled data, where this information is incomplete, inexact, or otherwise less accurate.

Instead of a subject matter expert (SME) hand labeling high-quality data, all of which is very cost-prohibitive, we can use other techniques that combine diverse sources of data, creating an approximation of labels. Using weak supervision we can reconcile these labels to a single label.

Weak Supervision enables these noisy weakly sourced labels to be combined programmatically to form the training data that can be used to train a model.

Labels are considered ‘weak’ because they are noisy – i.e., the data measurements that the labels represent are not accurate and have a margin of error. The labels are also considered ‘weak’ if they have additional information that does not directly indicate what we want to predict.

The model created using training data generated via weak supervision is comparable in performance to a supervised learning model created with traditional ‘strong’ labels. In addition, as researchers at the Massachusetts Institute of Technology (MIT) (Robinson, Jegelka, Sra, 2020) found, using a combination of a few ‘strong’ labels, along with a larger ‘weak’ label dataset resulted in a model which not only learned well but also trained at a faster rate.

Before we get into the details of weak supervision, first let us look at some real-world use cases.

Real-world Weak Supervision with Snorkel

Snorkel started as a project at Stanford in 2016 with the premise that users can programmatically label, build, and manage training data. The research around Snorkel also pushed innovations on other related tasks, such as data augmentation, multi-task learning, and eyes-off training. Snorkel has since expanded and has partnered with companies and research institutes such as DARPA, Google, and Intel.

Building on this research, Snorkel (an open-source project) has expanded into a commercial company called Snorkel.ai. The primary product of Snorkel AI is Snorkel Flow, a data-centric platform for building AI applications that enterprises can adopt for their AI teams. Snorkel Flow supports a Python SDK, and developer APIs, and also has a GUI no-code interface that allows both technical and non-technical business users to incorporate weak supervision when building applications.

For the scope of this book, where we are learning about the underlying programmatic labeling technology, we will use the open-source version of Snorkel and not the commercial platform of Snorkel Flow.

Weak supervision and Snorkel help address the MLOps challenges such as model governance, operations, data lineage, etc. Weak supervision expressed as code allows us a lot of flexibility, as we incorporate different data points. This helps improve generalization and can easily scale with unlabeled data.

Programmatic weak supervision may help address bias

Programmatic weak supervision can be useful in improving explainability and reducing bias. Unlike most ML models, which are opaque-box, in weak supervision, we write the code that generates the training data. As a result, we can have more fine-grained control over how labels are created. As an example, it is possible to observe which labeling functions contribute and how they are being combined when using programmatic weak supervision to train and create an ML model.

This ability to interpret opens up opportunities to identify and address bias in our data or those that might arise during inference when deployed in production.

Snorkel has been used in many real-world applications across industry, medicine, and academia. Industry applications include product classification (Bach et al. 2019), bootstrapping conversational agents (Mallinar et al 2018), and many others. Rapidly identifying new knowledge from the scientific literature is a long-standing challenge in NLP, and Snorkel has been used to build a database of novel genetic associations using scientific documents (Kuleshov et al 2019) and mine chemical-disease relations (Mallory et al 2020).

In electronic health records (EHRs), patient notes are an important source of information for AI in healthcare. Snorkel has been used to extract outcome labels for medical imaging (Dunnmon et al 2020) and improve radiology report labeling (Smit et al 2020), monitor medical device safety (Callahan et al 2019), classify risk factors like smoking status (Wang et al 2019), as well as medical NER and continuous monitoring of COVID-19 symptoms (Fries et al 2021).

Let’s take a look at some real-world use cases showing how Snorkel allows us to get labeled data more easily, and how companies have successfully leveraged this paradigm for their needs.

- Industrial Image defect detection

-

Researchers in the Korea Advanced Institute of Science and Technology (Heo, Roh, Hwang, Lee & Whang, 2020) have used Snorkel as part of a system that created a dataset of images of defective products. These images were then used for training a computer vision model to recognize defective products in the production line. The team uses a combination of crowdsourcing, data augmentation, and data programming. They start with the SMEs, the workers in the assembly line, and ask them to classify the objects based on the defect patterns.

The images in each category are then augmented with the help of GANs (Generative adversarial networks). Images from those categories are analyzed, and the distribution of the pixels for lines across the x and y-axis is generated. Those distributions are the feature vectors, supplied then to a multi-layer perceptron trainer, which will perform training and learn the model. The labeling functions consist of the learned model for each pattern emitting a prediction on whether the image contains the respective defect or not.

- Labeling Bio-Medical Data for Gene Clustering

-

One of the problems bioinformatics deals with is predicting the function of a gene. Often, this is done by studying the proteins those genes code - a process known as gene expression. A protein can be coded by more than one gene; studying gene expression often is treated as a classification problem (Dutta & Saha, 2019).

Researchers at the computational bioinformatics Indian Institute of Technology Patna (Dutta & Saha, 2019), utilized Snorkel to label gene expression data. They initially clustered the data using a multi-objective optimization (MOO) technique (aiming at optimizing three objectives). For MOO, a solution is said to dominate another solution if all its objective values are better than the values of another solution. The non-dominated solutions of a MOO are the ones for which there are no solutions for all the objective functions doing better than them. Dutta & Saha used those non-dominated solutions from the gene expression clustering, as the labels for the data.

- Intent Classification

-

This task consisted of detecting and classifying the intent of a user allowing for more intelligent and better experiences for the user - be it from automatically performing actions based on intent to help answer questions. The input to the labeling functions is user actions and interactions with various systems such as search, emails, recommendation systems. Many of these interactions can be correlated with the intent of the action by the user; these correlations of action and intent is can also be noisy making weak supervision a great paradigm to implement.

- Intel Osprey

-

Intel and Stanford’s researchers built Osprey, a weak supervision system on top of Snorkel specifically designed for highly imbalanced datasets (Bringer, Israeli, Ratner, Re, 2019). Osprey is unique as it is an end-to-end ML pipeline that allows non-technical business users to create labeling functions using a GUI - without writing any code. Osprey supports generalization using a novel ensembling technique and achieving a high precision level by combining a label-based generative model with a discriminative model.

- Snorkel DryBell

-

Researchers from Stanford, Brown, and Google (Bach, Rodriguez, Liu, Ratner, et al, 2019) cooperated to create Snorkel DryBell, a weak supervision framework based on Snorkel, utilized to solve labeling data at Google, for tasks like:

- Topic Classification

-

This task consisted of creating a new classifier to detect the topic in the textual content. The heuristic used in the labeling functions for this data looked at the URL of the source of the data, heuristics related to statistics regarding the Named Entity Recognition (NER) appearing in the content, and heuristics based on the prediction of existing topic models, outputting semantic categorizations for the content.

- Product Classification

-

This task needed labeled data in order to build a classifier to detect content references to products in particular categories. The labeling functions looked at the keywords in the content and made use of Google’s Knowledge Graph to translate the keywords into another language. Finally, it explored predictions from the semantic topic model to set apart content that was not related to the categories of interest. (Cubuk et al, 2019)

- Event Classification

-

This task consisted of classifying real-time events into different categories. The weak classifiers used were again model-based: making use of other existing models’ predictions, heuristics over characteristics of the event, and knowledge-graph-based labeling function, to look at the relationship of the events and the entity related to the event.

We will explore Snorkel in more detail in Chapter 2 but first let us understand what are the different approaches to weak supervision that make up the broader umbrella of weakly supervised learning.

Approaches to Weak Supervision

Weak supervision can be used along with many other types of supervision and broadly can be broken down into three different approaches as outlined below. The type of information and labels available determine which of these three different approaches would work better for that situation.

-

Incomplete supervision

-

Inexact supervision

-

Inaccurate supervision

Incomplete supervision

In incomplete supervision, we have a small set of training data that is of high quality and is labeled, but that is not enough data to train a model; the remaining training data is not labeled. This is quite common.

Say we want to classify news (fake vs. authentic). It is relatively straightforward to get news datasets from the internet. However, labeling all of it would be quite a task because of time and cost. For a small set of this news dataset, a human can label and classify the news; the rest is left as unlabeled and incomplete.

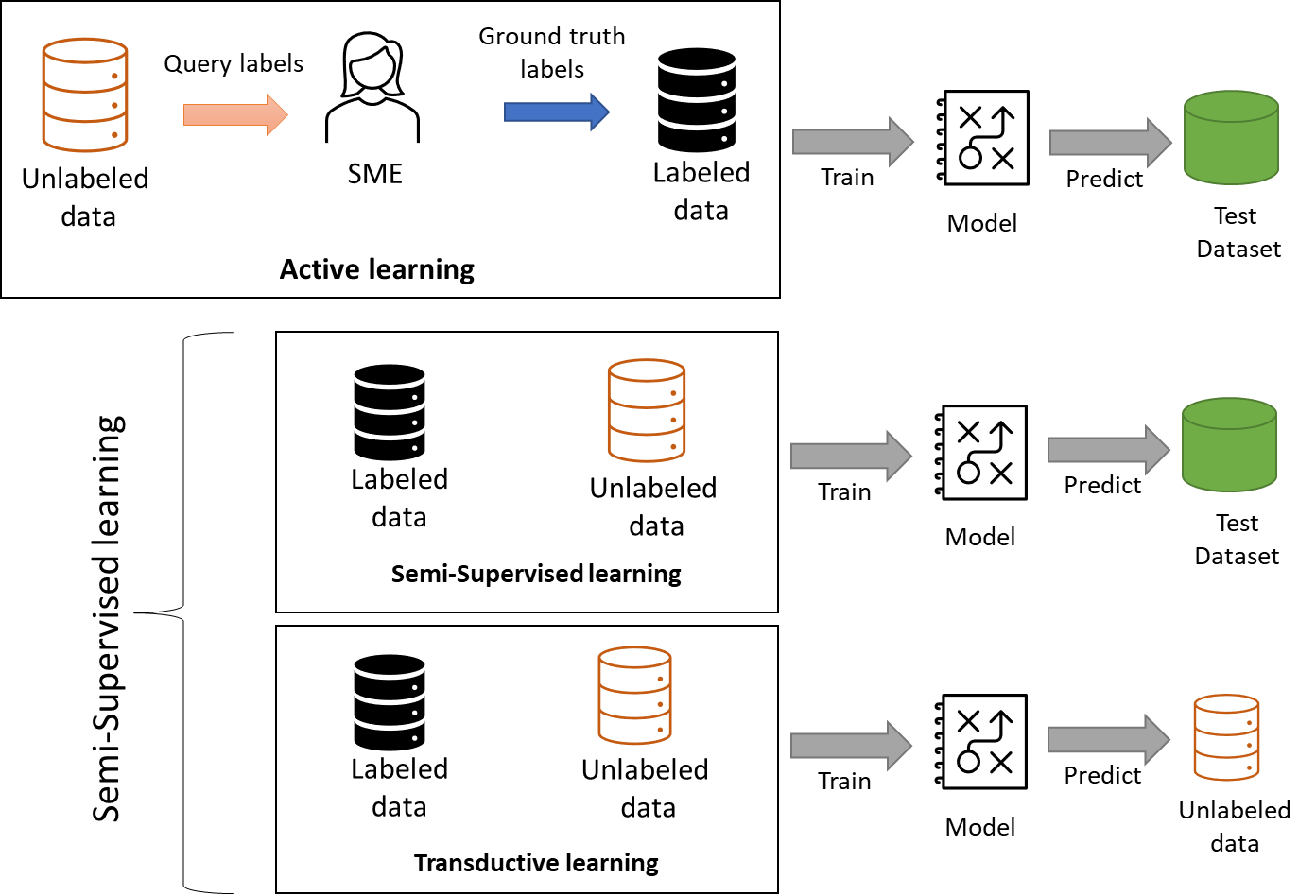

At a high level, we have three approaches for incomplete supervision: active learning, which is with human help; semi-supervised learning, where no person is involved; and transfer learning,

- Active learning

-

This approach is all about a human (typically an SME) in the loop and involves finding strong labels for data by an SME. However, instead of directly querying strong labels for the most informative samples, we first query weak labels and optimize the most important labels allowing an SME to concentrate on only that subset. This output from active learning acts as weak labels and inputs to weak supervision - nicely complementing each other.

When it comes to selecting the most important labels from an unlabeled dataset, there can be many criteria. The two most common ones are informativeness and representativeness (Huang, Jin, Zhou, 2014). For an unlabeled set of data, informativeness tells us the degree to which uncertainty has been reduced. Representativeness on the other hand measure how well does that dataset represents the structure of the input patterns. (Settles, 2004)

- Semi-Supervised Learning

-

Unlike active learning, instead of using an SME, the goal is to use assumptions that are task-agnostic and domain-specific, supported by heuristics on the unlabeled data. At a high level, we use a data distribution and clustering approach and outline a belief that similar data has similar characteristics and output. This leads to two basic premises that help in labeling data – cluster assumption, and manifold assumptions.

- Manifold assumption

-

This is the premise that clusters that are closer (to each other) have similar predictions and lie on a manifold.

- Cluster assumption

-

This is the premise that data has some inherent pattern that allows us to cluster data points, and to these clusters, we can apply some class labels.

- Transfer learning

-

Here we would use models trained on another dataset and apply them to the data and task we are interested in. The data and its shape would be similar, and we would expect to ‘fine-tune’ the model for our task. This can also involve combining several tasks to understand relationships from an approximate domain that might not be obvious.

Semi-supervised approaches

There are four types of semi-supervised approaches:

-

Generative methods – this assumes both the labeled and unlabeled data is generated from the same model. This allows us to view unlabeled data as missing values and use techniques such as expectation-maximization algorithms.

-

Graph-based methods – This uses a graph where nodes correlate to training instances and relations (e.g., distance, or similarity) between the data points are modeled as edges between different instances. The unlabeled data points can be propagated on this graph.

-

Low-density separation methods – these are density-based sorting algorithms that try to place classification boundaries across less dense regions between the clusters of the input space.

-

Disagreement-based methods – here we have multiple learner instances that collaborate and work on a task. During this process, they might have overlapping and conflicting data points. These conflicts and disagreements are used as the key to learning.

What are Expectations-Maximization algorithms?

Expectation-Maximization Algorithms (EM algorithms) is a general technique that helps in finding the maximum likelihood estimators in latent variable models. Latent variables are hidden variables that are not directly visible but are inferred using other (observable) variables.

EM algorithms are widely used in ML algorithms especially in unsupervised learning settings, such as clustering and density estimation.

Transductive Learning

Transductive learning is different than a typical ML approach, which is based on induction where one is attempting to discover rules based on the training data, and these rules are induced via a model. We essentially are generalizing rules to apply to a class problem.

On the other hand, in the transductive learning approach, instead of solving a class problem, we solve for that instance problem. We use training data to discover rules, that are then used to make better predictions for the same type of training data - essentially it being an instance problem and not a class problem.

Figure 1-1 at a high-level outlines the differences between the approaches for incomplete supervision.

Figure 1-1. Semi-supervised learning approaches

Inexact supervision

In inexact supervision, we have labels and features (i.e. metadata) on the training dataset. The label information is not is complete and not the kind exact kind preferred as it relates to the task at hand - hence the name inexact. In addition, the metadata associated with the training dataset is imprecise and the labels are poor.

For example, say we need to detect fraudulent activity for a financial organization from a set of transactions. Typically, we do not have details that directly correlate to what we would classify as fraud. However, if we have labeled examples of other types of fraud transactions from other datasets (non-financial), we can use these other examples to predict the label for the original set of transactions. Here we don’t have the exact labels and combining additional similar but non-financial examples of fraud labels makes this inexact. Inexact supervision uses multi-instance learning.

What is Multi-Instance Learning?

Multi-instance learning is a type of weakly supervised learning for situations where we have training examples but have incomplete knowledge about labels of those training examples. These training examples are grouped in sets called bags. Within a certain bag, the specific training instances whilst different from each other, are still similar. A label is provided for the entire bag, but not specific instances within that bag.

There are positive and negative bags. If at least one instance in a bag is positive, that bag is labeled positive. Conversely, if all the instances in a bag are negative, that bag is labeled negative. The goal of multi-instance training is to classify unseen instances and bags using labeled bags as training data.

Multi-instance learnings can be applied to many use cases and domains; some examples are:

-

Content-based image retrieval allowing image classification based on their subject.

-

Molecule classification allowing one to predict if a molecule produces a given effect.

-

Object localization in an image – allowing one to find specific objects in an image such as medical image diagnosis.

-

Sentiment analysis in the text.

Inaccurate supervision

As the name suggests, in inaccurate supervision the information has errors with some of the ground truth labels either not accurate, or of high quality. Usually, this happens when one is crowdsourcing data and there would be distractions and inaccuracies or when the data is difficult to categorize. The idea is to band together possibly mislabeled examples, and then try to correct them. Think of it as an approximate correction.

One way to correct the labels is to learn from data engineering, where we use techniques such as neighborhood-graph and measure connected nodes within the graph to look for outliers and missing information.

Note

Data programming and data engineering sound very similar, but they are quite different. Data engineering deals with the science and knowledge of large-scale data flows, data pipelines, data exploration and transformation (ETL) including data cleaning, and preparation. A lot of this is specific to the underlying database implementation and the runtime optimization.

In the next section, we will learn about Data programming. This is a technique that is foundational when using Snorkel and helps address the one-off and ad hoc implementations of weak supervision.

Data programming

Data programming is a new paradigm that allows for the generation of a large set of labeled training datasets programmatically. In data programming, a user creates a set of simple programmable functions called labeling functions that are used to label the data. Each of these labeling functions provides a label for each training example (or abstains). By running multiple labeling functions, we get useful, but potentially conflicting information about the label for each example. Data programming enables us to aggregate these votes into a coherent probability distribution over the true, unknown labels.

Until more recently, weak supervision was being applied in a very isolated and ad hoc manner, and it used a subset of available data. This caused suboptimal performance. Data programming helps standardize this ad hoc nature of weak supervision with a more unified and formal approach. Compared to traditional ML approaches for training data, data programming allows a richer, more flexible, and generic approach.

Recent AI milestones have demonstrated that fundamentally the ML algorithms haven’t dramatically evolved; what has changed is the amount of data used. The data is much more (i.e. bigger) and with better cleaner labels. Today, most ML solutions are trending towards a more comprehensive end-to-end solution, which requires even more labeled data to work. The only way we can scale is with data programming – which helps us create training data programmatically, which can be assembled, labeled, debugged. The code that enables this is called labeling functions; these functions create weak labels that allow us to use weak supervision.

Data programming has many advantages. Using data programming, you can:

-

Encode domain knowledge representing real-life constraints and situations in reusable and updatable form, rather than in individual training labels.

-

Simultaneously incorporate a wide range of supervision resources for training in a principled way.

-

Reduce the time and effort required to deploy new models.

Data programming helps bring other benefits such as version control, reusability, modularity, maintainability, etc. We will explore more details on data programming in Chapter 2.

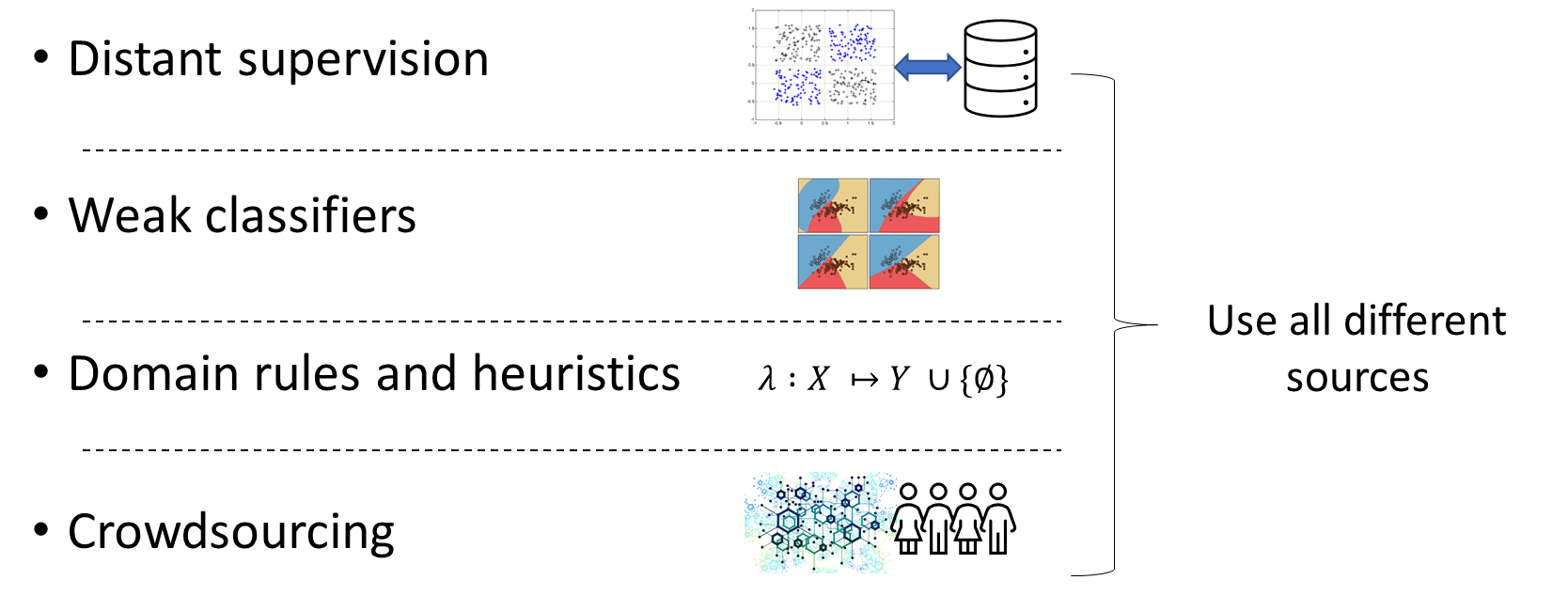

Getting training data

Weak supervision is an approach that is more efficient and scalable to address the training data gap compared to other techniques. When using weak supervision, we can have diverse inputs that contribute to the training data. These diverse inputs are:

-

Pattern-based heuristics - allow an SME to feature annotate and help bootstrap based on specific patterns instead of arbitrary and unknown correlations.

-

Distant supervision - allows data points that heuristically align with an external knowledge base to generate noisy labels.

-

Weak classifiers - allows for classifiers that are not a good fit (for the task at hand) to be used on a different dataset to create noisy and biased datasets – this becomes the training set.

-

Labeling function generators - allows for programmatic weak supervision systems such as Snorkel (which we will explore in Chapter 2) to generate multiple functions using code. Given this programmable code, this makes these generators more scalable and manageable.

All this progress in algorithms is driving the need for larger everything - larger models with more parameters, which require larger training datasets. Instead of picking one set of data sources to get all this large training dataset, weak supervision is the unifying method to get different types and sources of data together (see Figure 1-3) - allowing the scale needed for this progress to continue.

Figure 1-3. Unifying method for weak supervision

How data programming is helping accelerate Software 2.0

Instead of “software is eating the world”, a new trend — “ML is eating software” is starting to emerge. There have been a few leading academics (Re, 2021) and industry leaders (Karpathy, 2017) who have been highlighting this shift and change in software development and programming. This new phenomenon is loosely called “Software 2.0”. Software 2.0 (SW2.0) is not something far out in the future. It is happening now and in some production cases, it is already responsible for a 1000x productivity increase.

One example - Google’s neural machine translation. The codebase for it went down from 500K lines of C++ code to approximately 500 lines of ML code (Clark, 2017) - a very impressive leap. We are seeing this trend also in classical computer science problems such as database tuning, networks, ETL and data cleaning, etc. This was possible only because ML replaced swaths of code and heuristics. Software 2.0 is indeed starting to eat Software 1.0.

With SW2.0 we are moving from innovating and reinventing a new ML model, to understanding which signals to put in an ML model. This ML model is combining several off-the-shelf SOTA models and is used as part of an application. This is also making the end-to-end process more efficient. This speed is achieved by abstracting to more ML-optimized and efficient hardware compared to generic hardware.

ML is fundamentally changing how we build, deploy, and manage code in production; with SW 2.0 we are shifting from conventional programming to high-level domain knowledge that is programming using lots of training data. And much of this training data is powered by weak supervision, and Snorkel could be one of the critical pieces required to program this SW2.0.

Software 2.0 Architecture

In the SW2.0 architecture, we are not ripping and replacing the system components with ML implementations. Instead, we are fundamentally changing the system components and what they do, and data programming and its potential to create training data are at the heart of that change.

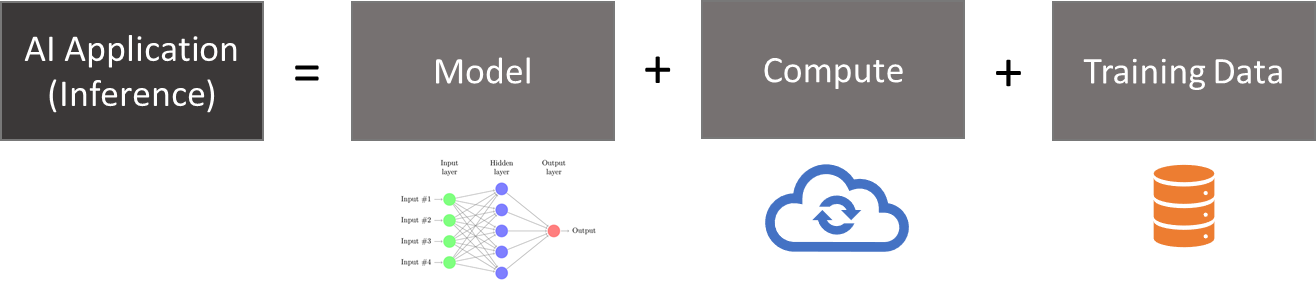

A typical AI application can be divided into three aspects - a model, data, and some compute hardware for training (see Figure 1-4). We see both the model and compute aspects are getting to be commodities and the one different thing is the training data. Most users would pip install a SOTA model instead of hand-cranking a new model.

Figure 1-4. Typical AI Application

In a world where models are commodities, and everything is a data flow the development time for SW2.0 is greatly reduced compared with “traditional DL”. SW2.0 paradigm also has a very interesting side benefit, with the SOTA models doing most of the heavy-lifting allowing enterprises to concentrate time and effort on the long tail of edge cases.

With SW 2.0 we are also seeing a cycle, which is creating a flywheel. SW 2.0 uses the latest SOTA (state-of-the-art) model, and also uses new methods of gathering varied quality training data. Over time, this new training data is used to create the next iteration of the SOTA models. These new generations of SOTA models are then incorporated back into SW 2.0 - completing the flywheel. Data programming and Snorkel’s ability to work across several types of data (text, image, video, time-series, real-time, etc.) makes Snorkel ideal in the new world of “hands-free” engineering - where we don’t hand tune features or hand label training data to get high quality.

A note on the code

All the code in the book can be cloned from the following GitHub repository: https://bit.ly/WeakSupervisionBook.

The code that is in the book is meant to help us understand the core concepts and follow along. Whilst the code in the chapters is correct, in the interest of space we leave out some of the boilerplate code. Trying to copy the code from within the book might cause some of the code not to execute.

We would encourage you to clone the book GitHub repository to use.

Summary

Data programming is a new machine learning paradigm for creating and managing training datasets for ML algorithms. Programming is changing, with ML models and techniques much easier to incorporate and use, but increasingly more difficult to create.

The appetite for labeled data is going to just keep growing exponentially. To do practical machine learning, the cost and complexity of getting high-quality labeled training data need to be carefully managed. In addition, other issues for practical machine learning include - model management, dealing with bias, AI ethics considerations, interpretability of models, and more.

Data programming is emerging as a unifying method for weak supervision - where one can combine different sources and methods, and use them all instead of picking one.

Weak Supervision, together with the notion of data programming presents an exciting approach towards solving part of the problem of getting a high-quality training label. In the next few chapters, we are excited to share with you how you can get started.

Bibliography

-

Dhruv Mahajan, Ross Girshick, Vignesh Ramanathan, Kaiming He, Manohar Paluri, Yixuan Li, Ashwin Bharambe, Laurens van der Maaten Exploring the Limits of Weakly Supervised Pretraining, 2018

-

Joshua Robinson, Stefanie Jegelka, Suvrit Sra Strength from Weakness: Fast Learning Using Weak Supervision, Massachusetts Institute of Technology, Cambridge, MA, USA, 2020.

-

Angluin, D., Laird, P. Learning From Noisy Examples. Machine Learning 2, 343–370 (1988), 1988

-

Jack Clark, Import AI Import AI: #63, 2017

-

Stephen H. Bach, Daniel Rodriguez, Yintao Liu, Chong Luo, Haidong Shao, Cassandra Xia, Souvik Sen, Alexander Ratner, Braden Hancock, Houman Alborzi, Rahul Kuchhal, Christopher Ré, Rob Malkin Snorkel DryBell: A Case Study in Deploying Weak Supervision at Industrial Scale, 2019

-

Ekin Dogus Cubuk and Barret Zoph and Dandelion Mane and Vijay Vasudevan and Quoc V. Le AutoAugment: Learning Augmentation Policies from Data, 2019

-

Chris Re Software 2.0: Machine Learning is Changing Software, 2021

-

Andrej Karpathy Software 2.0, 2017

-

Geon Heo, Yuji Roh, Seonghyeon Hwang, Dayun Lee, Steven Euijong Whang Inspector Gadget: A Data Programming-based Labeling System for Industrial Images, 2020

-

Pratik Dutta and Sriparna Saha A Weak Supervision Technique with a Generative Model for Improved Gene Clustering, 2019

-

Alex Ratner, Henry Ehrenberg, Zeshan Hussain, Jared Dunnmon, Chris Ré Learning to Compose Domain-Specific Transformations for Data Augmentation, 2017

-

Ying Sheng, Nguyen Vo, James B. Wendt, Sandeep Tata, Marc Najork Migrating a Privacy-Safe Information Extraction System to a Software 2.0 Design, 2020

-

Sheng-Jun Huang, Rong Jin, and Zhi-Hua Zhou Active learning by querying informative and representative examples, 2014

-

Eran Bringer, Abraham Israeli, Alex Ratner, Christopher Ré Osprey: Weak Supervision of Imbalanced Extraction Problems without Code, 2019

-

Burr Settles Active learning literature survey. Computer Sciences Technical Report 1648, 2009

-

Neil Mallinar, Abhishek Shah, Rajendra Ugrani, Ayush Gupta, Manikandan Gurusankar, Tin Kam Ho, Q. Vera Liao, Yunfeng Zhang, Rachel K.E. Bellamy, Robert Yates, Chris Desmarais, Blake McGregor Bootstrapping Conversational Agents With Weak Supervision, 2018

-

Volodymyr Kuleshov, Jialin Ding, Christopher Vo, Braden Hancock, Alexander Ratner, Yang Li, Christopher Ré, Serafim Batzoglou, Michael Snyder A machine-compiled database of genome-wide association studies, 2019

-

Emily K. Mallory, Matthieu de Rochemonteix, Alex Ratner, Ambika Acharya, Chris Re, Roselie A. Bright, Russ B. Altman Extracting chemical reactions from text using Snorkel, 2020

-

Akshay Smit, Saahil Jain, Pranav Rajpurkar, Anuj Pareek, Andrew Ng, Matthew Lungren CheXbert: Combining Automatic Labelers and Expert Annotations for Accurate Radiology Report Labeling Using BERT, 2020

-

Yanshan Wang, Sunghwan Sohn, Sijia Liu, Feichen Shen, Liwei Wang, Elizabeth Atkinson, Shreyasee Amin, Hongfang Liu A clinical text classification paradigm using weak supervision and deep representation, 2019

-

Jason Fries, Ethan Steinberg, Saelig Khattar, Scott Fleming, Jose Posada, Alison Callahan, Nigam Shah Ontology-driven weak supervision for clinical entity classification in electronic health records, 2021