The Hadoop framework and its components are still evolving, and new releases come out on a regular basis. Even after successful project delivery, a Hadoop platform can quickly lose its value if new releases or products are launched in the market and we don't keep our systems in sync with the technology.

I encourage readers to keep up with this technology and look out for new trends.

This chapter will cover the following topics:

- Hadoop distribution upgrade cycle

- Best practices and standards

- New trends

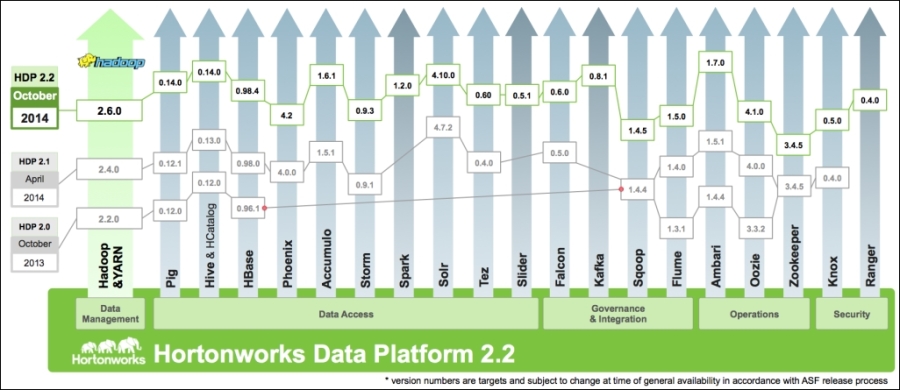

Hadoop distributions must be upgraded regularly to keep up with the latest Hadoop ecosystem releases. Because Hadoop is evolving very rapidly, new releases appear every few months. For example, Hortonworks released HDP 2.1 in April 2014, followed by HDP 2.2 in October 2014.

Companies in the technology sector generally keep up with the latest releases.

On the other hand, upgrade cycles are extremely slow in the financial sector, especially in large banks. To give some context, we have seen that in the majority of banks, desktops are still running Windows XP and Office 2003, even though, ironically, these machines support trades worth billions of dollars. So, don't be discouraged if your Hadoop distribution is not upgraded as soon as a new release comes out.

As shown in the following diagram, enterprise-ready Hadoop distribution from companies such as Hortonworks is simply a combination of various version-compatible Hadoop components:

The distribution companies recommend that you upgrade the software regularly. The bank's IT management departments now plan to upgrade for at least major releases, but minor ones can be skipped. So, it will more likely be a half-yearly or yearly upgrade cycle.

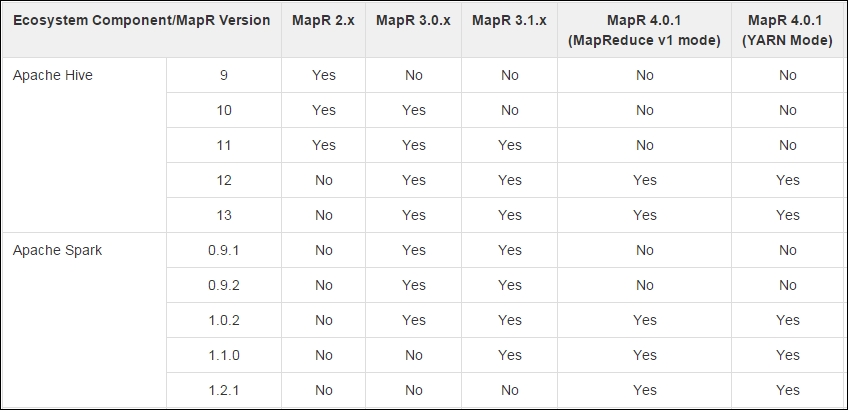

For example, as shown in the following diagram for MapR 4.0.1 in V1 mode, the compatible versions of Apache Spark are v1.0.2 onwards. So, if you upgrade MapR from v3.1.x to 4.0.1 in V1 mode while still using Spark v0.9.2, you will have no choice but to upgrade Spark as well.
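The compatibility rule above can be expressed as a simple version check. The following Python sketch assumes a hypothetical table of minimum supported component versions per target distribution; its only entry is the MapR 4.0.1 V1 / Spark 1.0.2 pairing from the text, and the function names are illustrative rather than part of any vendor tool.

```python
# Minimal sketch: flag installed components that fall below the minimum
# version supported by a target distribution. The table layout and function
# names are hypothetical; the single entry comes from the MapR/Spark example.

def parse_version(text: str) -> tuple[int, ...]:
    """Turn a purely numeric dotted version such as '1.0.2' into (1, 0, 2)."""
    return tuple(int(part) for part in text.split("."))

# Hypothetical compatibility table: target distribution -> component -> minimum version.
MIN_COMPATIBLE = {
    "MapR 4.0.1 V1": {"spark": "1.0.2"},
}

def components_to_upgrade(target: str, installed: dict[str, str]) -> list[str]:
    """Return installed components whose version is below the target's minimum."""
    required = MIN_COMPATIBLE[target]
    stale = []
    for component, version in installed.items():
        minimum = required.get(component)
        # Components with no entry in the table are assumed to be unaffected.
        if minimum is not None and parse_version(version) < parse_version(minimum):
            stale.append(component)
    return stale
```

For example, `components_to_upgrade("MapR 4.0.1 V1", {"spark": "0.9.2"})` returns `["spark"]`, so Spark would have to be included in the upgrade project. Comparing tuples of integers rather than raw strings avoids treating "1.10.0" as older than "1.9.0".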

Although upgraded Hadoop components are generally backward compatible and support existing applications, this is not always guaranteed. Some code rewriting may therefore be required, and this effort must be accounted for in the version upgrade project.

The steps to upgrade are:

- Plan: First, decide whether you need an offline upgrade or a rolling upgrade. I recommend rolling upgrades for clusters running mission-critical applications in production. An offline upgrade is reliable and is recommended if the cluster is used to run analytics and batch jobs and can tolerate downtime. The plan should include communicating the upgrade dates, so that the business is aware that the system will be offline or that analytics may run a bit slower than normal.

- Prepare: I recommend regular automated backups of the NameNode metadata and of all databases, including those for HDFS, Hive, HBase, CM, and Oozie. Additionally, prepare scripts to back up critical data, programs, and configuration just prior to the upgrade. Test the upgrade steps on test, sandbox, or other non-production servers, and update the steps if required. The steps must run smoothly end to end on non-production servers before they are finalized for production.

- Upgrade servers: All servers are upgraded in the correct order (for example, NameNodes first and then DataNodes) and within the batch window agreed with the business.

- Upgrade clients: All clients need to be upgraded, followed by a quick test with the client tools.

- Upgrade ecosystem: Not all components are upgraded with the cluster, so some need to be upgraded individually on a case-by-case basis. For example, if your Spark version is already compatible with the new Hadoop distribution, you can choose to upgrade it later, depending on your requirements.
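The plan-and-execute sequence above can be sketched as a small runbook. This Python example is illustrative only: the phase names mirror the list, the offline-versus-rolling rule encodes the recommendation in the Plan step, and the role names (`namenode`, `datanode`) and step callables are hypothetical placeholders for real cluster-manager actions.

```python
from enum import Enum
from typing import Callable

class UpgradeMode(Enum):
    ROLLING = "rolling"  # cluster stays available; nodes are upgraded in batches
    OFFLINE = "offline"  # cluster is taken down for the agreed window

def choose_upgrade_mode(mission_critical: bool, downtime_acceptable: bool) -> UpgradeMode:
    """Rolling for mission-critical production clusters; offline only when
    the cluster runs analytics/batch work and can tolerate downtime."""
    if mission_critical or not downtime_acceptable:
        return UpgradeMode.ROLLING
    return UpgradeMode.OFFLINE

# Hypothetical role ranking: NameNodes are upgraded before DataNodes.
ROLE_ORDER = {"namenode": 0, "datanode": 1}

def server_upgrade_order(servers: list[tuple[str, str]]) -> list[str]:
    """Sort (hostname, role) pairs by role rank. sorted() is stable, so hosts
    with the same role keep their original relative order."""
    ranked = sorted(servers, key=lambda server: ROLE_ORDER[server[1]])
    return [host for host, _ in ranked]

# Phases in the order given in the text.
PHASES = ["plan", "prepare", "upgrade servers", "upgrade clients", "upgrade ecosystem"]

def run_upgrade(steps: dict[str, Callable[[], bool]]) -> list[str]:
    """Run each phase in order and stop at the first one that reports failure.
    Returns the phases that completed successfully."""
    completed = []
    for phase in PHASES:
        if not steps[phase]():
            break  # do not continue to later phases after a failure
        completed.append(phase)
    return completed
```

If every step succeeds, `run_upgrade` returns all five phases; if the client step fails, it returns only the first three, leaving the ecosystem phase untouched until the failure is investigated.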
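For the Prepare step, the just-before-upgrade backup of configuration and programs can be scripted. The sketch below archives a list of local files or directories into a timestamped `.tar.gz` using Python's standard `tarfile` module. Which paths to include (for example, a Hadoop configuration directory) is an assumption to adapt to your cluster, and the function name is hypothetical; NameNode metadata and component databases are better backed up with the distribution's own tooling.

```python
import tarfile
import time
from pathlib import Path

def backup_paths(paths: list[Path], dest_dir: Path) -> Path:
    """Archive the given files/directories into a timestamped .tar.gz in dest_dir."""
    dest_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    archive = dest_dir / f"pre-upgrade-{stamp}.tar.gz"
    with tarfile.open(str(archive), "w:gz") as tar:
        for path in paths:
            # Directories are added recursively; arcname keeps only the last
            # path component so the archive can be restored anywhere.
            tar.add(str(path), arcname=path.name)
    return archive
```

Running this from the same automation that drives the upgrade keeps a restorable snapshot next to each upgrade attempt.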