Unlike the Spark standalone cluster manager, which can run only Spark apps, Mesos is a cluster manager that can run a wide variety of applications, including Python, Ruby, or Java EE applications. It can also run Spark jobs. In fact, it is one of the popular go-to cluster managers for Spark. In this recipe, we'll see how to deploy our Spark application on the Mesos cluster. The prerequisite for this recipe is a running HDFS cluster.

Running a Spark job on Mesos is very similar to running it against the standalone cluster. It involves the following steps:

- Installing Mesos.

- Starting the Mesos master and slave.

- Uploading the Spark binary package and the dataset to HDFS.

- Running the job.

Download Mesos on the local machine by following the instructions at http://mesos.apache.org/gettingstarted/.

After you have installed the OS-specific tools needed to build Mesos, you have to run the configure and make commands (with root privileges) to build Mesos (this will take a long time) unless you pass -j <number of cores> V=0 to your make command, as shown here:

As a side note, just like Spark, the ec2 folder inside the mesos installation directory provides scripts to spawn a new EC2 mesos cluster.

Now that we have Mesos installed, the next step is to start the Mesos master and slave:

bash-3.2$ pwd /Users/Gabriel/Apps/mesos-0.22.1/build bash-3.2$ sudo ./bin/mesos-master.sh --ip=127.0.0.1 --work_dir=/var/lib/mesos

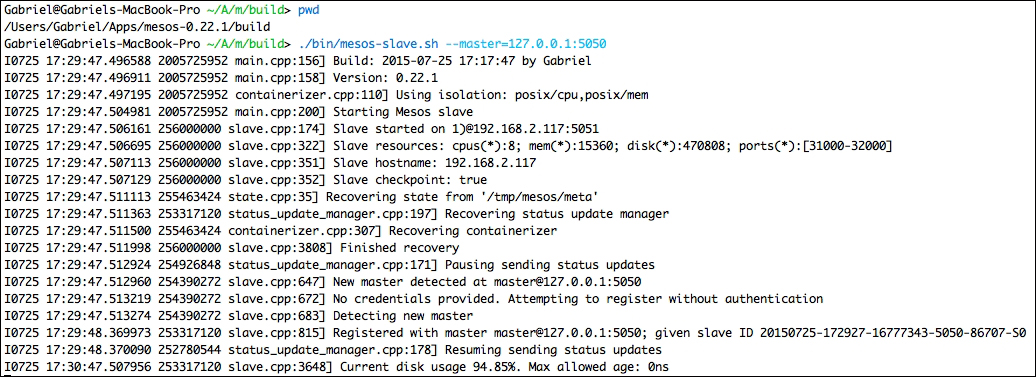

In another terminal window, let's bring up a worker node:

Gabriel@Gabriels-MacBook-Pro ~/A/m/build> pwd /Users/Gabriel/Apps/mesos-0.22.1/build Gabriel@Gabriels-MacBook-Pro ~/A/m/build> ./bin/mesos-slave.sh --master=127.0.0.1:5050

We can now look at the Mesos status page at http://127.0.0.1:5050, and this is what we will see:

Mesos requires that all worker nodes have Spark installed on the machines. We can achieve this either by configuring the spark.mesos.executor.home property in the spark configuration, or by simply uploading the entire Spark tar bundle to HDFS and making it available to the Mesos workers:

./bin/hadoop fs -mkdir /scalada ./bin/hadoop fs -put /Users/Gabriel/Apps/spark-1.4.1-bin-hadoop2.6.tgz /scalada/spark-1.4.1-bin-hadoop2.6.tgz

Let's set the spark binary as the executor URI

export SPARK_EXECUTOR_URI=hdfs://localhost:9000/scalada/spark-1.4.1-bin-hadoop2.6.tgz

Also, let's upload the dataset to HDFS:

./bin/hadoop fs -mkdir /scalada ./bin/hadoop fs -put /Users/Gabriel/Apps/SMSSpamCollection /scalada/

There is one thing that we need to do before running the program itself— configure the location of the libmesos native library. This file can be found in the /usr/local/lib folder as libmesos.so or libmesos.dylib, depending on your operating system:

export MESOS_NATIVE_JAVA_LIBRARY=/usr/local/lib/libmesos-0.22.1.dylib

Now, let's use cd to enter the Spark installation directory, and then run the job:

cd /Users/Gabriel/Apps/spark-1.4.1-bin-hadoop2.6 export MESOS_NATIVE_JAVA_LIBRARY=/usr/local/lib/libmesos-0.22.1.dylib ./bin/spark-submit --class com.packt.scalada.learning.BinaryClassificationSpamMesos --master mesos://localhost:5050 --executor-memory 2G --total-executor-cores 2 <REPO_FOLDER>/chapter6-scalingup/target/scala-2.10/scalada-learning-assembly.jar

As you can see in the following screenshot, the tasks run fine on this single-worker-node cluster:

The next screenshot shows the list of tasks that are already completed: