Even though this is the last chapter of the book, it can hardly be an afterthought even though monitoring in general often is in practical situations, quite unfortunately. Monitoring is a vital deployment component for any long execution cycle component and thus is part of the finished product. Monitoring can significantly enhance product experience and define future success as it improves problem diagnostic and is essential to determine the improvement path.

One of the primary rules of successful software engineering is to create systems as if they were targeted for personal use when possible, which fully applies to monitoring, diagnostic, and debugging—quite hapless name for fixing existing issues in software products. Diagnostic and debugging of complex systems, particularly distributed systems, is hard, as the events often can be arbitrary interleaved and program executions subject to race conditions. While there is a lot of research going in the area of distributed system devops and maintainability, this chapter will scratch the service and provide guiding principle to design a maintainable complex distributed system.

To start with, a pure functional approach, which Scala claims to follow, spends a lot of time avoiding side effects. While this idea is useful in a number of aspects, it is hard to imagine a useful program that has no effect on the outside world, the whole idea of a data-driven application is to have a positive effect on the way the business is conducted, a well-defined side effect.

Monitoring clearly falls in the side effect category. Execution needs to leave a trace that the user can later parse in order to understand where the design or implementation went awry. The trace of the execution can be left by either writing something on a console or into a file, usually called a log, or returning an object that contains the trace of the program execution, and the intermediate results. The latter approach, which is actually more in line with functional programming and monadic philosophy, is actually more appropriate for the distributed programming but often overlooked. This would have been an interesting topic for research, but unfortunately the space is limited and I have to discuss the practical aspects of monitoring in contemporary systems that is almost always done by logging. Having the monadic approach of carrying an object with the execution trace on each call can certainly increase the overhead of the interprocess or inter-machine communication, but saves a lot of time in stitching different pieces of information together.

Let's list the naive approaches to debugging that everyone who needed to find a bug in the code tried:

- Analyzing program output, particularly logs produced by simple print statements or built-in logback, java.util.logging, log4j, or the slf4j façade

- Attaching a (remote) debugger

- Monitoring CPU, disk I/O, memory (to resolve higher level resource-utilization issues)

More or less, all these approaches fail if we have a multithreaded or distributed system—and Scala is inherently multithreaded as Spark is inherently distributed. Collecting logs over a set of nodes is not scalable (even though a few successful commercial systems exist that do this). Attaching a remote debugger is not always possible due to security and network restrictions. Remote debugging can also induce substantial overhead and interfere with the program execution, particularly for ones that use synchronization. Setting the debug level to the DEBUG or TRACE level helps sometimes, but leaves you at the mercy of the developer who may or may not have thought of a particular corner case you are dealing with right at the moment. The approach we take in this book is to open a servlet with enough information to glean into program execution and application methods real-time, as much as it is possible with the current state of Scala and Scalatra.

Enough about the overall issues of debugging the program execution. Monitoring is somewhat different, as it is concerned with only high-level issue identification. Intersection with issue investigation or resolution happens, but usually is outside of monitoring. In this chapter, we will cover the following topics:

- Understanding major areas for monitoring and monitoring goals

- Learning OS tools for Scala/Java monitoring to support issue identification and debugging

- Learning about MBeans and MXBeans

- Understanding model performance drift

- Understanding A/B testing

While there are other types of monitoring dealing specifically with ML-targeted tasks, such as monitoring the performance of the models, let me start with basic system monitoring. Traditionally, system monitoring is a subject of operating system maintenance, but it is becoming a vital component of any complex application, specifically running over a set of distributed workstations. The primary components of the OS are CPU, disk, memory, network, and energy on battery-powered machines. The traditional OS-like tools for monitoring system performance are provided in the following table. We limit them to Linux tools as this is the platform for most Scala applications, even though other OS vendors provide OS monitoring tools such as Activity Monitor. As Scala runs in Java JVM, I also added Java-specific monitoring tools that are specific to JVMs:

Table 10.1. Common Linux OS monitoring tools

In many cases, the tools are redundant. For example, the CPU and memory information can be obtained with top, sar, and jmc commands.

There are a few tools for collecting this information over a set of distributed nodes. Ganglia is a BSD-licensed scalable distributed monitoring system (http://ganglia.info). It is based on a hierarchical design and is very careful about data structure and algorithm designs. It is known to scale to 10,000s of nodes. It consists of a gmetad daemon that is collects information from multiple hosts and presents it in a web interface, and gmond daemons running on each individual host. The communication happens on the 8649 port by default, which spells Unix. By default, gmond sends information about CPU, memory, and network, but multiple plugins exist for other metrics (or can be created). Gmetad can aggregate the information and pass it up the hierarchy chain to another gmetad daemon. Finally, the data is presented in a Ganglia web interface.

Graphite is another monitoring tool that stores numeric time-series data and renders graphs of this data on demand. The web app provides a /render endpoint to generate graphs and retrieve raw data via a RESTful API. Graphite has a pluggable backend (although it has it's own default implementation). Most of the modern metrics implementations, including scala-metrics used in this chapter, support sending data to Graphite.

The tools described in the previous section are not application-specific. For a long-running process, it often necessary to provide information about the internal state to either a monitoring a graphing solution such as Ganglia or Graphite, or just display it in a servlet. Most of these solutions are read-only, but in some cases, the commands give the control to the users to modify the state, such as log levels, or to trigger garbage collection.

Monitoring, in general is supposed to do the following:

- Provide high-level information about program execution and application-specific metrics

- Potentially, perform health-checks for critical components

- Might incorporate alerting and thresholding on some critical metrics

I have also seen monitoring to include update operations to either update the logging parameters or test components, such as trigger model scoring with predefined parameters. The latter can be considered as a part of parameterized health check.

Let's see how it works on the example of a simple Hello World web application that accepts REST-like requests and assigns a unique ID for different users written in the Scalatra framework (http://scalatra.org), a lightweight web-application development framework in Scala. The application is supposed to respond to CRUD HTTP requests to create a unique numeric ID for a user. To implement the service in Scalatra, we need just to provide a Scalate template. The full documentation can be found at http://scalatra.org/2.4/guides/views/scalate.html, the source code is provided with the book and can be found in chapter10 subdirectory:

class SimpleServlet extends Servlet {

val logger = LoggerFactory.getLogger(getClass)

var hwCounter: Long = 0L

val hwLookup: scala.collection.mutable.Map[String,Long] = scala.collection.mutable.Map()

val defaultName = "Stranger"

def response(name: String, id: Long) = { "Hello %s! Your id should be %d.".format(if (name.length > 0) name else defaultName, id) }

get("/hw/:name") {

val name = params("name")

val startTime = System.nanoTime

val retVal = response(name, synchronized { hwLookup.get(name) match { case Some(id) => id; case _ => hwLookup += name -> { hwCounter += 1; hwCounter } ; hwCounter } } )

logger.info("It took [" + name + "] " + (System.nanoTime - startTime) + " " + TimeUnit.NANOSECONDS)

retVal

}

}First, the code gets the name parameter from the request (REST-like parameter parsing is also supported). Then, it checks the internal HashMap for existing entries, and if the entry does not exist, it creates a new index using a synchronized call to increment hwCounter (in a real-world application, this information should be persistent in a database such as HBase, but I'll skip this layer in this section for the purpose of simplicity). To run the application, one needs to download the code, start sbt, and type ~;jetty:stop;jetty:start to enable continuous run/compilation as in Chapter 7, Working with Graph Algorithms. The modifications to the file will be immediately picked up by the build tool and the jetty server will restart:

[akozlov@Alexanders-MacBook-Pro chapter10]$ sbt [info] Loading project definition from /Users/akozlov/Src/Book/ml-in-scala/chapter10/project [info] Compiling 1 Scala source to /Users/akozlov/Src/Book/ml-in-scala/chapter10/project/target/scala-2.10/sbt-0.13/classes... [info] Set current project to Advanced Model Monitoring (in build file:/Users/akozlov/Src/Book/ml-in-scala/chapter10/) > ~;jetty:stop;jetty:start [success] Total time: 0 s, completed May 15, 2016 12:08:31 PM [info] Compiling Templates in Template Directory: /Users/akozlov/Src/Book/ml-in-scala/chapter10/src/main/webapp/WEB-INF/templates SLF4J: Failed to load class "org.slf4j.impl.StaticLoggerBinder". SLF4J: Defaulting to no-operation (NOP) logger implementation SLF4J: See http://www.slf4j.org/codes.html#StaticLoggerBinder for further details. [info] starting server ... [success] Total time: 1 s, completed May 15, 2016 12:08:32 PM 1. Waiting for source changes... (press enter to interrupt) 2016-05-15 12:08:32.578:INFO::main: Logging initialized @119ms 2016-05-15 12:08:32.586:INFO:oejr.Runner:main: Runner 2016-05-15 12:08:32.666:INFO:oejs.Server:main: jetty-9.2.1.v20140609 2016-05-15 12:08:34.650:WARN:oeja.AnnotationConfiguration:main: ServletContainerInitializers: detected. Class hierarchy: empty 2016-15-05 12:08:34.921: [main] INFO o.scalatra.servlet.ScalatraListener - The cycle class name from the config: ScalatraBootstrap 2016-15-05 12:08:34.973: [main] INFO o.scalatra.servlet.ScalatraListener - Initializing life cycle class: ScalatraBootstrap 2016-15-05 12:08:35.213: [main] INFO o.f.s.servlet.ServletTemplateEngine - Scalate template engine using working directory: /var/folders/p1/y7ygx_4507q34vhd60q115p80000gn/T/scalate-6339535024071976693-workdir 2016-05-15 12:08:35.216:INFO:oejsh.ContextHandler:main: Started o.e.j.w.WebAppContext@1ef7fe8e{/,file:/Users/akozlov/Src/Book/ml-in-scala/chapter10/target/webapp/,AVAILABLE}{file:/Users/akozlov/Src/Book/ml-in-scala/chapter10/target/webapp/} 2016-05-15 12:08:35.216:WARN:oejsh.RequestLogHandler:main: !RequestLog 2016-05-15 12:08:35.237:INFO:oejs.ServerConnector:main: Started ServerConnector@68df9280{HTTP/1.1}{0.0.0.0:8080} 2016-05-15 12:08:35.237:INFO:oejs.Server:main: Started @2795ms2016-15-05 12:03:52.385: [main] INFO o.f.s.servlet.ServletTemplateEngine - Scalate template engine using working directory: /var/folders/p1/y7ygx_4507q34vhd60q115p80000gn/T/scalate-3504767079718792844-workdir 2016-05-15 12:03:52.387:INFO:oejsh.ContextHandler:main: Started o.e.j.w.WebAppContext@1ef7fe8e{/,file:/Users/akozlov/Src/Book/ml-in-scala/chapter10/target/webapp/,AVAILABLE}{file:/Users/akozlov/Src/Book/ml-in-scala/chapter10/target/webapp/} 2016-05-15 12:03:52.388:WARN:oejsh.RequestLogHandler:main: !RequestLog 2016-05-15 12:03:52.408:INFO:oejs.ServerConnector:main: Started ServerConnector@68df9280{HTTP/1.1}{0.0.0.0:8080} 2016-05-15 12:03:52.408:INFO:oejs.Server:main: Started @2796mss

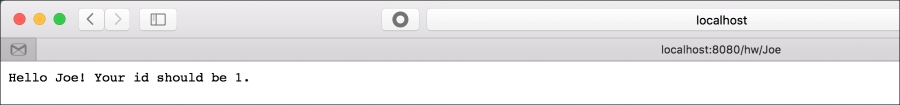

When the servlet is started on port 8080, issue a browser request:

Tip

I pre-created the project for this book, but if you want to create a Scalatra project from scratch, there is a gitter command in chapter10/bin/create_project.sh. Gitter will create a project/build.scala file with a Scala object, extending build that will set project parameters and enable the Jetty plugin for the SBT.

http://localhost:8080/hw/Joe.

The output should look similar to the following screenshot:

Figure 10-1: The servlet web page.

If you call the servlet with a different name, it will assign a distinct ID, which will be persistent across the lifetime of the application.

As we also enabled console logging, you will also see something similar to the following command on the console:

2016-15-05 13:10:06.240: [qtp1747585824-26] INFO o.a.examples.ServletWithMetrics - It took [Joe] 133225 NANOSECONDS

While retrieving and analyzing logs, which can be redirected to a file, is an option and there are multiple systems to collect, search, and analyze logs from a set of distributed servers, it is often also important to have a simple way to introspect the running code. One way to accomplish this is to create a separate template with metrics, however, Scalatra provides metrics and health support to enable basic implementations for counts, histograms, rates, and so on.

I will use the Scalatra metrics support. The ScalatraBootstrap class has to implement the MetricsBootstrap trait. The org.scalatra.metrics.MetricsSupport and org.scalatra.metrics.HealthChecksSupport traits provide templating similar to the Scalate templates, as shown in the following code.

The following is the content of the ScalatraTemplate.scala file:

import org.akozlov.examples._

import javax.servlet.ServletContext

import org.scalatra.LifeCycle

import org.scalatra.metrics.MetricsSupportExtensions._

import org.scalatra.metrics._

class ScalatraBootstrap extends LifeCycle with MetricsBootstrap {

override def init(context: ServletContext) = {

context.mount(new ServletWithMetrics, "/")

context.mountMetricsAdminServlet("/admin")

context.mountHealthCheckServlet("/health")

context.installInstrumentedFilter("/*")

}

}The following is the content of the ServletWithMetrics.scala file:

package org.akozlov.examples

import org.scalatra._

import scalate.ScalateSupport

import org.scalatra.ScalatraServlet

import org.scalatra.metrics.{MetricsSupport, HealthChecksSupport}

import java.util.concurrent.atomic.AtomicLong

import java.util.concurrent.TimeUnit

import org.slf4j.{Logger, LoggerFactory}

class ServletWithMetrics extends Servlet with MetricsSupport with HealthChecksSupport {

val logger = LoggerFactory.getLogger(getClass)

val defaultName = "Stranger"

var hwCounter: Long = 0L

val hwLookup: scala.collection.mutable.Map[String,Long] = scala.collection.mutable.Map() val hist = histogram("histogram")

val cnt = counter("counter")

val m = meter("meter")

healthCheck("response", unhealthyMessage = "Ouch!") { response("Alex", 2) contains "Alex" }

def response(name: String, id: Long) = { "Hello %s! Your id should be %d.".format(if (name.length > 0) name else defaultName, id) }

get("/hw/:name") {

cnt += 1

val name = params("name")

hist += name.length

val startTime = System.nanoTime

val retVal = response(name, synchronized { hwLookup.get(name) match { case Some(id) => id; case _ => hwLookup += name -> { hwCounter += 1; hwCounter } ; hwCounter } } )s

val elapsedTime = System.nanoTime - startTime

logger.info("It took [" + name + "] " + elapsedTime + " " + TimeUnit.NANOSECONDS)

m.mark(1)

retVal

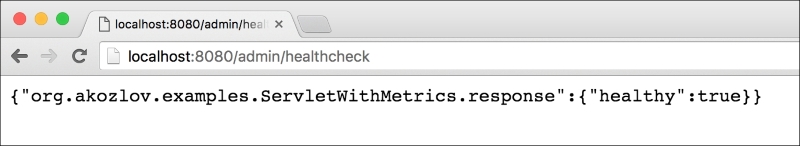

}If you run the server again, the http://localhost:8080/admin page will show a set of links for operational information, as shown in the following screenshot:

Figure 10-2: The admin servlet web page

The Metrics link will lead to the metrics servlet depicted in Figure 10-3. The org.akozlov.exampes.ServletWithMetrics.counter will have a global count of requests, and org.akozlov.exampes.ServletWithMetrics.histogram will show the distribution of accumulated values, in this case, the name lengths. More importantly, it will compute 50, 75, 95, 98, 99, and 99.9 percentiles. The meter counter will show rates for the last 1, 5, and 15 minutes:

Figure 10-3: The metrics servlet web page

Finally, one can write health checks. In this case, I will just check whether the result of the response function contains the string that it has been passed as a parameter. Refer to the following Figure 10.4:

Figure 10-4: The health check servlet web page.

The metrics can be configured to report to Ganglia or Graphite data collection servers or periodically dump information into a log file.

Endpoints do not have to be read-only. One of the pre-configured components is the timer, which measures the time to complete a task—which can be used for measuring scoring performance. Let's put the code in the ServletWithMetrics class:

get("/time") {

val sleepTime = scala.util.Random.nextInt(1000)

val startTime = System.nanoTime

timer("timer") {

Thread.sleep(sleepTime)

Thread.sleep(sleepTime)

Thread.sleep(sleepTime)

}

logger.info("It took [" + sleepTime + "] " + (System.nanoTime - startTime) + " " + TimeUnit.NANOSECONDS)

m.mark(1)

}Accessing http://localhost:8080/time will trigger code execution, which will be timed with a timer in metrics.

Analogously, the put operation, which can be created with the put() template, can be used to either adjust the run-time parameters or execute the code in-situ—which, depending on the code, might need to be secured in production environments.

Note

JSR 110

JSR 110 is another Java Specification Request (JSR), commonly known as Java Management Extensions (JMX). JSR 110 specifies a number of APIs and protocols in order to be able to monitor the JVM executions remotely. A common way to access JMX Services is via the jconsole command that will connect to one of the local processes by default. To connect to a remote host, you need to provide the -Dcom.sun.management.jmxremote.port=portNum property on the Java command line. It is also advisable to enable security (SSL or password-based authentication). In practice, other monitoring tools use JMX for monitoring, as well as managing the JVM, as JMX allows callbacks to manage the system state.

You can provide your own metrics that are exposed via JMX. While Scala runs in JVM, the implementation of JMX (via MBeans) is very Java-specific, and it is not clear how well the mechanism will play with Scala. JMX Beans can certainly be exposed as a servlet in Scala though.

The JMX MBeans can usually be examined in JConsole, but we can also expose it as /jmx servlet, the code provided in the book repository (https://github.com/alexvk/ml-in-scala).

We have covered basic system and application metrics. Lately, a new direction evolved for using monitoring components to monitor statistical model performance. The statistical model performance covers the following:

- How the model performance evolved over time

- When is the time to retire the model

- Model health check

ML models deteriorate with time, or 'age': While this process is not still well understood, the model performance tends to change with time, if even due to concept drift, where the definition of the attributes change, or the changes in the underlying dependencies. Unfortunately, model performance rarely improves, at least in my practice. Thus, it is imperative to keep track of models. One way to do this is by monitoring the metrics that the model is intended to optimize, as in many cases, we do not have a ready-labeled set of data.

In many cases, the model performance deterioration is not related directly to the quality of the statistical modeling, even though simpler models such as linear and logistic regression tend to be more stable than more complex models such as decision trees. Schema evolution or unnoticed renaming of attributes may cause the model to not perform well.

Part of model monitoring should be running the health check, where a model periodically scores either a few records or a known scored set of data.

A very common case in practical deployments is that data scientists come with better sets of models every few weeks. However, if this does not happen, one needs come up with a set of criteria to retire a model. As real-world traffic rarely comes with the scored data, for example, the data that is already scored, the usual way to measure model performance is via a proxy, which is the metric that the model is supposed to improve.

A/B testing is a specific case of controlled experiment in e-commerce setting. A/B testing is usually applied to versions of a web page where we direct completely independent subset of users to each of the versions. The dependent variable to test is usually the response rate. Unless any specific information is available about users, and in many cases, it is not unless a cookie is placed in the computer, the split is random. Often the split is based on unique userID, but this is known not to work too well across multiple devices. A/B testing is subject to the same assumptions the controlled experiments are subject to: the tests should be completely independent and the distribution of the dependent variable should be i.i.d.. Even though it is hard to imagine that all people are truly i.i.d., the A/B test has been shown to work for practical problems.

In modeling, we split the traffic to be scored into two or multiple channels to be scored by two or multiple models. Further, we need to measure the cumulative performance metric for each of the channels together with estimated variance. Usually, one of the models is treated as a baseline and is associated with the null hypothesis, and for the rest of the models, we run a t-test, comparing the ratio of the difference to the standard deviation.