Until now, all that we have done is develop microservices and discuss the frameworks around the process of building software components. Now it is time to test all of them. Testing is the activity of validating the software that has been built, and validating is a very broad term. In this chapter, we are going to learn how to test microservices, not only from the functional point of view, but also in terms of performance and other aspects such as integration with different modules. We will also build a proxy using Node.js to help us inspect the inputs and outputs of our services, so that we can validate that what we have designed is actually happening and, once again, confirm the versatility of a language such as JavaScript for quickly prototyping features.

It is also a trend nowadays to release features with an A/B test, where we only enable the features for certain types of users and then collect metrics to see how the changes to our system are performing. In this chapter, we will build a microservice that gives us the capability of rolling out features in a controlled way.

We are also going to document our application, which is unfortunately a forgotten activity in traditional software development: I haven't found a single company where the documentation captures 100% of the information needed by new developers.

We will cover the following topics in this chapter:

- Functional testing: In this section, we will learn how to test microservices and what a good testing strategy is. We will also study a tool called Postman to manually test our APIs, and build a proxy with Node.js to spy on our connections.

- Documenting microservices: We will learn how to use Swagger to document our microservices using the OpenAPI standard. We will also generate the code from the YAML definition using an open source tool.

Testing is usually a time-consuming activity that does not get all the attention it requires while building software.

Think about how a company evolves:

- Someone comes up with an idea.

- A few engineers/product people build the system.

- The company goes to market.

There is no time for more than the minimum required manual testing, especially when someone reads on the Internet that testing done right could take up to 40% of your development time, and once again, common sense fails.

Automation is good and unit, integration, and end-to-end tests are a form of automation. By letting a computer test our software, we are drastically cutting down the human effort required to validate our software.

Think about how the software is developed. Even though our company likes to claim that we are agile, the truth is that every single software project has some level of iterative development, and testing is a part of every cycle, but generally, it is overlooked in favour of delivering new features.

By automating the majority (or a big chunk) of the testing, we are saving money, as shown in the following diagram:

Costs and Iterations

Testing is actually a cost saver if it is done right, and the key is doing it right, which is not always easy. How much testing is too much testing? Should we cover every single corner of our application? Do we really need deep performance testing?

These questions usually lead to a different stream of opinions, and the interesting thing is that there is not a single source of truth. It depends on the nature of your system.

In this chapter, we are going to learn a set of extensive testing techniques, which does not mean that we should be including all of them in our test plan, but at least we will be aware of the testing methodologies.

In the past seven years, Ruby on Rails has created a massive trend towards a new paradigm called test-driven development (TDD), to the point that, nowadays, the majority of new development platforms are built with TDD in mind.

Personally, I am not a fierce adopter of TDD, but I like to take the good parts. Planning the test before the development helps to create modules with the right level of cohesion and define a clear and easy-to-test interface. In this chapter, we won't cover TDD in depth, but we will mention it a few times and explain how to apply the techniques presented here to a TDD test plan.

How to lay down your testing plan is a tricky question. No matter what you do, you will always end up with the sensation that this is completely wrong.

Before diving in, let's define the different types of tests that we are going to be dealing with from the functional point of view, and what they should be designed for.

A unit test is a test that covers individual parts of the application without taking into account the integration with different modules. It is also called white box testing as the aim is to cover and verify as many branches as possible.

Generally, the quality of our tests is measured by the test coverage, expressed as a percentage. If our code spans ten branches and our tests cover seven of them, our code coverage is 70%. This is a good indication of how thoroughly our code is tested. However, it can be misleading, as the tests could be flawed or, even though all the branches are tested, a different input could cause a different output that wasn't captured by a test.
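To illustrate how a coverage figure can mislead, here is a minimal sketch. The applyDiscount function and its inputs are hypothetical, and Node's built-in assert module is used only so that the snippet runs without installing anything. Both branches are exercised, so branch coverage is 100%, yet an input that no test tried still produces a questionable result:

```javascript
// Hypothetical function with exactly two branches.
function applyDiscount(price, isMember) {
  if (isMember) {
    return price - (price * 10) / 100; // members get 10% off
  }
  return price;
}

function runCoverageDemo() {
  var assert = require('assert');

  // These two checks hit both branches: 100% branch coverage.
  assert.strictEqual(applyDiscount(100, true), 90);
  assert.strictEqual(applyDiscount(100, false), 100);

  // No test covers a negative price, so this behavior goes unnoticed:
  // the "discount" moves a negative price closer to zero.
  return applyDiscount(-100, true);
}

console.log(runCoverageDemo()); // -90
```

Whether a negative price should be rejected is a business decision; the point is only that the coverage percentage says nothing about it.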

In unit tests, as we don't interact with other modules, we will make heavy use of mocks and stubs in order to simulate responses from third-party systems and control the flow to hit the desired branch.
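As a minimal sketch of the idea, without any mocking library: the notifyUser function and the gateway object below are hypothetical names. The real gateway is replaced by a stub that returns a canned response, so the test can steer the code into the failure branch without contacting any third party:

```javascript
// Hypothetical unit under test: it depends on an SMS gateway that is
// passed in as an argument, so a test can replace it.
function notifyUser(gateway, phone, text) {
  var result = gateway.send(phone, text);
  if (result.status !== 'sent') {
    return 'retry-later'; // failure branch
  }
  return 'ok'; // success branch
}

// Hand-written stub: returns a canned status and records its calls.
function createGatewayStub(status) {
  var stub = {
    calls: [],
    send: function (phone, text) {
      stub.calls.push({ phone: phone, text: text });
      return { status: status };
    }
  };
  return stub;
}

var failingGateway = createGatewayStub('rejected');
console.log(notifyUser(failingGateway, '555-0100', 'Hello')); // retry-later
console.log(failingGateway.calls.length); // 1
```

Passing the dependency in as an argument is one possible design; libraries such as Sinon.JS, covered later in this chapter, can also replace methods on existing objects.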

Integration tests, as the name suggests, are the tests designed to verify the integration of our module in the application environment. They are not designed to test the branches of our code, but business units, where we will be saving the data into databases, calling third-party web services or other microservices of our architecture.

These tests are the perfect tool for checking whether our service behaves as expected, although, more often than not, they can be hard to maintain.

During my years of experience, I haven't found a company where the integration testing is done right and there are a number of reasons for this, as stated in the following list:

- Some companies think that integration testing is expensive (and it is true) as it requires extra resources (such as databases and extra machines)

- Some other companies try to cover all the business cases just with unit testing, which depending on the business cases, could work, but it is far from ideal as unit tests make assumptions (mocks) that could give us a false confidence in our test suite

- Sometimes, integration tests are used to verify the code branches as if they were unit tests, which is time consuming as you need to set up the environment to make the integration test hit the required branch

No matter how smart you want to be, integration testing is something that you want to do right, as it is the first real barrier in our software to prevent integration bugs from being released into production.

Here, we will demonstrate that our application actually works. In an integration test, we are invoking the services at code level. This means that we need to build the context of the service and then issue the call.

The difference with end-to-end testing is that, in end-to-end testing, we actually fully deploy our application and issue the required calls to execute the target code. However, engineers often decide to bundle both types of tests (integration and end-to-end tests) together, as modern frameworks allow us to quickly run E2E tests as if they were integration tests.

As with integration tests, the target of end-to-end tests is not to test all the paths of the application, but to test the use cases.

In end-to-end tests, we can find a few different modalities (paradigms) of testing, as follows:

- We can test our API issuing JSON requests (or other type of requests)

- We can test our UI using Selenium to emulate clicks on the DOM

- We can use a new paradigm called behavior-driven development (BDD) testing, where the use cases are mapped into actions in our application (clicks on the UI, requests in the API, and so on) and execute the use cases for which the application was built

End-to-end tests are usually very fragile and they break fairly easily. Depending on our application, we might relax about these tests, as their value relative to their cost is pretty low, but I would still recommend having some of them covering at least the most basic and essential flows.

Questions such as the following are not easy to answer, especially in fast paced businesses, like startups:

- Do we have too many integration tests?

- Should we aim for 100% unit test coverage?

- Why bother with Selenium tests if they break every second day for no reason?

There is always a compromise between test coverage and time consumed, and there is no single, simple answer to these questions.

The only useful guideline that I've found over the years is what the testing world calls the pyramid of testing, which is shown in the following figure. Think for a moment about the projects you have worked on before: how many tests did you have in total? What percentage of these were integration tests and unit tests? What about end-to-end tests?

The pyramid of testing

The preceding pyramid shows the answers to these questions. In a healthy test plan, we should have a lot of unit tests, some integration tests, and very few E2E tests.

The reason for this is very simple: the majority of problems can be caught by unit testing. Hitting the different branches of our code will verify the functionality of pretty much every functional case in our application, so it makes sense to have plenty of them in our test plan. Based on my experience, in a balanced test plan, around 70% of our tests should be unit tests. However, in a microservices-oriented architecture, especially with a dynamic platform such as Node.js, this figure can easily go down while our testing remains effective. The reasoning behind this is that Node.js allows us to write integration tests very quickly, so we can replace some unit tests with integration tests.

Integration tests are responsible for catching integration problems, as shown in the following:

- Can our code call the SMS gateway?

- Would the connection to the database be OK?

- Are the HTTP headers being sent from our service?

Again, based on my experience, around 20% of our tests should be integration tests, focusing on the positive flows and some of the negative flows that depend on third-party modules.

When it comes down to E2E tests, they should be very limited and only test the main flows of the application without going into too much detail. These details should already be captured by the unit and integration tests, which are easy to fix in the event of a failure. However, there is a catch here: when testing microservices in Node.js, 90% of the time, integration and E2E tests can be the same thing. Due to the dynamic nature of Node.js, we can test the REST API from the integration point of view (with the full server running), and in doing so we will also be testing how our code behaves when integrated with other modules. We will see an example later in this chapter.
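Ahead of the fuller example, the following is a minimal sketch of what "the full server running" can look like in a test, using only Node's built-in http module. The shout function, the /shout path, and the helper names are hypothetical and are not part of the chapter's service. The server is started on a free local port, a real HTTP request is issued against it, and the response body is handed back for verification:

```javascript
var http = require('http');

// Hypothetical business function of a tiny service.
function shout(text) {
  return String(text).toUpperCase();
}

// Builds the server without starting it, so a test controls its lifecycle.
function createServer() {
  return http.createServer(function (req, res) {
    var url = new URL(req.url, 'http://127.0.0.1');
    res.end(shout(url.searchParams.get('text')));
  });
}

// Starts the real server on a free port, issues a real HTTP request and
// hands the response body to the callback.
function callService(text, done) {
  var server = createServer();
  server.on('error', done);
  server.listen(0, '127.0.0.1', function () {
    var options = {
      host: '127.0.0.1',
      port: server.address().port,
      path: '/shout?text=' + encodeURIComponent(text),
      agent: false // one-off connection, so the server can close right away
    };
    http.get(options, function (res) {
      var body = '';
      res.setEncoding('utf8');
      res.on('data', function (chunk) { body += chunk; });
      res.on('end', function () {
        server.close();
        done(null, body);
      });
    }).on('error', function (err) {
      server.close();
      done(err);
    });
  });
}

callService('hello', function (err, body) {
  console.log(err ? 'request failed: ' + err.message : body);
});
```

Because the request travels through the HTTP layer and the business function in one go, the same check serves as both an integration test and an E2E test of this toy service.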

Node.js is an impressive platform. The number of libraries around any single aspect of development is amazing. No matter how bizarre the task that you want to achieve in Node.js is, there will always be an npm module for it.

Regarding the testing, Node.js has a very powerful set of libraries, but two of them are especially popular: Mocha and Chai.

They are pretty much the industry standard for app testing and are very well maintained and upgraded.

Another interesting library is called Sinon.JS, and it is used for mocking, spying and stubbing methods. We will come back to these concepts in the following sections, but this library is basically used to simulate integrations with third parties without interacting with them.

Chai is a BDD/TDD assertion library that can be used in conjunction with any other library to create high-quality tests.

An assertion is a code statement that will either be fulfilled or throw an error, stopping the test and marking it as a failure:

5 should be equal to A

The preceding statement will be correct when the variable A contains the value 5. This is a very powerful tool to write easy-to-understand tests, and especially with Chai, we have access to assertions making use of the following three different interfaces:

- should

- expect

- assert

At the end of the day, every single condition can be checked using a single interface, but the richness of the interfaces that the library provides makes it easier to write clean, expressive, and maintainable tests.
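To make the idea of an assertion concrete, here is a minimal sketch of what one does under the hood. The assertEqual helper is a hypothetical name and not part of Chai: it returns silently when the condition holds and throws when it does not, which is what a test runner relies on to mark a test as failed:

```javascript
// Hypothetical helper, not Chai's API: passes silently or throws.
function assertEqual(actual, expected) {
  if (actual !== expected) {
    throw new Error('expected ' + actual + ' to equal ' + expected);
  }
}

var A = 5;
assertEqual(5, A); // fulfilled: nothing happens

try {
  assertEqual(5, 6); // not fulfilled: an error is thrown
} catch (err) {
  console.log(err.message); // expected 5 to equal 6
}
```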

Let's install the library:

npm install chai

The output of this command shows that Chai depends on assertion-error, type-detect, and deep-eql. This is a good indication that we will be able to check complex statements, such as deep equality in objects or type matching, with simple instructions.

Testing libraries such as Chai are not a direct dependency of our application, but a development dependency. We need them to develop applications, but they should not be shipped to production. This is a good reason to restructure our package.json and add Chai to the devDependencies section, as follows:

{

"name": "chai-test",

"version": "1.0.0",

"description": "A test script",

"main": "chai.js",

"dependencies": {

},

"devDependencies": {

"chai": "*"

},

"author": "David Gonzalez",

"license": "ISC"

}

This prevents libraries such as Chai, which have nothing to do with the operation of our application, from being shipped to production.

Once we have installed Chai, we can start playing around with the interfaces.

Chai comes with two flavors of BDD interfaces. It is a matter of preference which one to use, but my personal recommendation is to use the one that makes you feel more comfortable in any situation.

Let's start with the should interface. This is a BDD-style interface: using something similar to natural language, we can create assertions that decide whether our test succeeds or fails:

myVar.should.be.a('string');

In order to be able to build sentences like the preceding one, we need to import the should module in our program:

var chai = require('chai');

chai.should();

var foo = "Hello world";

console.log(foo);

foo.should.equal('Hello world');

Although it looks like a bit of dark magic, it is really convenient when testing our code, as we use something similar to natural language to ensure that our code meets some criteria: foo should be equal to 'Hello world' has a direct translation to our test.

The second BDD-style interface provided by Chai is expect. Although it is very similar to should, it changes a bit of syntax in order to set expectations that the results have to meet.

Let's see the following example:

var expect = require('chai').expect;

var foo = "Hello world";

expect(foo).to.equal("Hello world");

As you can see, the style is very similar: a fluent interface that allows us to check whether the conditions for the test to succeed are met, but what happens if the conditions are not met?

Let's execute a simple Node.js program that fails in one of the conditions:

var expect = require('chai').expect;

var animals = ['cat', 'dog', 'parrot'];

expect(animals).to.have.length(4);

Now, let's execute the previous script, assuming that you have already installed Chai:

code/node_modules/chai/lib/chai/assertion.js:107

throw new AssertionError(msg, {

^

AssertionError: expected [ 'cat', 'dog', 'parrot' ] to have a length of 4 but got 3

at Object.<anonymous> (/Users/dgonzalez/Dropbox/Microservices with Node/Writing Bundle/Chapter 6/code/chai.js:24:25)

at Module._compile (module.js:460:26)

at Object.Module._extensions..js (module.js:478:10)

at Module.load (module.js:355:32)

at Function.Module._load (module.js:310:12)

at Function.Module.runMain (module.js:501:10)

at startup (node.js:129:16)

at node.js:814:3

An exception is thrown and the test fails. If all the conditions were validated, no exception would have been raised and the test would have succeeded.

As you can see, there are a number of natural language words that we can use for our tests using both expect and should interfaces. The full list can be found in the Chai documentation (http://chaijs.com/api/bdd/#-include-value-), but let's explain some of the most interesting ones in the following list:

- not: This word is used to negate the assertions following in the chain. For example, expect("some string").to.not.equal("Other String") will pass.

- deep: This word is one of the most interesting in the whole collection. It is used to deep-compare objects, which is the quickest way to carry out a full equality comparison. For example, expect(foo).to.deep.equal({name: "David"}) will succeed if foo is a JavaScript object with one property called name with the "David" string value.

- any/all: This is used to check whether the dictionary or object contains any of the keys in the given list, so that expect(foo).to.have.any.keys("name", "surname") will succeed if foo contains any of the given keys, and expect(foo).to.have.all.keys("name", "surname") will only succeed if it has all of the keys.

- ok: This is an interesting one. As you probably know, JavaScript has a few pitfalls, and one of them is the true/false evaluation of expressions. With ok, we can abstract all the mess and do something similar to the following list of expressions:

  - expect('everything').to.be.ok: 'everything' is a string and it will be evaluated to ok

  - expect(undefined).to.not.be.ok: undefined is not ok in the JavaScript world, so this assertion will succeed

- above: This is a very useful word to check whether an array or collection contains a number of elements above a certain threshold, as follows: expect([1,2,3]).to.have.length.above(2)

As you can see, the Chai API for fluent assertions is quite rich and enables us to write very descriptive tests that are easy to maintain.
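The semantics behind deep and ok can be sketched in plain JavaScript. This is an illustration that uses Node's built-in util.isDeepStrictEqual rather than Chai itself, and the isOk helper is a hypothetical name that mirrors JavaScript truthiness:

```javascript
var util = require('util');

var foo = { name: 'David' };

// Two distinct objects are never === even when their contents match...
console.log(foo === { name: 'David' }); // false
// ...which is why a deep comparison is needed.
console.log(util.isDeepStrictEqual(foo, { name: 'David' })); // true

// Hypothetical helper: "ok" boils down to JavaScript truthiness.
function isOk(value) {
  return Boolean(value);
}

console.log(isOk('everything')); // true
console.log(isOk(undefined));    // false
console.log(isOk(0));            // false, one of the classic pitfalls
console.log(isOk('0'));          // true, a non-empty string
```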

Now, you may be asking yourself why there are two flavors of the same interface that pretty much work the same. Functionally, they do the same thing; however, take a look at the detail:

- expect provides a starting point in your chainable language

- should extends the Object.prototype signature to add the chainable language to every single object in JavaScript

From Node.js' point of view, both of them are fine, although the fact that should is instrumenting the prototype of Object could be a reason to be a bit paranoid about using it as it is intrusive.
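To see why extending Object.prototype is considered intrusive, consider the following simplified sketch. It is not Chai's code: the must property and the installMust and uninstallMust functions are hypothetical names chosen for illustration. A getter defined on Object.prototype becomes visible on every value in the program, not only on the ones under test:

```javascript
// Simplified sketch, not Chai's code: add a "must" getter to every object.
function installMust() {
  Object.defineProperty(Object.prototype, 'must', {
    configurable: true, // allows uninstallMust() to remove it again
    get: function () {
      var actual = this.valueOf(); // unwrap boxed primitives such as strings
      return {
        equal: function (expected) {
          if (actual !== expected) {
            throw new Error('expected ' + actual + ' to equal ' + expected);
          }
        }
      };
    }
  });
}

function uninstallMust() {
  delete Object.prototype.must;
}

installMust();
'Hello world'.must.equal('Hello world'); // passes silently
console.log('must' in {}); // true: every object is now affected
uninstallMust();
console.log('must' in {}); // false
```

The expect style avoids this side effect because it wraps the value in a function call instead of modifying a shared prototype.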

The assert interface matches the most common old-fashioned test assertion libraries. In this flavor, we need to be specific about what we want to test, and there is no such thing as fluent chaining of expressions:

var assert = require('chai').assert;

var myStringVar = 'Here is my string';

// No message:

assert.typeOf(myStringVar, 'string');

// With message:

assert.typeOf(myStringVar, 'string', 'myStringVar is not string type.');

// Asserting on length:

assert.lengthOf(myStringVar, 17);

There is really nothing more to cover in depth if you have already used any of the existing test libraries in any language.

Mocha is, in my opinion, one of the most convenient testing frameworks that I have ever used in my professional life. It follows the principles of behavior-driven development (BDD) testing, where the test describes a use case of the application and uses the assertions from another library to verify the outcome of the executed code.

Although it sounds a bit complicated, it is really convenient for ensuring that our code is covered from the functional and technical points of view, as we mirror the requirements used to build the application in automated tests that verify them.

Let's start with a simple example. Mocha is a bit different from any other library, as it defines its own domain-specific language (DSL) that needs to be executed with Mocha instead of Node.js. In practice, it adds functions such as describe and it on top of the language.

First we need to install Mocha in the system:

npm install mocha -g

This will produce an output similar to the following image:

From now on, we have a new command in our system: mocha.

The next step is to write a test using Mocha:

function rollDice() {

return Math.floor(Math.random() * 6) + 1;

}

require('chai').should();

var expect = require('chai').expect;

describe('When a customer rolls a dice', function(){

it('should return an integer number', function() {

expect(rollDice()).to.be.an('number');

});

it('should get a number below 7', function(){

rollDice().should.be.below(7);

});

it('should get a number bigger than 0', function(){

rollDice().should.be.above(0);

});

it('should not be null', function() {

expect(rollDice()).to.not.be.null;

});

it('should not be undefined', function() {

expect(rollDice()).to.not.be.undefined;

});

});

The preceding example is simple: a function that rolls a dice and returns an integer number from 1 to 6. Now we need to think a bit about the use cases and the requirements:

- The number has to be an integer

- This integer has to be below 7

- It has to be above 0, as dice don't have negative numbers

- The function cannot return null

- The function cannot return undefined

This covers pretty much every corner case about rolling a dice in Node.js. What we are doing is describing situations that we certainly want to test, in order to safely make changes to the software without breaking the existing functionality.

These five use cases are an exact map to the tests written earlier:

- We describe the situation: When a customer rolls a dice

- Conditions get verified: It should return an integer number

Let's run the previous test and check the results:

mocha tests.js

This should return something similar to the following screenshot:

As you can see, Mocha returns a comprehensive report on what is going on in the tests. In this case, all of them pass, so we don't need to be worried about problems.
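The reason the file is run with the mocha command rather than with node is that describe and it are not defined by the test file itself; the runner provides them. The following toy sketch shows the underlying idea with hypothetical toyDescribe and toyIt functions. It is far simpler than Mocha, which also handles hooks, asynchronous tests, and reporters:

```javascript
// Toy runner, far simpler than Mocha: collects one report line per test.
var report = [];

function toyDescribe(title, body) {
  report.push(title);
  body(); // running the body executes the tests declared inside it
}

function toyIt(title, body) {
  try {
    body();
    report.push('  ok: ' + title);
  } catch (err) {
    report.push('  FAILED: ' + title + ' (' + err.message + ')');
  }
}

function rollDice() {
  return Math.floor(Math.random() * 6) + 1;
}

toyDescribe('When a customer rolls a dice', function () {
  toyIt('should get a number below 7', function () {
    if (!(rollDice() < 7)) { throw new Error('too high'); }
  });
  toyIt('should get a number below 1', function () {
    if (!(rollDice() < 1)) { throw new Error('too high'); } // always fails
  });
});

console.log(report.join('\n'));
```

A test passes when its body returns normally and fails when it throws, which is why any assertion library that throws on failure can be combined with the runner.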

Let's force some of the tests to fail:

function rollDice() {

return -1 * Math.floor(Math.random() * 6) + 1;

}

require('chai').should();

var expect = require('chai').expect;

describe('When a customer rolls a dice', function(){

it('should return an integer number', function() {

expect(rollDice()).to.be.an('number');

});

it('should get a number below 7', function(){

rollDice().should.be.below(7);

});

it('should get a number bigger than 0', function(){

rollDice().should.be.above(0);

});

it('should not be null', function() {

expect(rollDice()).to.not.be.null;

});

it('should not be undefined', function() {

expect(rollDice()).to.not.be.undefined;

});

});

Someone has accidentally introduced a code fragment into the rollDice() function, which makes it return a number that does not meet some of the requirements. Let's run Mocha again, as shown in the following image:

Now, we can see the report returning one error: the method is returning -4, where it should always return a number bigger than 0.

Also, one of the benefits of this type of testing in Node.js using Mocha and Chai is the time. Tests run very fast so that it is easy to receive feedback if we have broken something. The preceding suite ran in 10ms.

The previous two sections focused on asserting conditions on the return values of functions, but what happens when our function does not return any value? The only correct measurement is to check whether the method was called or not. Also, what if one of our modules is calling a third-party web service, but we don't want our tests to call the remote server?

For answering these questions, we have two conceptual tools called mocks and spies, and Node.js has the perfect library to implement them: Sinon.JS.

First install it, as follows:

npm install sinon

The preceding command should produce the following output:

Now let's explain how it works through an example:

function calculateHypotenuse(x, y, callback) {

callback(null, Math.sqrt(x*x + y*y));

}

calculateHypotenuse(3, 3, function(err, result){

console.log(result);

});

This simple script calculates the hypotenuse of a right triangle, given the lengths of the other two sides. One of the things that we want to test is that the callback is executed with the right list of arguments. What we need to accomplish this task is what Sinon.JS calls a spy:

var sinon = require('sinon');

require('chai').should();

function calculateHypotenuse(x, y, callback) {

callback(null, Math.sqrt(x*x + y*y));

}

describe("When the user calculates the hypotenuse", function(){

it("should execute the callback passed as argument", function() {

var callback = sinon.spy();

calculateHypotenuse(3, 3, callback);

callback.called.should.be.true;

});

});

Once again, we are using Mocha to run the script and Chai to verify the results in the test through the should interface, as shown in the following image:

The important line in the preceding script is:

var callback = sinon.spy();

Here, we are creating the spy and injecting it into the function as a callback. This function created by Sinon.JS is actually not only a function, but a full object with a few interesting points of information. Sinon.JS does that, taking advantage of the dynamic nature of JavaScript. You can actually see what is in this object by dumping it into the console with console.log().
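The idea of a function that is also an object carrying information can be sketched in a few lines. This is a simplified illustration and not Sinon.JS code: createSpy is a hypothetical helper, and the property names called, callCount, and args were chosen to mirror the ones that Sinon.JS spies expose:

```javascript
// Simplified spy, not Sinon.JS code: a function that records its calls.
function createSpy() {
  function spy() {
    spy.called = true;
    spy.callCount += 1;
    spy.args.push(Array.prototype.slice.call(arguments));
  }
  // Functions are objects in JavaScript, so they can carry properties.
  spy.called = false;
  spy.callCount = 0;
  spy.args = [];
  return spy;
}

function calculateHypotenuse(x, y, callback) {
  callback(null, Math.sqrt(x * x + y * y));
}

var callback = createSpy();
calculateHypotenuse(3, 4, callback);

console.log(callback.called);    // true
console.log(callback.callCount); // 1
console.log(callback.args[0]);   // [ null, 5 ]
```

With the recorded arguments available, a test can verify not only that the callback was executed, but also what it received.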

Another very powerful tool in Sinon.JS is the stub. Stubs are very similar to mocks (for practical purposes in JavaScript, they are identical) and allow us to fake functions to simulate the required return:

var sinon = require('sinon');

var expect = require('chai').expect;

function rollDice() {

return Math.floor(Math.random() * 6) + 1;

}

describe("When rollDice gets called", function() {

it("Math#random should be called with no arguments", function() {

sinon.stub(Math, "random");

rollDice();

expect(Math.random.calledWithExactly()).to.be.true;

});

});

In this case, we have stubbed the Math#random method, which replaces it with an empty function (the original implementation is not executed) that records stats on how it was called.

There is one catch in the preceding code: we never restored the random() method, and this is quite dangerous. It has a massive side effect, as other tests will see the Math#random method as a stub, not as the original one, and it can lead us to code our tests according to invalid information.

In order to prevent this, we need to make use of the before() and after() methods from Mocha:

var sinon = require('sinon');

var expect = require('chai').expect;

function rollDice() {

return Math.floor(Math.random() * 6) + 1;

}

describe("When rollDice gets called", function() {

it("Math#random should be called with no arguments", function() {

sinon.stub(Math, "random");

rollDice();

expect(Math.random.calledWithExactly()).to.be.true;

});

after(function(){

Math.random.restore();

});

});

If you look at the after() block, we are telling Sinon.JS to restore the original method that was stubbed inside one of the it blocks, so that if another describe block makes use of Math.random, it won't see the stub, but the original method.
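The same replace-and-restore discipline can be sketched without any library. The stubMethod helper below is a hypothetical name and not the Sinon.JS API; the try/finally block plays the role that the after() hook plays in Mocha, guaranteeing that the original method comes back even if the code under test throws:

```javascript
// Hypothetical helper: replace a method and return a handle to undo it.
function stubMethod(object, name, replacement) {
  var original = object[name];
  object[name] = replacement;
  return {
    restore: function () { object[name] = original; }
  };
}

function rollDice() {
  return Math.floor(Math.random() * 6) + 1;
}

var originalRandom = Math.random;
var stub = stubMethod(Math, 'random', function () { return 0.5; });
var rolled;
try {
  rolled = rollDice(); // deterministic: Math.floor(0.5 * 6) + 1
} finally {
  stub.restore(); // runs even if the code above throws
}

console.log(rolled); // 4
console.log(Math.random === originalRandom); // true
```

Making the stub return a fixed value also turns a random function into a deterministic one, which is often the main reason for stubbing Math.random in a test.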

Mocha has a few flavors of before and after:

- before(callback): This is executed before the current scope (at the beginning of the describe block in the preceding code)

- after(callback): This is executed after the current scope (at the end of the describe block in the preceding code)

- beforeEach(callback): This is executed at the beginning of every element in the current scope (before each it in the preceding example)

- afterEach(callback): This is executed at the end of every element in the current scope (after every it in the preceding example)

Another interesting feature in Sinon.JS is the time manipulation. Some of the tests need to execute periodic tasks or respond after a certain time of an event's occurrence. With Sinon.JS, we can dictate time as one of the parameters of our tests:

var sinon = require('sinon');

var expect = require('chai').expect;

function areWeThereYet(callback) {

setTimeout(function() {

callback.apply(this);

}, 10);

}

var clock;

before(function(){

clock = sinon.useFakeTimers();

});

it("callback gets called after 10ms", function () {

var callback = sinon.spy();

areWeThereYet(callback);

clock.tick(9);

expect(callback.notCalled).to.be.true;

clock.tick(1);

expect(callback.notCalled).to.be.false;

});

after(function(){

clock.restore();

});

Now, it is time to test a real microservice in order to get a general picture of the full test suite.

Our microservice is going to use Express, and it will filter an input text to remove what search engines call stop words: words with fewer than three characters and words that are banned.

Let's see the code:

var _ = require('lodash');

var express = require('express');

var bannedWords = ["kitten", "puppy", "parrot"];

function removeStopWords (text, callback) {

var words = text.split(' ');

var validWords = [];

_(words).forEach(function(word, index) {

var addWord = true;

if (word.length < 3) {

addWord = false;

}

if(addWord && bannedWords.indexOf(word) > -1) {

addWord = false;

}

if (addWord) {

validWords.push(word);

}

// Last iteration:

if (index == (words.length - 1)) {

callback(null, validWords.join(" "));

}

});

}

var app = express();

app.get('/filter', function(req, res) {

removeStopWords(req.query.text, function(err, response){

res.send(response);

});

});

app.listen(3000, function() {

console.log("app started in port 3000");

});

As you can see, the service is pretty small, so it is the perfect example for explaining how to write unit, integration, and E2E tests. In this case, as we stated before, E2E and integration tests are going to be exactly the same, as testing the service through the REST API is equivalent to testing the system from the end-to-end point of view, while also checking how our component is integrated within the system. That said, if we were to add a UI, we would have to split integration tests from E2E tests in order to ensure the quality.

Our service is done and working. However, now we want to unit test it, but we find some problems:

Here TDD comes to the rescue; when writing software, we should always ask ourselves "how am I going to test this function?" It does not mean that we should modify our software with the specific purpose of testing, but if you are having problems while testing a part of your program, more than likely you should look into cohesion and coupling, as this is a good indication of problems. Let's take a look at the following file:

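var _ = require('lodash');

module.exports = function(options) {

// The banned words are injected through the options, which keeps the module easy to test

var bannedWords = [];

if (typeof options !== 'undefined') {

bannedWords = options.bannedWords || [];

}

// The returned function must not be named bannedWords, or it would shadow the list above

return function removeStopWords(text, callback) {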

var words = text.split(' ');

var validWords = [];

_(words).forEach(function(word, index) {

var addWord = true;

if (word.length < 3) {

addWord = false;

}

if(addWord && bannedWords.indexOf(word) > -1) {

addWord = false;

}

if (addWord) {

validWords.push(word);

}

// Last iteration:

if (index == (words.length - 1)) {

callback(null, validWords.join(" "));

}

});

}

};

This file is a module that, in my opinion, is highly reusable and has good cohesion:

- We can import it everywhere (even in a browser)

- The banned words can be injected when creating the module (very useful for testing)

- It is not tangled with the application code

Laying down the code this way, our application module will look similar to the following:

var express = require('express');

var removeStopWords = require('./remove-stop-words')({bannedWords: ["kitten", "puppy", "parrot"]});

var app = express();

app.get('/filter', function(req, res) {

// removeStopWords reports its result through a callback

removeStopWords(req.query.text, function(err, response) {

res.send(response);

});

});

app.listen(3000, function() {

console.log("app started in port 3000");

});As you can see, we have clearly separated the business unit (the function that captures the business logic) from the operational unit (the setup of the server).

As I mentioned before, I am not a big fan of writing the tests prior to the code; in my opinion, they should be written alongside the code, always keeping in mind the question mentioned before.

There seems to be a push in companies to adopt a TDD methodology, but it can lead to significant inefficiency, especially if the business requirements are unclear (as they are 90% of the time) and we face changes during the development process.

Now that our code is in better shape, we are going to unit test our function. We will use Mocha and Chai to accomplish this task:

    var removeStopWords = require('./remove-stop-words')({bannedWords: ["kitten", "parrot"]});
    var chai = require('chai');
    var expect = chai.expect;

    describe('When executing "removeStopWords"', function() {

      it('should remove words with less than 3 chars of length', function() {
        removeStopWords('my small list of words', function(err, response) {
          expect(response).to.equal("small list words");
        });
      });

      it('should remove extra white spaces', function() {
        removeStopWords('my small    list of     words', function(err, response) {
          expect(response).to.equal("small list words");
        });
      });

      it('should remove banned words', function() {
        removeStopWords('My kitten is sleeping', function(err, response) {
          expect(response).to.equal("sleeping");
        });
      });

      it('should not fail with null as input', function() {
        // A null text is expected to be treated as an empty one.
        removeStopWords(null, function(err, response) {
          expect(response).to.equal("");
        });
      });

      it('should fail if the input is not a string', function() {
        // expect(...).to.throw() replaces try/catch plus assert.fail(), where
        // the catch block would also swallow the error raised by assert.fail().
        expect(function() {
          removeStopWords(5, function(err, response) {});
        }).to.throw();
      });
    });

As you can see, we have covered pretty much every single case and branch inside our application, but how is our code coverage looking?

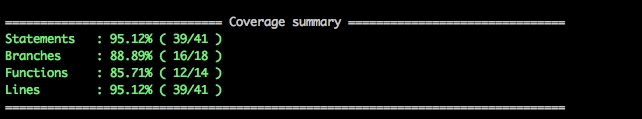

Until now, we have mentioned code coverage, but never actually measured it. We are going to use a tool called Istanbul to measure the test coverage:

npm install -g istanbul

This should install Istanbul. Now we need to run the coverage report:

istanbul cover _mocha my-tests.js

This will produce an output similar to the one shown in the following image:

This will also generate a coverage report in HTML, pointing out which lines, functions, branches, and statements are not being covered, as shown in the following screenshot:

As you can see, we are looking pretty good. Our code (not the tests) is actually well covered, especially if we look into the detailed report for our code file, as shown in the following image:

We can see that only one branch is not covered (the right-hand side of the || operator, which is used when the options carry no bannedWords), and that the if statement checking the options never took its implicit else path.
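To close those two gaps, the tests would need to create the module once without options and once with an options object that has no bannedWords property. A minimal sketch of the idea follows; readBannedWords is a hypothetical helper that mirrors only the option handling of the module, not the module itself.

```javascript
// Hypothetical helper reproducing only the option handling of the module.
function readBannedWords(options) {
  var bannedWords = [];
  if (typeof options !== 'undefined') {
    bannedWords = options.bannedWords || [];
  }
  return bannedWords;
}

// Path already exercised by the unit tests: options with a list.
console.log(readBannedWords({bannedWords: ['kitten']})); // [ 'kitten' ]

// Right-hand side of the || operator: options without a list.
console.log(readBannedWords({})); // []

// Implicit else of the if statement: no options at all.
console.log(readBannedWords()); // []
```

Adding the last two calls as test cases should be enough for the coverage tool to mark both branches as visited.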

We also get information about the number of times each line is executed: it is shown in the vertical bar beside the line number. This information is also very useful for spotting the hot areas of our application, where an optimization will benefit the most.

Regarding the right level of coverage, in this example, it is fairly easy to go up to 90%+, but unfortunately, it is not that easy in production systems:

- Code is a lot more complex

- Time is always a constraint

- Testing might not be seen as productive time

However, you should exercise caution when working with a dynamic language. In Java or C#, calling a function that does not exist results in a compile-time error, whereas in JavaScript it results in a runtime error. The only real barrier is testing (manual or automated), so it is good practice to ensure that every line is executed at least once. In general, code coverage over 75% should be good enough for the majority of cases.
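The following sketch shows why executing every line matters in JavaScript. The function and its misspelled method call are invented for illustration: the error only surfaces when the faulty branch actually runs.

```javascript
// Hypothetical function with a typo hidden in a rarely used branch.
function describeWord(word) {
  if (word.length < 3) {
    // Typo: strings have toUpperCase(), not toUpperCaze().
    return word.toUpperCaze();
  }
  return word;
}

// The common path works, so the file loads and runs without complaint.
console.log(describeWord('kitten')); // "kitten"

// Only a call that reaches the other branch exposes the problem.
var failure = null;
try {
  describeWord('my');
} catch (err) {
  failure = err;
}
console.log(failure instanceof TypeError); // true
```

A compiler for a statically typed language would have rejected the misspelled call; here, only a test that covers the short-word branch will catch it.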

In order to test our application end to end, we are going to need a server running it. Usually, end-to-end tests are executed against a controlled environment, such as a QA box or a pre-production machine, to verify that our about-to-be-deployed software is behaving as expected.

In this case, our application is an API, so we are going to create the end-to-end tests, which at the same time, are going to be used as integration tests.

However, in a full application, we might want to have a clear separation between the integration and end-to-end tests and use something like Selenium to test our application from the UI point of view.

Selenium is a framework that allows our code to send instructions to the browser, as follows:

- Click the button with the button1 ID

- Hover over the div element with the CSS class highlighted

In this way, we can ensure that our app flows work as expected, end to end, and our next release is not going to break the key flows of our app.

Let's focus on the end-to-end tests for our microservice. We have been using Chai and Mocha with their corresponding assertion interfaces to unit test our software, and Sinon.JS to mock service functions and other elements, either to avoid calls being propagated to third-party web services or to get a controlled response from a method.

Now, in our end-to-end test plan, we actually want to issue the calls to our service and get the response to validate the results.

The first thing we need to do is run our microservice somewhere. We are going to use our local machine just for convenience, but we can execute these tests in a continuous development environment against a QA machine.

So, let's start the server:

node stop-words.js

I call my script stop-words.js for convenience. Once the server is running, we are ready to start testing. In some situations, we might want our test to start and stop the server so that everything is self-contained. Let's see a small example about how to do it:

    var express = require('express');
    var myServer = express();

    myServer.get('/endpoint', function(req, res){
      res.send('endpoint reached');
    });

    var serverHandler;

    // Start the server before the first test runs...
    before(function(){
      serverHandler = myServer.listen(3000);
    });

    describe("When executing 'GET' into /endpoint", function(){
      it("should return 'endpoint reached'", function(){
        // Your test logic here. http://localhost:3000 is your server.
      });
    });

    // ...and stop it once the last test has finished.
    after(function(){
      serverHandler.close();
    });

As you can see, the listen() function of Express returns a handler to operate the server programmatically, so it is as simple as making use of the before() and after() functions to do the trick.
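The same start and stop idea works without a test runner. The sketch below uses only Node's built-in http module; the /endpoint response and the helper name checkEndpoint are illustrative assumptions, and port 0 asks the operating system for any free port so that the check cannot clash with a server already listening on port 3000.

```javascript
var http = require('http');

// Starts a throwaway server, issues one GET request, stops the server again
// and hands the response body to the callback.
function checkEndpoint(callback) {
  var server = http.createServer(function(req, res) {
    res.end('endpoint reached');
  });

  // Port 0 lets the operating system choose a free port.
  server.listen(0, '127.0.0.1', function() {
    var options = {
      host: '127.0.0.1',
      port: server.address().port,
      path: '/endpoint',
      agent: false // one-off connection, nothing is kept alive
    };
    http.get(options, function(res) {
      var body = '';
      res.setEncoding('utf8');
      res.on('data', function(chunk) { body += chunk; });
      res.on('end', function() {
        server.close(function() { callback(null, body); });
      });
    }).on('error', function(err) {
      server.close(function() { callback(err); });
    });
  });
}

checkEndpoint(function(err, body) {
  if (err) { throw err; }
  console.log(body); // "endpoint reached"
});
```

Keeping the server inside the helper means that every call gets a fresh instance, which is the same isolation that before() and after() provide in the Mocha version.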

In our example, we are going to assume that the server is running. In order to issue the requests to the server, we are going to use a library called chai-http.

The way to install it, as usual, is to execute npm install chai-http. Once it is finished, we can make use of this amazing library:

    var chai = require('chai');
    var chaiHttp = require('chai-http');
    var expect = chai.expect;

    chai.use(chaiHttp);

    describe("when we issue a 'GET' to /filter with text='aa bb ccccc'", function(){
      it("should return HTTP 200", function(done) {
        chai.request('http://localhost:3000')
          .get('/filter')
          // The first argument of the end() callback is the error, if any.
          .query({text: 'aa bb ccccc'}).end(function(err, res){
            expect(res.status).to.equal(200);
            done();
          });
      });
    });

    describe("when we issue a 'GET' to /filter with text='aa bb ccccc'", function(){
      it("should return 'ccccc'", function(done) {
        chai.request('http://localhost:3000')
          .get('/filter')
          .query({text: 'aa bb ccccc'}).end(function(err, res){
            expect(res.text).to.equal('ccccc');
            done();
          });
      });
    });

    describe("when we issue a 'GET' to /filter with text='aa bb cc'", function(){
      it("should return ''", function(done) {
        chai.request('http://localhost:3000')
          .get('/filter')
          .query({text: 'aa bb cc'}).end(function(err, res){
            expect(res.text).to.equal('');
            done();
          });
      });
    });

With these simple tests, we managed to test our server in a way that ensures that every single moving part of the application has been executed.

There is a particularity here that we didn't have before:

    it("should return 'ccccc'", function(done) {
      chai.request('http://localhost:3000')
        .get('/filter')
        .query({text: 'aa bb ccccc'}).end(function(err, res){
          expect(res.text).to.equal('ccccc');
          done();
        });
    });

If you take a look at the highlighted code, you can see a new callback called done. This callback has one mission: to prevent the test from finishing until it is called, so that the HTTP request has time to be executed and return the appropriate value. Remember, Node.js is asynchronous: there is no such thing as a thread being blocked until an operation finishes.
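To see why such a callback is needed, here is a minimal sketch that mimics the situation with a timer instead of an HTTP request. The events array and the fakeRequest function are invented for illustration; they are not part of Mocha or chai-http.

```javascript
var events = [];

// Stand-in for an HTTP call: the callback fires later, never immediately.
function fakeRequest(callback) {
  setTimeout(function() {
    callback(null, {status: 200});
  }, 10);
}

// Same shape as a Mocha test body that receives done.
function testBody(done) {
  fakeRequest(function(err, res) {
    events.push('response received: ' + res.status);
    done();
  });
  events.push('test function returned');
}

testBody(function done() {
  // By the time done() runs, testBody itself has long since returned.
  console.log(events);
});
```

The recorded order shows the function returning before the response arrives. A test runner that did not wait for done would consider the test finished at that point, before any assertion inside the callback had a chance to run.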

Other than that, we are using a new DSL introduced by chai-http to build GET requests.

This language allows us to build a large range of combinations; consider the following, for example:

    chai.request('http://mydomain.com')
      .post('/myform')
      .field('_method', 'put')
      .field('username', 'dgonzalez')
      .field('password', '123456').end(...)

In the preceding request, we are submitting a form that looks like a login, so that in the end() function, we can assert the return from the server.

There is an endless number of combinations to test our APIs with chai-http.

No matter how much effort we put into our automated testing, there will always be a number of manual tests executed.

Sometimes, we need to do it while we are developing our API, as we want to see the messages going from our client to the server; at other times, we just want to hit our endpoints with a pre-forged request to cause the software to execute as we expect.

In the first case, we are going to take advantage of Node.js and its dynamic nature to build a proxy that will sniff all the requests and log them to a terminal so that we can debug what is going on. This technique can be used to inspect the communication between two microservices and see what is going on without interrupting the flow.

In the second case, we are going to use software called Postman to issue requests against our server in a controlled way.

My first contact with Node.js was exactly due to this problem: two servers sending messages to each other, causing misbehavior without an apparent cause.

It is a very common problem that has many already-working solutions (man-in-the-middle proxies basically), but we are going to demonstrate how powerful Node.js is:

    var http = require('http');
    var httpProxy = require('http-proxy');

    var proxy = httpProxy.createProxyServer({});

    http.createServer(function(req, res) {
      // Log the request headers, then forward the request to the microservice.
      console.log(req.rawHeaders);
      proxy.web(req, res, { target: 'http://localhost:3000' });
    }).listen(4000);

If you remember from the previous section, our stop-words.js program was running on port 3000. What we have done with this code is create a proxy using http-proxy that tunnels all the requests made on port 4000 into port 3000 after logging the headers to the console.

If we run the program after installing all the dependencies with the npm install command in the root of the project, we can see how effectively the proxy is logging the requests and tunneling them into the target host:

curl "http://localhost:4000/filter?text=aaa"

This will produce the following output:

This example is very simplistic, but this small proxy could virtually be deployed anywhere in between our microservices and give us very valuable information about what is going on in the network.
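For reference, the same idea can be sketched with nothing but Node's built-in http module, which also makes it easy to log the response on its way back. The helper name createSniffingProxy and its log parameter are assumptions for this example, and http-proxy takes care of many details (WebSockets, header rewriting, more careful error handling) that this sketch ignores.

```javascript
var http = require('http');

// Hypothetical helper: forwards every request to targetPort on the local
// machine and reports the traffic through the supplied log function.
function createSniffingProxy(targetPort, log) {
  return http.createServer(function(req, res) {
    log('-> ' + req.method + ' ' + req.url);

    var options = {
      host: '127.0.0.1',
      port: targetPort,
      method: req.method,
      path: req.url,
      headers: req.headers
    };

    var upstream = http.request(options, function(upstreamRes) {
      log('<- ' + upstreamRes.statusCode);
      // Copy status and headers, then stream the body back to the client.
      res.writeHead(upstreamRes.statusCode, upstreamRes.headers);
      upstreamRes.pipe(res);
    });

    upstream.on('error', function(err) {
      // The target is down or unreachable: answer with 502 Bad Gateway.
      log('!! ' + err.message);
      res.statusCode = 502;
      res.end();
    });

    // Stream the incoming body (if any) to the target service.
    req.pipe(upstream);
  });
}
```

Calling createSniffingProxy(3000, console.log).listen(4000) would give a set-up equivalent to the one described above, with the response status logged in addition to the request line.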

Out of all the software that we can find on the Internet for testing APIs, Postman is my favorite. It started as an extension for Google Chrome, but nowadays it has taken the form of a standalone app built on the Chrome runtime.

It can be found in the Chrome web store, and it is free, although there is a paid version for teams with more advanced features.

The interface is very concise and simple, as shown in the following screenshot:

On the left-hand side, we can see the History of requests, as well as the Collections of requests, which will be very handy for when we are working on a long-term project and we have some complicated requests to be built.

We are going to use our stop-words.js microservice again to show how powerful Postman can be.

Therefore, the first thing is to make sure that our microservice is running. Once it is, let's issue a request from Postman, as shown in the following screenshot:

As simple as that, we have issued the request for our service (using the GET verb) and it has replied with the text filtered: very simple and effective.

Now imagine that we want to execute that call from Node.js. Postman comes with a very interesting feature, which is generating the code for the requests that we issue from the interface. If you click on the icon under the save button on the right-hand side of the window, the screen that appears will do the magic:

Let's take a look at the generated code:

    var http = require("http");

    var options = {
      "method": "GET",
      "hostname": "localhost",
      "port": "3000",
      "path": "/filter?text=aaa%20bb%20cc",
      "headers": {
        "cache-control": "no-cache",
        "postman-token": "912cacd8-bcc0-213f-f6ff-f0bcd98579c0"
      }
    };

    var req = http.request(options, function (res) {
      var chunks = [];

      res.on("data", function (chunk) {
        chunks.push(chunk);
      });

      res.on("end", function () {
        var body = Buffer.concat(chunks);
        console.log(body.toString());
      });
    });

    req.end();

It is quite easy code to understand, especially if you are familiar with the HTTP library.

With Postman, we can also send cookies, headers, and forms to the servers in order to mimic the authentication that an application will fulfill by sending the authentication token or cookie across.

Let's redirect our request to the proxy that we created in the preceding section, as shown in the following screenshot:

If you have the proxy and the stop-words.js microservice running, you should see something similar to the following output in the proxy:

The header that we sent over with Postman, my-awesome-header, will show up in the list of raw headers.