CHAPTER 3: THE SECURITY BALANCE

This chapter aims to give a pragmatic overview of some of the potential security benefits and potential pitfalls of working in the Cloud. From the security perspective, working in the Cloud tends to be neither intrinsically better nor worse than on-premises – just different.

Security benefits

Like beauty, security is very much in the eye of the beholder. Which is a slightly pretentious way of saying that 'good' security is (or at least should be) dependent upon the context of your organisation in terms of the nature of your business, the threats and vulnerabilities to which your business is exposed and the risk appetite of your organisation. What is 'secure' for one organisation may be viewed as inadequate by another organisation with a lower appetite for the acceptance of risk. Security baselines, therefore, vary across organisations; all of which makes it difficult to make categorical statements about security benefits and downsides. Given this variability, I will discuss potential security benefits and downsides; you will have to take an honest look at your currently deployed security controls and consider whether each of the benefits listed below would be a real improvement on your current situation.

Data centre security

Designing, constructing and then operating a secure data centre is a costly exercise. A suitable location must be found, preferably one with a low incidence of natural disasters, close enough to transport links (without being too close), conveniently located for staff to commute to work and with excellent utility facilities for communications, power and water. The data centre must then be constructed complete with a secure outer perimeter, secure inner perimeter, appropriate security monitoring devices (CCTV, passive infrared, etc.), strong walls, access control mechanisms (e.g. proximity cards and mantraps), internal monitoring controls and countless other controls. You then need to consider the environmental aspects around cooling, humidity, uninterruptible power supplies, on-site generators (with fuel) and the staff to police and operate the building and the IT hardware that it contains. Or perhaps you do not.

In general, Cloud providers have already invested in state-of-the-art secure data centres or, in the case of many SaaS and PaaS providers, in building upon the secure data centres offered by one of the Big 3 IaaS providers. The initial capital expenditure of construction, and the ongoing operational costs, are recouped across their client base. The major Cloud providers tend to operate very lean data centres and employ an extremely strict segregation of duties: the few staff with physical access to the servers and storage in a Cloud data centre will have zero knowledge of which clients are operating on that specific hardware. Any threat source seeking to gain unauthorised access to their target's data via physical access will struggle to identify the right hardware to attack. Similarly, the major Cloud providers tend to stripe their customers’ data across different storage disks, so an attacker walking off with a physical disk is unlikely to escape with a disk containing only the data of their target. Add in the use of data encryption at rest, along with the minimal chances of being able to sneak physical hardware out of the secure data centres, and the risk of a compromise of the confidentiality of data via physical access becomes minimal.

One other physical security factor to consider is the nature of the hardware used to provide the Cloud services – yes, even ‘serverless’ services rely upon physical servers. The major Cloud providers have the scale to procure their own custom hardware, including bespoke hardware security chips. For example, the custom AWS Nitro8 hardware has various components, including specific elements covering network virtualisation and security, whilst GCP has the Titan9 chip which provides secure boot capabilities by acting as a hardware-based root of trust. The use of such custom hardware can be a determining factor when choosing between different Cloud providers. Smaller providers using commodity hardware may still be exposed to more common security issues relating to IPMI (Intelligent Platform Management Interface) or the BMC (Baseboard Management Controller). An example of such an issue was the Cloudborne10 attack that enabled security researchers to install an implant within the BMC and maintain persistence on the hardware after it had been ‘freed’ for use by other customers of affected Cloud providers.

For those organisations that do not have adequate data processing environments (e.g. those of you with business-critical servers hiding under that desk in the corner), then moving services to a Cloud provider will almost certainly provide a benefit in terms of the physical security of your information assets and, potentially, the security of the hardware hosting those assets. For those organisations that already run highly secure data centres, the Cloud will probably not offer as much benefit from a security perspective. However, it may prove more cost-effective to deploy new applications on to a public Cloud if capacity issues demand construction of new data centre floorspace. One common factor driving many organisations to the Cloud is a burning platform of legacy data centres coming towards the end of their life, either due to lease expiry or the inevitable march of technology.

It must be noted that there is an implicit assumption here that the Cloud providers' facilities are as secure as expected – would-be consumers should perform appropriate levels of due diligence to ensure that they are comfortable with the locations where their data may be held.

Improved resilience

In most circumstances, it is likely that the top Cloud services will provide more resilience (by default) than the on-premises equivalent. For example, Amazon Web Services offers a number of different Availability Zones11 within different Regions such that services can be hosted across different Availability Zones (or Regions) in order to improve the resilience of the hosted services. The Microsoft Azure platform now also offers Availability Zones and automatically replicates customer data held within the Azure storage facilities and stores copies in three separate locations to improve resilience. Outside of top tier enterprises, how many other organisations have multiple data centres (across different geographies) capable of providing similar levels of resilience? Furthermore, if your service undergoes a rapid surge in demand then an on-premises service could find itself struggling to cope whilst additional capacity is procured and installed. Additional instances could be spun up on the Cloud in a matter of minutes (or less depending upon your provider and toolset).

Of course, not everything always works according to plan, and even Cloud providers have service outages despite all their efforts to eliminate points of failure. The ‘illusion of infinite resource’ is also just that: an illusion. Cloud consumers using smaller Regions can sometimes find themselves unable to provision resources of a specific type due to wider demand. Consumers are well advised to investigate how much information Cloud providers release about past outages so as to judge their levels of openness and competence when managing incidents. An example of a comprehensive post-mortem of an outage of the AWS S3 service can be found at:

https://aws.amazon.com/message/41926/.

One potential use case for Cloud computing is for disaster recovery purposes. Why invest in a backup data centre to cater for an event that may never occur if a Cloud solution could provide an environment to operate within for a short period of time, but at very little cost whilst not in operation? For those organisations that run heavily virtualised environments, the Cloud can be a very cost-effective way of providing disaster recovery capabilities; hybrid technologies have improved dramatically over the last few years making the Cloud a suitable backup option for VMware or Hyper-V hosted workloads. Whilst full-blown Cloud-based disaster recovery may not be possible for some organisations,12 the Cloud can still be a suitable option for storing backups of data rather than relying on physical storage media.

Improved security patching

Security patching is not straightforward in many organisations: firstly, you need to obtain vendor or researcher security advisory notifications; secondly, you need to identify which of those advisories are relevant to your environment; and, thirdly, you need skilled staff to understand the content of the bulletins or advisories. Once you are confident that you know you have a problem that you need to fix you then get into the real pain of patch testing and the scheduling of when these tested patches are to be applied, particularly if down-time is necessary to business-critical applications. With SaaS and PaaS (in general) consumers do not need to worry about patching of operating systems; this task is the responsibility of the Cloud service providers. Unfortunately, this is not usually the case for the standard IaaS service model, in which consumers must still ensure that their virtual images are up to date with the required patches; however, Managed IaaS models, whereby a Cloud provider or Cloud broker will take on responsibility for such patching, are available.

SaaS consumers also have the added bonus of not having to concern themselves with patches to the applications that they are using; again this is the responsibility of the service provider who would typically patch any issues during their regular updates of functionality unless there is a need for a more urgent fix. PaaS consumers are responsible for fixing any issues in the code that they may have developed and deployed, whilst the provider is responsible for fixing any issues in the shared capabilities that they offer. PaaS consumers do not get to completely forget about security patching.

Overall, SaaS and PaaS solutions can significantly reduce the workload of existing system administrators with regard to the monthly patch process.

Automation and DevSecOps

Real-world consumers are driving rapid evolution of the services and products that they consume, particularly in the online world. This means that Cloud-native organisations are moving from, perhaps, a major release every quarter to multiple releases in a single day. Traditional security checkpoints and processes cannot operate at that speed of delivery. This is where the DevSecOps approach – the embedding of security within the DevOps structure and workflows – and an associated increase in security automation comes into play. Organisations can build security in much closer to the start of the development and delivery lifecycle, hence the emergence of the term ‘shift-left’. Security can be embedded in the form of preapproved templates and machine images, the embedding of code analysis tools (static, dynamic and fuzzers) into continuous integration and continuous delivery (sometimes deployment) pipelines, alongside the use of third-party dependency checkers looking at known vulnerabilities and potential license restrictions in any libraries a development team may choose to reuse. This approach stands in stark contrast to the legacy world where developers may be left to their own devices, at least until the penetration test immediately before the scheduled go-live date – which then often needs to be rescheduled to allow the identified weaknesses to be addressed.

Much of this DevSecOps approach can be adopted whether an organisation works on the Cloud or on-premises. Where Cloud comes into its own is the ability to define an infrastructure as code (IaC), i.e. an entire network environment, the constituent servers, security controls and application components can all be defined in code for implementation on an IaaS platform using tools such as CloudFormation, Azure Resource Manager or Terraform templates. Much of the security tooling designed for use in the Cloud can also be driven via APIs, e.g. anti-malware, host-based intrusion prevention systems, firewalling, container security tools, et cetera. Organisations can, therefore, automate changes to their Cloud-based environments, perhaps as part of an automated response to an identified security incident, simply by making code changes and then pushing the updated code through the deployment pipeline (including the embedded security tests). Cloud providers also offer the capability to check for policy drift and, following this, to automatically remediate any such deviations from expected configuration. Whilst some level of automation is possible within virtualised on-premises environments, the Cloud tends to offer a wider range of technologies that can be defined as code; these include compute, storage, security and data warehouses.
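The idea of checking for policy drift and then remediating it can be illustrated with a minimal sketch. This is not how any particular Cloud provider implements the capability; the resource names, settings and the `detect_drift` and `remediate` functions are all hypothetical, and the 'observed' state is a hard-coded dictionary rather than the result of a real API call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Drift:
    resource: str
    setting: str
    expected: object
    actual: object


def detect_drift(desired: dict, actual: dict) -> list[Drift]:
    """Compare the as-code definition with the observed configuration."""
    drifts = []
    for resource, settings in desired.items():
        observed = actual.get(resource, {})
        for setting, expected in settings.items():
            found = observed.get(setting)
            if found != expected:
                drifts.append(Drift(resource, setting, expected, found))
    return drifts


def remediate(actual: dict, drifts: list[Drift]) -> dict:
    """Return a copy of the observed state with each drifted setting reset."""
    fixed = {name: dict(settings) for name, settings in actual.items()}
    for d in drifts:
        fixed.setdefault(d.resource, {})[d.setting] = d.expected
    return fixed


# Hypothetical desired state, as it might be declared in a template.
DESIRED = {
    "web-firewall": {"inbound_ports": [443], "logging": True},
    "data-store": {"encrypted": True, "public": False},
}

# Hypothetical observed state after someone made a manual change.
OBSERVED = {
    "web-firewall": {"inbound_ports": [443, 22], "logging": True},
    "data-store": {"encrypted": True, "public": False},
}

for d in detect_drift(DESIRED, OBSERVED):
    print(f"{d.resource}.{d.setting}: expected {d.expected}, found {d.actual}")
```

In a real pipeline the desired state would come from the version-controlled templates and the remediation step would redeploy from code rather than edit the live environment by hand.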

Another potential advantage of Cloud and IaC is the opportunity for organisations to enforce immutability within their production environments, i.e. to make them unchangeable. This allows organisations to move away from the need to have privileged administrative users in production environments, significantly reducing the risk of outages due to human error. In this model, any errors are investigated in separate environments and then fixed by the DevSecOps teams via the CI/CD pipeline. Further advantages of automation and DevSecOps approaches will be explored later in this book, including chaos engineering and the use of blue/green environments13 (or red/black if you are using Netflix-derived terminology or tooling, e.g. Spinnaker14) to remove the need to patch services in a currently live environment.

Security expertise

Many smaller businesses and start-ups do not have the budget, inclination or identified business need to employ dedicated security staff. A typical large enterprise may require security expertise across a diverse range of technologies such as networking, operating systems, databases, enterprise resource planning, customer relationship management, web technologies, secure coding and others. It can be difficult and/or expensive for these organisations to retain skilled security staff due to the demand for such scarce resources.

Most organisations can benefit from improved security expertise at the provider when operating in the Cloud. The established Cloud providers are well aware of the impact that a serious security incident would have upon their business in the competitive Cloud market, and so have invested in recruiting and retaining high calibre security expertise. At the SaaS level, it should be expected that the providers understand the security of their application extremely well. Similarly, many of the IaaS providers operate customised variants of open source hypervisors and again, they should understand the security at least as well as a consumer would understand their own installed hypervisor.

On the other hand, it would be an exaggeration to suggest that all Cloud providers operate to the highest levels of security. In 2011, a study conducted by the Ponemon Institute and CA15 canvassed 127 Cloud service providers across the US and Europe on their views with respect to the security of their services. Worryingly, a majority of the surveyed providers did not view security as a competitive advantage and were also of the opinion that it was the responsibility of the consumer to secure the Cloud, and not that of the provider. Furthermore, a majority of the surveyed providers admitted that they did not employ dedicated security personnel to secure their services. Without more information on the providers that took part in this study, it is difficult to judge whether the canvassed providers were truly representative of the attitude of Cloud providers targeting enterprise customers at the time. What is clear is that Cloud service provider understanding of the importance of security as a business differentiator has increased in the years since the Ponemon survey – the market has driven CSPs towards a need to demonstrate their compliance with relevant security standards and sector-specific regulations.

In all cases, it is advisable to investigate the security expertise available to a Cloud provider prior to adopting their services. Options for investigation include:

• Examination of any security certifications or independent operational reviews of the service (e.g. SOC2 type II reports16);

• Investigation of the security materials present on their website or otherwise made available to consumers (sometimes this information is only available under Non-Disclosure Agreements (NDAs)). Major Cloud providers now make much of this information freely available, e.g. Amazon Web Services offers the Artifact17 service to facilitate download of such materials, whilst the Azure Service Trust Portal18 and the GCP Compliance19 page offer customers the ability to obtain assurance reports, which are not covered by NDAs; and

• Investigation of the security individuals employed by the Cloud provider, e.g. looking for past research papers or thought leadership pieces.

Knowledge sharing and situational awareness

Cloud providers are in a privileged position whereby they have visibility of the network traffic entering, traversing and leaving their Cloud services, though there are some exceptions, such as situations in which consumers employ encrypted links. This visibility can give the provider the ability to identify an attack against one of their clients and then apply any identified mitigations to the whole of their service, improving the security position of their entire customer base. Although a number of (typically) industry-specific information sharing exchanges do exist with regard to the sharing of identified attack vectors, such forums tend to be limited in scope compared to the vista available to the major Cloud providers. Most organisations will, therefore, obtain more complete protection when using Cloud solutions than when relying on their own knowledge (or that of their partners) to identify active threats. Cloud providers have also now productised elements of their threat intelligence and active monitoring capabilities and they make those capabilities available for incorporation into their customers’ overall security management solutions. The best examples of such solutions are AWS GuardDuty20 and Azure Sentinel21.

There have been a number of occasions where Cloud providers have informed their clients of a compromise of one of the client's hosted services of which the client themselves were unaware. One example of which I am aware involved a compromised virtual server being used to distribute illegal materials. Some Cloud providers go even further, e.g. AWS helps to secure customer accounts by scanning public code repositories like GitLab and GitHub for the presence of AWS credentials and then informing affected users of any leaked credentials. Consumers can, therefore, benefit from an additional security monitoring and incident response facility.
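Consumers can run a similar check on their own code before it is ever pushed. The sketch below is an illustration only and makes no claim about how AWS performs its scanning: it assumes the widely documented convention that long-term AWS access key IDs are 20 characters long and begin with `AKIA`, and the function name and sample text are hypothetical. The key in the sample is the placeholder value used in AWS documentation, not a real credential.

```python
import re

# Heuristic: long-term AWS access key IDs are 20 upper-case alphanumeric
# characters that commonly start with "AKIA". A match is a candidate only.
ACCESS_KEY_ID = re.compile(r"\b(AKIA[0-9A-Z]{16})\b")


def find_candidate_keys(text: str) -> list[tuple[int, str]]:
    """Return (line_number, candidate_key_id) pairs found in the text."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in ACCESS_KEY_ID.finditer(line):
            hits.append((number, match.group(1)))
    return hits


# Hypothetical configuration file content containing a documentation-style key.
SAMPLE = """\
region = "eu-west-1"
aws_access_key_id = "AKIAIOSFODNN7EXAMPLE"
timeout = 30
"""

for line_number, key_id in find_candidate_keys(SAMPLE):
    print(f"line {line_number}: possible access key ID {key_id}")
```

A check of this kind is typically wired into a pre-commit hook or the CI pipeline so that a leaked credential is caught before it reaches a public repository.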

Improved information sharing mechanisms

There have been many publicised incidents of sensitive information being placed at risk through the loss of removable storage media such as flash drives. The Cloud can be a more secure alternative for the sharing of information, particularly when information is encrypted and decrypted on-premises. Consider the balance of possibilities: what is the most likely event, the compromise of the storage as a service offer of a major provider or the loss of a memory stick?

Renewal of security architecture and segmentation

Moving to any new model of outsourced service provision should encourage a thorough re-examination of the underlying security requirements of the organisation and/or specific service. Business processes and enabling technologies tend to evolve faster than the deployed security solutions. Consider how many organisations still rely upon their stateful inspection firewalls for protection despite their applications interacting using JSON or XML tunnelled over TLS, i.e. effectively bypassing their firewall. The recent WannaCry22 and NotPetya23 malware outbreaks both demonstrated the risks of operating flat network environments with little internal segmentation. A move to the Cloud enables organisations to deploy applications into their own dedicated security domains, reducing the blast radius should any single application be compromised. In other words, should an attacker or a piece of malware compromise an application, the segmentation offered by this approach will help to contain the incident and limit its impact on the wider organisation. Segmentation can be provided at a variety of levels, from full account level segmentation through to simple deployments into different virtual networks or virtual private Clouds.

A fresh start via a move to a Cloud service can offer an opportunity to renew the overall security architecture – so that it supports rather than hinders the needs of the business – whilst protecting the business from ever more virulent malware. Even if an organisation decides not to move to a Cloud-based service, this process of re-examination of the security architecture and its underlying requirements can still offer real benefits to the organisation.

Potential pitfalls

As with the potential security benefits of moving to the Cloud, the potential pitfalls are also very much dependent upon the relative merits of the current security solutions in place at the would-be Cloud consumer.

Compliance

Compliance is often highlighted as being one of the major potential problem areas for organisations wanting to make use of public Clouds. Chapter 5 discusses some of these compliance and regulatory issues in more detail. Suffice to say, for now, that organisations should take great care to ensure that they remain within their compliance and regulatory regimes. Compliance cannot be outsourced.

Assurance

Cloud providers can sometimes make bold claims about the strength of their security controls; however, it can be very difficult to ascertain whether those claims are valid. From the perspective of the Cloud providers, it is clearly not feasible to allow each and every potential customer to conduct a visit and complete a thorough review of the physical security of their data centres. Similarly, the providers cannot afford the resource to be able to answer a multitude of compliance-centred questionnaires for each potential consumer. Consumers should look for those Cloud providers that have undertaken some form of independent security certification, validation or assurance exercise. Examples include ISO 27001 certification, CSA STAR certification24 or the results of a SOC2 Type II audit. Now, in isolation, neither an ISO 27001 compliance certificate nor a statement that a SOC2 Type II audit has been undertaken offers much value to consumers. In order to derive any real value from such assessments, would-be consumers must obtain the scope of such exercises, e.g. the statement of applicability for any ISO 27001 certification. The detailed assurance documentation is not always made publicly available by the provider, but it can often be obtained under non-disclosure agreements. This is clearly not as transparent a process as you would typically find in a more traditional outsourcing agreement where the consumer can conduct their own visit and due diligence. However, the need for disclosure control is often a result of restrictions placed upon the CSPs by the independent firms authoring assurance reports and not as a result of a reluctance to share on the part of the CSPs. The Big Four audit and assurance firms that commonly produce such reports, on behalf of the CSPs, will typically wish to control distribution of their outputs to reduce their own risk from those seeking to place reliance on their reports.

There are other options for obtaining assurance of the services implemented in the Cloud, primarily using vulnerability assessment and penetration testing approaches. AWS, Azure and Google all allow their clients to conduct penetration testing within their own accounts, but not across different accounts. Similar approaches are available for some PaaS services; however, it is not uncommon for SaaS providers to bar any penetration testing of their services.

This does cause concerns for consuming organisations; they may be able to check that their services are correctly configured, but they cannot test the actual barriers separating their virtual environment from those of other tenants within the CSP infrastructure. Consumers must be comfortable with trusting the effectiveness of the controls put in place by their CSPs to segregate their customer environments.

Availability

In theory, Cloud services should offer greater availability than their on-premises equivalents due to their greater geographic diversity and wide use of virtualisation. However, to quote the American baseball legend Yogi Berra, “In theory there is no difference between theory and practice. In practice there is.”

Consumers are well-advised to closely examine the guaranteed availability service levels contained within the contracts of their likely Cloud providers. Service levels tend to be around the 99.5% mark with little in the way of recompense should the providers fail to meet those targets.

In the on-premises world, consumers can aim for higher service levels and implement their own measures to ensure those service levels are met, e.g. backup data centres, uninterruptible power supplies and on-site generators. Just as importantly, organisations can conduct their own disaster recovery exercises by switching across data centres as often as they wish to ensure that the failover processes work correctly. Such testing is not as straightforward for Cloud providers due to the number of clients potentially having their service adversely affected.

Outages in Cloud services are usually widely reported, and this can give an exaggerated impression of the relative stabilities of Cloud services versus on-premises equivalents. Consider how much press attention is paid to outages in the Microsoft Office 365 service25 compared to an outage in the Exchange infrastructure of any individual organisation.

For smaller organisations, without the luxury of backup data centres, the availability offered by Cloud services is likely to be no worse than that available to them on-premises. For large enterprises that have invested in the hardware to support 'five nines' availability (99.999%), the public Cloud is unlikely to offer equivalent levels of service for business-critical applications. Private Clouds should be able to meet equivalent service levels to traditional deployments, as the private Cloud is dedicated to a single consuming organisation and the consumer can invest as much capital as they need to meet their desired levels of availability.
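To put the two figures mentioned in this section into perspective, the short calculation below converts an availability percentage into the downtime it permits over a year. It is a simple arithmetic sketch; the function name is my own and a 365-day year is assumed.

```python
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year


def allowed_downtime_hours(availability_percent: float) -> float:
    """Hours of downtime per year permitted by a given availability level."""
    return HOURS_PER_YEAR * (1 - availability_percent / 100)


for level in (99.5, 99.999):
    hours = allowed_downtime_hours(level)
    print(f"{level}% availability allows {hours:.2f} hours "
          f"({hours * 60:.1f} minutes) of downtime per year")
```

A 99.5% service level permits roughly 43.8 hours of downtime per year, whereas five nines permits a little over five minutes, which illustrates how large the gap between the two targets really is.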

Organisations considering a move to a Cloud model should confirm any existing rationale underlying expensive high availability requirements with their business stakeholders prior to discounting a move to the public Cloud. It is not uncommon for services to have been assigned high availability requirements ‘just to be on the safe side’ where business stakeholders have not been able to provide more realistic requirements.

Shadow IT

One of the strengths of the Cloud model is the ease of procurement and implementation, particularly when it comes to SaaS. Any individual with a credit card and Internet access can establish a presence on a Cloud service; this can cause issues in the enterprise space where users may decide to establish such a presence without going through central procurement and IT processes. Such actions can result in business-critical processes being delivered by services that enterprise IT has no awareness of, or the transfer of regulated data to inappropriate locations. This lack of visibility and awareness underlies the rationale for the labelling of this phenomenon as ‘Shadow IT’. Shadow IT is a common feature in many enterprises as users, or indeed departments or business units, may get frustrated with central IT or procurement and decide to proceed under their own steam. We will cover the use of Cloud Access Security Broker (CASB) technologies to identify and then control Shadow IT later in this book (Chapter 11).

Information leakage

The ongoing skills shortage in the area of Cloud computing, and Cloud security in particular, can lead to Cloud usage being poorly secured. This impact is most commonly seen in the number of publicised security incidents relating to poorly secured S3 buckets. It should be noted that S3 buckets are not accessible from the Internet by default; every time you read about yet another organisation leaking information from their S3 buckets it is as a result of somebody, somewhere, having taken the necessary action to open up access to that specific bucket. However, it is fair to say that information stored within an S3 bucket is more at risk of compromise from the Internet than the same information stored within an on-premises Storage Area Network (SAN). A SAN will typically be behind numerous layers of defence which means that a simple single misconfiguration is unlikely to render stored information available to all.
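The kind of single misconfiguration described above can often be spotted mechanically. The sketch below inspects a bucket policy document, expressed as a Python dictionary in the standard AWS policy JSON layout, and reports any statement that allows access to every principal. It is a deliberately simplified illustration: the function names and the example bucket are hypothetical, and the check ignores `Condition` blocks, access control lists and account-level public access settings, all of which affect whether a bucket is really exposed.

```python
def _as_list(value):
    """Policy fields may hold a single item or a list; normalise to a list."""
    return value if isinstance(value, list) else [value]


def _is_wildcard_principal(principal) -> bool:
    """True if the principal is '*' or a mapping containing '*'."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return any("*" in _as_list(v) for v in principal.values())
    return False


def public_statements(policy: dict) -> list[dict]:
    """Return the Allow statements that grant access to any principal."""
    return [
        statement
        for statement in _as_list(policy.get("Statement", []))
        if statement.get("Effect") == "Allow"
        and _is_wildcard_principal(statement.get("Principal"))
    ]


# Hypothetical policy that someone has opened up for anonymous reads.
EXAMPLE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PublicRead",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::example-bucket/*",
        }
    ],
}

for statement in public_statements(EXAMPLE_POLICY):
    print(f"Statement {statement.get('Sid')} allows {statement['Action']} to anyone")
```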

Whilst information leakage is typically discussed in the context of S3 buckets, or very similar storage services, these are not the only sources of potential risk. Consider also Internet-accessible code repository tools such as GitHub and Bitbucket. As with S3, such tools can be adequately secured if the relevant security specialists have the skills. However, if the security specialists lack the required skills, organisations can find their source code available to all; in the days of infrastructure as code, organisations can find themselves a single username/password compromise away from losing their entire environment. In short, sensitive information can be stored safely in the Cloud; however, the Internet-native nature of Cloud services will often mean that there are fewer layers of defence in place to mitigate the risk posed by a simple misconfiguration.

Lock-in

Vendor lock-in is a problem with traditional IT; it's a little more pronounced with the Cloud model.

Although significant effort has been invested to improve interoperability of, and portability between, Cloud services, it is still not straightforward to move an IT service from one Cloud to another. The Distributed Management Task Force's Open Virtualisation Format26 (OVF) is supported by a number of the well-known on-premises hypervisor vendors (e.g. VMware, XenSource, KVM, Dell, IBM, etc.), and the related OVA (Open Virtual Appliance) files (essentially, a single archive of OVF files) can be imported into and exported from the AWS Cloud via their VM Import/Export capability.27 It is not as straightforward to import or export such images via the other main IaaS Cloud providers. A cynic could argue that it is not in the commercial interests of IaaS providers to make it straightforward for their consumers to switch providers. Third-party tooling is available to manage the migration of workloads across different Cloud providers to provide a degree of portability, e.g. RightScale.28 An alternative approach is to containerise workloads using tools such as Docker and then move these containers between Cloud providers as necessary.

Unfortunately, lock-in is not limited to virtual machine image or container formats. What about data? Many Cloud provider cost models involve making it considerably more expensive to take data out of their Clouds than it is to place data within their Clouds. For example, AWS currently charges consumers up to $0.09 per GB29 for data transfers out of their Cloud using a tiered pricing model. AWS does not charge for data transferred into their Cloud (other than the storage costs once the data has been transferred). This becomes more of an issue for any consumers that use an IaaS-hosted application to generate data, in which case they may have significantly more data to get out than they put in.
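The effect of a tiered transfer-out model can be estimated with a few lines of code. In the sketch below only the $0.09 per GB top rate comes from the text; the tier sizes and the lower rates are hypothetical placeholders that should be replaced with the figures from a provider's current price list, and the function name is my own.

```python
# Each tier is (size of tier in GB, price per GB in USD).
# Only the 0.09 rate is taken from the text; the tier sizes and the lower
# rates are HYPOTHETICAL and exist purely to demonstrate the calculation.
EGRESS_TIERS = [
    (10_000, 0.09),
    (40_000, 0.085),
    (None, 0.07),  # None means "all remaining volume"
]


def egress_cost(gb: float, tiers=EGRESS_TIERS) -> float:
    """Cost in USD of transferring `gb` gigabytes out, tier by tier."""
    remaining = gb
    total = 0.0
    for size, rate in tiers:
        if remaining <= 0:
            break
        billed = remaining if size is None else min(remaining, size)
        total += billed * rate
        remaining -= billed
    return total


for volume in (1_000, 50_000, 60_000):
    print(f"{volume:>7,} GB out: ${egress_cost(volume):,.2f} (transfer in: $0.00)")
```

Running the same estimate against the volume of data an application is expected to generate over its lifetime gives an early indication of the exit cost that should be factored into any business case.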

The question of data export is also an issue for consumers of PaaS and SaaS services where data may be stored in specific formats or again be more expensive to export than import. However, data export is not the largest lock-in threat for PaaS. Applications must be coded differently to run on different PaaS services due to the variance in the languages they support and in platform capabilities; for example, an application coded to run on Microsoft Azure would not run on the Force.com Apex platform. Even where PaaS providers make use of the same underlying language (e.g. C#, Java, Ruby, etc.) the available libraries or APIs may vary. This issue is even more pronounced when it comes to FaaS: FaaS applications will typically be tightly bound to the underlying serverless capabilities of the platform, e.g. for storing of state data, persistence of data, access control, observability, et cetera. PaaS and FaaS consumers must, therefore, be cognisant of the costs involved in porting their applications when considering the trade-offs between agility, functionality and portability.

Switching SaaS providers is more straightforward than switching either IaaS or PaaS providers; consumers must be able to export their data from their existing provider and be able to transform this data into the form expected by a new provider. By 'data' I don't just mean business data; data such as audit data and access management information must also be preserved so that security and/or compliance holes are not created through the switch of providers. Finally, consumers must be aware of the potential impact of switching SaaS providers on the back-end business processes. If an organisation has tailored its business processes to reflect the capabilities of its existing SaaS provider, then changing that provider could require substantial reworking of the relevant business processes. Such a reworking is likely to have an adverse impact upon the dependent business services during the changeover period.

In any type of migration or portability scenario, enterprises must not forget the need to have suitably trained resources able to operate the new platform appropriately; this training of existing staff, or hiring of new staff, does not come without cost, particularly when the number of such specialist resources may be limited.

Multi-tenancy

There can be no denying that multi-tenancy adds risk to Cloud services in comparison to traditional on-premises deployment models. Whether sharing takes place at the data, compute, network or application layer, sharing is still taking place. This means that there is a boundary between your service and those of other tenants that would not be there in a traditional deployment. For a private Cloud, organisations may not care that different business units now share a boundary. For a public Cloud, organisations may be greatly concerned that they could be sharing a boundary with their most hostile competitors or other highly capable threat actors.

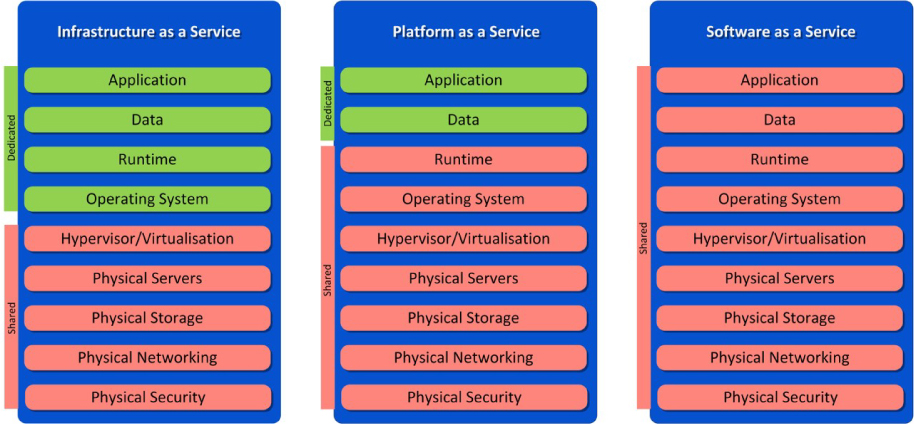

Figure 2: Levels of sharing

Figure 2 illustrates the increasing levels of resource sharing as you move up the IT stack from IaaS through to SaaS, with the barriers between the different tenants sitting in different places depending upon the chosen delivery model.

The issue of multi-tenancy is most commonly discussed at the infrastructure level, particularly with regard to hypervisor security. If an attacker can use a weakness in the hypervisor to jump from their virtual machine into yours, then there is little that you can do to protect yourself. Hypervisor security is also, obviously, an issue for any PaaS or SaaS provider that relies on server virtualisation to host its services. Hypervisors should not be viewed as security barriers; hypervisors are primarily there to enable organisations to consolidate their physical servers and to offer increased agility in terms of server deployment. Server virtualisation has been subject to extensive research by the security community (e.g. the work of Joanna Rutkowska et al. at www.invisiblethingslab.com). Hypervisors have not escaped from such scrutiny unscathed. In 2009 Kostya Kortchinsky of Immunity Security discovered a means of executing code in the underlying VMware host from a guest machine.30 This issue was fixed in subsequent releases of the VMware hypervisor, but the principle was proved – hypervisor hacking is not just a theoretical threat.

More recently, security researchers have turned their attention to security at the hardware level, i.e. the ability to exploit weaknesses within physical RAM or the CPU itself. Bit-flipping attacks against RAM, such as Rowhammer,31 can be used to escalate privileges to Ring 0 (the kernel), whereas CPU weaknesses, such as those labelled Meltdown and Spectre,32 can lead to arbitrary memory reads. In a Cloud context, this could lead to an attacker escaping their VM to read memory contents belonging to other tenants operating on the same physical hardware. This is a risk that Cloud consumers can seek to manage by only using dedicated hosts within IaaS Clouds, at additional cost; alternatively, they can explicitly accept the risk on the basis that the major CSPs have access to excellent threat intelligence and will usually be part of any such vulnerability embargo process whilst fixes are developed. This was the case during the Meltdown and Spectre remediation process: the major CSPs had fixes in place, where these were available, in advance of smaller players and traditional, on-premises enterprises.

There are other forms of multi-tenancy, each with its own threats. Storage can be shared, but organisations need to be aware of the risks associated with iSCSI storage.33 Back-end databases can be shared, in which case organisations need to be comfortable that the security controls within the underlying database are sufficiently strong. For example, Salesforce.com is driven off a shared back-end database per POD (point of deployment, essentially an instance of the Salesforce.com application) with each customer having a specific Organization ID (OrgID) used to separate out their data through partitioning.34 Networks can be shared using a variety of virtualisation technologies; Cisco, for example, offers Virtual Routing and Forwarding (VRF) and Virtual Device Context (VDC) technology in addition to the well-established Virtual LAN (VLAN) technology. All of which leads to increased sharing of physical network equipment and cabling.
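The idea of separating tenants within a shared database by an organisation identifier can be sketched as follows. This is a toy illustration only, using an in-memory SQLite database; it is not a description of how Salesforce.com implements its partitioning. The table layout, the `org_id` column and the function names are all hypothetical.

```python
import sqlite3
from typing import List


def make_shared_db() -> sqlite3.Connection:
    """Create one shared table holding rows for several tenants."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE records (org_id TEXT NOT NULL, name TEXT NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO records (org_id, name) VALUES (?, ?)",
        [("org_a", "Alice"), ("org_a", "Bob"), ("org_b", "Carol")],
    )
    return conn


def records_for_tenant(conn: sqlite3.Connection, org_id: str) -> List[str]:
    """Return only the rows belonging to the given tenant.

    Every query is filtered on org_id, and the value is passed as a bound
    parameter rather than concatenated into the SQL string.
    """
    rows = conn.execute(
        "SELECT name FROM records WHERE org_id = ? ORDER BY name",
        (org_id,),
    ).fetchall()
    return [row[0] for row in rows]


if __name__ == "__main__":
    db = make_shared_db()
    print(records_for_tenant(db, "org_a"))
    print(records_for_tenant(db, "org_b"))
```

The sketch shows why the strength of the database-level controls matters: the only thing keeping one tenant's rows from another is that every access path applies the tenant filter correctly.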

As well as the direct threats to confidentiality posed by attackers breaking through whatever multi-tenancy boundary is relevant to your service model, multi-tenancy also comes with some second-order threats. For example, suppose you share a service with another tenant that undergoes a massive spike in demand (e.g. through a distributed denial of service (DDoS) attack). The Cloud only gives an impression of infinite resource; there are still physical limits on the compute, network bandwidth and storage available, and such a DDoS could exhaust the available bandwidth, taking out your own service as collateral damage. This is more of a risk with smaller, often local, providers that do not have the scale of the global Cloud providers and their individual Cloud distribution networks. Another, real-world, example of second-order damage occurred when the FBI suspected a customer of DigitalOne, a Swiss-based service provider, of being related to their investigation of the LulzSec hacking crew. Rather than simply taking the three servers suspected of being involved in the illegal activity, FBI agents unwittingly removed three enclosures of servers, effectively knocking several DigitalOne customers off the Internet35. Whilst law enforcement seizures of equipment are relatively rare events, they are something that Cloud providers should be able to cater for, e.g. through appropriate disaster recovery mechanisms.

There is no alternative to having multi-tenancy at some level in a true Cloud service – it is this level of sharing and the increased utilisation of shared resources that drive the underlying economics and provide the savings associated with Cloud models. Even if dedicated hardware is used, the networking and back-end storage will still likely be shared, alongside some level of infrastructure support.

Cloud consumers need to ensure that they understand where their new boundaries lie when they work in the Cloud and implement suitable controls to secure, or at least monitor, these new boundaries.

Visibility

Cloud consumers do not have the same levels of visibility of network traffic that they are used to in more traditional deployment scenarios. Consumers cannot install physical network taps in public Cloud providers to provide full packet capture capabilities, particularly in PaaS or SaaS environments36. Cloud providers will perform their own network monitoring activities in order to protect the services that they offer; however, Cloud consumers must be prepared to sacrifice a level of network visibility if moving towards Cloud services.

Inflexible and/or inadequate terms and conditions

Most public Cloud providers offer standard 'click wrap' terms and conditions that users sign up to when they create their accounts. Unless your organisation is of significant scale or importance, there is little opportunity to negotiate individual terms and conditions more suited to your own individual requirements. This is an area where private and community Clouds offer more protection and more flexibility than their public equivalents.

Research by Queen Mary, University of London37 shows that the standard terms and conditions of the major public Cloud providers typically offer little in the way of protection to the consumer in the event of the service provider failing to protect their service or data. Whilst this research is now fairly old in Cloud terms (published in 2011), the underlying themes remain relevant and little new research has been conducted in this area since.

A survey38 of Cloud provider terms and conditions conducted by Queen Mary researchers found that “most providers not only avoided giving undertakings in respect of data integrity but actually disclaimed liability for it”. Most providers include terms making it clear that ultimate responsibility for the confidentiality and integrity of customer data remains with the customer. Furthermore, many providers explicitly state that they will not be held liable to their consumers for information compromise, e.g. even the current Amazon Web Services (AWS) Customer Agreement (as updated on 1 November 2018) (https://aws.amazon.com/agreement/) disclaims any liability for:

ANY UNAUTHORIZED ACCESS TO, ALTERATION OF, OR THE DELETION, DESTRUCTION, DAMAGE, LOSS OR FAILURE TO STORE ANY OF YOUR CONTENT OR OTHER DATA.

Interestingly, given the general concern around the location of data within Cloud services, the Queen Mary researchers found that 15 of the 31 surveyed providers made no mention of the geographic location of data or protection of data in transit between their data centres within their terms and conditions.

One other important point regarding Cloud provider terms and conditions is the recompense available to consumers should their Cloud services be unavailable. Such recompense is usually extremely limited (typically in the form of service credits) and bears no relation to the actual business impact of such an outage on the Cloud consumer. Consumers are, therefore, well-advised to maintain tested disaster recovery plans even when implementing on Cloud-based services.

On the positive side, the Queen Mary research found no evidence of Cloud providers attempting to claim ownership of Intellectual Property that consumers may upload to the Cloud. This was an issue that dogged the adoption of Cloud computing at the outset, and authoritative research in this area had been lacking.

8 https://perspectives.mvdirona.com/2019/02/aws-nitro-system/.

9 https://cloud.google.com/blog/products/gcp/titan-in-depth-security-in-plaintext.

10 https://eclypsium.com/2019/01/26/the-missing-security-primer-for-bare-metal-cloud-services/.

11 Essentially different data centres within a specific geographic Region.

12 This could be for a number of reasons, one good example being that of organisations running business critical systems on mainframes that cannot be ported to cloud services.

13 https://martinfowler.com/bliki/BlueGreenDeployment.html.

14 www.spinnaker.io/concepts/.

15 www.ca.com/~/media/Files/IndustryResearch/security-of-cloud-computing-providers-final-april-2011.pdf.

16 SOC2 Type II reports examine whether a set of claimed controls are implemented and operated in accordance with those claims. ISAE3402 (International standard) and SSAE16 (US equivalent) replaced the well-known SAS70 style reports.

17 https://aws.amazon.com/artifact/.

18 https://servicetrust.microsoft.com/.

19 https://cloud.google.com/security/compliance/#/.

20 https://aws.amazon.com/guardduty/.

21 https://azure.microsoft.com/en-us/services/azure-sentinel/.

22 www.kaspersky.co.uk/resource-center/threats/ransomware-wannacry.

23 www.wired.com/story/notpetya-cyberattack-ukraine-russia-code-crashed-the-world/.

24 https://cloudsecurityalliance.org/star/#_overview.

25 For example, www.wired.co.uk/article/microsoft-office-365-down-multi-factor-authentication-login.

26 www.dmtf.org/standards/ovf.

27 https://aws.amazon.com/ec2/vm-import/.

29 This refers to the pricing available from https://aws.amazon.com/s3/pricing/ for the EU (London) Region on 3 March 2019.

30 www.blackhat.com/presentations/bh-usa-09/KORTCHINSKY/BHUSA09-Kortchinsky-Cloudburst-SLIDES.pdf.

31 http://dreamsofastone.blogspot.com/2016/05/row-hammer-short-summary.html.

32 https://googleprojectzero.blogspot.com/2018/01/reading-privileged-memory-with-side.html.

33 For example, www.isecpartners.com/files/iSEC-iSCSI-Security.BlackHat.pdf (old, but a worthwhile read).

34 www.salesforce.com/au/assets/pdf/Force.com_Multitenancy_WP_101508.pdf.

35 https://bits.blogs.nytimes.com/2011/06/21/f-b-i-seizes-web-servers-knocking-sites-offline/.

36 IaaS consumers do have some options to use cloud provider offerings such as Azure Network TAP or VPC Traffic Mirroring to capture network traffic as discussed in Part 2 of this book.

37 www.cloudlegal.ccls.qmul.ac.uk/research/.

38 www.cloudlegal.ccls.qmul.ac.uk/research/our-research-papers/cloud-contracts/terms-of-service-analysis-for-cloud-providers/.