For this next task, we'll leverage a couple of libraries discussed in Chapter 8, Classifying Images with Convolutional Neural Networks: TensorFlow and Keras. Both can be installed with pip if you haven't done so already.

We're also going to use the type of advanced modeling discussed earlier in the chapter, a type of deep learning called sequence-to-sequence modeling. It is frequently used in machine translation and question-answering applications because it lets us map an input sequence of any length to an output sequence of any length, and the two lengths don't have to match.

Francois Chollet has an excellent introduction to this type of model on the blog for Keras: https://blog.keras.io/a-ten-minute-introduction-to-sequence-to-sequence-learning-in-keras.html. It's worth a read.

We're going to make heavy use of his example code to build out our model. While his example performs English-to-French machine translation, we're going to repurpose it for question answering using our Cleverbot dataset:

- Set the imports:

```python
from keras.models import Model
from keras.layers import Input, LSTM, Dense
import numpy as np
```

- Set up the training parameters:

```python
batch_size = 64  # Batch size for training.
epochs = 100  # Number of epochs to train for.
latent_dim = 256  # Latent dimensionality of the encoding space.
num_samples = 1000  # Number of samples to train on.
```

We'll start with these values. Once we see how well the model performs, we can adjust them as necessary.

The first step in data processing will be to take our data, get it in the proper format, and then vectorize it. We'll go step by step:

```python
input_texts = []
target_texts = []
input_characters = set()
target_characters = set()
```

This creates lists for our questions and answers (the targets), as well as sets for the individual characters that appear in them. We need those character sets because this model works at the character level: it generates its answers one character at a time.
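To make the character-level idea concrete, here is a minimal sketch that builds the two character sets from a couple of made-up question-and-answer pairs. The `demo_pairs` data and the `demo_` variable names are hypothetical, chosen so they don't overwrite the real `input_characters` and `target_characters` if you run this in the same notebook. The vocabulary is simply the set of distinct characters, so its size is bounded by the alphabet and punctuation rather than by the number of distinct words.

```python
# Hypothetical question/answer pairs used only for illustration
demo_pairs = [("hi there", "hello"), ("how are you?", "fine")]

demo_input_chars = set()
demo_target_chars = set()
for question, answer in demo_pairs:
    demo_input_chars.update(question)   # adds each character of the question
    demo_target_chars.update(answer)    # adds each character of the answer

# Sorting gives a stable order, so each character can get a fixed index
input_vocab = sorted(demo_input_chars)
target_vocab = sorted(demo_target_chars)
print(input_vocab)
print(target_vocab)
```

Each character later receives an integer index from its position in the sorted list, which is what `input_token_index` and `target_token_index` do in the vectorization step.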

- Let's limit our question-and-answer pairs to those in which both the question and the answer are shorter than 50 characters. This will help speed up our training:

```python
convo_frame['q len'] = convo_frame['q'].astype('str').apply(lambda x: len(x))
convo_frame['a len'] = convo_frame['a'].astype('str').apply(lambda x: len(x))

convo_frame = convo_frame[(convo_frame['q len'] < 50) &
                          (convo_frame['a len'] < 50)]
```
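Shorter sequences help because every sequence is later padded to the longest one and one-hot encoded, so each training array has the shape (samples, maximum length, vocabulary size), and the LSTMs step through every one of those timesteps. The back-of-the-envelope sketch below assumes a hypothetical vocabulary of 80 characters and float32 storage, and compares the size of one such array under a 50-character cap and a 500-character cap.

```python
def one_hot_array_bytes(num_samples, max_len, num_tokens, bytes_per_value=4):
    """Size in bytes of a (num_samples, max_len, num_tokens) float32 array."""
    return num_samples * max_len * num_tokens * bytes_per_value

# Hypothetical figures: 1,000 samples and an 80-character vocabulary
capped = one_hot_array_bytes(1000, 50, 80)
uncapped = one_hot_array_bytes(1000, 500, 80)
print(capped / 1e6, "MB with a 50-character cap")
print(uncapped / 1e6, "MB with a 500-character cap")
```

The array grows linearly with the maximum length, and we build three of these arrays (one for the encoder, two for the decoder).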

- Let's set up our input and target text lists:

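```python
# Keep at most num_samples question-and-answer pairs
convo_frame = convo_frame.head(num_samples)

input_texts = list(convo_frame['q'].astype('str'))
# Prepend a tab (start token) and append a newline (stop token) to each answer
target_texts = list(convo_frame['a'].astype('str').map(lambda x: '\t' + x + '\n'))

# Record every character that occurs in the questions and in the answers
for input_text in input_texts:
    input_characters.update(input_text)
for target_text in target_texts:
    target_characters.update(target_text)
```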

The preceding code gets our data into the proper format. Note that we add a tab (`\t`) at the start and a newline (`\n`) at the end of each target text. These serve as the start and stop tokens for the decoder.
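The start and stop tokens are easy to sanity-check in plain Python. This sketch uses two hypothetical helpers, `wrap_target` and `strip_tokens`: the first mirrors what the lambda in the target-text code does, and the second undoes it, which is handy for tidying decoded replies before display.

```python
START_TOKEN = '\t'  # marks the beginning of every target text
STOP_TOKEN = '\n'   # marks the end of every target text


def wrap_target(answer):
    """Add the start and stop tokens around an answer (hypothetical helper)."""
    return START_TOKEN + answer + STOP_TOKEN


def strip_tokens(decoded):
    """Remove a leading start token and a trailing stop token, if present."""
    if decoded.startswith(START_TOKEN):
        decoded = decoded[len(START_TOKEN):]
    if decoded.endswith(STOP_TOKEN):
        decoded = decoded[:-len(STOP_TOKEN)]
    return decoded


wrapped = wrap_target("I am fine.")
print(repr(wrapped))
print(strip_tokens(wrapped))
```

The decoder never outputs the start token itself, but its replies do end with the newline stop character, so only the second check in `strip_tokens` matters for decoded text.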

- Let's take a look at the input texts and the target texts:

```python
input_texts
```

The preceding code generates the following output:

```python
target_texts
```

The preceding code generates the following output:

Let's take a look at the input and target character sets now:

```python
input_characters
```

The preceding code generates the following output:

```python
target_characters
```

The preceding code generates the following output:

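Next, we'll do some additional preparation of the data that will feed into the model. Although the model can take in and return sequences of any length, the arrays we train on must have a fixed shape, so every sequence is padded up to the maximum sequence length in the data. The following code sorts the character sets, counts the tokens, and finds those maximum lengths: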

```python
# Sort the character sets so every character gets a stable index
input_characters = sorted(list(input_characters))
target_characters = sorted(list(target_characters))

# Vocabulary sizes and the longest sequences (used as the padded lengths)
num_encoder_tokens = len(input_characters)
num_decoder_tokens = len(target_characters)
max_encoder_seq_length = max([len(txt) for txt in input_texts])
max_decoder_seq_length = max([len(txt) for txt in target_texts])

print('Number of samples:', len(input_texts))
print('Number of unique input tokens:', num_encoder_tokens)
print('Number of unique output tokens:', num_decoder_tokens)
print('Max sequence length for inputs:', max_encoder_seq_length)
print('Max sequence length for outputs:', max_decoder_seq_length)
```

The preceding code generates the following output:
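The padding happens implicitly: the arrays in the vectorization step are created with `np.zeros` at the maximum length, and a shorter sequence only fills its first rows. Here is a minimal pure-Python sketch of that idea; the `one_hot_padded` helper and the three-character `demo_index` vocabulary are hypothetical.

```python
def one_hot_padded(text, token_index, max_len):
    """One-hot encode `text` into max_len rows; rows past the text stay all zero."""
    rows = [[0.0] * len(token_index) for _ in range(max_len)]
    for t, char in enumerate(text):
        rows[t][token_index[char]] = 1.0
    return rows


# Hypothetical vocabulary and maximum length
demo_index = {'a': 0, 'b': 1, 'c': 2}
encoded = one_hot_padded('ab', demo_index, max_len=4)
for row in encoded:
    print(row)
```

The two trailing all-zero rows are the padding: they carry no character, but they give every sequence the same shape.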

Next, we'll vectorize our data using one-hot encoding:

```python
# Map each character to an integer index
input_token_index = dict(
    [(char, i) for i, char in enumerate(input_characters)])
target_token_index = dict(
    [(char, i) for i, char in enumerate(target_characters)])

# Zero-filled arrays of shape (samples, max sequence length, vocabulary size)
encoder_input_data = np.zeros(
    (len(input_texts), max_encoder_seq_length, num_encoder_tokens),
    dtype='float32')
decoder_input_data = np.zeros(
    (len(input_texts), max_decoder_seq_length, num_decoder_tokens),
    dtype='float32')
decoder_target_data = np.zeros(
    (len(input_texts), max_decoder_seq_length, num_decoder_tokens),
    dtype='float32')

for i, (input_text, target_text) in enumerate(zip(input_texts, target_texts)):
    for t, char in enumerate(input_text):
        encoder_input_data[i, t, input_token_index[char]] = 1.
    for t, char in enumerate(target_text):
        decoder_input_data[i, t, target_token_index[char]] = 1.
        if t > 0:
            # decoder_target_data is ahead of decoder_input_data by one
            # timestep and does not include the start character.
            decoder_target_data[i, t - 1, target_token_index[char]] = 1.
```

Let's take a look at one of these vectors:

```python
decoder_input_data
```

The preceding code generates the following output:

From the preceding figure, you'll notice that we have a one-hot encoded vector of our character data, which will be used in our model.
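The one-timestep offset between `decoder_input_data` and `decoder_target_data` is the subtle part of the vectorization loop. The sketch below works on characters rather than one-hot rows to keep it readable; the `shift_for_teacher_forcing` helper is hypothetical and shows that, at each step, the target is simply the next character of the decoder input.

```python
def shift_for_teacher_forcing(target_text):
    """Split a wrapped target into (decoder input, decoder target) character lists."""
    decoder_input = list(target_text)       # includes the start token
    decoder_target = list(target_text[1:])  # same text, one step ahead
    return decoder_input, decoder_target


dec_in, dec_out = shift_for_teacher_forcing('\thi\n')
for step, (fed, expected) in enumerate(zip(dec_in, dec_out)):
    print(step, repr(fed), '->', repr(expected))
```

The final newline in the decoder input has no partner, which matches the loop above: the last row of `decoder_target_data` for that sample is left as zeros.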

We now set up the encoder and decoder LSTMs of our sequence-to-sequence model:

```python
# Define an input sequence and process it.
encoder_inputs = Input(shape=(None, num_encoder_tokens))
encoder = LSTM(latent_dim, return_state=True)
encoder_outputs, state_h, state_c = encoder(encoder_inputs)
# We discard `encoder_outputs` and only keep the states.
encoder_states = [state_h, state_c]

# Set up the decoder, using `encoder_states` as initial state.
decoder_inputs = Input(shape=(None, num_decoder_tokens))
# We set up our decoder to return full output sequences,
# and to return internal states as well. We don't use the
# return states in the training model, but we will use them in
# inference.
decoder_lstm = LSTM(latent_dim, return_sequences=True,
                    return_state=True)
decoder_outputs, _, _ = decoder_lstm(decoder_inputs,
                                     initial_state=encoder_states)
decoder_dense = Dense(num_decoder_tokens, activation='softmax')
decoder_outputs = decoder_dense(decoder_outputs)
```

Then we move on to the model itself:

```python
# Define the model that will turn
# `encoder_input_data` & `decoder_input_data` into `decoder_target_data`
model = Model([encoder_inputs, decoder_inputs], decoder_outputs)

# Run training
model.compile(optimizer='rmsprop', loss='categorical_crossentropy')
model.fit([encoder_input_data, decoder_input_data], decoder_target_data,
          batch_size=batch_size,
          epochs=epochs,
          validation_split=0.2)

# Save model
model.save('s2s.h5')
```

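In the preceding code, we define our model using the encoder and decoder inputs and the decoder output. We then compile it, fit it, and save it. During training, the decoder is fed the true previous character at each step rather than its own prediction (that is the one-timestep offset between `decoder_input_data` and `decoder_target_data`); this is known as teacher forcing.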
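To get a feel for the model's size, we can count its trainable parameters by hand. An LSTM layer with `units` units and `input_dim` input features has four gates, each with an input kernel, a recurrent kernel, and a bias, so it holds 4 × units × (input_dim + units + 1) weights; a Dense layer holds (inputs + 1) × outputs. The token counts below are hypothetical (the real ones depend on your data and are printed by the earlier cell), and the `example_` names avoid overwriting the notebook's own variables.

```python
def lstm_params(input_dim, units):
    """Trainable weights in an LSTM layer: kernel, recurrent kernel, and bias."""
    return 4 * units * (input_dim + units + 1)


def dense_params(input_dim, units):
    """Trainable weights in a Dense layer: kernel plus bias."""
    return (input_dim + 1) * units


example_latent_dim = 256
example_encoder_tokens = 70  # hypothetical vocabulary sizes
example_decoder_tokens = 72

total = (lstm_params(example_encoder_tokens, example_latent_dim)       # encoder LSTM
         + lstm_params(example_decoder_tokens, example_latent_dim)     # decoder LSTM
         + dense_params(example_latent_dim, example_decoder_tokens))   # softmax layer
print(total)
```

Calling `model.summary()` on the real model reports the per-layer counts, which is a quick way to check this arithmetic against your actual vocabulary sizes.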

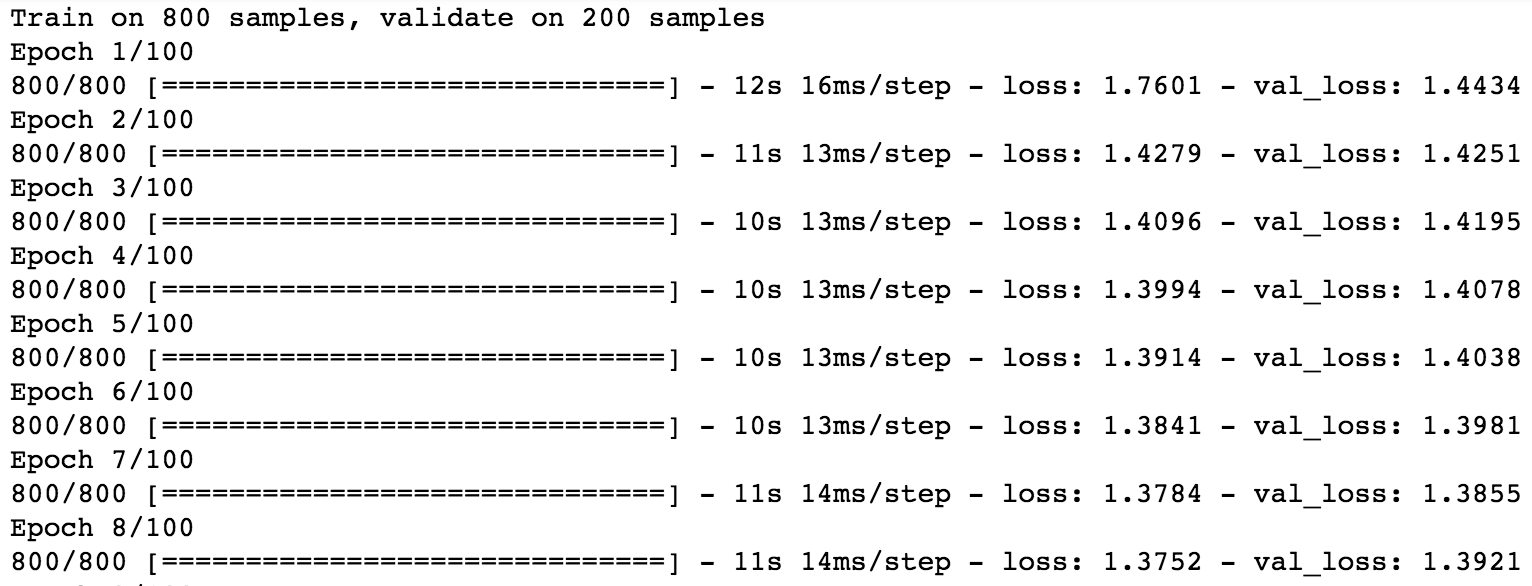

We set up the model to use 1,000 samples. We also split the data 80/20 into training and validation sets, respectively; Keras's `validation_split` takes the validation samples from the end of the arrays, before any shuffling. We set `epochs` to 100, so training makes 100 full passes over the training data. On a standard MacBook Pro, this may take around an hour to complete.
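The 80/20 split and the batch size together determine how much work one epoch involves. This sketch assumes exactly 1,000 samples survive the length filter (in practice it may be fewer) and approximates how a tail split is sized; it illustrates the arithmetic and is not Keras's own code.

```python
import math


def split_and_steps(num_samples, validation_split, batch_size):
    """Return (train size, validation size, batches per epoch) for a tail split."""
    num_train = int(num_samples * (1 - validation_split))
    num_val = num_samples - num_train
    steps_per_epoch = math.ceil(num_train / batch_size)  # last batch may be partial
    return num_train, num_val, steps_per_epoch


num_train, num_val, steps = split_and_steps(1000, 0.2, 64)
print(num_train, num_val, steps)
print(steps * 100, "weight updates over 100 epochs")
```

With a batch size of 64, each epoch is only 13 gradient updates, so 100 epochs amount to 1,300 updates in total.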

Once that cell is run, the following output will be generated:

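The next step is inference. We reuse the trained layers to build two sampling models: an encoder model that turns an input sequence into state vectors, and a decoder model that takes those states and predicts the output one character at a time: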

```python
# Next: inference mode (sampling).
# Here's the drill:
# 1) encode input and retrieve initial decoder state
# 2) run one step of decoder with this initial state
#    and a "start of sequence" token as target.
#    Output will be the next target token
# 3) Repeat with the current target token and current states

# Define sampling models
encoder_model = Model(encoder_inputs, encoder_states)

decoder_state_input_h = Input(shape=(latent_dim,))
decoder_state_input_c = Input(shape=(latent_dim,))
decoder_states_inputs = [decoder_state_input_h, decoder_state_input_c]
decoder_outputs, state_h, state_c = decoder_lstm(
    decoder_inputs, initial_state=decoder_states_inputs)
decoder_states = [state_h, state_c]
decoder_outputs = decoder_dense(decoder_outputs)
decoder_model = Model(
    [decoder_inputs] + decoder_states_inputs,
    [decoder_outputs] + decoder_states)

# Reverse-lookup token index to decode sequences back to
# something readable.
reverse_input_char_index = dict(
    (i, char) for char, i in input_token_index.items())
reverse_target_char_index = dict(
    (i, char) for char, i in target_token_index.items())
```

```python
def decode_sequence(input_seq):
    # Encode the input as state vectors.
    states_value = encoder_model.predict(input_seq)

    # Generate empty target sequence of length 1.
    target_seq = np.zeros((1, 1, num_decoder_tokens))
    # Populate the first character of target sequence with the start character.
    target_seq[0, 0, target_token_index['\t']] = 1.

    # Sampling loop for a batch of sequences
    # (to simplify, here we assume a batch of size 1).
    stop_condition = False
    decoded_sentence = ''
    while not stop_condition:
        output_tokens, h, c = decoder_model.predict(
            [target_seq] + states_value)

        # Sample a token (greedily: the most likely next character)
        sampled_token_index = np.argmax(output_tokens[0, -1, :])
        sampled_char = reverse_target_char_index[sampled_token_index]
        decoded_sentence += sampled_char

        # Exit condition: either hit max length
        # or find stop character.
        if (sampled_char == '\n' or
                len(decoded_sentence) > max_decoder_seq_length):
            stop_condition = True

        # Update the target sequence (of length 1).
        target_seq = np.zeros((1, 1, num_decoder_tokens))
        target_seq[0, 0, sampled_token_index] = 1.

        # Update states
        states_value = [h, c]

    return decoded_sentence
```

```python
for seq_index in range(100):
    # Take one sequence (part of the training set)
    # for trying out decoding.
    input_seq = encoder_input_data[seq_index: seq_index + 1]
    decoded_sentence = decode_sequence(input_seq)
    print('-')
    print('Input sentence:', input_texts[seq_index])
    print('Decoded sentence:', decoded_sentence)
```

The preceding code generates the following output:

As you can see, the results of our model are quite repetitive. However, we only used 1,000 samples, and the responses are generated one character at a time, so this is actually fairly impressive.
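One contributing factor to the repetition is that `decode_sequence` is greedy: it always takes the `np.argmax` character. If the most likely next character forms a cycle, greedy decoding repeats that cycle until it hits the length limit. The toy sketch below replaces the trained decoder with a hypothetical table of next-character probabilities (`next_char_probs`) to show the loop and its two stop conditions; it illustrates the mechanism and is not a diagnosis of the trained model.

```python
# Hypothetical next-character probabilities standing in for the trained decoder
next_char_probs = {
    '\t': {'h': 0.6, '\n': 0.4},
    'h': {'a': 0.7, '\n': 0.3},
    'a': {'h': 0.55, '\n': 0.45},
}


def greedy_decode(probs, max_len):
    """Pick the most likely next character until the stop character or max_len."""
    current, decoded = '\t', ''
    while True:
        candidates = probs[current]
        current = max(candidates, key=candidates.get)  # argmax over characters
        decoded += current
        if current == '\n' or len(decoded) > max_len:
            return decoded


print(repr(greedy_decode(next_char_probs, max_len=10)))
```

Because the stop character never wins the argmax here, the output cycles between "h" and "a" until it exceeds the maximum length, just as a weak model can loop on a common phrase.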

If you want better results, rerun the model with more sample data (a larger `num_samples`) and more `epochs`.

Here are some of the more humorous outputs I've noted from much longer training runs: