Localization can be achieved using a similar network architecture to the one we learned about in Chapter 3, Image Classification in TensorFlow.

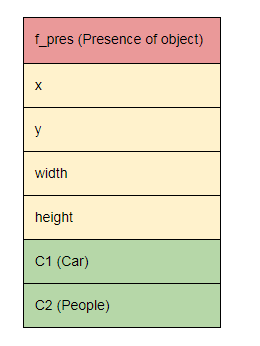

In addition to predicting the class labels, we will output a flag indicating the presence of an object and also the coordinates of the object's bounding box. The bounding box coordinates are usually four numbers representing the upper-left x and y coordinates, along with the height and width of the box.

For example, in this case, we have two classes, C1 (Car) and C2 (People), to predict. The output of our network will look something like this:

The model would work as follows:

- We feed an input image to a CNN.

- The CNN produces a feature vector that is fed to three different FC layers. Each of these different FC layers, or heads, will be responsible for predicting a different thing: Object presence, Object location, or Object class.

- Three different losses are used in training: one for each head.

- A ratio from the current training batch is calculated to weigh how much influence the classification and location loss will have, given the presence of objects. For example, if the batch has only 10% of images with objects, those losses will be multiplied by 0.1.

Note that important distinction between classification and regression is that with classification, we get discrete/categorical outputs, whereas regression provides continuous values as output. We present the model in diagram as follows:

From the diagram, we can clearly see the three heads of fully-connected layers, each outputting a different loss (presence, class, and box). The losses used are logistic regression/log loss, cross entropy/softmax loss, and Huber loss. Huber loss is a loss we haven't seen before; it is a loss used for regression that is a sort of combination of the L1 and L2 losses.

The regression loss for localization gives some measure of dissimilarity between the ground-truth bounding-box coordinates of the object in the image and the bounding-box coordinates that the model predicts. We use the Huber loss here, but various different loss functions could be used instead, such as L2, L1, or smooth L1 loss.

The classification loss and localization loss are combined and weighted by a scalar ratio. The idea here is that we are only interested in backpropagating the classification and bounding-box losses if there is an object there in the first place.

The complete loss formula for this model is as follows:

![]()