First conceived in the early 1940s as a mathematical construct, the artificial neural network was popularized in the 1980s through a method called backpropagation. Backprop, for short, lets an artificial neural network adjust the weights of every layer at each step of training by propagating the output error backward through the network. In the 1980s, limited computational power restricted how large a network could be trained and how much data it could be trained on. As computing power expanded and research grew, ML underwent a renaissance.
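As a rough illustration of how backprop adjusts the weights of each layer, here is a minimal sketch in plain Python. Everything in it is an assumption made for the example rather than something prescribed by the text: a network with one hidden layer of three sigmoid neurons, a squared-error loss, the logical OR function as training data, a learning rate of 0.5, and the helper names `init_params`, `forward`, `backprop_update`, and `train`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in, n_hidden, seed=0):
    """Small random weights for a network with one hidden layer (illustrative sizes)."""
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "w2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(p, x):
    """Return the hidden activations and the single output for input x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(p["w1"], p["b1"])]
    output = sigmoid(sum(w * h for w, h in zip(p["w2"], hidden)) + p["b2"])
    return hidden, output


def mean_squared_error(p, data):
    return sum((forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop_update(p, x, target, lr):
    """Adjust the weights of both layers in place for one training example."""
    hidden, output = forward(p, x)

    # Output layer: gradient of (output - target)^2 through the sigmoid.
    delta_out = 2.0 * (output - target) * output * (1.0 - output)

    # Hidden layer: send the output error backward through w2
    # (computed before w2 is modified below).
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(p["w2"], hidden)]

    # Gradient-descent step for every weight and bias, layer by layer.
    for j, h in enumerate(hidden):
        p["w2"][j] -= lr * delta_out * h
        p["b1"][j] -= lr * delta_hidden[j]
        for i, xi in enumerate(x):
            p["w1"][j][i] -= lr * delta_hidden[j] * xi
    p["b2"] -= lr * delta_out


def train(data, epochs=2000, lr=0.5, seed=0):
    """Run several epochs; each epoch updates the weights once per example."""
    p = init_params(n_in=len(data[0][0]), n_hidden=3, seed=seed)
    initial = mean_squared_error(p, data)
    for _ in range(epochs):
        for x, target in data:
            backprop_update(p, x, target, lr)
    return initial, mean_squared_error(p, data), p


# Toy dataset assumed for the example: the logical OR function.
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

if __name__ == "__main__":
    before, after, _ = train(OR_DATA)
    print(f"loss before: {before:.4f}, after: {after:.4f}")
```

In this sketch the weights change after every training example, so one epoch (one pass over the data) contains several updates. Real libraries usually update once per mini-batch and compute the gradients automatically.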

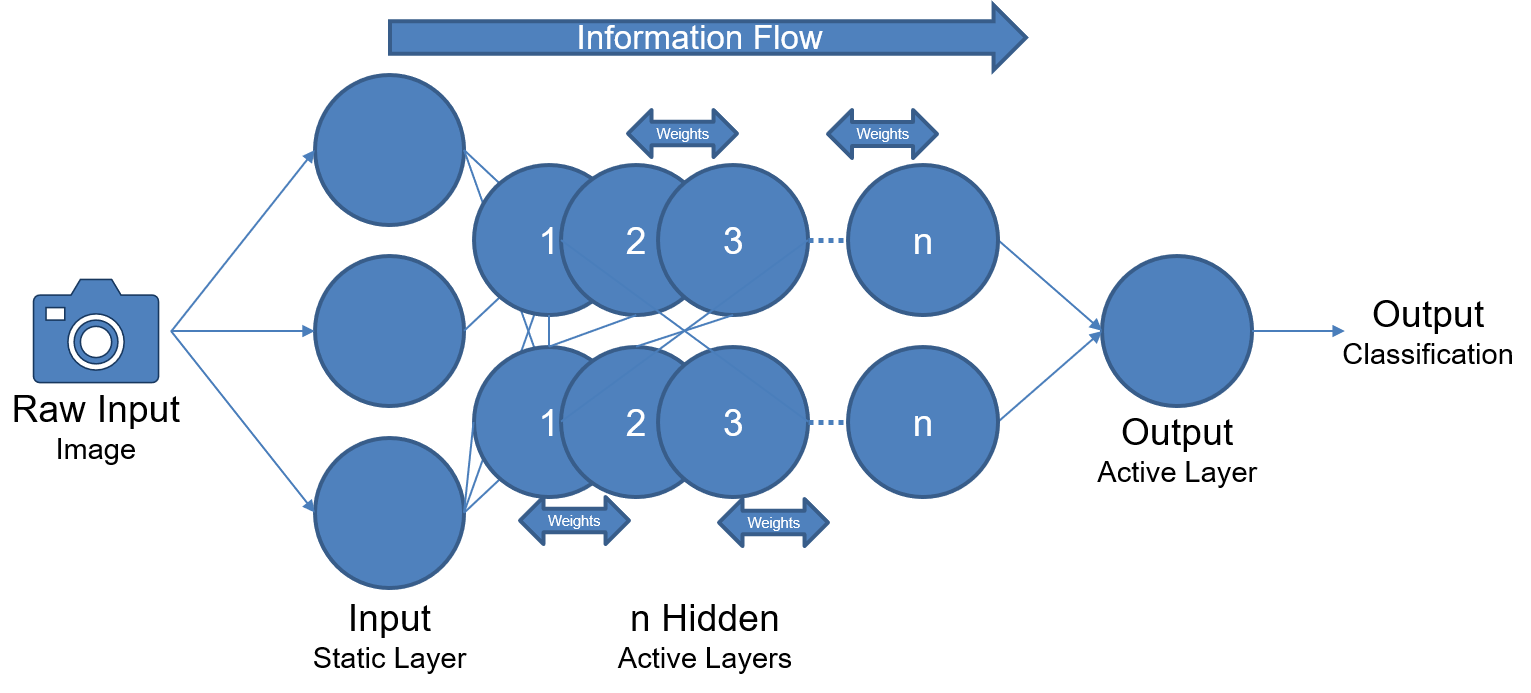

With the advent of cheap computing power, a new technique was born: deep neural networks. Utilizing the ability of GPUs to compute tensors very quickly, a few libraries have been developed to build these deep neural networks. To become a deep neural network, the basic premise is this: add four or more hidden layers between the input and output. Typically, there are thousands of neurons in the graph and the neural network has a much larger capacity to learn. This construct is illustrated in the following diagram:

This is the basic architecture of a deep neural network. There are many modifications and restructurings of this architecture, but this graph provides the right pieces to implement a deep neural network. How does all of this fit into GANs? Deep neural networks are a critical piece of the GAN architecture, as you'll see in the next section.
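To make the layered structure described above concrete, the following is a minimal sketch of a fully connected network with four hidden layers, written in plain Python. The layer sizes, the ReLU activation, the scaled Gaussian initialization, and the helper names `build_network`, `forward`, and `count_parameters` are all assumptions chosen for illustration. A practical network would be much larger and would be built with a GPU-backed library.

```python
import random


def relu(z):
    return z if z > 0.0 else 0.0


def build_network(layer_sizes, seed=0):
    """Create one (weights, biases) pair per connection between consecutive layers."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        # Scaled Gaussian initialization (an assumption, not taken from the text).
        weights = [[rng.gauss(0.0, (2.0 / n_in) ** 0.5) for _ in range(n_in)]
                   for _ in range(n_out)]
        layers.append((weights, [0.0] * n_out))
    return layers


def forward(layers, x):
    """Pass x through every layer: ReLU on hidden layers, linear on the output."""
    activation = list(x)
    for index, (weights, biases) in enumerate(layers):
        z = [sum(w * a for w, a in zip(row, activation)) + b
             for row, b in zip(weights, biases)]
        is_output = index == len(layers) - 1
        activation = z if is_output else [relu(v) for v in z]
    return activation


def count_parameters(layers):
    """Total number of trainable weights and biases."""
    return sum(len(w) * len(w[0]) + len(b) for w, b in layers)


# Illustrative sizes: 8 inputs, four hidden layers of 16 neurons each, 1 output.
LAYER_SIZES = [8, 16, 16, 16, 16, 1]

if __name__ == "__main__":
    net = build_network(LAYER_SIZES)
    print(len(net) - 1, "hidden layers,", count_parameters(net), "parameters")
    print(forward(net, [0.5] * 8))
```

Even at this toy scale the network has 977 trainable parameters. Widening each hidden layer to thousands of neurons pushes that count into the millions, which is where the larger learning capacity, and the need for GPUs, comes from.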