6

Intelligent Computation Offloading in the Context of Mobile Cloud Computing

Zeinab MOVAHEDI

Iran University of Science and Technology, Tehran, Iran

6.1. Introduction

Nowadays, thanks to progress in both the software and the hardware of mobile technologies, mobile devices, whose use is on the rise, are becoming an intrinsic part of our everyday life (Li et al. 2014). Given these advances, the role of the mobile phone has evolved from a simple communication device to an essential tool for many other applications addressing our various daily needs, such as simulation, compression/decompression, image processing, virtual reality and video games. Moreover, in response to the high expectations of mobile users, future mobile devices will run increasingly sophisticated applications. The development of these new applications is, nevertheless, restricted by the limitations of mobile devices in terms of storage space, computing power and battery lifetime (Chen et al. 2015).

On the other hand, recent advances in telecommunication networks, in terms of both data transmission throughput and the number of users supported, make it possible to offload computation- and data-intensive tasks to cloud computing and storage centers. This paradigm, known as mobile cloud computing (MCC), may give rise to a significant number of emerging applications that are either impossible given the current performance of mobile devices, or feasible but costly in processing time and battery energy, and therefore require frequent recharging of the mobile device (Abolfazli et al. 2014; Khan et al. 2014).

NOTE.– Mobile cloud computing is a new mobile technology paradigm, in which the capacities of mobile devices are extended using the resources of cloud data centers and computing.

Nevertheless, the efficiency of data and computation offloading to the cloud strongly depends on the quality of the radio connection, which varies in space and time in terms of signal quality, interference, transmission throughput, etc. This dynamic context calls for an offloading decision mechanism responsible for determining whether offloading data and computation is beneficial. The offloading benefit is generally evaluated in terms of total run time and energy consumed, taking into account the status of the underlying network (Zhang et al. 2016). Moreover, given the overlap of mobile networks such as WiMAX and LTE with wireless local area networks (WLAN) such as Wi-Fi and with femtocells, as well as the multi-homing capability of current mobile devices, the offloading decision mechanism could also select the appropriate access network for transmitting the offloaded task data (Magurawalage et al. 2015).

Moreover, as cloud service providers compete to attract customers, several of them may be active on the MCC market, leading to a multi-cloud environment for mobile users. In such an environment, the offloading decision mechanism should select the appropriate cloud provider based on its quality of service and on the cost of the computing and storage resources dedicated to the user’s request. Market prices may, however, vary with supply and demand and with inter-provider competition (Hong and Kim 2019).

This dynamic context of multi-access networks and multi-clouds requires offloading decision mechanisms to be enhanced with artificial intelligence (AI) tools, enabling multicriteria decisions that best meet the needs of mobile users in the real world. In this context, this chapter deals with various AI applications for optimizing offloading efficiency from the perspectives of mobile users, cloud providers and access network providers.

The rest of this chapter is structured as follows: section 6.2 defines the basic offloading notions; section 6.3 presents the MCC architecture in classical access networks and in cloud radio access networks (C-RAN); section 6.4 presents the offloading decision in detail; section 6.5 deals with the AI-based solutions proposed for the offloading problem; finally, section 6.6 concludes this chapter and proposes several future research directions.

6.2. Basic definitions

As previously described, computation offloading relies on cloud servers that process computations in order to optimize the run time of the mobile application and the energy consumed for task execution.

Given the mobility of the user, the dynamic nature of the radio channel and the variable cost of cloud storage and processing resources, offloading the full computation may not always be the best choice. For example, when the access network bandwidth is low, it is preferable to offload only the subpart of the task that is computation-intensive but has very light input data.

NOTE.– Offloading the entire computation may, in some situations, not lead to optimal run time and energy consumption.

Consequently, fine-grain or coarse-grain computation offloading can be done depending on the respective task characteristics and on the surrounding context (Khan et al. 2015; Wu 2018).

The two types of offloading are described in further detail in the following sections.

6.2.1. Fine-grain offloading

DEFINITION.– Fine-grain offloading involves the outsourcing of only one subpart of the original task to the cloud.

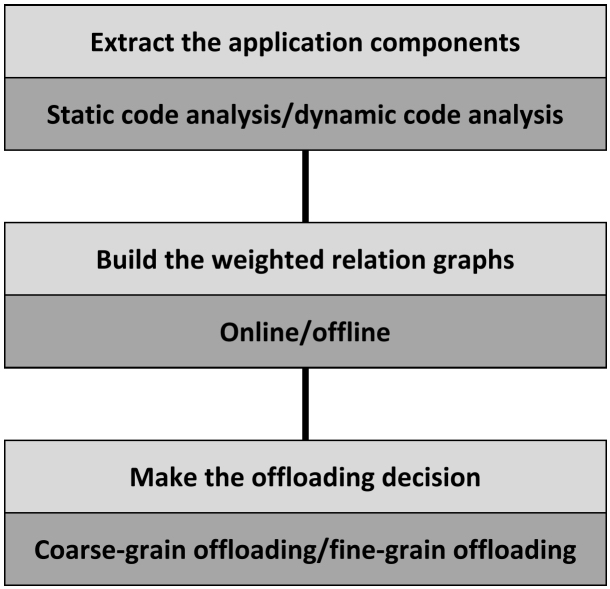

In order to determine the subpart of the task whose outsourcing optimizes the application run time and the energy consumed by the mobile device, the task components must first be extracted. This can be done at a chosen granularity level, such as class, object or thread. Whatever the granularity level, application components can be extracted through static or dynamic code analysis: static analysis extracts the components without executing the code, whereas dynamic analysis extracts them during code execution.

Static code analysis makes it possible to extract the components and build the weighted relation graph (WRG) of the application (described below) before the offloading decision is made. Consequently, the offloading decision is reached faster. Nevertheless, static analysis yields less accurate results, particularly for granularity levels at which the program components may vary from one execution to another.

EXAMPLE.– If offloading granularity is considered per object, dynamic code analysis is more appropriate for extracting the application components, as code objects can be created and destroyed depending on the conditions during the application execution.

The WRG of the application can be built from the components extracted by static or dynamic analysis. It is a graph $WRG = (V, E, W_V, W_E)$, in which each vertex $v \in V$ represents a component of the application and each edge $e \in E$ describes the invocation between its two endpoint components. Each $w_v \in W_V$ and $w_e \in W_E$ represents, respectively, the weight of a vertex $v$ and of an edge $e$ of the graph: a vertex is weighted by the number of instructions in the corresponding component, and an edge by the amount of data transmitted between its two adjacent components.
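A WRG can be held in an ordinary adjacency structure. The following Python sketch is only an illustration: the class name `WRG`, its methods and the example components `main`, `decode` and `filter` are hypothetical, vertex weights are instruction counts and edge weights are data amounts in arbitrary units, and edges are assumed to be directed from the invoking component to the invoked one.

```python
from dataclasses import dataclass, field


@dataclass
class WRG:
    """Weighted relation graph WRG = (V, E, W_V, W_E).

    vertex_weight maps each component (vertex) to its number of instructions;
    edge_weight maps a directed invocation (caller, callee) to the data it carries.
    """
    vertex_weight: dict = field(default_factory=dict)
    edge_weight: dict = field(default_factory=dict)

    def add_component(self, name, instructions):
        self.vertex_weight[name] = instructions

    def add_invocation(self, caller, callee, data):
        # Both endpoints must already be registered as components.
        if caller not in self.vertex_weight or callee not in self.vertex_weight:
            raise KeyError(f"unknown component in {caller!r} -> {callee!r}")
        self.edge_weight[(caller, callee)] = data


# Hypothetical application: main invokes decode, which invokes filter.
g = WRG()
g.add_component("main", 2_000)
g.add_component("decode", 50_000)
g.add_component("filter", 120_000)
g.add_invocation("main", "decode", 800)
g.add_invocation("decode", "filter", 300)
```

Keeping vertex and edge weights in separate dictionaries mirrors the $W_V$ and $W_E$ sets of the definition and makes the weights directly available to the cost models of section 6.4.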

An example of application code and the corresponding WRG are illustrated in Figure 6.1.

Figure 6.1. Conversion of an application code into a weighted relation graph (Movahedi 2018)

Based on the WRG, the fine-grain offloading decision algorithm should determine the components of the application whose offloading ensures optimal run time and energy consumption. This is a multicriteria decision whose efficiency depends on its context awareness, including the underlying radio and cloud environments.

Figure 6.2 illustrates the conceptual model describing the various stages required for making the fine-grain offloading decision.

Figure 6.2. Stages of offloading decision

6.2.2. Coarse-grain offloading

Despite the efficiency of fine-grain offloading, some works prefer either to offload the entire application or not to offload it at all. This approach, known as “coarse-grain offloading”, is motivated by its decision simplicity compared to fine-grain offloading.

DEFINITION.– Coarse-grain offloading involves outsourcing the execution of an entire program to the cloud.

NOTE.– Coarse-grain offloading is beneficial especially when a program cannot be divided into several components.

Despite the rapid decision it enables, coarse-grain offloading forgoes the benefits of offloading subparts of the application, namely reductions in application computing time and in mobile device battery consumption.

To address this problem, certain works divide the application into several subtasks that are not interrelated. This enables making the appropriate decision for each component of the application without having to manage the impact of invocations between components. If these works are taken into account, coarse-grain offloading could be redefined as follows:

DEFINITION.– Coarse-grain offloading is more generically defined as individual offloading of program tasks. As a result, the application is either considered in its entirety or as several independent components.

Coarse-grain offloading of several components of an application preserves the simplicity of the offloading decision while benefiting from an individual decision for each component of the program, thus improving the resulting efficiency. Nevertheless, dividing the program into several unrelated components is complicated and depends on the offloading granularity, the functionalities of the components extracted at this granularity, and the characteristics and connections of these components. It may sometimes be necessary to aggregate several related components into a single combined component.

A further possibility is to extract the components of the application as usual, at the chosen granularity, but to route every connection between two components through the application’s main program, which necessarily runs on the mobile device. In other words, each component takes its input parameters from the mobile device and returns its outputs to it; the mobile device then acts as an intermediary to invoke the connected component.

This approach converts the WRG into a bidirectional star graph. The main component plays the role of the central vertex of the star graph, and the other application components are the surrounding vertices, each connected to the central vertex by an input edge and an output edge. The input edge of a component represents the invocation(s) of this component by one or several other components, with the main component acting as intermediary. It is weighted by the sum of the weights of the WRG edges through which other components invoke this component.

Similarly, the output edge of a component represents the output results that this component transmits to the main component, to be forwarded to the related component(s). The weight of the output edge is the sum of the weights of the WRG edges through which other components receive an input from this component.
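The star-graph construction can be sketched in a few lines of Python. This is a hypothetical illustration, not the author’s implementation: it takes the WRG as two plain dictionaries (vertex weights and directed edge weights, with edges pointing from caller to callee), assumes that the input edge of a component is weighted by the total data of the WRG edges entering it, mirroring the rule stated for output edges, and names the central vertex `main`.

```python
def to_star_graph(vertex_weight, edge_weight, main="main"):
    """Map each non-main component to (input_edge_weight, output_edge_weight)."""
    star = {}
    for comp in vertex_weight:
        if comp == main:
            continue
        # Input edge: total data of the WRG edges that invoke this component.
        w_in = sum(w for (_, dst), w in edge_weight.items() if dst == comp)
        # Output edge: total data of the WRG edges that carry this component's outputs.
        w_out = sum(w for (src, _), w in edge_weight.items() if src == comp)
        star[comp] = (w_in, w_out)
    return star


vertices = {"main": 2_000, "decode": 50_000, "filter": 120_000}
edges = {("main", "decode"): 800, ("decode", "filter"): 300}
star = to_star_graph(vertices, edges)
```

For this hypothetical chain, `decode` receives an input edge of weight 800 and an output edge of weight 300, while `filter` receives 300 and 0; the vertex weights themselves are unchanged by the conversion.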

REFRESHER.– A star graph is a connected graph in which all vertices but one have degree 1. It can also be seen as a tree with one internal node and k leaves (for k > 1).

Figure 6.3 illustrates the weighted star graph corresponding to the relation graph in Figure 6.1.

Figure 6.3. Star graph

6.3. MCC architecture

In order to clarify the offloading decision ecosystem, this section describes the generic architecture of MCC, and then the MCC architecture based on cloud radio access networks (C-RAN).

6.3.1. Generic architecture of MCC

As illustrated in Figure 6.4, the generic architecture of MCC is composed of five basic elements, namely the mobile device, the access network, the backhaul and backbone network, the cloud and the offloading decision middleware. A detailed presentation of each of these elements is given below.

Figure 6.4. Generic architecture of MCC (Gupta and Gupta 2012)

6.3.1.1. Mobile device

The mobile device is a wireless portable device executing computation-intensive tasks. Smartphones, laptops, personal digital assistants (PDA), wearable devices, sensors and embedded systems (such as RFID readers and biometric readers) are examples of mobile devices in charge of computation-intensive tasks. It is worth noting that mobile devices are, by their nature, characterized by limited battery lifetime and computing power.

6.3.1.2. Access network

The role of the radio access network is to transmit the input data of the application components to be outsourced, and to return the final result of the cloud computation. Input data are sent to the cloud over the uplink, while the final result of the component processing is returned to the mobile device over the downlink of the access network. The characteristics of the radio connection in terms of throughput, interference and channel stability greatly influence processing time and transmission energy. It is, however, difficult to determine these characteristics in advance for the whole transmission duration, given their strong variation in time and space. This variation stems from their dependence on other factors, such as the position of the mobile user with respect to the access point, the surrounding sources of interference, the number of users in the cell and the users’ transmission power.

6.3.1.3. Backhaul and backbone networks

The backhaul network connects the base station or access point to the core network (or backbone network), through which the cloud can be reached. The backhaul network can be wired or wireless, while the core network is generally wired and Internet-based. Since, after reaching the access point, the input and output data must also be transported over the backhaul and backbone networks, the quality of service offered by these two networks is among the important parameters influencing offloading efficiency.

6.3.1.4. Cloud

The cloud is a powerful remote computing and storage center, accessible through the Internet. Although the cloud resources provisioned by large providers on the Internet, here referred to as the “distant cloud”, offer considerable computing power and enormous storage space, transporting data over the backhaul and backbone connections adds transmission time, increasing the overall time required to complete a task remotely.

To address this problem, the edge cloud concept emerged, in which processing and storage resources are brought close to the mobile user (Magurawalage et al. 2015; Mach and Becvar 2017). Although the resources of the edge cloud may not be as rich as those of the distant cloud, the edge cloud reduces the offloading delay and improves processing agility by increasing the end-to-end bandwidth and sharing local resources (Zhang et al. 2016; Jiang et al. 2019).

A mobile form of the edge cloud, composed of nearby mobile devices, is also possible in order to take advantage of external resources when the connection to the access point is poor or unavailable (Zhang et al. 2015). This mobile cloud, also known as an “ad hoc cloud” or a “D2D (device-to-device) cloud”, is beneficial not only because it does not depend on the availability or quality of the wireless connection at the access point, but also because of the high transmission throughput available among local devices and the savings on radio resource costs. Obviously, the resources made available by the D2D cloud are well below those offered by the distant cloud or the edge cloud.

6.3.1.5. Offloading decision middleware

The offloading decision middleware is the software element of the MCC architecture responsible for the offloading decision. Physically, it can be located on the mobile device or outsourced, for example to the access point, the cloud or a third-party node dedicated to the offloading decision.

NOTE.– The decision middleware can be implemented inside or outside the mobile device.

6.3.2. C-RAN-based architecture

In this approach, the classical radio access network is replaced by C-RAN, which, among other benefits, allows the offloading decision to be enriched with knowledge available in the cloud of the radio network.

REFRESHER.– C-RAN is a new network architecture in which baseband and channel processing are outsourced to a pool of centralized baseband units (BBUs) located in the cloud. Consequently, the wireless antenna, known as the remote radio head (RRH), acts only as a relay that compresses the signals received from user equipment and forwards them to the BBU pool over fronthaul links.

Since C-RAN already provides a cloud dedicated to radio communications, the offloading decision could also be executed in the C-RAN. The decision would then run on nearby servers with abundant resources. Moreover, it could take advantage of the information collected by the C-RAN about the access networks the user visits while moving (characteristics of the visited networks, etc.). This contextual knowledge would support more realistic decisions and thus optimize the run time and the energy consumed by the application.

6.4. Offloading decision

This section first presents the various models for the positioning of the offloading decision middleware. Then, it describes the decision variables and the offloading modeling.

6.4.1. Positioning of the offloading decision middleware

This section describes the various MCC architectures from the perspective of where the offloading decision is made. The positioning of the decision middleware matters because it determines how complex the offloading decision algorithms can afford to be.

6.4.1.1. Middleware embedded in the mobile device

In this architecture, whose representation is similar to the one illustrated in Figure 6.4, the offloading decision middleware is embedded in the mobile device. The main advantage of this approach is that offloading is fully independent of any third party as long as the access network and the cloud are accessible. This approach, however, requires lightweight decision algorithms that can run within acceptable times on mobile devices with limited computing power.

6.4.1.2. Outsourced middleware

In this second architectural approach, the offloading decision middleware is implemented outside the mobile device, most often in the edge cloud or on a server that is close to the mobile user. In the C-RAN-based architecture, the decision middleware can also be implemented in the BBU pool (Cai et al. 2016). Important decision parameters such as radio network and cloud characteristics should therefore be transmitted to the decision middleware.

Two models of outsourced decision architecture can be distinguished. In the first, only the offloading decision is assigned to the remote middleware; the decision is then returned to the mobile device, which applies it, and the application components chosen for offloading are transmitted from the mobile device to the cloud. In the second model, besides the WRG of the application, the program code is also transmitted to the middleware, in the form of a virtual machine (VM) or container, so that the middleware can apply its decision directly.

NOTE.– In the outsourced decision architecture, the application components can thus be transmitted to the decision middleware, which can then apply the decision itself. This avoids returning the decision to the mobile device before the application starts executing according to it.

Whichever model is used to apply the decision, the main advantage of this architecture is the computing power of the external middleware compared to that of the mobile device. This enables the use of more sophisticated decision algorithms without losing decision agility. However, outsourcing the decision to a remote server introduces an additional delay for transmitting the decision parameters to the middleware.

6.4.2. General formulation

The formulation of the offloading problem depends on whether one or several cloud sites, and one or several access networks, are available for offloading. Multi-site offloading can use several clouds, such as the distant cloud, the edge cloud, the D2D cloud or a combination of them. Moreover, in a multi-access context, several access networks of macro or micro type are accessible. Combining these two dimensions yields four contexts: single-access single-site, single-access multi-site, multi-access single-site and multi-access multi-site.

In what follows, this section considers the most general case of a multi-access multi-site context and proposes a generic formulation applicable to all four offloading environments.

6.4.2.1. Offloading decision variables

In the multi-access multi-site context, the offloading decision variables are defined according to the problems the decision has to solve. These problems are as follows:

- – for each application component, determine whether it should be run locally or remotely in order to reach an optimal result (“how to run”). Given $C = \{c_1, c_2, \ldots, c_N\}$, the set of components of the application, where $N = |C|$, the solution to this problem is a set $P = \{p_1, p_2, \ldots, p_N\}$ of size $N$, where each element $p_i \in \{0, 1\}$ is 0 (respectively, 1) if the corresponding component $c_i$ is to be run locally (respectively, offloaded). The representation of the set $P$ is the same for coarse-grain offloading, where $N = |C| = |P| = 1$;

- – for each component to be offloaded, determine the cloud where it should be executed (“where to execute”). Given $M = \{m_1, m_2, \ldots, m_K\}$, the set of cloud sites accessible for computation offloading, where $K = |M|$, the solution to this problem is a set $Q = \{q_1, q_2, \ldots, q_N\}$, in which each element $q_i \in M$ is the execution cloud chosen for component $c_i$. As for the previous problem, the representation of $Q$ is the same for coarse-grain offloading, where $|Q| = 1$;

- – for the components to be offloaded, determine the access network through which their input data should be transmitted (“through which network to transmit”). Given $F = \{f_1, f_2, \ldots, f_L\}$, the set of access points available at the moment of data transmission, where $L = |F|$, the solution to this problem is a variable $f \in F$ designating the access network used to offload the components chosen for execution in the cloud.

To minimize the number of decision variables, the sets $P$ and $Q$ can be merged into a single set $X = \{x_1, x_2, \ldots, x_N\}$, in which each element $x_i \in \{local, m_1, m_2, \ldots, m_K\}$ represents the execution site of component $c_i$, whether on the mobile device or in one of the accessible clouds. An offloading solution $S$ can then be described by the pair $(X, f)$, in which each decision variable $x_i$ is the execution site of the $i$th component of the application and $f$ is the access point through which the offloading data should be transmitted.
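Representing a solution as the pair (X, f) makes the size of the search space explicit. The Python sketch below, with hypothetical component, cloud and access-point names and a hypothetical function `enumerate_solutions`, enumerates every candidate pair by brute force; with N components, K clouds and |F| access points it yields (K + 1)^N × |F| candidates, which is why exhaustive enumeration is only practical for very small applications.

```python
from itertools import product


def enumerate_solutions(components, clouds, access_points):
    """Yield every candidate offloading solution S = (X, f)."""
    sites = ["local", *clouds]  # x_i takes values in {local, m_1, ..., m_K}
    for placement in product(sites, repeat=len(components)):
        X = dict(zip(components, placement))  # component -> execution site
        for f in access_points:
            yield X, f


solutions = list(enumerate_solutions(["c1", "c2", "c3"], ["m1", "m2"], ["f1", "f2"]))
print(len(solutions))  # 3**3 * 2 = 54
```

A refinement would skip the choice of f for placements that keep every component local, since no data is then offloaded.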

6.4.2.2. Objective function of the offloading problem

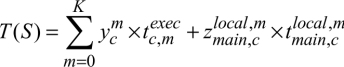

Independently of the offloading granularity, the objective of the offloading decision is to find the offloading solution that optimizes the application execution cost. This cost is generally expressed in terms of application execution time and energy consumption, although other criteria, such as price and security, are also worth considering. The general objective function of offloading is given by equation [6.1]:

$$S^{*} = \underset{S}{\arg\min}\; \mathrm{cost}(S) \qquad [6.1]$$

where:

- – $S = (X, f)$: offloading solution, detailed in section 6.4.2.1;

- – $\mathrm{cost}(S)$: cost function of the offloading solution $S$.

REFRESHER.– The argument of the minimum, denoted by “arg min”, is the set of points at which an expression reaches its minimum value. In mathematical notation, for a function $f: X \to Y$, where $Y$ is a totally ordered set, arg min is defined by:

$$\underset{x \in X}{\arg\min}\, f(x) = \{\, x \in X \mid \forall y \in X,\ f(x) \le f(y) \,\}$$

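The cost function is defined as the weighted sum of the normalized application execution time, on the one hand, and the normalized energy consumption, on the other hand, as described by equation [6.2]:

$$\mathrm{cost}(S) = w_t\, \frac{T(S)}{T(S_{local})} + (1 - w_t)\, \frac{E(S)}{E(S_{local})}, \qquad 0 \le w_t \le 1 \qquad [6.2]$$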

where:

- – $T(S)$ and $E(S)$ are, respectively, the execution time and the energy consumption resulting from solution $S$;

- – $T(S_{local})$ and $E(S_{local})$ are, respectively, the time and the energy consumption required by the fully local execution of the application.

These two latter terms normalize time and energy so that they can be summed in equation [6.2]. The coefficient $w_t$ measures the relative importance of the application execution time with respect to energy consumption in the total cost of an offloading solution $S$. Its value can be set according to user preferences and application needs.
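The normalized cost of equation [6.2] translates directly into a small function. The sketch below assumes that energy is weighted by $1 - w_t$ with $0 \le w_t \le 1$; the function name `offloading_cost` and the numbers in the usage line are illustrative only.

```python
def offloading_cost(t, e, t_local, e_local, w_t):
    """Normalized cost of an offloading solution, following [6.2]."""
    if not 0.0 <= w_t <= 1.0:
        raise ValueError("w_t must lie in [0, 1]")
    # Time and energy are normalized by the fully local execution.
    return w_t * t / t_local + (1.0 - w_t) * e / e_local


print(offloading_cost(t=2.0, e=3.0, t_local=4.0, e_local=6.0, w_t=0.5))  # 0.5
```

A solution that halves both time and energy relative to local execution scores 0.5 whatever the value of $w_t$, while fully local execution always scores 1, so any solution below 1 is an improvement.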

6.4.3. Modeling of offloading cost

6.4.3.1. Fine-grain offloading cost

The modeling of fine-grain offloading cost requires a distinction between two execution modes of program components: serial execution and parallel execution. In the serial execution mode, the application components are executed one after the other in an appropriate order, considering their granularity levels and input data. In the parallel execution mode, the components whose input data are not interdependent can be executed in parallel. For simplicity, the rest of this chapter focuses on the serial execution mode.

Based on this principle, the time cost of fine-grain offloading is the sum of the time consumed for (1) executing the application components at their chosen execution sites and (2) transmitting input data between connected components. The offloading cost in terms of execution time can therefore be modeled as indicated in equation [6.3]:

$$T(S) = \sum_{c \in C} \sum_{m \in M'} \alpha_{c,m}\, T^{exe}_{c,m} + \sum_{(c,c') \in E} \sum_{m \in M'} \sum_{m' \in M'} \beta_{c,c',m,m'}\, T^{inv}_{c,c',m,m'} \qquad [6.3]$$

where:

- – $M' = \{local, m_1, m_2, \ldots, m_K\}$: set of possible execution sites;

- – $T^{exe}_{c,m}$: time for the execution of component $c$ at site $m$;

- – $T^{inv}_{c,c',m,m'}$: time for the invocation between two components $c$ and $c'$ executed at sites $m$ and $m'$, respectively.

The binary variables $\alpha_{c,m}$ and $\beta_{c,c',m,m'}$ are defined by the following conditions, where $x_c$ denotes the execution site assigned to component $c$ in $X$:

$$\alpha_{c,m} = \begin{cases} 1 & \text{if } x_c = m \\ 0 & \text{otherwise} \end{cases} \qquad [6.4]$$

$$\beta_{c,c',m,m'} = \begin{cases} 1 & \text{if } x_c = m \text{ and } x_{c'} = m' \\ 0 & \text{otherwise} \end{cases} \qquad [6.5]$$

In equation [6.3], the time for the execution of component $c$ at site $m$ is defined by:

$$T^{exe}_{c,m} = \frac{W_c}{S_m} \qquad [6.6]$$

where:

- – $W_c$: workload of component $c$ (represented by the weight of vertex $c$ in the WRG);

- – $S_m$: CPU processing speed offered by site $m$.

The time of invocation between two components $c$ and $c'$ executed at sites $m$ and $m'$ is represented by equation [6.7]:

$$T^{inv}_{c,c',m,m'} = \frac{d_{c,c'}}{B_{m,m'}} \qquad [6.7]$$

with:

- – $d_{c,c'}$: amount of data transmitted between components $c$ and $c'$ (represented by $w_{cc'}$ in the WRG);

- – $B_{m,m'}$: bandwidth of the network connecting sites $m$ and $m'$.

The offloading energy is calculated from the standpoint of the mobile device. Consequently, the energy spent executing a component in the cloud is not taken into account when modeling the energy cost. Offloading energy is modeled by equation [6.8]:

$$E(S) = \sum_{c \in C} \alpha_{c,local}\, E^{exe}_{c} + \sum_{(c,c') \in E} \sum_{m \in M'} \sum_{m' \in M'} \beta_{c,c',m,m'}\, E^{inv}_{c,c',m,m'} \qquad [6.8]$$

with:

- – $E^{exe}_{c}$: energy dedicated to the execution of component $c$ on the mobile device;

- – $E^{inv}_{c,c',m,m'}$: energy dedicated to the transmission between components $c$ and $c'$ executed at sites $m$ and $m'$.

These two parameters are defined as follows:

$$E^{exe}_{c} = e^{cpu}\, W_c \qquad [6.9]$$

$$E^{inv}_{c,c',m,m'} = \begin{cases} P^{tx}\, T^{inv}_{c,c',m,m'} & \text{if } m = local \text{ and } m' \neq local \\ P^{rx}\, T^{inv}_{c,c',m,m'} & \text{if } m \neq local \text{ and } m' = local \\ 0 & \text{otherwise} \end{cases} \qquad [6.10]$$

with:

- – $e^{cpu}$: CPU energy consumption of the mobile device per processing unit;

- – $P^{tx}$ and $P^{rx}$: transmission power and reception power of the mobile device antenna.
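The serial time and energy models can be evaluated together for a given placement. The Python sketch below is a hypothetical illustration with names of our own choosing (`time_and_energy`, the site label `"local"`); it assumes that an edge (c, c') carries data from c to c', and that invocations between two components placed on the same site cost neither network time nor radio energy.

```python
def time_and_energy(X, workload, edge_data, speed, bandwidth, e_cpu, p_tx, p_rx):
    """Return (T(S), E(S)) for a serial fine-grain placement X.

    X: component -> site ('local' or a cloud name); workload: W_c;
    edge_data: (c, c') -> d_{c,c'}; speed: site -> S_m;
    bandwidth: (m, m') -> B_{m,m'}.
    """
    # Execution terms: every component runs once at its site, and only
    # components kept on the mobile device cost device energy.
    t = sum(workload[c] / speed[X[c]] for c in X)
    e = sum(e_cpu * workload[c] for c in X if X[c] == "local")
    # Invocation terms: data transfer between components on different sites.
    for (c, c2), d in edge_data.items():
        m, m2 = X[c], X[c2]
        if m == m2:
            continue  # assumption: same-site invocations are free
        dt = d / bandwidth[(m, m2)]
        t += dt
        if m == "local":        # device uploads the data
            e += p_tx * dt
        elif m2 == "local":     # device downloads the data
            e += p_rx * dt
        # cloud-to-cloud transfers consume no mobile-device energy
    return t, e


workload = {"a": 100, "b": 400}
edges = {("a", "b"): 50}
speed = {"local": 100, "m1": 400}
bw = {("local", "m1"): 25, ("m1", "local"): 25}
params = dict(e_cpu=0.25, p_tx=0.5, p_rx=0.25)
offloaded = time_and_energy({"a": "local", "b": "m1"}, workload, edges, speed, bw, **params)
all_local = time_and_energy({"a": "local", "b": "local"}, workload, edges, speed, bw, **params)
```

In this example, placing `b` on cloud `m1` gives T = 1 + 1 + 2 = 4 time units and E = 25 + 1 = 26 energy units, against T = 5 and E = 125 for fully local execution; the two pairs can then be fed into the normalized cost of equation [6.2].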

6.4.3.2. Coarse-grain offloading cost

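Coarse-grain offloading cost can be modeled as a special case of fine-grain offloading with a single component $c$, which receives its input data $d^{in}_c$ from the mobile device and returns its output data $d^{out}_c$ to it. Its time cost is represented as follows:

$$T(S) = \alpha_{c,local}\, \frac{W_c}{S_{local}} + \sum_{m \in M} \alpha_{c,m} \left( \frac{d^{in}_c}{B_{local,m}} + \frac{W_c}{S_m} + \frac{d^{out}_c}{B_{m,local}} \right) \qquad [6.11]$$

Similarly, the offloading energy cost is modeled as follows:

$$E(S) = \alpha_{c,local}\, e^{cpu}\, W_c + \sum_{m \in M} \alpha_{c,m} \left( P^{tx}\, \frac{d^{in}_c}{B_{local,m}} + P^{rx}\, \frac{d^{out}_c}{B_{m,local}} \right) \qquad [6.12]$$

where $\alpha_{c,m}$ equals 1 if the component is executed at site $m$ and 0 otherwise, and $W_c$, $S_m$, $B_{m,m'}$, $e^{cpu}$, $P^{tx}$ and $P^{rx}$ are, respectively, the workload, the CPU speed of a site, the bandwidth between two sites, the CPU energy per processing unit, and the transmission and reception powers of the mobile device antenna, as in the fine-grain model.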
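Because a coarse-grain decision involves only one component, it can be taken by simply evaluating every site. The hypothetical Python function below assumes that the input data is uploaded before remote execution and the output data downloaded after it, and ranks the sites with the normalized cost of equation [6.2], weighting energy by $1 - w_t$; all parameter names are illustrative.

```python
def coarse_grain_decision(W, d_in, d_out, speed, bw_up, bw_down,
                          e_cpu, p_tx, p_rx, w_t):
    """Return (best_site, normalized_cost) for a single-component program."""
    t_local = W / speed["local"]
    e_local = e_cpu * W
    best_site, best_cost = "local", 1.0  # local execution has cost 1 by normalization
    for m, s in speed.items():
        if m == "local":
            continue
        t_up, t_down = d_in / bw_up[m], d_out / bw_down[m]
        t = t_up + W / s + t_down           # upload, remote run, download
        e = p_tx * t_up + p_rx * t_down     # device pays only for the radio
        cost = w_t * t / t_local + (1 - w_t) * e / e_local
        if cost < best_cost:
            best_site, best_cost = m, cost
    return best_site, best_cost


speed = {"local": 100, "m1": 1000}
fast = coarse_grain_decision(1000, 20, 10, speed, {"m1": 10}, {"m1": 10},
                             e_cpu=1, p_tx=1, p_rx=0.5, w_t=0.5)
slow = coarse_grain_decision(1000, 20, 10, speed, {"m1": 0.1}, {"m1": 0.1},
                             e_cpu=1, p_tx=1, p_rx=0.5, w_t=0.5)
```

With a fast link the program is offloaded to `m1` (normalized cost 0.20125), whereas a link one hundred times slower makes transmission dominate and the decision falls back to local execution.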

6.5. AI-based solutions

The previously described fine-grain offloading problem is NP-hard (Wang et al. 2015; Wu et al. 2016), so no polynomial-time algorithm is known for finding its optimal solution. Consequently, optimal algorithms and their AI-enhanced variants are applicable only to small scenarios with a limited number of decision variables. Non-optimal AI-based solutions, such as heuristics and metaheuristics, are used for larger scenarios. Commonly used metaheuristics include simulated annealing, tabu search, evolutionary algorithms and ethology-based algorithms. The following sections present several AI-based optimization algorithms for solving this problem.

6.5.1. Branch and bound algorithm

Branch and bound (B&B) is an exact algorithm for solving combinatorial optimization problems. Several AI-based techniques have been developed to reduce the time it needs to compute the optimal solution.

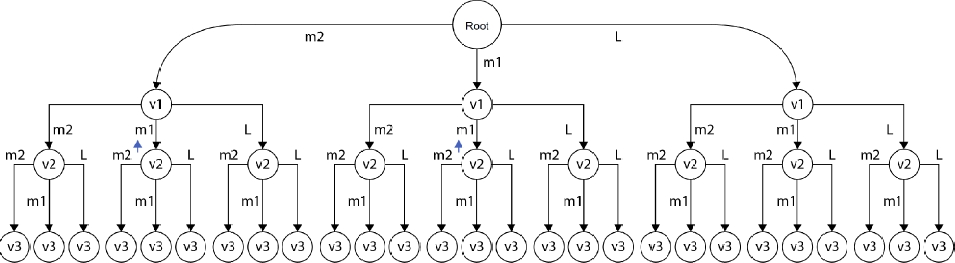

In the offloading solutions based on this algorithm, the WRG of the application is first transformed into a tree representing all possible combinations of execution sites for the WRG vertices. The tree is built from an empty root. The first component of the application is attached to this root in as many copies as there are possible execution sites, so that each node at this level represents the first component labeled with one execution site. Similarly, the second component is attached to each of these children in as many copies as there are execution sites. This process is repeated until all the WRG vertices have been added to the tree. Each root-to-leaf branch therefore represents a potential solution to the offloading problem. Each copy of a component in the tree carries the weight of the corresponding WRG vertex. The edge between a node and its parent carries the weight of the corresponding WRG edge if the two components are connected in the WRG, and zero otherwise. Figure 6.5 illustrates the tree corresponding to a WRG with three vertices in a context with three possible execution sites (local site, Cloud m1 and Cloud m2).

In the evaluation phase, the branches are explored with a depth-first search strategy. As a branch is explored, its cost is calculated progressively using the cost function of equation [6.2]. To accelerate the exploration, a branch can be cut as soon as its partial cost exceeds the bounding value. In our offloading decision problem, the bounding value is initialized to the minimum among the cost of executing all the components locally and the costs of executing them all on any single cloud site. When a branch is fully explored without being cut, the bounding value is replaced by the cost of the solution it represents.

To accelerate the resolution further, some B&B-based solutions propose optimizations that cut inappropriate branches earlier. For example, the solution proposed by Goudarzi et al. (2016) places the vertices with larger weights higher in the tree, so that inappropriate branches are cut earlier. It is also possible to initialize the bounding value with the cost of a solution found by a heuristic or metaheuristic.

Figure 6.5. B&B tree

6.5.2. Bio-inspired metaheuristics algorithms

To solve the offloading decision problem in polynomial time, some works rely on evolutionary metaheuristic algorithms, such as the genetic algorithm. The rest of this section describes the general idea behind the works relying on this type of solution.

First, the initial population is generated from a number of potential solutions. Each solution is described by a chromosome composed of a set of genes, where each gene represents a component of the application labeled with an execution site. The initial population is generally generated randomly. Nevertheless, in some of the proposed optimizations, the initial population also contains chromosomes in which all the genes are labeled with the same execution site. Figure 6.6 illustrates an example chromosome.
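A minimal sketch of this encoding and of the initial population follows. It assumes that a chromosome is a list in which gene `i` holds the execution site of component `i`; the function name `initial_population` and the `seed_uniform` flag are hypothetical.

```python
import random
from typing import List


def initial_population(n_components: int, sites: List[str], size: int,
                       rng: random.Random, seed_uniform: bool = True) -> List[List[str]]:
    """Chromosome = list of sites; gene i is the execution site of component i."""
    population: List[List[str]] = []
    if seed_uniform:
        # Optional optimization: one chromosome per site with every gene on that site.
        population.extend([site] * n_components for site in sites)
    while len(population) < size:
        # Remaining chromosomes are drawn at random.
        population.append([rng.choice(sites) for _ in range(n_components)])
    return population[:size]
```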

Figure 6.6. Chromosome

The algorithm produces a new generation from a given population using selection and reproduction processes, which improve the population at each iteration. The selection process generally relies on a fitness function, which measures how well a solution meets the target objective. For our offloading decision problem, the fitness function corresponds to the cost function given by equation [6.2], which is to be minimized. Consequently, each chromosome is selected with a probability inversely proportional to its cost as calculated from equation [6.2].
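Selection with a probability inversely proportional to cost can be sketched as a roulette wheel over `1 / cost`. The costs are assumed to be strictly positive values already computed with equation [6.2]; the function names are hypothetical.

```python
import random
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def selection_probabilities(costs: Sequence[float]) -> List[float]:
    """Probability of each chromosome, inversely proportional to its cost (> 0)."""
    inverse = [1.0 / c for c in costs]
    total = sum(inverse)
    return [x / total for x in inverse]


def select_parents(population: Sequence[T], costs: Sequence[float],
                   k: int, rng: random.Random) -> List[T]:
    """Draw k parents (with replacement) using the probabilities above."""
    return rng.choices(population, weights=selection_probabilities(costs), k=k)
```

For costs 1, 2 and 4, the selection probabilities are 4/7, 2/7 and 1/7: halving the cost doubles the chance of being selected.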

After the selection phase, crossover and mutation functions are applied to the selected chromosomes to reproduce a new generation. In most works based on genetic algorithms, the crossover of parents relies on cut-and-exchange methods. Genes are chosen for mutation by a roulette-wheel selection function, which assigns a cost (fitness) to each gene of a chromosome.
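A common cut-and-exchange method is single-point crossover, sketched below under the list-of-sites encoding assumed earlier (gene `i` is the site of component `i`). The cited works may use other cut-and-exchange variants; `single_point_crossover` is a hypothetical name.

```python
import random
from typing import List, Tuple


def single_point_crossover(parent_a: List[str], parent_b: List[str],
                           rng: random.Random) -> Tuple[List[str], List[str]]:
    """Cut both parents at the same random point and exchange their tails."""
    if len(parent_a) != len(parent_b) or len(parent_a) < 2:
        raise ValueError("parents must have the same length (at least 2 genes)")
    cut = rng.randint(1, len(parent_a) - 1)  # cut strictly inside the chromosome
    return parent_a[:cut] + parent_b[cut:], parent_b[:cut] + parent_a[cut:]
```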

The fitness of a gene is calculated as the cost of the gene divided by the cost of its chromosome. Consequently, the gene with the highest cost has the highest probability of being chosen for mutation. The selected gene is mutated by randomly changing its assigned execution site.
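The roulette-wheel mutation can be sketched as follows. The per-gene cost is assumed here to be the execution cost of the component on its assigned site, and the chromosome cost is assumed to be the sum of its gene costs; the source does not specify either, so both `exec_cost` and the function names are hypothetical.

```python
import random
from typing import Dict, List, Tuple


def gene_fitness(chromosome: List[str], exec_cost: List[Dict[str, float]]) -> List[float]:
    """Fitness of gene i = cost of gene i / cost of the chromosome (assumed costs)."""
    gene_costs = [exec_cost[i][site] for i, site in enumerate(chromosome)]
    total = sum(gene_costs)
    return [g / total for g in gene_costs]


def mutate(chromosome: List[str], sites: List[str], exec_cost: List[Dict[str, float]],
           rng: random.Random) -> Tuple[List[str], int]:
    """Pick a gene by roulette wheel (costly genes more likely) and move it to another site."""
    weights = gene_fitness(chromosome, exec_cost)
    i = rng.choices(range(len(chromosome)), weights=weights, k=1)[0]
    child = list(chromosome)  # leave the parent unchanged
    child[i] = rng.choice([s for s in sites if s != chromosome[i]])
    return child, i
```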

The selection, crossover and mutation stages are repeated until (1) the maximal number of iterations is reached or (2) no significant improvement is observed from one generation to the next.
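The complete loop, with both stopping criteria, can be sketched as follows. This is a minimal illustration rather than any specific published variant: the `cost` function is a hypothetical stand-in for equation [6.2] (execution costs plus the weights of WRG edges split across sites, all assumed positive), and the parameters `pop_size`, `max_iter`, `patience`, `eps` and `mutation_rate` are illustrative choices.

```python
import random
from typing import Dict, List, Sequence, Tuple

Chromosome = List[str]


def cost(ch: Sequence[str], exec_cost: List[Dict[str, float]],
         edge_w: Dict[Tuple[int, int], float]) -> float:
    # Hypothetical stand-in for equation [6.2]: execution cost of every gene
    # plus the weight of each WRG edge whose endpoints run on different sites.
    total = sum(exec_cost[i][s] for i, s in enumerate(ch))
    return total + sum(w for (a, b), w in edge_w.items() if ch[a] != ch[b])


def genetic_offloading(exec_cost, edge_w, sites, pop_size=20, max_iter=200,
                       patience=20, eps=1e-9, mutation_rate=0.3, seed=0):
    rng = random.Random(seed)
    n = len(exec_cost)

    def fit(ch: Sequence[str]) -> float:
        return cost(ch, exec_cost, edge_w)

    # Initial population: uniform chromosomes first, then random ones.
    pop: List[Chromosome] = [[s] * n for s in sites]
    while len(pop) < pop_size:
        pop.append([rng.choice(sites) for _ in range(n)])
    best = min(pop, key=fit)
    best_cost, stale = fit(best), 0

    for _ in range(max_iter):                      # criterion (1): iteration cap
        weights = [1.0 / fit(ch) for ch in pop]    # selection ~ 1 / cost
        new_pop: List[Chromosome] = []
        while len(new_pop) < pop_size:
            a, b = rng.choices(pop, weights=weights, k=2)
            cut = rng.randint(1, n - 1) if n > 1 else n
            # Single-point cut-and-exchange crossover produces two new lists.
            for child in (a[:cut] + b[cut:], b[:cut] + a[cut:]):
                if len(sites) > 1 and rng.random() < mutation_rate:
                    # Roulette wheel over gene costs; dividing every weight by the
                    # chromosome cost would not change the draw.
                    gene_costs = [exec_cost[i][s] for i, s in enumerate(child)]
                    i = rng.choices(range(n), weights=gene_costs, k=1)[0]
                    child[i] = rng.choice([s for s in sites if s != child[i]])
                new_pop.append(child)
        pop = new_pop[:pop_size]
        gen_best = min(pop, key=fit)
        if fit(gen_best) < best_cost - eps:
            best, best_cost, stale = gen_best, fit(gen_best), 0
        else:
            stale += 1
            if stale >= patience:                  # criterion (2): no improvement
                break
    return best, best_cost
```

Because the uniform chromosomes are part of the initial population and only strict improvements replace the best solution, the returned cost is never worse than the cheapest single-site placement.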

6.5.3. Ethology-based metaheuristics algorithms

Some works base the offloading decision on ethology-based metaheuristics, such as ant colony optimization (ACO), particle swarm optimization (PSO) and artificial bee colony (ABC) optimization. The rest of this section focuses on ACO-based offloading decisions as a typical example of ethology-based metaheuristics.

ACO is a metaheuristic algorithm inspired by the collective behavior of ants when they try to find the best path between their nest and a food source. In nature, an ant starts by randomly looking for food. Once it finds food, the ant returns to the nest, leaving a trail of a chemical substance known as pheromone, which enables other ants to find the food. Over time, the pheromone trail evaporates: the longer it takes an ant to travel along a path, the more its pheromone evaporates. In comparison, a short path is traveled back and forth by ants more often, so the pheromone density is higher on shorter paths than on longer ones.
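The reinforcement of short paths can be illustrated with the classic Ant System update rule, in which pheromone evaporates by a factor (1 − ρ) and each ant deposits an amount Q / L inversely proportional to its path length L. This rule is a standard textbook model swapped in for illustration, not a formula from the works cited in this chapter; the parameter values are assumptions, and ants are split between paths deterministically in proportion to pheromone.

```python
from typing import List


def pheromone_step(tau: List[float], lengths: List[float], n_ants: int = 10,
                   rho: float = 0.1, q: float = 1.0) -> List[float]:
    """One Ant System style update over alternative paths (illustrative)."""
    total = sum(tau)
    new_tau = []
    for t, length in zip(tau, lengths):
        ants = n_ants * t / total          # ants split in proportion to pheromone
        deposit = ants * q / length        # shorter paths receive more pheromone
        new_tau.append((1.0 - rho) * t + deposit)  # evaporation + deposit
    return new_tau


tau = [1.0, 1.0]                           # [short path, long path]
for _ in range(50):
    tau = pheromone_step(tau, [1.0, 2.0])
```

Starting from equal trails, the first update gives 5.9 on the short path and 3.4 on the long one, and the short path's share of pheromone keeps growing at every step.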

Relying on this principle, some works represent each potential solution to the offloading problem as the path traveled by an ant. Initially, a number of ants are randomly generated. At each iteration of the algorithm, each ant updates its path according to the relevance of the execution site chosen for each component in its previous path. For our problem, this relevance is defined by a fitness function given by equation [6.2]. These stages are repeated until a maximal number of iterations is reached.
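An ACO-based offloading decision can be sketched with the common pheromone-matrix formulation: each ant builds a path by choosing a site for every component with probability proportional to τ^α · η^β, where τ is the pheromone on the (component, site) pair and η = 1 / execution cost is a heuristic desirability. This standard formulation is swapped in for illustration and may differ from the path-update scheme of the cited works; the `cost` function is a hypothetical stand-in for equation [6.2], and all parameter values are assumptions (positive costs assumed).

```python
import random
from typing import Dict, List, Optional, Sequence, Tuple


def cost(path: Sequence[str], exec_cost: List[Dict[str, float]],
         edge_w: Dict[Tuple[int, int], float]) -> float:
    # Hypothetical stand-in for equation [6.2].
    total = sum(exec_cost[i][s] for i, s in enumerate(path))
    return total + sum(w for (a, b), w in edge_w.items() if path[a] != path[b])


def aco_offloading(exec_cost, edge_w, sites, n_ants=10, max_iter=100,
                   rho=0.1, q=1.0, alpha=1.0, beta=1.0, seed=0):
    rng = random.Random(seed)
    n = len(exec_cost)
    tau = [{s: 1.0 for s in sites} for _ in range(n)]  # pheromone per (component, site)
    best: Optional[List[str]] = None
    best_cost = float("inf")
    for _ in range(max_iter):                           # fixed iteration budget
        paths = []
        for _ in range(n_ants):
            path = []
            for i in range(n):
                # Site choice biased by pheromone and by a cheap execution cost.
                w = [tau[i][s] ** alpha * (1.0 / exec_cost[i][s]) ** beta for s in sites]
                path.append(rng.choices(sites, weights=w, k=1)[0])
            paths.append((path, cost(path, exec_cost, edge_w)))
        for i in range(n):                              # evaporation
            for s in sites:
                tau[i][s] *= 1.0 - rho
        for path, c in paths:                           # deposit: cheaper paths leave more
            for i, s in enumerate(path):
                tau[i][s] += q / c
            if c < best_cost:
                best, best_cost = path, c
    return best, best_cost
```

The evaporation factor plays the role of the fading trail, while the deposit Q / cost reinforces the execution sites that appear in cheap solutions, in the same way that short paths accumulate pheromone in nature.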

6.6. Conclusion

This chapter presents the MCC paradigm, which extends the capacities of mobile devices by taking advantage of abundant cloud resources. The notion of computation offloading, namely the outsourcing of complex computations to the cloud, is defined in this context. Two offloading types are introduced, fine-grain and coarse-grain offloading, which correspond to offloading part of the application and the full application, respectively. Given the variable conditions of the radio channel and the cloud sites, the need for an offloading decision is highlighted. In this context, the offloading decision mechanism should determine which cloud and access network should be preferred for computation offloading. This multi-site, multi-access environment leads to an NP-hard problem, for which no polynomial-time exact algorithm is known. To solve this problem, the literature proposes AI-based solutions, including heuristic and metaheuristic algorithms, and various classes of these algorithms are presented to clarify the approaches taken.

Although the general offloading problem described in this chapter has been thoroughly studied in the literature, problems related to dynamic and mobile contexts, multiple clouds and multiple users have not yet been studied in depth. In particular, the dynamic and mobile context requires very lightweight decision algorithms that can be executed reactively following context changes. Certain works along this axis rely on user mobility prediction and also deal with shifted offloading. Moreover, pricing radio and cloud resources is a very interesting domain, for which solutions based on game theory and auction theory should be proposed. A further research direction involves proposing algorithms that allocate edge cloud and access network resources according to the needs of all users. Joint allocation of radio and cloud resources enables significant optimization of the quality of service, the user admission rate and resource utilization.

6.7. References

Abolfazli, S., Sanaei, Z., Ahmed, E., Gani, A., and Buyya, R. (2014). Cloud-based augmentation for mobile devices: Motivation, taxonomies, and open challenges. IEEE Communications Surveys & Tutorials, 16(1), 337–368.

Cai, Y., Yu, F.R., and Bu, S. (2016). Dynamic operations of cloud radio access networks (C-RAN) for mobile cloud computing systems. IEEE Transactions on Vehicular Technology, 65(3), 1536–1548.

Chen, M., Hao, Y., Li, Y., Lai, C.F., and Wu, D. (2015). On the computation offloading at ad hoc cloudlet: Architecture and service modes. IEEE Communications Magazine, 53(6), 18–24.

Goudarzi, M., Movahedi, Z., and Pujolle, G. (2016). A priority-based fast optimal computation offloading planner for mobile cloud computing. International Journal of Information & Communication Technology Research, 8(1), 43–49.

Gupta, P. and Gupta, S. (2012). Mobile cloud computing: The future of cloud. International Journal of Advanced Research in Electrical, Electronics and Instrumentation Engineering, 1(3), 134–145.

Hong, S. and Kim, H. (2019). QoE-aware computation offloading to capture energy-latency-pricing tradeoff in mobile clouds. IEEE Transactions on Mobile Computing, 18(9), 2174–2189.

Jiang, C., Cheng, X., Gao, H., Zhou, X., and Wan, J. (2019). Toward computation offloading in edge computing: A survey. IEEE Access, 7(1), 131543–131558.

Khan, A.U.R., Othman, M., Madani, S.A., and Khan, S.U. (2014). A survey of mobile cloud computing application models. IEEE Communications Surveys & Tutorials, 16(1), 393–413.

Khan, A.U.R., Othman, M., Xia, F., and Khan, A.N. (2015). Context-aware mobile cloud computing and its challenges. IEEE Cloud Computing, 2(3), 42–49.

Li, B., Liu, Z., Pei, Y., and Wu, H. (2014). Mobility prediction based opportunistic computational offloading for mobile device cloud. IEEE 17th International Conference on Computational Science and Engineering (CSE). IEEE, Chengdu, 786–792.

Mach, P. and Becvar, Z. (2017). Mobile edge computing: A survey on architecture and computation offloading. IEEE Communications Surveys & Tutorials, 19(3), 1628–1656.

Magurawalage, C.S., Yang, K., and Wang, K. (2015). Aqua computing: Coupling computing and communications [Online]. Available at: https://arxiv.org/abs/1510.07250 [Accessed January 2019].

Movahedi, Z. (2018). Green, trust and computation offloading perspectives to optimize network management and mobile services. Doctoral Thesis, Sorbonne Universities, Paris.

Wang, X., Wang, J., Wang, X., and Chen, X. (2015). Energy and delay tradeoff for application offloading in mobile cloud computing. IEEE Systems Journal, 11(2), 858–867.

Wu, H. (2018). Multi-objective decision-making for mobile cloud offloading: A survey. IEEE Access, 6(1), 3962–3976.

Wu, H., Knottenbelt, W., Wolter, K., and Sun, Y. (2016). An optimal offloading partitioning algorithm in mobile cloud computing. Quantitative Evaluation of Systems, Agha, G. and Van Houdt, B. (eds). Springer, Berlin, 311–328.

Zhang, Y., Niyato, D., and Wang, P. (2015). Offloading in mobile cloudlet systems with intermittent connectivity. IEEE Transactions on Mobile Computing, 14(12), 2516–2529.

Zhang, K., Mao, Y., Leng, S., Zhao, Q., Li, L., Peng, X., Pan, L., Maharjan, S., and Zhang, Y. (2016). Energy-efficient offloading for mobile edge computing in 5G heterogeneous networks. IEEE Access, 4(1), 5896–5907.

- 1 Device-to-Device.