Now let's see how gradient agreement works step by step:

- Let's say we have a model

parameterized by a parameter

parameterized by a parameter  and a distribution over tasks

and a distribution over tasks  . First, we randomly initialize the model parameter

. First, we randomly initialize the model parameter  .

. - We sample some batch of tasks

from a distribution of tasks—that is,

from a distribution of tasks—that is,  . Let's say we sampled two tasks, then

. Let's say we sampled two tasks, then  .

. - Inner loop: For each task (

) in tasks (

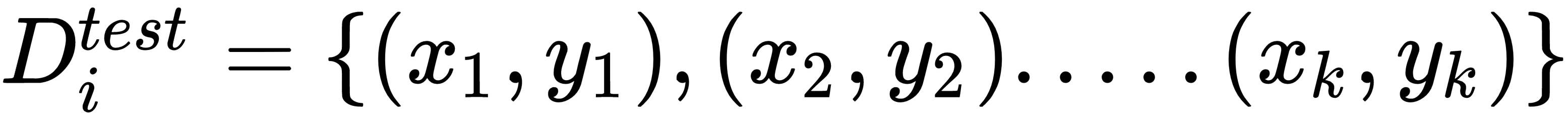

) in tasks ( ), we sample k data points and prepare our train and test datasets:

), we sample k data points and prepare our train and test datasets:

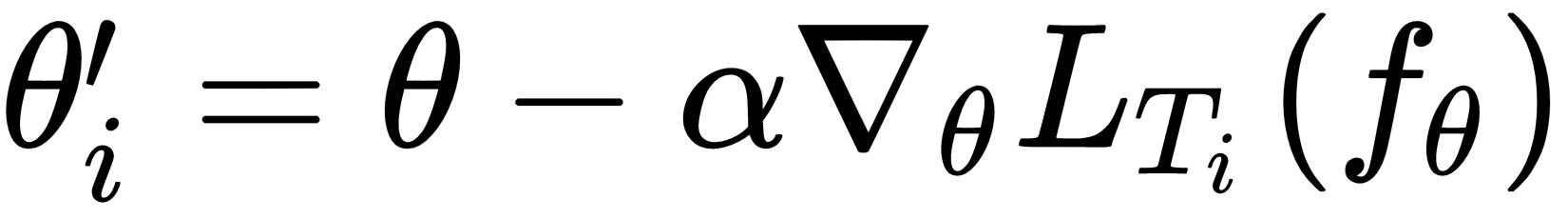

We calculate loss and minimize the loss on the  using gradient descent and get the optimal parameters

using gradient descent and get the optimal parameters  —that is,

—that is,  .

.

Along with this, we also store the gradient update vector as:  .

.

So, for each of the tasks, we sample k data points and minimize the loss on the train set  and get the optimal parameters

and get the optimal parameters  . As we sampled two tasks, we'll have two optimal parameters,

. As we sampled two tasks, we'll have two optimal parameters,  , and we'll have a gradient update vector for each of these two tasks as

, and we'll have a gradient update vector for each of these two tasks as  .

.

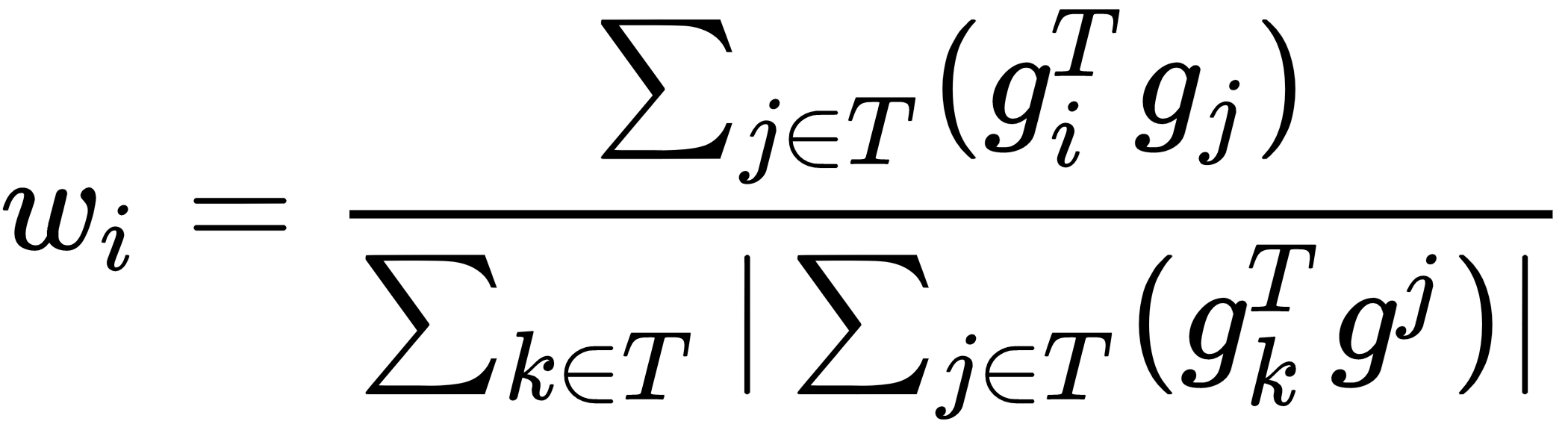

- Outer loop: Now, before performing meta optimization, we'll calculate the weights as follows:

After calculating the weights, we now perform meta optimization by associating the weights with the gradients. We minimize the loss in the  by calculating gradients with respect to the parameters obtained in the previous step and multiply the gradients with the weights.

by calculating gradients with respect to the parameters obtained in the previous step and multiply the gradients with the weights.

If our meta learning algorithm is MAML, then the update equation is as follows:

If our meta learning algorithm is Reptile, then the update equation is as follows:

- We repeat steps 2 to 5 for n number of iterations.