Chapter 2: Business Acceleration Using a Multi-Cloud Strategy

This chapter discusses how enterprises can accelerate business results by implementing a multi-cloud strategy. Every cloud platform and technology has its own benefits, and by analyzing business strategies and defining which cloud technology fits best, enterprises can really take advantage of multi-cloud. A strategy should not be "cloud first" but "cloud fit." But before we get into the technical strategy and the actual cloud planning, all the way up to even developing and implementing Twelve-Factor Apps, we have to explore the business or enterprise strategy and the financial aspects that drive this strategy. In this chapter, we're going to cover the following main topics:

- Analyzing the enterprise strategy for the cloud

- Mapping the enterprise or business strategy to a technology roadmap

- Keeping track of technology changes in multi-cloud environments

- Exploring the different cloud strategies and how they help accelerate the business

Let's get started!

Analyzing the enterprise strategy for the cloud

Before we get into a cloud strategy, we need to understand what an enterprise strategy is and how businesses define such a strategy. As we learned in the previous chapter, every business should have the goal of generating revenue and earning money. That's not really a strategy. The strategy is defined by how they generate money with the products the business makes or the services that they deliver.

A good strategy comprises a well-thought-out balance between timing, access to and use of data, and something that has to do with courage – daring to make decisions at a certain point in time. That decision has to be based on – you guessed it – proper timing, planning, and the right interpretation of the data that you have access to. If a business does this well, it will be able to accelerate growth and, indeed, increase revenue. The overall strategy should be translated into use cases.

Important Note

The success of a business is obviously not only measured in terms of revenue. There are a lot of parameters that define success as a whole and for that matter, these are not limited to just financial indicators. Nowadays, companies rightfully also have social indicators to report on. Think of sustainability and social return. However, a company that does not earn money, one way or the other, will likely not last long.

Let's start with time and timing. At the time of writing (March 2020), the world is holding its breath because of the outbreak of COVID-19, better known as the coronavirus. Governments are trying to contain the virus by closing schools and universities, forbidding businesses to organize meetings and big events, and even locking down whole regions and entire countries. A lot of people are being confronted by travel restrictions. The outbreak is severely impacting the global economy and a number of companies are struggling to survive because of a steep decline in orders. Needless to say, Spring 2020 wasn't a good time to launch new products, unless the product was related to containing the virus or curing people who were infected.

That's exactly what happened. Companies that produced, for example, protective face masks saw their business skyrocket, but companies relying on production and services in locked-down regions saw their markets plummeting at breathtaking speed. In other words, time is one of the most important factors to consider when planning for business acceleration. Having said that, it's also one of the most difficult things to grasp. No one plans for a virus outbreak. However, it was a reason for businesses not to push for growth at that time. The strategy for a lot of companies probably changed from pushing for growth to staying in business by, indeed, trying to drive the costs down. Here, we have our first parameter in defining a cloud strategy: cost control and, even more so, cost agility.

The second one is access to and use of data. It looks like use of data in business is something completely new, but of course nothing could be further from the truth. Every business in every era can only exist by the use of data. We might not always consider something to be data since it isn't always easy to identify, especially when it's not stored in a central place. This is an odd example, but let's look at Mozart. We're talking mid to late 18th century, ages before the concept of computing was invented. In his era, Mozart was already acknowledged as a great musician since he studied all the major streams of music in his era, from piano pieces to Italian opera. He combined this with a highly creative gift of translating this into original music. All this existing music was data; the gift to translate and transform the data to new music could be perceived as a form of analytics.

Data is the key. It's not only about raw data that a business (can) have access to, but also in analyzing that data. Where are my clients? What are their demands? Under what circumstances are these demands valid and what makes these demands change? How can I respond to these changes? How much time would I have to fulfill the initial demands and apply these changes if required? This all comes from data. Nowadays, we have a lot of data sources. The big trick is how we can make these sources available to a business – in a secured way, with respect to confidentiality, privacy, and other compliance regulations.

Finally, business decisions do take courage. With a given time and all the data in the world, you need to be bold enough to make a decision at a certain point. Choose a direction and go for it. To quote the famous Canadian ice hockey player Wayne Gretzky:

The million-dollar question is: how can multi-cloud help you in making decisions and fulfilling the enterprise strategy? At the enterprise level, there are four domains that define the strategy at the highest level (source: University of Virginia, Strategic Thinking and Action, April 2018):

- Industry position

- Enterprise core competence

- Long-term planning

- Financial structure

In the following sections, we will address these four domains.

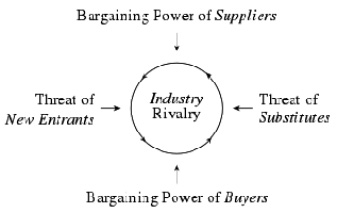

Industry position

The first thing a company should do is analyze what its position is in the industry in which it is operating. Or, if it's entering the market, what position it could initially gain and hold. Analysts often use Michael Porter's five forces model, which comprises competitive rivalry, threat of new entrants, threat of substitutes, bargaining power of suppliers, and the bargaining power of customers. To execute this model, obviously, a lot of data needs to be investigated. This is not a one-time thing. It's a constant analysis of the industry's position. Outcomes will lead to change in the enterprise's strategy. Here's the big thing: in this era of digitalization, these changes occur at an ever-increasing speed and that requires the business to be agile. This model can be depicted with the following diagram:

Figure 2.1 – Porter's five forces model

Let's, for example, look at one of the model's parameters: the threat of substitutes. Today, customers can easily switch products or services. What's cool today is completely out tomorrow. Plus, as an outcome of the globalization of markets and the fact that we are literally globally connected through the internet, customers can easily find cheaper products or services. They're no longer bound to a specific location to purchase products or services. They can get them from all around the world. Therefore, the threat of a substitute is more than substantial and requires a constant drive to adapt the strategy to mitigate this threat. It's all about the time to market. Businesses need platforms that enable that level of agility.

Enterprise core competence

With the behavior of markets and businesses themselves constantly changing, it's not easy to define the core competence. Businesses have become more and more T-shaped over the last few decades. We've already looked at banks. It's not that long ago that the core activity of a bank was to keep the money of consumers and companies safe on deposit, issue loans, invest, and enable payments. For that, banks needed financial experts. That has all changed. Their core business still consists of these activities, but now, these services are delivered in a completely digitized way through internet sites and, even more so, mobile apps. Banks need software developers and IT engineers to execute their core activities. The bank has become T-shaped.

Long-term planning

When a business is clear on its position and its core competencies, it needs to set out a plan. Where does the company want to be 5 years from now? This is the most difficult part. Based on data and data analytics, it has to determine how the market will develop and how the company can anticipate change. Again, data is the absolute key, but so is the swiftness with which companies can change course, since market demands do change extremely rapidly.

Financial structure

Finally, any business needs a clear financial structure. How is the company financed and how are costs related to different business domains, company divisions, and its assets? As we will find out as part of financial operations, the cloud can be of great help in creating fine-grained insight into financial flows throughout a business. With correct and consistent naming and tagging, you can precisely pinpoint how much cost a business generates in terms of IT consumption. The best part of cloud models is that the foundation of cloud computing is pay for what you use. Cloud systems can breathe at the same frequency as the business itself. When business increases, IT consumption can increase. When business drops, cloud systems can be scaled down and with that, they generate lower costs, whereas traditional IT is way more static.
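To make the idea of naming and tagging more tangible, here is a minimal sketch in Python of how tagged consumption could be rolled up per business domain. The record layout, the `cost_center` tag key, and all amounts are hypothetical; real billing exports from cloud providers use their own schemas.

```python
from collections import defaultdict

# Hypothetical billing records; field names and amounts are illustrative only.
billing_records = [
    {"resource": "vm-web-01", "cost": 120.0, "tags": {"cost_center": "retail"}},
    {"resource": "vm-web-02", "cost": 80.0, "tags": {"cost_center": "retail"}},
    {"resource": "db-fin-01", "cost": 200.0, "tags": {"cost_center": "finance"}},
    {"resource": "vm-test-07", "cost": 45.0, "tags": {}},  # untagged resource
]


def cost_per_tag(records, tag_key):
    """Sum cost per value of tag_key; untagged resources land in 'UNTAGGED'."""
    totals = defaultdict(float)
    for record in records:
        owner = record["tags"].get(tag_key, "UNTAGGED")
        totals[owner] += record["cost"]
    return dict(totals)


totals = cost_per_tag(billing_records, "cost_center")
for owner, amount in sorted(totals.items()):
    print(f"{owner}: {amount:.2f}")
```

The `UNTAGGED` bucket is a deliberate design choice in this sketch: costs that cannot be allocated stay visible instead of silently disappearing, which is usually the first thing to fix when tagging is inconsistent.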

So, nothing is holding us back from getting our business into the cloud. It does take quite some preparation in terms of (enterprise) architecture. In the following section, we will explore this further.

Fitting cloud technology to business requirements

We are moving business into the cloud because of the required agility and, of course, to control our costs.

The next two questions will be: with what and how? Before we explore the how and basically the roadmap, we will discuss the first question: with what? A cloud migration plan starts with business planning, which covers business processes, operations, finance, and lastly the technical requirements. We will have to evaluate the business demands and the IT fulfillment of these requirements.

In outsourcing contracts, the company that takes over the services performs so-called due diligence. As per its definition, due diligence is "a comprehensive appraisal of a business undertaken by a prospective buyer, especially to establish its assets and liabilities and evaluate its commercial potential" (source: https://www.lexico.com/en/definition/due_diligence). This may sound like way too heavy a process to get a cloud migration assessment started, yet it is strongly recommended as a business planning methodology. Just replace the words prospective buyer with the words cloud provider and you'll immediately get the idea behind this.

Business planning

Business planning involves the following items:

- Discovery of the entire IT landscape, including applications, servers, network connectivity, storage, APIs, and services from third-party providers.

- Mapping IT landscape components to business-critical or business-important services.

- Identification of commodity and shared services and components in the IT landscape.

- Evaluation of IT support processes with regard to commodity services and critical and important business services. This includes the levels of automation in the delivery of these services.

One really important step in the discovery phase that's crucial to creating a good mapping to cloud services is evaluating the service levels and performance indicators of applications and IT systems. Service levels and key performance indicators (KPIs) will be applicable to applications and underlying IT infrastructure based on specific business requirements. Think of indicators like metrics of availability, durability, and levels of backup including RTO/RPO specifications, requirements for business continuity (BC), and disaster recovery (DR). Are systems monitored 24/7 and what are the support windows? These all need to be considered. As we will find out, service levels and derived service-level agreements might be completely different in cloud deployments, especially when looking at PaaS and SaaS, where the responsibility of platforms (PaaS) and even application (SaaS) management is largely transferred to the solution provider.
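As a small worked example of what such indicators mean in practice, the following Python sketch converts an availability target into the downtime it permits per year and checks a backup schedule against an RPO. The function names and the sample targets are assumptions made for illustration; they are not taken from any provider's SLA.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours, ignoring leap years


def allowed_downtime_hours(availability_pct, period_hours=HOURS_PER_YEAR):
    """Return the maximum downtime in hours for an availability percentage."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100")
    return period_hours * (1 - availability_pct / 100)


def meets_rpo(backup_interval_hours, rpo_hours):
    """With periodic backups, worst-case data loss is about one backup interval."""
    return backup_interval_hours <= rpo_hours


for target in (99.0, 99.9, 99.99):
    print(f"{target}% -> {allowed_downtime_hours(target):.2f} hours per year")

print("Daily backup meets a 4-hour RPO:", meets_rpo(24, 4))
```

Numbers like these help when comparing the service levels of an existing system with what a PaaS or SaaS provider commits to.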

If you ask a CFO what the core system is, they will probably answer that financial reporting is absolutely critical. It's the role of the enterprise architect to challenge that. If the company is a meat factory, then the financial reporting system is not the most critical system. The company doesn't come to a halt when financial reporting can't be executed. The company does, however, come to a halt when the meat processing systems stop; that immediately impacts the business. What would that mean in planning the migration to cloud systems? Can these processing applications be hosted from cloud systems? And if so, how? Or maybe, when?

Financial planning

We're not there yet. After the business planning phase, we also need to perform financial analyses. After all, one of the main rationales to move to cloud platforms is cost control. Be aware: moving to the cloud is not always a matter of lowering costs. It's about making your costs responsive to actual business activity. Setting up a business case to decide whether cloud solutions are an option from a financial perspective is, therefore, not an easy task. Public cloud platforms offer Total Cost of Ownership (TCO) calculators.

TCO is indeed the total cost of owning a platform and it should include all direct and indirect costs. What do we mean by that? When calculating the TCO, we have to include the costs that are directly related to the systems that we run: costs for storage, network components, compute, licenses for software, and so on. But we also need to consider the cost of the labor involved in managing these systems: engineers, service managers, or even the accountant who evaluates the costs related to the systems. These are all costs; however, these indirect costs are often left out of scope. Especially in the cloud, they should be taken into account. Think of this: what costs can be avoided by, for example, automating service management and financial reporting?

So, there's a lot to cover when evaluating costs and the financial planning driving architecture. Think of the following:

- All direct costs related to IT infrastructure and applications. This also includes hosting and housing costs; for example, (rental of) floor space and power.

- Costs associated with all staff working on IT infrastructure and applications. This includes contractors and staff from third-party vendors working on these systems.

- All licenses and costs associated with vendor or third-party support for systems.

- Ideally, these costs can be allocated to a specific business process, division, or even (groups of) users so that it's evident where IT operations costs come from.
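The cost categories listed above can be sketched as a simple TCO calculation in Python. This is an illustration under stated assumptions, not a replacement for a provider's TCO calculator: the `CostItem` type, the split into direct and indirect items, and all figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CostItem:
    name: str
    annual_cost: float
    direct: bool  # True for infrastructure and licenses, False for labor and overhead


def total_cost_of_ownership(items, years):
    """Return (direct, indirect, total) cost over the given number of years."""
    direct = sum(i.annual_cost for i in items if i.direct) * years
    indirect = sum(i.annual_cost for i in items if not i.direct) * years
    return direct, indirect, direct + indirect


# Hypothetical figures, for illustration only.
items = [
    CostItem("compute and storage", 50_000, direct=True),
    CostItem("software licenses", 20_000, direct=True),
    CostItem("floor space and power", 10_000, direct=True),
    CostItem("engineers and service managers", 90_000, direct=False),
    CostItem("financial administration", 15_000, direct=False),
]

direct, indirect, total = total_cost_of_ownership(items, years=3)
print(f"direct={direct}, indirect={indirect}, total={total}")
```

In this made-up example, the indirect costs outweigh the direct ones, which is exactly why leaving them out of the business case gives a distorted picture.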

Why is this all important in drafting the architecture? A key financial driver to start a cloud journey is the shift from CapEx to OpEx. In essence, CapEx – capital expenditure – concerns upfront investments; for example, in buying physical machines or software licenses. These are often one-off investments, of which the value is depreciated over an economic life cycle. OpEx – operational expenditure – is all about costs related to day-to-day operations and for that reason is much more granular. Usually, OpEx is divided into smaller budgets that teams need to have to perform their daily tasks. In most cloud deployments, the client really only pays for what they're using. If resources sit idle, these can be shut down and costs will stop. A single developer could – if mandated for this – decide to spin up an extra resource if required.

That's true for a pay-as-you-go (PAYG) deployment, but we will discover that a lot of enterprises have environments for which it's not feasible to run in full PAYG. You simply don't shut down instances of large, critical ERP systems. So, for these systems, businesses will probably use more static resources, such as reserved instances that are fixed for a longer period. For cloud providers, this means a steady source of income for a longer time, and therefore they offer reserved instances at lower rates or with discounts. The downside is that companies can be obliged to pay for these reserved resources upfront. Indeed, that's CapEx. Cutting a long story short: the cloud is not OpEx by default.
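The trade-off between PAYG and reserved capacity can be expressed as a break-even calculation. The following Python sketch assumes a reservation that is billed for every hour of the month regardless of usage; the hourly rates are invented for illustration and do not reflect any provider's actual pricing.

```python
HOURS_PER_MONTH = 730  # common approximation: 8760 hours / 12 months


def monthly_payg_cost(hourly_rate, hours_used):
    """Pay-as-you-go: pay only for the hours the resource actually runs."""
    return hourly_rate * hours_used


def monthly_reserved_cost(reserved_hourly_rate):
    """Reserved: pay for every hour of the month, used or not."""
    return reserved_hourly_rate * HOURS_PER_MONTH


def break_even_hours(payg_rate, reserved_rate):
    """Hours per month above which the reservation is cheaper than PAYG."""
    return monthly_reserved_cost(reserved_rate) / payg_rate


payg_rate = 0.10      # hypothetical price per hour
reserved_rate = 0.06  # hypothetical discounted price per hour

threshold = break_even_hours(payg_rate, reserved_rate)
print(f"Reservation pays off above {threshold:.0f} hours per month")
```

A system that runs around the clock, such as the ERP example, sits well above the threshold, while a test environment that runs a few hours per day stays below it.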

In Chapter 11, Defining Principles for Resource Provisioning and Consumption, on FinOps, we will explore this in much more detail, including the pitfalls.

Technical planning

Finally, we've gotten to technical planning, which starts with a foundation architecture. You can plan to build an extra room on top of your house, but you'll need to build the house first – assuming that it doesn't exist. The house needs a firm foundation that can hold the house in the first place, but can also carry the extra room in the future. The room needs to be integrated with the house – for one, since it would really be completely useless to have it standalone from the rest of the house. It all takes good planning. Good planning requires information – data, if you like.

In the world of multi-cloud, you don't have to figure it out all by yourself. The major cloud providers all have reference architectures, best practices, and use cases that will help you plan and build the foundation, making sure that you can fit in new components and solutions almost all the time.

That's exactly what we are going to do in this book: plan, design, and manage a future-proof multi-cloud environment. In the next section, we will take our first look at the foundation architecture.

IT4IT

The problem that many organizations face is controlling the architecture from the business perspective. IT4IT is a framework that helps organizations with that. It's complementary to TOGAF and, for this reason, also issued as a standard by The Open Group. IT4IT is also complementary to ITIL: where ITIL provides best practices for IT service management, IT4IT provides the foundation to enable IT service management processes with ITIL. It is meant to align and manage the digital enterprise. It deals with the challenges that these enterprises have, such as the ever-accelerating push for embracing and adopting new technology. The base concept of IT4IT consists of four value streams:

- Strategy to portfolio: The portfolio contains technology standards, plans, and policies. It deals with the IT demands from the business and mapping these demands to IT delivery. An important aspect of the portfolio is project management, to align business and IT.

- Requirements to deploy: This stream focuses on creating and implementing new services or adapting existing services, in order to reach a higher standard of quality or to obtain a lower cost level. According to the documentation of The Open Group, this is complementary to methods such as Agile Scrum and DevOps.

- Request to fulfill: To put it very simply, this value stream is all about making life easy for the end customers of the deployed services. As companies and their IT adopt structures such as IaaS, PaaS, and SaaS, this stream enables service brokering by offering and managing a catalog and with that, speeds up fulfilling new requests being made by end users.

- Detect to correct: Services will change. This stream enables monitoring, management, remediation, and other operational aspects that drive these changes.

The following diagram shows the four streams of IT4IT:

Figure 2.2 – IT4IT value streams (The Open Group)

In this section, we learned how to map business requirements and plans to an IT strategy. Frameworks such as IT4IT are very valuable in successfully executing this mapping. In the next section, we will focus on how cloud technology fits into the business strategy.

Keeping track of cloud developments – focusing on the business strategy

Any cloud architect or engineer will tell you that it's hard to keep up with developments. Just for reference, AWS and Azure release over 2,000 features on their respective cloud platforms in just 1 year. These can be big releases or just some minor tweaks.

What would be major releases of features? Think about Azure Arc, Bastion, or Lighthouse in Azure, the absolutely stunning quantum computing engine Braket, and the open source operating system for container hosts known as Bottlerocket, in AWS. In GCP, we got Cloud Run in 2019, which combines serverless technology with containerized application development and a dozen open source integrations for data management and analytics. In March 2020, VMware released vSphere 7, including a lot of cool cloud integration features such as VMware Cloud Foundation 4 with Pacific, as well as Tanzu Kubernetes Grid, a catalog powered by Bitnami and Pivotal Labs to deploy and manage containers in multi-cloud environments.

It is a constant stream of innovations: big, medium, and small. There are, however, two overall movements: from software to service and from virtual machine to container. We will discuss this further in the last section of this chapter.

Anyway, try to keep up with all these innovations, releases, and new features. It's hard, if not impossible.

And it gets worse. It's not only the target cloud platforms, but also a lot of tools that we need to execute in order to migrate or develop applications. Just have a look at the Periodic Table of DevOps by XebiaLabs, which is continuously refreshed with new technology and tools.

In the final part of this book, we will have a deeper look at cutting-edge technology with AIOps, a rather new domain in managing cloud platforms but one that's gaining a lot of ground right now. Over the last year, a number of tools have been launched in this domain, from the well-known Splunk, which already had an established name in monitoring, to relative newcomers on the market such as StackState (founded in 2015) and Moogsoft (founded in 2011). AIOps has been included in the Periodic Table:

Figure 2.3 – XebiaLabs Periodic Table of DevOps Tools

Tip

Check out the interactive version of XebiaLabs' Periodic Table of DevOps Tools at https://xebialabs.com/periodic-table-of-devops-tools/. For a full overview of the cloud-native landscape, refer to the following web page, which contains the Cloud Native Trail Map of the Cloud Native Computing Foundation: https://landscape.cncf.io/.

There's no way to keep up with all the developments. A business needs to have focus and that should come from the strategy that we discussed in the previous sections. In other words, don't get carried away with all the new technology that is launched.

There are three important aspects that have to be considered when managing cloud architecture: the foundation architecture, the cost of delay, and the benefit of opportunity.

Foundation architecture

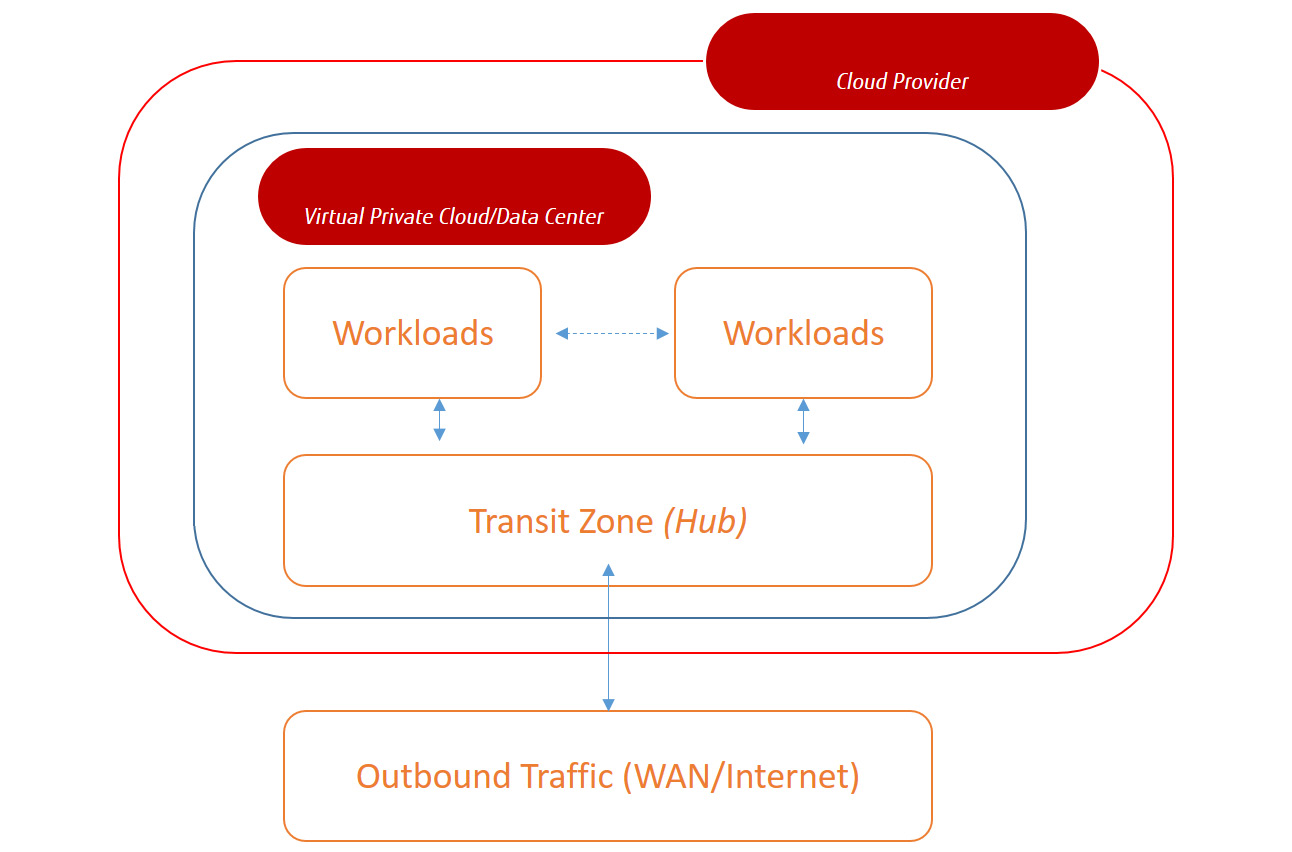

Any architecture starts with a baseline, something that we call a foundation architecture or even a reference architecture. In TOGAF, the foundation architecture comprises two domains: the technical reference model and the standards information base. The technical reference model describes the generic platform services. In a multi-cloud environment, that would be the basic setup of the used platforms.

As an example, in Azure, the common practice is to have a hub-and-spoke model deployed. The hub contains generic services that are applicable to all workloads in the different spokes. Think of connectivity gateways to the outside world (on-premises or the internet), bastion hosts or jump servers, management servers, and centrally managed firewalls. Although the terminology in AWS and GCP differs greatly from Azure, the base concepts are more or less the same. Within the cloud service, the client creates a virtual data center – a subscription or a virtual private cloud (VPC) – that is owned and managed by the client. In that private section of the public cloud, a transit zone is created, as well as the segments that can hold the actual workloads:

Figure 2.4 – High-level diagram of a virtual private cloud/data center

Now, how this virtual data center (VDC) or subscription is set up has to be described in the foundation architecture. That will be the baseline. Think about it: you wouldn't rebuild the physical data center every time a new application was introduced, would you? The same rule applies for a virtual data center that we're building on top of public cloud services.

The Standards Information Base is the second component that makes up our foundation architecture. We follow TOGAF: it provides a database of standards that can be used to define particular services and other components of an organization-specific architecture that is derived from the TOGAF Foundation Architecture. In short, it's a library that contains the standards. For multi-cloud, this can be quite an extensive list. Think about the following:

- Network protocol standards for communication and network security, including level of segmentation and network management.

- Standards for virtual machines; for example, type and versions of used operating systems.

- Standards for storage and storage protocols. Think of types of storage and use cases, as offered by cloud providers.

- Security baselines.

- Compliance baselines; for example, frameworks that are applicable to certain business domains or industry branches.

Tip

As a starting point, you can use the Standards Information Base (SIB) provided by The Open Group. It contains a list of standards that might be considered when drafting the architecture for multi-cloud. The SIB is published on https://www6.opengroup.org/sib.html.
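To show how a library of standards can be put to work, here is a minimal Python sketch that checks a workload definition against a set of approved standards. The structure of the `STANDARDS` dictionary, the category names, and the listed values are hypothetical and not taken from The Open Group's SIB.

```python
# Hypothetical standards library; entries are illustrative, not from any real SIB.
STANDARDS = {
    "operating_systems": {"ubuntu-22.04", "windows-server-2022"},
    "storage_types": {"block", "object"},
}


def check_workload(workload, standards=STANDARDS):
    """Return a list of deviations from the standards for one workload."""
    deviations = []
    if workload["os"] not in standards["operating_systems"]:
        deviations.append(f"os '{workload['os']}' is not an approved standard")
    if workload["storage"] not in standards["storage_types"]:
        deviations.append(f"storage '{workload['storage']}' is not an approved standard")
    return deviations


compliant = {"name": "web-shop", "os": "ubuntu-22.04", "storage": "object"}
legacy = {"name": "old-erp", "os": "windows-server-2008", "storage": "block"}

print(check_workload(compliant))  # no deviations expected
print(check_workload(legacy))     # flags the operating system
```

Keeping the standards in one place and checking every new workload against them is one way to stop the baseline from drifting as more platforms are added.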

Cost of delay

We have our foundation or reference architecture set out, but now, our business gets confronted with new technologies that have to be evaluated: what will they bring to the business? As we mentioned previously, there's no point in adopting every single new piece of tech that is released. The magic words here are business case.

A business case determines whether the consumption of resources supports a specific business need. A simple example is as follows: a business consumes a certain bandwidth on the internet. It can upgrade the bandwidth, but that will take an investment. That is out-of-pocket cost, meaning that the company will have to pay for that extra bandwidth. However, it may help workers to get their job done much faster. If workers can pick up more tasks just by the mere fact that the company invests in a faster internet connection, the business case will, in the end, be positive, despite the investment.

If market demands change and a business does not adapt to this fast enough, it might lose part of the market and, with that, revenue. Adopting new technology or speeding up the development of applications to cater for changing demands will lead to costs. However, missing the right timing and failing to get services or products to the market in time carries a cost too. This is what we call cost of delay.

Cost of delay, as a piece of terminology, was introduced by Donald Reinertsen in his book The Principles of Product Development Flow, published by Celeritas Publishing, 2009:

Although it's mainly used as a financial parameter, it's clear that the cost of delay can be a good driver for evaluating the business case for adopting cloud technology. Consuming cloud resources, which are more or less agile by default, can mitigate the financial risk of cost of delay.
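As a simplified illustration, cost of delay can be treated as the profit lost per unit of time multiplied by the length of the delay, and then weighed against the investment needed to deliver sooner. The following Python sketch uses this linear model as an assumption; the function names and the figures are hypothetical, and real markets rarely behave this linearly.

```python
def cost_of_delay(weekly_profit_lost, weeks_delayed):
    """Linear approximation: lost profit per week times weeks of delay."""
    return weekly_profit_lost * weeks_delayed


def acceleration_pays_off(weekly_profit_lost, weeks_saved, investment):
    """True if the avoided cost of delay exceeds the investment to go faster."""
    return cost_of_delay(weekly_profit_lost, weeks_saved) > investment


# Hypothetical business case: cloud services bring the launch forward by 8 weeks.
avoided = cost_of_delay(weekly_profit_lost=25_000, weeks_delayed=8)
print(f"Avoided cost of delay: {avoided}")
print("Investment justified:", acceleration_pays_off(25_000, 8, investment=120_000))
```

Put this way, the business case is no longer only about what a technology costs, but also about what it costs to wait.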

Benefit of opportunity

If there's something that we could call a cost of delay, there should also be something that we could call a benefit of opportunity. Where cost of delay is the risk of missing momentum because changes have not been adopted in time, a benefit of opportunity is really about accelerating the business by exploring future developments and related technology. It can be very broad. As an example, let's say a retailer is moving into banking by offering banking services using the same app that customers use to order goods. Alternatively, think of a car manufacturer, such as Tesla, moving into the insurance business.

The accessibility and even ease of use of cloud services enable these shifts. In marketing terms, this is often referred to as blurring. In the traditional world, that same retailer would really have much more trouble offering banking services to its customers, but with the launch of SaaS financial apps, from a technological point of view, it's not that hard to integrate this into other apps. Of course, this is not considering things such as the requirement of a banking license from a central financial governing institution and having to adhere to different financial compliance frameworks. The message is simply that with cloud technologies, it has become easier for businesses to explore other domains and enable a fast entrance into these domains, from a purely technological perspective.

The best example? AWS. Don't forget that Amazon was originally an online bookstore. Because of the robustness of their ordering and delivery platform, Amazon figured out that they also could offer storage systems "for rent" to other parties. After all, they had the infrastructure, so why not fully capitalize on that? Hence, S3 storage was launched as one of the first AWS cloud services, and it helped AWS become a leading cloud provider, next to Amazon's core business of retailing. That was truly a benefit of opportunity.

With that, we have discussed how to define a strategy. We know where we're coming from and we know where we're heading to. Now, let's bring everything together and make it more tangible by creating the business roadmap and finally mapping that roadmap to our cloud strategy, thereby evaluating the different deployment models and cloud development stages.

Creating a comprehensive business roadmap

There are stores filled with books on how to create business strategies and roadmaps. This book absolutely doesn't have any pretension to wrap this all into just one paragraph. However, for an enterprise architect, it is important to understand how the business roadmap is evaluated:

- The mission and vision of the business, including the strategic planning of how the business will target the market and deliver its goods or services.

- Objectives, goals, and direction. Again, this includes planning in which the business sets out when and how specific goals are met and what it will take to meet the objectives in terms of resources.

- Strengths, weaknesses, opportunities, and threats (SWOT). The SWOT analysis shows whether the business is doing the right things at the right time or whether a change in terms of the strategy is required.

- Operational excellence. Every business has to review how it is performing on a regular basis. This is done through KPI measurements: is the delivery on time? Are the customers happy (customer satisfaction—CSAT)?

Drivers for a business roadmap can be very diverse, but the most common ones are as follows:

- Revenue

- Gross margin

- Sales volume

- Number of leads

- Time to market

- Customer satisfaction

- Brand recognition

- Return on investment

These are shared goals, meaning that every division or department should adhere to these goals and have their planning aligned with the business objectives. These goals end up in the business roadmap. These can be complex in the case of big enterprises, but also rather straightforward, as shown in the following screenshot:

Figure 2.5 – Template for a business roadmap (by ProductPlan)

IT is the engine that drives everything: development, resource planning, CRM systems, websites for marketing, and customer apps. And these days, the demands get more challenging; the life cycle is getting shorter and the speed is getting faster. Where IT was the bottleneck for ages, it now has all the technology available to facilitate the business in every aspect. IT is no longer considered a cost center, but a business enabler.

Mapping the business roadmap to the cloud-fit strategy

Most businesses start their cloud migrations from traditional IT environments, although a growing number of enterprises are already quite far into cloud-native development too. One does not have to exclude the other: we can plan to migrate our traditional IT to the cloud, while already developing cloud-native applications in the cloud itself. Businesses can have separate cloud tracks, running at different speeds. It makes sense to execute the development of new applications in cloud environments using cloud-native tools. In parallel, the company can plan to migrate its traditional systems to a cloud platform. There are a number of ways to do that. We will be exploring these, but we will also look at the drivers that start these migrations. The key message is that we will likely not be working with one single roadmap. Or rather, it might be one roadmap, but one that comprises several tracks with different levels of complexity and different approaches, running at different speeds.

There is a good reason why we have been discussing the enterprise strategy, business requirements, and even financial planning. The composition of the roadmap with these different tracks is fully dependent on the outcome of our assessments and planning. And that is architecture too, make no mistake about that.

Here, we're quoting technology leader Radhesh Balakrishnan of Red Hat:

He adds the following:

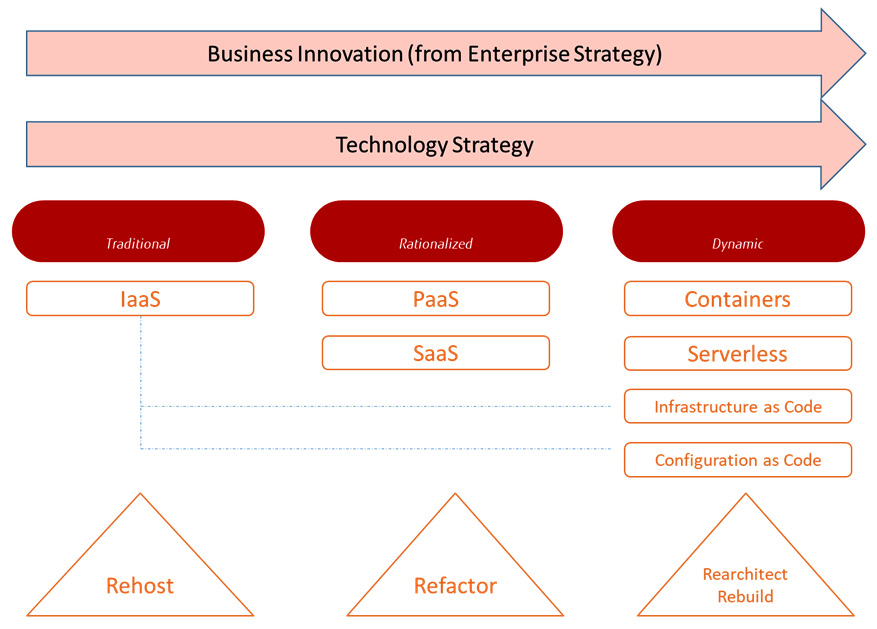

These business requirements will drive the cloud migration approach. We recognize the following technological strategies:

- Rehost: The application, data, and server are migrated as-is to the target cloud platform. This is also referred to as lift and shift. The benefits are often quite low, because this way we're not taking any advantage of cloud-native services.

- Replatform: The application and data are migrated to a different target technology platform, but the application architecture remains as-is. For example, let's say an application with a SQL Server database is moved to PaaS in Azure with Azure SQL Database. The architecture of the application itself is not changed.

- Repurchase: In this scenario, an existing application is replaced by SaaS functionality. Note that we are not really repurchasing the same application. We are replacing it with a different type of solution.

- Refactor: Internal redesign and optimization of the existing application. This can also be a partial refactoring where only parts of an application are modified to operate in an optimized way in the cloud. In the case of full refactoring, the whole application is modified to improve performance and lower costs. Refactoring is, however, a complicated process. Refactoring usually targets PaaS and SaaS.

- Rearchitect: This is one step further than refactoring and is where the architecture of an application, as such, is modified. This strategy comprises an architectural redesign to leverage multi-cloud target environments.

- Rebuild: In this strategy, developers build a new cloud-native application from scratch, leveraging the latest tools and frameworks.

- Retire: This strategy is valid if an application is not strategically required for the business going forward. When an application is retired, data needs to be cleaned up and often archived before the application and underlying infrastructure are decommissioned. Of course, an application can also be retired as a follow-up step when its functionality has been refactored, rearchitected, rebuilt, or repurchased elsewhere.

- Retain: Nothing changes. The existing applications remain on their current platform and are managed as-is.
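To make the list above a bit more tangible, the following is a minimal sketch of how a first-pass strategy suggestion could be expressed in code. Everything in it is an assumption for illustration only: the `Application` attributes, the `suggest_strategy` function, and especially the order of the rules are hypothetical and are not a prescribed method. Rebuild is deliberately left out, since deciding to start from scratch typically requires more context than a few flags can capture.

```python
from dataclasses import dataclass
from enum import Enum


class Strategy(Enum):
    REHOST = "rehost"
    REPLATFORM = "replatform"
    REPURCHASE = "repurchase"
    REFACTOR = "refactor"
    REARCHITECT = "rearchitect"
    REBUILD = "rebuild"
    RETIRE = "retire"
    RETAIN = "retain"


@dataclass
class Application:
    # All attributes are hypothetical assessment outcomes.
    name: str
    strategically_required: bool      # is it still needed by the business?
    must_stay_on_current_platform: bool
    saas_alternative_exists: bool     # can SaaS functionality replace it?
    code_can_be_changed: bool         # do we own and understand the source?
    managed_platform_available: bool  # e.g. a PaaS database equivalent exists
    needs_new_architecture: bool      # must it leverage multi-cloud targets?


def suggest_strategy(app: Application) -> Strategy:
    """Return a first-pass suggestion. The rule order is an assumption."""
    if not app.strategically_required:
        return Strategy.RETIRE
    if app.must_stay_on_current_platform:
        return Strategy.RETAIN
    if app.saas_alternative_exists:
        return Strategy.REPURCHASE
    if not app.code_can_be_changed:
        # Without code changes, only the hosting or the platform can move.
        if app.managed_platform_available:
            return Strategy.REPLATFORM
        return Strategy.REHOST
    if app.needs_new_architecture:
        return Strategy.REARCHITECT
    return Strategy.REFACTOR
```

In practice, the outcome of such a rule set is only a starting point for discussion with the business: the real decision depends on the business case for each application.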

It's not easy to predict the outcomes of the business case as many parameters can play a significant role. Benchmarks conducted by institutes such as Gartner point out that total cost savings will vary between 20 and 40 percent in the case of rehost, replatform, and repurchase. Savings may be higher when an application is completely rearchitected and rebuilt. Retiring an application will lead to the highest savings, but then again, assuming that the functionality provided by that application is still important for the business, the business will need to fulfill that functionality in another way, generating costs for purchase, implementation, adoption, and/or development. This all has to be taken into account when drafting the business case and choosing the right strategy.
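As a worked example of the arithmetic behind such a business case, the sketch below applies the 20 to 40 percent range to a current annual cost and shows how replacement costs eat into the savings of retiring an application. The function names and all cost figures are hypothetical; only the percentage range comes from the text above.

```python
def savings_range(current_annual_cost: float,
                  low_pct: float = 20.0,
                  high_pct: float = 40.0) -> tuple[float, float]:
    """Return the (low, high) expected annual savings for a given cost."""
    if current_annual_cost < 0:
        raise ValueError("cost must not be negative")
    low = current_annual_cost * low_pct / 100
    high = current_annual_cost * high_pct / 100
    return low, high


def net_retirement_saving(current_annual_cost: float,
                          replacement_annual_cost: float) -> float:
    """Saving from retiring an application, minus the cost of providing
    the same functionality in another way."""
    return current_annual_cost - replacement_annual_cost


# Hypothetical application that costs 500,000 per year to run today.
low, high = savings_range(500_000)           # rehost/replatform/repurchase
net = net_retirement_saving(500_000, 350_000)  # retire, then replace
```

With these assumed figures, rehosting, replatforming, or repurchasing would save between 100,000 and 200,000 per year, while retiring the application and replacing its functionality for 350,000 per year would leave a net saving of 150,000, which is no better than the middle of that range.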

At a high level, we can plot these strategies into three stages:

- Traditional: Although organizations will allow developers to work with cloud-native tools directly on cloud platforms, most of them will still have traditional IT. When migrating to the cloud, they can opt for two scenarios:

- Lift and shift systems "as-is" to the cloud and start the application's modernization in the cloud.

- Modernize the applications before migrating them to the target cloud platform.

A third scenario would be a mix between the first two.

- Rationalized: This stage is all about modernizing the applications and optimizing them for usage in the target cloud platform. This is the stage where PaaS and SaaS are included to benefit from cloud-native technology.

- Dynamic: This is the final stage where applications are fully cloud-native and therefore completely dynamic: they're managed through agile workstreams using continuous integration and continuous delivery (CI/CD), fully scalable using containers and serverless solutions, and fully automated using the principle of everything as code, making IT as agile as the business requires.

It all comes together in the following model. This model suggests that the three stages are sequential, but as we have already explained, this doesn't have to be the case:

Figure 2.6 – Technology strategy following business innovation

This model shows three trends that will dominate the cloud strategy in the forthcoming years:

- Software to service: Businesses do not have to invest in software anymore, nor in infrastructure to host that software. Instead, they use software that is fully managed by external providers. The idea is that businesses can now focus on fulfilling business requirements using this software, instead of having to worry about the implementation details and hosting and managing the software itself. It's based on the economic theory of endogenous growth, which states that economic growth can be achieved through developing new technologies and improvements in production efficiency. Using PaaS and SaaS, this efficiency can be sped up significantly.

- VM to container: Virtualization brought a lot of efficiency to data centers. Yet, containers are even more efficient in utilizing compute power and storage. Virtual machines still use a lot of system resources because each runs a guest operating system, whereas containers utilize the host operating system and only some supporting libraries on top of that. Containers tend to be more flexible and, as a result, have become increasingly popular for distributing software in a very efficient way. Even large software providers such as SAP already distribute and deploy components using containers. SAP Commerce is supported using Docker containers, running instances of Docker images. These images are built from special file structures mirroring the structures of the components of the SAP Commerce setup.

- Serverless computing: Serverless is about writing and deploying code without worrying about the underlying infrastructure. Developers only pay for what they use, such as processing power or storage. It usually works with triggers and events: an application registers an event (a request) and triggers an action in the backend of that application; for instance, retrieving a certain file. Public cloud platforms offer different serverless solutions: Azure Functions, AWS Lambda, and Google Cloud Functions. Google also initiated Knative, an open source project for running serverless workloads on Kubernetes. Serverless offers a maximum amount of scalability combined with maximum cost control. One remark has to be made at this point: although serverless concepts will become more and more important, it will not be technically possible to fit everything into serverless propositions.
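The trigger-and-event pattern described for serverless computing can be sketched in a few lines. This is a provider-neutral illustration that runs locally, not the API of Azure Functions, AWS Lambda, or any other platform: the `on_event` decorator, the `dispatch` function, the event shape, and the in-memory `FILES` store are all hypothetical stand-ins for what a cloud platform would provide.

```python
from typing import Any, Callable, Dict

Event = Dict[str, Any]
Handler = Callable[[Event], Dict[str, Any]]

# Hypothetical in-memory store standing in for cloud object storage.
FILES = {"report.txt": "Q1 revenue summary"}

# Registry that maps an event type (the trigger) to the code to run.
_handlers: Dict[str, Handler] = {}


def on_event(event_type: str) -> Callable[[Handler], Handler]:
    """Register a function as the action for a given event type."""
    def register(func: Handler) -> Handler:
        _handlers[event_type] = func
        return func
    return register


@on_event("file.requested")
def retrieve_file(event: Event) -> Dict[str, Any]:
    """The action: retrieve a certain file when a request event arrives."""
    name = event["file_name"]
    if name not in FILES:
        return {"status": 404, "body": f"{name} not found"}
    return {"status": 200, "body": FILES[name]}


def dispatch(event: Event) -> Dict[str, Any]:
    """Play the role of the platform: route an event to its handler."""
    handler = _handlers.get(event["type"])
    if handler is None:
        return {"status": 400, "body": f"no handler for {event['type']}"}
    return handler(event)
```

Calling `dispatch({"type": "file.requested", "file_name": "report.txt"})` runs `retrieve_file` and returns the file content. The developer only writes the handler; on a real platform, the registry, the routing, and the scaling are the provider's responsibility, which is the point of the serverless model.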

The Twelve-Factor App

One final topic that we need to discuss in this chapter is the Twelve-Factor App. It's where everything comes together.

The Twelve-Factor App is a methodology for building modern apps, ready for deployment on cloud platforms, abstracted from server infrastructure. In other words, the Twelve-Factor App adopts the serverless concept. Because of the high abstraction layers, the app can scale without changing the architecture or development methodology. The format of the Twelve-Factor App is inspired by two books by Martin Fowler, both published by Addison-Wesley: Patterns of Enterprise Application Architecture (2002) and Refactoring (1999). Although these books were written lightyears ago in terms of cloud technology, they are still very relevant in terms of architecture.

The 12 factors are as follows:

- Code base: One code base is tracked in revision control, with many deployments (including Infrastructure as Code).

- Dependencies: Explicitly declare and isolate dependencies.

- Config: Store config in the environment (Configuration as Code).

- Backing services: Treat backing services as attached resources.

- Build, release, run: Strictly separate build and run stages (pipeline management, release trains).

- Processes: Execute the app as one or more stateless processes.

- Port binding: Export services via port binding.

- Concurrency: Scale out via the process model.

- Disposability: Maximize robustness with fast startup and graceful shutdown.

- Dev/prod parity: Keep development, staging, and production as similar as possible.

- Logs: Treat logs as event streams.

- Admin processes: Run admin/management tasks as one-off processes.

More on these principles can be found at https://12factor.net. We will find out that a number of these 12 principles are also captured in different frameworks, such as DevOps and Site Reliability Engineering, and embedded in the governance of multi-cloud environments.
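To give an impression of what some of these factors look like in practice, here is a minimal sketch covering three of them: Config, Logs, and Disposability. It is an illustration under stated assumptions, not a reference implementation: the variable names `DATABASE_URL` and `PORT`, the default port, and the `Settings`, `load_settings`, `ShutdownFlag`, and `main` names are all hypothetical.

```python
import logging
import os
import signal
import sys
import time
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Settings:
    database_url: str
    port: int


def load_settings(env: Mapping[str, str] = os.environ) -> Settings:
    """Config: read settings from the environment, not from the code base."""
    return Settings(
        database_url=env["DATABASE_URL"],   # required; KeyError if missing
        port=int(env.get("PORT", "8080")),  # optional; assumed default
    )


def configure_logging() -> logging.Logger:
    """Logs: write events to stdout and let the platform route them."""
    logging.basicConfig(stream=sys.stdout, level=logging.INFO,
                        format="%(asctime)s %(levelname)s %(message)s")
    return logging.getLogger("app")


class ShutdownFlag:
    """Disposability: record a termination signal for a clean exit."""

    def __init__(self) -> None:
        self.requested = False

    def __call__(self, signum, frame) -> None:
        self.requested = True


def main() -> None:
    log = configure_logging()
    settings = load_settings()
    stop = ShutdownFlag()
    signal.signal(signal.SIGTERM, stop)
    log.info("serving on port %d", settings.port)
    while not stop.requested:
        time.sleep(0.1)  # placeholder for handling requests
    log.info("shutdown complete")
```

The same build can then run unchanged in development, staging, and production: only the environment variables differ per deployment, which also supports the Dev/prod parity factor.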

Summary

In this chapter, we explored methodologies that are used to analyze enterprise or business strategies and mapped these to a cloud technology roadmap. We also learned that it is close to impossible to keep track of all the new releases and features that are launched by cloud and technology providers. We need to determine what our business goals and objectives are and define a clear architecture that is as future-proof as possible, yet agile enough to adopt new features if the business demands this.

Enterprise architectures using frameworks such as TOGAF and IT4IT help us in designing and managing a multi-cloud architecture that is robust but also scalable in every aspect. We have also seen how IT will shift along with business demands, moving from traditional to rationalized and dynamic environments using software as a service, containers, and serverless concepts, and eventually perhaps adopting the Twelve-Factor methodology.

In the next chapter, we will be getting a bit more technical and will look at cloud connectivity and networking.

Questions

- In this chapter, we discussed the metrics surrounding availability, durability, levels of backup (including RTO/RPO specifications), and the requirements for business continuity (BC) and disaster recovery (DR). What do we call these metrics or measurements?

- What would be the first thing to define if we created a business roadmap?

- In this chapter, we discussed cloud transformation strategies. Rehost and replatform are two of them. Name two more.

- In this chapter, we identified two major developments in the cloud market. The growth of serverless concepts is one of them. What is the other major development?

Further reading

- The Twelve-Factor App (https://12factor.net)

- How Competitive Forces Shape Strategy, by Michael E. Porter, Harvard Business Review