Chapter 2: Securing Compute Services

When discussing cloud services, and Infrastructure as a Service (IaaS) in particular, the most commonly discussed resource is compute: from traditional virtual machines (VMs), through managed databases (which run on VMs on the backend), to modern compute architectures such as containers and, eventually, serverless.

This chapter will cover all types of compute services and provide you with best practices on how to securely deploy and manage each of them.

In this chapter, we will cover the following topics:

- Securing VMs (authentication, network access control, metadata, serial console access, patch management, and backups)

- Securing managed database services (identity management, network access control, data protection, and auditing and monitoring)

- Securing containers (identity management, network access control, auditing and monitoring, and compliance)

- Securing serverless/function as a service (identity management, network access control, auditing and monitoring, compliance, and configuration change)

Technical requirements

For this chapter, you need to have an understanding of VMs, what managed databases are, and what containers (and Kubernetes) are, as well as a fundamental understanding of serverless.

Securing VMs

Each cloud provider has its own implementation of VMs (or virtual servers), but at the end of the day, the basic idea is the same:

- Select a machine type (or size) – a ratio between the amount of virtual CPU (vCPU) and memory, according to your requirements (general-purpose, compute-optimized, memory-optimized, and so on).

- Select a preinstalled image of an operating system (from Windows to Linux flavors).

- Configure storage (adding additional volumes, connecting to file sharing services, and others).

- Configure network settings (from network access controls to micro-segmentation, and others).

- Configure permissions to access cloud resources.

- Deploy an application.

- Begin using the service.

- Carry out ongoing maintenance of the operating system.

According to the shared responsibility model, when using IaaS, we (as the customers) are responsible for the deployment and maintenance of virtual servers, as explained in the coming section.

Next, we are going to see what the best practices are for securing common VM services in AWS, Azure, and GCP.

Securing Amazon Elastic Compute Cloud (EC2)

Amazon EC2 is the Amazon VM service.

General best practices for EC2 instances

Following are some of the best practices to keep in mind:

- Use only trusted Amazon Machine Images (AMIs) when deploying EC2 instances.

- Use a minimal number of packages inside an AMI, to lower the attack surface.

- Use Amazon built-in agents for EC2 instances (backup, patch management, hardening, monitoring, and others).

- Use the new generation of EC2 instances, based on the AWS Nitro System, which offloads virtualization functions (such as network, storage, and security) to dedicated hardware and software. This gives the customer better performance, along with stronger security and isolation of customers' data.

For more information, please refer to the following resources:

Best practices for building AMIs:

https://docs.aws.amazon.com/marketplace/latest/userguide/best-practices-for-building-your-amis.html

Amazon Linux 2:

https://aws.amazon.com/amazon-linux-2/

AWS Nitro System:

https://aws.amazon.com/ec2/nitro/

Best practices for authenticating to an instance

AWS does not have access to customers' VMs.

Whether you deploy a Windows or a Linux machine, when running the EC2 launch wizard, you must either choose an existing key pair or create a new one. AWS keeps only the public key of the pair; the private key is handed to you once, at creation time, and is not retained by AWS, which therefore cannot use it to log in to your EC2 instance.

For Linux instances, the key pair is used for logging in to the machine via the SSH protocol.

Refer to the following link: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-key-pairs.html.

For Windows instances, the key pair is used to retrieve the built-in administrator's password.

Refer to the following link: https://docs.amazonaws.cn/en_us/AWSEC2/latest/WindowsGuide/ec2-windows-passwords.html.

The best practices are as follows:

- Keep your private keys in a secured location. A good alternative for storing and retrieving SSH keys is to use AWS Secrets Manager.

- Avoid storing private keys on a bastion host or any instance directly exposed to the internet. A good alternative that removes the need for SSH keys altogether is to log in through AWS Systems Manager Session Manager.

- Join Windows or Linux instances to an Active Directory (AD) domain and use your AD credentials to log in to the EC2 instances (and avoid using local credentials or SSH keys completely).

For more information, please refer to the following resources:

How to use AWS Secrets Manager to securely store and rotate SSH key pairs:

Allow SSH connections through Session Manager:

Seamlessly join a Windows EC2 instance:

https://docs.aws.amazon.com/directoryservice/latest/admin-guide/launching_instance.html

Seamlessly join a Linux EC2 instance to your AWS-managed Microsoft AD directory:

https://docs.aws.amazon.com/directoryservice/latest/admin-guide/seamlessly_join_linux_instance.html

Best practices for securing network access to an instance

Access to AWS resources and services such as EC2 instances is controlled via security groups (at the EC2 instance level) or a network access control list (NACL) (at the subnet level), which are equivalent to the on-premises layer 4 network firewall or access control mechanism.

As a customer, you configure parameters such as source IP (or CIDR), destination IP (or CIDR), destination port (or predefined protocol), and whether the port is TCP or UDP.

You may also use another security group as either the source or destination in a security group.

For remote access and management of Linux machines, limit inbound network access to TCP port 22.

For remote access and management of Windows machines, limit inbound network access to TCP port 3389.

The best practices are as follows:

- For remote access protocols (SSH/RDP), limit the source IP (or CIDR) to well-known addresses. Good alternatives for allowing remote access protocols to an EC2 instance are to use a VPN tunnel, use a bastion host, or use AWS Systems Manager Session Manager.

- For file sharing protocols (CIFS/SMB/FTP), limit the source IP (or CIDR) to well-known addresses.

- Set names and descriptions for security groups to allow a better understanding of the security group's purpose.

- Use tagging (that is, labeling) for security groups to allow a better understanding of which security group belongs to which AWS resources.

- Limit the number of ports allowed in a security group to the minimum required ports for allowing your service or application to function.
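The first and last practices above can be checked mechanically. The following is a minimal sketch in Python, assuming a simplified, hypothetical rule format (dictionaries with protocol, from_port, to_port, and cidr keys) rather than the exact structure returned by any AWS API. It flags inbound rules that expose SSH or RDP to any source address:

```python
import ipaddress

# Remote access ports named in this section.
REMOTE_ACCESS_PORTS = {22: "SSH", 3389: "RDP"}


def find_exposed_remote_access(rules):
    """Return (port, protocol name, cidr) for each rule that opens SSH/RDP to any address."""
    findings = []
    for rule in rules:
        network = ipaddress.ip_network(rule["cidr"], strict=False)
        if network.prefixlen != 0:
            continue  # the source is restricted to a specific range
        for port, name in REMOTE_ACCESS_PORTS.items():
            if rule["protocol"] == "tcp" and rule["from_port"] <= port <= rule["to_port"]:
                findings.append((port, name, rule["cidr"]))
    return findings


# Hypothetical inbound rules, for illustration only.
example_rules = [
    {"protocol": "tcp", "from_port": 22, "to_port": 22, "cidr": "0.0.0.0/0"},
    {"protocol": "tcp", "from_port": 3389, "to_port": 3389, "cidr": "203.0.113.0/24"},
    {"protocol": "tcp", "from_port": 443, "to_port": 443, "cidr": "0.0.0.0/0"},
]

print(find_exposed_remote_access(example_rules))  # [(22, 'SSH', '0.0.0.0/0')]
```

Only the first rule is reported: the RDP rule is limited to a known range, and the HTTPS rule is open but does not cover a remote access port.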

For more information, please refer to the following resources:

Amazon EC2 security groups for Linux instances: https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/ec2-security-groups.html

Security groups for your virtual private cloud (VPC): https://docs.aws.amazon.com/vpc/latest/userguide/VPC_SecurityGroups.html

AWS Systems Manager Session Manager:

https://docs.aws.amazon.com/systems-manager/latest/userguide/session-manager.html

Compare security groups and network ACLs:

https://docs.aws.amazon.com/vpc/latest/userguide/VPC_Security.html#VPC_Security_Comparison

Best practices for securing instance metadata

Instance metadata is information about a running instance, such as its hostname and internal IP address, exposed through a local metadata service.

Metadata can be retrieved from within the instance, either by opening a browser inside the operating system or by using the command line, at a URL such as http://169.254.169.254/latest/meta-data/.

Even though this is a link-local address (meaning it cannot be reached from outside the instance), the information can, by default, be retrieved locally without authentication, so any process running on the instance, including a compromised application, can read it.

AWS allows you to enforce authenticated or session-oriented requests to the instance metadata, also known as Instance Metadata Service Version 2 (IMDSv2).

The following command uses the AWS CLI tool to enforce IMDSv2 on an existing instance:

aws ec2 modify-instance-metadata-options \
    --instance-id <INSTANCE-ID> \
    --http-endpoint enabled --http-tokens required
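Once IMDSv2 is enforced, a client must first obtain a session token with an HTTP PUT request and then present that token on every metadata request. The following Python sketch shows the two requests using only the standard library. The token path and header names are taken from the AWS IMDSv2 documentation rather than from this chapter, the helper function names are hypothetical, and fetch_metadata only works when run from inside an EC2 instance:

```python
import urllib.request

IMDS_BASE = "http://169.254.169.254/latest"


def build_token_request(ttl_seconds=21600):
    """Build the PUT request that asks IMDSv2 for a session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_metadata_request(path, token):
    """Build the GET request for a metadata path, carrying the session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/meta-data/{path.lstrip('/')}",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_metadata(path, timeout=2.0):
    """Fetch one metadata value; call this only from inside an EC2 instance."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as response:
        token = response.read().decode()
    with urllib.request.urlopen(build_metadata_request(path, token), timeout=timeout) as response:
        return response.read().decode()
```

For example, fetch_metadata("hostname") would return the hostname of the instance. A request sent without the token header is rejected when --http-tokens is set to required.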

For more information, please refer to the following resource:

Configure the instance metadata service:

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/configuring-instance-metadata-service.html

Best practices for securing a serial console connection

For troubleshooting purposes, AWS allows you to connect using a serial console (a similar concept to what we used to have in the physical world with network equipment) to resolve network or operating system problems when SSH or RDP connections are not available.

The following command uses the AWS CLI tool to allow serial access at the AWS account level to a specific AWS Region:

aws ec2 enable-serial-console-access --region <Region_Code>

Since this type of remote connectivity exposes your EC2 instance, we recommend the following best practices:

- Access to the EC2 serial console should be limited to the required group of individuals, using identity and access management (IAM) roles.

- Only allow access to the EC2 serial console when required.

- Always set a user password on an instance before allowing the EC2 serial console.

For more information, please refer to the following resource:

Configure access to the EC2 serial console:

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/configure-access-to-serial-console.html

Best practices for conducting patch management

Patch management is a crucial part of the ongoing maintenance of every instance.

To deploy security patches for either Windows or Linux-based instances in a standard manner, it is recommended to use AWS Systems Manager Patch Manager, following this method:

- Configure the patch baseline.

- Scan your EC2 instances for deviation from the patch baseline at a scheduled interval.

- Install missing security patches on your EC2 instances.

- Review the Patch Manager reports.
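Conceptually, the scan step compares what is installed on each instance with the patch baseline. The following Python sketch illustrates that comparison only. It is not the Patch Manager API, and the patch and instance identifiers are hypothetical:

```python
def find_missing_patches(baseline, installed_by_instance):
    """Map each non-compliant instance ID to the sorted list of baseline patches it lacks."""
    report = {}
    for instance_id, installed in installed_by_instance.items():
        missing = baseline - installed  # set difference: required but not installed
        if missing:
            report[instance_id] = sorted(missing)
    return report


# Hypothetical baseline and inventory, for illustration only.
baseline = {"PATCH-001", "PATCH-002", "PATCH-003"}
installed_by_instance = {
    "i-prod-web-1": {"PATCH-001", "PATCH-002", "PATCH-003"},
    "i-dev-web-1": {"PATCH-001"},
}

print(find_missing_patches(baseline, installed_by_instance))
# {'i-dev-web-1': ['PATCH-002', 'PATCH-003']}
```

Instances that already match the baseline are left out of the report, so an empty result means every scanned instance is compliant.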

The best practices are as follows:

- Use AWS Systems Manager Compliance to make sure all your EC2 instances are up to date.

- Create a group with minimal IAM privileges to allow only relevant team members to conduct patch deployment.

- Use tagging (that is, labeling) for your EC2 instances to allow patch deployment groups per tag (for example, prod versus dev environments).

- For stateless EC2 instances (where no user session data is stored inside an EC2 instance), replace an existing EC2 instance with a new instance, created from an up-to-date operating system image.

For more information, please refer to the following resource:

Software patching with AWS Systems Manager:

https://aws.amazon.com/blogs/mt/software-patching-with-aws-systems-manager/

Best practices for securing backups

Backing up is crucial for EC2 instance recovery.

The AWS Backup service encrypts your backups in transit and at rest using encryption keys stored in AWS Key Management Service (KMS) (as explained in Chapter 7, Applying Encryption in Cloud Services). This is an extra layer of security, independent of your Elastic Block Store (EBS) volume or snapshot encryption keys.

The best practices are as follows:

- Configure the AWS Backup service with an IAM role to allow access to the encryption keys stored inside AWS KMS.

- Configure the AWS Backup service with an IAM role to allow access to your backup vault.

- Use tagging (that is, labeling) for backups to allow a better understanding of which backup belongs to which EC2 instance.

- Consider replicating your backups to another region.

For more information, please refer to the following resources:

Protecting your data with AWS Backup:

https://aws.amazon.com/blogs/storage/protecting-your-data-with-aws-backup/

Creating backup copies across AWS Regions:

https://docs.aws.amazon.com/aws-backup/latest/devguide/cross-region-backup.html

Summary

In this section, we have learned how to securely maintain a VM, based on AWS infrastructure – from logging in to securing network access, troubleshooting using a serial console, patch management, and backup.

Securing Azure Virtual Machines

Azure Virtual Machines is the Azure VM service.

General best practices for Azure Virtual Machines

Following are some of the best practices to keep in mind:

- Use only trusted images when deploying Azure Virtual Machines.

- Use a minimal number of packages inside an image, to lower the attack surface.

- Use Azure built-in agents for Azure Virtual Machines (backup, patch management, hardening, monitoring, and others).

- For highly sensitive environments, use Azure confidential computing images, to ensure security and isolation of customers' data.

For more information, please refer to the following resources:

Azure Image Builder overview:

https://docs.microsoft.com/en-us/azure/virtual-machines/image-builder-overview

Using Azure for cloud-based confidential computing:

Best practices for authenticating to a VM

Microsoft does not have access to customers' VMs.

When you run the create a virtual machine wizard to deploy a new Linux machine, by default, you must either choose an existing key pair or create a new one.

Azure keeps only the public key of the pair; the private key is downloaded once, at creation time, and is not retained by Azure, which therefore cannot use it to log in to your Linux VM.

For Linux instances, the key pair is used for logging in to the machine via the SSH protocol.

For more information, please refer to the following resource:

Generate and store SSH keys in the Azure portal:

https://docs.microsoft.com/en-us/azure/virtual-machines/ssh-keys-portal

For Windows machines, when running the create a new virtual machine wizard, you are asked to specify your own administrator account and password to log in to the machine via the RDP protocol.

The best practices are as follows:

- Keep your credentials in a secured location.

- Avoid storing private keys on a bastion host (VMs directly exposed to the internet).

- Join Windows or Linux instances to an AD domain and use your AD credentials to log in to the VMs (and avoid using local credentials or SSH keys completely).

For more information, please refer to the following resources:

Azure Bastion:

https://azure.microsoft.com/en-us/services/azure-bastion

Join a Windows Server VM to an Azure AD Domain Services-managed domain using a Resource Manager template:

https://docs.microsoft.com/en-us/azure/active-directory-domain-services/join-windows-vm-template

Join a Red Hat Enterprise Linux VM to an Azure AD Domain Services-managed domain:

https://docs.microsoft.com/en-us/azure/active-directory-domain-services/join-rhel-linux-vm

Best practices for securing network access to a VM

Access to Azure resources and services such as VMs is controlled via network security groups, which are equivalent to the on-premises layer 4 network firewall or access control mechanism.

As a customer, you configure parameters such as source IP (or CIDR), destination IP (or CIDR), source port (or a predefined protocol), destination port (or a predefined protocol), whether the port is TCP or UDP, and the action to take (either allow or deny).

For remote access and management of Linux machines, limit inbound network access to TCP port 22.

For remote access and management of Windows machines, limit inbound network access to TCP port 3389.

The best practices are as follows:

- For remote access protocols (SSH/RDP), limit the source IP (or CIDR) to well-known addresses. Good alternatives for allowing remote access protocols to an Azure VM are to use a VPN tunnel, use Azure Bastion, or use Azure Privileged Identity Management (PIM) to allow just-in-time access to a remote VM.

- For file sharing protocols (CIFS/SMB/FTP), limit the source IP (or CIDR) to well-known addresses.

- Set names for network security groups to allow a better understanding of the security group's purpose.

- Use tagging (that is, labeling) for network security groups to allow a better understanding of which network security group belongs to which Azure resources.

- Limit the number of ports allowed in a network security group to the minimum required ports for allowing your service or application to function.

For more information, please refer to the following resources:

Network security groups overview:

https://docs.microsoft.com/en-us/azure/virtual-network/network-security-groups-overview

How to open ports to a VM with the Azure portal:

https://docs.microsoft.com/en-us/azure/virtual-machines/windows/nsg-quickstart-portal

Azure Bastion:

https://azure.microsoft.com/en-us/services/azure-bastion

Azure Privileged Identity Management (PIM):

https://docs.microsoft.com/en-us/azure/active-directory/privileged-identity-management/pim-configure

Best practices for securing a serial console connection

For troubleshooting purposes, Azure allows you to connect using a serial console (a similar concept to what we used to have in the physical world with network equipment) to resolve network or operating system problems when SSH or RDP connections are not available.

The following commands use the Azure CLI tool to allow serial access at the Azure subscription level:

subscriptionId=$(az account show --output=json | jq -r .id)

az resource invoke-action --action enableConsole \
    --ids "/subscriptions/$subscriptionId/providers/Microsoft.SerialConsole/consoleServices/default" \
    --api-version="2018-05-01"

Since this type of remote connectivity exposes your VMs, we recommend the following best practices:

- Access to the serial console should be limited to the group of individuals with the Virtual Machine Contributor role for the VM and the Boot diagnostics storage account.

- Always set a user password on the target VM before allowing access to the serial console.

For more information, please refer to the following resources:

Azure serial console for Linux:

https://docs.microsoft.com/en-us/troubleshoot/azure/virtual-machines/serial-console-linux

Azure serial console for Windows:

https://docs.microsoft.com/en-us/troubleshoot/azure/virtual-machines/serial-console-windows

Best practices for conducting patch management

Patch management is a crucial part of the ongoing maintenance of every instance.

To deploy security patches for either Windows or Linux-based instances in a standard manner, it is recommended to use Azure Automation Update Management, using the following method:

- Create an automation account.

- Enable Update Management for all Windows and Linux machines.

- Configure the schedule settings and reboot options.

- Install missing security patches on your VMs.

- Review the deployment status.

The best practices are as follows:

- Use minimal privileges for the account using Update Management to deploy security patches.

- Use update classifications to define which security patches to deploy.

- When using an Azure Automation account, encrypt sensitive data (such as variable assets).

- When using an Azure Automation account, use private endpoints to disable public network access.

- Use tagging (that is, labeling) for your VMs to allow defining dynamic groups of VMs (for example, prod versus dev environments).

- For stateless VMs (where no user session data is stored inside an Azure VM), replace an existing Azure VM with a new instance, created from an up-to-date operating system image.

For more information, please refer to the following resources:

Azure Automation Update Management:

https://docs.microsoft.com/en-us/azure/architecture/hybrid/azure-update-mgmt

Manage updates and patches for your VMs:

https://docs.microsoft.com/en-us/azure/automation/update-management/manage-updates-for-vm

Update management permissions:

Best practices for securing backups

Backing up is crucial for VM recovery.

The Azure Backup service encrypts your backups in transit and at rest using Azure Key Vault (as explained in Chapter 7, Applying Encryption in Cloud Services).

The best practices are as follows:

- Use Azure role-based access control (RBAC) to configure Azure Backup to have minimal access to your backups.

- For sensitive environments, encrypt data at rest using customer-managed keys.

- Use private endpoints to secure access between your data and the recovery service vault.

- If you need your backups to be compliant with a regulatory standard, use Regulatory Compliance in Azure Policy.

- Use Azure security baselines for Azure Backup (Azure Security Benchmark).

- Enable the soft delete feature to protect your backups from accidental deletion.

- Consider replicating your backups to another region.

For more information, please refer to the following resources:

Security features to help protect hybrid backups that use Azure Backup:

https://docs.microsoft.com/en-us/azure/backup/backup-azure-security-feature

Use Azure RBAC to manage Azure backup recovery points:

https://docs.microsoft.com/en-us/azure/backup/backup-rbac-rs-vault

Azure Policy Regulatory Compliance controls for Azure Backup:

https://docs.microsoft.com/en-us/azure/backup/security-controls-policy

https://docs.microsoft.com/en-us/azure/backup/backup-azure-security-feature-cloud

Cross Region Restore (CRR) for Azure Virtual Machines using Azure Backup:

Summary

In this section, we have learned how to securely maintain a VM, based on Azure infrastructure – from logging in to securing network access, troubleshooting using a serial console, patch management, and backup.

Securing Google Compute Engine (GCE) and VM instances

General best practices for Google VMs

Following are some of the best practices to keep in mind:

- Use only trusted images when deploying Google VMs.

- Use a minimal number of packages inside an image, to lower the attack surface.

- Use GCP built-in agents for Google VMs (patch management, hardening, monitoring, and so on).

- For highly sensitive environments, use Google Confidential Computing images, to ensure security and isolation of customers' data.

For more information, please refer to the following resources:

List of public images available on GCE:

https://cloud.google.com/compute/docs/images

Confidential Computing:

https://cloud.google.com/confidential-computing

Best practices for authenticating to a VM instance

Google does not have access to customers' VM instances.

When you run the create instance wizard, no credentials are generated.

For Linux instances, you need to manually create a key pair and add the public key to either the instance metadata or the entire GCP project metadata to log in to the machine instance via the SSH protocol.

For more information, please refer to the following resource:

Managing SSH keys in metadata:

https://cloud.google.com/compute/docs/instances/adding-removing-ssh-keys

For Windows machine instances, you need to manually reset the built-in administrator's password to log in to the machine instance via the RDP protocol.

For more information, please refer to the following resource:

Creating passwords for Windows VMs:

https://cloud.google.com/compute/docs/instances/windows/creating-passwords-for-windows-instances

The best practices are as follows:

- Keep your private keys in a secured location.

- Avoid storing private keys on a bastion host (machine instances directly exposed to the internet).

- Periodically rotate SSH keys used to access compute instances.

- Periodically review public keys inside the compute instance or GCP project-level SSH key metadata and remove unneeded public keys.

- Join Windows or Linux instances to an AD domain and use your AD credentials to log in to the VMs (and avoid using local credentials or SSH keys completely).
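The periodic review of public keys can be partly automated. The following Python sketch assumes a hypothetical inventory in which each key is recorded with its owner and the date it was added. This is a format of our own for illustration, not the layout of GCP SSH key metadata, and the 90-day threshold is likewise an assumption:

```python
from datetime import date, timedelta


def find_stale_keys(keys, today, max_age_days=90):
    """Return the users whose public key was added more than max_age_days ago."""
    cutoff = today - timedelta(days=max_age_days)
    return [key["user"] for key in keys if key["added_on"] < cutoff]


# Hypothetical key inventory, for illustration only.
inventory = [
    {"user": "alice", "added_on": date(2024, 1, 10)},
    {"user": "bob", "added_on": date(2024, 5, 20)},
]

print(find_stale_keys(inventory, today=date(2024, 6, 1)))  # ['alice']
```

Keys reported by such a review are candidates for rotation or removal from the instance or project metadata.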

For more information, please refer to the following resources:

Quickstart: Joining a Windows VM to a domain:

https://cloud.google.com/managed-microsoft-ad/docs/quickstart-domain-join-windows

Quickstart: Joining a Linux VM to a domain:

https://cloud.google.com/managed-microsoft-ad/docs/quickstart-domain-join-linux

Best practices for securing network access to a VM instance

Access to GCP resources and services such as VM instances is controlled via VPC firewall rules, which are equivalent to the on-premises layer 4 network firewall or access control mechanism.

As a customer, you configure parameters such as the source IP (or CIDR), source service, source tags, destination port (or a predefined protocol), whether the port is TCP or UDP, whether the traffic direction is ingress or egress, and the action to take (either allow or deny).

For remote access and management of Linux machines, limit inbound network access to TCP port 22.

For remote access and management of Windows machines, limit inbound network access to TCP port 3389.

The best practices are as follows:

- For remote access protocols (SSH/RDP), limit the source IP (or CIDR) to well-known addresses.

- For file sharing protocols (CIFS/SMB/FTP), limit the source IP (or CIDR) to well-known addresses.

- Set names and descriptions for firewall rules to allow a better understanding of each firewall rule's purpose.

- Use tagging (that is, labeling) for firewall rules to allow a better understanding of which firewall rule belongs to which GCP resources.

- Limit the number of ports allowed in a firewall rule to the minimum required ports for allowing your service or application to function.

For more information, please refer to the following resource:

Use fewer, broader firewall rule sets when possible:

https://cloud.google.com/architecture/best-practices-vpc-design#fewer-firewall-rules

Best practices for securing a serial console connection

For troubleshooting purposes, GCP allows you to connect using a serial console (a similar concept to what we used to have in the physical world with network equipment) to resolve network or operating system problems when SSH or RDP connections are not available.

The following command uses the Google Cloud SDK to allow serial access on the entire GCP project:

gcloud compute project-info add-metadata \
    --metadata serial-port-enable=TRUE

Since this type of remote connectivity exposes your VMs, it is recommended to follow these best practices:

- Configure password-based login to allow users access to the serial console.

- Disable interactive serial console login per compute instance when not required.

- Enable disconnection when the serial console connection is idle.

- Access to the serial console should be limited to the required group of individuals using Google Cloud IAM roles.

- Always set a user password on the target VM instance before allowing access to the serial console.

For more information, please refer to the following resource:

Troubleshooting using the serial console:

https://cloud.google.com/compute/docs/troubleshooting/troubleshooting-using-serial-console

Best practices for conducting patch management

Patch management is a crucial part of the ongoing maintenance of every instance.

To deploy security patches for either Windows- or Linux-based instances in a standard manner, it is recommended to use Google operating system patch management, using the following method:

- Deploy the operating system config agent on the target instances.

- Create a patch job.

- Run patch deployment.

- Schedule patch deployment.

- Review the deployment status inside the operating system patch management dashboard.

The best practices are as follows:

- Use minimal privileges for the accounts using operating system patch management to deploy security patches, according to Google Cloud IAM roles.

- Gradually deploy security patches zone by zone and region by region.

- Use tagging (that is, labeling) for your VM instances to allow defining groups of VM instances (for example, prod versus dev environments).

- For stateless VMs (where no user session data is stored inside a Google VM), replace an existing Google VM with a new instance, created from an up-to-date operating system image.

For more information, please refer to the following resources:

Operating system patch management:

https://cloud.google.com/compute/docs/os-patch-management

Creating patch jobs:

https://cloud.google.com/compute/docs/os-patch-management/create-patch-job

Best practices for operating system updates at scale:

Summary

In this section, we have learned how to securely maintain a VM, based on GCP infrastructure – from logging in to securing network access, troubleshooting using the serial console, and patch management.

Securing managed database services

Each cloud provider has its own implementation of managed databases.

According to the shared responsibility model, if we choose to use a managed database, the cloud provider is responsible for the operating system and database layers of the managed database (including patch management, backups, and auditing).

If we are required to deploy a specific build of a database, we can always deploy it inside a VM, but according to the shared responsibility model, we are then responsible for the entire operating system and database maintenance (including hardening, backup, patch management, and monitoring).

Cloud providers offer managed solutions for running a database engine, either a common engine such as MySQL, PostgreSQL, Microsoft SQL Server, or Oracle Database, or a proprietary database such as Amazon DynamoDB, Azure Cosmos DB, or Google Cloud Spanner. At the end of the day, the basic idea is the same:

- Select the database type according to its purpose or use case (relational database, NoSQL database, graph database, in-memory database, and others).

- Select a database engine (for example, MySQL, PostgreSQL, Microsoft SQL Server, or Oracle Database server).

- For relational databases, select a machine type (or size) – a ratio between the amount of vCPU and memory, according to your requirements (general-purpose, memory-optimized, and so on).

- Choose whether high availability is required.

- Deploy a managed database instance (or cluster).

- Configure network access control from your cloud environment to your managed database.

- Enable logging for any access attempt or configuration changes in your managed database.

- Configure backups on your managed database for recovery purposes.

- Connect your application to the managed database and begin using the service.

There are various reasons for choosing a managed database solution:

- Maintenance of the database is under the responsibility of the cloud provider.

- Security patch deployment is under the responsibility of the cloud provider.

- Availability of the database is under the responsibility of the cloud provider.

- Backups are included as part of the service (up to a certain amount of storage and amount of backup history).

- Encryption in transit and at rest is embedded as part of a managed solution.

- Auditing is embedded as part of a managed solution.

Since there is a variety of database types and several database engines, in this chapter, we will focus on a single, popular relational database engine – MySQL.

This chapter will not be focusing on non-relational databases.

Next, we are going to see what the best practices are for securing common managed MySQL database services from AWS, Azure, and GCP.

Securing Amazon RDS for MySQL

Amazon Relational Database Service (RDS) for MySQL is the Amazon-managed MySQL service.

Best practices for configuring IAM for a managed MySQL database service

Amazon RDS for MySQL supports the following types of authentication methods:

- Local username/password authentication against MySQL's built-in authentication mechanism.

- AWS IAM database authentication.

- AWS Directory Service for Microsoft AD authentication.

The best practices are as follows:

- For the local MySQL master user, create a strong and complex password (at least 15 characters, made up of lowercase and uppercase letters, numbers, and special characters), and keep the password in a secured location.

- For end users who need direct access to the managed database, the preferred method is to use the AWS IAM service, since it allows you to centrally manage all user identities, control their password policy, conduct an audit on their actions (that is, API calls), and, in the case of a suspicious security incident, disable the user identity.

- If you manage your user identities using AWS Directory Service for Microsoft AD (AWS-managed Microsoft AD), use this service to authenticate your end users using the Kerberos protocol.
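
The password guidance above can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed tool: the function name `generate_master_password` and the symbol set are assumptions made for the example (the symbols deliberately avoid characters such as `/`, `@`, and quotes, which some managed database services reject, so check your provider's rules).

```python
import secrets
import string

# Assumption: the 15-character minimum and the four character classes come
# from the guidance above; the symbol set is an arbitrary, hypothetical choice.
SYMBOLS = "!#$%&()*+,-.:;<=>?[]^_{|}~"
CLASSES = (string.ascii_lowercase, string.ascii_uppercase, string.digits, SYMBOLS)


def generate_master_password(length: int = 20) -> str:
    """Return a random password containing every character class."""
    if length < 15:
        raise ValueError("use at least 15 characters")
    alphabet = "".join(CLASSES)
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Retry until each class is represented at least once.
        if all(any(ch in cls for ch in candidate) for cls in CLASSES):
            return candidate


password = generate_master_password()
```

The `secrets` module is used instead of `random` because it draws from a cryptographically secure source. The generated value should go straight into a secrets store rather than into source code or logs.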

For more information, please refer to the following resources:

IAM database authentication for MySQL:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/UsingWithRDS.IAMDBAuth.html

Using Kerberos authentication for MySQL:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/mysql-kerberos.html

Best practices for securing network access to a managed MySQL database service

Access to a managed MySQL database service is controlled via database security groups, which are equivalent to security groups and the on-premises layer 4 network firewall or access control mechanism.

As a customer, you configure parameters such as the source IP (or CIDR) of your web or application servers and the destination IP (or CIDR) of your managed MySQL database service, and AWS configures the port automatically.

The best practices are as follows:

- Managed databases must never be accessible from the internet or a publicly accessible subnet – always use private subnets to deploy your databases.

- Configure security groups for your web or application servers and set those security groups as the allowed source when creating a database security group.

- If you need to manage the MySQL database service, either use an EC2 instance (or bastion host) to manage the MySQL database remotely or create a VPN tunnel from your remote machine to the managed MySQL database.

- The Amazon RDS API endpoint is located outside the customer's VPC. To reach it from your VPC without sending network traffic over the public internet, use AWS PrivateLink through an interface VPC endpoint.

- Set names and descriptions for the database security groups to allow a better understanding of the database security group's purpose.

- Use tagging (that is, labeling) for database security groups to allow a better understanding of which database security group belongs to which AWS resources.
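
As a rough illustration of the network rules above, the following sketch flags source CIDR ranges that are open to the world or larger than expected. The function name `overly_broad_sources`, the `max_hosts` threshold, and the sample rules are all hypothetical; in practice, the list of CIDRs would come from your own security group inventory.

```python
import ipaddress


def overly_broad_sources(cidrs, max_hosts=256):
    """Return the source CIDRs that are open to the world or unusually large."""
    flagged = []
    for cidr in cidrs:
        network = ipaddress.ip_network(cidr, strict=False)
        # A /0 prefix means "any address"; large ranges deserve a second look.
        if network.prefixlen == 0 or network.num_addresses > max_hosts:
            flagged.append(cidr)
    return flagged


# Hypothetical inbound rules for the MySQL port.
rules = ["10.0.1.0/24", "0.0.0.0/0", "192.168.0.0/16"]
print(overly_broad_sources(rules))  # ['0.0.0.0/0', '192.168.0.0/16']
```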

For more information, please refer to the following resources:

Controlling access to RDS with security groups:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Overview.RDSSecurityGroups.html

Amazon RDS API and interface VPC endpoints (AWS PrivateLink):

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/vpc-interface-endpoints.html

Best practices for protecting data stored in a managed MySQL database service

A database is meant to store data.

In many cases, a database (and, in this case, a managed MySQL database) may contain sensitive customer data (from a retail store containing customers' data to an organization's sensitive HR data).

To protect customers' data, it is recommended to encrypt data both in transit (when data passes through the network), to prevent interception by an external party, and at rest (data stored inside a database), to avoid data being revealed, even by an internal database administrator.

Encryption allows you to maintain data confidentiality and data integrity (make sure your data is not changed by an untrusted party).

The best practices are as follows:

- Enable SSL/TLS 1.2 transport layer encryption for connections to your database.

- For non-sensitive environments, encrypt data at rest using AWS KMS (as explained in Chapter 7, Applying Encryption in Cloud Services).

- For sensitive environments, encrypt data at rest using customer master key (CMK) management (as explained in Chapter 7, Applying Encryption in Cloud Services).
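
On the client side, the TLS 1.2 recommendation can be enforced before any database driver is involved. The following is a minimal sketch using only Python's standard `ssl` module; the helper name `build_tls_context` and its `ca_bundle_path` parameter are assumptions for the example, and how the resulting context is handed to a MySQL driver depends on the driver you use.

```python
import ssl


def build_tls_context(ca_bundle_path=None):
    """Create a client-side context that refuses anything older than TLS 1.2."""
    # With cafile=None, the system's default trusted CA certificates are loaded.
    context = ssl.create_default_context(cafile=ca_bundle_path)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_tls_context()
```

A context created this way also verifies the server certificate and hostname by default, which guards against connecting to an impostor endpoint.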

For more information, please refer to the following resources:

Using SSL with a MySQL database instance:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_MySQL.html#MySQL.Concepts.SSLSupport

Updating applications to connect to MySQL database instances using new SSL/TLS certificates:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/ssl-certificate-rotation-mysql.html

Select the right encryption options for Amazon RDS database engines:

CMK management:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/Overview.Encryption.Keys.html

Best practices for conducting auditing and monitoring for a managed MySQL database service

Auditing is a crucial part of data protection.

As with any other managed service, AWS allows you to enable logging and auditing using two built-in services:

- Amazon CloudWatch: A service that allows you to log database activities and raise an alarm according to predefined thresholds (for example, a high number of failed logins)

- AWS CloudTrail: A service that allows you to monitor API activities (basically, any action performed as part of the AWS RDS API)

The best practices are as follows:

- Enable Amazon CloudWatch alarms for high resource usage (which may indicate anomalies in the database behavior).

- Enable AWS CloudTrail for any database, to log any API activity performed on the database by any user, role, or AWS service.

- Limit access to the CloudTrail logs to the minimum number of employees – preferably in an AWS management account, outside the scope of your end users (including outside the scope of your database administrators), to avoid possible deletion or changes to the audit logs.
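
To make the CloudTrail recommendation more concrete, the sketch below scans the JSON content of a CloudTrail log file for RDS API calls that change or remove a database. The set of actions in `SENSITIVE_ACTIONS`, the function name, and the sample records (including the account ID and user names) are assumptions chosen for illustration; adjust the list to the actions that matter in your environment.

```python
import json

# Assumption: these RDS API actions are the ones worth reviewing.
SENSITIVE_ACTIONS = {"DeleteDBInstance", "ModifyDBInstance", "DeleteDBSnapshot"}


def sensitive_rds_events(log_text):
    """Yield (time, caller, action) for sensitive RDS calls in a CloudTrail log."""
    for record in json.loads(log_text).get("Records", []):
        if record.get("eventSource") != "rds.amazonaws.com":
            continue
        if record.get("eventName") in SENSITIVE_ACTIONS:
            caller = record.get("userIdentity", {}).get("arn", "unknown")
            yield record.get("eventTime"), caller, record["eventName"]


# Hypothetical log content with one sensitive and one read-only call.
sample_log = json.dumps({
    "Records": [
        {"eventSource": "rds.amazonaws.com", "eventName": "DeleteDBInstance",
         "eventTime": "2024-01-01T10:00:00Z",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/alice"}},
        {"eventSource": "rds.amazonaws.com", "eventName": "DescribeDBInstances",
         "eventTime": "2024-01-01T10:05:00Z",
         "userIdentity": {"arn": "arn:aws:iam::111122223333:user/bob"}},
    ]
})

for event in sensitive_rds_events(sample_log):
    print(event)
```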

For more information, please refer to the following resources:

Using Amazon RDS event notifications:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/USER_Events.html

Working with AWS CloudTrail and Amazon RDS:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/logging-using-cloudtrail.html

Summary

In this section, we have learned how to securely maintain a managed MySQL database, based on AWS infrastructure – from logging in, to securing network access, to data encryption (in transit and at rest), and logging and auditing.

Securing Azure Database for MySQL

Azure Database for MySQL is the Azure-managed MySQL service.

Best practices for configuring IAM for a managed MySQL database service

Azure Database for MySQL supports the following types of authentication methods:

- Local username/password authentication against the MySQL built-in authentication mechanism

- Azure AD authentication

The best practices are as follows:

- For the local MySQL master user, create a strong and complex password (at least 15 characters, made up of lowercase and uppercase letters, numbers, and special characters), and keep the password in a secured location.

- For end users who need direct access to the managed database, the preferred method is to use Azure AD authentication, since it allows you to centrally manage all user identities, control their password policy, conduct an audit on their actions (that is, API calls), and, in the case of a suspicious security incident, disable the user identity.

For more information, please refer to the following resources:

Use Azure AD for authenticating with MySQL:

https://docs.microsoft.com/en-us/azure/mysql/concepts-azure-ad-authentication

Use Azure AD for authentication with MySQL:

https://docs.microsoft.com/en-us/azure/mysql/howto-configure-sign-in-azure-ad-authentication

Best practices for securing network access to a managed MySQL database service

Access to a managed MySQL database service is controlled via firewall rules, which allow you to configure which IP addresses (or CIDR) are allowed to access your managed MySQL database.

The best practices are as follows:

- Managed databases must never be accessible from the internet or a publicly accessible subnet – always use private subnets to deploy your databases.

- Configure the start IP and end IP of your web or application servers, to limit access to your managed database.

- If you need to manage the MySQL database service, either use an Azure VM (or bastion host) to manage the MySQL database remotely or create a VPN tunnel from your remote machine to the managed MySQL database.

- Since Azure Database for MySQL is a managed service, it is located outside the customer's virtual network (VNet). An alternative to secure access from your VNet to Azure Database for MySQL is to use a VNet service endpoint, which avoids sending network traffic outside your VNet, through a secure channel.
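
Because the firewall rules above are expressed as a start IP and an end IP, it can help to derive both values from the CIDR block your web or application servers already use. The following sketch does that with Python's standard `ipaddress` module; the function name `firewall_range` and the sample subnet are hypothetical.

```python
import ipaddress


def firewall_range(cidr):
    """Return the (start IP, end IP) pair that covers the given CIDR block."""
    network = ipaddress.ip_network(cidr)
    return str(network[0]), str(network[-1])


# Hypothetical application subnet.
print(firewall_range("10.1.2.0/28"))  # ('10.1.2.0', '10.1.2.15')
```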

For more information, please refer to the following resources:

Azure Database for MySQL server firewall rules:

https://docs.microsoft.com/en-us/azure/mysql/concepts-firewall-rules

Use VNet service endpoints and rules for Azure Database for MySQL:

https://docs.microsoft.com/en-us/azure/mysql/concepts-data-access-and-security-vnet

Best practices for protecting data stored in a managed MySQL database service

A database is meant to store data.

In many cases, a database (and, in this case, a managed MySQL database) may contain sensitive customer data (from a retail store containing customers' data to an organization's sensitive HR data).

To protect customers' data, it is recommended to encrypt data both in transit (when the data passes through the network), to prevent interception by an external party, and at rest (data stored inside a database), to avoid data being revealed, even by an internal database administrator.

Encryption allows you to maintain data confidentiality and data integrity (make sure your data is not changed by an untrusted party).

The best practices are as follows:

- Enable TLS 1.2 transport layer encryption to your database.

- For sensitive environments, encrypt data at rest using customer-managed keys stored inside the Azure Key Vault service (as explained in Chapter 7, Applying Encryption in Cloud Services).

- Keep your customer-managed keys in a secured location for backup purposes.

- Enable the soft delete and purge protection features on Azure Key Vault to avoid accidental key deletion (which will harm your ability to access your encrypted data).

- Enable auditing on all activities related to encryption keys.

For more information, please refer to the following resources:

Azure Database for MySQL data encryption with a customer-managed key:

https://docs.microsoft.com/en-us/azure/mysql/concepts-data-encryption-mysql

Azure security baseline for Azure Database for MySQL:

https://docs.microsoft.com/en-us/security/benchmark/azure/baselines/mysql-security-baseline

Best practices for conducting auditing and monitoring for a managed MySQL database service

Auditing is a crucial part of data protection.

As with any other managed service, Azure allows you to enable logging and auditing using two built-in services:

- Built-in Azure Database for MySQL audit logs

- Azure Monitor logs

The best practices are as follows:

- Enable audit logs for MySQL.

- Use the Azure Monitor service to detect failed connections.

- Limit access to the Azure Monitor service data to the minimum number of employees to avoid possible deletion or changes to the audit logs.

- Use Advanced Threat Protection for Azure Database for MySQL to detect anomalies or unusual activity in the MySQL database.

For more information, please refer to the following resources:

Audit logs in Azure Database for MySQL:

https://docs.microsoft.com/en-us/azure/mysql/concepts-audit-logs

Configure and access audit logs for Azure Database for MySQL in the Azure portal:

https://docs.microsoft.com/en-us/azure/mysql/howto-configure-audit-logs-portal

Best practices for alerting on metrics with Azure Database for MySQL monitoring:

Security considerations for monitoring data:

Summary

In this section, we have learned how to securely maintain a managed MySQL database, based on Azure infrastructure – from logging in, to securing network access, to data encryption (in transit and at rest), and logging and auditing.

Securing Google Cloud SQL for MySQL

Google Cloud SQL for MySQL is the Google-managed MySQL service.

Best practices for configuring IAM for a managed MySQL database service

Google Cloud SQL for MySQL supports the following types of authentication methods:

- Local username/password authentication against the MySQL built-in authentication mechanism

- Google Cloud IAM authentication

The best practices are as follows:

- For the local MySQL master user, create a strong and complex password (at least 15 characters, made up of lowercase and uppercase letters, numbers, and special characters), and keep the password in a secured location.

- For end users who need direct access to the managed database, the preferred method is to use Google Cloud IAM authentication, since it allows you to centrally manage all user identities, control their password policy, conduct an audit on their actions (that is, API calls), and, in the case of a suspicious security incident, disable the user identity.

For more information, please refer to the following resources:

Creating and managing MySQL users:

https://cloud.google.com/sql/docs/mysql/create-manage-users

MySQL users:

https://cloud.google.com/sql/docs/mysql/users

Roles and permissions in Cloud SQL:

https://cloud.google.com/sql/docs/mysql/roles-and-permissions

Best practices for securing network access to a managed MySQL database service

Access to a managed MySQL database service is controlled via one of the following options:

- Authorized networks: Allows you to configure which IP addresses (or CIDR) are allowed to access your managed MySQL database

- Cloud SQL Auth proxy: Client installed on your application side, which handles authentication to the Cloud SQL for MySQL database in a secure and encrypted tunnel

The best practices are as follows:

- Managed databases must never be accessible from the internet or a publicly accessible subnet – always use private subnets to deploy your databases.

- If possible, the preferred option is to use the Cloud SQL Auth proxy.

- Configure authorized networks for your web or application servers to allow them access to your Cloud SQL for MySQL.

- If you need to manage the MySQL database service, use either a GCE VM instance to manage the MySQL database remotely or a Cloud VPN (configures an IPSec tunnel to a VPN gateway device).
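
The network recommendations above can be checked against an exported instance description. The sketch below assumes the description is a dictionary shaped like a Cloud SQL instance resource, with `settings.ipConfiguration.ipv4Enabled` and `settings.ipConfiguration.authorizedNetworks[].value`; treat those field names, the function name, and the sample data as assumptions to verify against the output of your own tooling.

```python
def cloud_sql_network_findings(instance):
    """Return human-readable findings for risky network settings."""
    ip_config = instance.get("settings", {}).get("ipConfiguration", {})
    findings = []
    if ip_config.get("ipv4Enabled", False):
        findings.append("public IP is enabled")
    for entry in ip_config.get("authorizedNetworks", []):
        if entry.get("value") == "0.0.0.0/0":
            findings.append("authorized network allows any address")
    return findings


# Hypothetical instance description.
instance = {
    "settings": {
        "ipConfiguration": {
            "ipv4Enabled": True,
            "authorizedNetworks": [{"name": "everyone", "value": "0.0.0.0/0"}],
        }
    }
}
print(cloud_sql_network_findings(instance))
```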

For more information, please refer to the following resources:

Authorizing with authorized networks:

https://cloud.google.com/sql/docs/mysql/authorize-networks

Connecting using the Cloud SQL Auth proxy:

https://cloud.google.com/sql/docs/mysql/connect-admin-proxy

Cloud VPN overview:

https://cloud.google.com/network-connectivity/docs/vpn/concepts/overview

Best practices for protecting data stored in a managed MySQL database service

A database is meant to store data.

In many cases, a database (and, in this case, a managed MySQL database) may contain sensitive customer data (from a retail store containing customer data to an organization's sensitive HR data).

To protect customers' data, it is recommended to encrypt data both in transit (when the data passes through the network), to prevent interception by an external party, and at rest (data stored inside a database), to avoid data being revealed, even by an internal database administrator.

Encryption allows you to maintain data confidentiality and data integrity (make sure your data is not changed by an untrusted party).

The best practices are as follows:

- Enforce TLS 1.2 transport layer encryption on your database.

- For sensitive environments, encrypt data at rest using customer-managed encryption keys (CMEKs) stored inside the Google Cloud KMS service (as explained in Chapter 7, Applying Encryption in Cloud Services).

- When using CMEKs, create a dedicated service account, and grant that service account permission to access the encryption keys inside Google Cloud KMS.

- Enable auditing on all activities related to encryption keys.

For more information, please refer to the following resources:

Configuring SSL/TLS certificates:

https://cloud.google.com/sql/docs/mysql/configure-ssl-instance#enforce-ssl

https://cloud.google.com/sql/docs/mysql/client-side-encryption

Overview of CMEKs:

https://cloud.google.com/sql/docs/mysql/cmek

Using CMEKs:

https://cloud.google.com/sql/docs/mysql/configure-cmek

Best practices for conducting auditing and monitoring for a managed MySQL database service

Auditing is a crucial part of data protection.

As with any other managed service, GCP allows you to enable logging and auditing using Google Cloud Audit Logs.

The best practices are as follows:

- Admin activity audit logs are enabled by default and cannot be disabled.

- Explicitly enable data access audit logs to log activities performed on the database.

- Limit the access to audit logs to the minimum number of employees to avoid possible deletion or changes to the audit logs.
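
Data access audit logs are switched on through the `auditConfigs` section of an IAM policy. As a minimal sketch, the following Python builds that fragment for a single service; the function name is hypothetical, and the service name `cloudsql.googleapis.com` is assumed here to be the relevant one for Cloud SQL, so confirm it against your own project before applying the policy.

```python
import json


def data_access_audit_config(service):
    """Build the IAM policy fragment that enables data access audit logs."""
    return {
        "auditConfigs": [
            {
                "service": service,
                "auditLogConfigs": [
                    {"logType": "ADMIN_READ"},
                    {"logType": "DATA_READ"},
                    {"logType": "DATA_WRITE"},
                ],
            }
        ]
    }


fragment = data_access_audit_config("cloudsql.googleapis.com")
print(json.dumps(fragment, indent=2))
```

The fragment only describes what to log; it still has to be merged into the project's IAM policy with the tooling you normally use.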

For more information, please refer to the following resources:

Audit logs:

https://cloud.google.com/sql/docs/mysql/audit-logging

https://cloud.google.com/logging/docs/audit

Configuring data access audit logs:

https://cloud.google.com/logging/docs/audit/configure-data-access

Permissions and roles:

https://cloud.google.com/logging/docs/access-control#permissions_and_roles

Summary

In this section, we have learned how to securely maintain a managed MySQL database, based on GCP infrastructure – from logging in, to securing network access, to data encryption (in transit and at rest), and logging and auditing.

Securing containers

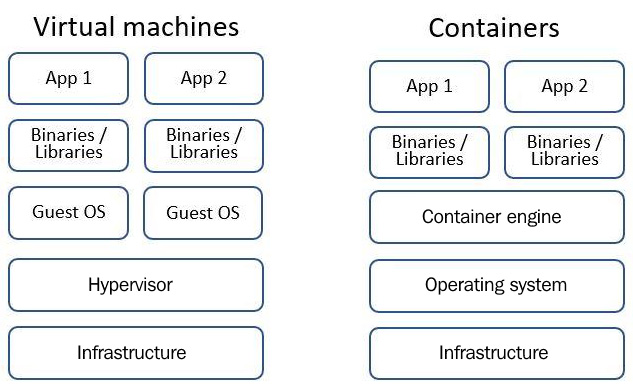

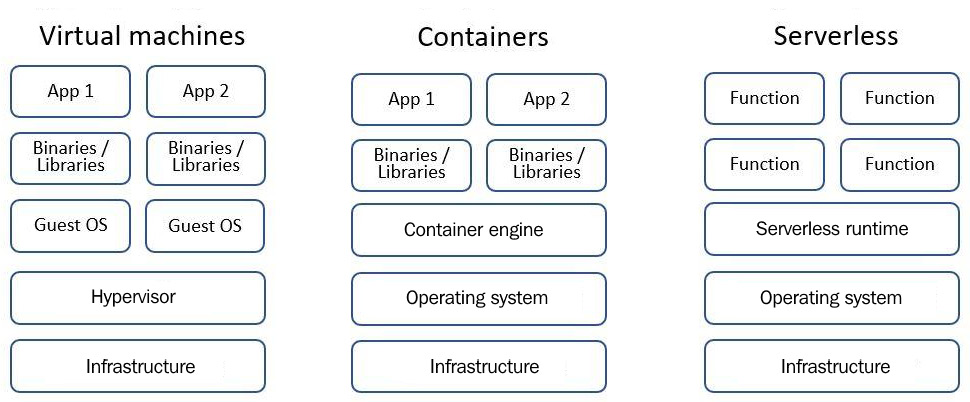

Following VMs, the next evolution in the compute era is containers.

Containers behave like VMs, but with a much smaller footprint.

Instead of having to deploy an application above an entire operating system, you could use containers to deploy your required application, with only the minimum required operating system libraries and binaries.

Containers have the following benefits over VMs:

- Small footprint: Only required libraries and binaries are stored inside a container.

- Portability: You can develop an application inside a container on your laptop and run it at a large scale in a production environment with hundreds or thousands of container instances.

- Fast deployment and updates compared to VMs.

The following diagram presents the architectural differences between VMs and containers:

Figure 2.1 – VMs versus containers

If you are still in the development phase, you can install a container engine on your laptop and create a new container (or download an existing container) locally, until you complete the development phase.

When you move to production and have a requirement to run hundreds of container instances, you need an orchestrator – a mechanism (or a managed service) for managing container deployment, health check monitoring, container recycling, and more.

Docker was adopted by the industry as a de facto standard for wrapping containers, and in the past couple of years, more and more cloud vendors have begun to support a new initiative for wrapping containers called the Open Container Initiative (OCI).

Kubernetes is an open source project (developed initially by Google) and is now considered the industry de facto standard for orchestrating, deploying, scaling, and managing containers.

In this section, I will present the most common container orchestrators available as managed services.

For more information, please refer to the following resources:

What is a container?

https://www.docker.com/resources/what-container

Open Container Initiative:

OCI artifact support in Amazon ECR:

https://aws.amazon.com/blogs/containers/oci-artifact-support-in-amazon-ecr/

Azure and OCI images:

GCP and OCI image format:

https://cloud.google.com/artifact-registry/docs/supported-formats#oci

The Kubernetes project:

Next, we are going to see what the best practices are for securing common container and Kubernetes services from AWS, Azure, and GCP.

Securing Amazon Elastic Container Service (ECS)

ECS is the Amazon-managed container orchestration service.

It can integrate with other AWS services such as Amazon Elastic Container Registry (ECR) for storing containers, AWS IAM for managing permissions to ECS, and Amazon CloudWatch for monitoring ECS.

Best practices for configuring IAM for Amazon ECS

AWS IAM is the supported service for managing permissions to access and run containers through Amazon ECS.

The best practices are as follows:

- Grant minimal IAM permissions for the Amazon ECS service (for running tasks, accessing S3 buckets, monitoring using CloudWatch Events, and so on).

- If you are managing multiple AWS accounts, use temporary credentials (using AWS Security Token Service or the AssumeRole capability) to manage ECS on the target AWS account with credentials from a source AWS account.

- Use service roles to allow the ECS service to assume your role and access resources such as S3 buckets, RDS databases, and so on.

- Use IAM roles to control access to Amazon Elastic File System (EFS) from ECS.

- Enforce multi-factor authentication (MFA) for end users who have access to the AWS console and perform privileged actions such as managing the ECS service.

- Enforce policy conditions such as requiring end users to connect to the ECS service using a secured channel (SSL/TLS), connect using MFA, log in only at specific hours of the day, and so on.

- Store your container images inside Amazon ECR and grant minimal IAM permissions for accessing and managing Amazon ECR.
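
The MFA and secured-channel conditions above can be expressed as an IAM policy document. The following is a sketch that builds such a document in Python using the global condition keys `aws:MultiFactorAuthPresent` and `aws:SecureTransport`; the function name and statement IDs are hypothetical, and the policy is meant for human users only, since identities that never carry MFA context (such as service roles) would be denied by the first statement.

```python
import json


def deny_ecs_without_mfa_or_tls():
    """Build a policy that denies ECS actions made without MFA or without TLS."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyEcsWithoutMfa",
                "Effect": "Deny",
                "Action": "ecs:*",
                "Resource": "*",
                # BoolIfExists also denies requests where the key is absent.
                "Condition": {"BoolIfExists": {"aws:MultiFactorAuthPresent": "false"}},
            },
            {
                "Sid": "DenyEcsWithoutTls",
                "Effect": "Deny",
                "Action": "ecs:*",
                "Resource": "*",
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            },
        ],
    }


policy = deny_ecs_without_mfa_or_tls()
print(json.dumps(policy, indent=2))
```

Explicit deny statements like these complement, rather than replace, the minimal allow permissions granted elsewhere.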

For more information, please refer to the following resources:

Amazon ECS container instance IAM role:

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/instance_IAM_role.html

IAM roles for tasks:

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/task-iam-roles.html

Authorization based on Amazon ECS tags:

Using IAM to control filesystem data access:

https://docs.aws.amazon.com/efs/latest/ug/iam-access-control-nfs-efs.html

Amazon ECS task and container security:

https://docs.aws.amazon.com/AmazonECS/latest/bestpracticesguide/security-tasks-containers.html

Best practices for securing network access to Amazon ECS

Since Amazon ECS is a managed service, it is located outside the customer's VPC. An alternative to secure access from your VPC to the managed ECS environment is to use AWS PrivateLink, which avoids sending network traffic outside your VPC, through a secure channel, using an interface VPC endpoint.

The best practices are as follows:

- Use a secured channel (TLS 1.2) to control Amazon ECS using API calls.

- Use VPC security groups to allow access from your VPC to the Amazon ECS VPC endpoint.

- If you use AWS Secrets Manager to store sensitive data (such as credentials) from Amazon ECS, use a Secrets Manager VPC endpoint when configuring security groups.

- If you use AWS Systems Manager to remotely execute commands on Amazon ECS, use Systems Manager VPC endpoints when configuring security groups.

- Store your container images inside Amazon ECR and for non-sensitive environments, encrypt your container images inside Amazon ECR using AWS KMS (as explained in Chapter 7, Applying Encryption in Cloud Services).

- For sensitive environments, encrypt your container images inside Amazon ECR using CMK management (as explained in Chapter 7, Applying Encryption in Cloud Services).

- If you use Amazon ECR to store your container images, use VPC security groups to allow access from your VPC to the Amazon ECR interface VPC endpoint.

For more information, please refer to the following resource:

Amazon ECS interface VPC endpoints (AWS PrivateLink):

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/vpc-endpoints.html

Best practices for conducting auditing and monitoring in Amazon ECS

Auditing is a crucial part of data protection.

As with any other managed service, AWS allows you to enable logging and auditing using two built-in services:

- Amazon CloudWatch: A service that allows you to log containers' activities and raise an alarm according to predefined thresholds (for example, low memory resources or high CPU, which requires up-scaling your ECS cluster)

- AWS CloudTrail: A service that allows you to monitor API activities (basically, any action performed on the ECS cluster)

The best practices are as follows:

- Enable Amazon CloudWatch alarms for high resource usage (which may indicate an anomaly in the ECS cluster behavior).

- Enable AWS CloudTrail for any action performed on the ECS cluster.

- Limit the access to the CloudTrail logs to the minimum number of employees – preferably in an AWS management account, outside the scope of your end users (including outside the scope of your ECS cluster administrators), to avoid possible deletion or changes to the audit logs.

For more information, please refer to the following resources:

Logging and monitoring in Amazon ECS:

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/ecs-logging-monitoring.html

Logging Amazon ECS API calls with AWS CloudTrail:

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/logging-using-cloudtrail.html

Best practices for enabling compliance on Amazon ECS

Security configuration is a crucial part of your infrastructure.

Amazon allows you to conduct ongoing compliance checks against well-known security standards (such as the Center for Internet Security Benchmarks).

The best practices are as follows:

- Use only trusted container images and store them inside Amazon ECR – a private repository for storing your organizational images.

- Run the Docker Bench for Security tool on a regular basis to check for compliance with CIS Benchmarks for Docker containers.

- Build your container images from scratch (to avoid malicious code in preconfigured third-party images).

- Scan your container images for vulnerabilities in libraries and binaries and update your images on a regular basis.

- Configure your images with a read-only root filesystem to avoid unintended upload of malicious code into your images.
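
Two of the practices above, pulling images only from a private ECR registry and running with a read-only root filesystem, can be checked directly on an ECS task definition. The sketch below assumes the task definition is available as a dictionary with a `containerDefinitions` list whose entries carry `image` and `readonlyRootFilesystem`; the function name, the registry pattern, and the sample data are illustrative assumptions.

```python
import re

# Assumption: private ECR image URIs follow <account>.dkr.ecr.<region>.amazonaws.com/...
ECR_IMAGE = re.compile(r"^\d{12}\.dkr\.ecr\.[a-z0-9-]+\.amazonaws\.com/")


def task_definition_findings(task_definition):
    """Return findings for containers that break the image or filesystem rules."""
    findings = []
    for container in task_definition.get("containerDefinitions", []):
        name = container.get("name", "<unnamed>")
        if not ECR_IMAGE.match(container.get("image", "")):
            findings.append(f"{name}: image is not pulled from a private ECR registry")
        if not container.get("readonlyRootFilesystem", False):
            findings.append(f"{name}: root filesystem is writable")
    return findings


# Hypothetical task definition with one compliant and one non-compliant container.
task_definition = {
    "containerDefinitions": [
        {"name": "web",
         "image": "111122223333.dkr.ecr.eu-west-1.amazonaws.com/web:1.4",
         "readonlyRootFilesystem": True},
        {"name": "sidecar", "image": "example/sidecar:latest"},
    ]
}
for finding in task_definition_findings(task_definition):
    print(finding)
```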

For more information, please refer to the following resources:

Docker Bench for Security:

https://github.com/docker/docker-bench-security

Amazon ECR private repositories:

https://docs.aws.amazon.com/AmazonECR/latest/userguide/Repositories.html

Summary

In this section, we have learned how to securely maintain Amazon ECS, based on AWS infrastructure – from logging in, to securing network access, to logging and auditing, and security compliance.

Securing Amazon Elastic Kubernetes Service (EKS)

EKS is the Amazon-managed Kubernetes orchestration service.

It can integrate with other AWS services, such as Amazon ECR for storing containers, AWS IAM for managing permissions to EKS, and Amazon CloudWatch for monitoring EKS.

Best practices for configuring IAM for Amazon EKS

AWS IAM is the supported service for managing permissions to access and run containers through Amazon EKS.

The best practices are as follows:

- Grant minimal IAM permissions for accessing and managing Amazon EKS.

- If you are managing multiple AWS accounts, use temporary credentials (using AWS Security Token Service or the AssumeRole capability) to manage EKS on the target AWS account with credentials from a source AWS account.

- Use service roles to allow the EKS service to assume your role and access resources such as S3 buckets and RDS databases.

- For authentication purposes, avoid using service account tokens.

- Create an IAM role for each newly created EKS cluster.

- Create a service account for each newly created application.

- Always run applications using a non-root user.

- Use IAM roles to control access to storage services (such as Amazon EBS, Amazon EFS, and Amazon FSx for Lustre) from EKS.

- Enforce MFA for end users who have access to the AWS console and perform privileged actions such as managing the EKS service.

- Store your container images inside Amazon ECR and grant minimal IAM permissions for accessing and managing Amazon ECR.
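
The recommendations to create a service account per application and to run as a non-root user can be sketched as Kubernetes manifests built in Python. The annotation key `eks.amazonaws.com/role-arn` is the one used by IAM roles for service accounts; the helper names, the `-sa` naming convention, the user ID, and the role ARN are hypothetical values chosen for the example.

```python
import json


def service_account_manifest(app, namespace, role_arn):
    """Build a per-application ServiceAccount bound to a dedicated IAM role."""
    return {
        "apiVersion": "v1",
        "kind": "ServiceAccount",
        "metadata": {
            "name": f"{app}-sa",
            "namespace": namespace,
            "annotations": {"eks.amazonaws.com/role-arn": role_arn},
        },
    }


def pod_security_context(user_id=10001):
    """Build a Pod-level security context that forbids running as root."""
    return {"runAsNonRoot": True, "runAsUser": user_id}


# Hypothetical application and role.
manifest = service_account_manifest(
    "orders", "shop", "arn:aws:iam::111122223333:role/orders-app"
)
print(json.dumps(manifest, indent=2))
print(json.dumps(pod_security_context()))
```

Giving each application its own service account keeps the attached IAM role scoped to what that one application needs.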

For more information, please refer to the following resources:

How Amazon EKS works with IAM:

https://docs.aws.amazon.com/eks/latest/userguide/security_iam_service-with-iam.html

IAM roles for service accounts:

https://docs.aws.amazon.com/eks/latest/userguide/iam-roles-for-service-accounts.html

IAM:

https://aws.github.io/aws-eks-best-practices/security/docs/iam/

Best practices for securing network access to Amazon EKS

Since Amazon EKS is a managed service, it is located outside the customer's VPC. An alternative to secure access from your VPC to the managed EKS environment is to use AWS PrivateLink, which avoids sending network traffic outside your VPC, through a secure channel, using an interface VPC endpoint.

The best practices are as follows:

- Use TLS 1.2 to control Amazon EKS using API calls.

- Use TLS 1.2 when configuring Amazon EKS behind AWS Application Load Balancer or AWS Network Load Balancer.

- Use TLS 1.2 between your EKS control plane and the EKS cluster's worker nodes.

- Use VPC security groups to allow access from your VPC to the Amazon EKS VPC endpoint.

- Use VPC security groups between your EKS control plane and the EKS cluster's worker nodes.

- Use VPC security groups to protect access to your EKS Pods.

- Disable public access to your EKS API server – either use an EC2 instance (or bastion host) to manage the EKS cluster remotely or create a VPN tunnel from your remote machine to your EKS cluster.

- If you use AWS Secrets Manager to store sensitive data (such as credentials) from Amazon EKS, use a Secrets Manager VPC endpoint when configuring security groups.

- Store your container images inside Amazon ECR, and for non-sensitive environments, encrypt your container images inside Amazon ECR using AWS KMS (as explained in Chapter 7, Applying Encryption in Cloud Services).

- For sensitive environments, encrypt your container images inside Amazon ECR using CMK management (as explained in Chapter 7, Applying Encryption in Cloud Services).

- If you use Amazon ECR to store your container images, use VPC security groups to allow access from your VPC to the Amazon ECR interface VPC endpoint.
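
Whether the EKS API server is publicly reachable can be read from the cluster description. The following sketch inspects the `resourcesVpcConfig` block (with its `endpointPublicAccess`, `endpointPrivateAccess`, and `publicAccessCidrs` fields) of a cluster description held as a dictionary; the function name and the sample cluster are assumptions for illustration, and missing fields are treated pessimistically.

```python
def endpoint_findings(cluster):
    """Return findings about how the EKS API server endpoint is exposed."""
    vpc = cluster.get("resourcesVpcConfig", {})
    findings = []
    # Treat a missing field as the less safe option.
    if vpc.get("endpointPublicAccess", True):
        cidrs = vpc.get("publicAccessCidrs", ["0.0.0.0/0"])
        findings.append("public endpoint enabled for: " + ", ".join(cidrs))
    if not vpc.get("endpointPrivateAccess", False):
        findings.append("private endpoint access is disabled")
    return findings


# Hypothetical cluster description.
cluster = {
    "resourcesVpcConfig": {
        "endpointPublicAccess": True,
        "endpointPrivateAccess": False,
        "publicAccessCidrs": ["0.0.0.0/0"],
    }
}
print(endpoint_findings(cluster))
```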

For more information, please refer to the following resources:

Network security:

https://aws.github.io/aws-eks-best-practices/security/docs/network

Amazon EKS networking:

https://docs.aws.amazon.com/eks/latest/userguide/eks-networking.html

Introducing security groups for Pods:

https://aws.amazon.com/blogs/containers/introducing-security-groups-for-pods/

EKS best practice guides:

https://aws.github.io/aws-eks-best-practices/

Best practices for conducting auditing and monitoring in Amazon EKS

Auditing is a crucial part of data protection.

As with any other managed service, AWS allows you to enable logging and auditing using two built-in services:

- Amazon CloudWatch: A service that allows you to log EKS cluster activities and raise an alarm according to predefined thresholds (for example, low memory resources or high CPU, which requires up-scaling your EKS cluster).

- AWS CloudTrail: A service that allows you to monitor API activities (basically, any action performed on the EKS cluster).

The best practices are as follows:

- Enable Amazon EKS control plane logging to Amazon CloudWatch – this allows you to log API calls, audit, and authentication information from your EKS cluster.

- Enable AWS CloudTrail for any action performed on the EKS cluster.

- Limit the access to the CloudTrail logs to the minimum number of employees – preferably in an AWS management account, outside the scope of your end users (including outside the scope of your EKS cluster administrators), to avoid possible deletion or changes to the audit logs.
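
As a small illustration of the control plane logging practice, the sketch below reports which log types are still disabled on a cluster. It assumes the cluster description is a dictionary with a `logging.clusterLogging` list of entries that each have `types` and `enabled`; the function name and the sample data are hypothetical.

```python
# The five control plane log types that EKS can send to CloudWatch.
LOG_TYPES = {"api", "audit", "authenticator", "controllerManager", "scheduler"}


def missing_log_types(cluster):
    """Return the control plane log types that are not enabled, sorted by name."""
    enabled = set()
    for entry in cluster.get("logging", {}).get("clusterLogging", []):
        if entry.get("enabled"):
            enabled.update(entry.get("types", []))
    return sorted(LOG_TYPES - enabled)


# Hypothetical cluster with only API and audit logging switched on.
cluster_logging = {
    "logging": {
        "clusterLogging": [
            {"types": ["api", "audit"], "enabled": True},
            {"types": ["authenticator", "controllerManager", "scheduler"],
             "enabled": False},
        ]
    }
}
print(missing_log_types(cluster_logging))
```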

For more information, please refer to the following resources:

Amazon EKS control plane logging:

https://docs.aws.amazon.com/eks/latest/userguide/control-plane-logs.html

Auditing and logging:

https://aws.github.io/aws-eks-best-practices/security/docs/detective/

Best practices for enabling compliance on Amazon EKS

Security configuration is a crucial part of your infrastructure.

Amazon allows you to conduct ongoing compliance checks against well-known security standards (such as CIS Benchmarks).

The best practices are as follows:

- Use only trusted container images and store them inside Amazon ECR – a private repository for storing your organizational images.

- Run the kube-bench tool on a regular basis to check for compliance with CIS Benchmarks for Kubernetes.

- Run the Docker Bench for Security tool on a regular basis to check for compliance with CIS Benchmarks for Docker containers.

- Build your container images from scratch (to avoid malicious code in preconfigured third-party images).

- Scan your container images for vulnerabilities in libraries and binaries and update your images on a regular basis.

- Run your containers with a read-only root filesystem to prevent malicious code from being written into them at runtime.
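The read-only root filesystem recommendation can be checked before deployment. The following is a rough sketch, assuming the Pod manifest has already been loaded into a Python dictionary (for example, from JSON); the function name and the sample manifest are hypothetical. It relies on the standard Kubernetes field `securityContext.readOnlyRootFilesystem`.

```python
def containers_with_writable_root(pod_manifest):
    """Return the names of containers that do not set readOnlyRootFilesystem to true."""
    spec = pod_manifest.get("spec", {})
    writable = []
    # Check both init containers and regular containers.
    for container in spec.get("initContainers", []) + spec.get("containers", []):
        security_context = container.get("securityContext") or {}
        if security_context.get("readOnlyRootFilesystem") is not True:
            writable.append(container.get("name", "<unnamed>"))
    return writable


# Hypothetical Pod manifest: one hardened container and one left at the default.
sample_pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo"},
    "spec": {
        "containers": [
            {"name": "web", "image": "example/web:1.0",
             "securityContext": {"readOnlyRootFilesystem": True}},
            {"name": "sidecar", "image": "example/sidecar:1.0"},
        ]
    },
}

print(containers_with_writable_root(sample_pod))
```

A check like this could run in a CI pipeline so that manifests with a writable root filesystem are flagged before they reach the cluster.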

For more information, please refer to the following resources:

Configuration and vulnerability analysis in Amazon EKS:

https://docs.aws.amazon.com/eks/latest/userguide/configuration-vulnerability-analysis.html

Introducing the CIS Amazon EKS Benchmark:

https://aws.amazon.com/blogs/containers/introducing-cis-amazon-eks-benchmark/

Compliance:

https://aws.github.io/aws-eks-best-practices/security/docs/compliance/

Image security:

https://aws.github.io/aws-eks-best-practices/security/docs/image/

Pod security:

https://aws.github.io/aws-eks-best-practices/security/docs/pods/

kube-bench:

https://github.com/aquasecurity/kube-bench

Docker Bench for Security:

https://github.com/docker/docker-bench-security

Amazon ECR private repositories:

https://docs.aws.amazon.com/AmazonECR/latest/userguide/Repositories.html

Summary

In this section, we have learned how to securely maintain Amazon EKS, based on AWS infrastructure – from identity management and network access control to auditing, monitoring, and security compliance.

Securing Azure Container Instances (ACI)

ACI is the Azure-managed service for running containers without having to manage the underlying servers or an orchestrator.

It can integrate with other Azure services, such as Azure Container Registry (ACR) for storing containers, Azure AD for managing permissions to ACI, Azure Files for persistent storage, and Azure Monitor.

Best practices for configuring IAM for ACI

Although ACI does not have its own authentication mechanism, it is recommended to use ACR to store your container images in a private registry.

ACR supports the following authentication methods:

- Managed identity: A user-assigned or system-assigned identity from Azure AD

- Service principal: An application, service, or platform that needs access to ACR

The best practices are as follows:

- Grant minimal permissions for accessing and managing ACR, using Azure RBAC.

- When passing sensitive information (such as credential secrets), make sure the traffic is encrypted in transit through a secure channel (TLS).

- If you need to store sensitive information (such as credentials), store it inside the Azure Key Vault service.

- For sensitive environments, encrypt information (such as credentials) using customer-managed keys, stored inside the Azure Key Vault service.

- Disable the ACR built-in admin user.
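The last recommendation lends itself to a simple automated check. The sketch below assumes you have exported your registries as JSON in the shape returned by `az acr list` or `az acr show`, where each registry carries an `adminUserEnabled` flag; the function name and the registry names are hypothetical.

```python
import json


def registries_with_admin_user(registries):
    """Return the names of registries whose built-in admin user is enabled."""
    return [
        registry.get("name", "<unknown>")
        for registry in registries
        if registry.get("adminUserEnabled") is True
    ]


# Hypothetical export containing two registries.
sample_registries = json.loads("""
[
  {"name": "contosoprod", "adminUserEnabled": false},
  {"name": "contosodev", "adminUserEnabled": true}
]
""")

print(registries_with_admin_user(sample_registries))
```

Any registry reported here should have its admin user disabled, with access granted through Azure RBAC instead.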

For more information, please refer to the following resources:

Authenticate with ACR:

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-authentication

ACR roles and permissions:

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-roles

Encrypt a registry using a customer-managed key:

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-customer-managed-keys

Best practices for conducting auditing and monitoring in ACI

Auditing is a crucial part of data protection.

As with any other managed service, Azure allows you to enable logging and auditing using Azure Monitor for containers – a service that allows you to log container-related activities and raise an alarm according to predefined thresholds (for example, low memory resources or high CPU, which requires up-scaling your container environment).

The best practices are as follows:

- Enable audit logging for Azure resources, using Azure Monitor, to log authentication-related activities of your ACR.

- Limit the access to the Azure Monitor logs to the minimum number of employees to avoid possible deletion or changes to the audit logs.

For more information, please refer to the following resources:

Container insights overview:

https://docs.microsoft.com/en-us/azure/azure-monitor/containers/container-insights-overview

Container monitoring solution in Azure Monitor:

https://docs.microsoft.com/en-us/azure/azure-monitor/containers/containers

Best practices for enabling compliance on ACI

Security configuration is a crucial part of your infrastructure.

Azure allows you to conduct ongoing compliance checks against well-known security standards (such as CIS Benchmarks).

The best practices are as follows:

- Use only trusted container images and store them inside ACR – a private repository for storing your organizational images.

- Integrate ACR with Azure Security Center to detect images that do not comply with the CIS standard.

- Build your container images from scratch (to avoid malicious code in preconfigured third-party images).

- Scan your container images for vulnerabilities in libraries and binaries and update your images on a regular basis.

For more information, please refer to the following resources:

Azure security baseline for ACI:

Azure security baseline for ACR:

Security considerations for ACI:

https://docs.microsoft.com/en-us/azure/container-instances/container-instances-image-security

Introduction to private Docker container registries in Azure:

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-intro

Summary

In this section, we have learned how to securely maintain ACI, based on Azure infrastructure – from identity management to auditing, monitoring, and security compliance.

Securing Azure Kubernetes Service (AKS)

AKS is the Azure-managed Kubernetes orchestration service.

It can integrate with other Azure services, such as ACR for storing containers, Azure AD for managing permissions to AKS, Azure Files for persistent storage, and Azure Monitor.

Best practices for configuring IAM for Azure AKS

Azure AD is the supported service for managing permissions to access and run containers through Azure AKS.

The best practices are as follows:

- Enable Azure AD integration for any newly created AKS cluster.

- Grant minimal permissions for accessing and managing AKS, using Azure RBAC.

- Grant minimal permissions for accessing and managing ACR, using Azure RBAC.

- Create a unique service principal for each newly created AKS cluster.

- When passing sensitive information (such as credential secrets), make sure the traffic is encrypted in transit through a secure channel (TLS).

- If you need to store sensitive information (such as credentials), store it inside the Azure Key Vault service.

- For sensitive environments, encrypt information (such as credentials) using customer-managed keys, stored inside the Azure Key Vault service.
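For client code that passes secrets to a remote endpoint, encryption in transit can be enforced on the client side as well. The following is a minimal sketch using Python's standard `ssl` module; the function name is an assumption for illustration. It builds a TLS context that verifies server certificates and refuses any protocol version older than TLS 1.2.

```python
import ssl


def strict_tls_context():
    """Create a client TLS context that verifies certificates and requires TLS 1.2 or newer."""
    # create_default_context() enables certificate and hostname verification.
    context = ssl.create_default_context()
    # Refuse to negotiate anything older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


tls_context = strict_tls_context()
print(tls_context.minimum_version)
```

The resulting context can be passed to standard library clients, for example `urllib.request.urlopen(url, context=tls_context)` or `http.client.HTTPSConnection(host, context=tls_context)`.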

For more information, please refer to the following resources:

AKS-managed Azure AD integration:

https://docs.microsoft.com/en-us/azure/aks/managed-aad

Best practices for authentication and authorization in AKS:

https://docs.microsoft.com/en-us/azure/aks/operator-best-practices-identity

Best practices for securing network access to Azure AKS

Azure AKS exposes services to the internet – for that reason, it is important to plan before deploying each Azure AKS cluster.

The best practices are as follows:

- Avoid exposing the AKS cluster control plane (API server) to the public internet – either create a private cluster with an internal IP address or use authorized IP ranges to define which IPs can access your API server.

- Use the Azure Firewall service to restrict outbound traffic from AKS cluster nodes to external DNS addresses (for example, software updates from external sources).

- Use TLS 1.2 to control Azure AKS using API calls.

- Use TLS 1.2 when configuring Azure AKS behind Azure Load Balancer.

- Use TLS 1.2 between your AKS control plane and the AKS cluster's nodes.

- For small AKS deployments, use the kubenet plugin to implement network policies and protect the AKS cluster.

- For large production deployments, use the Azure CNI Kubernetes plugin to implement network policies and protect the AKS cluster.

- Use Azure network security groups to restrict SSH traffic to the AKS cluster nodes, allowing it from the AKS subnets only.

- Use network policies to protect the access between the Kubernetes Pods.

- Disable public access to your AKS API server – either use an Azure VM (or Azure Bastion) to manage the AKS cluster remotely or create a VPN tunnel from your remote machine to your AKS cluster.

- Disable or remove the HTTP application routing add-on.

- If you need to store sensitive information (such as credentials), store it inside the Azure Key Vault service.

- For sensitive environments, encrypt information (such as credentials) using customer-managed keys, stored inside the Azure Key Vault service.
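To illustrate the network policy recommendation, the following sketch generates a default-deny ingress policy for a namespace. The manifest uses the standard Kubernetes `networking.k8s.io/v1` NetworkPolicy schema; the function name, the policy name, and the `production` namespace are assumptions chosen for the example. The policy only takes effect on clusters where a network policy engine is enabled.

```python
import json


def default_deny_ingress(namespace):
    """Build a NetworkPolicy that blocks all inbound traffic to every Pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            # An empty podSelector matches every Pod in the namespace.
            "podSelector": {},
            # Listing Ingress with no ingress rules denies all inbound traffic.
            "policyTypes": ["Ingress"],
        },
    }


policy = default_deny_ingress("production")
print(json.dumps(policy, indent=2))
```

Starting from a default-deny policy and then adding narrowly scoped allow rules keeps Pod-to-Pod access limited to what each workload actually needs. The JSON output can be saved to a file and applied with `kubectl apply -f`.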

For more information, please refer to the following resources:

Best practices for network connectivity and security in AKS:

https://docs.microsoft.com/en-us/azure/aks/operator-best-practices-network

Best practices for cluster isolation in AKS:

https://docs.microsoft.com/en-us/azure/aks/operator-best-practices-cluster-isolation

Create a private AKS cluster:

https://docs.microsoft.com/en-us/azure/aks/private-clusters

Best practices for conducting auditing and monitoring in Azure AKS

Auditing is a crucial part of data protection.

As with any other managed service, Azure allows you to enable logging and auditing using Azure Monitor for containers – a service that allows you to log container-related activities and raise an alarm according to predefined thresholds (for example, low memory resources or high CPU, which requires up-scaling your container environment).

The best practices are as follows:

- Enable audit logging for Azure resources, using Azure Monitor, to log authentication-related activities of your ACR.

- Limit the access to the Azure Monitor logs to the minimum number of employees to avoid possible deletion or changes to the audit logs.

For more information, please refer to the following resources:

Container insights overview:

https://docs.microsoft.com/en-us/azure/azure-monitor/containers/container-insights-overview

Container monitoring solution in Azure Monitor:

https://docs.microsoft.com/en-us/azure/azure-monitor/containers/containers

Best practices for enabling compliance on Azure AKS

Security configuration is a crucial part of your infrastructure.

Azure allows you to conduct ongoing compliance checks against well-known security standards (such as CIS Benchmarks).

The best practices are as follows:

- Use only trusted container images and store them inside ACR – a private repository for storing your organizational images.

- Use Azure Defender for Kubernetes to protect Kubernetes clusters from vulnerabilities.

- Use Azure Defender for container registries to detect and remediate vulnerabilities in container images.

- Integrate ACR with Azure Security Center to detect images that do not comply with the CIS standard.

- Build your container images from scratch (to avoid malicious code in preconfigured third-party images).

- Scan your container images for vulnerabilities in libraries and binaries and update your images on a regular basis.
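The first recommendation, pulling images only from your private registry, can be verified with a short script. This is a sketch under stated assumptions: the Pod manifest is already loaded into a Python dictionary, and `contosoregistry.azurecr.io` is a hypothetical ACR login server. The function name is also illustrative.

```python
def untrusted_images(pod_manifest, trusted_registry):
    """Return image references that are not pulled from the trusted registry."""
    spec = pod_manifest.get("spec", {})
    # Require the registry host followed by a slash so look-alike hosts do not match.
    prefix = trusted_registry.rstrip("/") + "/"
    containers = spec.get("initContainers", []) + spec.get("containers", [])
    images = [container.get("image", "") for container in containers]
    return [image for image in images if not image.startswith(prefix)]


# Hypothetical manifest: one image from the private registry, one from a public source.
sample_manifest = {
    "spec": {
        "containers": [
            {"name": "web", "image": "contosoregistry.azurecr.io/web:1.4.2"},
            {"name": "proxy", "image": "nginx:latest"},
        ]
    }
}

print(untrusted_images(sample_manifest, "contosoregistry.azurecr.io"))
```

Images reported by this check should be rebuilt, scanned, and pushed to your private registry before the workload is deployed.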

For more information, please refer to the following resources:

Introduction to Azure Defender for Kubernetes: