Chapter 3: Getting Started with OpenGL and Vulkan

In this chapter, we will cover the basic steps of modern OpenGL and Vulkan. We will also learn how to deal with textures, buffers, shaders, and pipelines. The recipes in this chapter will not focus solely on the graphics APIs that are available, but on various tips and tricks that are necessary for improving graphical application development and various 3D graphics algorithms. On the Vulkan side, we will cover the basics so that we can get it up and running.

In this chapter, we will cover the following recipes:

- Intercepting OpenGL API calls

- Working with Direct State Access (DSA)

- Loading and compiling shaders in OpenGL

- Implementing programmable vertex pulling (PVP) in OpenGL

- Working with cube map textures

- Compiling Vulkan shaders at runtime

- Initializing Vulkan instances and graphical devices

- Initializing the Vulkan swap chain

- Setting up Vulkan's debugging capabilities

- Tracking and cleaning up Vulkan objects

- Using Vulkan command buffers

- Dealing with buffers in Vulkan

- Using texture data in Vulkan

- Using mesh geometry data in Vulkan

- Using Vulkan descriptor sets

- Initializing Vulkan shader modules

- Initializing the Vulkan pipeline

- Putting it all together into a Vulkan application

Technical requirements

To complete the recipes in this chapter, you must have a computer with a video card that can support OpenGL 4.6 and Vulkan 1.1. Read Chapter 1, Establishing a Build Environment, if you want to learn how to configure your computer properly.

You can find the code files present in this chapter on GitHub at https://github.com/PacktPublishing/3D-Graphics-Rendering-Cookbook/tree/master/Chapter3

Intercepting OpenGL API calls

Sometimes, it is very desirable to intercept OpenGL API calls for debugging purposes or, for example, to manipulate the underlying OpenGL state before passing API calls into the real OpenGL system. You can do this to simulate mobile OpenGL on top of a desktop OpenGL implementation or vice versa. Manually writing wrappers for each and every API function is a tedious and thankless job. In this recipe, you will learn how to quickly make custom OpenGL hooks and use them in your applications.

Getting ready

This recipe uses a Python script to parse glcorearb.h and generate all the necessary scaffolding code for the wrapper functions. The complete source code for this recipe can be found in this book's source code bundle, under the name Chapter3/GL01_APIWrapping.

How to do it...

Let's write a small OpenGL application that prints all the GL API functions that have been used, along with their parameters, in the console window while the application is running:

- First, let's run the supplementary Python script with the following command:

python GetGLAPI.py > GLAPITrace.h

This script reads input from funcs_list.txt, which contains the list of OpenGL functions that we want to wrap, in the following format:

glActiveTexture

glAttachShader

glBeginQuery

glBindAttribLocation

glBindBuffer

...

This script creates two files called GLAPI.h and GLAPITrace.h.

- Now, we can declare the GL4API struct, including the first generated file, as follows:

struct GL4API {

# include "GLAPI.h"

};

This structure contains pointers to all the required OpenGL functions.

- Next, declare a function type and two function prototypes:

using PFNGETGLPROC = void* (const char*);

void GetAPI4(GL4API* api, PFNGETGLPROC GetGLProc);

void InjectAPITracer4(GL4API* api);

Their implementations can be found in GLAPITrace.h.

Now, we can use these functions in our application.

- Define a GL4API instance, fill it with OpenGL function pointers, and inject the wrapping code. Use glfwGetProcAddress() to retrieve the pointers to OpenGL functions:

GL4API api;

GetAPI4(&api, [](const char* func) -> void*

{ return (void *)glfwGetProcAddress(func); });

InjectAPITracer4(&api);

- Invoke all subsequent OpenGL commands using the api. structure:

const GLuint shaderVertex = api.glCreateShader(GL_VERTEX_SHADER);

api.glShaderSource( shaderVertex, 1, &shaderCodeVertex, nullptr);

api.glCompileShader(shaderVertex);

...

The console output of the running program should look as follows:

glViewport(0, 0, 1024, 768)

glClear(16384)

glUseProgram(3)

glNamedBufferSubData(1, 0, 64, 000000F5508FF6B0)

glDrawArrays(GL_TRIANGLES, 0, 3)

glViewport(0, 0, 1024, 768)

glClear(16384)

glUseProgram(3)

glNamedBufferSubData(1, 0, 64, 000000F5508FF6B0)

glDrawArrays(GL_TRIANGLES, 0, 3)

This approach can be used for logging and debugging, and it can even be extended to record sequences of OpenGL commands or similar purposes. By changing the Python script, it is easy to customize the generated wrappers to your own needs.

How it works...

The first generated file, GLAPI.h, contains a list of declarations in the following form:

PFNGLACTIVETEXTUREPROC glActiveTexture;

PFNGLATTACHSHADERPROC glAttachShader;

PFNGLBEGINQUERYPROC glBeginQuery;

PFNGLBINDATTRIBLOCATIONPROC glBindAttribLocation;

PFNGLBINDBUFFERPROC glBindBuffer;

...

The second generated file, GLAPITrace.h, contains a long list of actual wrappers for every specified OpenGL function call. Each wrapper prints parameters in the console, invokes the actual function through a pointer, which, in turn, might be a wrapper as well, and checks for GL errors once the function returns. Let's take a look at a couple of functions from this file:

void GLTracer_glCullFace(GLenum mode) {

printf("glCullFace(" "%s) ", E2S(mode));

apiHook.glCullFace(mode);

assert(apiHook.glGetError() == GL_NO_ERROR);

}

void GLTracer_glPolygonMode(GLenum face, GLenum mode) {

printf( "glPolygonMode(" "%s, %s) ", E2S(face), E2S(mode));

apiHook.glPolygonMode(face, mode);

assert(apiHook.glGetError() == GL_NO_ERROR);

}

...

The Enum2String() helper function, which is used inside the wrappers via the E2S() macro, converts a GLenum value into an appropriate string representation. This is just a hardcoded list of values; there's nothing really fancy here. For values not in the list, the function will return a numerical representation of the enum via std::to_string():

#define W( en ) if ( e == en ) return #en;

std::string Enum2String(GLenum e) {

W(GL_POINTS);

W(GL_LINES);

W(GL_LINE_LOOP);

W(GL_LINE_STRIP);

W(GL_TRIANGLES);

...

return std::to_string(e);

}

Besides that, there are two more function definitions that are generated here. The first one loads OpenGL function pointers into the GL4API structure using the supplied lambda, like so:

#define LOAD_GL_FUNC(f) api->func = (decltype(api->f))GetGLProc(#f);

void GetAPI4(GL4API* api, PFNGETGLPROC GetGLProc) {

LOAD_GL_FUNC(glActiveTexture);

LOAD_GL_FUNC(glAttachShader);

LOAD_GL_FUNC(glBeginQuery);

LOAD_GL_FUNC(glBindAttribLocation);

...

The second one, called InjectAPITracer4(), is defined as follows:

#define INJECT(S) api->S = &GLTracer_##S;

void InjectAPITracer4(GL4API* api) {

apiHook = *api;

INJECT(glActiveTexture);

INJECT(glAttachShader);

INJECT(glBeginQuery);

INJECT(glBindAttribLocation);

...

This function saves the previous value of GL4API into a static global variable and replaces the function pointers with pointers to the custom wrapper functions.

Working with Direct State Access (DSA)

Starting with version 4.5, OpenGL Core Profile allows us to modify the state of objects without enforcing the bind-to-edit model that was used in previous versions of OpenGL. Let's take a closer look at the new functions that provide a straightforward, object-oriented interface and do not affect the global state.

Getting ready

The OpenGL examples provided in this book use the DSA programming model, which you were exposed to in Chapter 2, Using Essential Libraries. If you are not familiar with DSA yet, it is recommended that you go through the source code for all the applications covered in Chapter 2, Using Essential Libraries, to get a solid grasp of this approach to small, self-contained examples.

All DSA functions can be separated into the following object families:

- Texture

- Framebuffer

- Buffer

- Transform feedback

- Vertex array

- Sampler

- Query

- Program

Let's go through a couple of these object families to understand how the new API works.

How to do it...

The first family of functions is related to texture objects. Let's take a look:

- Create a set of texture objects with the following command, which specifies a texture target right from the get-go:

void glCreateTextures( GLenum target, GLsizei n, GLuint* textures);

- All texture parameters should be set directly with this group function, based on the parameter type. It is the DSA equivalent of glTexParameter...() functions:

void glTextureParameter...( GLuint texture, GLenum pname, ...);

- Using glActiveTexture() and glBindTexture() is no longer required. Instead, a single command should be used:

void glBindTextureUnit(GLuint unit, GLuint texture);

Note

Typically, we would use the following pair of functions:

glActiveTexture(GL_TEXTURE0 + 2);glBindTexture(GL_TEXTURE_2D, texId);

Instead of this, you can use the one-liner shown here. The texture target will be inferred from the texture object itself, which means using GL_TEXTURE0 is no longer required:

glBindTextureUnit(2, texId);

Alternatively, if you want to bind a few textures to a sequence of texture units at the same time, use the following command:

void glBindTextures(GLuint first, GLsizei count,

const GLuint* textures);

- Generating texture mipmaps can now be done directly. Like all the DSA functions, this one takes the OpenGL GLuint name instead of a texture target:

void glGenerateTextureMipmap(GLuint texture);

- Uploading data into textures should be done in the following way. First, we should tell OpenGL how much and what kind of storage should be allocated for a texture using one of the following functions:

void glTextureStorage...();

- The actual pixels can be uploaded, compressed, or decompressed with one of the following calls:

void glTextureSubImage...();

void glCompressedTextureSubImage...();

Let's take a look at how a 2D texture can be uploaded in one of the examples from the previous chapter; that is, Chapter2�3_STBsrcmain.cpp:

GLuint t;

glCreateTextures(GL_TEXTURE_2D, 1, &t);

glTextureParameteri(t, GL_TEXTURE_MAX_LEVEL, 0);

glTextureParameteri(t, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTextureParameteri(t, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTextureStorage2D(t, 1, GL_RGB8, w, h);

glTextureSubImage2D( t, 0, 0, 0, w, h, GL_RGB, GL_UNSIGNED_BYTE, img);

This API prevents many situations where a texture object might remain in an incomplete state due to a wrong sequence of legacy glTexImage...() calls.

Let's look at another family of functions related to buffers. It all starts with a call to glCreateBuffers(), which will create a set of buffers. It does not require a specific buffer target, which means that buffers can be created and reused for specific purposes later, making them completely interchangeable. For example, a shader storage buffer can be filled on a GPU via a compute shader and be reused as an indirect buffer for draw commands. We will touch on this mechanic in the subsequent chapters. For now, let's focus on how to create and set up buffer objects using the new DSA functions. Check out Chapter2�7_Assimpsrcmain.cpp for the full source code:

- A uniform buffer object can be created in the following way:

const GLsizeiptr kBufSize = sizeof(PerFrameData);

GLuint buf;

glCreateBuffers(1, &buf);

- Now, we should specify the storage for our uniform buffer. The GL_DYNAMIC_STORAGE_BIT flag tells the OpenGL implementation that the contents of the buffer may be updated later through calls to glBufferSubData():

glNamedBufferStorage( buf, kBufSize, nullptr, GL_DYNAMIC_STORAGE_BIT);

- To make the entire buffer accessible from GLSL shaders at binding point 0, we should use the following function call:

glBindBufferRange( GL_UNIFORM_BUFFER, 0, buf, 0, kBufSize);

Other types of buffers can be created in a similar fashion. We will discuss them on an as-needed basis in subsequent chapters.

There is one more important thing to mention, which is how to set up the vertex attributes format for vertex array objects (VAOs). Let's take a closer look at how to store vertex positions in vec3 format inside a buffer and render from it:

- First, we should create a buffer to store vertex data in. The flag should be set to 0 as the contents of this buffer will be immutable:

GLuint buf;

glCreateBuffers(1, &buf);

glNamedBufferStorage( buf, sizeof(vec3) * pos.size(), pos.data(), 0);

- Now, let's use this buffer to set up a vertex array object:

GLuint vao;

glCreateVertexArrays(1, &vao);

- The data for GLSL attribute stream (location) 0, which we use for vertex 3D positions, should be sourced from the buf buffer, start from an offset of 0, and use a stride equal to the size of vec3. This means that positions are tightly packed inside the buffer, without any interleaving with other data, such as normal vectors or texture coordinates:

glVertexArrayVertexBuffer( vao, 0, buf, 0, sizeof(vec3));

glEnableVertexArrayAttrib(vao, 0 );

- Now, we should describe what the format our data will be in for attribute stream number 0. Each value contains 3 components of the GL_FLOAT type. No normalization is required. The relative offset, which is the distance between the elements within a buffer, should be zero in the case of tightly packed values. Here is how to set it up:

glVertexArrayAttribFormat( vao, 0, 3, GL_FLOAT, GL_FALSE, 0);

- The following call connects a vertex buffer binding point of 0 within the vertex attribute format we described as number 0:

glVertexArrayAttribBinding(vao, 0, 0);

This might sound confusing at first, but imagine that we have one big buffer containing interleaving positions, texture coordinates, and colors, as in the Chapter2�4_ImGui example. Let's look at a complete code fragment for how this VAO should be set up:

- Create a VAO, like so:

GLuint vao;

glCreateVertexArrays(1, &vao);

- Bind a buffer containing indices to this VAO:

glVertexArrayElementBuffer(vao, handleElements);

- Bind a buffer containing the interleaved vertex data to this VAO's buffer binding point; that is, 0:

glVertexArrayVertexBuffer( vao, 0, handleVBO, 0, sizeof(ImDrawVert));

- Enable all three vertex attributes streams:

glEnableVertexArrayAttrib(vao, 0);

glEnableVertexArrayAttrib(vao, 1);

glEnableVertexArrayAttrib(vao, 2);

- Specify a data format for each attribute stream. The streams have their indices set to 0, 1, and 2, which correspond to the location binding points in the GLSL shaders:

glVertexArrayAttribFormat(vao, 0, 2, GL_FLOAT, GL_FALSE, IM_OFFSETOF(ImDrawVert, pos));

glVertexArrayAttribFormat(vao, 1, 2, GL_FLOAT, GL_FALSE, IM_OFFSETOF(ImDrawVert, uv));

glVertexArrayAttribFormat(vao, 2, 4, GL_UNSIGNED_BYTE, GL_TRUE, IM_OFFSETOF(ImDrawVert, col));

- Now, tell OpenGL to read the data for streams 0, 1, and 2 from the buffer, which is attached to buffer binding point 0:

glVertexArrayAttribBinding(vao, 0, 0);

glVertexArrayAttribBinding(vao, 1, 0);

glVertexArrayAttribBinding(vao, 2, 0);

There's more...

The VAO setup is probably the most complicated part of the new DSA API. Other objects are much simpler to set up; we will discuss how to work with them in subsequent chapters.

Loading and compiling shaders in OpenGL

In Chapter 2, Using Essential Libraries, our tiny OpenGL examples loaded all the GLSL shaders directly from the const char* variables defined inside our source code. While this approach is acceptable in the territory of 100-line demos, it does not scale well beyond that. In this recipe, we will learn how to load, compile, and link shaders and shader programs. This approach will be used throughout the rest of the examples in this book.

Getting ready

Before we can proceed with the actual shader loading, we need two graphics API-agnostic functions. The first one loads a text file as std::string:

std::string readShaderFile(const char* fileName) {

FILE* file = fopen(fileName, "r");

if (!file) {

printf("I/O error. Cannot open '%s' ", fileName);

return std::string();

}

fseek(file, 0L, SEEK_END);

const auto bytesinfile = ftell(file);

fseek(file, 0L, SEEK_SET);

char* buffer = (char*)alloca(bytesinfile + 1);

const size_t bytesread = fread( buffer, 1, bytesinfile, file);

fclose(file);

buffer[bytesread] = 0;

The important thing to mention here is that we parse and eliminate the UTF byte-order marker. If present, it might not be handled properly by some legacy GLSL compilers, especially on Android:

static constexpr unsigned char BOM[] = { 0xEF, 0xBB, 0xBF };

if (bytesread > 3)

if (!memcmp(buffer, BOM, 3)) memset(buffer, ' ', 3);

std::string code(buffer);

We should also handle #include directives inside the shader source code. This code is not robust enough to be shipped, but it is good enough for our purposes:

while (code.find("#include ") != code.npos) {

const auto pos = code.find("#include ");

const auto p1 = code.find('<', pos);

const auto p2 = code.find('>', pos);

if (p1 == code.npos || p2 == code.npos || p2 <= p1) {

printf("Error while loading shader program: %s ", code.c_str());

return std::string();

}

const std::string name = code.substr(p1 + 1, p2 - p1 – 1);

const std::string include = readShaderFile(name.c_str());

code.replace(pos, p2-pos+1, include.c_str());

}

return code;

}

The second helper function prints shader source code in the console. Each source code line is annotated with a line number, making it extremely easy to debug shader compilation using the error line number generated by the GLSL compiler's output:

static void printShaderSource(const char* text) {

int line = 1;

printf(" (%3i) ", line);

while (text && *text++) {

if (*text == ' ') printf(" (%3i) ", ++line);

else if (*text == ' ') {}

else printf("%c", *text);

}

printf(" ");

}

The source code for these functions can be found in the shared/Utils.cpp and shared/GLShader.cpp files.

How to do it...

Let's create some C++ resource acquisition is initialization (RAII) wrappers on top of our OpenGL shaders and programs:

- First, we need to convert a shader file name into an OpenGL shader type based on the file's extension:

GLenum GLShaderTypeFromFileName(const char* fileName)

{

if (endsWith(fileName, ".vert")) return GL_VERTEX_SHADER;

if (endsWith(fileName, ".frag")) return GL_FRAGMENT_SHADER;

if (endsWith(fileName, ".geom")) return GL_GEOMETRY_SHADER;

if (endsWith(fileName, ".tesc")) return GL_TESS_CONTROL_SHADER;

if (endsWith(fileName, ".tese")) return GL_TESS_EVALUATION_SHADER;

if (endsWith(fileName, ".comp")) return GL_COMPUTE_SHADER;

assert(false);

return 0;

}

- The endsWith() helper function is basically a one-liner and checks if a string ends with a specified substring:

int endsWith(const char* s, const char* part) {

return (strstr(s, part) - s) == (strlen(s) – strlen(part));

}

- The shader wrapper interface looks as follows. Constructors take either a filename or a shader type and source code as input:

class GLShader {

public:

explicit GLShader(const char* fileName);

GLShader(GLenum type, const char* text);

~GLShader();

- The shader type will be required later, once we want to link our shaders to a shader program:

GLenum getType() const { return type_; }

GLuint getHandle() const { return handle_; }

private:

GLenum type_;

GLuint handle_;

};

- The two-parameter constructor does all the heavy lifting, as shown here:

GLShader::GLShader(GLenum type, const char* text)

: type_(type)

, handle_(glCreateShader(type))

{

glShaderSource(handle_, 1, &text, nullptr);

glCompileShader(handle_);

Once the shader has been compiled, we can retrieve its compilation status via glGetShaderInfoLog(). If the message buffer is not empty, which means there were some issues during the shader's compilation, we must print the annotated shader's source code:

char buffer[8192];

GLsizei length = 0;

glGetShaderInfoLog( handle_, sizeof(buffer), &length, buffer);

if (length) {

printf("%s ", buffer);

printShaderSource(text);

assert(false);

}

}

- Let's define the explicit constructor, which takes a filename as input and delegates all the work to the first constructor we mentioned previously:

GLShader::GLShader(const char* fileName)

: GLShader(GLShaderTypeFromFileName(fileName), readShaderFile(fileName).c_str())

{}

- Deallocate the shader object in the destructor:

GLShader::~GLShader() {

glDeleteShader(handle_);

}

- Finally, we should load some shaders from files using GLShader, like so:

GLShader shaderVertex( "data/shaders/chapter03/GL02.vert");

GLShader shaderGeometry( "data/shaders/chapter03/GL02.geom");

GLShader shaderFragment( "data/shaders/chapter03/GL02.frag");

If we compile the shader source code and make a mistake, the output from our helper class will look similar to the following listing. The compiler error message, which mentions that line 12 contains an error, can now be directly matched to the shader source code:

0(12) : error C1503: undefined variable "texture12"

( 1) //

( 2) #version 460 core

( 3)

( 4) layout (location=0) in vec3 dir;

( 5)

( 6) layout (location=0) out vec4 out_FragColor;

( 7)

( 8) layout (binding=1) uniform samplerCube texture1;

( 9)

( 10) void main()

( 11) {

( 12) out_FragColor = texture(texture12, dir);

( 13) };

( 14)

Assertion failed: false, file SourcessharedGLShader.cpp, line 53

We can use compiled shaders in OpenGL by linking them to a shader program. In a similar fashion, let's write a RAII wrapper for that purpose:

- First, let's declare a helper class called GLProgram. Constructors that take multiple GLShader arguments are declared to make the class's use more convenient. Normally, we use GLProgram with just a pair of shaders; that is, a vertex and a fragment shader. However, sometimes, other shaders will be linked together:

class GLProgram {

public:

GLProgram(const GLShader& a, const GLShader& b);

GLProgram(const GLShader& a, const GLShader& b, const GLShader& c);

...

~GLProgram();

void useProgram() const;

GLuint getHandle() const { return handle_; }

private:

GLuint handle_;

};

- Let's add yet another constructor that takes two shaders. The other constructors look identical and have been skipped here for the sake of brevity:

GLProgram::GLProgram( const GLShader& a, const GLShader& b)

: handle_(glCreateProgram())

{

glAttachShader(handle_, a.getHandle());

glAttachShader(handle_, b.getHandle());

glLinkProgram(handle_);

printProgramInfoLog(handle_);

}

- Now, let's write a function that handles the program linking information. The printProgramInfoLog() function is reused across all GLProgram constructors and prints the messages reported by the OpenGL implementation:

void printProgramInfoLog(GLuint handle) {

char buffer[8192];

GLsizei length = 0;

glGetProgramInfoLog( handle, sizeof(buffer), &length, buffer);

if (length) {

printf("%s ", buffer);

assert(false);

}

}

- The destructor deletes the shader program in a RAII way:

GLProgram::~GLProgram() {

glDeleteProgram(handle_);

}

- To install a program object as part of the current rendering state, use the following method:

void GLProgram::useProgram() const {

glUseProgram(handle_);

}

Once the shaders have compiled, the shader program can be linked and used like so:

GLProgram program( shaderVertex, shaderGeometry, shaderFragment);

program.useProgram();

The helper classes we implemented in this recipe will make our OpenGL programming less verbose and will let us focus on the actual graphics algorithms.

There's more...

There is yet another way to use GLSL shaders in modern OpenGL. It is possible to link a single shader to a separate, standalone shader program and combine those programs into a program pipeline, like so:

const char* vtx = ...

const char* frg = ...

const GLuint vs = glCreateShaderProgramv( GL_VERTEX_SHADER, 1, &vtx);

const GLuint fs = glCreateShaderProgramv( GL_FRAGMENT_SHADER, 1, &frg);

GLuint pipeline;

glCreateProgramPipelines(1, &pipeline);

glUseProgramStages(pipeline, GL_VERTEX_SHADER_BIT, vs);

glUseProgramStages(pipeline, GL_FRAGMENT_SHADER_BIT, fs);

glBindProgramPipeline(pipeline);

This approach allows you to mix and match shaders where, for example, a single vertex shader can be reused with many different fragment shaders. This provides much better flexibility and reduces shader combinations exploding exponentially. We recommend using this approach if you decide to stick with modern OpenGL.

Implementing programmable vertex pulling (PVP) in OpenGL

The concept of programmable vertex pulling (PVP) was proposed in 2012 by Daniel Rákos in the amazing book OpenGL Insights. This article goes deep into the architecture of the GPUs of that time and why it was beneficial to use this data storage approach. Initially, the idea of vertex pulling was to store vertex data inside one-dimensional buffer textures and, instead of setting up standard OpenGL vertex attributes, read the data using texelFetch() and a GLSL samplerBuffer in the vertex shader. The built-in GLSL gl_VertexID variable was used as an index to calculate texture coordinates for texel fetching. The reason this trick was implemented was because developers were hitting CPU limits with many draw calls. Due to this, it was beneficial to combine multiple meshes inside a single buffer and render them in a single draw call, without rebinding any vertex arrays or buffer objects to improve draw calls batching.

This technique opens possibilities for merge instancing, where many small meshes can be merged into a bigger one, to be handled as part of the same batch. We will use this technique extensively in our examples, starting from Chapter 7, Graphics Rendering Pipeline.

In this recipe, we will use shader storage buffer objects to implement a similar technique with modern OpenGL.

Getting ready

The complete source code for this recipe can be found in this book's source code bundle, under the name Chapter3/GL02_VtxPulling.

How to do it...

Let's render the 3D rubber duck model from Chapter 2, Using Essential Libraries. However, this time, we will be using the programmable vertex pulling technique. The idea is to allocate two buffer objects – one for the indices and another for the vertex data – and access them in GLSL shaders as shader storage buffers. Let's get started:

- First, we must load the 3D model via Assimp:

const aiScene* scene = aiImportFile( "data/rubber_duck/scene.gltf", aiProcess_Triangulate);

- Next, convert the per-vertex data into a format suitable for our GLSL shaders. Here, we are going to use vec3 for our positions and vec2 for our texture coordinates:

struct VertexData {

vec3 pos;

vec2 tc;

};

const aiMesh* mesh = scene->mMeshes[0];

std::vector<VertexData> vertices;

for (unsigned i = 0; i != mesh->mNumVertices; i++) {

const aiVector3D v = mesh->mVertices[i];

const aiVector3D t = mesh->mTextureCoords[0][i];

vertices.push_back({ .pos = vec3(v.x, v.z, v.y), .tc = vec2(t.x, t.y) });

}

- For simplicity, we will store the indices as unsigned 32-bit integers. In real-world applications, consider using 16-bit indices for small meshes and ensure you can switch between them:

std::vector<unsigned int> indices;

for (unsigned i = 0; i != mesh->mNumFaces; i++) {

for (unsigned j = 0; j != 3; j++)

indices.push_back(mesh->mFaces[i].mIndices[j]);

}

- Once the index and vertex data is ready, we can upload it into the OpenGL buffers. We should create two buffers – one for the vertices and one for the indices:

const size_t kSizeIndices = sizeof(unsigned int) * indices.size();

const size_t kSizeVertices = sizeof(VertexData) * vertices.size();

GLuint dataIndices;

glCreateBuffers(1, &dataIndices);

glNamedBufferStorage( dataIndices, kSizeIndices, indices.data(), 0);

GLuint dataVertices;

glCreateBuffers(1, &dataVertices);

glNamedBufferStorage( dataVertices, kSizeVertices, vertices.data(), 0);

- Now, we should create a vertex array object. In this example, we will make OpenGL read indices from the VAO and use them to access vertex data in a shader storage buffer:

GLuint vao;

glCreateVertexArrays(1, &vao);

glBindVertexArray(vao);

glVertexArrayElementBuffer(vao, dataIndices);

Important Note

Please note that it is completely possible to store indices inside a shader storage buffer as well, and then read that data manually in the vertex shader. We will leave this as an exercise for you.

- Before we proceed with the actual rendering, we should bind our vertex data shader storage buffer to binding point 1. Here, we are using sequential binding point indices for uniforms and storage buffers for the sake of simplicity:

glBindBufferBase( GL_SHADER_STORAGE_BUFFER, 1, dataVertices);

- Let's load and set up the texture for this model:

int w, h, comp;

const uint8_t* img = stbi_load( "data/rubber_duck/textures/Duck_baseColor.png", &w, &h, &comp, 3);

GLuint tx;

glCreateTextures(GL_TEXTURE_2D, 1, &tx);

glTextureParameteri( tx, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTextureParameteri( tx, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTextureStorage2D(tx, 1, GL_RGB8, w, h);

glPixelStorei(GL_UNPACK_ALIGNMENT, 1);

glTextureSubImage2D(tx, 0, 0, 0, w, h, GL_RGB, GL_UNSIGNED_BYTE, img);

glBindTextures(0, 1, &tx);

This is the complete initialization code for C++. Now, let's look at the GLSL vertex shader to understand how to read the vertex data from buffers. The source code for this shader can be found in datashaderschapter03GL02.vert.

How it works...

The declaration of our PerFrameData remains the same and just stores the combined model-view-projection matrix:

#version 460 core

layout(std140, binding = 0) uniform PerFrameData {

uniform mat4 MVP;

};

The Vertex structure here should match the VertexData structure in C++ that we used previously to fill in the data for our buffers. Here, we are using arrays of float instead of vec3 and vec2 because GLSL has alignment requirements and will pad vec3 to vec4. We don't want that:

struct Vertex {

float p[3]; float tc[2];

};

The actual buffer is attached to binding point 1 and is declared as readonly. The buffer holds an unbounded array of Vertex[] elements. Each element corresponds to exactly one vertex:

Note

The binding points for uniforms and buffers are separate entities, so it is perfectly fine to use 0 for both PerFrameData and Vertices. However, we are using different numbers here to avoid confusion.

layout(std430, binding = 1) readonly buffer Vertices {

Vertex in_Vertices[];

};

The accessor functions are required to extract the vec3 position data and the vec2 texture coordinates data from the buffer. Three consecutive floats are used in getPosition(), while two are used in getTexCoord():

vec3 getPosition(int i) {

return vec3( in_Vertices[i].p[0], in_Vertices[i].p[1], in_Vertices[i].p[2]);

}

vec2 getTexCoord(int i) {

return vec2(in_Vertices[i].tc[0], in_Vertices[i].tc[1]);

}

The vertex shader only outputs texture coordinates as vec2:

layout (location=0) out vec2 uv;

Now, we can read the data from the buffer by using the built-in GLSL gl_VertexID variable as an index. Because we used VAO with a buffer containing indices to set up our rendering code, the values of gl_VertexID will follow the values of the provided indices. Hence, we can use this value directly as an index into the buffer:

void main() {

vec3 pos = getPosition(gl_VertexID);

gl_Position = MVP * vec4(pos, 1.0);

uv = getTexCoord(gl_VertexID);

}

That's it for the programmable vertex pulling part. The fragment shader applies the texture and uses the barycentric coordinates trick for wireframe rendering, as we described in the previous chapter. The resulting output from the program should look as follows:

Figure 3.1 – Textured mesh rendered using programmable vertex pulling

There's more...

Programmable vertex pulling is a complex topic and has different performance implications. There is an open source project that does an in-depth analysis of this and provides runtime metrics of PVP performance based on different vertex data layouts and access methods, such as storing data as array of structures or structure of arrays, reading data as multiple floats or a single vector type, and so on.

Check it out at https://github.com/nlguillemot/ProgrammablePulling. It should be one of your go-to tools when you're designing PVP pipelines in your OpenGL applications.

Working with cube map textures

A cube map is a texture that contains six individual 2D textures, comprising six sides of a cube. A useful property of cube maps is that they can be sampled using a direction vector. This comes in handy when you're representing light coming into a scene from different directions. For example, we can store the diffuse part of a physically-based lighting equation in an irradiance cube map.

Loading six faces of a cube map into OpenGL is a fairly straightforward operation. However, instead of just six faces, cube maps are often stored as equirectangular projections or as vertical or horizontal crosses. In this recipe, we will learn how to convert this cube map representation into six faces and load them into OpenGL.

Getting ready

There are many websites that offer high-dynamic range environment textures under various licenses. Check out https://hdrihaven.com and https://hdrmaps.com for useful content.

The complete source code for this recipe can be found in this book's source code bundle, under the name Chapter3/GL03_CubeMap.

Before we start working with cube maps, let's introduce a simple helper class for working with bitmap images in 8-bit and 32-bit floating-point formats:

- Let's declare the interface part of the Bitmap class, as follows:

class Bitmap {

public:

Bitmap() = default;

Bitmap(int w, int h, int comp, eBitmapFormat fmt);

Bitmap( int w, int h, int d, int comp, eBitmapFormat fmt);

Bitmap(int w, int h, int comp, eBitmapFormat fmt, const void* ptr);

- Declare the width, height, depth, and number of components per pixel:

int w_ = 0;

int h_ = 0;

int d_ = 1;

int comp_ = 3;

- Set the type of a single component, either unsigned byte or float. The type of this bitmap should be a 2D texture or a cube map. We will store the actual bytes of this bitmap in an std::vector container for simplicity:

eBitmapFormat fmt_ = eBitmapFormat_UnsignedByte;

eBitmapType type_ = eBitmapType_2D;

std::vector<uint8_t> data_;

- The following helper function gets the number of bytes necessary for storing one component of a specified format:

static int getBytesPerComponent(eBitmapFormat fmt);

- Finally, we need a getter and a setter for our two-dimensional image. We will come back to this later:

void setPixel(int x, int y, const glm::vec4& c);

glm::vec4 getPixel(int x, int y) const;

};

This implementation is located in shared/Bitmap.h. Now, let's use this class to build more high-level cube map conversion functions.

How to do it...

We have a cube map called data/piazza_bologni_1k.hdr that was originally downloaded from https://hdrihaven.com/hdri/?h=piazza_bologni. The environment map image is provided as an equirectangular projection and looks like this:

Figure 3.2 – Equirectangular projection

Let's convert this project into a vertical cross. In vertical cross format, each cube map's face is represented as a rectangle inside the entire image, as follows:

Figure 3.3 – Vertical cross

If we naively convert the equirectangular projection into cube map faces by iterating over its pixels, calculating the Cartesian coordinates for each pixel, and saving the pixel into a cube map face using these Cartesian coordinates, we will end up with a texture that's been heavily damaged by a Moiré pattern. Here, it's best to do things the other way around; that is, iterate over each pixel of the resulting cube map faces, calculate the source floating-point equirectangular coordinates corresponding to each pixel, and sample the equirectangular texture using bilinear interpolation. This way, the final cube map will be free of artifacts. Let's take a look at this:

- The first step is to introduce a helper function that maps integer coordinates inside a specified cube map face as floating-point normalized coordinates. This helper is handy because all the faces of the vertical cross cube map have different vertical orientations:

vec3 faceCoordsToXYZ( int i, int j, int faceID, int faceSize) {

const float A = 2.0f * float(i) / faceSize;

const float B = 2.0f * float(j) / faceSize;

if (faceID == 0) return vec3(-1.0f, A - 1.0f, B – 1.0f);

if (faceID == 1) return vec3(A - 1.0f, -1.0f, 1.0f - B);

if (faceID == 2) return vec3(1.0f, A - 1.0f, 1.0f - B);

if (faceID == 3) return vec3(1.0f - A, 1.0f, 1.0f - B);

if (faceID == 4) return vec3(B - 1.0f, A - 1.0f, 1.0f);

if (faceID == 5) return vec3(1.0f - B, A - 1.0f, -1.0f);

return vec3();

}

- The conversion function starts as follows and calculates the required faceSize, width, and height of the resulting bitmap:

Bitmap convertEquirectangularMapToVerticalCross( const Bitmap& b) {

if (b.type_ != eBitmapType_2D) return Bitmap();

const int faceSize = b.w_ / 4;

const int w = faceSize * 3;

const int h = faceSize * 4;

Bitmap result(w, h, 3);

- The following points define the locations of individual faces inside the cross:

const ivec2 kFaceOffsets[] = { ivec2(faceSize, faceSize * 3), ivec2(0, faceSize), ivec2(faceSize, faceSize), ivec2(faceSize * 2, faceSize), ivec2(faceSize, 0), ivec2(faceSize, faceSize * 2) };

- Two constants will be necessary to clamp the texture lookup:

const int clampW = b.w_ - 1;

const int clampH = b.h_ - 1;

- Now, we can start iterating over the six cube map faces and each pixel inside each face:

for (int face = 0; face != 6; face++) {

for (int i = 0; i != faceSize; i++) {

for (int j = 0; j != faceSize; j++) {

- Use trigonometry functions to calculate the latitude and longitude coordinates of the Cartesian cube map coordinates:

const vec3 P = faceCoordsToXYZ( i, j, face, faceSize);

const float R = hypot(P.x, P.y);

const float theta = atan2(P.y, P.x);

const float phi = atan2(P.z, R);

- Now, we can map the latitude and longitude of the floating-point coordinates inside the equirectangular image:

const float Uf = float(2.0f * faceSize * (theta + M_PI) / M_PI);

const float Vf = float(2.0f * faceSize * (M_PI / 2.0f – phi) / M_PI);

- Based on these floating-point coordinates, we will get two pairs of integer UV coordinates. We will use these to sample four texels for bilinear interpolation:

const int U1 = clamp(int(floor(Uf)), 0, clampW);

const int V1 = clamp(int(floor(Vf)), 0, clampH);

const int U2 = clamp(U1 + 1, 0, clampW);

const int V2 = clamp(V1 + 1, 0, clampH);

- Get the fractional part for bilinear interpolation:

const float s = Uf - U1;

const float t = Vf - V1;

- Fetch four samples from the equirectangular map:

const vec4 A = b.getPixel(U1, V1);

const vec4 B = b.getPixel(U2, V1);

const vec4 C = b.getPixel(U1, V2);

const vec4 D = b.getPixel(U2, V2);

- Perform bilinear interpolation and set the resulting pixel value in the vertical cross cube map:

const vec4 color = A * (1 - s) * (1 - t) + B * (s) * (1 - t) + C * (1 - s) * t + D * (s) * (t);

result.setPixel( i + kFaceOffsets[face].x, j + kFaceOffsets[face].y, color);

}

}

}

return result;

}

The Bitmap class takes care of the pixel format inside the image data.

Now, we can write some code to cut the vertical cross into tightly packed rectangular cube map faces. Here's how to do it:

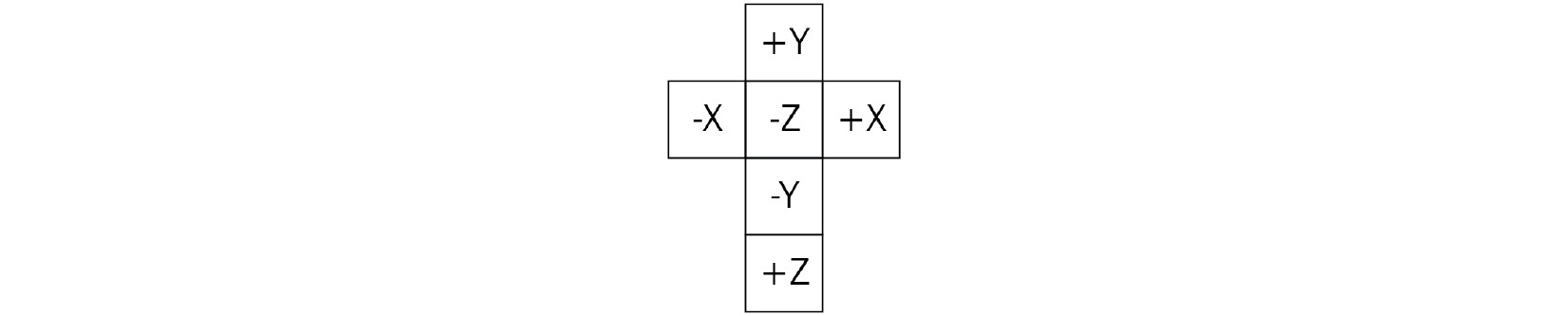

- First, let's review the layout of the vertical cross image that corresponds to the OpenGL cube map faces layout:

Figure 3.4 – Layout of the vertical cross image

- The layout is 3x4 faces, which makes it possible to calculate the dimensions of the resulting cube map as follows:

Bitmap convertVerticalCrossToCubeMapFaces( const Bitmap& b) {

const int faceWidth = b.w_ / 3;

const int faceHeight = b.h_ / 4;

Bitmap cubemap( faceWidth, faceHeight, 6, b.comp_, b.fmt_);

- Let's set up some pointers to read and write the data. This function is pixel-format agnostic, so it needs to know the size of each pixel in bytes to be able to memcpy() pixels around:

const uint8_t* src = b.data_.data();

uint8_t* dst = cubemap.data_.data();

const int pixelSize = cubemap.comp_ * Bitmap::getBytesPerComponent(cubemap.fmt_);

- Iterate over the faces and over every pixel of each face. The order of the cube map faces here corresponds to the order of the OpenGL cube map faces, as defined by the GL_TEXTURE_CUBE_MAP_* constants:

for (int face = 0; face != 6; ++face) {

for (int j = 0; j != faceHeight; ++j) {

for (int i = 0; i != faceWidth; ++i) {

int x = 0;

int y = 0;

- Calculate the source pixel position in the vertical cross layout based on the destination cube map face index:

switch (face) {

// GL_TEXTURE_CUBE_MAP_POSITIVE_X case 0: x = i; y = faceHeight + j; break;

// GL_TEXTURE_CUBE_MAP_NEGATIVE_X case 1: x = 2 * faceWidth + i; y = 1 * faceHeight + j; break;

// GL_TEXTURE_CUBE_MAP_POSITIVE_Y case 2: x = 2 * faceWidth - (i + 1); y = 1 * faceHeight - (j + 1); break;

// GL_TEXTURE_CUBE_MAP_NEGATIVE_Y case 3: x = 2 * faceWidth - (i + 1); y = 3 * faceHeight - (j + 1); break;

// GL_TEXTURE_CUBE_MAP_POSITIVE_Z case 4: x = 2 * faceWidth - (i + 1); y = b.h_ - (j + 1); break;

// GL_TEXTURE_CUBE_MAP_NEGATIVE_Z case 5: x = faceWidth + i; y = faceHeight + j; break;

}

- Copy the pixel and advance to the next one:

memcpy(dst, src + (y * b.w_ + x) * pixelSize, pixelSize);

dst += pixelSize;

}

}

}

return cubemap;

}

The resulting cube map contains a stack of six images. Let's write some more C++ code to load and convert the actual texture data and upload it to OpenGL.

- Use the STB_image floating-point API to load a high dynamic range image from a .hdr file:

int w, h, comp;

const float* img = stbi_loadf( "data/piazza_bologni_1k.hdr", &w, &h, &comp, 3);

Bitmap in(w, h, comp, eBitmapFormat_Float, img);

stbi_image_free((void*)img);

- Convert an equirectangular map into a vertical cross and save the resulting image in a .hdr file for further inspection:

Bitmap out = convertEquirectangularMapToVerticalCross(in);

stbi_write_hdr("screenshot.hdr", out.w_, out.h_, out.comp_, reinterpret_cast<const float*>(out.data_.data()));

- Convert the vertical cross into the actual cube map faces:

Bitmap cm = convertVerticalCrossToCubeMapFaces(out);

- Now, uploading to OpenGL is straightforward. All we need to do is create a texture, set the texture parameters, allocate storage for an RGB floating-point texture, and upload the individual faces one by one. Note how the glTextureSubImage3D() function is used to upload individual cube map faces. The zoffset parameter of the function is used to specify the i index of the face:

glCreateTextures(GL_TEXTURE_CUBE_MAP, 1, &tex);

glTextureParameteri( tex, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_BORDER);

glTextureParameteri( tex, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_BORDER);

glTextureParameteri( tex, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_BORDER);

glTextureParameteri( tex, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTextureParameteri( tex, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTextureStorage2D(tex, 1, GL_RGB32F, cm.w_, cm.h_);

const uint8_t* data = cm.data_.data();

for (unsigned i = 0; i != 6; ++i) {

glTextureSubImage3D(tex, 0, 0, 0, i, cm.w_, cm.h_, 1, GL_RGB, GL_FLOAT, data);

data += cm.w_ * cm.h_ * cm.comp_ * Bitmap::getBytesPerComponent(cm.fmt_);

}

Now, let's learn how to write the GLSL shaders for this example:

- Let's make the vertex shader take a separate model matrix and a world space camera position as its inputs:

layout(std140, binding = 0) uniform PerFrameData {

uniform mat4 model;

uniform mat4 MVP;

uniform vec4 cameraPos;

};

- The per-vertex output should be calculated as follows. Positions, normal vectors, and texture coordinates are read from the SSBO buffer using the PVP technique we discussed in the previous recipe. Normal vectors are transformed with a matrix calculated as the inverse transpose of the model matrix:

struct PerVertex {

vec2 uv;

vec3 normal;

vec3 worldPos;

};

layout (location=0) out PerVertex vtx;

void main() {

vec3 pos = getPosition(gl_VertexID);

gl_Position = MVP * vec4(pos, 1.0);

mat3 normalMatrix = mat3(transpose(inverse(model)));

vtx.uv = getTexCoord(gl_VertexID);

vtx.normal = getNormal(gl_VertexID) * normalMatrix;

vtx.worldPos = (model * vec4(pos, 1.0)).xyz;

}

- The fragment shader uses samplerCube to sample the cube map. Reflected and refracted direction vectors are calculated using GLSL's built-in reflect() and refract() functions, respectively:

layout (binding = 0) uniform sampler2D texture0;

layout (binding = 1) uniform samplerCube texture1;

void main() {

vec3 n = normalize(vtx.normal);

vec3 v = normalize(cameraPos.xyz - vtx.worldPos);

vec3 reflection = -normalize(reflect(v, n));

- To add some more complicated visual appearances, use the index of refraction of ice and calculate the specular reflection coefficient using Schlick's approximation (https://en.wikipedia.org/wiki/Schlick%27s_approximation):

float eta = 1.00 / 1.31;

vec3 refraction = -normalize(refract(v, n, eta));

const float R0 = ((1.0-eta) * (1.0-eta)) / ((1.0+eta) * (1.0+eta));

const float Rtheta = R0 + (1.0 – R0) * pow((1.0 – dot(-v, n)), 5.0);

vec4 color = texture(texture0, vtx.uv);

- Sample the cube map using the calculated direction vectors:

vec4 colorRefl = texture(texture1, reflection);

vec4 colorRefr = texture(texture1, refraction);

- The combined reflection and refraction color is modulated here with the diffuse texture to produce a clean looking image. It doesn't attempt to physically correct the process:

color = color * mix(colorRefl, colorRefr, Rtheta);

out_FragColor = color;

};

The resulting output from the application looks as follows. Note the blown out white areas of the sky due to how a high dynamic range image is being displayed directly on a low dynamic range framebuffer. We will come back to this issue in Chapter 8, Image-Based Techniques, and implement a simple tone mapping operator:

Figure 3.5 – Reflective rubber duck

There's more...

Modern rendering APIs can filter cube maps seamlessly across all faces. To enable this feature for all cube map textures in the current OpenGL context, use glEnable():

glEnable(GL_TEXTURE_CUBE_MAP_SEAMLESS);

Besides that, seamless cube map filtering can be enabled on a per-texture basis using the ARB_seamless_cubemap_per_texture extension, as follows:

glTextureParameteri(tex, GL_TEXTURE_CUBE_MAP_SEAMLESS,

GL_TRUE);

Make sure you use this OpenGL functionality since seamless cube map filtering is almost always what you want from a cube map.

In Vulkan, all cube map texture fetches are seamless (see Cube Map Edge Handling in the Vulkan specification), except the ones with VK_FILTER_NEAREST enabled on them, which are clamped to the face edge.

Compiling Vulkan shaders at runtime

In the previous recipes, we only covered OpenGL, while Vulkan was only mentioned a few times now and again. In the rest of this chapter, we will show you how to create a Vulkan rendering application with functionality similar to what we've done with OpenGL so far. The code from this and the subsequent recipes will be reused later to build more complex Vulkan demos.

Before we start using Vulkan, we must learn how to significantly speed up the iterative process of writing shaders. Vulkan consumes shaders in their final compiled binary form, called SPIR-V, and it uses a standalone shader compiler to precompile shaders offline. While being perfect for a released product, this approach slows down early stages of graphics application development and rapid prototyping, where shaders are changed a lot and should be recompiled on each application run. In this recipe, we will show you how to compile Vulkan shaders at runtime using Kronos' reference shader compiler, known as glslang.

Getting ready

Our application is statically linked to the glslang shader compiler. The compiler version we used in this recipe was downloaded using the following Bootstrap snippet:

{

"name": "glslang",

"source": {

"type": "git",

"url": "https://github.com/KhronosGroup/glslang.git",

"revision": "6fe560f74f472726027e4059692c6eb1e7d972dc"

}

}

The complete source code for this recipe can be found in this book's source code bundle, under the name Chapter3/VK01_GLSLang.

How to do it...

Let's learn how to compile a shader using glslang:

- First, we should declare storage for our compiled binary SPIR-V shaders:

struct ShaderModule {

std::vector<unsigned int> SPIRV;

VkShaderModule shaderModule;

};

- Use the following helper function to compile a shader from its source code for a specified Vulkan pipeline stage; then, save the binary SPIR-V result in the ShaderModule structure:

size_t compileShader(glslang_stage_t stage, const char* shaderSource, ShaderModule& shaderModule) {

- The compiler's input structure initialization is pretty verbose. We should specify the source shader language as GLSLANG_SOURCE_GLSL and use proper targets to generate SPIR-V 1.3 for Vulkan version 1.1. Using the new C++20 feature known as designated initializers for this task is a breeze. The same applies to low-level Vulkan development in general, since the most frequent thing a developer will do is fill in structure members with values:

const glslang_input_t input = { .language = GLSLANG_SOURCE_GLSL, .stage = stage, .client = GLSLANG_CLIENT_VULKAN, .client_version = GLSLANG_TARGET_VULKAN_1_1, .target_language = GLSLANG_TARGET_SPV, .target_language_version =GLSLANG_TARGET_SPV_1_3, .code = shaderSource, .default_version = 100, .default_profile = GLSLANG_NO_PROFILE, .force_default_version_and_profile = false, .forward_compatible = false, .messages = GLSLANG_MSG_DEFAULT_BIT, .resource = (const glslang_resource_t*) &glslang::DefaultTBuiltInResource,

};

- Let's create a shader using the constructed input:

glslang_shader_t* shd = glslang_shader_create(&input);

- First, the shader needs to be preprocessed by the compiler. This function returns true if all the extensions, pragmas, and version strings mentioned in the shader source code are valid:

if ( !glslang_shader_preprocess(shd, &input) ) {

fprintf(stderr, "GLSL preprocessing failed " );

fprintf(stderr, " %s", glslang_shader_get_info_log(shd));

fprintf(stderr, " %s", glslang_shader_get_info_debug_log(shd));

fprintf(stderr, "code: %s", input.code );

return 0;

}

- Then, the shader gets parsed in an internal parse tree representation inside the compiler:

if ( !glslang_shader_parse(shd, &input) ) {

fprintf(stderr, "GLSL parsing failed ");

fprintf(stderr, " %s", glslang_shader_get_info_log(shd) );

fprintf(stderr, " %s", glslang_shader_get_info_debug_log(shd));

fprintf(stderr, "%s", glslang_shader_get_preprocessed_code(shd));

return 0;

}

- If everything went well during the previous stages, we can link the shader to a program and proceed with the binary code generation stage:

glslang_program_t* prog = glslang_program_create();

glslang_program_add_shader(prog, shd);

int msgs = GLSLANG_MSG_SPV_RULES_BIT | GLSLANG_MSG_VULKAN_RULES_BIT;

if ( !glslang_program_link(prog, msgs) ) {

fprintf(stderr, "GLSL linking failed ");

fprintf(stderr, " %s", glslang_program_get_info_log(prog));

fprintf(stderr, " %s", glslang_program_get_info_debug_log(prog));

return 0;

}

- Generate some binary SPIR-V code and store it inside the shaderModule output variable:

glslang_program_SPIRV_generate(prog, stage);

shaderModule.SPIRV.resize( glslang_program_SPIRV_get_size(prog));

glslang_program_SPIRV_get( prog, shaderModule.SPIRV.data());

- Some messages may be produced by the code generator. Check and print them if there are any:

const char* spirv_messages = glslang_program_SPIRV_get_messages(prog);

if (spirv_messages)

fprintf(stderr, "%s", spirv_messages);

- Clean up and return the number of uint32_t values in the generated binary blob. This is how Vulkan requires the size to be specified:

glslang_program_delete(program);

glslang_shader_delete(shader);

return shaderModule.SPIRV.size();

}

How it works...

The demo application is straightforward: it loads the shader source code from a text file and uses the compileShader() function we just wrote to compile it into SPIR-V:

size_t compileShaderFile( const char* file, ShaderModule& shaderModule)

{

if (auto shaderSource = readShaderFile(file); !shaderSource.empty())

return compileShader( glslangShaderStageFromFileName(file), shaderSource.c_str(), shaderModule);

return 0;

}

Each generated SPIR-V binary blob is saved in a file for further inspection:

void testShaderCompilation( const char* sourceFilename, const char* destFilename)

{

ShaderModule;

if (compileShaderFile(sourceFilename, shaderModule) < 1) return;

saveSPIRVBinaryFile(destFilename, shaderModule.SPIRV.data(), shaderModule.SPIRV.size());

}

The main() function, which drives the demo application, initializes the glslang compiler and runs the necessary tests:

int main() {

glslang_initialize_process();

testShaderCompilation( "data/shaders/chapter03/VK01.vert", "VK01.vrt.bin");

testShaderCompilation( "data/shaders/chapter03/VK01.frag", "VK01.frg.bin");

glslang_finalize_process();

return 0;

}

The aforementioned program produces the same SPIR-V output as the following commands:

glslangValidator -V110 --target-env spirv1.3 VK01.vert -o VK01.vrt.bin

glslangValidator -V110 --target-env spirv1.3 VK01.frag -o VK01.frg.bin

There's more...

While being convenient during application development phases, shipping a big compiler alongside a release version of your application is a questionable practice. Unless compiling shaders at runtime is a feature of your application, you should prefer shipping precompiled SPIR-V shader binaries in the release version. One transparent way to do this is to implement a shader caching mechanism in your application. Once a shader is required, the application checks if a compiled shader is present. If there are none, it can load the glslang compiler from .dll or .so at runtime and compile the shader. This way, you can ensure that you always have compiled shaders for the release version of your app and that you do not need to ship shared libraries of the compiler.

If you want to learn how to load compiled shaders from .bin files produced by glslangValidator, take a look at this tutorial: https://vulkan-tutorial.com/Drawing_a_triangle/Graphics_pipeline_basics/Shader_modules.

Initializing Vulkan instances and graphical devices

The new Vulkan API is much more verbose, so we must split creating a graphical demo into separate, smaller recipes. In this recipe, we will learn how to create a Vulkan instance, enumerate all the physical devices in the system that are capable of 3D graphics rendering, and initialize one of these devices to create a window with an attached surface.

Getting ready

Teaching Vulkan from scratch is not the goal of this book, so we recommend starting with the book Vulkan Cookbook, published by Packt, and Vulkan Programming Guide: The Official Guide to Learning Vulkan, by Addison-Wesley Professional.

The hardest part of transitioning from OpenGL to Vulkan, or any other similar modern graphics API, is getting used to the amount of explicit code necessary to set up the rendering process, which, thankfully, only needs to be done once. It is also useful to get a grasp of Vulkan's object model. As a good starting point, we recommend reading https://gpuopen.com/understanding-vulkan-objects/ as a reference. For the remaining recipes in this chapter, we aim to start rendering 3D scenes with the bare minimum amount of setup.

All our Vulkan recipes use the Volk meta loader for the Vulkan API, which can be downloaded from https://github.com/zeux/volk using the following Bootstrap snippet. The meta loader allows you to dynamically load the entry points required to use Vulkan, without having to statically link any Vulkan loaders:

{

"name": "volk",

"source": {

"type": "git",

"url": "https://github.com/zeux/volk.git",

"revision": "1.2.170"

}

}

The complete Vulkan example for this recipe can be found in Chapter3/VK02_DemoApp.

How to do it...

Let's start with some error checking facilities:

- Any function call from a complex API may fail. To handle failure, or to at least let the developer know the exact location of the failure, we can wrap most of the Vulkan calls in the VK_CHECK() and VK_CHECK_RET() macros, which internally call the following VK_ASSERT() function:

static void VK_ASSERT(bool check) {

if (!check) exit(EXIT_FAILURE);

}

- The VK_CHECK() and VK_CHECK_RET() macros compare the result of a Vulkan call with the success value and return either a Boolean flag or a result value. If the comparison fails, the program should exit immediately:

#define VK_CHECK(value) if ( value != VK_SUCCESS ) { VK_ASSERT(false); return false; }

#define VK_CHECK_RET(value) if ( value != VK_SUCCESS ) { VK_ASSERT(false); return value; }

Now, we can start creating our first Vulkan object. The VkInstance object serves as an interface to the Vulkan API:

- The createInstance() routine is called at the beginning of the initialization process. Using the Vulkan instance, we can acquire a list of physical devices with the required properties:

void createInstance(VkInstance* instance) {

- First, we will declare a list of so-called layers, which will allow us to enable debugging output for every Vulkan call. The only layer we will be using is the debugging layer:

const std::vector<const char*> layers = {

"VK_LAYER_KHRONOS_validation"

};

- Next, we must declare the array with a list of extensions. The minimum number of extensions to allow rendering to take place is two. We need VK_KHR_surface and another platform-specific extension that takes an OS window handle and attaches a rendering surface to it. Amazingly, the following code is the only part of this example that explicitly requires us to use macros to detect the OS and assign the extension name:

const std::vector<const char*> exts = {

"VK_KHR_surface",

#if defined (WIN32)

"VK_KHR_win32_surface",

#endif

- macOS is supported via the MoltenVK implementation. However, most of the examples in this book are based on Vulkan 1.2, which is not supported by MoltenVK yet:

#if defined (__APPLE__)

"VK_MVK_macos_surface",

#endif

- On Linux, only libXCB-based window creation is supported. Similarly, the Wayland protocol is also supported, but that is outside the scope of this book:

#if defined (__linux__)

"VK_KHR_xcb_surface",

#endif

VK_EXT_DEBUG_UTILS_EXTENSION_NAME,

VK_EXT_DEBUG_REPORT_EXTENSION_NAME

};

- After constructing the list of surface-related extensions, we should fill in some mandatory information about our application:

const VkApplicationInfo appInfo = { .sType = VK_STRUCTURE_TYPE_APPLICATION_INFO, .pNext = nullptr, .pApplicationName = "Vulkan", .applicationVersion = VK_MAKE_VERSION(1, 0, 0), .pEngineName = "No Engine", .engineVersion = VK_MAKE_VERSION(1, 0, 0), .apiVersion = VK_API_VERSION_1_1 };

- To create a VkInstance object, we should fill in the VkInstanceCreateInfo structure. Use a pointer to the aforementioned appInfo constant and the list of extensions in the member fields of createInfo:

const VkInstanceCreateInfo createInfo = { .sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO, .pNext = nullptr, .flags = 0, .pApplicationInfo = &appInfo, .enabledLayerCount = static_cast<uint32_t>(layers.size()), .ppEnabledLayerNames = layers.data(), .enabledExtensionCount = static_cast<uint32_t>(exts.size()), .ppEnabledExtensionNames = exts.data() };

VK_ASSERT(vkCreateInstance( &createInfo, nullptr, instance) == VK_SUCCESS);

- Finally, we must ask the Volk library to retrieve all the Vulkan API function pointers for all the extensions that are available for the created VkInstance:

volkLoadInstance(*instance);

}

Once we have a Vulkan instance ready and the graphics queue index set up with the selected physical device, we can create a logical representation of a GPU. Vulkan treats all devices as a collection of queues and memory heaps. To use a device for rendering, we need to specify a queue that can execute graphics-related commands, and a physical device that has such a queue. Let's get started:

- The createDevice() function accepts a list of required device features (for example, geometry shader support), a graphics queue index, a physical device, and an output handle for the logical device as input:

VkResult createDevice(

VkPhysicalDevice physicalDevice,

VkPhysicalDeviceFeatures deviceFeatures,

uint32_t graphicsFamily,

VkDevice* device)

{

- Let's declare a list of extensions that our logical device must support. For our early demos, we need the device to support the swap chain object, which allows us to present rendered frames on the screen. This list is going to be extended in subsequent chapters:

const std::vector<const char*> extensions = { VK_KHR_SWAPCHAIN_EXTENSION_NAME };

- We will only use a single graphics queue that has maximum priority:

const float queuePriority = 1.0f;

const VkDeviceQueueCreateInfo qci = { .sType =VK_STRUCTURE_TYPE_DEVICE_QUEUE_CREATE_INFO, .pNext = nullptr, .flags = 0, .queueFamilyIndex = graphicsFamily, .queueCount = 1, .pQueuePriorities = &queuePriority

};

- To create something in Vulkan, we should fill in a ...CreateInfo structure and pass all the required object properties to an appropriate vkCreate...() function. Here, we will define a VkDeviceCreateInfo constant with a reference to a single queue:

const VkDeviceCreateInfo ci = { .sType = VK_STRUCTURE_TYPE_DEVICE_CREATE_INFO, .pNext = nullptr, .flags = 0, .queueCreateInfoCount = 1, .pQueueCreateInfos = &qci, .enabledLayerCount = 0, .ppEnabledLayerNames = nullptr, .enabledExtensionCount = static_cast<uint32_t>(extensions.size()), .ppEnabledExtensionNames = extensions.data(), .pEnabledFeatures = &deviceFeatures

};

return vkCreateDevice( physicalDevice, &ci, nullptr, device );

}

- The createDevice() function expects a reference to a physical graphics-capable device. The following function finds such a device:

VkResult findSuitablePhysicalDevice(

VkInstance instance,

std::function<bool(VkPhysicalDevice)> selector,

VkPhysicalDevice* physicalDevice)

{

uint32_t deviceCount = 0;

VK_CHECK_RET(vkEnumeratePhysicalDevices(instance, &deviceCount, nullptr));

if (!deviceCount) return VK_ERROR_INITIALIZATION_FAILED;

std::vector<VkPhysicalDevice> devices(deviceCount);

VK_CHECK_RET(vkEnumeratePhysicalDevices( instance, &deviceCount, devices.data()));

for (const auto& device : devices)

if (selector(device)) {

*physicalDevice = device;

return VK_SUCCESS;

}

return VK_ERROR_INITIALIZATION_FAILED;

}

- Once we have a physical device reference, we will get a list of its queues. Here, we must check for the one with our desired capability flags:

uint32_t findQueueFamilies(

VkPhysicalDevice device, VkQueueFlags desiredFlags)

{

uint32_t familyCount;

vkGetPhysicalDeviceQueueFamilyProperties( device, &familyCount, nullptr);

std::vector<VkQueueFamilyProperties> families(familyCount);

vkGetPhysicalDeviceQueueFamilyProperties( device, &familyCount, families.data());

for (uint32_t i = 0; i != families.size(); i++)

if ( families[i].queueCount && (families[i].queueFlags & desiredFlags) )

return i;

return 0;

}

At this point, we have selected a suitable physical device, but we are far from finished with rendering the Vulkan pipeline. The next thing we will do is create a swap chain object. Let's move on to the next recipe to learn how to do this.

Initializing the Vulkan swap chain

Normally, each frame is rendered as an offscreen image. Once the rendering process is complete, the offscreen image should be made visible. An object that holds a collection of available offscreen images – or, more specifically, a queue of rendered images waiting to be presented to the screen – is called a swap chain. In OpenGL, presenting an offscreen buffer to the visible area of a window is performed using system-dependent functions, namely wglSwapBuffers() on Windows, eglSwapBuffers() on OpenGL ES embedded systems, glXSwapBuffers() on Linux, and automatically on macOS. Using Vulkan, we need to select a sequencing algorithm for the swap chain images. Also, the operation that presents an image to the display is no different from any other operation, such as rendering a collection of triangles. The Vulkan API object model treats each graphics device as a collection of command queues where rendering, computation, or transfer operations can be enqueued.

In this recipe, we will show you how to create a Vulkan swap chain object using the Vulkan instance and graphical device we initialized in the previous recipe.

Getting ready

Revisit the previous recipe, which discusses Vulkan instance creation and enabling the validation layer.

How to do it...

Before we can create a swap chain object, we need some helper functions:

- First, let's write a function that retrieves swap chain support details based on the specified physical device and the Vulkan surface. The result is returned inside the SwapchainSupportDetails structure:

struct SwapchainSupportDetails {

VkSurfaceCapabilitiesKHR capabilities = {};

std::vector<VkSurfaceFormatKHR> formats;

std::vector<VkPresentModeKHR> presentModes;

};

SwapchainSupportDetails querySwapchainSupport(

VkPhysicalDevice device, VkSurfaceKHR surface) {

- Query the basic capabilities of a surface:

SwapchainSupportDetails details;

vkGetPhysicalDeviceSurfaceCapabilitiesKHR( device, surface, &details.capabilities);

- Get the number of available surface formats. Allocate the storage to hold them:

uint32_t formatCount;

vkGetPhysicalDeviceSurfaceFormatsKHR( device, surface, &formatCount, nullptr);

if (formatCount) {

details.formats.resize(formatCount);

vkGetPhysicalDeviceSurfaceFormatsKHR( device, surface, &formatCount, details.formats.data());

}

- Retrieve the supported presentation modes in a similar way:

uint32_t presentModeCnt;

vkGetPhysicalDeviceSurfacePresentModesKHR( device, surface, &presentModeCnt, nullptr);

if (presentModeCnt) {

details.presentModes.resize(presentModeCnt);

vkGetPhysicalDeviceSurfacePresentModesKHR( device, surface, &presentModeCnt, details.presentModes.data());

}

return details;

}

- Let's write a helper function for choosing the required surface format. We will use a hardcoded value here for the RGBA 8-bit per channel format with the sRGB color space:

VkSurfaceFormatKHR chooseSwapSurfaceFormat( const std::vector<VkSurfaceFormatKHR>& availableFormats) {

return { VK_FORMAT_B8G8R8A8_UNORM,VK_COLOR_SPACE_SRGB_ NONLINEAR_KHR};

}

- Now, we should select presentation mode. The preferred presentation mode is VK_PRESENT_MODE_MAILBOX_KHR, which specifies that the Vulkan presentation system should wait for the next vertical blanking period to update the current image. Visual tearing will not be observed in this case. However, it's not guaranteed that this presentation mode will be supported. In this situation, we can always fall back to VK_PRESENT_MODE_FIFO_KHR. The differences between all possible presentation modes are described in the Vulkan specification at https://www.khronos.org/registry/vulkan/specs/1.1-extensions/man/html/VkPresentModeKHR.html:

VkPresentModeKHR chooseSwapPresentMode( const std::vector<VkPresentModeKHR>& availablePresentModes) {

for (const auto mode : availablePresentModes)

if (mode == VK_PRESENT_MODE_MAILBOX_KHR) return mode;

return VK_PRESENT_MODE_FIFO_KHR;

}

- The last helper function we need will choose the number of images in the swap chain object. It is based on the surface capabilities we retrieved earlier. Instead of using minImageCount directly, we will request one additional image to make sure we are not waiting on the GPU to complete any operations:

uint32_t chooseSwapImageCount( const VkSurfaceCapabilitiesKHR& caps)

{

const uint32_t imageCount = caps.minImageCount + 1;

const bool imageCountExceeded = caps.maxImageCount && imageCount > caps.maxImageCount;

return imageCountExceeded ? caps.maxImageCount : imageCount;

}

- Once we have all of our helper functions in place, the createSwapchain() wrapper function becomes rather short and mostly consists of filling in the VkSwapchainCreateInfoKHR structure:

VkResult createSwapchain( VkDevice device, VkPhysicalDevice physicalDevice, VkSurfaceKHR surface, uint32_t graphicsFamily, uint32_t width, uint32_t height, VkSwapchainKHR* swapchain)

{

auto swapchainSupport = querySwapchainSupport( physicalDevice, surface);

auto surfaceFormat = chooseSwapSurfaceFormat( swapchainSupport.formats);

auto presentMode = chooseSwapPresentMode( swapchainSupport.presentModes);

- Let's fill in the VkSwapchainCreateInfoKHR structure. Our initial example will not use a depth buffer, so only VK_IMAGE_USAGE_COLOR_ATTACHMENT_BIT will be used. The VK_IMAGE_USAGE_TRANSFER_DST_BIT flag specifies that the image can be used as the destination of a transfer command:

const VkSwapchainCreateInfoKHR ci = { .sType = VK_STRUCTURE_TYPE_SWAPCHAIN_CREATE_INFO_KHR, .flags = 0, .surface = surface, .minImageCount = chooseSwapImageCount( swapchainSupport.capabilities), .imageFormat = surfaceFormat.format, .imageColorSpace = surfaceFormat.colorSpace, .imageExtent = {.width = width, .height = height }, .imageArrayLayers = 1, .imageUsage = VK_IMAGE_USAGE_COLOR_ATTACHMENT_BIT | VK_IMAGE_USAGE_TRANSFER_DST_BIT, .imageSharingMode = VK_SHARING_MODE_EXCLUSIVE, .queueFamilyIndexCount = 1, .pQueueFamilyIndices = &graphicsFamily, .preTransform = swapchainSupport.capabilities.currentTransform, .compositeAlpha = VK_COMPOSITE_ALPHA_OPAQUE_BIT_KHR, .presentMode = presentMode, .clipped = VK_TRUE, .oldSwapchain = VK_NULL_HANDLE };

return vkCreateSwapchainKHR( device, &ci, nullptr, swapchain);

}

- Once the swapchain object has been created, we should retrieve the actual images from the swapchain. Use the following function to do so:

size_t createSwapchainImages( VkDevice device, VkSwapchainKHR swapchain, std::vector<VkImage>& swapchainImages, std::vector<VkImageView>& swapchainImageViews)

{

uint32_t imageCount = 0;

VK_ASSERT(vkGetSwapchainImagesKHR(device, swapchain, &imageCount, nullptr) == VK_SUCCESS);

swapchainImages.resize(imageCount);

swapchainImageViews.resize(imageCount);

VK_ASSERT(vkGetSwapchainImagesKHR(device, swapchain, &imageCount, swapchainImages.data()) == VK_SUCCESS);

for (unsigned i = 0; i < imageCount; i++)

if (!createImageView(device, swapchainImages[i], VK_FORMAT_B8G8R8A8_UNORM, VK_IMAGE_ASPECT_COLOR_BIT, &swapchainImageViews[i]))

exit(EXIT_FAILURE);

return imageCount;

}

- One last thing to mention is the helper function that creates an image view for us:

bool createImageView(VkDevice device, VkImage image, VkFormat format, VkImageAspectFlags aspectFlags, VkImageView* imageView)

{

const VkImageViewCreateInfo viewInfo = { .sType = VK_STRUCTURE_TYPE_IMAGE_VIEW_CREATE_INFO, .pNext = nullptr, .flags = 0, .image = image, .viewType = VK_IMAGE_VIEW_TYPE_2D, .format = format, .subresourceRange = { .aspectMask = aspectFlags, .baseMipLevel = 0, .levelCount = 1, .baseArrayLayer = 0, .layerCount = 1 }

};

VK_CHECK(vkCreateImageView(device, &viewInfo, nullptr, imageView));

return true;

}

Now, we can start the Vulkan initialization process. In the next recipe, we will show you how to catch errors that are encountered during the initialization phase.

Setting up Vulkan's debugging capabilities

Once we have created a Vulkan instance, we can start tracking all possible errors and warnings that may be produced by the validation layer. To do so, we should create a couple of callback functions and register them with the Vulkan instance. In this recipe, we will learn how to set up and use them.

How to do it...

There are two callback functions that catch the debug output from Vulkan: vulkanDebugCallback() and vulkanDebugReportCallback(). Let's get started:

- The first function, vulkanDebugCallback() prints all messages coming into the system console:

static VKAPI_ATTR VkBool32 VKAPI_CALL

vulkanDebugCallback( VkDebugUtilsMessageSeverityFlagBitsEXT Severity, VkDebugUtilsMessageTypeFlagsEXT Type, const VkDebugUtilsMessengerCallbackDataEXT* CallbackData, void* UserData)

{

printf("Validation layer: %s ", CallbackData->pMessage);

return VK_FALSE;

}

- vulkanDebugReportCallback() is more elaborate and provides information about an object that's causing an error or a warning. Some performance warnings are silenced to make the debug output more readable:

static VKAPI_ATTR VkBool32 VKAPI_CALL

vulkanDebugReportCallback( VkDebugReportFlagsEXT flags, VkDebugReportObjectTypeEXT objectType, uint64_t object, size_t location, int32_t messageCode, const char* pLayerPrefix, const char* pMessage, void* UserData)

{

if (flags & VK_DEBUG_REPORT_PERFORMANCE_WARNING_BIT_EXT)

return VK_FALSE;

printf("Debug callback (%s): %s ", pLayerPrefix, pMessage);

return VK_FALSE;

}

- To associate these callbacks with a Vulkan instance, we should create two more objects, messenger and reportCallback, in the following function. They will be destroyed at the end of the application:

bool setupDebugCallbacks( VkInstance instance, VkDebugUtilsMessengerEXT* messenger, VkDebugReportCallbackEXT* reportCallback)

{

const VkDebugUtilsMessengerCreateInfoEXT ci1 = { .sType = VK_STRUCTURE_TYPE_DEBUG_UTILS_MESSENGER _CREATE_INFO_EXT, .messageSeverity = VK_DEBUG_UTILS_MESSAGE_SEVERITY _WARNING_BIT_EXT | VK_DEBUG_UTILS_MESSAGE_SEVERITY_ERROR_BIT_EXT, .messageType = VK_DEBUG_UTILS_MESSAGE_TYPE_GENERAL_BIT_EXT| VK_DEBUG_UTILS_MESSAGE_TYPE_VALIDATION_BIT_EXT| VK_DEBUG_UTILS_MESSAGE_TYPE _PERFORMANCE_BIT_EXT, .pfnUserCallback = &VulkanDebugCallback, .pUserData = nullptr };

VK_CHECK(vkCreateDebugUtilsMessengerEXT( instance, &ci1, nullptr, messenger));

const VkDebugReportCallbackCreateInfoEXT ci2 = { .sType = VK_STRUCTURE_TYPE_DEBUG_REPORT _CALLBACK_CREATE_INFO_EXT, .pNext = nullptr, .flags = VK_DEBUG_REPORT_WARNING_BIT_EXT | VK_DEBUG_REPORT_PERFORMANCE_WARNING_BIT_EXT | VK_DEBUG_REPORT_ERROR_BIT_EXT | VK_DEBUG_REPORT_DEBUG_BIT_EXT, .pfnCallback = &VulkanDebugReportCallback, .pUserData = nullptr };

VK_CHECK(vkCreateDebugReportCallbackEXT(instance, &ci, nullptr,reportCallback));

return true;

}

This code is sufficient to get you started with reading the validation layer messages and debugging your Vulkan applications.

There's more…

To make our validation layers even more useful, we can add symbolic names to Vulkan objects. This is useful for debugging Vulkan applications in situations where the validation layer reports object handles. Use the following code snippet to do this:

bool setVkObjectName(VulkanRenderDevice& vkDev, void object, VkObjectType objType, const char name) {

VkDebugUtilsObjectNameInfoEXT nameInfo = { .sType = VK_STRUCTURE_TYPE_DEBUG_UTILS