Chapter 11. Intelligent search

| This chapter covers |

|

Search is ubiquitous and a multi-billion-dollar business. According to Nielsen Net Ratings, in the month of July 2008, an estimated 4.8 billion searches[1] were carried out by Google. This amounted to 60 percent of all searches performed in the US during this period. There’s hardly any application now that doesn’t provide a search capability within the application. In this chapter, we look at how you can add search capabilities to your application using Lucene and how you can make the search intelligent.

If you haven’t done so, it’s worthwhile to review chapter 6. In that chapter, we looked at intelligent web crawling using Nutch, which uses Lucene. As a precursor to this chapter, you should have also reviewed chapter 8, in which we introduced the various Lucene analyzers and the fundamentals of text parsing.

We begin the chapter by looking at some of the fundamental concepts related to text search. We briefly review the process of creating an index and searching the index with Lucene. After that, we take a deeper look at indexing. Then we review the process of searching with Lucene and some of the advanced search concepts that we later use in the section about intelligent search. We look at some useful search-related tools and frameworks. Finally, we look at how search within your application can be made more intelligent and personalized for the user. At the end of this chapter, you should be able to add search to your application, make it intelligent, and personalize it for the user.

11.1. Search fundamentals

Given a query—a keyword or a phrase—search is the process of retrieving documents that are relevant to the query. The list of documents is generally returned in the order of how relevant they are to the query. You’re already familiar with term vectors; in essence, search is the process of creating a term-vector representation for the query and retrieving documents whose term vector is most similar to that of the query term vector. Of course, we want the results to return quickly, and to make our search service scalable so that it can handle multiple simultaneous queries.

Recall and precision are two commonly used metrics to quantify the quality of search results—this is similar to evaluating the quality of documents retrieved by a crawler, as discussed in chapter 6. Recall is the percentage of relevant documents that were returned, while precision is the percentage of documents that you found relevant. For example, if there are 10 relevant documents and a search result returned 8 results, 5 of which were relevant, then recall is 5/10 = 50%, while precision is 5/8 = 62.5%. Furthermore, we want to make our search intelligent by using additional contextual information, such as the user’s interests.

Manning has an excellent book on Lucene, Lucene in Action, by Gospodnetic and Hatcher. This book is a must read if you’re going to be doing any serious work with Lucene in your application.

In this section, we briefly introduce some of the key search concepts. We review the key Lucene classes that will be used and end the section by implementing an example that demonstrates the search process. We begin with a brief introduction reviewing the process involved in adding search to your application. This is followed by looking at some of the core Lucene classes and working through an example.

11.1.1. Search architecture

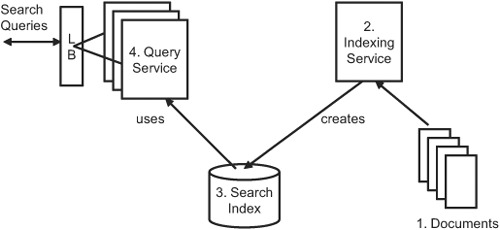

As shown in figure 11.1, there are four entities involved in adding search to your application: documents to be indexed, an asynchronous indexing service, the search index, and a synchronous query service.

Figure 11.1. The entities involved with adding search to your application

There are two main services that you need to build to add search to your application. First is an asynchronous indexing service, which is responsible for creating asearch index—a reverse index of terms with related documents. Depending on the size of your documents, it may take a long time to create a full index, and this process is typically done offline or asynchronously. Once a search index has been created, this service may update the index, either through periodic polling for document changes or by being notified of changes through an event notification. The indexing service is responsible for keeping the search index created up-to-date.

The second service is the query or search service. This service is responsible for taking a user query and converting it into an appropriate term-vector representation and retrieving relevant documents from the search index. This search service is state-less—it receives a query and returns a response back, without holding on to any state information—which makes it possible to have multiple instances of this service servicing client requests. The service can be collocated in the same JVM as your web application, or it may be distributed on remote machines. Having multiple instances of the stateless search services, front-ended with a load balancer and servicing requests over HTTP, is a common architecture used to deploy search services. In this architecture, as load increases, more instances of the service are added to the load balancer.

With that brief overview, let’s look at some of the core classes that are used for indexing and searching using Lucene.

11.1.2. Core Lucene classes

As shown in figure 11.2, IndexWriter is the main class for creating and maintaining a Lucene index. An IndexWriter uses a Directory object to create an index. It also uses an Analyzer (refer to section 8.1) to analyze documents. A Document is a set of fields with a name and a textual value. Each field should correspond to information that you’ll search against or display in the search results. Documents are atomic entities that are added to an index and retrieved from the index through search. Not all documents in an index need have the same set of fields. Also, the weight associated with each document or field for searching can be different, using a process known as boosting.

Figure 11.2. The key Lucene classes for creating and searching an index

The IndexReader class contains methods for deleting Document instances from the index. At any given time, only one thread, either IndexReader or IndexWriter, should modify an index. If you look at the source of the IndexWriter and IndexReader, you’ll find a number of synchronized checks that ensure that the same instance can be shared across multiple threads. An IndexReader is unaware of any changes to the index once it’s been opened; you may need to periodically reopen it to see any changes made after it was opened.

A QueryParser uses an Analyzer—this should be the same as the one used for indexing—to convert a user query into a Query object. An instance of a Searcher then uses this Query to search through the search index to return a Hits object. It’s safe to have multiple search queries—read-only operations—executed on an index in parallel, even while an index is being created or modified by a different thread.

The search index is accessed using the Directory object. The Hits object contains by composition a number of Hit objects. Each Hit object provides access to the underlying document. Figure 11.2 shows two types of the Searcher—the IndexSearcher for searching a single index and the MultiSearcher for searching through multiple indexes sequentially. ParallelMultiSearcher searches multiple indexes in parallel. Figure 11.2 also shows two kinds of Directory classes—the first, FSDirectory, is used for storing the index on the file system, while the second one, RAMDirectory, is used for creating an in-memory index. The in-memory index is useful for writing tests, whereas the index is created as a part of the test and then destroyed at the end of the test. Since RAMDirectory does everything in memory, it’s much faster than FSDirec-tory and can also be used for services that require fast performance, such as an auto-complete service.

Next, let’s put these core classes into action. We illustrate the basic process of indexing and searching by applying it to an example.

11.1.3. Basic indexing and searching via example

We build on our example from the previous chapters of retrieving blog entries from the blogosphere using Technorati. We first create an index of all the blog entries we’ve retrieved and then search through them using the Lucene APIs. If you haven’t done so, it’ll be worthwhile to review chapter 5 and section 9.1.2, which contains the implementation for BlogDataSetCreatorImpl. BlogDataSetCreatorImpl is used in this example to retrieve data from the blogosphere.

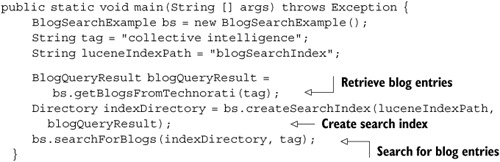

This example is split into three main parts and relates to the steps shown in figure 11.1:

1. Retrieving blog data from the blogosphere

2. Creating a Lucene index using the blog entries

3. Searching the Lucene index for certain phrases

We implement a class called BlogSearchExample for this section. The code for this class is split into three parts, one for each of the three parts. At this stage it’s helpful to look at listing 11.1, which shows the main method for the BlogSearchExample.

Listing 11.1. The main method for the BlogSearchExample

The main method consists of three main lines of code, corresponding to the three parts enumerated previously.

Retrieving Blog Entries From Technorati

Listing 11.2 shows the first part of the code for BlogSearchExample, which deals with retrieving blog entries from Technorati.

Listing 11.2. Retrieving blog entries from Technorati

package com.alag.ci.search.lucene;

import java.io.IOException;

import java.util.*;

import org.apache.lucene.analysis.Analyzer;

import org.apache.lucene.document.*;

import org.apache.lucene.index.IndexWriter;

import org.apache.lucene.queryParser.QueryParser;

import org.apache.lucene.search.*;

import org.apache.lucene.store.Directory;

import com.alag.ci.blog.dataset.impl.BlogDataSetCreatorImpl;

import com.alag.ci.blog.search.*;

import com.alag.ci.textanalysis.lucene.impl.*;

public class BlogSearchExample {

public BlogQueryResult getBlogsFromTechnorati(String tag)

throws BlogSearcherException {

return (new BlogDataSetCreatorImpl()).getBlogsFromTechnorati(tag);

}

To retrieve data from Technorati, we use BlogDataSetCreatorImpl, which we developed in section 9.1.2 and listing 9.6. The method getBlogsFromTechnorati returns a BlogQueryResult object using the passed-in tag. Next, let’s look at how this data is converted into a search index.

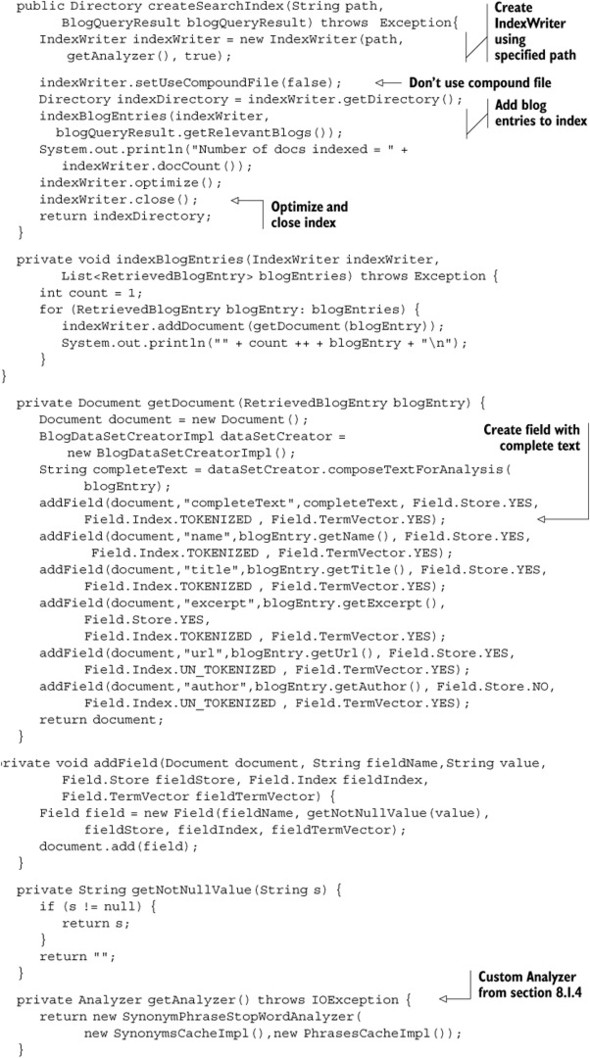

Creating a Search Index

Listing 11.3 shows the next part of the code for BlogSearchExample, which deals with creating a search index.

Listing 11.3. Creating a search index

We first create an instance of the IndexWriter using the specified directory path name and the Analyzer. The last parameter, true, specifies that it’s okay to create the index or overwrite the existing one. Passing in false would’ve appended to an existing index:

IndexWriter indexWriter = new IndexWriter(path, getAnalyzer(), true);

Note that we use the SynonymPhraseStopWordAnalyzer, which we developed in section 8.1.4. The following code

indexWriter.setUseCompoundFile(false);

implies that we don’t want to use compound files. If it had been turned on, it would signify that multiple files for each segment would be merged into a single file when a new segment is flushed. Refer to http://lucene.apache.org/java/docs/fileformats.html if you’re interested in learning more about the Lucene index format.

The following line of code

indexBlogEntries(indexWriter, blogQueryResult.getRelevantBlogs());

iterates over each blog entry, creates a document, and adds it to the index. The method getDocument() creates a Document object from a blog entry. For each blog entry, we first create a Field using the complete text representation for the blog entry:

String completeText = dataSetCreator.composeTextForAnalysis(

blogEntry);

To create the Field:

Field field = new Field(fieldName, getNotNullValue(value),

Field.Store.YES, fieldIndex, fieldTermVector);

We specify the field name, set a non-null value and specify whether the field is to be stored in the index and whether it’s to be tokenized, and whether we want to store the term vector for that field in the index. Storing a field implies that the content of the field will be literally stored in the index and can be retrieved later from search results. A field that isn’t stored is still indexed by Lucene. Note that we don’t tokenize the URL. Fields that are tokenized (Field.Index.TOKENIZED) can’t be used for sorting the results obtained by querying the index. So if you plan to use Lucene for sorting the results using a sort order other than relevance, you need to add a field that’s Field.Index.UN_TOKENIZED. Setting the option to Field.Index.NO implies that the value of the field won’t be indexed by Lucene. When the document.add() method is called multiple times with the same field name but different value objects, Lucene internally will concatenate all the values together for that particular field. As a rule of thumb, in most applications, you want to store all the parameters that you’ll show in your search results. This typically includes title, URL, and abstract; avoid having to make a database call to show each of the results in your search results page. Try to retrieve all the information that’s displayed from Lucene.

Once all the blog entries have been added to the index, we print out the number of entries added to the index using indexWriter.docCount(). Lastly, we optimize and close the index using

indexWriter.optimize();

indexWriter.close();

Listing 11.4 shows sample output from the code for one of the runs.

Listing 11.4. Sample output from indexing the blogs

1 Name: Wikinomics Url: http://204.15.36.163:8080/blog Title: Is Digg Making Us Dumber? Excerpt: If you started reading this post based on the title, you've already half proven the point I'm about to argue. Sensationalism combined with social med LastUpdateTime: Mon Feb 25 20:03:23 PST 2008 2 Name: Wikinomics Url: http://www.wikinomics.com/blog Title: Is Digg Making Us Dumber? Excerpt: If you started reading this post based on the title, you've already half proven the point I'm about LastUpdateTime: Tue Feb 26 06:19:03 PST 2008 3 Name: BSG Alliance - Next Generation Enterprises. On Demand. Url: http://www.bsgalliance.com Title: Is Digg Making Us Dumber? Excerpt: Citation - content was aggregated by Kalivo Listener from 3rd party: Credited Author: Naumi Haque So LastUpdateTime: Mon Feb 25 22:40:00 PST 2008 4 Name: Social Media Explorer Url: http://www.socialmediaexplorer.com Title: Exploring Social Media Measurement: Collective Intellect Excerpt: This entry in our ongoing exploration of social media measurement firms focuses on Collective Intel LastUpdateTime: Mon Feb 25 11:00:53 PST 2008 Author: Jason Falls .......... 10 Name: Et si l'on parlait Marketing Url: http://henrikaufman.typepad.com/et_si_lon_parlait_marketi Title: Imagination 3.0 Excerpt: En Octobre 2007, j'avais analysé l'excellent livre de mon ami Brice Auckenthaler : L'Imagination C LastUpdateTime: Mon Feb 25 23:22:20 PST 2008

That’s it! We’ve added all the blog entries to our index and we can now search the index.

Searching the Index

Searching the index using a query is fairly straightforward. Listing 11.5 shows the third part of the code for this example, which deals with searching the index.

Listing 11.5. Searching the index

We first create an instance of the IndexSearcher using the Directory that was passed in to the index. Alternatively, you can use the path to the index to create an instance of a Directory using the static method in FSDirectory:

Directory directory = FSDirectory.getDirectory(luceneIndexPath);

Next, we create an instance of the QueryParser using the same analyzer that we used for indexing. The first parameter in the QueryParser specifies the name of the default field to be used for searching. For this we specify the completeText field that we created during indexing. Alternatively, one could use MultiFieldQueryParser to search across multiple fields. Next, we create a Query object using the query string and the QueryParser. To search the index, we simply invoke the search method in the IndexSearcher:

Hits hits = indexSearcher.search(query);

The Hits object holds the ranked list of resulting documents. It has a method to return an Iterator over all the instances, along with retrieving a document based on the resulting index. You can also get the number of results returned using hits.length(). For each of the returned documents, we print out the title and excerpt fields using the get() method on the document. Note that in this example, we know that the number of returned blog entries is small. In general, you should iterate over only the hits that you need. Iterating over all hits may cause performance issues. If you need to iterate over many or all hits, you should use a HitCollector, as shown later in section 11.3.7.

The following code demonstrates how Lucene scored the document for the query:

Explanation explanation = indexSearcher.explain(weight, hit.getId());

We discuss this in more detail in section 11.3.1.

It is useful to look at listing 11.6, which shows sample output from running the example. Note that your output will be different based on when you run the exam-ple—it’s a function of whichever blog entries on collective intelligence have been created in the blogosphere around the time you run the program.

Listing 11.6. Sample output from our example

Number of docs indexed = 10

Number of results = 3 for collective intelligence

Collective Knowing Gates of the Future From the Middle I

recently wrote an article on collective intelligence that I will share h

0.8109757 = (MATCH) sum of:

0.35089532 = (MATCH) weight(completeText:collective in 7), product of:

0.5919065 = queryWeight(completeText:collective), product of:

1.9162908 = idf(docFreq=3)

0.30888134 = queryNorm

0.5928222 = (MATCH) fieldWeight(completeText:collective in 7),

product of:

1.4142135 = tf(termFreq(completeText:collective)=2)

1.9162908 = idf(docFreq=3)

0.21875 = fieldNorm(field=completeText, doc=7)

0.46008033 = (MATCH) weight(completeText:intelligence in 7), product of:

0.80600667 = queryWeight(completeText:intelligence), product of:

2.609438 = idf(docFreq=1)

0.30888134 = queryNorm

0.57081455 = (MATCH) fieldWeight(completeText:intelligence in 7),

product of:

1.0 = tf(termFreq(completeText:intelligence)=1)

2.609438 = idf(docFreq=1)

0.21875 = fieldNorm(field=completeText, doc=7)

Exploring Social Media Measurement: Collective Intellect Social Media

Explorer Jason Falls This entry in our ongoing exploration of

social media measurement firms focuses on Collective Intel

0.1503837 = (MATCH) product of:

0.3007674 = (MATCH) sum of:

0.3007674 = (MATCH) weight(completeText:collective in 3), product of:

0.5919065 = queryWeight(completeText:collective), product of:

1.9162908 = idf(docFreq=3)

0.30888134 = queryNorm

0.5081333 = (MATCH) fieldWeight(completeText:collective in 3),

product of:

1.4142135 = tf(termFreq(completeText:collective)=2)

1.9162908 = idf(docFreq=3)

0.1875 = fieldNorm(field=completeText, doc=3)

0.5 = coord(1/2)

Boites a idées et ingeniosité collective Le perfologue, le blog pro de

la performance et du techno management en entreprise. Alain Fernandez

Alain Fernandez Les boîte á idées de new génération Pour capter

l'ingéniosité collective, passez donc de la boîte á

0.1002558 = (MATCH) product of:

0.2005116 = (MATCH) sum of:

0.2005116 = (MATCH) weight(completeText:collective in 4), product of:

0.5919065 = queryWeight(completeText:collective), product of:

1.9162908 = idf(docFreq=3)

0.30888134 = queryNorm

0.33875555 = (MATCH) fieldWeight(completeText:collective in 4),

product of:

1.4142135 = tf(termFreq(completeText:collective)=2)

1.9162908 = idf(docFreq=3)

0.125 = fieldNorm(field=completeText, doc=4)

0.5 = coord(1/2)

As expected, 10 documents were retrieved from Technorati and indexed. One of them had collective intelligence appear in the retrieved text and was ranked the highest, while the other two contained the term collective.

This completes our overview and example of the basic Lucene classes. You should have a good understanding of what’s required to create a Lucene index and for searching the index. Next, let’s take a more detailed look at the process of indexing in Lucene.

11.2. Indexing with Lucene

During the indexing process, Lucene takes in Document objects composed of Fields. It analyzes the text associated with the Fields to extract terms. Lucene deals only with text. If you have documents in nontext format such as PDF or Microsoft Word, you need to convert it into plain text that Lucene can understand. A number of open source tool kits are available for this conversion; for example PDFBox is an open source library available for handling PDF documents.

In this section, we’take a deeper look at the indexing process. We begin with a brief introduction of the two Lucene index formats. This is followed by a review of the APIs related to maintaining the Lucene index, some coverage of adding incremental indexing to your application, ways to access the term vectors, and finally a note on optimizing the indexing process.

11.2.1. Understanding the index format

A Lucene index is an inverted text index, where each term is associated with documents in which the term appears. A Lucene index is composed of multiple segments. Each segment is a fully independent, searchable index. Indexes evolve when new documents are added to the index and when existing segments are merged together. Each document within a segment has a unique ID within that segment. The ID associated with a document in a segment may change as new segments are merged and deleted documents are removed. All files belonging to a segment have the same filename with different file extensions. When the compound file format is used, all the files are merged into a single file with a .CFS extension. Figure 11.3 shows the files created for our example in section 11.1.3 using a non-compound file structure and a compound file structure.

Figure 11.3. Non-compound and compound index files

Once an index has been created, chances are that you may need to modify the index. Let’s next look at how this is done.

11.2.2. Modifying the index

Document instances in an index can be deleted using the IndexReader class. If a document has been modified, you first need to delete the document and then add the new version of the document to the index. An IndexReader can be opened on a directory that has an IndexWriter opened already, but it can’t be used to delete documents from the index at that point.

There are two ways to delete documents from an index, as shown in listing 11.7.

Listing 11.7. Deleting documents using the IndexReader

Each document in the index has a unique ID associated with it. Unfortunately, these IDs can change as documents are added and deleted from the index and as segments are merged. For fast lookup, the IndexReader provides access to documents via their document number. There are four static methods that provide access to an IndexReader using the open command. In our example, we get an instance of the IndexReader using the Directory object. Alternatively, we could have used a File or String representation to the index directory.

IndexReader indexReader = IndexReader.open(indexDirectory);

To delete a document with a specific document number, we simply call the delete-Document method:

indexReader.deleteDocument(docIndexNum);

Note that at this stage, the document hasn’t been actually deleted from the index—it’s simply been marked for deletion. It’ll be deleted from the index when we close the index:

indexReader.close();

A more useful way of deleting entries from the index is to create a Field object within the document that contains a unique ID string for the document. As things change in your application, simply create a Term object with the appropriate ID and field name and use it to delete the appropriate document from the index. This is illustrated in the method deleteByTerm(). The IndexReader provides a convenient method, undeleteAll(), to undelete all documents that have been marked for deletion.

Opening and closing indexes for writing tends to be expensive, especially for large indexes. It’s more efficient to do all the modifications in a batch. Further, it’s more efficient to first delete all the required documents and then add new documents, as shown in listing 11.8.

Listing 11.8. Batch deletion and addition of documents

Note that an instance of IndexReader is used for deleting the documents, while an instance of IndexWriter is used for adding new Document instances.

Next, let’s look at how you can leverage this to keep your index up to date by incrementally updating your index.

11.2.3. Incremental indexing

Once an index has been created, it needs to be updated to reflect changes in the application. For example, if your application is leveraging user-generated content, the index needs to be updated with new content being added, modified, or deleted by the users. A simple approach some sites follow is to periodically—perhaps every few hours—re-create the complete index and update the search service with the new index. In this mode, the index, once created, is never modified. However, such an approach may be impractical if the requirement is that once a user generates new content, the user should be able to find the content shortly after addition. Furthermore, the amount of time taken to create a complete index may be too long to make this approach feasible. This is where incremental indexing comes into play. You may still want to re-create the complete index periodically, perhaps over a longer period of time.

As shown in figure 11.4, one of the simplest deployment architectures for search is to have multiple instances of the search service, each having its own index instance. These search services never update the index themselves—they access the index in read-only mode. An external indexing service creates the index and then propagates the changes to the search service instances. Periodically, the external indexing service batches all the changes that need to be propagated to the index and incrementally updates the index. On completion, it then propagates the updated index to the search instances, which periodically create a new version of the IndexSearcher. One downside of such an approach is the amount of data that needs to be propagated between the machines, especially for very large indexes.

Figure 11.4. A simple deployment architecture where each search instance has its own copy of a read-only index. An external service creates and updates the index, pushing the changes periodically to the servers.

Note that in the absence of an external index updater, each of the search service instances would have to do work to update their indexes, in essence duplicating the work.

Figure 11.5 shows an alternate architecture in which multiple search instances are accessing and modifying the same index. Let’s assume that we’re building a service, IndexUpdaterService, that’s responsible for updating the search index. For incremental indexing, the first thing we need to ensure is that at any given time, there’s only one instance of an IndexReader modifying the index.

Figure 11.5. Multiple search instances sharing the same index

First, we need to ensure that there’s only one instance of IndexUpdaterService in a JVM—perhaps by using the Singleton pattern or using a Spring bean instance. Next, if multiple JVMs are accessing the same index, you’ll need to implement a global-lock system to ensure that only one instance is active at any given time. We discuss two solutions for this, first using an implementation that involves the database, and second using the Lock class available in Lucene. The second approach involves less code, but doesn’t guard against JVM crashes. When a JVM crashes, the lock is left in an acquired state and you have to manually release or delete the lock file.

The first approach uses a timer-based mechanism that periodically invokes the IndexUpdaterService and uses a row in a database table to create a lock. The Index-UpdaterService first checks to see whether any other service is currently updating the index. If no services are updating the index—if there’s no active row in the database table—it inserts a row and sets its state to be active. This service now has a lease on updating the index for a period of time. This service would then process all the changes—up to a maximum number that can be processed in the time frame of the lease—that have to be made to the index since the last update. Once it’s done, it sets the state to inactive in the database, allowing other service instances to then do an update. To avoid JVM crashes, there’s also a timeout associated with the active state for a service.

The second approach is similar, but uses the file-based locking provided by Lucene. When using FSDirectory, lock files are created in the directory specified by the system property org.apache.lucene.lockdir if it’s set; otherwise the files are created in the computer’s temporary directory (the directory specified by the java.io.tmpdir system directory). When multiple JVM instances are accessing the same index directory, you need to explicitly set the lock directory so that the same lock file is seen by all instances.

There are two kinds of locks: write locks and commit locks. Write locks are used whenever the index needs to be modified, and tend to be held for longer periods of time than commit locks. The IndexWriter holds on to the write lock when it’s instantiated and releases it only when it’s closed. The IndexReader obtains a write lock for three operations: deleting documents, undeleting documents, and changing the normalization factor for a field. Commit locks are used whenever segments are to be merged or committed. A file called segments names all of the other files in an index. An IndexReader obtains a commit lock before it reads the segments file. IndexReader keeps the lock until all the other files in the index have been read. The IndexWriter also obtains the commit lock when it has to write the segments file. It keeps the lock until it deletes obsolete index files. Commit locks are accessed more often than write locks, but for smaller durations, as they’re obtained only when files are opened or deleted and the small segments file is read or written.

Listing 11.9 illustrates the use of the isLocked() method in the IndexReader to check whether the index is currently locked.

Listing 11.9. Adding code to check whether the index is locked

public void illustrateLockingCode(Directory indexDirectory,

List<Term> deletionTerms,

List<Document> addDocuments) throws Exception {

if (!IndexReader.isLocked(indexDirectory)) {

IndexReader indexReader = IndexReader.open(indexDirectory);

//do work

} else {

//wait

}

}

Another alternative is to use an application package such as Solr (see section 11.4.2), which takes care of a lot of these issues. Having looked at how to incrementally update the index, next let’s look at how we can access the term frequency vector using Lucene.

11.2.4. Accessing the term frequency vector

You can access the term vectors associated with each of the fields using the IndexReader. Note that when creating the Field object as shown in listing 11.3, you need to set the third argument in the static method for creating a field to Field.TermVector.YES. Listing 11.10 shows some sample code for accessing the term frequency vector.

Listing 11.10. Sample code to access the term frequency vector for a field

public void illustrateTermFreqVector(Directory indexDirectory)

throws Exception {

IndexReader indexReader = IndexReader.open(indexDirectory);

for (int i = 0; i < indexReader.numDocs(); i ++) {

System.out.println("Blog " + i);

TermFreqVector termFreqVector =

indexReader.getTermFreqVector(i, "completeText");

String [] terms = termFreqVector.getTerms();

int [] freq = termFreqVector.getTermFrequencies();

for (int j =0 ; j < terms.length; j ++) {

System.out.println(terms[j] + " " + freq[j]);

}

}

}

The following code

TermFreqVector termFreqVector = indexReader.getTermFreqVector(i, "completeText");

passes in the index number for a document along with the name of the field for which the term frequency vector is required. The IndexReader supports another method for returning all the term frequencies for a document:

TermFreqVector[] getTermFreqVectors(int docNumber)

Finally, let’s look at some ways to manage performance during the indexing process.

11.2.5. Optimizing indexing performance

Methods to improve[2] the time required by Lucene to create its index can be broken down into the following three categories:

- Memory settings

- Architecture for indexing

- Other ways to improve performance

Optimizing Memory Settings

When a document is added to an index (addDocument in IndexWriter), Lucene first stores the document in its memory and then periodically flushes the documents to disk and merges the segment. setMaxBufferedDocs controls how often the documents in the memory are flushed to the disk, while setMergeFactor sets how often index segments are merged together. Both these parameters are by default set to 10. You can control this number by invoking setMergeFactor() and setMaxBufferedDocs() in the IndexWriter. More RAM is used for larger values of mergeFactor. Making this number large helps improve the indexing time, but slows down searching, since searching over an unoptimized index is slower than searching an optimized index. Making this value too large may also slow down the indexing process, since merging more indexes at once may require more frequent access to the disk. As a rule of thumb, large values for this parameter (greater than 10) are recommended for batch indexing and smaller values (less than 10) are recommended during incremental indexing.

Another alternative to flushing the memory based on the number of documents added to the index is to flush based on the amount of memory being used by Lucene. For indexing, you want to use as much RAM as you can afford—with the caveat that it doesn’t help beyond a certain point.[3]Listing 11.11 illustrates the process of flushing the Lucene index based pm the amount of RAM used.

3 See discussion http://www.gossamer-threads.com/lists/lucene/java-dev/51041.

Listing 11.11. Illustrate flushing by RAM

It’s important to first set the number of maximum documents that will be used before merging to a large number, to prevent the writer from flushing based on the document count.[4] Next, the RAM size is checked after each document addition. When the amount of memory used exceeds the maximum RAM for Lucene, invoking the flush() method flushes the changes to disk.

4 See discussion http://issues.apache.org/jira/browse/LUCENE-845.

To avoid the problem of very large files causing the indexing to run out of memory, Lucene by default indexes only the first 10,000 terms for a document. You can change this by setting setMaxFieldLength in the IndexWriter. Documents with large values for this parameter will require more memory.

Indexing Architecture

Here are some tips for optimizing indexing performance:

- In memory indexing, using RAMDirectory is much faster than disk indexing using FSDirectory. To take advantage of this, create a RAMDirectory-based index and periodically flush the index to disk using the FSDirectory index’s addIndexes() method.

- To speed up the process of adding documents to the index, it may be helpful to use multiple threads to add documents. This approach is especially helpful when it may take time to create a Document instance and when using hardware that can effectively parallelize multiple threads. Note that a part of the addDocument() method is synchronized in the IndexWriter.

- For indexes with large number of documents, you can split the index into n instances created on separate machines and then merge the indexes into one index using the addIndexesNoOptimize method.

- Use a local file system rather than a remote file system.

Other Ways to Optimize

Here are some way to optimize indexing time:

- Version 2.3 of Lucene exposes methods that allow you to set the value of a Field, enabling it to be reused across documents. It’s efficient to reuse Document and Field instances. To do this, create a single Document instance. Add to it multiple Field instances, but reuse the Field instances across multiple document additions. You obviously can’t reuse the same Field instance within a document until the document has been added to the index, but you can reuse Field instances across documents.

- Make the analyzer reuse Token instances, thus avoiding unnecessary object creation.

- In Lucene 2.3, a Token can represent its text as a character array, avoiding the creation of String instances. By using the char [] API along with reusing Token instances, the creation of new objects can be avoided, which helps improve performance.

- Select the right analyzer for the kind of text being indexed. For example, indexing time increases if you use a stemmer, such as PorterStemmer, or if the analyzer is sophisticated enough to detect phrases or applies additional heuristics.

So far, we’ve looked in detail at how to create an index using Lucene. Next, we take a more detailed look at searching through this index.

11.3. Searching with Lucene

In section 11.3, we worked through a simple example that demonstrated how the Lucene index can be searched using a QueryParser. In this section, we take a more detailed look at searching.

In this section, we look at how Lucene does its scoring, the various query parsers available, how to incorporate sorting, querying on multiple fields, filtering results, searching across multiple indexes, using a HitCollector, and optimizing search performance.

11.3.1. Understanding Lucene scoring

At the heart of Lucene scoring is the vector-space model representation of text (see section 2.2.4). There is a term-vector representation associated with each field of a document. You may recall from our discussions in sections 2.2.4 and 8.2 that the weight associated with each term in the term vector is the product of two terms—the term frequency in the document and the inverse document frequency associated with the term across all documents. For comparison purposes, we also normalize the term vector so that shorter documents aren’t penalized. Lucene uses a similar approach, where in addition to the two terms, there’s a third term based on how the document and field have been boosted—we call this the boost value. Within Lucene, it’s possible to boost the value associated with a field and a document; see the setBoost() method in Field and Document. By default, the boost value associated with the field and document is 1.0. The final field boost value used by Lucene is the product of the boost values for the field and the document. Boosting fields and documents is a useful method for emphasizing certain documents or fields, depending on the business logic for your domain. For example, you may want to emphasis documents that are newer than historical ones, or documents written by users who have a higher authority (more well-known) within your application.

Given a query, which itself is converted into a normalized term vector, documents that are found to be most similar using the dot product of the vectors are returned. Lucene further multiplies the dot product for a document with a term that’s proportional to the number of matching terms in the document. For example, for a three-term query, this factor will be larger for a document that has two of the queried terms than a document that has one of the query terms.

More formally, using the nomenclature used by Lucene, the Similarity[5] class outlines the score that’s computed between a document d for a given query q:

5http://lucene.zones.apache.org:8080/hudson/job/Lucene-Nightly/javadoc/org/apache/lucene/search/Similarity.html

![]()

Note that the summation is in essence taking a dot product. Table 11.1 contains an explanation of the various terms used in scoring.

Table 11.1. Explanation of terms used for computing the relevance of a query to a document

|

Term |

Description |

|---|---|

| Score(q,d) | Relevance of query q to a document d |

| tf( t in d) | Term frequency of term t in the document |

| Idf(t) | Inverse document frequency of term t across all documents |

| Boost(t field in d) | Boost for the field—product of field and document boost factors |

| Norm(t,d) | Normalization factor for term t in the document |

| Coord(q,d) | Score factor based on the number of query terms found in document d |

| Norm(q) | Normalization factor for the query |

The DefaultSimilarity class provides a default implementation for Lucene’s similarity computation, as shown in Figure 11.6. You can extend this class if you want to override the computation of any of the terms.

Figure 11.6. The default implementation for the Similarity class

The IndexSearcher class has a method that returns an Explanation object for a Weight and a particular document. The Weight object is created from a Query(query.weight(Searcher)). The Explanation object contains details about the scoring; listing 11.12 shows a sample explanation provided for the query term collective intelligence, using the code as in listing 11.4 for searching through blog entries.

Listing 11.12. Sample explanation of Lucene scoring

Link permanente a Collective Intelligence SocialKnowledge

Collective Intelligence Pubblicato da Rosario Sica su

Novembre 18, 2007 [IMG David Thorburn]Segna

0.64706594 = (MATCH) sum of:

0.24803483 = (MATCH) weight(completeText:collective in 9), product of:

0.6191303 = queryWeight(completeText:collective), product of:

1.5108256 = idf(docFreq=5)

0.409796 = queryNorm

0.40061814 = (MATCH) fieldWeight(completeText:collective in 9),

product of:

1.4142135 = tf(termFreq(completeText:collective)=2)

1.5108256 = idf(docFreq=5)

0.1875 = fieldNorm(field=completeText, doc=9)

0.3990311 = (MATCH) weight(completeText:intelligence in 9), product of:

0.7852883 = queryWeight(completeText:intelligence), product of:

1.9162908 = idf(docFreq=3)

0.409796 = queryNorm

0.5081333 = (MATCH) fieldWeight(completeText:intelligence in 9),

product of:

1.4142135 = tf(termFreq(completeText:intelligence)=2)

1.9162908 = idf(docFreq=3)

0.1875 = fieldNorm(field=completeText, doc=9)

Using the code in listing 11.4, first a Weight instance is created:

Weight weight = query.weight(indexSearcher);

Next, while iterating over all result sets, an Explanation object is created:

Iterator iterator = hits.iterator();

while (iterator.hasNext()) {

Hit hit = (Hit) iterator.next();

Document document = hit.getDocument();

System.out.println(document.get("completeText"));

Explanation explanation = indexSearcher.explain(weight,

hit.getId());

System.out.println(explanation.toString());

}

Next, let’s look at how the query object is composed in Lucene.

11.3.2. Querying Lucene

In listing 11.4, we illustrated the use of a QueryParser to create a Query instance by parsing the query string. Lucene provides a family of Query classes, as shown in figure 11.7, which allow you to construct a Query instance based on the requirements.

Figure 11.7. Query classes available in Lucene

Table 11.2 contains a brief description for queries shown in figure 11.7. Next, let’s work through an example that combines a few of these queries, to illustrate how they can be used.

Table 11.2. Description of the query classes

Let’s extend our example in section 11.1.3, where we wanted to search for blog entries that have the phrase collective intelligence as well as a term that begins with web*. Listing 11.13 shows the code for this query.

Listing 11.13. Example code showing the use of various Query classes

We first create an instance of the PhraseQuery and add the terms collective and intelligence. Each phrase query has a parameter called slop. Slop by default is set to 0, which enables only exact phrase matches. When the slop value is greater than 0, the phrase query works like a within or near operator. The slop is the number of moves required to convert the terms of interest into the query term. For example, if we’re interested in the query collective intelligence and we come across a phrase collective xxxx intelligence, the slop associated with this phrase match is 1, since one term —xxx—needs to be moved. The slop associated with the phrase intelligence collective is 2, since the term intelligence needs to be moved two positions to the right. Lucene matches exact matches higher than sloppy matches.

For the preceding Boolean query, invoking the toString() method prints out the following Lucene query:

+completeText:“collective intelligence”~1 +completeText:web*

Next, let’s look at how search results can be sorted using Lucene.

11.3.3. Sorting search results

In a typical search application, the user types in a query and the application returns a list of items sorted in order of relevance to the query. There may be a requirement in the application to return the result set sorted in a different order. For example, the requirement may be to show the top 100 results sorted by the name of the author, or the date it was created. One naïve way of implementing this feature would be to query Lucene, retrieve all the results, and then sort the results in memory. There are a couple of problems with this approach, both related to performance and scalability. First, we need to retrieve all the results into memory and sort them. Retrieving all items consumes valuable time and computing resources. The second problem is that all the items are retrieved even though only a subset of the results will eventually be shown in the application. For example, the second page of results may just need to show items 11 to 20 in the result list. Fortunately, Lucene has built-in support for sorting the results sets, which we briefly review in this section.

The Sort class in Lucene encapsulates the sort criteria. Searcher has a number of overloaded search methods that, in addition to the query, also accept Sort as an input, and as we see in section 11.3.5, a Filter for filtering results. The Sort class has two static constants: Sort.INDEXORDER, which sorts the results based on the index order, and Sort.RELEVANCE, which sorts the results based on relevance to the query. Fields used for sorting must contain a single term. The term value indicates the document’s relative position in the sort order. The field needs to be indexed, but not tokenized, and there’s no need to store the field. Lucene supports three data types for sorting fields: String, Integer, and Float. Integers and Floats are sorted from low to high. The sort order can be reversed by creating the Sort instance using either the constructor:

public Sort(String field, boolean reverse)

or the setSort() method:

setSort(String field, boolean reverse)

The Sort object is thread safe and can be reused by using the setSort() method.

In listing 11.3, we created a field called “author”. Let’s use this field for sorting the results:

addField(document,"author",blogEntry.getAuthor(), Field.Store.NO, Field.Index.UN_TOKENIZED , Field.TermVector.YES);

Listing 11.14 shows the implementation for the sorting example using the "author" field.

Listing 11.14. Sorting example

In the case of two documents that have the same values in the Sort field, the document number is used for displaying the items. You can also create a multiple field Sort by using the SortField class. For example, the following code first sorts by the author field, in reverse alphabetical order, followed by document relevance to the query, and lastly by using the document index number:

SortField [] sortFields = {new SortField("author", false),

SortField.FIELD_SCORE, SortField.FIELD_DOC};

Sort multiFieldSort = new Sort(sortFields);

So far we’ve been dealing with searching across a single field. Let’s look next at how we can query across multiple fields.

11.3.4. Querying on multiple fields

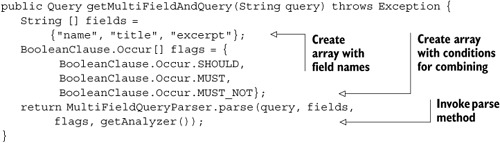

In listing 11.3, we created a “completeText” field that concatenated text from the title and excerpt fields of the blog entries. In this section, we illustrate how you can search across multiple fields using the MultiFieldQueryParser, which extends FieldQueryParser as shown in figure 11.2.

Let’s continue with our example from section 11.1.3. We’re interested in searching across three fields—"name", "title", and "excerpt". For this, we first create a String array:

String [] fields = {"name", "title", "excerpt"};

Next, a new instance of the MultiFieldQueryParser is created using the constructor:

new MultiFieldQueryParser(fields, getAnalyzer());

Lucene will search for terms using the OR operator—the query needs to match any one of the three fields. Next, let’s look at how we can query multiple fields using different matching conditions. Listing 11.15 illustrates how a multifield query can be composed, specifying that the match should occur in the “name” field, and the “title” field, and shouldn’t occur in the “excerpt” field.

Listing 11.15. MultiFieldQueryParser example

This example constructs the following query for Lucene:

(name:query) +(title:query) -(excerpt:query)

Next, let’s look at how we can use Filters for filtering out results using Lucene.

11.3.5. Filtering

Lots of times, you may need to constrain your search to a subset of available documents. For example, in an SaaS application, where there are multiple domains or companies supported by the same software and hardware instance, you need to search through documents only within the domain of the user. As shown in figure 11.8, there are five Filter classes available in Lucene.

Figure 11.8. Filters available in Lucene

Table 11.3 contains a brief description of the various filters that are available in Lucene.

Table 11.3. Description of the filter classes

|

Class |

Description |

|---|---|

| Filter | Abstract base class for all filters. Provides a mechanism to restrict the search to a subset of the index. |

| CachingWrapperFilter | Wraps another filter’s results and caches it. The intent is to allow filters to simply filter and then add caching using this filter. |

| QueryFilter | Constrains search results to only those that match the required query. It also caches the result so that searches on the same index using this filter are much faster. |

| RangeFilter | Restricts the search results to a range of values. This is similar to a RangeQuery. |

| PrefixFilter | Restricts the search results to those that match the prefix. This is similar to a PrefixQuery. |

Next, let’s look at some code that illustrates how to create a filter and invoke the search method using the filter. Listing 11.16 shows the code for creating a RangeFilter using the "modifiedDate" field. Note that the date the document was modified is converted into a String representation using yyyymmdd format.

Listing 11.16. Filtering the results

The constructor for a RangeFilter takes five parameters. First is the name of the field to which the filter has to be applied. Next are the lower and the upper term for the range, followed by two Boolean flags indicating whether to include the lower and upper values. One of the advantages of using Filters is the caching of the results. It’s easy enough to wrap the RangeFilter instance using the CachingWrapperFilter. As long as the same IndexReader or IndexSearcher instance is used, Lucene will use the cached results after the first query is made, which populates the cache.

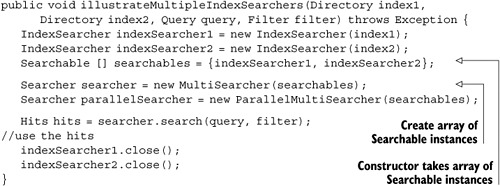

11.3.6. Searching multiple indexes

In figure 11.2, you may have noticed two Searcher classes, MultiSearcher and ParallelMultiSearcher. These classes are useful if you need to search across multiple indexes. It’s common practice to partition your Lucene indexes, once they become large. Both MultiSearcher and ParallelMultiSearcher, which extends Multi-Searcher, can search across multiple index instances and present search results combined together as if the results were obtained from searching a single index. Listing 11.17 shows the code for creating and searching using the MultiSearcher and ParallelMultiSearcher classes.

Listing 11.17. Searching across multiple instances

ParallelMultiSearcher parallelizes the search and filter operations across each index by using a separate thread for each Searchable.

Next, let’s look at how we can efficiently iterate through a large number of documents.

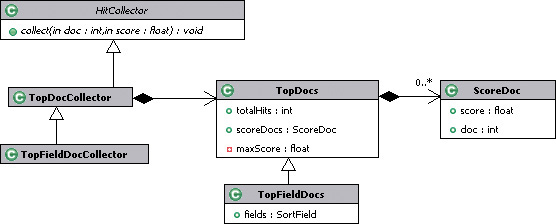

11.3.7. Using a HitCollector

So far in this chapter, we’ve been using Hits to iterate over the search results. Hits has been optimized for a specific use case. You should never use Hits for anything other than retrieving a page of results, or around 10–30 instances. Hits caches documents, normalizes the scores (between 0 and 1), and stores IDs associated with the document using the Hit class. If you retrieve a Document from Hit past the first 100 results, a new search will be issued by Lucene to grab double the required Hit instances. This process is repeated every time the Hit instance goes beyond the existing cache. If you need to iterate over all the results, a HitCollector is a better choice. Note that the scores passed to the HitCollector aren’t normalized.

In this section, we briefly review some of the HitCollector classes available in Lucene and shown in figure 11.9. This will be followed by writing our own HitCollector for the blog searching example we introduced in section 11.1.

Figure 11.9. HitCollector-related classes

Table 11.4 contains a brief description for the list of classes related to a HitCollector. HitCollector is an abstract base class that has one abstract method that each HitCollector object needs to implement:

public abstract void collect(int doc,float score)

Table 11.4. Description of the HitCollector-related classes

|

Class |

Description |

|---|---|

| HitCollector | Base abstract class for all HitCollector classes. It has one primary abstract method: collect(). |

| TopDocCollector | HitCollector implementation that collects the specified number of top documents. It has a method that returns the TopDocs. |

| TopDocs | Contains the number of results returned and an array of ScoreDoc, one for each returned document. |

| ScoreDoc | Bean class containing the document number and its score. |

| TopFieldDocCollector | HitCollector that returns the top sorted documents, returning them as TopFieldDocs. |

| TopFieldDocs | Extends TopDocs. Also contains the list of fields that were used for the sort. |

In a search, this method is called once for every matching document, with the following arguments: its document number and raw score. Note that this method is called in an inner search loop. For optimal performance, a HitCollector shouldn’t call Searcher.doc(int) or IndexReader.document(int) on every document number encountered. The TopDocCollector contains TopDocs, which has methods to return the total number of hits along with an array of ScoreDoc instances. Each ScoreDoc has the document number, along with the unnormalized score for the document. TopFieldDocs extends TopDocs and contains the list of fields that were used for sorting.

Next, let’s look at a simple example to demonstrate how the HitCollector-related APIs can be used. This is shown in listing 11.18.

Listing 11.18. Example using TopDocCollector

In this example, we first create an instance of the TopDocCollector, specifying the maximum number of documents that need to be collected. We invoke a different variant of the search method for the Searcher, which takes in a HitCollector. We then iterate over the results, retrieving the Document instance using the ScoreDoc.

Next, it’s helpful to write a custom HitCollector for our example. Listing 11.19 contains the code for RetrievedBlogHitCollector, which is useful for collecting RetrievedBlogEntry instances obtained from searching.

Listing 11.19. Implementing a custom HitCollector

In our example, we create an instance of RetrievedBlogEntryImpl and populate it with the attributes that will be displayed in the UI. The list of resulting RetrievedBlog-Entry instances can be obtained by invoking the getBlogEntries() method.

Before we end this section, it’s useful to look at some tips for improving search performance.

11.3.8. Optimizing search performance

In section 11.2.5, we briefly reviewed some ways to make Lucene indexing faster. In this section, we briefly review some ways to make searching using Lucene faster[6]:

6 Refer to http://wiki.apache.org/lucene-java/ImproveSearchingSpeed.

- If the amount of available memory exceeds the amount of memory required to hold the Lucene index in memory, the complete index can be read into memory using the RAMDirectory. This will allow the SearchIndexer to search through an in-memory index, which is much faster than the index being stored on the disk. This may be particularly useful for creating auto-complete services—services that provide a list of options based on a few characters typed by a user.

- Use adequate RAM and avoid remote file systems.

- Share a single instance of the IndexSearcher. Avoid reopening the Index-Searcher, which can be slow for large indexes.

- Optimized indexes have only one segment to search and can be much faster than a multi-segment index. If the index doesn’t change much once it’s created, it’s worthwhile to optimize the index once it’s built. However, if the index is being constantly updated, optimizing will likely be too costly, and you should decrease mergeFactor instead. Optimizing indexes is expensive.

- Don’t iterate over more hits than necessary. Don’t retrieve term vectors and fields for documents that won’t be shown on the results page.

At this stage, you should have a good understanding of using Lucene, the process of creating an index, searching using Lucene, sorting and filtering in Lucene, and using a HitCollector. With this background, we’re ready to look at some ways to make searching using Lucene intelligent. Before that, let’s briefly review some tools and frameworks that may be helpful.

11.4. Useful tools and frameworks

Given the wide popularity and use of Lucene, a number of tools and frameworks have been built. In chapter 6, we used Nutch, which is an open source crawler built using Lucene. In section 6.3.4, we also discussed Apache Hadoop, a framework to run applications that need to process large datasets using commodity hardware in a distributed platform. In this section, we briefly look at Luke, a useful tool for looking at the Lucene index, and three other frameworks related to Lucene that you should be aware of: Solr, Compass, and Hibernate search. Based on your application and need, you may find it useful to use one of these frameworks.

11.4.1. Luke

Luke is an open source toolkit for browsing and modifying the Lucene index. It was created by Andrzej Bialecki and is extensible using plug-ins and scripting. Using Luke, you can get an overview of the documents in the index; you can browse through documents and see details about their fields and term vectors. There’s also an interface where you can search and see the results of the search query. You can start Luke using the Java Web Start link from the Luke home page at http://www.getopt.org/luke/. Figure 11.10 shows a screenshot of Luke in the document browse mode. You can browse through the various documents and look at their fields and associated terms and term vector. If you’re experimenting with different analyzers or building your own analyzer, it’s helpful to look at the contents of the created index using Luke.

Figure 11.10. Screenshot of Luke in the Documents tab

11.4.2. Solr

Solr is an open source enterprise search server built using Lucene that provides simple XML/HTTP and JSON APIs for access. Solr needs a Java servlet container, such as Tomcat. It provides features such as hit highlighting, caching, replication, and a web administration interface.

Solr began as an in-house project at CNET Networks and was contributed to the Apache Foundation as a subproject of Lucene in early 2006. In January 2007, Solr graduated from an incubation period to an official Apache project. Even though it’s a relatively new project, it’s being used extensively by a number of high-traffic sites.[7]Figure 11.11 shows a screenshot of the Solr admin page.

Figure 11.11. Screenshot of the Solr admin page

11.4.3. Compass

Compass is a Java search engine framework that was built on top of Lucene. It provides a high level of abstraction. It integrates with both Hibernate and Spring, and allows you to declaratively map your object domain model to the underlying search engine and synchronize changes with the data source. Compass also provides a Lucene JDBC, allowing Lucene to store the search index in the database. Compass is available using the Apache 2.0 license. Read more about Compass at http://www.opensymphony.com/compass/.

11.4.4. Hibernate search

Hibernate search solves the problem of mapping a complex object-oriented domain model to a full-text search-based index. Hibernate search aims to synchronize changes between the domain objects and the index transparently, and returns objects in response to a search query. Hibernate is an open source project distributed under the GNU Lesser General Public License. Read more about Hibernate at http://www.hibernate.org/410.html.

In this section, we’ve looked at tools built on top of Lucene. For most applications, using a framework such as Solr should be adequate, and should expedite adding search capabilities. Now that we have a good understanding of the basics of search, let’s look at how we can make our search intelligent.

11.5. Approaches to intelligent search

One of the aims of this chapter is to make search more intelligent. In this section, we focus on techniques that leverage some of the clustering, classification, and predictive models that we developed in part 2 of the book. We also look at some of the current approaches being used by search companies. There are a lot of companies innovating within the search space.[8] While it’s impossible to cover them all, we discuss a few of the well-known ones.

In this section, we cover six main approaches to making search more intelligent:

- Augmenting the document by creating new fields using one or more of the following: clustering, classification, and regression models

- Clustering the results from a search query to determine clusters of higher-level concepts

- Using contextual and user information to boost the search results toward a particular term vector

- Creating personal search engines, which search through a subset of sites, where the list of sites is provided by a community of users; and using social networking, where users can tag sites, and the search engine blocks out irrelevant sites and selects sites selected by other users

- Linguistic-based search, where the level of words and their meanings is used

- Searching through data and looking for relevant correlations

For most of them, we also briefly look at how you could apply the same concept in your application.

11.5.1. Augmenting search with classifiers and predictors

Consider a typical application that uses user-generated comment (UGC). The UGC could be in many forms; for example, it could be questions and answers asked by users, images, articles or videos uploaded and shared by the user, or tagged bookmarks created by the user. In most applications, content can be classified into one or more categories. For example, one possible classification for this book’s content could be tagging, data collection, web crawling, machine learning, algorithms, search, and so on. Note that these classifications need not be mutually exclusive—content can belong to multiple categories. For most applications, it’s either too expensive or just not possible to manually classify all the content. Most applications like to provide a “narrow by” feature to the search results. For example, you may want to provide a general search feature and then allow the user to subfilter the results based on a subset of classification topics that she‘s interested in.

One way to build such functionality is to build a classifier that predicts whether a given piece of content belongs to a particular category. Here is the recipe for adding this functionality:

- Create a classifier for each of the categories. Given a document, each classifier predicts whether the document belongs to that category.

- During indexing, add a field, classificationField, to the Lucene Document, which contains the list of applicable classifiers for that document.

- During search, create a Filter that narrows the search to appropriate terms in the classificationField.

Predictive models can be used in a manner similar to classifiers.

An example of using a classification model to categorize content and use it in search is Kosmix[9]: Kosmix, which aims at building a home page for every topic, uses a categorization engine that automatically categorizes web sites. Figure 11.12 shows a screenshot of the home page for collective intelligence generated by Kosmix.

Figure 11.12. Screenshot of the home page for collective intelligence at Kosmix

11.5.2. Clustering search results

The typical search user rarely goes beyond the second or third page of results and prefers to rephrase the search query based on the initial results. Clustering results, so as to provide categories of concepts gathered from analyzing the search results, is an alternative approach to displaying search results. Figure 11.13 shows the results of clustering using Carrot2[10] clustering for the query collective intelligence. You can see the higher-level concepts discovered by clustering the results. The user can then navigate to dig deeper into concepts of interest. Clusty[11] is a well-known search engine that applies this principle. Clusty carries out a meta-search across multiple search engines and then clusters the results.

Figure 11.13. Clustering search results using Carrot2 clustering

Carrot2 is an open source engine that automatically clusters search results. Integration with Lucene results is fairly straightforward.[12] Carrot2 has been successfully used in a number of commercial applications.

12http://carrot2.svn.sourceforge.net/viewvc/carrot2/trunk/carrot2/applications/carrot2-demo-api-exam-ple/src/org/carrot2/apiexample/LuceneExample.java?view=markup

11.5.3. Personalizing results for the user

The main motivation behind this approach is to use any available contextual information to modify the search query to make the search more relevant. The contextual information could be in the form of a term-vector representation for the user’s interests or profile. Or a click on a tag might modify the search query to add additional contextual information. An example should help you understand the concept better. Let’s say that a user makes a general query computers on a site that sells computers. Now, if there is a profile associated with the user that has information on the kind of products that the user tends to buy or look at—perhaps whether the user enjoys expensive products or a particular brand—this additional information can be used to modify and sort the information that’s retrieved.

11.5.4. Community-based search

Community-based search engines allow users to create custom search engines by specifying a set of web sites. These sites are either emphasized or are the only web sites searched. This approach is useful for creating vertically focused search engines. Google custom search (http://www.google.com/coop/), Eurekster (http://www.eurekster.com/), and Rollyo (http://rollyo.com/) are a few of the companies that follow this approach and allow users to create custom search engines. Figure 11.14 shows a screenshot of a personalized search engine that I created on Google using the URLs that I obtained running the focused crawler we developed in chapter 6.

Figure 11.14. Screenshot of a personalized search engine on collective intelligence developed using Google Custom Search

One way of applying this concept within your application is to allow your users to tag content within the application. In essence, each tag categorizes the content. You can allow users to create custom search engines within your application by allowing them to combine sets of tags. Hence, when a search is carried out within a custom search engine, only content that has one or more of the required tags is considered for search results.

11.5.5. Linguistic-based search

Natural language–based search engines, such as Hakia (http://www.hakia.com/), Powerset (http://www.powerset.com/), and Lexee (http://www.lexxe.com/), aim to go beyond simple text matching by trying to get the content of the query. They look at the syntactic relationships and try to retrieve pages that are similar based on analyzing their content.

11.5.6. Data search

On February 29, 2008, Bloomberg.com reported that George Church, a professor of genetics at Harvard Medical School, plans to spend $1 billion to create a database for finding new drugs by correlating each person’s personal health history to DNA-related information. Church, whose research led to the first direct genomic sequencing method and also helped initiate the Human Genome Project, is backed by Google and OrbiMed Advisors, LLC. Church plans to decode the DNA of 100,000 people in the world’s biggest gene sequencing project.

The entire human genome has more than 3 billion DNA base pairs. Humans have 24 unique chromosomes and an estimated 20,000–25,000 unique genes. A gene is a portion of the genomic sequence that encodes proteins—building blocks of cells and tissues. Variations in genes and other parts of the DNA have been linked to various types of diseases. DNA chips or microarrays enable researchers to generate large amounts of DNA-related data. Computational biology or bioinformatics is an active area of research that deals with deriving intelligence from biological data. Companies such as 23andme.com, navigenics.com, and decodeme.com provide a service by which consumers’ DNA is converted into single nucleotide polymorphisms (SNP) data, with the promise that it can be used to calculate the levels of risks associated with various diseases.

These are just some examples of the growing trends in the life sciences area. Data is being generated at a fast pace within this field. So far in this chapter, we’ve mainly concentrated on text-based search. However, searching through large amounts of experimental data, where you normalize the data and use actual experimental values from the data along with any meta-text or associated annotations to discover new relationships, is a form of data-based search. Such search engines typically also leverage an ontology and complex biological relationships to guide their searches. One such company that I’m associated with is NextBio.[13] At NextBio, we leverage all publicly available life sciences–related data, along with user-contributed data, to provide a platform for life scientists to discover new relationships, perhaps between genes, diseases, and treatments. Going back to the original example of Church’s plan to decode the DNA of 100,000 people, I hope that all that data will be publicly available in the future for search engines to use and help discover new drugs for diseases.

13www.nextbio.com. Disclaimer: I’m currently the VP of engineering at NextBio.

Figure 11.15 shows a screenshot from NextBio for the gene TP53. Note that the search engine has returned lists of diseases, tissues, and treatments that may be related to this gene.

Figure 11.15. Screenshot from NextBio showing the Gene TP53, along with inferences from analyzing the data

In this section, we’ve looked at six current trends in the area of making search more intelligent. It is impossible to discuss all the innovation happening in the large number of new search engines being built. You may want to look at a couple of additional upcoming search engines: Cuil,[14] which claims to have a larger index than Google, and Searchme,[15] which has an innovative way of displaying search results. Search is a multi-billion-dollar business, so expect more innovation in this area in the years to come.

11.6. Summary

Search is the process of retrieving relevant results in response to a query. The process of searching consists of first creating an inverted index of terms and then searching through the inverted index. The vector-space model and the term-vector representation of content are the basis for retrieving relevant documents in response to a search query.

Lucene provides two main classes, FSDirectory and RAMDirectory, for creating an index. Content is added to the index using a Document instance. Each Document instance consists of Field instances, each of which has a name and a String value. Field objects can be stored in the index for future retrieval, tokenized for search, or untokenized for sorting, and can have an associated term vector stored with them. The same analyzer needs to be used for both indexing and searching.

Searches within Lucene are carried out using an instance of a Searcher and a Query object. Query instances are created using either a QueryParser or by instantiating appropriate Query instances. Hits, which is a container for results from a query, contains access to the resulting documents. It’s more appropriate to use a HitCollector when you need to traverse through a large number of result documents. Lucene provides extensive support for sorting and filtering the results.

Approaches to make search more intelligent include using classification and prediction algorithms to classify content, clustering results to present concepts, creating personal search engines, leveraging user tagging information to filter results, and using natural language processing to determine concepts to aid search.

Now that we have a good understanding of search, in the next chapter, we look at how we can build a recommendation engine using both collaborative and content-based analysis.

11.7. Resources

Apache Lucene Index File Format. http://lucene.apache.org/java/docs/fileformats.html

Apache Lucene Scoring. http://lucene.apache.org/java/docs/scoring.html

“The Best of Web 2.0 Searching and Search Engine.” http://www.allthingsweb2.com/mtree/SEARCH_2.0/

Carrot and Lucene. http://project.carrot2.org/faq.html#lucene-integration

Carrot Clustering. http://project.carrot2.org/

Compass. http://www.opensymphony.com/compass/content/about.html

Delecretaz, Bertrand. “Solr: Indexing XML with Lucene and REST.” xml.com, August 2006. http://www.xml.com/pub/a/2006/08/09/solr-indexing-xml-with-lucene-andrest.html

Ezzy, Ebharim. Search 2.0 Vs Traditional Search. 2006. http://www.readwriteweb.com/archives/search_20_vs_tr.php

Fleisher, Peter. “Google’s search policy puts the user in charge.” Financial Times, May 2007. http://www.ft.com/cms/s/2/560c6a06-0a63-11dc-93ae-000b5df10621.html

Google Custom Search. http://www.google.com/coop/

Hatcher, Otis Gospodnetic and Erik. Lucene in Action. 2004. Manning Publications.

Hibernate Search Apache Lucene Integration. http://www.hibernate.org/hib_docs/search/reference/en/html/index.html

Hibernate Search. http://www.hibernate.org/410.html

Hotchkiss, Gord. “The Pros & Cons Of Personalized Search.” Search Engine Land, March 2007. http://searchengineland.com/070309-081324.php

Hunter, David J., Muin J. Khoury, and Jeffrey M. Drazen. “Letting the genome out of the bottle—will we get our wish?” New England Journal of Medicine. 2008 Jan 10;358(2):105-7 http://content.nejm.org/cgi/content/full/358/2/105

Improve Indexing Speed. Lucene Wiki. http://wiki.apache.org/lucene-java/ImproveIndexingSpeed

Lauerman, John. “Google Backs Harvard Scientist’s 100,000-Genome Quest.” February 29, 2008. http://www.bloomberg.com/apps/news?pid=newsarchive&sid=abC4lpqJ0TZs

Lucene FAQ. http://wiki.apache.org/lucene-java/LuceneFAQ

Lucene Resources. http://wiki.apache.org/lucene-java/Resources

Luke. http://www.getopt.org/luke/

Newcomb, Kevin. “A Look at the Next Generation of Search?” February, 2007. http://searchenginewatch.com/showPage.html?page=3624837

Nielsen online. “Nielsen Announces February US Search Share Rankings.” March 2--8. http://www.nielsen-netratings.com/pr/pr_080326.pdf

Owens, Steven J. Lucene Tutorial. http://www.darksleep.com/lucene/

Rich Il’s Conference Trip. http://ils501.blogspot.com/

Smart, John Ferguson. Integrate advanced search functionalities into your apps. Java-World.com. 2006. http://www.javaworld.com/javaworld/jw-09-2006/jw-0925-lucene.html

Solr. http://lucene.apache.org/solr/

“Updating an index.” http://wiki.apache.org/lucene-java/UpdatingAnIndex