Chapter 1

The Value Proposition for MDM and Big Data

Abstract

This chapter gives a definition of master data management (MDM) and describes how it generates value for organizations. It also provides an overview of Big Data and the challenges it brings to MDM.

Keywords

Master data; master data management; MDM; Big Data; reference data management; RDM

Definition and Components of MDM

Master Data as a Category of Data

Modern information systems use four broad categories of data: master data, transaction data, metadata, and reference data. Master data are data held by an organization that describe the entities that are both independent of and fundamental to the organization’s operations. In some sense, master data are the “nouns” in the grammar of data and information. They describe the persons, places, and things that are critical to the operation of an organization, such as its customers, products, employees, materials, suppliers, services, shareholders, facilities, equipment, and rules and regulations. The determination of exactly what is considered master data depends on the viewpoint of the organization.

If master data are the nouns of data and information, then transaction data can be thought of as the “verbs.” They describe the actions that take place in the day-to-day operation of the organization, such as the sale of a product in a business or the admission of a patient to a hospital. Transactions relate master data in a meaningful way. For example, a credit card transaction relates two entities that are represented by master data. The first is the issuing bank’s credit card account, identified by the credit card number, where the master data contain the information the issuing bank requires about that specific account. The second is the accepting bank’s merchant account, identified by the merchant number, where the master data contain the information the accepting bank requires about that specific merchant.

Master data management (MDM) and reference data management (RDM) systems are both systems of record (SOR). An SOR is “a system that is charged with keeping the most complete or trustworthy representation of a set of entities” (Sebastian-Coleman, 2013). The records in an SOR are sometimes called “golden records” or “certified records” because they provide a single point of reference for a particular type of information. In the context of MDM, the objective is to provide a single point of reference for each entity under management: only one information structure and one identifier for each entity. In the credit card example above, each such entity would be a credit card account.

Metadata are simply data about data. Metadata are critical to understanding the meaning of both master and transactional data. They provide the definitions, specifications, and other descriptive information about the operational data. Data standards, data definitions, data requirements, data quality information, data provenance, and business rules are all forms of metadata.

Reference data share characteristics with both master data and metadata. Reference data are standard, agreed-upon codes that help to make transactional data interoperable within an organization and sometimes between collaborating organizations. Reference data, like master data, should have only one system of record. Although reference data are important, they are not necessarily associated with real-world entities in the same way as master data. RDM is intended to standardize the codes used across the enterprise to promote data interoperability.

Reference codes may be internally developed, such as standard department or building codes, or may be adopted from external standards, such as standard postal codes and abbreviations for use in addresses. Reference data are often used in defining metadata. For example, the field “BuildingLocation” in (or referenced by) an employee master record may require that the value be one of a standard set of codes (system of reference) for buildings as established by the organization. The policies and procedures for RDM are similar to those for MDM.
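
To make the “BuildingLocation” rule concrete, here is a minimal Python sketch of a reference-data check. The code table `BUILDING_CODES`, its values, and the function name are hypothetical; in practice the codes would be read from the organization’s RDM system of record rather than hard-coded.

```python
# Hypothetical reference table: the organization's standard building codes.
BUILDING_CODES = {
    "ADM": "Administration Building",
    "ENG": "Engineering Hall",
    "LIB": "Main Library",
}


def is_valid_building_location(employee: dict) -> bool:
    """Metadata rule: BuildingLocation must be one of the standard building codes."""
    return employee.get("BuildingLocation") in BUILDING_CODES


print(is_valid_building_location({"name": "Mary Doe", "BuildingLocation": "ENG"}))       # True
print(is_valid_building_location({"name": "John Roe", "BuildingLocation": "Eng. Hall"}))  # False
```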

Master Data Management

In a more formal context, MDM seems to suffer from lengthy definitions. Loshin (2009) defines master data management as “a collection of best data management practices that orchestrate key stakeholders, participants, and business clients in incorporating the business applications, information management methods, and data management tools to implement the policies, procedures, services, and infrastructure to support the capture, integration, and shared use of accurate, timely, consistent, and complete master data.” Berson and Dubov (2011) define MDM as the “framework of processes and technologies aimed at creating and maintaining an authoritative, reliable, sustainable, accurate, and secure environment that represents a single and holistic version of the truth for master data and its relationships…”

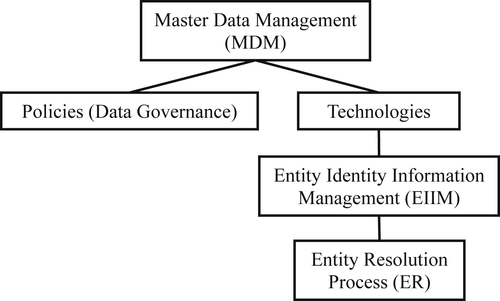

These definitions highlight two major components of MDM, as shown in Figure 1.1. One component comprises the policies that represent the data governance aspect of MDM, while the other includes the technologies that support MDM. Policies define the roles and responsibilities in the MDM process. For example, if a company introduces a new product, the policies define who is responsible for creating the new entry in the master product registry, the standards for creating the product identifier, which persons or departments should be notified, and which other data systems should be updated. Regulatory compliance and the privacy and security of information are also important policy issues (Decker, Liu, Talburt, Wang, & Wu, 2013).

The technology component of MDM can be further divided into two major subcomponents, the entity resolution (ER) process and entity identity information management (EIIM).

Entity Resolution

The base technology is entity resolution (ER), which is sometimes called record linking, data matching, or de-duplication. ER is the process of determining when two information system references to a real-world entity are referring to the same, or to different, entities (Talburt, 2011). ER represents the “sorting out” process when there are multiple sources of information that are referring to the same set of entities. For example, the same patient may be admitted to a hospital at different times or through different departments such as inpatient and outpatient admissions. ER is the process of comparing the admission information for each encounter and deciding which admission records are for the same patient and which ones are for different patients.
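
As a minimal sketch of the pairwise decision ER makes, the Python below compares two admission records using a single deterministic rule. The rule itself (normalized name and date of birth must agree), the field names, and the function names are assumptions for illustration; production ER systems typically combine several rules and approximate comparators.

```python
def normalize_name(name: str) -> str:
    """Uppercase, drop punctuation, and collapse runs of whitespace."""
    kept = "".join(ch for ch in name.upper() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())


def same_patient(rec_a: dict, rec_b: dict) -> bool:
    """Hypothetical match rule: equivalent if normalized names and dates of birth agree."""
    return (normalize_name(rec_a["name"]) == normalize_name(rec_b["name"])
            and rec_a["dob"] == rec_b["dob"])


inpatient = {"name": "Mary  Doe", "dob": "1980-04-12", "dept": "inpatient"}
outpatient = {"name": "mary doe.", "dob": "1980-04-12", "dept": "outpatient"}
other = {"name": "Mary Doe", "dob": "1975-09-30", "dept": "outpatient"}

print(same_patient(inpatient, outpatient))  # True: same patient, two encounters
print(same_patient(inpatient, other))       # False: same name, different patient
```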

ER has long been recognized as a key data cleansing process for removing duplicate records in database systems (Naumann & Herschel, 2010) and promoting data and information quality in general (Talburt, 2013). It is also essential in the two-step process of entity-based data integration. The first step is to use ER to determine if two records are referencing the same entity. This step relies on comparing the identity information in the two records. Only after it has been determined that the records carry information for the same entity can the second step in the process be executed, in which other information in the records is merged and reconciled.

Most de-duplication applications start with an ER process that uses a set of matching rules to link together into clusters those records determined to be duplicates (equivalent references). This is followed by a process to select one best example, called a survivor record, from each cluster of equivalent records. After the survivor record is selected, the presumed duplicate records in the cluster are discarded, and only the surviving record from each cluster passes into the next process. In record de-duplication, ER directly addresses the data quality problem of redundant and duplicate data prior to data integration. In this role, ER is fundamentally a data cleansing tool (Herzog, Scheuren & Winkler, 2007). However, ER is increasingly being used in a broader context for two important reasons.
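
The merge-purge pattern described above can be sketched in a few lines of Python, assuming a caller-supplied pairwise match function. Union-find is used here to take the transitive closure of the pairwise links, and the survivorship rule (keep the record with the most non-empty fields) is a hypothetical example rather than a standard.

```python
from itertools import combinations


def cluster_records(records, is_match):
    """Link each pair judged equivalent, then take the transitive closure with
    union-find so each cluster holds the references to one presumed entity.
    Comparing all pairs is O(n^2): fine for illustration, not for large files."""
    parent = list(range(len(records)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    for i, j in combinations(range(len(records)), 2):
        if is_match(records[i], records[j]):
            parent[find(i)] = find(j)

    clusters = {}
    for i, rec in enumerate(records):
        clusters.setdefault(find(i), []).append(rec)
    return list(clusters.values())


def select_survivor(cluster):
    """Hypothetical survivorship rule: keep the most complete record."""
    return max(cluster, key=lambda rec: sum(1 for value in rec.values() if value))


def merge_purge(records, is_match):
    """Keep one survivor per cluster; the other equivalent records are discarded."""
    return [select_survivor(c) for c in cluster_records(records, is_match)]


records = [
    {"name": "John Smith", "email": "js@example.com", "phone": ""},
    {"name": "J. Smith", "email": "js@example.com", "phone": "555-0100"},
    {"name": "Ann Lee", "email": "al@example.com", "phone": ""},
]
survivors = merge_purge(records, lambda a, b: a["email"] == b["email"])
print([s["name"] for s in survivors])  # ['J. Smith', 'Ann Lee']
```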

The first reason is that information quality has matured as a discipline. As part of that maturation, many organizations are beginning to apply a product model to their information management as a way of achieving and sustaining high levels of information quality over time (Wang, 1998). This is evidenced by several important developments of recent years, including the recognition of Sustaining Information Quality as one of the six domains in the framework of information quality developed by the International Association for Information and Data Quality (Yonke, Walenta & Talburt, 2012) as the basis for the Information Quality Certified Professional (IQCP) credential.

The second reason is the relatively recent approval of the ISO 8000-110:2009 standard for master data quality, prompted by the growing interest of organizations in adopting and investing in master data management (MDM). The ISO 8000 standard is discussed in more detail in Chapter 11.

Entity Identity Information Management

Entity Identity Information Management (EIIM) is the collection and management of identity information with the goal of sustaining entity identity integrity over time (Zhou & Talburt, 2011a). Entity identity integrity requires that each entity must be represented in the system one, and only one, time, and distinct entities must have distinct representations in the system (Maydanchik, 2007). Entity identity integrity is a fundamental requirement for MDM systems.
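
The two conditions of entity identity integrity can be checked mechanically when the true entity behind each reference is known, as it is for a verified test set. That is an assumption: in production the truth is usually unknown. This minimal Python sketch flags both failure modes, one entity split across identifiers and two entities sharing one identifier.

```python
from collections import defaultdict


def has_identity_integrity(assignments):
    """assignments: (true_entity, system_identifier) pairs, one per reference.

    Integrity holds when each entity is represented exactly once (all its
    references share one identifier) and distinct entities have distinct
    identifiers (no identifier is shared by two entities)."""
    ids_per_entity = defaultdict(set)
    entities_per_id = defaultdict(set)
    for entity, identifier in assignments:
        ids_per_entity[entity].add(identifier)
        entities_per_id[identifier].add(entity)
    return (all(len(ids) == 1 for ids in ids_per_entity.values())
            and all(len(ents) == 1 for ents in entities_per_id.values()))


print(has_identity_integrity([("Mary", "E1"), ("Mary", "E1"), ("John", "E2")]))  # True
print(has_identity_integrity([("Mary", "E1"), ("Mary", "E3"), ("John", "E2")]))  # False: Mary split
print(has_identity_integrity([("Mary", "E1"), ("John", "E1")]))                  # False: merged
```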

EIIM is an ongoing process that combines ER and data structures representing the identity of an entity into specific operational configurations (EIIM configurations). When these configurations are all executed together, they work in concert to maintain the entity identity integrity of master data over time. EIIM is not limited to MDM. It can be applied to other types of systems and data as diverse as RDM systems, referent tracking systems (Chen et al., 2013a), and social media (Mahata & Talburt, 2014).

Identity information is a collection of attribute-value pairs that describe the characteristics of the entity – characteristics that serve to distinguish one entity from another. For example, a student name attribute with a value such as “Mary Doe” would be identity information. However, because there may be other students with the same name, additional identity information such as date-of-birth or home address may be required to fully disambiguate one student from another.
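
Treating identity information as attribute-value pairs, the following Python sketch shows why a name alone can be ambiguous and how adding date of birth narrows the candidates. The student records and the `candidates` helper are hypothetical.

```python
# Hypothetical student records; each dict is a set of attribute-value pairs.
students = [
    {"id": "S1", "name": "Mary Doe", "dob": "2001-02-14", "address": "12 Oak St"},
    {"id": "S2", "name": "Mary Doe", "dob": "1999-11-03", "address": "4 Elm Ave"},
    {"id": "S3", "name": "John Roe", "dob": "2000-06-21", "address": "9 Pine Rd"},
]


def candidates(query: dict) -> list:
    """Return ids of students whose identity information agrees with every pair in query."""
    return [s["id"] for s in students
            if all(s.get(attr) == value for attr, value in query.items())]


print(candidates({"name": "Mary Doe"}))                       # ['S1', 'S2']: ambiguous
print(candidates({"name": "Mary Doe", "dob": "1999-11-03"}))  # ['S2']: disambiguated
```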

Although ER is necessary for effective MDM, it is not, in itself, sufficient to manage the life cycle of identity information. EIIM is an extension of ER in two dimensions, knowledge management and time. The knowledge management aspect of EIIM relates to the need to create, store, and maintain identity information. The knowledge structure created to represent a master data object is called an entity identity structure (EIS).

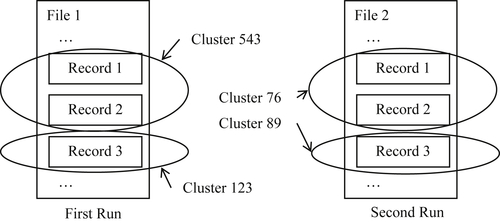

The time aspect of EIIM is to ensure that an entity under management in the MDM system is consistently labeled with the same, unique identifier from process to process. This is only possible through an EIS that stores the identity information of the entity along with its identifier so both are available to future processes. Persistent entity identifiers are not inherently part of ER. At any given point in time, the only goal of an ER process is to correctly classify a set of entity references into clusters where all of the references in a given cluster reference the same entity. If these clusters are labeled, then the cluster label can serve as the identifier of the entity. Without also storing and carrying forward the identity information, the cluster identifiers assigned in a future process may be different.

The problem of changes in labeling by ER processes is illustrated in Figure 1.2. It shows three records, Records 1, 2, and 3, where Records 1 and 2 are equivalent references to one entity and Record 3 is a reference to a different entity. In the first ER run, Records 1, 2, and 3 are in a file with other records. In the second run, the same Records 1, 2, and 3 occur in context with a different set of records, or perhaps the same records that were in Run 1, but simply in a different order. In both runs the ER process consistently classifies Records 1 and 2 as equivalent and places Record 3 in a cluster by itself. The problem from an MDM standpoint is that the ER processes are not required to consistently label these clusters. In the first run, the cluster comprising Records 1 and 2 is identified as Cluster 543 whereas in the second run the same cluster is identified as Cluster 76.
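
The labeling problem in Figure 1.2 can be reproduced with a toy ER run. In this hypothetical Python sketch, references with identical name and date of birth form a cluster, and each cluster is labeled with a run-local sequence number. Both runs classify Records 1, 2, and 3 the same way, yet the labels differ because the surrounding records and their order differ.

```python
def run_er(references, match_key):
    """Toy ER run: references with equal match keys form one cluster, and
    clusters are labeled with run-local sequence numbers in order of first
    appearance, so labels depend on which records are present and their order."""
    label_of_key, assignment = {}, {}
    for ref_id, ref in references:
        key = match_key(ref)
        if key not in label_of_key:
            label_of_key[key] = len(label_of_key) + 1
        assignment[ref_id] = label_of_key[key]
    return assignment


def name_and_dob(ref):
    return (ref["name"], ref["dob"])


r1 = ("Record 1", {"name": "MARY DOE", "dob": "1980-04-12"})
r2 = ("Record 2", {"name": "MARY DOE", "dob": "1980-04-12"})
r3 = ("Record 3", {"name": "MARY DOE", "dob": "1975-09-30"})
others = [("Record 4", {"name": "ANN LEE", "dob": "1990-01-01"}),
          ("Record 5", {"name": "BOB KAY", "dob": "1985-05-05"})]

run1 = run_er(others + [r1, r2, r3], name_and_dob)
run2 = run_er([r3, r2, r1], name_and_dob)
print(run1["Record 1"], run1["Record 2"], run1["Record 3"])  # 3 3 4
print(run2["Record 1"], run2["Record 2"], run2["Record 3"])  # 2 2 1
```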

ER that is used only to classify records into groups or clusters representing the same entity is sometimes called a “merge-purge” operation. In a merge-purge process the objective is simply to eliminate duplicate records. Here the term “duplicate” does not mean that the records are identical, but that they are duplicate representations of the same entity. To avoid the confusion in the use of the term duplicate, the term “equivalent” is preferred (Talburt, 2011) – i.e. records referencing the same entity are said to be equivalent.

The designation of equivalent records also avoids the confusion arising from use of the term “matching” records. Records referencing the same entity do not necessarily have matching information. For example, two records for the same customer may have different names and different addresses. At the same time, it can be true that matching records do not reference the same entity. This can occur when important discriminating information is missing, such as a generational suffix or age. For example, the records for a John Doe, Jr. and a John Doe, Sr. may be deemed matching records if one or both records omit the Jr. or Sr. generational suffix of the name field.

Unfortunately, many authors use the term “matching” for both of these concepts, i.e. to mean that the records are similar and reference the same entity. This can often be confusing for the reader. Reference matching and reference equivalence are different concepts, and should be described by different terms.

The ability to assign each cluster the same identifier when an ER process is repeated at a later time requires that identity information be carried forward from process to process. The carrying forward of identity information is accomplished by persisting (storing) the EIS that represents the entity. The storage and management of identity information and the persistence of entity identifiers is the added value that EIIM brings to ER.
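
Here is a minimal sketch of how persisting identity information makes identifiers stable. The hypothetical `IdentityStore` below maps each identity to an identifier and is kept between runs, standing in for stored EISs. For simplicity it assumes exact-match identity keys; a real EIS would also accumulate new identity information as equivalent references arrive.

```python
import itertools


class IdentityStore:
    """Hypothetical, minimal stand-in for a persisted set of EISs.
    Each identifier keeps the identity information seen for its entity, and
    the store is carried forward from one ER run to the next."""

    def __init__(self):
        self.eis = {}                      # identifier -> set of identity keys
        self._index = {}                   # identity key -> identifier
        self._serial = itertools.count(1)  # identifiers are never reused

    def resolve(self, identity_key):
        """Return the persistent identifier for this identity, creating an EIS if new."""
        if identity_key not in self._index:
            identifier = f"E{next(self._serial)}"
            self._index[identity_key] = identifier
            self.eis[identifier] = {identity_key}
        return self._index[identity_key]


store = IdentityStore()
refs = [("Record 1", ("MARY DOE", "1980-04-12")),
        ("Record 2", ("MARY DOE", "1980-04-12")),
        ("Record 3", ("MARY DOE", "1975-09-30"))]
run1 = {ref_id: store.resolve(k) for ref_id, k in refs}
run2 = {ref_id: store.resolve(k) for ref_id, k in reversed(refs)}  # later run, new order
print(run1 == run2)  # True: same identifiers regardless of order
```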

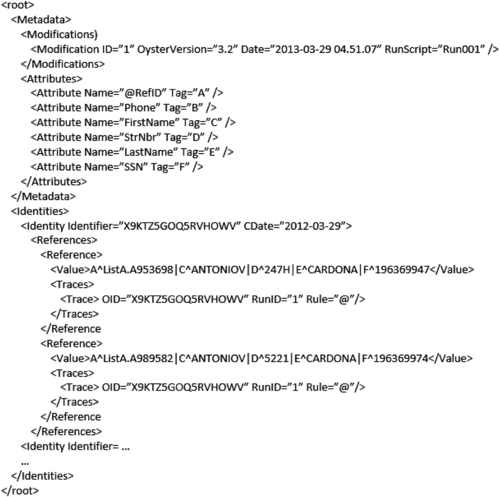

A distinguishing feature of the EIIM model is the entity identity structure (EIS), a data structure that represents the identity of a specific entity and persists from process to process. In the model presented here, the EIS is an explicitly defined structure that exists and is maintained independently of the references being processed by the system. Although all ER systems address the issue of identity representation in some way, it is often done implicitly rather than being an explicit component of the system. Figure 1.3 shows the persistent (output) form of an EIS as implemented in the open source ER system called OYSTER (Talburt & Zhou, 2013; Zhou, Talburt, Su & Yin, 2010).

During processing, the OYSTER EIS exists as in-memory Java objects. However, at the end of processing, the EIS is written as XML documents that reflect the hierarchical structure of the memory objects. The XML format also serves as a way to serialize the EIS objects so that they can be reloaded into memory at the start of a later ER process.
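
The serialize-and-reload cycle can be sketched with Python’s standard `xml.etree.ElementTree` module. The element and attribute names below (`IdentityStructures`, `Identity`, `Reference`, `Attribute`) are hypothetical and do not reproduce OYSTER’s actual XML layout shown in Figure 1.3. The sketch only shows that a hierarchical in-memory structure can be written out and read back unchanged.

```python
import xml.etree.ElementTree as ET


def eis_to_xml(eis: dict) -> str:
    """Serialize {identifier: [reference, ...]}, where each reference is a dict of
    attribute-value pairs. Element names are hypothetical, not OYSTER's schema."""
    root = ET.Element("IdentityStructures")
    for identifier, references in eis.items():
        identity_el = ET.SubElement(root, "Identity", Identifier=identifier)
        for reference in references:
            ref_el = ET.SubElement(identity_el, "Reference")
            for attr, value in reference.items():
                ET.SubElement(ref_el, "Attribute", Name=attr, Value=value)
    return ET.tostring(root, encoding="unicode")


def xml_to_eis(text: str) -> dict:
    """Reload the structures written by eis_to_xml at the start of a later run."""
    eis = {}
    for identity_el in ET.fromstring(text).findall("Identity"):
        eis[identity_el.get("Identifier")] = [
            {a.get("Name"): a.get("Value") for a in ref_el.findall("Attribute")}
            for ref_el in identity_el.findall("Reference")
        ]
    return eis


eis = {"E1": [{"name": "MARY DOE", "dob": "1980-04-12"},
              {"name": "MARY A DOE", "dob": "1980-04-12"}]}
xml_text = eis_to_xml(eis)
print(xml_to_eis(xml_text) == eis)  # True: the round trip preserves the structure
```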

The Business Case for MDM

Aside from the technologies and policies that support MDM, why is it important? And why are so many organizations investing in it? There are several reasons.

Customer Satisfaction and Entity-Based Data Integration

MDM has its roots in the customer relationship management (CRM) industry. The CRM movement started at about the same time as the data warehousing (DW) movement in the 1980s. The primary goal of CRM was to understand all of the interactions that a customer has with the organization so that the organization could improve the customer’s experience and consequently increase customer satisfaction. The business motivation for CRM was that higher customer satisfaction would result in more customer interactions (sales), higher customer retention rates, and a lower customer “churn rate,” and that more satisfied customers would bring in additional customers through social networking and referrals.

If there is one number most businesses understand, it is the difference between the higher cost of acquiring a new customer and the lower cost of retaining an existing customer. Depending upon the type of business, small increases in customer retention can have a dramatic effect on net revenues. Loyal customers often provide a higher profit margin because they tend to continue purchasing from the same company without the need for extensive marketing and advertising. In highly competitive markets such as airlines and grocery retailers, loyalty programs have a high priority.

The underpinning of CRM is a technology called customer data integration (CDI) (Dyché & Levy, 2006), which is basically MDM for customer entities. Certainly customer information and product information qualify as master data for any organization selling a product. Typically both customers and products are under MDM in these organizations. CDI technology is the EIIM for CRM. CDI enables the business to recognize the interactions with the same customer across different sales channels and over time by using the principles of EIIM.

CDI is only one example of a broader class of data management processes affecting data integration (Doan, Halevy & Ives, 2012). For most applications, data integration is a two-step process called entity-based data integration (Talburt & Hashemi, 2008). When integrating entity information from multiple sources, the first step is to determine whether the information is for the same entity. Once it has been determined the information is for the same entity, the second step is to reconcile possibly conflicting or incomplete information associated with a particular entity coming from different sources (Holland & Talburt, 2008, 2010a; Zhou, Kooshesh & Talburt, 2012). MDM plays a critical role in successful entity-based data integration by providing an EIIM process that consistently identifies references to the same entity.
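
The two steps of entity-based data integration can be sketched in Python, assuming step 1 has already been done by an ER/EIIM process that stamps each record with an `entity_id`. The reconciliation rule here (take the first non-empty value in a source-priority order) is only one hypothetical policy among many possible ones, and the field and source names are illustrative.

```python
def reconcile(records, source_priority):
    """Step 2: merge records already resolved to one entity. For each attribute,
    keep the first non-empty value in source-priority order (a hypothetical rule)."""
    merged = {}
    for rec in sorted(records, key=lambda r: source_priority.index(r["source"])):
        for attr, value in rec.items():
            if attr != "source" and value and attr not in merged:
                merged[attr] = value
    return merged


def integrate(records, source_priority):
    """Step 1 is assumed done: ER/EIIM has stamped each record with an entity id."""
    groups = {}
    for rec in records:
        groups.setdefault(rec["entity_id"], []).append(rec)
    return {eid: reconcile(group, source_priority) for eid, group in groups.items()}


records = [
    {"entity_id": "E1", "source": "web", "name": "Mary Doe", "phone": "", "email": "mdoe@example.com"},
    {"entity_id": "E1", "source": "crm", "name": "Mary A. Doe", "phone": "555-0100", "email": ""},
]
print(integrate(records, ["crm", "web"]))
# {'E1': {'entity_id': 'E1', 'name': 'Mary A. Doe', 'phone': '555-0100', 'email': 'mdoe@example.com'}}
```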

Entity-based data integration has a broad range of applications in areas such as law enforcement (Nelson & Talburt, 2008), education (Nelson & Talburt, 2011; Penning & Talburt, 2012), and healthcare (Christen, 2008; Lawley, 2010).

Better Service

Many organizations’ basic value proposition is not primarily based on money. For example, in healthcare, although there is clearly a monetary component, the primary objective is to improve the quality of people’s lives through better drugs and medical treatments. This also holds true for many government and nonprofit agencies where the primary mission is service to a particular constituency. MDM systems bring value to these organizations as well.

As another example, law enforcement has a mission to protect and serve the public. Traditionally, criminal and law enforcement information has been fragmented across many agencies and legal jurisdictions at the city, county, district, state, and federal levels. However, law enforcement as a whole is starting to take advantage of MDM. The tragic events of September 11, 2001 brought into focus the need to “connect the dots” across these agencies and jurisdictions in terms of linking records referencing the same persons of interest and the same events. This is also a good example of Big Data, because a single federal law enforcement agency may be managing information on billions of entities. The primary entities are persons of interest identified through demographic characteristics and biometric data such as fingerprints and DNA profiles. In addition, these agencies also manage many nonperson entities such as locations, events, motor vehicles, boats, aircraft, phones, and other electronic devices. Linking law enforcement information for the same entities across jurisdictions, together with the ability to derive additional information from patterns of linkage, has made criminal investigation both more efficient and more effective.

Reducing the Cost of Poor Data Quality

Each year United States businesses lose billions of dollars due to poor data quality (Redman, 1998). Of the top ten root conditions of data quality problems (Lee, Pipino, Funk, & Wang, 2006), the number one cause listed is “multiple source of the same information produces different values for this information.” Quite often this problem is due to missing or ineffective MDM practices. Without maintaining a system of record that includes every master entity with a unique and persistent identifier, data quality problems will inevitably arise.

For example, if the same product is given a different identifier in different sales transactions, then sales reports summarized by product will be incorrect and misleading. Inventory counts and inventory projections will be off. These problems can in turn lead to the loss of orders and customers, unnecessary inventory purchases, miscalculated sales commissions, and many other types of losses to the company. Following the principle of Taguchi’s Loss Function (Taguchi, 2005), the cost of poor data quality includes not only the effort to correct the immediate problem but also all of the costs of its downstream effects. Tracking each master entity with precision is considered fundamental to the data quality program of almost every enterprise.
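
A small arithmetic illustration of the product-identifier problem uses hypothetical sales lines in which one product appears under two identifiers. Summarized by raw identifier, the product’s 6 units are split into 4 and 2, making it look no better than a product that sold 4. Mapping identifiers through a master crosswalk (also hypothetical) restores the correct total.

```python
from collections import Counter

# Hypothetical sales lines; "sku100" is the same product keyed inconsistently.
sales = [("SKU-100", 3), ("sku100", 2), ("SKU-100", 1), ("SKU-200", 4)]
crosswalk = {"sku100": "SKU-100"}  # hypothetical mapping maintained by product MDM


def units_by_product(lines, to_master_id=lambda pid: pid):
    """Total units per product, optionally after mapping ids to master ids."""
    totals = Counter()
    for product_id, quantity in lines:
        totals[to_master_id(product_id)] += quantity
    return dict(totals)


print(units_by_product(sales))
# {'SKU-100': 4, 'sku100': 2, 'SKU-200': 4}
print(units_by_product(sales, lambda pid: crosswalk.get(pid, pid)))
# {'SKU-100': 6, 'SKU-200': 4}
```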

MDM as Part of Data Governance

MDM and RDM are generally considered key components of a complete data governance (DG) program. In recent years, DG has been one of the fastest-growing trends in information and data quality and is enjoying widespread adoption. As enterprises recognize information as a key asset and resource (Redman, 2008), they understand the need for better communication and control of that asset. This recognition has also created new management roles devoted to data and information, most notably the emergence of the CDO, the Chief Data Officer (Lee, Madnick, Wang, Wang, & Zhang, 2014). DG brings to information the same kind of discipline that has governed software for many years. Any company developing or using third-party software would not think of letting a junior programmer make even the smallest ad hoc change to a piece of production code. The potential for adverse consequences to the company from inadvertently introducing a software bug, or worse from an intentional malicious action, could be enormous. Therefore, in almost every company all production software changes are strictly controlled through a closely monitored and documented change process. A production software change begins with a proposal seeking broad stakeholder approval, then moves through a lengthy testing process in a safe environment, and finally to implementation.

Until recently this same discipline has not been applied to the data architecture of the enterprise. In fact, in many organizations a junior database administrator (DBA) may actually have the authority to make an ad hoc change to a database schema or to a record layout without going through any type of formal change process. Data management in an enterprise has traditionally followed the model of local “ownership.” This means divisions, departments, or even individuals have seen themselves as the “owners” of the data in their possession with the unilateral right to make changes as suits the needs of their particular unit without consulting other stakeholders.

An important goal of the DG model is to move the culture and practice of data management to a data stewardship model in which the data and data architecture are seen as assets controlled by the enterprise rather than individual units. In the data stewardship model of DG, the term “ownership” reflects the concept of accountability for data rather than the traditional meaning of control of the data. Although accountability is the preferred term, many organizations still use the term ownership. A critical element of the DG model is a formal framework for making decisions on changes to the enterprise’s data architecture. Simply put, data management is the decisions made about data, while DG is the rules for making those decisions.

The adoption of DG has largely been driven by the fact that software is rapidly becoming a commodity available to everyone. More and more, companies are relying on free, open source systems, software-as-a-service (SaaS), cloud computing services, and outsourcing of IT functions as an alternative to software development. As it becomes more difficult to differentiate on the basis of software and systems, companies are realizing that they must derive their competitive advantage from better data and information (Jugulum, 2014).

DG programs serve two primary purposes. One is to provide a mechanism for controlling changes related to the data, data processes, and data architecture of the enterprise. DG control is generally exercised by means of a DG council with senior membership from all major units of the enterprise having a stake in the data architecture. Membership includes both business units and IT units and, depending upon the nature of the business, will include risk and compliance officers or their representatives. Furthermore, the DG council must have a written charter that describes in detail the governance of the change process. In the DG model, all changes to the data architecture must first be approved by the DG council before moving into development and implementation. The purpose of first bringing change proposals to the DG council for discussion and approval is to try to avoid the problem of unilateral change. Unilateral change occurs when one unit makes a change to the data architecture without notification or consultation with other units who might be adversely affected by the change.

The second purpose of a DG program is to provide a central point of communication about all things related to the data, data processes, and data architecture of the enterprise. This often includes an enterprise-wide data dictionary, a centralized tracking system for data issues, a repository of business rules, the data compliance requirements from regulatory agencies, and data quality metrics. Because of the critical nature of MDM and RDM, and the benefits of managing this data from an enterprise perspective, they are usually brought under the umbrella of a DG program.

Better Security

Another important area where MDM plays a major role is in enterprise security. Seemingly each day there is word of yet another data breach, leakage of confidential information, or identity theft. One of the most notable attempts to address these problems is the Center for Identity at the University of Texas at Austin, which takes an interdisciplinary approach to developing a model for identity threat assessment and prediction (Center for Identity, 2014). Identity and the management of identity information (EIIM) both play a key role in systems that attempt to address security issues through user authentication. Here again, MDM provides the support needed for these kinds of systems.

Measuring Success

No matter what the motivations are for adopting MDM, there should always be an underlying business case. The business case should clearly establish the goals of the implementation and metrics for how to measure goal attainment. Power and Hunt (2013) list “No Metrics for Measuring Success” as one of the eight worst practices in MDM. Best practice for MDM is to measure both system performance, such as false positive and false negative rates, and business performance, such as return on investment (ROI).
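
One common way to measure the system-performance side is to count reference pairs against a verified truth set. The Python sketch below assumes such a truth set exists and counts false positives (pairs linked but not equivalent) and false negatives (equivalent pairs left unlinked), from which precision and recall follow directly. The function name and data are illustrative.

```python
from itertools import combinations


def pairwise_quality(truth: dict, predicted: dict):
    """truth/predicted map each reference id to its true entity / system cluster.
    Returns counts of true-positive, false-positive, and false-negative links."""
    tp = fp = fn = 0
    for a, b in combinations(sorted(truth), 2):
        same_entity = truth[a] == truth[b]
        linked = predicted[a] == predicted[b]
        if linked and same_entity:
            tp += 1
        elif linked:
            fp += 1  # linked, but the references are to different entities
        elif same_entity:
            fn += 1  # equivalent references the system failed to link
    return tp, fp, fn


truth = {"r1": "A", "r2": "A", "r3": "B", "r4": "B"}
predicted = {"r1": "C1", "r2": "C1", "r3": "C1", "r4": "C2"}
tp, fp, fn = pairwise_quality(truth, predicted)
print(tp, fp, fn)                                                      # 1 2 1
print(f"precision={tp / (tp + fp):.2f} recall={tp / (tp + fn):.2f}")  # precision=0.33 recall=0.50
```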

Dimensions of MDM

Many styles of MDM implementation address particular issues. Capabilities and features of MDM systems vary widely from vendor to vendor and industry to industry. However, some common themes do emerge.

Multi-domain MDM

In general, master data are references to key operational entities of the enterprise. The definition of entities in the context of master data is somewhat different from the general definition of entities, such as in the entity-relationship (E-R) database model. Whereas the general definition of entity allows both real-world objects and abstract concepts to be an entity, MDM is concerned with real-world objects having distinct identities.

In keeping with the paradigm of master data representing the nouns of data, major master data entities are typically classified into domains. Sørensen (2011) classifies entities into four domains: parties, products, places, and periods of time. The party domain includes entities that are persons, legal entities, and households of persons. These include parties such as customers, prospects, suppliers, and customer households. Even within these categories of party, an entity may have more than one role. For example, a person may be a patient of a hospital and at the same time a nurse (employee) at the hospital.

Products more generally represent assets, not just items for sale. Products can also include other entities, such as equipment owned and used by a construction company. The place domain includes those entities associated with a geographic location – for example, a customer address. Period entities are generally associated with events with a defined start and end date such as fiscal year, marketing campaign, or conference.

As technology has evolved, more vendors are providing multi-domain solutions. Power and Lyngsø (2013) cite four main benefits for the use of multi-domain MDM including cost-effectiveness, ease of maintenance, enabling proactive management of operational information, and prevention of MDM failure.

Hierarchical MDM

Hierarchies in MDM are the connections among entities taking the form of parent–child relationships where some or all of the entities are master data. Conceptually these form a tree structure with a root and branches that end with leaf nodes. One entity may participate in multiple relations or hierarchies (Berson & Dubov, 2011).

Many organizations run independent MDM systems for their domains: for example, one system for customers and a separate system for products. In these situations, any relationships between these domains are managed externally in the application systems referencing the MDM systems. However, many MDM software vendors have developed architectures with the capability to manage multiple master data domains within one system. This facilitates the ability to create hierarchical relationships among MDM entities.

Depending on the type of hierarchy, these relationships are often implemented in two different ways. One implementation style is as an “entity of entities.” This often happens in specific MDM domains where the entities are bound in a structural way. For example, in many CDI implementations of MDM, a hierarchy of household entities is made up of customer (person) entities containing location (address) entities. In direct-mail marketing systems, the address information is almost always an element of a customer reference. For this reason, both customer entities and address entities are tightly bound and managed concurrently.

However, most systems supporting hierarchical MDM relationships define the relationships virtually. Each set of entities has a separately managed structure and the relationships are expressed as links between the entities. In CDI, a customer entity and address entity may have a “part of” relationship (i.e. a customer entity “contains” an address entity), whereas the household to customer may be a virtual relationship (i.e. a household entity “has” customer entities). The difference is that the customers in a household are included by an external link (by reference).

The advantage of the virtual relationship is that changes to the definition of the relationship are less disruptive than when both entities are part of the same data structure. If the definition of the household entity changes, then it is easier to change just that definition than to change the data schema of the system. Moreover, the same entity can participate in more than one virtual relationship. For example, the CDI system may want to maintain two different household definitions for two different types of marketing applications. The virtual relationship allows the same customer entity to be a part of two different household entities.
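The Python sketch below contrasts the two implementation styles. The `Address` is embedded inside the `Customer` record, which is the “part of” style. Each `Household` holds only customer IDs, which is the virtual, by-reference style. The class names, field names, household rules, and sample data are all hypothetical. Because the households hold only links, two household definitions can share the same customer entities. A change to a customer is then visible through both households, and the customer schema never has to change.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Address:
    street: str
    city: str


@dataclass
class Customer:
    customer_id: str
    name: str
    address: Address  # "part of": the address is stored inside the customer entity


@dataclass
class Household:
    household_id: str
    rule: str  # which (hypothetical) household definition produced this grouping
    member_ids: list[str] = field(default_factory=list)  # "has": links by reference only


def members(household: Household, registry: dict[str, Customer]) -> list[Customer]:
    """Follow the household's links to the separately managed customer entities."""
    return [registry[cid] for cid in household.member_ids]


customers = {
    "C1": Customer("C1", "Ann Lee", Address("1 Elm St", "Conway")),
    "C2": Customer("C2", "Bob Lee", Address("1 Elm St", "Conway")),
    "C3": Customer("C3", "Cy Lee", Address("9 Oak Ave", "Benton")),
}

# Two household definitions for two marketing applications reuse the same customers.
by_address = Household("H-ADDR-1", "same street address", ["C1", "C2"])
by_surname = Household("H-NAME-1", "same surname", ["C1", "C2", "C3"])

# A change made once to the customer entity is visible through both households.
customers["C1"].name = "Ann Lee-Park"
print([c.name for c in members(by_address, customers)])
print([c.name for c in members(by_surname, customers)])
```

Changing a household rule in this sketch means building new `Household` objects. It does not touch the `Customer` structure, and that is the flexibility the paragraph above describes.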

Multi-channel MDM

Increasingly, MDM systems must deal with multiple sources of data arriving through different channels with varying velocity, such as source data coming through network connections from other systems (e.g. e-commerce or online inquiry/update). Multi-channel data sources are both a cause and effect of Big Data. Large volumes of network data can overwhelm traditional MDM systems. This problem is particularly acute for product MDM in companies with large volumes of online sales.

Another channel that has become increasingly important, especially for CDI, is social media. Because it is user-generated content, it can provide direct insight into a customer’s attitude toward products and services or readiness to buy or sell (Oberhofer, Hechler, Milman, Schumacher & Wolfson, 2014). The challenge is that it is largely unstructured, and MDM systems have traditionally been designed around the processing of structured data.

Multi-cultural MDM

As commerce becomes global, more companies face the challenges of operating in more than one country. From an MDM perspective, even though an entity domain may remain the same (e.g. customer, employee, or product), every aspect of its management can differ. Different countries may use different character sets, different reference layouts, and different reference data to manage information related to the same entities. This creates many challenges for MDM systems that assume data to be uniform. For example, much of the body of knowledge around data matching has evolved around the English language and U.S. culture. Fuzzy matching techniques such as Levenshtein Edit Distance and SOUNDEX phonetic matching do not carry over directly to master data in China and other Asian countries.
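The Python sketch below implements the two matching techniques just named. It uses the standard four-character American Soundex rules and the classic dynamic-programming Levenshtein distance. Soundex encodes only the Latin letters a to z, so a name written in Chinese characters produces no code at all. This sketch reports that case as `None`, which is an assumption of this example. Levenshtein still runs on any Unicode string. However, a one-character edit in a two-character name such as 张伟 versus 章伟 (different surname characters) is a large change. Thresholds tuned on Latin-script names may therefore not transfer.

```python
# American Soundex letter codes; vowels, y, h, and w have no code.
SOUNDEX_CODES = {}
for letters, digit in (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
                       ("l", "4"), ("mn", "5"), ("r", "6")):
    for ch in letters:
        SOUNDEX_CODES[ch] = digit


def soundex(name):
    """Four-character American Soundex code, or None if there is nothing to encode."""
    letters = [ch for ch in name.lower() if "a" <= ch <= "z"]
    if not letters:
        return None  # e.g. names written in Chinese characters
    result = letters[0].upper()
    prev = SOUNDEX_CODES.get(letters[0], "")
    for ch in letters[1:]:
        if ch in "hw":
            continue  # h and w do not separate letters that share a code
        code = SOUNDEX_CODES.get(ch, "")
        if code and code != prev:
            result += code
        prev = code  # a vowel resets prev, so a repeated code after it is kept
    return (result + "000")[:4]


def levenshtein(a, b):
    """Minimum number of single-character insertions, deletions, and substitutions."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                 # delete ca
                           cur[j - 1] + 1,              # insert cb
                           prev[j - 1] + (ca != cb)))   # substitute ca -> cb
        prev = cur
    return prev[-1]


print(soundex("Smith"), soundex("Smyth"), levenshtein("Smith", "Smyth"))  # S530 S530 1
print(soundex("张伟"), levenshtein("张伟", "章伟"))  # None 1
```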

Culture is manifested not only in language but also in the representation of master data, especially party data. The U.S. convention of first, middle, and last name attributes for persons is not always a good fit in other cultures, and the situation for address fields can be even more complicated. Another complicating factor is that countries often have different regulations and compliance standards governing certain data typically included in MDM systems.

The Challenge of Big Data

What Is Big Data?

Big Data has many definitions. Initially Big Data was just the recognition that, as systems produced and stored more data, the volume had increased to the point that it could no longer be processed on traditional system platforms. The traditional definition of Big Data simply referred to volumes of data requiring new, large-scale systems/software to process the data in a reasonable time frame.

Later the definition was revised when people recognized that the problem was not just the volume of data being produced, but also the velocity (transactional speed) at which it was produced and the variety (structured and unstructured) of the data. This is the origin of the so-called “Three Vs” definition, which has sometimes been extended to four Vs by including the “veracity” (quality) of the data.

The Value-Added Proposition of Big Data

At first organizations focused simply on developing tools that would allow them to continue their current processing at larger data volumes. However, as organizations became more driven to compete on the basis of data and data quality, they began to look at Big Data as a new opportunity to gain an advantage. This is based largely on the premise that large volumes of data contain more potential insight than smaller volumes. The thinking was that, instead of building predictive models based on small datasets, it is now feasible to analyze the entire dataset without the need for model development.

Google™ has been the leader in the Big Data revolution, demonstrating the value of the thick-data-thin-model approach to problem solving. An excellent example is the Google Translate product. Previously most attempts to translate sentences and phrases from one language to another consisted of building sophisticated models of the source and target languages and implementing them as natural language processing (NLP) applications. The Google approach was to build a large corpus of translated documents; when a request to translate text is received, the system searches the corpus to see whether the same or a similar translation is already available. The same method has been applied to replace or supplement other NLP applications such as named entity recognition in unstructured text (Osesina & Talburt, 2012; Chiang et al., 2008).

For example, given a large volume of stock trading history and indicator values, which of the indicators, if any, are highly predictive leading indicators of the stock price? A traditional approach might be to undertake a statistical factor analysis on some sample of the data. However, with current technologies it might be feasible to actually compute all of the possible correlations between the combinations of indicators at varying lead times.
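The brute-force alternative to a sampled factor analysis can be sketched in Python. The code scores every indicator at every lead time with a Pearson correlation against the later price and then picks the strongest. The price series and the indicators "A" and "B" are made-up data. "A" is deliberately built to lead the price by two steps. The sketch scores single indicators only. Extending it to combinations of indicators would mean enumerating subsets, and the size of that search grows very quickly. This is the kind of exhaustive search that Big Data platforms make more practical.

```python
from itertools import product
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy) if sx and sy else 0.0


def lead_correlations(indicators, price, max_lag):
    """Correlate each indicator at time t with the price at time t + lag, for every lag."""
    return {
        (name, lag): pearson(series[:-lag], price[lag:])
        for (name, series), lag in product(indicators.items(), range(1, max_lag + 1))
    }


# Made-up data: indicator "A" is built to lead the price by exactly 2 steps.
price = [10, 12, 11, 15, 14, 18, 17, 21, 20, 24]
indicators = {
    "A": [11, 15, 14, 18, 17, 21, 20, 24, 23, 27],
    "B": [3, 1, 4, 1, 5, 9, 2, 6, 5, 3],
}

scores = lead_correlations(indicators, price, max_lag=3)
best = max(scores, key=lambda k: abs(scores[k]))
print(best, round(scores[best], 3))  # ('A', 2) 1.0
```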

In addition to allowing companies to answer the same directed questions as before for much larger datasets (supervised learning), Big Data is now giving companies the ability to conduct much broader undirected searches for insights not previously known (unsupervised learning) (Provost & Fawcett, 2013).

Data analysis has added a second dimension to the Big Data concept. “Data science” is the new term combining the large volume, or 3V, aspect of Big Data with the analytics piece. Even though the term should refer to both, sometimes data science only refers to one or the other, i.e. the tools and technology for processing Big Data (the engineering side) or the tools and techniques for analyzing Big Data (the data analytics side).

Challenges of Big Data

Along with the added value and opportunities it brings, Big Data also brings a number of challenges. The obvious challenge is storage and process performance. Fortunately, technology has stepped up to the challenge with cheaper and larger storage systems, distributed and parallel computing platforms, and cloud computing. However, using these new technologies requires changes in ways of thinking about data processing/management and the adoption of new tools and methodologies. Unfortunately, many organizations tend to be complacent and lack the sense of urgency required to undergo successful change and transformation (Kotter, 1996).

Big Data is more than simply a performance issue to be solved by scaling up technology; it has also brought a paradigm shift in data processing and data management practices. For example, Big Data has had a big impact on data governance programs (Soares, 2013a, 2013b, 2014). Another aspect of the shift is that, whereas the traditional system design moves data to a program or process, in many Big Data applications it can be more efficient to move processes to the data. A third is the trend toward denormalized data stores. Normalization of relational databases has been a best practice for decades as a way to remove as much data redundancy as possible from the system. In the world of Big Data tools, there is a growing trend toward allowing, or even deliberately creating, data redundancy in order to gain performance.

The Big Data paradigm shift has also changed traditional approaches to programming and development. Developers already in the workforce have had to stop and learn new knowledge and skills in order to use Big Data tools, and colleges and universities need time to change their curricula to teach these tools. Currently, there is a significant gap between the supply of people educated in these tools and industry demand for them. People trained in Big Data tools and analysis are typically referred to as data scientists, and many schools are rebranding their programs as data science.

MDM and Big Data – The N-Squared Problem

Although many traditional data processes can easily scale to take advantage of Big Data tools and techniques, MDM is not one of them. MDM has a Big Data problem even with Small Data. Because MDM is based on ER, it is subject to the $O(n^2)$ problem. $O(n^2)$ denotes that the effort and resources needed to complete an algorithm or data process grow in proportion to the square of the number of records being processed. In other words, the effort required to perform ER on 100 records is 4 times the effort required to perform the same ER process on 50 records, because $100^2 = 10{,}000$, $50^2 = 2{,}500$, and $10{,}000 / 2{,}500 = 4$. More simply stated, scaling from 50 records to 100 records takes 4 times the effort because $(100/50)^2 = 4$.
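The quadratic growth can be checked with a Python sketch of a naive ER pass that compares every pair of records exactly once. Such a pass makes $n(n-1)/2$ comparisons. This count is proportional to $n^2$ for large $n$, so doubling from 50 to 100 records roughly quadruples the work. The matching rule used with `naive_matches` is a hypothetical placeholder. The sketch also ignores the blocking or indexing techniques that practical ER systems use to reduce the number of comparisons.

```python
from itertools import combinations


def pairwise_comparisons(n):
    """Number of record pairs a naive ER pass compares: n(n-1)/2."""
    return n * (n - 1) // 2


def naive_matches(records, is_match):
    """Compare every pair of records once (the quadratic step in naive ER)."""
    return [(a, b) for a, b in combinations(records, 2) if is_match(a, b)]


for n in (50, 100, 1_000_000):
    print(n, pairwise_comparisons(n))  # 1225, 4950, 499999500000 comparisons
print(pairwise_comparisons(100) / pairwise_comparisons(50))  # about 4.04
```

A million records already require roughly half a trillion comparisons under this naive scheme, which is why even modest record counts strain MDM.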

Big Data not only brings challenging performance issues to ER and MDM, it also exacerbates all of the dimensions of MDM previously discussed. Multi-domain, multi-channel, hierarchical, and multi-cultural MDM are impacted by the growing volume of data that enterprises must deal with. Although the problems are formidable, ER and MDM can still be effective for Big Data. Chapters 9 and 10 focus on Big Data MDM.

Concluding Remarks

Although MDM requires addressing a number of technical issues, overall it is a business issue implemented for business reasons. The primary goal of MDM is to achieve and maintain entity identity integrity for a domain of master entities managed by the organization. The MDM system itself comprises two components: a policy and governance component, and an IT component. The primary IT component is EIIM, which is, in turn, supported by ER. EIIM extends the cross-sectional, one-time matching process of ER with a longitudinal management component of persistent EIS and entity identifiers.

Except for the External Reference Architecture, the other principal MDM architectures share the common feature of a central hub. Most of the discussion in the remaining chapters will focus on the operation of the hub and its identity knowledge base (IKB).

Big Data and data science have seemingly emerged overnight with great promise for added value. At the same time, they have created a paradigm shift in IT in a short time. The impacts are being felt in all aspects of the organization, and everyone is struggling to understand both the technology and how best to extract business value. The impact of Big Data is particularly dramatic for MDM and the implementation of MDM, because Big Data pushes the envelope of current computing technology.
