Chapter 2. Manage backup and restore of databases

An organization’s data is one of its most important assets and in the twenty-first century data loss is not an option. In Chapter 1, “Configure data access and auditing,” we looked at the skills required to protect sensitive data through encryption, control data access and configure auditing for data governance reasons. We continue this data protection journey in this chapter where we learn how to help minimize data loss through the development and implementation of a Disaster Recovery Plan (DRP). Since a DRP is typically not sufficient to prevent data loss by itself, SQL Server supports a number of High-Availability (HA) technologies. We will look at the different High-Availability (HA) technologies in Chapter 4, “Manage high availability and disaster recovery.”

Data loss comes in many forms, including hardware failure, database corruption, malicious activity, and user error, and you need to develop a DRP to protect against all of these eventualities. It is common for organizations to have data governance requirements, so you will need to factor these into your data disaster strategy.

Skill 2.1 starts with the development of your backup strategy. We will examine the different requirements and considerations that you must take into account before you are ready to design your backup strategy. In Skill 2.2 we will turn to how you restore databases. Although your restore strategy is determined by your backup strategy, there are commonly different paths that you can take to restoring your database. In the exam you will be tested on your ability to assess what data has been lost as a result of a disaster and the appropriate restore path that you should take to make the database available again in the least possible time. Finally, in Skill 2.3, we deal with how you manage database integrity and recover from database corruption. Although database corruption is rare, you need to be prepared with an action plan just in case.

Skill 2.1: Develop a backup strategy

A correct backup strategy helps ensure that you incur minimal or no data loss. Developing this correct backup strategy is probably the most important task you will have as a database administrator. Do not make assumptions about what your organization requires, but instead engage the relevant stakeholders within your organization.

In Skill 2.1 we cover how you design your backup strategy by engaging your organization to determine a particular database’s data loss and recovery requirements. We will then look at the backup operations that can be used to translate the backup strategy into a set of scheduled backup operations. To minimize data loss you need to understand the internals of the backup operation and how a database’s transaction log operates, which we cover next. Finally, we will look at how to operationalize your backup operations and related tasks.

Design a backup strategy

To design a backup strategy a database administrator typically starts by engaging management within their organization to determine the amount of data loss that is acceptable and how quickly a database needs to be recovered from a disaster. Some organizations have hundreds of databases, so you will need to prioritize which ones are more important. Without such feedback from your organization it is very difficult to design a backup strategy.

A backup strategy is typically made up of the following elements:

![]() A set of backup operations.

A set of backup operations.

![]() There will potentially be different types of backup operations.

There will potentially be different types of backup operations.

![]() A schedule or frequency of when the backup operations will be performed.

A schedule or frequency of when the backup operations will be performed.

Although it sounds counter-intuitive, a backup strategy is really determined by your restore strategy or specifically how long it will take to restore your database when a disaster occurs. That is why it is critical to engage your business to determine what the recovery objectives for the database are. Without these recovery objectives it is impossible for you to design a backup strategy that will meet your organization’s business requirements. Make sure you document your backup (and restore) strategies so that both yourself, and other database administrators, can recover the database in a timely manner.

Define recovery objectives

To design a disaster recovery plan your organization’s management or business stakeholders will need to define the following three main requirements:

![]() Recovery Time Objective (RTO) The RTO defines the maximum allowable downtime following a disaster incident. The goal for the database administrator is to restore normal business operations within this time frame.

Recovery Time Objective (RTO) The RTO defines the maximum allowable downtime following a disaster incident. The goal for the database administrator is to restore normal business operations within this time frame.

![]() Recovery Point Objective (RPO) The RPO defines the maximum acceptable amount of data loss following a disaster incident. The RPO is commonly expressed in minutes.

Recovery Point Objective (RPO) The RPO defines the maximum acceptable amount of data loss following a disaster incident. The RPO is commonly expressed in minutes.

![]() Recovery Level Objective (RLO) The RLO defines granularity of the data that needs to be restored following a disaster incident. For SQL Server it could be a SQL Server instance, group of databases, a database, a set of tables, or a table.

Recovery Level Objective (RLO) The RLO defines granularity of the data that needs to be restored following a disaster incident. For SQL Server it could be a SQL Server instance, group of databases, a database, a set of tables, or a table.

Although there is an implied relationship between the RPO and RTO, the RPO is not always less than the RTO, as most people intuitively think. For example, one company might have a RTO of one hour, but a RPO of 24 hours. In other words, they need to restore normal business processes as soon as possible. They are willing to lose a day’s worth of data. Resumption of normal operations is paramount. Potentially, the lost data can be added after the resumption of normal operations through paper records, or other data sources.

All stakeholders need to understand that zero data loss is very difficult to achieve, if not impossible for most organizations. A backup strategy is not sufficient by itself. Typically, some sort of high availability technology also needs to be used. It is important to stress to management and other relevant stakeholders that your backup strategy is not a substitute for high availability. We will cover high availability in Chapter 4, “Manage high availability and disaster recovery.”

The RTO, RPO, and RLO form part of your service level agreements (SLAs). The SLAs represent an agreement between the business and IT operations on how your IT infrastructure is managed and what services are delivered.

Remember that the restore operation is typically asymmetric to the backup operation. For example, a backup that takes four hours to complete might take twenty hours to restore. As an alternative, a four hour backup might take two hours to restore. That is why it is you should periodically test your restore procedure. Yes, you need to ensure that it works. But more importantly you need to gather various metrics, such as how long it takes.

That is why the restore process drives your backup strategy. Once you have your RTO, RPO, and RLO defined, you can determine what backup operations and their frequency will make up your backup strategy.

Review backup operations

Your backup strategy will consist of a number of scheduled backup operations. These backup operations will potentially perform different types of backups, which we need to review for the exam. SQL Server supports the following types of backups.

![]() Full A full backup captures everything in the database.

Full A full backup captures everything in the database.

![]() Differential A differential backup captures everything in the database since the last full backup.

Differential A differential backup captures everything in the database since the last full backup.

![]() Incremental An incremental backup captures everything since the last incremental backup. In SQL Server it is called a log backup. You need to perform a full backup before you can perform log backups.

Incremental An incremental backup captures everything since the last incremental backup. In SQL Server it is called a log backup. You need to perform a full backup before you can perform log backups.

To design a backup strategy, you need to take into account a number of factors including:

![]() The size of your database A larger database will take longer to backup. Your database might grow to a size where the backup operation can no longer be completed within an appropriate maintenance window. At that stage you might have to use different hardware or redesign your database and backup strategy.

The size of your database A larger database will take longer to backup. Your database might grow to a size where the backup operation can no longer be completed within an appropriate maintenance window. At that stage you might have to use different hardware or redesign your database and backup strategy.

![]() The structure of your database files A database that consists of a single primary data file will be difficult to back up within an appropriate maintenance window as it gets larger. You have no choice but to back up the database in its entirety. As an alternative, if the database consists of multiple secondary data files these files can be backed up individually at different frequencies.

The structure of your database files A database that consists of a single primary data file will be difficult to back up within an appropriate maintenance window as it gets larger. You have no choice but to back up the database in its entirety. As an alternative, if the database consists of multiple secondary data files these files can be backed up individually at different frequencies.

![]() The speed/throughout of the network and storage subsystems involved The underlying hardware and networking infrastructure can substantially impact the duration your backup and restore operations. This will in turn impact your RTO.

The speed/throughout of the network and storage subsystems involved The underlying hardware and networking infrastructure can substantially impact the duration your backup and restore operations. This will in turn impact your RTO.

![]() How heavily utilized is the processor subsystem If your SQL Server instance is using all of the processor subsystem you might not be able to take advantage of backup compression. Backup compression consumes additional processor resources.

How heavily utilized is the processor subsystem If your SQL Server instance is using all of the processor subsystem you might not be able to take advantage of backup compression. Backup compression consumes additional processor resources.

![]() The volume of data modifications in the database A database that is heavily modified might have substantial transaction log growth. This in turn might force you to perform log backups more frequently so that the transaction log does not grow out of control.

The volume of data modifications in the database A database that is heavily modified might have substantial transaction log growth. This in turn might force you to perform log backups more frequently so that the transaction log does not grow out of control.

![]() The size of the data modifications in the database Similar to the above point, large transactions might also result in the transaction log growing to an unacceptable size. Again, you might have to perform more frequent log backups to remedy this scenario.

The size of the data modifications in the database Similar to the above point, large transactions might also result in the transaction log growing to an unacceptable size. Again, you might have to perform more frequent log backups to remedy this scenario.

![]() The type of data modifications in the database, for example, if they are predominantly update, or insert operations This will impact the size of your differential and log backups. If the same records within a database are continually updated, your differential backups will not increase in size and represent a good candidate for your backup strategy. As an alternative, if all the records within a database get modified, the differential backup might be the same size as a full database backup. Understanding the types of data modifications will help you optimize your backup strategy.

The type of data modifications in the database, for example, if they are predominantly update, or insert operations This will impact the size of your differential and log backups. If the same records within a database are continually updated, your differential backups will not increase in size and represent a good candidate for your backup strategy. As an alternative, if all the records within a database get modified, the differential backup might be the same size as a full database backup. Understanding the types of data modifications will help you optimize your backup strategy.

![]() How compressible the data in the database is Backup compression consumes additional processor resources. If the data within your database is not very compressible there will be no point in using backup compression in your backup operations.

How compressible the data in the database is Backup compression consumes additional processor resources. If the data within your database is not very compressible there will be no point in using backup compression in your backup operations.

![]() Whether point-in-time recovery is required If your organization requires a database to be recovered to a specific point-in-time you will have no choice but to implement log backups. Furthermore, the database will have to use the full recovery model.

Whether point-in-time recovery is required If your organization requires a database to be recovered to a specific point-in-time you will have no choice but to implement log backups. Furthermore, the database will have to use the full recovery model.

![]() The recovery objectives defined by your organization Quantifying your RPO, RTO, and RLO are critical to your backup strategy.

The recovery objectives defined by your organization Quantifying your RPO, RTO, and RLO are critical to your backup strategy.

![]() How the transaction log is managed Some databases experience substantial transaction log growth. You might also have limited storage dedicated to the transaction log. As a result, you might have to take more frequent log backups than planned to manage the transaction log.

How the transaction log is managed Some databases experience substantial transaction log growth. You might also have limited storage dedicated to the transaction log. As a result, you might have to take more frequent log backups than planned to manage the transaction log.

![]() The database’s recovery model Different recovery models impact whether transaction log backups can be taken. If your database is using the simple recovery model, you will not be able to leverage log backups.

The database’s recovery model Different recovery models impact whether transaction log backups can be taken. If your database is using the simple recovery model, you will not be able to leverage log backups.

![]() The importance of the data within the database Some databases might not be important to your organization, they may be used in a staging or development environment, or they can be a replica of a production system. In such cases there might be no business requirement to back up the database at all.

The importance of the data within the database Some databases might not be important to your organization, they may be used in a staging or development environment, or they can be a replica of a production system. In such cases there might be no business requirement to back up the database at all.

In the exam you might be given a scenario where you will have to determine the RTO and the RPO. Watch out for statements in the questions that stipulate that only “fifteen minutes’ worth of data can be lost,” or that “the database needs to be recovered in two hours.” Pay attention to the factors discussed above, and in particular the sizes of the databases and the implied duration of any backup operations, because this will help you in the formulation of the backup strategy.

Evaluate potential backup strategies

Let’s examine a number of simple scenarios and what backup strategy could potentially be used for them. The purpose of these scenarios is to show you the types of considerations that help you design your backup strategy.

The first scenario is for a data warehouse solution where a read-only database is used for analytical and reporting purposes. At the end of each day a process changes the database to read/write mode, populates the database with that day’s transactions, and then changes the database back to read-only mode. This process starts at midnight and takes 1-2 hours to complete. The database uses the simple recovery model. Users query the database between 08:00 and 18:00. Management has stipulated a RTO of 4 hours and a RPO of 0 minutes.

For this data warehouse solution a full database backup at 03:00 every day can be used as the backup strategy. Our full backup completes within 2 hours. There is no need for differential backups.

The second scenario is for a transactional database used by a customer relations management (CRM) solution. Users are continually modifying the database during business hours (08:00 and 18:00) by adding new customer, activity, and opportunity records. Management requires a RTO of 4 hours and a RPO of 2 hours. The database will also potentially need to be recovered to any specific point in time. A number of re-indexing jobs start at midnight and take 2 hours to complete. You notice that after 75 minutes, the transaction log fills up 95% of the storage allocated to it.

For this CRM solution the following strategy can be used. Although management requires a RPO of 2 hours, the transaction log will consume all of its allocated storage within that time frame. Differential backups cannot be used, because they do not support point-in-time recovery.

![]() Full database backup at 03:00 every day

Full database backup at 03:00 every day

![]() Incremental backups every hour during business hours from 08:00 and 18:00

Incremental backups every hour during business hours from 08:00 and 18:00

The final scenario is for a large, mission-critical, 24x7 database used in a manufacturing context. The existing tables are continually being updated by a variety of sensors in the manufacturing process. The transaction log grows rapidly in size. Although new records are also added to the database, it does not grow substantially in size. A full database backup cannot be performed daily since there is insufficient storage. The database needs to be restored to any point-in-time up to 3 months back. Management has indicated a RTO of 5 minutes.

For this manufacturing database the following backup strategy can be used. Differential backups are used to minimize the amount of storage consumed by backups. Transaction log backups allow you to recover to a point-in-time and only lose 5 minutes’ worth of data.

![]() Full database backup at 03:00 every Monday

Full database backup at 03:00 every Monday

![]() Incremental backups every five minutes

Incremental backups every five minutes

![]() A differential backup at the end of each day at 23:00

A differential backup at the end of each day at 23:00

Back up databases

Earlier we discussed the different high-level backup operations that potentially make up your backup strategy. In reality, SQL Server supports more types of backup operations. These different types of backup operations allow you to further customize your backup strategy and potentially capture any data modifications made to the database since the last backup operation after a disaster incident.

In this section we will cover the different types of database backup operations supported by SQL Server. We will also have a look at various techniques that can be used to back up larger databases where you might not have an appropriate maintenance window.

SQL Server supports the following types of backup operations:

![]() Full This contains the entire contents of the database and any changes made to the database during the backup operation. Consequently, a full backup represents the database at the point in time when the backup operation finished.

Full This contains the entire contents of the database and any changes made to the database during the backup operation. Consequently, a full backup represents the database at the point in time when the backup operation finished.

![]() Differential This contains only the differences between the last full database backup and the point in time when the differential backup operation was executed.

Differential This contains only the differences between the last full database backup and the point in time when the differential backup operation was executed.

![]() Log This contains all of the log records that were performed since the last log backup.

Log This contains all of the log records that were performed since the last log backup.

![]() File This contains either a file or filegroup that makes up the database.

File This contains either a file or filegroup that makes up the database.

![]() Partial This is similar to a full backup, but it excludes all read-only filegroups by default. For a read-write database, a partial backup contains the primary filegroup and all read-write filegroups. For a read-only database, a partial backup contains only the primary filegroup.

Partial This is similar to a full backup, but it excludes all read-only filegroups by default. For a read-write database, a partial backup contains the primary filegroup and all read-write filegroups. For a read-only database, a partial backup contains only the primary filegroup.

![]() Tail-Log This contains all the transaction log records that have not been backed up since the last log backup. A tail-log backup operation is typically performed as the first task in a disaster recovery process. This ensures that no data is lost up to the point-in-time when the disaster incident occurred. For the tail-log backup to be successful the database’s transaction log file must be available.

Tail-Log This contains all the transaction log records that have not been backed up since the last log backup. A tail-log backup operation is typically performed as the first task in a disaster recovery process. This ensures that no data is lost up to the point-in-time when the disaster incident occurred. For the tail-log backup to be successful the database’s transaction log file must be available.

The exam will most likely cover only full, differential, and log backups. However, you need to be prepared for some of the less commonly used backup operations that are designed more for edge and border cases. Make sure you understand the use cases of where file and partial backups are used.

When you perform a backup operation you need to specify a backup destination. Although tape devices are supported they are rarely used in the industry today due to their speed. Most organizations back up directly to a disk based destination and then potentially backup these database backups to tape.

Unfortunately, the legacy of using tapes as a destination still exists in the backup operation. Consequently, for the exam you will need to understand the following concepts used by SQL Server backup operations:

![]() Backup device A disk or tape device to which the database engine performs a backup operation.

Backup device A disk or tape device to which the database engine performs a backup operation.

![]() Media family A backup created on a single non-mirrored device, or a set of mirrored devices in a media set.

Media family A backup created on a single non-mirrored device, or a set of mirrored devices in a media set.

![]() Backup set This represents a successful backup operation’s content.

Backup set This represents a successful backup operation’s content.

![]() Media set A set of backup media that contains one or more backup sets.

Media set A set of backup media that contains one or more backup sets.

![]() Log sequence number (LSN) An internal numbering sequence used for each operation within the transaction log. This is used internally by the database engine and typically not used by database administrators.

Log sequence number (LSN) An internal numbering sequence used for each operation within the transaction log. This is used internally by the database engine and typically not used by database administrators.

![]() Sequence number This indicates the order of the physical media within a media family. Also, media families are numbered sequentially according to their position within the media set.

Sequence number This indicates the order of the physical media within a media family. Also, media families are numbered sequentially according to their position within the media set.

Need more Review? Log sequence number (LSN)

To learn about log sequence numbers visit https://docs.microsoft.com/en-us/sql/relational-databases/backup-restore/recover-to-a-log-sequence-number-sql-server.

Backing up to an explicitly created backup device is not commonly used in the industry today. They represent more of a legacy architecture in earlier versions of SQL Server where you created a backup device before backing up to it. It is more common now to backup directly to disk without creating an explicit backup device first.

Every backup device starts with a media header that is created by the first backup operation and remains until the media is reformatted. The media header contains information about the backup device’s contents and the media family it belongs to. The information includes:

![]() The name of the media

The name of the media

![]() The unique identification number of the media set

The unique identification number of the media set

![]() The number of media families in the media set

The number of media families in the media set

![]() The sequence number of the media family containing this media

The sequence number of the media family containing this media

![]() The unique identification number for the media family

The unique identification number for the media family

![]() The sequence number of this media in the media family

The sequence number of this media in the media family

![]() Whether the media description contains an MTF media label or a media description

Whether the media description contains an MTF media label or a media description

![]() The Microsoft Tape Format media label or the media description

The Microsoft Tape Format media label or the media description

![]() The name of the backup software that wrote the label

The name of the backup software that wrote the label

![]() The unique vendor identification number of the software vendor that formatted the media

The unique vendor identification number of the software vendor that formatted the media

![]() The date and time the label was written

The date and time the label was written

![]() The number of mirrors in the set (1-4); 1 indicates an un-mirrored device

The number of mirrors in the set (1-4); 1 indicates an un-mirrored device

![]() Exam Tip

Exam Tip

Make sure you understand what sequence numbers are represented in backup devices and how they are ordered. The exam might ask you to restore a series of backups in order using Transact-SQL and the sequence number will be important to the answer.

Consider the following series of backup operations that perform a full, differential, and incremental backup to four backup devices concurrently as shown in Listing 2-1.

LISTING 2-1 Multiple backups to four backup devices

-- Initial full backup

BACKUP DATABASE AdventureWorks TO

DISK = 'R:SQLBackupAdventureWorks_BackupDevice1.bak''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice2.bak ''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 3.bak ''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 4.bak '

WITH

FORMAT'

MEDIANAME = ' AdventureWorksMediaSet1';

GO

-- Differential backup

BACKUP DATABASE AdventureWorks TO

DISK = 'R:SQLBackupAdventureWorks_BackupDevice1.bak''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 2.bak ''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 3.bak ''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 4.bak '

WITH

NOINIT'

MEDIANAME = 'AdventureWorksMediaSet1''

DIFFERENTIAL;

GO

-- Incremental backup

BACKUP LOG AdventureWorks TO

DISK = 'R:SQLBackupAdventureWorks_BackupDevice1.bak''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 2.bak ''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 3.bak ''

DISK = 'R:SQLBackupAdventureWorks_BackupDevice 4.bak '

WITH

NOINIT'

MEDIANAME = 'AdventureWorksMediaSet1';

GO

These three backup operations result in the following media set, as shown in Figure 2-1.

FIGURE 2-1 Backup media set

Now that we have covered the different types of backup operations and destinations let’s look at these operations in more detail. Understanding how these backup operations work will help you design an appropriate backup strategy.

Performing full backups

Full backups form the baseline of your backup strategy. Differential and log backups do not work unless they have a baseline full database backup. If a full backup is corrupted or lost you will not be able to restore any subsequent differential and log backups. This highlights the need to periodically perform a full database backup.

A full backup contains a copy of the database and the transaction log operations performed during the database backup phase. The backup operation only backs up the allocated pages within the database. Unallocated pages are not backed up. For a 100GB database that only has 10GB of data, the full database backup is only approximately 10GB in size uncompressed.

Need more Review? SQL Server internal architecture

Understanding the database engine’s internal architecture will help you understand how the different backup operations work internally. To learn about extents and pages visit https://docs.microsoft.com/en-us/sql/relational-databases/pages-and-extents-architecture-guide.

With a full database backup, the database engine performs the following high-level actions:

![]() Checkpoint Performs a database checkpoint. The checkpoint process flushes all dirty data from the buffer pool to disk to minimize the amount of work required by the restore process.

Checkpoint Performs a database checkpoint. The checkpoint process flushes all dirty data from the buffer pool to disk to minimize the amount of work required by the restore process.

![]() Record backup start LSN Examines the transaction log and records the log sequence number (LSN) of when the backup operation started.

Record backup start LSN Examines the transaction log and records the log sequence number (LSN) of when the backup operation started.

![]() Backup data Backs up all the extents (unit of eight physically contiguous 8KB pages) from the data files in the database to the backup destination.

Backup data Backs up all the extents (unit of eight physically contiguous 8KB pages) from the data files in the database to the backup destination.

![]() Record backup end LSN Re-examines the transaction log and records the LSN of the start of the oldest active transaction and re-examines the transaction log and records the LSN of when the backup operation started.

Record backup end LSN Re-examines the transaction log and records the LSN of the start of the oldest active transaction and re-examines the transaction log and records the LSN of when the backup operation started.

![]() Calculate minimum LSN Determines the minimum LSN required for the log backup by taking the earliest in time between the backup end LSN and the oldest active transaction’s LSN. The oldest active transaction could have started before the backup statement was executed.

Calculate minimum LSN Determines the minimum LSN required for the log backup by taking the earliest in time between the backup end LSN and the oldest active transaction’s LSN. The oldest active transaction could have started before the backup statement was executed.

![]() Backup log Backs up the transaction log between the calculated minimum LSN and the backup end LSN. This ensures that the recovered database will be consistent as of the time of the backup operation’s completion.

Backup log Backs up the transaction log between the calculated minimum LSN and the backup end LSN. This ensures that the recovered database will be consistent as of the time of the backup operation’s completion.

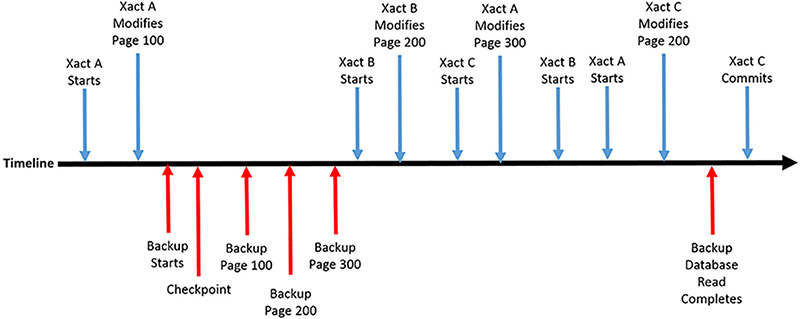

Consider the following sequence of events, as shown in Figure 2-2.

FIGURE 2-2 Backup example timeline

2. Transaction A changes page 100

3. A full backup begins

4. Backup checkpoint occurs

5. Backup reads page 100

6. Backup reads page 200

7. Backup reads page 300

8. Transaction B begins

9. Transaction B changes page 200

10. Transaction C begins

11. Transaction A modifies page 300

12. Transaction B commits

13. Transaction A commits

14. Transaction C modifies page 200

15. Backup database read ends

In this example, the Backup Data phase captures the modification to page 100 by transaction A, but not the subsequent modification to pages 200 and 300 made by transactions A, B, and C. The Backup Data phase neither re-reads pages that have been backed up but then modified, nor does it block transactions by reading the page before allowing the modification. The Backup Log phase has to read enough of the transaction log so as to be able to recover the database to a consistent state as of the end of the Backup Data phase. Consequently, the Backup Log phase would have to capture the modifications made to pages 200 and 300. If you were to restore this database backup it would not include the changes made to page 200 by transaction C, because it did not commit before the Backup Data phase completed. This modification would be undone (rolled back) by the recovery phase of the restore operation.

![]() Exam Tip

Exam Tip

It is very likely that the exam will have a question with a similar timeline of scheduled backup operations and what has gone wrong. For you to be able to answer the exam question correctly you will have to understand the importance of events and incidents in the time line and be able to formulate the correct recovery plan. Watch out in particular of when transactions commit.

Figure 2-3 shows the internal operations that were performed during a full database against a timeline. You can see how the database engine initially allocates a number of resources to the backup operation. You can control some of these resources such as the maximum transfer size and buffer count. Note how the full backup operation clears the differential bitmaps before performing the initial backup discussed above. It then scans the allocation bitmaps so as to predict the size of the backup file. This estimation is used to create the backup destination file before it can start writing to it. At the very end, after the data copy phase it backups up the transaction log and closes off the backup set. The resultant backup set file can be shorter than the initial estimation.

FIGURE 2-3 Backup media set

Use the following statement to perform a full backup:

BACKUP DATABASE { database_name | @database_name_var }

TO <backup_device> [ '...n ]

[ <MIRROR TO clause> ] [ next-mirror-to ]

[ WITH <general_WITH_options> [ '...n ] ] ]

[;]

Performing differential backups

Differential backups represent a delta within the database between the time of the differential backup and the last full backup. The database engine keeps track of all changes made to the database, at the extent level, via an internal bitmap called a Differential Change Map (DCM). Performing a full database backup clears the DCM by default.

Although a single row in a table might be modified, the differential backup will back up the entire 64KB extent. This is done for performance reasons, but results in backup sizes being larger than they strictly need to be. Figure 2-4 shows how a differential backup backs up Database Extents via the DCM.

FIGURE 2-4 Differential backup high-level internals

Typically, differential backups get larger in size as more time passes between subsequent differential backup operations and the baseline full backup. At some point in time this size increase impacts your RTO. Don’t forget that it takes longer to restore the full and differential backups when compared to restoring a standalone full backup.

Perform differential database backups when any of the following apply:

![]() You want to reduce the time taken by the backup operation as compared to the full backup and you do not need point-in-time recovery.

You want to reduce the time taken by the backup operation as compared to the full backup and you do not need point-in-time recovery.

![]() You want to reduce the size of the database set as compared to the full backup and you do not need point-in-time recovery.

You want to reduce the size of the database set as compared to the full backup and you do not need point-in-time recovery.

Use the following statement to perform a differential backup:

BACKUP DATABASE { database_name | @database_name_var }

TO <backup_device> [ '...n ]

[ <MIRROR TO clause> ] [ next-mirror-to ]

WITH DIFFERENTIAL

[ WITH <general_WITH_options> [ '...n ] ] ]

[;]

The size, or potential size of the differential backup will be the determining factor in any potential exam question. So pay attention to how records are being modified in any exam questions. A lot of insert operations within the database imply that the differential backups will get larger in size as time progresses. A lot of update operations to existing data implies that a “steady state” will be achieved with respect to the size of the differential backups as time elapses. You will need to compare both the size and recovery impact of differential backups to transaction log backups, and we will look at them next.

Performing log backups

Log backups represent an incremental backup as discussed earlier as they capture all changes made to the database since the last full or previous log backup. By default, the log backup also reclaims the space within the transaction log by removing records that are no longer required for recovery purposes. This is typically called “truncating the log”. A log backup cannot be performed unless the database is in full recovery model and an initial full backup has been performed. We will cover recovery models in detail later in the “Configure database recovery models” section in this chapter.

Log backups are the primary mechanism used to manage the transaction log so that it does not grow too large. For busy databases, it is common to back up the transaction log more frequently. The log backup frequency directly impacts your RPO. Backing up the log every 15 minutes means that you lose a maximum of 15 minutes of committed transaction in the worst case scenario, where you cannot perform a tail-log backup. Tail-log backups are discussed in more detail later in the “Perform tail-log backups” section of this chapter.

Important Controlling logging of successful backup operations

By default, every successful backup operation records an entry in the database engine’s error log. If log backups are performed very frequently, these messages can result in excessive error log growth and make troubleshooting errors more difficult due to the size of the error log. Consider suppressing these messages using trace flag 3226. This trace flag applies to all backup operations. Unsuccessful backup operations are still logged to the error log.

For more information on trace flags and trace flag 3226 visit https://docs.microsoft.com/en-us/sql/t-sql/database-console-commands/dbcc-traceon-trace-flags-transact-sql

Perform transaction log backups when any of the following apply.

![]() Your database has smaller RPO than the frequency of your full/differential backups.

Your database has smaller RPO than the frequency of your full/differential backups.

![]() You need to recover the database up until the time that it crashed.

You need to recover the database up until the time that it crashed.

![]() You need point-in-time recovery.

You need point-in-time recovery.

![]() You need to ensure that the database’s transaction log does not grow too large.

You need to ensure that the database’s transaction log does not grow too large.

Use the following statement to perform a log backup:

BACKUP LOG { database_name | @database_name_var }

TO <backup_device> [ '...n ]

[ <MIRROR TO clause> ] [ next-mirror-to ]

[ WITH { <general_WITH_options> | <log-specific_optionspec> } [ '...n ] ]

[;]

For most databases a combination of full, differential and log backups are sufficient. However, for more “exotic” use cases you can leverage file backups that do not backup the entire database. Such use cases make great exam questions although they are not commonly “seen in the wild.” Let’s have a look at how you can perform file and partial backups of your databases next. Remember that they typically all require log backups so as to synchronize the database to a consistent state.

Performing file backups

File backups are typically used where a multi-file database is very large and cannot be completely backed up in the existing maintenance window. Consequently, you rotate the backup of the various files that make up your database at different time intervals.

Perform file backups in the following scenarios:

![]() You have a very large database and plan to rotate the backups of the files that make up the database.

You have a very large database and plan to rotate the backups of the files that make up the database.

![]() Your database is made up of different files, some of which might contain read-only data, or data that is rarely modified. In this case you can back up the different files at different frequencies.

Your database is made up of different files, some of which might contain read-only data, or data that is rarely modified. In this case you can back up the different files at different frequencies.

Use the following statement to perform a file/filegroup backup:

BACKUP DATABASE { database_name | @database_name_var }

<file_or_filegroup> [ '...n ]

TO <backup_device> [ '...n ]

[ <MIRROR TO clause> ] [ next-mirror-to ]

[ WITH { DIFFERENTIAL | <general_WITH_options> [ '...n ] } ]

[;]

File backups have been supported in SQL Server for a very long time and were probably designed for large databases. We will examine how you can backup very large databases in the”Backup VLDBs” section later in this chapter.

Performing partial backups

Partial backups were introduced in SQL Server 2012 to make backing up very large databases that contain one or more read-only filegroups easier. By default, partial backups do not backup up the read-only filegroups, although read-only file groups can be optionally added to a partial backup. SQL Server Management Studio and the Maintenance Plan Wizard do not support partial backups.

A typical scenario is a very large database that has a read-only filegroup used to store archive data. That archive data is substantial in size and modified only annually. As a result there is no need to back up the read-only filegroup at the same frequency as the full backups.

Use the following statement to perform a partial backup:

BACKUP DATABASE { database_name | @database_name_var }

READ_WRITE_FILEGROUPS [ ' <read_only_filegroup> [ '...n ] ]

TO <backup_device> [ '...n ]

[ <MIRROR TO clause> ] [ next-mirror-to ]

[ WITH { DIFFERENTIAL | <general_WITH_options> [ '...n ] } ]

[;]

Use backup options

Now that we have covered the different types of backups supported in SQL Server we need to examine the important backup options. The exam is going to test your ability to understand these options and use them in the appropriate circumstances. Backup questions in the exam typically have Transact-SQL statements as answer choices and not screen captures.

The BACKUP statement has a large number of WITH options. For the exam, make sure you understand what the following options do and when to use them:

![]() CHECKSUM | NO_CHECKSUM These options help ensure that your backup can be successfully restored without errors. The CHECKSUM option specifies that the backup operation verifies that each page of the database does not have a checksum or torn page error, if those database options are enabled and available. It also generates a checksum for the entire backup. The default behavior is NO_CHECKSUM, which does not validate pages and does not generate a backup checksum. Page validation and checksum generation consumes more processor resources during the backup operation. But their value is important in disaster recovery scenarios.

CHECKSUM | NO_CHECKSUM These options help ensure that your backup can be successfully restored without errors. The CHECKSUM option specifies that the backup operation verifies that each page of the database does not have a checksum or torn page error, if those database options are enabled and available. It also generates a checksum for the entire backup. The default behavior is NO_CHECKSUM, which does not validate pages and does not generate a backup checksum. Page validation and checksum generation consumes more processor resources during the backup operation. But their value is important in disaster recovery scenarios.

![]() CONTINUE_AFTER_ERROR | STOP_ON_ERROR These options tell the database engine what to do in the case of a backup operation encountering a page checksum error. The CONTINUE_AFTER_ERROR option tells the backup operation to continue on if it encounters any page validation errors. The default option is STOP_ON_ERROR, which instructs the backup operation to stop.

CONTINUE_AFTER_ERROR | STOP_ON_ERROR These options tell the database engine what to do in the case of a backup operation encountering a page checksum error. The CONTINUE_AFTER_ERROR option tells the backup operation to continue on if it encounters any page validation errors. The default option is STOP_ON_ERROR, which instructs the backup operation to stop.

![]() COPY_ONLY This specifies that the backup is a copy only backup, which means your backup sequence is not affected. For all intents and purposes the backup operation did not occur. For a full backup, a copy only option does not reset the DMC, so subsequent differential backups are not affected. For a log backup the copy only option does not truncate the transaction log, so the log chain is not broken. Copy only backups are typically used to refresh non-production environments with production backups when the existing backup sequence should not be impacted. A copy only backup is still recorded in the ERRORLOG.

COPY_ONLY This specifies that the backup is a copy only backup, which means your backup sequence is not affected. For all intents and purposes the backup operation did not occur. For a full backup, a copy only option does not reset the DMC, so subsequent differential backups are not affected. For a log backup the copy only option does not truncate the transaction log, so the log chain is not broken. Copy only backups are typically used to refresh non-production environments with production backups when the existing backup sequence should not be impacted. A copy only backup is still recorded in the ERRORLOG.

![]() COMPRESSION | NO_COMPRESSION Backup compression has been available since the SQL Server 2008 Enterprise Edition and the SQL Server 2008 R2 Standard Edition. The COMPRESSION option enables backup compression. The NO_COMPRESSION option explicitly disables backup compression. The compression level cannot be controlled. Backup compression can consume extra processor resources so be careful with its usage in high-performance environments. The default behavior of the database engine is not to use backup compression. Setting the backup compression default server configuration option can change this default.

COMPRESSION | NO_COMPRESSION Backup compression has been available since the SQL Server 2008 Enterprise Edition and the SQL Server 2008 R2 Standard Edition. The COMPRESSION option enables backup compression. The NO_COMPRESSION option explicitly disables backup compression. The compression level cannot be controlled. Backup compression can consume extra processor resources so be careful with its usage in high-performance environments. The default behavior of the database engine is not to use backup compression. Setting the backup compression default server configuration option can change this default.

![]() DESCRIPTION This option allows you to describe the backup set using up to 255 characters.

DESCRIPTION This option allows you to describe the backup set using up to 255 characters.

![]() ENCRYPTION | NO_ENCRYPTION These options controls whether the backup should be encrypted. The default behavior is the same as the NO_ENCRYPTION option, which is not to encrypt. When you encrypt a backup you need to specify which encryption algorithm to use. The following encryption algorithms are supported:

ENCRYPTION | NO_ENCRYPTION These options controls whether the backup should be encrypted. The default behavior is the same as the NO_ENCRYPTION option, which is not to encrypt. When you encrypt a backup you need to specify which encryption algorithm to use. The following encryption algorithms are supported:

![]() AES_128

AES_128

![]() AES_192

AES_192

![]() AES_256

AES_256

![]() TRIPLE_DES_3KEY

TRIPLE_DES_3KEY

When you encrypt a backup you also have to specify the encryptor using one of the following options:

![]() SERVER CERTIFICATE = Encryptor_Name

SERVER CERTIFICATE = Encryptor_Name

![]() SERVER ASYMMETRIC KEY = Encryptor_Name

SERVER ASYMMETRIC KEY = Encryptor_Name

![]() EXPIREDATE | RETAIN_DAYS These two options allow you to control when the backup set expires and can be subsequently overwritten. RETAINSDAYS takes precedence over EXPIREDATE.

EXPIREDATE | RETAIN_DAYS These two options allow you to control when the backup set expires and can be subsequently overwritten. RETAINSDAYS takes precedence over EXPIREDATE.

![]() FORMAT | NO FORMAT The FORMAT option is destructive as it causes a new media set to be created. All existing backup sets are unrecoverable. If you format a single tape that belongs to an existing striped media set, the entire media set is useless. The default option, NOFORMAT, preserves the existing media header and backup sets on the media volumes used by the backup.

FORMAT | NO FORMAT The FORMAT option is destructive as it causes a new media set to be created. All existing backup sets are unrecoverable. If you format a single tape that belongs to an existing striped media set, the entire media set is useless. The default option, NOFORMAT, preserves the existing media header and backup sets on the media volumes used by the backup.

![]() INIT | NOINIT The INIT option specifies that all backup sets should be overwritten. The media header is preserved. The existing backup sets are not overwritten if the EXPIRYDATE/RETAINDAYSA have not expired, or if the backup set name provided does not match the one in the backup media. The NOINIT option, which is the default, specifies that the backup set be appended to the existing ones in the media set.

INIT | NOINIT The INIT option specifies that all backup sets should be overwritten. The media header is preserved. The existing backup sets are not overwritten if the EXPIRYDATE/RETAINDAYSA have not expired, or if the backup set name provided does not match the one in the backup media. The NOINIT option, which is the default, specifies that the backup set be appended to the existing ones in the media set.

![]() NAME This option gives the backup set a name. Up to 128 characters can be used.

NAME This option gives the backup set a name. Up to 128 characters can be used.

![]() MEDIADESCRIPTION This option allows you to describe the media set. It is limited to 255 characters.

MEDIADESCRIPTION This option allows you to describe the media set. It is limited to 255 characters.

![]() MEDIANAME This allows you to use up to 128 characters to give the media set a name.

MEDIANAME This allows you to use up to 128 characters to give the media set a name.

![]() RETAINDAYS This option allows you to control when the backup set expires and can be subsequently overwritten.

RETAINDAYS This option allows you to control when the backup set expires and can be subsequently overwritten.

![]() SKIP | NOSKIP The SKIP option specifies that the backup operation should ignore the “safety check” that normally checks the backup set’s expiration date or name before overwriting it.

SKIP | NOSKIP The SKIP option specifies that the backup operation should ignore the “safety check” that normally checks the backup set’s expiration date or name before overwriting it.

![]() STATS This controls at what percentage intervals the database engine should display a message indicating the progress of the backup operation. The default value is 10, which means you are notified whenever another 10 percent of the backup operation completes.

STATS This controls at what percentage intervals the database engine should display a message indicating the progress of the backup operation. The default value is 10, which means you are notified whenever another 10 percent of the backup operation completes.

Furthermore, the BACKUP statement supports the ability to back up the same data/log to a number of mirrored backup devices via the MIRROR TO clause. The MIRROR TO clause must have the same type and number of the backup devices as the TO clause. A maximum of three MIRROR TO clauses can be used, so a total of four mirrors is possible per media set. The primary reason for using mirrored media sets is to provide redundancy at the backup device level.

Listing 2-2 shows a full backup operation that has been mirrored to a number of different servers.

LISTING 2-2 Mirrored backups

BACKUP DATABASE WorldWideImporters

TO DISK = 'B:SQLBackupWorldWideImporters.bak'

MIRROR TO DISK = '\DEVSERVERSQLBackupSQLBackupWorldWideImporters.bak''

MIRROR TO DISK = '\TESTSERVERSQLBackupSQLBackupWorldWideImporters.bak''

MIRROR TO DISK = '\STAGINGSERVERSQLBackupSQLBackupWorldWideImporters.bak''

WITH FORMAT;

When backing up a large database it can be useful to know how far an executing backup operation has come and how long it will take to complete. You can query the [sys].[dm_exec_requests] dynamic management view (DMV) to monitor a backup operation’s progress. Use the query in Listing 2-3 to show the progress of a running BACKUP operation:

LISTING 2-3 Progress of backup operation

SELECT session_id' db_name(database_id) as database_name'

start_time' command' percent_complete' estimated_completion_time

FROM sys.dm_exec_requests

WHERE command LIKE 'backup %';

Perform database snapshots

Database snapshots are a read-only, static view of a database at the point-in-time when the database snapshot was taken. A database snapshot is a sparse file that is created separately from the database. This snapshot file holds the old versions of the database’s pages as data in the database is modified. A database can have multiple database snapshots, and each database snapshot has a unique name. Database snapshots have to be removed explicitly.

Database snapshots make great questions in the exam. If you don’t know how database snapshots work at the database engine level, you might easily choose them as an answer when they are clearly not the correct answer choice. Database snapshots work at the page level. Whenever a page is modified its pre-modified version is written to the snapshot file. Consequently, the database snapshot will consume more disk space as more data is modified within the database. A database can have multiple database snapshots. Although the predominant use case for database snapshots is for reporting purposes, they can be used for safeguarding against user mistakes. Reverting a database snapshot is quicker in most cases than restoring a database and replaying all log backups, up until the user mistake is made. For example, you might want to take a database snapshot before you execute some sort of end-of-day batch process. If an error occurs, or you decide you need to roll back this batch process, you can simply revert the database back to the time of the database snapshot. All data modifications after the database snapshot are to be expunged.

Important Database snapshot dependency

Database snapshots are dependent on the parent database. If the parent database’s data files are corrupted or lost, queries against the database snapshot will not work.

Listing 2-4 shows how to create a database snapshot. Ensure you provide a unique, meaningful name to the database snapshot.

LISTING 2-4 Create a database snapshot

CREATE DATABASE WorldWideImporters_20160917

ON (

NAME = WorldWideImporters_Data'

FILENAME = 'R:SQLDataWorldWideImporters_20160917.ss')

AS SNAPSHOT OF [WorldWideImporters];

![]() Exam Tip

Exam Tip

Watch out for any exam items that include database snapshots. If the underlying database files are unavailable you will not be able to revert the database snapshot.

Back up databases to Azure

There are a number of compelling reasons for backing up your database to the cloud, including triple redundancy, off-site location, and cost-effectiveness. SQL Server supports the following tools and features:

![]() Backup to URL

Backup to URL

![]() Backup to Microsoft Azure tool

Backup to Microsoft Azure tool

![]() Managed backup to Microsoft Azure

Managed backup to Microsoft Azure

SQL Server 2012 Service Pack 1 Cumulative Update 2 introduced the capability to back up directly to (and restore from) Microsoft Azure Blob Storage. Both the Backup Task in SQL Server Management Studio and the Maintenance Plan Wizard support backups to Microsoft Azure Blob Storage.

Backing up databases to Microsoft Azure Blob Storage is great if your databases are hosted in Microsoft Azure. For on-premise database solutions, however, you need to take into account your database size, volume of modifications, and upload/download bandwidth.

To back up to Microsoft Azure Blob Storage with the BACKUP statement, use the TO URL clause as show in Listing 2-5.

LISTING 2-5 Backup to URL

-- Create storage account identity and access key

CREATE CREDENTIAL MyCredentialName

WITH IDENTITY = 'MyStorageAccountName',

SECRET = '<MyStorageAccountAccessKey>';

GO

-- Backup database to URL using storage account identity and access key

BACKUP DATABASE MyDB

TO URL = 'https://<MyStorageAccountName>.blob.core.windows.net/<MyStorageAccountContainerN

ame>/MyDB.bak'

WITH CREDENTIAL = 'MyCredentialName'

Need more Review? SQL Server backup to URL

To learn how to configure Microsoft Azure Blob Storage to support SQL Server backup operations to URL visit https://docs.microsoft.com/en-us/sql/relational-databases/backup-restore/sql-server-backup-to-url.

Introduced in SQL Server 2014, the SQL Server Managed Backup to Microsoft Azure feature automatically manages your backups to Microsoft Azure. This feature can be enabled at the instance or database level, through the [smart_admin].[sp_set_instance_backup] or [smart_admin].[sp_set_db_backup] system stored procedures respectively. The database engine then automatically performs full and log backups automatically. Backups are retained for up to 30 days.

Full backups are performed whenever any of the following are true:

![]() The last full backup is over a week old.

The last full backup is over a week old.

![]() The log has grown more than 1GB since the last full backup.

The log has grown more than 1GB since the last full backup.

![]() Log chain is broken.

Log chain is broken.

Log backups are performed whenever any of the following are true:

![]() More than two hours have expired since the last log backup.

More than two hours have expired since the last log backup.

![]() The log has grown in 5MB.

The log has grown in 5MB.

![]() The log backup is behind the full backup.

The log backup is behind the full backup.

Listing 2-6 shows an example of how to configure SQL Server Managed Backup to Microsoft Azure at the instance level.

LISTING 2-6 SQL Server Managed Backup to Microsoft Azure at the instance level

USE [msdb];

GO

EXEC [smart_admin].[sp_set_instance_backup]

@enable_backup=1

,@storage_url = 'https://mystorageaccount.blob.core.windows.net/'

,@retention_days=30

,@credential_name='MyCredential'

,@encryption_algorithm ='AES_256'

,@encryptor_type= 'ServerCertificate'

,@encryptor_name='MyBackupCertificate';

GO

Listing 2-7 shows an example of how to configure SQL Server Managed Backup to Microsoft Azure at the database level.

LISTING 2-7 SQL Server Managed Backup to Microsoft Azure at the instance level

USE [msdb];

GO

EXEC [smart_admin].[sp_set_db_backup]

@database_name='MyDB'

,@enable_backup=1

,@storage_url = 'https://MyStorageAccount.blob.core.windows.net/'

,@retention_days=30

,@credential_name='MyCredential'

,@encryption_algorithm ='NO_ENCRYPTION';

GO

The Microsoft SQL Server Backup to Microsoft Azure Tool is an externally available tool that enables backup to Azure Blob Storage and encrypts and compresses SQL Server backups stored locally or in the cloud. It works with all versions and editions of SQL Server, even if they do not support compression and encryption.

Need more Review? Microsoft SQL Server Backup to Microsoft Azure Tool

You can learn about and download the Microsoft SQL Server Backup to Microsoft Azure Tool from https://www.microsoft.com/en-au/download/details.aspx?id=40740.

Back up VLDBs

As your database grows in size, the backup times, and more importantly the restore times, increase because both the backup and restore duration is dependent on the speed of your I/O subsystems. There are a number of techniques that can be used to decrease the time taken:

![]() Potentially implement data compression on tables within the database to reduce the size of the database. In most cases PAGE compression is superior to ROW compression.

Potentially implement data compression on tables within the database to reduce the size of the database. In most cases PAGE compression is superior to ROW compression.

![]() Assess taking advantage of columnstore indexes, which may substantially reduce the size of the tables. With columnstore indexes the table size can be potentially substantially reduced, as an example, from 120GB to 4GB. Be aware that columnstore indexes are designed primarily for data warehouse workloads where queries scan large volumes of data. For an On-Line Transaction Processing (OLTP) database you might still be able to use columnstore indexes on large tables that are rarely, if ever, queried. Examples of tables that fit this profile include auditing and logging tables.

Assess taking advantage of columnstore indexes, which may substantially reduce the size of the tables. With columnstore indexes the table size can be potentially substantially reduced, as an example, from 120GB to 4GB. Be aware that columnstore indexes are designed primarily for data warehouse workloads where queries scan large volumes of data. For an On-Line Transaction Processing (OLTP) database you might still be able to use columnstore indexes on large tables that are rarely, if ever, queried. Examples of tables that fit this profile include auditing and logging tables.

![]() Consider moving old data out of your very large database into an archive database. Old data is infrequently queried and should not live in your OLTP database forever.

Consider moving old data out of your very large database into an archive database. Old data is infrequently queried and should not live in your OLTP database forever.

![]() Take advantage of backup compression to reduce the size of the backup set.

Take advantage of backup compression to reduce the size of the backup set.

![]() Back up your database to multiple backup devices. The database engine is able to consume up to 64 threads to concurrently back up the extents of your database to 64 backup devices, one thread per device. You also need to maximize the I/O throughput at the database’s storage subsystem level.

Back up your database to multiple backup devices. The database engine is able to consume up to 64 threads to concurrently back up the extents of your database to 64 backup devices, one thread per device. You also need to maximize the I/O throughput at the database’s storage subsystem level.

Most of these techniques focus on reducing the size of your database, which in turn reduces the duration of your backup and restore operations. At some point in time these techniques are not sufficient because your database evolves into what is sometimes referred to as a very large database or VLDB.

A VLDB can be defined as a database whose restore or backup time SLAs cannot be easily met through faster networking/storage hardware resources, or by using any of the techniques discussed above. Additional consideration needs to be given to designing a backup strategy for such VLDBs.

For example, a 4TB database can easily take longer than one day to restore, due to the backup size, storage subsystem speed, networking infrastructure, backup compression, and the number and size of transaction log backups that also need to be to restored. (This is why you should periodically test the time taken by your restore procedure to ensure that your RTO can be met.)

Although VLDBs are not really that common in the field, expect the exam to have some questions about how to best backup and restore a VLDB. You will most likely be either asked to design a backup strategy for a VLDB or provide the series of restore steps that need to be performed to recover a VLDB in the shortest period of time.

The most common and easiest technique to reduce your backup (and restore) times for VLDBs is to take advantage of filegroups and re-architect your VLDB from a single primary file into multiple data files. Each secondary data file, or set of data files, would be contained in its own filegroup. Each file should ideally be located on a separate disk. That way if any single disk fails, the damage is contained to that file/filegroup. To recover from the disaster, restore just the damaged file/filegroup, as opposed to the entire database. This technique can substantially reduce the time it takes to recover from a disaster, depending on what has failed. It should also reduce the backup duration because you do not backup the same volume of data.

Important Configuring the primary data file for a VLDB

When creating a VLDB it is best practice to have no data within the primary data file of the database. This primary data file only contains the schema and code modules of our database. This ensures that the primary data file can be restored as quickly as possible when needed in a disaster recovery scenario. A database is never available until its primary data files are restored.

One commonly used technique is to simply spread your VLDB across a number of files. You would then back up only one of the files in your VLDB nightly, and rotate between the files over subsequent nights.

Let’s consider the following scenario of 4TB VLDB that has six separate data files as shown in Listing 2-8. The VLDB has a filegroup called [DATA] that consists of four 1TB secondary data files. This [DATA] filegroup is configured as the default filegroup. This helps ensure that there’s no data in the [PRIMARY] file group. Notice how these six data files are located on separate drives to help ensure that we do not lose two 1 TB data files at once. (Let’s assume that there is no need to perform file backups on the weekend because this organization only operates during weekdays.)

LISTING 2-8 Creating a VLDB

CREATE DATABASE [VLDB]

ON [PRIMARY]

(NAME = N'VLDB_System'' FILENAME = N'D:SQLDataVLDB_System.mdf'' SIZE = 100MB)'

FILEGROUP [DATA]

(NAME = N'VLDB_Data1'' FILENAME = N'E:SQLDataVLDB_Data1.ndf'' SIZE = 1TB)'

(NAME = N'VLDB_Data2'' FILENAME = N'F:SQLDataVLDB_Data2.ndf'' SIZE = 1TB)'

(NAME = N'VLDB_Data3'' FILENAME = N'G:SQLDataVLDB_Data3.ndf'' SIZE = 1TB)'

(NAME = N'VLDB_Data4'' FILENAME = N'H:SQLDataVLDB_Data4.ndf'' SIZE = 1TB)'

(NAME = N'VLDB_Data5'' FILENAME = N'I:SQLDataVLDB_Data5.ndf'' SIZE = 1TB)

LOG ON

(NAME = N'VLDB_log'' FILENAME = N'L:SQLLogVLDB_Log.ldf' ' SIZE = 100GB) ;

GO

ALTER DATABASE [VLDB] MODIFY FILEGROUP [DATA] DEFAULT;

The VLDB in this scenario could potentially use the following backup strategy:

![]() Back up the primary data file nightly.

Back up the primary data file nightly.

![]() Back up the [VLDB_Data1] file on Monday.

Back up the [VLDB_Data1] file on Monday.

![]() Back up the [VLDB_Data2] file on Tuesday.

Back up the [VLDB_Data2] file on Tuesday.

![]() Back up the [VLDB_Data3] file on Wednesday.

Back up the [VLDB_Data3] file on Wednesday.

![]() Back up the [VLDB_Data4] file on Thursday.

Back up the [VLDB_Data4] file on Thursday.

![]() Back up the [VLDB_Data5] file on Friday.

Back up the [VLDB_Data5] file on Friday.

![]() Back up the transaction log every 15 minutes.

Back up the transaction log every 15 minutes.

If a single data drive failed, it would be sufficient to replace the damaged disk, restore the failed data file, and replay the transaction log until the data file is synchronized with the rest of the database.

The problem with this approach is that all of the data would be spread across all of the files. If a single data file is lost in a disaster, nobody can access any of the data within the VLDB until the restore and recover have completed. So you have improved your backup duration, and your RPO potentially, but not necessarily your availability (or RTO).

SQL Server supports a feature called partial availability. With partial availability users can still access portions of the database even though certain filegroups are unavailable. For partial availability to work, the primary data file and transaction log files must always be available. This approach also works with partitioning where a single table is split into multiple partitions, and each partition is stored in a separate filegroup.

Consequently, a better technique is to locate your data more intelligently on the filegroups within the VLDB. This requires more domain knowledge about the database and how your organization uses the VLDB.

It is common for VLDBs to have very large tables that contain the following types of data:

![]() Archive/historical data

Archive/historical data

![]() Audit information

Audit information

![]() Logging information

Logging information

![]() Read-only data

Read-only data

![]() Reference data

Reference data

These tables might take up the majority of the capacity within the database. This data might not be as important as the rest of the data and can consequently be restored last. Also, it might not need to be backed up as frequently. In these cases, place these tables onto their own separate filegroups. Again, back up these filegroups at a separate frequency from the rest of the database. Back up read-only filegroups only when they are modified.

An alternative technique in deciding what tables should be split into their own filegroups takes into account the relative importance of the tables in the database.

Consider an online shopping VLDB with hundreds of tables that have the following tables:

![]() [Orders]

[Orders]

![]() [OrderHistory]

[OrderHistory]

![]() [Products]

[Products]

![]() [Customers]

[Customers]

In the case of a disaster incident it is critical to get these tables restored as soon as possible. You want to minimize the downtime for customer shopping! The database could be restored in the following order:

1. Primary data file

2. Products

3. Customers

4. Orders (at this stage customers could begin placing new orders)

5. Order history

6. The rest of the database

At some point in time a database can be too large to fail. At that stage, you need to consider implementing the appropriate high availability technology, such as Availability Groups and/or Log Shipping. These high availability technologies maintain multiple, separate copies of the database, which protects against instance and storage failure

Manage transaction log backups

So far we have looked at how you perform database backups and the different backup operations supported. Remember, no database backup operation automatically manages the transaction log for you by truncating it. In most cases the transaction logs for all your databases are typically co-located on the same disk. You don’t want the transaction log of an unimportant database filling up the disk and effectively crashing all your mission-critical databases as a result. Consequently it is important to manage the transaction log to both minimize the amount of data loss in the event of a disaster incident, and to ensure that the transaction log does not grow out of control and fill up the disk on which it is located.

In this section we will examine how you perform log backups and what to do in the case of an incident where your transaction log fills up. We will also look at some transaction log configuration options and how they will impact your disaster recovery strategy. But before we can look at how to perform log backups we need to look at the crucial concept of database recovery models, which control how much information is logged to the transaction log and potentially what amount of data you will lose in the case of a disaster occurring.

In the exam you should expect questions on what recovery models to use in given scenarios, how to deal with a full transaction log incidents and broken log chains. An exam question on tail-logs is virtually guaranteed, so make sure you understand what tail-logs, how to back them up and the recovery scenarios in which they are used.

Configure database recovery models

Understanding the different recovery models that are supported by SQL Server is critical because they directly impact your disaster recovery planning and how much data you can potentially lose in the case of a disaster. They also impact your high-availability solution design, capacity planning, and transaction log management.

SQL Server supports the following recovery models:

![]() Full Under the FULL recovery model the database engine fully logs every operation in the transaction log for recoverability purposes. Transaction log backups have no dependency on the database’s data files. Under normal operations on a correctly managed SQL Server instance, no data loss occurs. The full recovery model is typically used in production environments.

Full Under the FULL recovery model the database engine fully logs every operation in the transaction log for recoverability purposes. Transaction log backups have no dependency on the database’s data files. Under normal operations on a correctly managed SQL Server instance, no data loss occurs. The full recovery model is typically used in production environments.

![]() Bulk-Logged The BULK_LOGGED recovery model reduces the amount of logging information written to the transaction log during minimally logged operations by only recording the extents that were modified. Consequently, transaction log backups rely on the database’s data files that were modified by the minimally logged operations being available during the backup operation. The bulk logged recovery model is not typically used in production environments.

Bulk-Logged The BULK_LOGGED recovery model reduces the amount of logging information written to the transaction log during minimally logged operations by only recording the extents that were modified. Consequently, transaction log backups rely on the database’s data files that were modified by the minimally logged operations being available during the backup operation. The bulk logged recovery model is not typically used in production environments.

![]() Simple With the SIMPLE recovery model the database engine automatically reclaims the space used by operations in the transaction log. No transaction log backups are possible. The simple recovery model is typically used in non-production environments, such as development, user acceptance, and testing.

Simple With the SIMPLE recovery model the database engine automatically reclaims the space used by operations in the transaction log. No transaction log backups are possible. The simple recovery model is typically used in non-production environments, such as development, user acceptance, and testing.