This chapter describes the main principles and architecture choices behind the libvirt API and the Python libvirt module: the object model, the driver model, and the management options.

Object Model

The goal of both the libvirt API and the Python libvirt module is to provide all the functions necessary for deploying and managing virtual machines. This includes the management of both the core hypervisor functions and the host resources that are required by virtual machines, such as networking, storage, and PCI/USB devices. Most of the classes and methods exposed by libvirt have a pluggable internal back end, providing support for different underlying virtualization technologies and operating systems. Thus, the extent of the functionality available from a particular API or method is determined by the specific hypervisor driver in use and the capabilities of the underlying virtualization technology.

Hypervisor Connections

A connection is the primary, or top-level, object in the libvirt API and Python libvirt module. An instance of this object is required before attempting to use almost any of the classes or methods. A connection is associated with a particular hypervisor, which may be running locally on the same machine as the libvirt client application or on a remote machine over the network. In all cases, the connection is represented by an instance of the virConnect class and identified by a URI. The URI scheme and path define the hypervisor to connect to, while the host part of the URI determines where it is located. Refer to the section called “URI Formats” in Chapter 3 for a full description of valid URIs.

An application is permitted to open multiple connections at the same time, even when using more than one type of hypervisor on a single machine. For example, a host may provide both KVM full machine virtualization and Linux containers. A connection object can be used concurrently across multiple threads. Once a connection has been established, it is possible to obtain handles to other managed objects or create new managed objects, as discussed in the next section, “Guest Domains.”
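As a minimal sketch of the connection lifecycle described above, the following helper opens a connection by URI, reads a few of its properties, and closes it again. The helper name `describe_connection` and its `opener` parameter are illustrative assumptions, not part of libvirt; by default the helper calls `libvirt.open()`, which requires the libvirt Python bindings to be installed.

```python
def describe_connection(uri, opener=None):
    """Open a hypervisor connection, report basic facts, and close it.

    `opener` is a hypothetical hook for this sketch; when it is omitted,
    libvirt.open() is used.
    """
    if opener is None:
        import libvirt  # imported lazily; needs the libvirt Python bindings
        opener = libvirt.open

    conn = opener(uri)  # with libvirt.open(), this is a virConnect instance
    try:
        return {
            "uri": conn.getURI(),            # canonical URI of the connection
            "driver": conn.getType(),        # name of the hypervisor driver
            "hostname": conn.getHostname(),  # host the hypervisor runs on
        }
    finally:
        conn.close()  # always release the connection
```

For example, `describe_connection("qemu:///system")` would describe the local privileged QEMU driver, assuming libvirtd is running and the caller is allowed to connect.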

Guest Domains

ID: This is a positive integer, unique among the running guest domains on a single host. An inactive domain does not have an ID.

Name: This is a short string, unique among all the guest domains on a single host, both running and inactive. To ensure maximum portability between hypervisors, it is recommended that names include only alphanumeric (a–z, A–Z, 0–9), hyphen (-), and underscore (_) characters.

UUID: This consists of 16 unsigned bytes, guaranteed to be unique among all guest domains on any host. RFC 4122 defines the format for UUIDs and provides a recommended algorithm for generating UUIDs with guaranteed uniqueness.
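The name and UUID rules above can be illustrated with the Python standard library alone. This is only a sketch: the function names are hypothetical, and the regular expression encodes the portability recommendation (letters, digits, hyphen, underscore) rather than any check that libvirt itself performs.

```python
import re
import uuid

# Letters, digits, hyphen, and underscore only (the portability recommendation).
PORTABLE_NAME = re.compile(r"[A-Za-z0-9_-]+")


def is_portable_name(name):
    """Return True if `name` uses only the recommended characters."""
    return PORTABLE_NAME.fullmatch(name) is not None


def uuid_bytes_to_string(raw):
    """Render a 16-byte UUID in the textual form defined by RFC 4122."""
    if len(raw) != 16:
        raise ValueError("a UUID is exactly 16 bytes long")
    return str(uuid.UUID(bytes=raw))


def new_random_uuid_bytes():
    """Generate a random (version 4) UUID as 16 raw bytes."""
    return uuid.uuid4().bytes
```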

A guest domain may be transient or persistent. A transient guest domain can be managed only while it is running on the host. Once it is powered off, all traces of it will disappear. A persistent guest domain has its configuration maintained in a data store on the host by the hypervisor, in an implementation-defined format. Thus, when a persistent guest is powered off, it is still possible to manage its inactive configuration. A transient guest can be turned into a persistent guest while it is running by defining a configuration for it.
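The identifiers and the transient/persistent distinction can be read back from domain objects. The sketch below assumes `conn` is an open connection (a `virConnect` instance) and uses the `listAllDomains()`, `ID()`, `name()`, `UUIDString()`, `isActive()`, and `isPersistent()` methods of the Python bindings; the helper names themselves are hypothetical.

```python
def summarize_domain(dom):
    """Collect the identifiers and state flags of one guest domain."""
    active = bool(dom.isActive())
    return {
        # An inactive domain has no ID, so report None instead.
        "id": dom.ID() if active else None,
        "name": dom.name(),
        "uuid": dom.UUIDString(),
        "active": active,
        "persistent": bool(dom.isPersistent()),
    }


def list_domains(conn):
    """Summarize every domain, running or inactive, known to `conn`."""
    return [summarize_domain(dom) for dom in conn.listAllDomains()]
```

A running domain that reports `persistent` as `False` is transient: it will not appear in this list once it has been powered off.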

Virtual Networks

Remain isolated to the host.

Allow routing of traffic off-node via the active network interfaces of the host OS. This includes the option to apply NAT to IPv4 traffic.

Name: This is a short string, unique among all the virtual networks on a single host, both running and inactive. For maximum portability between hypervisors, applications should use only alphanumeric (a–z, A–Z, 0–9), hyphen (-), and underscore (_) characters in names.

UUID: This consists of 16 unsigned bytes, guaranteed to be unique among all the virtual networks on any host. RFC 4122 defines the format for UUIDs and provides a recommended algorithm for generating UUIDs with guaranteed uniqueness.

A virtual network can be transient or persistent. A transient virtual network can be managed only while it is running on the host. When taken offline, all traces of it will disappear. A persistent virtual network has its configuration maintained in a data store on the host, in an implementation-defined format. Thus, when a persistent network is brought offline, it is still possible to manage its inactive configuration. A transient network can be turned into a persistent network on the fly by defining a configuration for it.

After the installation of libvirt, every host will get a single virtual network instance called default, which provides DHCP services to guests and allows NAT’d IP connectivity to the host’s interfaces. This service is of most use to hosts with intermittent network connectivity such as laptops using wireless networking.
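A short sketch of inspecting a virtual network follows. It assumes `conn` is an open connection and that the network named `default` exists on the host; `networkLookupByName()`, `isActive()`, `isPersistent()`, and `autostart()` are methods of the Python bindings, while the helper name is hypothetical.

```python
def network_status(conn, name="default"):
    """Report identifiers and state flags for one virtual network."""
    net = conn.networkLookupByName(name)  # raises an error if no such network
    return {
        "name": net.name(),
        "uuid": net.UUIDString(),
        "active": bool(net.isActive()),          # currently running?
        "persistent": bool(net.isPersistent()),  # has a stored configuration?
        "autostart": bool(net.autostart()),      # started when the host boots?
    }
```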

Refer to Chapter 6 for further information about using virtual network objects.

Storage Pools

Name: This is a short string, unique among all the storage pools on a single host, both running and inactive. For maximum portability between hypervisors, applications should use only alphanumeric (a–z, A–Z, 0–9), hyphen (-), and underscore (_) characters in names.

UUID: This consists of 16 unsigned bytes, guaranteed to be unique among all the storage pools on any host. RFC 4122 defines the format for UUIDs and provides a recommended algorithm for generating UUIDs with guaranteed uniqueness.

A storage pool can be transient or persistent. A transient storage pool can be managed only while it is running on the host. When it is deactivated, all traces of it will disappear. A persistent storage pool has its configuration maintained in a data store on the host by the hypervisor, in an implementation-defined format. Thus, when a persistent storage pool is deactivated, it is still possible to manage its inactive configuration. A transient pool can be turned into a persistent pool on the fly by defining a configuration for it.

Refer to Chapter 5 for further information about using storage pool objects.

Storage Volumes

Name: This is a short string, unique among all storage volumes within a storage pool. For maximum portability between implementations, applications should use only alphanumeric (a–z, A–Z, 0–9), hyphen (-), and underscore (_) characters in names. The name is not guaranteed to be stable across reboots or between hosts, even if the storage pool is shared between hosts.

Key: This is a unique string, of arbitrary printable characters, intended to uniquely identify the volume within the pool. The key is intended to be stable across reboots and between hosts.

Path: This is a file system path referring to the volume. The path is unique among all the storage volumes on a single host. If the storage pool is configured with a suitable target path, the volume path may be stable across reboots and between hosts.
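The three volume identifiers can be listed side by side for a pool. This sketch assumes `pool` is an active storage pool object (a `virStoragePool` instance) and uses the `listAllVolumes()`, `name()`, `key()`, and `path()` methods of the Python bindings; the helper name is hypothetical.

```python
def volume_identifiers(pool):
    """Return (name, key, path) for every volume in a storage pool."""
    return [
        (vol.name(), vol.key(), vol.path())
        for vol in pool.listAllVolumes()
    ]
```

Because the key is the identifier intended to be stable across reboots and hosts, an application that stores references to volumes may prefer it over the name.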

Refer to Chapter 5 for further information about using storage volume objects.

Host Devices

Host devices provide a view to the hardware devices available on the host machine. This covers both the physical USB or PCI devices and the logical devices these provide, such as NICs, disks, disk controllers, sound cards, and so on. Devices can be arranged to form a tree structure, allowing relationships to be identified.

A host device is represented by an instance of the virNodeDev class and has one general identifier (a name), though specific device types may have their own unique identifiers. A name is a short string, unique among all the devices on the host. The naming scheme is determined by the host operating system. The name is not guaranteed to be stable across reboots.

Physical devices can be detached from the host OS driver, which implicitly removes all associated logical devices. If the device is removed, then it will also be removed from any domain that references it. Physical device information is also useful when working with the storage and networking APIs to determine what resources are available to configure. Host devices are currently not covered in this guide.

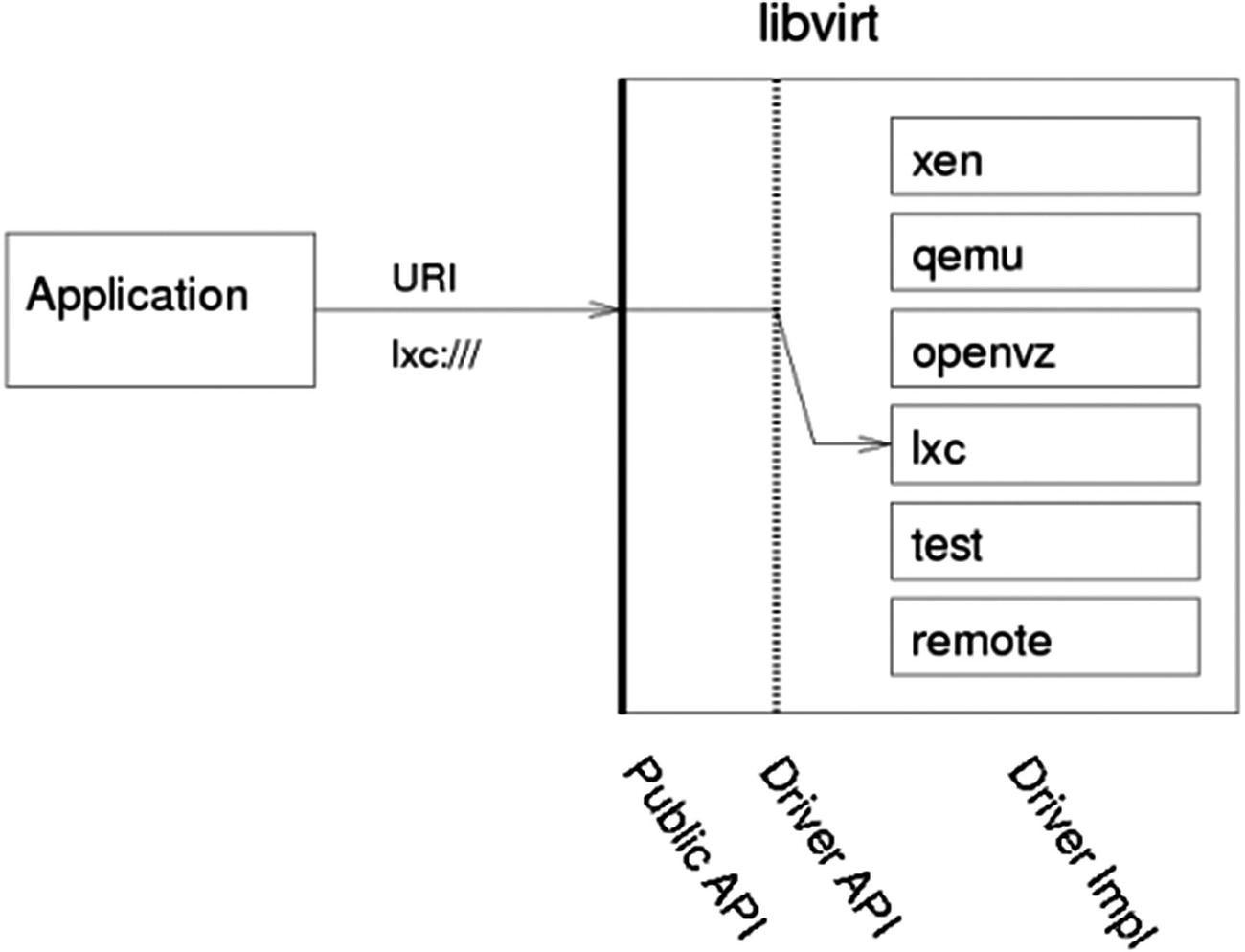

Driver Model

The libvirt library exposes a guaranteed stable API and ABI, both of which are decoupled from any particular virtualization technology. In addition, many of the APIs have associated XML schemata, which are considered part of the stable ABI guarantee. Internally, there are multiple implementations of the public ABI, each targeting a different virtualization technology. Each implementation is referred to as a driver. When obtaining an instance of the virConnect class, the application developer can provide a URI to determine which hypervisor driver is activated.

No two virtualization technologies have the same functionality. The libvirt goal is not to restrict applications to a lowest common denominator since this would result in an unacceptably limited API. Instead, libvirt attempts to define a representation of concepts and configuration that is hypervisor agnostic and adaptable to allow future extensions. Thus, if two hypervisors implement a comparable feature, libvirt provides a uniform control mechanism or configuration format for that feature.

Libvirt driver architecture

Internally, a libvirt driver will attempt to utilize whatever management channels are available for the virtualization technology in question. For some drivers, this may require libvirt to run directly on the host being managed, talking to a local hypervisor, while others may be able to communicate remotely over an RPC service. For drivers that have no native remote communication capability, libvirt provides a generic secure RPC service. This is discussed in detail later in this chapter.

Xen: The open source Xen hypervisor provides paravirtualized and fully virtualized machines. A single system driver runs in the Dom0 host talking directly to a combination of the hypervisor, xenstored, and xend. An example local URI scheme is xen:///.

QEMU: This supports any open source QEMU-based virtualization technology, including KVM. A single privileged system driver runs in the host managing QEMU processes. Each unprivileged user account also has a private instance of the driver. An example privileged URI scheme is qemu:///system. An example unprivileged URI scheme is qemu:///session.

UML: This is the User Mode Linux kernel, a pure paravirtualization technology. A single privileged system driver runs in the host managing UML processes. Each unprivileged user account also has a private instance of the driver. An example privileged URI scheme is uml:///system. An example unprivileged URI scheme is uml:///session.

OpenVZ: This is the OpenVZ container-based virtualization technology, using a modified Linux host kernel. A single privileged system driver runs in the host talking to the OpenVZ tools. An example privileged URI scheme is openvz:///system.

LXC: This is the native Linux container-based virtualization technology, available with Linux kernels since 2.6.25. A single privileged system driver runs in the host talking to the kernel. An example privileged URI scheme is lxc:///.

Remote: This is a generic secure RPC service talking to a libvirtd daemon. It provides encryption and authentication using a choice of TLS, x509 certificates, SASL (GSSAPI/Kerberos), and SSH tunneling. The URIs follow the scheme of the desired driver, but with a hostname filled in and a data transport name appended to the URI scheme. An example URI to talk to Xen over a TLS channel is xen+tls://somehostname/. An example URI to talk to QEMU over a SASL channel is qemu+tcp://somehost/system.

Test: This is a mock driver, providing a virtual in-memory hypervisor covering all the libvirt APIs. It facilitates the testing of applications using libvirt by allowing automated tests that exercise libvirt APIs to run without needing to deal with a real hypervisor. An example default URI scheme is test:///default. An example customized URI scheme is test:///path/to/driver/config.xml.
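The URI examples in the driver list all share one shape: a driver name, an optional `+transport` suffix on the scheme, an optional hostname, and a path. The sketch below takes such a URI apart using only the Python standard library; the function name is hypothetical, and libvirt performs its own URI parsing internally.

```python
from urllib.parse import urlsplit


def parse_libvirt_uri(uri):
    """Split a libvirt-style URI into driver, transport, host, and path."""
    parts = urlsplit(uri)
    # The scheme is "driver" or "driver+transport", for example "qemu+ssh".
    driver, _, transport = parts.scheme.partition("+")
    return {
        "driver": driver,
        "transport": transport or None,  # None when no transport is named
        "host": parts.hostname,          # None for local URIs
        "path": parts.path,
    }
```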

Remote Management

While many virtualization technologies provide a remote management capability, libvirt does not assume this and provides a dedicated driver allowing for the remote management of any libvirt hypervisor driver. The driver has a variety of data transports providing considerable security for the data communication. The driver is designed such that there is 100 percent functional equivalence whether talking to the libvirt driver locally or via the RPC service.

In addition to the native RPC service included in libvirt, there are a number of alternatives for remote management that will not be discussed in this book. The libvirt-qpid project provides an agent for the QPid messaging service, exposing all libvirt managed objects and operations over the message bus. This keeps a fairly close, near one-to-one, mapping to the C API in libvirt. The libvirt-CIM project provides a CIM agent that maps the libvirt object model onto the DMTF virtualization schema.

Basic Usage

The server end of the RPC service is provided by the libvirtd daemon, which must be run on the host to be managed. In a default deployment, this daemon listens for connections only on a local UNIX domain socket. In this configuration, a remote libvirt client can use only the SSH tunnel data transport. With a suitable configuration of x509 certificates or SASL credentials, the libvirtd daemon can be told to listen on a TCP socket for direct, nontunneled client connections.

As you can see from the previous examples of libvirt driver URIs, the hostname field in the URI is always left empty for local libvirt connections. To make use of the libvirt RPC driver, only two changes are required to the local URI. At a minimum, a hostname must be specified, at which point libvirt will attempt to use the direct TLS data transport. An alternative data transport can be requested by appending its name to the URI scheme. The URI formats are described in detail in Chapter 3.
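The two changes described above (filling in a hostname and, optionally, appending a transport name to the scheme) can be sketched as a small string transformation. The function name is hypothetical, and the sketch only rewrites the URI text; it does not contact any host.

```python
from urllib.parse import urlsplit, urlunsplit


def to_remote_uri(local_uri, hostname, transport=None):
    """Turn a local URI such as qemu:///system into a remote one."""
    parts = urlsplit(local_uri)
    if parts.netloc:
        raise ValueError("expected a local URI with an empty hostname")
    driver = parts.scheme.partition("+")[0]
    # Append "+transport" only when a transport is explicitly requested.
    scheme = f"{driver}+{transport}" if transport else driver
    return urlunsplit((scheme, hostname, parts.path, parts.query, parts.fragment))
```

For instance, `to_remote_uri("qemu:///system", "somehost", "ssh")` yields `qemu+ssh://somehost/system`, while omitting the transport yields `qemu://somehost/system`, which leaves the choice of transport to the default.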

Data Transports

To cope with the wide variety of deployment environments, the libvirt RPC service supports a number of data transports, all of which can be configured with industry-standard encryption and authentication capabilities.

tls

This is a TCP socket running the TLS protocol on the wire. This is the default data transport if none is explicitly requested, and it uses a TCP connection on port 16514. At a minimum, it is necessary to configure the server with an x509 certificate authority and issue it a server certificate. The libvirtd server can, optionally, be configured to require clients to present x509 certificates as a means of authentication.

tcp

This is a TCP socket without the TLS protocol on the wire. This data transport should not be used on untrusted networks, unless the SASL authentication service has been enabled and configured with a plug-in that provides encryption. The TCP connection is made on port 16509.

unix

This is a local-only data transport, allowing users to connect to a libvirtd daemon running as a different user account. As it is accessible only on the local machine, it is unencrypted. The standard socket names are /var/run/libvirt/libvirt-sock for full management capabilities and /var/run/libvirt/libvirt-sock-ro for a socket restricted to read-only operations.

ssh

The RPC data is tunneled over an SSH connection to the remote machine. It requires that Netcat (nc) be installed on the remote machine and that libvirtd is running with the UNIX domain socket enabled. It is recommended that SSH be configured to not require password prompts to the client application. For example, if you’re using SSH public key authentication, it is recommended that you run an ssh-agent to cache key credentials. GSSAPI is another useful authentication mode for the SSH transport allowing use of a pre-initialized Kerberos credential cache.

ext

This supports any external program that can make a connection to the remote machine by means that are outside the scope of libvirt. If none of the built-in data transports is satisfactory, this allows an application to provide a helper program to proxy RPC data over a custom channel.
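The properties of the five data transports can be summarized in a small lookup table. This is only a restatement of the descriptions above in code form; the type and function names are hypothetical, and `None` marks values that the transport itself does not fix (for example, the `ext` transport depends entirely on the helper program).

```python
from typing import NamedTuple, Optional


class Transport(NamedTuple):
    name: str
    libvirt_port: Optional[int]  # TCP port libvirtd listens on, if any
    encrypted: Optional[bool]    # encryption provided by the transport itself


TRANSPORTS = {
    "tls": Transport("tls", 16514, True),
    "tcp": Transport("tcp", 16509, False),  # SASL may add encryption on top
    "unix": Transport("unix", None, False),  # local-only socket
    "ssh": Transport("ssh", None, True),     # tunneled over an SSH connection
    "ext": Transport("ext", None, None),     # depends on the external program
}


def transport_info(name=None):
    """Look up a transport; tls is the default when none is requested."""
    return TRANSPORTS[name or "tls"]
```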

Authentication Schemes

To cope with the wide variety of deployment environments, the libvirt RPC service supports a number of authentication schemes on its data transports, with industry-standard encryption and authentication capabilities. The choice of authentication scheme is configured by the administrator in the /etc/libvirt/libvirtd.conf file.

sasl

SASL is an industry standard for pluggable authentication mechanisms. Each plug-in has a wide variety of capabilities, and discussion of their merits is outside the scope of this document. For the tls data transport, there is a wide choice of plug-ins, since TLS is providing data encryption for the network channel. For the tcp data transport, libvirt will refuse to use any plug-in that does not support data encryption. This effectively limits the choice to GSSAPI/Kerberos. SASL can optionally be enabled on the UNIX domain socket data transport if strong authentication of local users is required.

polkit

PolicyKit is an authentication scheme suitable for local desktop virtualization deployments, for use only on the UNIX domain socket data transport. It enables the libvirtd daemon to validate that the client application is running within the local X desktop session. It can be configured to allow access to a logged-in user automatically or can prompt them to enter their own password or the superuser (root) password.

x509

Although not strictly an authentication scheme, the TLS data transport can be configured to mandate the use of client x509 certificates. The server can then whitelist the client distinguished names to control access.

Generating TLS Certificates

1. The client checks that it is connecting to the correct server by matching the certificate the server sends with the server’s hostname. This check can be disabled by adding ?no_verify=1.

2. The server checks to ensure that only allowed clients are connected. This is performed using either of the following:

a. The client’s IP address

b. The client’s IP address and the client’s certificate

Server checking can be enabled or disabled using the libvirtd.conf file.

For full certificate checking, you will need to have certificates issued by a recognized certificate authority (CA) for your server (or servers) and all clients. To avoid the expense of obtaining certificates from a commercial CA, there is the option to set up your own CA and tell your servers and clients to trust certificates issued by your own CA. To do this, follow the instructions in the next section.

Note that a certificate issued for an FQDN or hostname that is not present in DNS will cause problems unless the host is added to the hosts file. Replacing the hostname with the IP address may also cause problems.

Be aware that the default configuration for libvirtd.conf allows any client to connect, provided that they have a valid certificate issued by the CA for their own IP address. You may need to make this setting more or less permissive, depending upon your requirements.

Public Key Infrastructure Setup

Public Key Setup

| Location | Machine | Description | Required Fields |
|---|---|---|---|
| /etc/pki/CA/cacert.pem | Installed on all clients and servers | CA’s certificate | n/a |
| /etc/pki/libvirt/private/serverkey.pem | Installed on the server | Server’s private key | n/a |
| /etc/pki/libvirt/servercert.pem | Installed on the server | Server’s certificate signed by the CA | CommonName (CN) must be the hostname of the server as it is seen by clients. |
| /etc/pki/libvirt/private/clientkey.pem | Installed on the client | Client’s private key | n/a |
| /etc/pki/libvirt/clientcert.pem | Installed on the client | Client’s certificate signed by the CA | Distinguished Name (DN) can be checked against an access control list (tls_allowed_dn_list). |
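Before attempting a TLS connection, it can be useful to confirm that the expected files are in place. The sketch below checks for them under the default locations; the function name and the `root` parameter (which exists only to make the check testable against a scratch directory) are hypothetical, and the client certificate is assumed to live at libvirt's default location, /etc/pki/libvirt/clientcert.pem.

```python
from pathlib import Path

# Paths are relative so that they can be resolved against any root directory.
PKI_FILES = {
    "server": [
        "etc/pki/CA/cacert.pem",
        "etc/pki/libvirt/private/serverkey.pem",
        "etc/pki/libvirt/servercert.pem",
    ],
    "client": [
        "etc/pki/CA/cacert.pem",
        "etc/pki/libvirt/private/clientkey.pem",
        "etc/pki/libvirt/clientcert.pem",  # assumed default location
    ],
}


def missing_pki_files(role, root="/"):
    """List the PKI files for `role` ("server" or "client") that are absent."""
    base = Path(root)
    return [
        str(base / rel)
        for rel in PKI_FILES[role]
        if not (base / rel).is_file()
    ]
```

An empty list from `missing_pki_files("client")` indicates only that the files exist; it says nothing about whether the certificates are valid or signed by the expected CA.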

Summary

This chapter introduced the components that make up the architecture of libvirt. Much of the information in this chapter will be expanded on in subsequent chapters. Later chapters will introduce the Python API and show in detail the functions that communicate with these components.