7 Estimating GARCH Models by Quasi‐Maximum Likelihood

The quasi‐maximum likelihood (QML) method is particularly relevant for GARCH models because it provides consistent and asymptotically normal estimators for strictly stationary GARCH processes under mild regularity conditions, but with no moment assumptions on the observed process. By contrast, the least‐squares methods of the previous chapter require moments of order 4 at least.

In this chapter, we study in detail the conditional QML method (conditional on initial values). We first consider the case when the observed process is pure GARCH. We present an iterative procedure for computing the Gaussian log‐likelihood, conditionally on fixed or random initial values. The likelihood is written as if the law of the variables $\eta_t$ were Gaussian $\mathcal{N}(0, 1)$ (we refer to pseudo‐ or quasi‐likelihood), but this assumption is not necessary for the strong consistency of the estimator. In the second part of the chapter, we will study the application of the method to the estimation of ARMA–GARCH models. The asymptotic properties of the quasi‐maximum likelihood estimator (QMLE) are established at the end of the chapter.

7.1 Conditional Quasi‐Likelihood

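Assume that the observations $\epsilon_1, \dots, \epsilon_n$ constitute a realisation (of length $n$) of a GARCH(p, q) process, more precisely a non‐anticipative strictly stationary solution of

$$\begin{cases} \epsilon_t = \sigma_t\eta_t, \\ \sigma_t^2 = \omega_0 + \sum_{i=1}^{q}\alpha_{0i}\,\epsilon_{t-i}^2 + \sum_{j=1}^{p}\beta_{0j}\,\sigma_{t-j}^2, \qquad \forall t \in \mathbb{Z}, \end{cases} \tag{7.1}$$

where $(\eta_t)$ is a sequence of iid variables of variance 1, $\omega_0 > 0$, $\alpha_{0i} \ge 0$ ($i = 1, \dots, q$), and $\beta_{0j} \ge 0$ ($j = 1, \dots, p$). The orders $p$ and $q$ are assumed known. The vector of the parameters

$$\theta = (\theta_1, \dots, \theta_{p+q+1})' := (\omega, \alpha_1, \dots, \alpha_q, \beta_1, \dots, \beta_p)' \tag{7.2}$$

belongs to a parameter space of the form

$$\Theta \subset (0, +\infty) \times [0, \infty)^{p+q}.$$

The true value of the parameter is unknown, and is denoted by

$$\theta_0 = (\omega_0, \alpha_{01}, \dots, \alpha_{0q}, \beta_{01}, \dots, \beta_{0p})'.$$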
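The estimators in this chapter are easiest to explore on simulated paths of model (7.1). Below is a minimal NumPy sketch. It assumes Gaussian $\eta_t$, although any iid law with unit variance fits the model. It also discards a burn‐in period so that the retained observations are approximately drawn from the stationary solution. The function name `simulate_garch` and its arguments are illustrative and do not come from the text.

```python
import numpy as np

def simulate_garch(omega, alpha, beta, n, burn=500, rng=None):
    """Simulate n observations of the GARCH(p, q) model (7.1).

    alpha = (alpha_1, ..., alpha_q), beta = (beta_1, ..., beta_p).
    Gaussian eta_t is an illustrative choice (assumption); the first
    `burn` simulated values are dropped to approach the stationary law.
    """
    rng = np.random.default_rng(rng)
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    q, p = len(alpha), len(beta)
    m = max(p, q)
    total = n + burn
    eps = np.zeros(total + m)            # eps_t = 0 before the start
    sig2 = np.full(total + m, omega)     # arbitrary positive start values
    eta = rng.standard_normal(total + m)
    for t in range(m, total + m):
        # lags are reversed so that alpha_1 multiplies eps_{t-1}^2, etc.
        sig2[t] = (omega
                   + alpha @ eps[t - q:t][::-1] ** 2
                   + beta @ sig2[t - p:t][::-1])
        eps[t] = np.sqrt(sig2[t]) * eta[t]
    return eps[-n:], sig2[-n:]

eps, sig2 = simulate_garch(0.1, [0.1], [0.8], 2000, rng=1)
```

The call above returns a GARCH(1, 1) path of length 2000 together with its volatilities $\sigma_t^2$.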

To write the likelihood of the model, a distribution must be specified for the iid variables

η

t

. Here we do not make any assumption on the distribution of these variables, but we work with a function called the (Gaussian) quasi‐likelihood, which, conditionally on some initial values, coincides with the likelihood when the

η

t

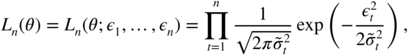

are distributed as standard Gaussian. Given initial values ![]() to be specified below, the conditional Gaussian quasi‐likelihood is given by

to be specified below, the conditional Gaussian quasi‐likelihood is given by

where the ![]() are recursively defined, for

t ≥ 1, by

are recursively defined, for

t ≥ 1, by

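For a given value of $\theta$, under the second‐order stationarity assumption, the unconditional variance (corresponding to this value of $\theta$) is a reasonable choice for the unknown initial values:

$$\epsilon_0^2 = \dots = \epsilon_{1-q}^2 = \tilde\sigma_0^2 = \dots = \tilde\sigma_{1-p}^2 = \frac{\omega}{1 - \sum_{i=1}^{q}\alpha_i - \sum_{j=1}^{p}\beta_j}. \tag{7.5}$$

Such initial values are, however, not suitable for IGARCH models, in particular, and more generally when second‐order stationarity is not imposed. Indeed, the constant (7.5) would then be undefined or take negative values for some values of $\theta$. In such a case, suitable initial values are

$$\epsilon_0^2 = \dots = \epsilon_{1-q}^2 = \tilde\sigma_0^2 = \dots = \tilde\sigma_{1-p}^2 = \omega \tag{7.6}$$

or

$$\epsilon_0^2 = \dots = \epsilon_{1-q}^2 = \tilde\sigma_0^2 = \dots = \tilde\sigma_{1-p}^2 = \epsilon_1^2. \tag{7.7}$$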

A QMLE of

θ

is defined as any measurable solution ![]() of

of

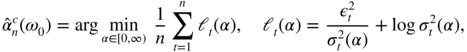

Taking the logarithm, it is seen that maximising the likelihood is equivalent to minimising, with respect to θ,

and ![]() is defined by (7.4). A QMLE is thus a measurable solution of the equation

is defined by (7.4). A QMLE is thus a measurable solution of the equation
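The recursion (7.4) and the criterion $\tilde l_n(\theta)$ are simple to code, and each evaluation costs $O(n)$ operations. The sketch below is minimal and rests on a few assumptions. The parameter vector is ordered as in (7.2). One common value fills all initial values, chosen according to (7.5), (7.6) or (7.7). The option `"uncond"` is only meaningful when the denominator of (7.5) is positive. The function names `sigma2_tilde` and `qml_criterion` are hypothetical.

```python
import numpy as np

def sigma2_tilde(theta, eps, p, q, init="omega"):
    """Conditional variances sigma~_t^2(theta), t = 1..n, from recursion (7.4).

    theta = (omega, alpha_1..alpha_q, beta_1..beta_p); eps = (eps_1..eps_n).
    init selects the common initial value of eps_0^2..eps_{1-q}^2 and
    sigma~_0^2..sigma~_{1-p}^2: "uncond" (7.5), "omega" (7.6), "eps1" (7.7).
    """
    theta = np.asarray(theta, dtype=float)
    eps = np.asarray(eps, dtype=float)
    omega, alpha, beta = theta[0], theta[1:q + 1], theta[q + 1:q + 1 + p]
    if init == "uncond":
        c = omega / (1.0 - alpha.sum() - beta.sum())
    elif init == "omega":
        c = omega
    elif init == "eps1":
        c = eps[0] ** 2
    else:
        raise ValueError(f"unknown init: {init}")
    n = len(eps)
    e2 = np.concatenate([np.full(q, c), eps ** 2])     # eps_{1-q}^2 .. eps_n^2
    s2 = np.concatenate([np.full(p, c), np.empty(n)])   # sigma~_{1-p}^2 .. sigma~_n^2
    for t in range(n):                                  # fills sigma~_{t+1}^2
        s2[p + t] = omega + alpha @ e2[t:t + q][::-1] + beta @ s2[t:t + p][::-1]
    return s2[p:]

def qml_criterion(theta, eps, p, q, init="omega"):
    """l~_n(theta) = n^{-1} sum_t (eps_t^2 / sigma~_t^2 + log sigma~_t^2)."""
    eps = np.asarray(eps, dtype=float)
    s2 = sigma2_tilde(theta, eps, p, q, init)
    return np.mean(eps ** 2 / s2 + np.log(s2))

eps = np.array([0.5, -1.0, 2.0])
s2 = sigma2_tilde([1.0, 0.5, 0.2], eps, p=1, q=1)   # approximately 1.7, 1.465, 1.793
```

A QMLE (7.9) can then be obtained by handing `qml_criterion` to any numerical minimiser that respects the constraints defining $\Theta$.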

It will be shown that the choice of the initial values is unimportant for the asymptotic properties of the QMLE. However, in practice this choice may be important. Note that other methods are possible for generating the sequence $(\tilde\sigma_t^2)$; for example, by taking $\tilde\sigma_t^2 = c_0(\theta) + \sum_{i=1}^{t-1}c_i(\theta)\epsilon_{t-i}^2$, where the $c_i(\theta)$ are recursively computed (see Berkes, Horváth, and Kokoszka 2003b). Note that for computing $\tilde l_n(\theta)$, this procedure involves a number of operations of order $n^2$, whereas the one we propose involves a number of order $n$. It will be convenient to approximate the sequence $(\tilde\ell_t(\theta))$ by an ergodic stationary sequence. Assuming that the roots of $\mathcal{B}_\theta(z)$ are outside the unit disk, the non‐anticipative and ergodic strictly stationary sequence $(\sigma_t^2)_t = \{\sigma_t^2(\theta)\}_t$ is defined as the solution of

$$\sigma_t^2 = \omega + \sum_{i=1}^{q}\alpha_i\epsilon_{t-i}^2 + \sum_{j=1}^{p}\beta_j\sigma_{t-j}^2, \qquad \forall t. \tag{7.10}$$

Note that $\sigma_t^2(\theta_0) = \sigma_t^2$.
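The difference between the two computational schemes can be seen on a GARCH(1, 1). Start the recursion (7.4) from $\epsilon_0 = 0$ and $\tilde\sigma_0^2 = \omega/(1-\beta)$. Unrolling it then gives an expansion of the above form with $c_0(\theta) = \omega/(1-\beta)$ and $c_i(\theta) = \alpha\beta^{i-1}$. This is one illustrative choice of coefficients; the exact scheme of Berkes, Horváth, and Kokoszka is not reproduced here. The sketch below checks numerically that the $O(n)$ recursion and the $O(n^2)$ expansion agree. Both function names are hypothetical.

```python
import numpy as np

def sigma2_recursive(omega, alpha, beta, eps, s2_0):
    """O(n): recursion (7.4) for a GARCH(1,1), with eps_0 = 0 and sigma~_0^2 = s2_0."""
    s2 = np.empty(len(eps))
    prev_e2, prev_s2 = 0.0, s2_0
    for t, e in enumerate(eps):
        s2[t] = omega + alpha * prev_e2 + beta * prev_s2
        prev_e2, prev_s2 = e * e, s2[t]
    return s2

def sigma2_expansion(omega, alpha, beta, eps):
    """O(n^2): sigma~_t^2 = c_0 + sum_{i=1}^{t-1} c_i eps_{t-i}^2,
    with c_0 = omega / (1 - beta) and c_i = alpha * beta**(i - 1)."""
    e2 = np.asarray(eps, dtype=float) ** 2    # e2[k] holds eps_{k+1}^2
    n = len(e2)
    s2 = np.empty(n)
    for t in range(1, n + 1):                 # time index t = 1..n
        i = np.arange(1, t)                   # lags 1..t-1, a full pass each time
        s2[t - 1] = omega / (1 - beta) + np.sum(alpha * beta ** (i - 1) * e2[t - 1 - i])
    return s2

rng = np.random.default_rng(0)
eps = rng.standard_normal(200)
a = sigma2_recursive(0.1, 0.1, 0.85, eps, 0.1 / (1 - 0.85))
b = sigma2_expansion(0.1, 0.1, 0.85, eps)
```

Both arrays coincide up to rounding. The second function, however, revisits all past observations at every date, which is where its $n^2$ cost comes from.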


Likelihood Equations

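Likelihood equations are obtained by cancelling the derivative of the criterion $\tilde l_n(\theta)$ with respect to $\theta$, which gives

$$\frac{1}{n}\sum_{t=1}^{n}\left\{\epsilon_t^2 - \tilde\sigma_t^2\right\}\frac{1}{\tilde\sigma_t^4}\frac{\partial\tilde\sigma_t^2}{\partial\theta} = 0. \tag{7.11}$$

These equations can be interpreted as orthogonality relations, for large $n$. Indeed, as will be seen in the next section, the left‐hand side of Eq. (7.11) has the same asymptotic behaviour as

$$\frac{1}{n}\sum_{t=1}^{n}\left\{\epsilon_t^2 - \sigma_t^2\right\}\frac{1}{\sigma_t^4}\frac{\partial\sigma_t^2}{\partial\theta},$$

the impact of the initial values vanishing as $n \to \infty$.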

The innovation of $\epsilon_t^2$ is $\nu_t = \epsilon_t^2 - \sigma_t^2$. Thus, under the assumption that the expectation exists, we have

$$E_{\theta_0}\left(\nu_t\,\frac{1}{\sigma_t^4(\theta_0)}\frac{\partial\sigma_t^2(\theta_0)}{\partial\theta}\right) = 0,$$

because $\frac{1}{\sigma_t^4(\theta_0)}\frac{\partial\sigma_t^2(\theta_0)}{\partial\theta}$ is a measurable function of the $\epsilon_{t-i}$, $i > 0$, while $E(\nu_t \mid \epsilon_u, u < t) = 0$. This result can be viewed as the asymptotic version of condition (7.11) at $\theta_0$, using the ergodic theorem.
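For a GARCH(1, 1), the derivatives entering (7.11) can be propagated alongside the recursion (7.4) itself. The minimal sketch below assumes the initial values (7.7), $\epsilon_0^2 = \tilde\sigma_0^2 = \epsilon_1^2$, which do not depend on $\theta$, so that $\partial\tilde\sigma_0^2/\partial\theta = 0$. It returns $\tilde l_n(\theta)$ and its gradient; the gradient equals minus the left‐hand side of (7.11). The function name is hypothetical.

```python
import numpy as np

def garch11_criterion_and_gradient(theta, eps):
    """l~_n(theta) and its gradient for a GARCH(1,1), with initial values (7.7).

    Differentiating (7.4) gives
        d s2_t / d theta = (1, eps_{t-1}^2, s2_{t-1})' + beta * d s2_{t-1} / d theta,
    where s2_t stands for sigma~_t^2(theta).
    """
    omega, alpha, beta = theta
    eps = np.asarray(eps, dtype=float)
    e2_prev = s2_prev = eps[0] ** 2          # initial values (7.7), free of theta
    d_prev = np.zeros(3)                     # derivative of sigma~_0^2 is zero
    crit, grad = 0.0, np.zeros(3)
    for e in eps:
        s2 = omega + alpha * e2_prev + beta * s2_prev
        d = np.array([1.0, e2_prev, s2_prev]) + beta * d_prev
        crit += e * e / s2 + np.log(s2)
        grad += (1.0 - e * e / s2) * d / s2  # equals -(eps_t^2 - s2) d / s2^2
        e2_prev, s2_prev, d_prev = e * e, s2, d
    n = len(eps)
    return crit / n, grad / n
```

An analytic gradient of this kind is what a quasi‐Newton routine needs to solve (7.11). Comparing it with finite differences of the criterion is a cheap way to check the derivative recursion.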

7.1.1 Asymptotic Properties of the QMLE

In this chapter, we will use the matrix norm defined by $\|A\| = \sum|a_{ij}|$ for all matrices $A = (a_{ij})$. The spectral radius of a square matrix $A$ is denoted by $\rho(A)$.

Strong Consistency

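Recall that model (7.1) admits a strictly stationary solution if and only if the sequence of matrices $\mathbf{A}_0 = (A_{0t})$, where

$$A_{0t} = \begin{pmatrix} \eta_t^2\,\boldsymbol{\alpha}_0' & \eta_t^2\,\boldsymbol{\beta}_0' \\ (I_{q-1},\ 0_{q-1}) & 0_{(q-1)\times p} \\ \boldsymbol{\alpha}_0' & \boldsymbol{\beta}_0' \\ 0_{(p-1)\times q} & (I_{p-1},\ 0_{p-1}) \end{pmatrix}, \qquad \boldsymbol{\alpha}_0 = (\alpha_{01}, \dots, \alpha_{0q})', \quad \boldsymbol{\beta}_0 = (\beta_{01}, \dots, \beta_{0p})',$$

with $I_k$ the $k \times k$ identity matrix and $0_k$ (resp. $0_{k\times l}$) a vector (resp. matrix) of zeros, admits a strictly negative top Lyapunov exponent, $\gamma(\mathbf{A}_0) < 0$, where

$$\gamma(\mathbf{A}_0) := \lim_{t\to\infty}\frac{1}{t}\log\|A_{0t}A_{0,t-1}\cdots A_{01}\| \quad \text{a.s.}$$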
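In general, $\gamma(\mathbf{A}_0)$ has no closed form, but it can be approximated by simulating a long matrix product. The entries of $A_{0t}$ are non‐negative, so the norm $\sum|a_{ij}|$ of a product equals the sum of the entries of that product applied to a vector of ones. The sketch tracks this quantity and renormalises at every step to avoid overflow. It assumes Gaussian $\eta_t$ and $q \ge 1$, and the function names are hypothetical. Two cases give an easy check. For an ARCH(1), $\gamma = E\log(\alpha_0\eta_t^2)$. For a GARCH(1, 1), the matrix $A_{0t}$ has rank one, and $\gamma = E\log(\alpha_{01}\eta_t^2 + \beta_{01})$.

```python
import numpy as np

def companion_parts(alpha, beta):
    """Write A_0t = eta_t^2 * A1 + A2 for the GARCH(p, q) model (7.1); needs q >= 1.

    State vector: (eps_t^2, ..., eps_{t-q+1}^2, sigma_t^2, ..., sigma_{t-p+1}^2)'.
    """
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    q, p = len(alpha), len(beta)
    d = p + q
    A1, A2 = np.zeros((d, d)), np.zeros((d, d))
    A1[0, :q], A1[0, q:] = alpha, beta          # first row: eps_t^2 = eta_t^2 sigma_t^2
    A2[1:q, :q - 1] = np.eye(q - 1)             # shift of the lagged eps^2
    if p > 0:
        A2[q, :q], A2[q, q:] = alpha, beta      # row of sigma_t^2
        A2[q + 1:, q:q + p - 1] = np.eye(p - 1) # shift of the lagged sigma^2
    return A1, A2

def top_lyapunov(alpha, beta, n=100_000, rng=None):
    """Monte Carlo estimate of gamma(A_0), assuming Gaussian eta_t."""
    A1, A2 = companion_parts(alpha, beta)
    rng = np.random.default_rng(rng)
    v = np.ones(A1.shape[0])
    total = 0.0
    for eta2 in rng.standard_normal(n) ** 2:
        v = (eta2 * A1 + A2) @ v
        s = v.sum()            # entries are non-negative: this is the l1 norm
        total += np.log(s)
        v = v / s              # renormalise to avoid overflow or underflow
    return total / n

g11 = top_lyapunov([0.1], [0.85], n=20_000, rng=1)   # negative: stationary GARCH(1,1)
```

A negative estimate is consistent with the first part of assumption A2 below. Note, however, that a Monte Carlo value close to zero cannot settle the question.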

Let

$$\mathcal{A}_\theta(z) = \sum_{i=1}^{q}\alpha_i z^i \quad \text{and} \quad \mathcal{B}_\theta(z) = 1 - \sum_{j=1}^{p}\beta_j z^j.$$

By convention, $\mathcal{A}_\theta(z) = 0$ if $q = 0$ and $\mathcal{B}_\theta(z) = 1$ if $p = 0$. To show strong consistency, the following assumptions are used.

- A1: $\theta_0 \in \Theta$ and $\Theta$ is compact.
- A2: $\gamma(\mathbf{A}_0) < 0$ and for all $\theta \in \Theta$, $\sum_{j=1}^{p}\beta_j < 1$.
- A3: $\eta_t^2$ has a non‐degenerate distribution and $E\eta_t^2 = 1$.
- A4: If $p > 0$, $\mathcal{A}_{\theta_0}(z)$ and $\mathcal{B}_{\theta_0}(z)$ have no common roots, $\mathcal{A}_{\theta_0}(1) \neq 0$, and $\alpha_{0q} + \beta_{0p} \neq 0$.

Note that, by Corollary 2.2, the second part of assumption A2 implies that the roots of $\mathcal{B}_\theta(z)$ are outside the unit disk. Thus, a non‐anticipative and ergodic strictly stationary sequence $(\sigma_t^2(\theta))_t$ is defined by (7.10). Similarly, define

$$l_n(\theta) = l_n(\theta; \epsilon_n, \epsilon_{n-1}, \dots) = n^{-1}\sum_{t=1}^{n}\ell_t, \qquad \ell_t = \ell_t(\theta) = \frac{\epsilon_t^2}{\sigma_t^2} + \log\sigma_t^2.$$

The first result states the strong consistency of $\hat\theta_n$. The proof of this theorem, and of the next ones, is given in Section 7.4.

Asymptotic Normality

The following additional assumptions are considered.

- A5: $\theta_0 \in \overset{\circ}{\Theta}$, where $\overset{\circ}{\Theta}$ denotes the interior of $\Theta$.
- A6: $\kappa_\eta = E\eta_t^4 < \infty$.

The limiting distribution of $\sqrt{n}(\hat\theta_n - \theta_0)$ is given by the following result.

7.1.2 The ARCH(1) Case: Numerical Evaluation of the Asymptotic Variance

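Consider the ARCH(1) model

$$\begin{cases} \epsilon_t = \sigma_t\eta_t, \\ \sigma_t^2 = \omega_0 + \alpha_0\epsilon_{t-1}^2, \end{cases}$$

with $\omega_0 > 0$ and $\alpha_0 > 0$, and suppose that the variables $\eta_t$ satisfy assumption A3. The unknown parameter is $\theta_0 = (\omega_0, \alpha_0)'$. In view of condition (2.10), the strict stationarity constraint A2 is written as

$$\alpha_0 < \exp\{-E(\log\eta_t^2)\}.$$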
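As a worked example, suppose that $\eta_t \sim \mathcal{N}(0, 1)$. Then $\eta_t^2$ is $\chi^2(1)$‐distributed and $E\log\eta_t^2 = -(\gamma_E + \log 2)$, where $\gamma_E$ is Euler's constant. The bound is therefore $2e^{\gamma_E} \approx 3.562$. The sketch computes this value, double‐checks $E\log\eta_t^2$ by Monte Carlo, and wraps the condition in a helper. The helper name is hypothetical, and the Gaussian law is an assumption of the example.

```python
import numpy as np

# For eta_t ~ N(0, 1): E log eta_t^2 = -(gamma_E + log 2), gamma_E = Euler's constant.
e_log_eta2 = -(np.euler_gamma + np.log(2.0))
alpha_max = np.exp(-e_log_eta2)              # strict stationarity bound, 2 * exp(gamma_E)

def is_strictly_stationary_arch1(alpha0, e_log_eta2=e_log_eta2):
    """Condition alpha0 < exp{-E log eta_t^2}, i.e. E log(alpha0 eta_t^2) < 0."""
    return np.log(alpha0) + e_log_eta2 < 0

# Monte Carlo check of E log eta_t^2 (another unit-variance law could be used here).
rng = np.random.default_rng(0)
mc = np.mean(np.log(rng.standard_normal(1_000_000) ** 2))
print(alpha_max, mc)
```

With this bound, $\alpha_0 = 1.5$ gives a strictly stationary ARCH(1) with infinite variance, whereas $\alpha_0 = 4$ gives an explosive one.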

Assumption A1 holds true if, for instance, the parameter space is of the form Θ = [δ, 1/δ] × [0, 1/δ], where

δ > 0 is a constant, chosen sufficiently small so that

θ

0

belongs to Θ. By Theorem 7.1, the QMLE of

θ

0

is then strongly consistent. Since ![]() , the QMLE

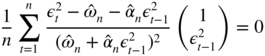

, the QMLE ![]() is characterised by the normal equation

is characterised by the normal equation

with, for instance ![]() . This estimator does not have an explicit form and must be obtained numerically. Theorem 7.2, which provides the asymptotic distribution of the estimator, only requires the extra assumption that

θ

0

belongs to

. This estimator does not have an explicit form and must be obtained numerically. Theorem 7.2, which provides the asymptotic distribution of the estimator, only requires the extra assumption that

θ

0

belongs to ![]() . Thus, if

α

0 = 0 (that is, if the model is conditionally homoscedastic), the estimator remains consistent but is no longer asymptotically normal. Matrix

J

takes the form

. Thus, if

α

0 = 0 (that is, if the model is conditionally homoscedastic), the estimator remains consistent but is no longer asymptotically normal. Matrix

J

takes the form

and the asymptotic variance of ![]() is

is

Table 7.1 displays numerical evaluations of this matrix. An estimation of $J$ is obtained by replacing the expectations by empirical means, obtained from simulations of length 10 000, when $\eta_t$ is $\mathcal{N}(0, 1)$ distributed. This experiment is repeated 1 000 times to obtain the results presented in the table.

Table 7.1 Asymptotic variance for the QMLE of an ARCH(1) process with $\eta_t \sim \mathcal{N}(0, 1)$.

| $\omega_0 = 1$, $\alpha_0 = 0.1$ | $\omega_0 = 1$, $\alpha_0 = 0.5$ | $\omega_0 = 1$, $\alpha_0 = 0.95$ |
|---|---|---|
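The evaluation behind Table 7.1 can be sketched in a few lines: simulate one long ARCH(1) path at $\theta_0$, replace the expectation defining $J$ by an empirical mean, and return $(E\eta_t^4 - 1)J^{-1}$. This is a single‐path version, not the 1 000‐replication average used for the table. It assumes Gaussian $\eta_t$, so that $E\eta_t^4 = 3$, and the function name is hypothetical. The table entries themselves are not reproduced here.

```python
import numpy as np

def arch1_asymptotic_variance(omega0, alpha0, n=10_000, burn=1_000, rng=None):
    """Empirical-mean estimate of J along one simulated ARCH(1) path, and the
    implied asymptotic variance (E eta^4 - 1) J^{-1} with Gaussian eta (E eta^4 = 3)."""
    rng = np.random.default_rng(rng)
    eta = rng.standard_normal(n + burn)
    eps = np.empty(n + burn)
    prev = 0.0
    for t in range(n + burn):
        eps[t] = np.sqrt(omega0 + alpha0 * prev ** 2) * eta[t]
        prev = eps[t]
    e2_lag = eps[burn - 1:-1] ** 2              # eps_{t-1}^2 for the n retained dates
    sig2 = omega0 + alpha0 * e2_lag              # sigma_t^2 at theta_0
    x = np.column_stack([np.ones(n), e2_lag])    # d sigma_t^2 / d theta
    J = (x / sig2[:, None] ** 2).T @ x / n
    return J, (3.0 - 1.0) * np.linalg.inv(J)

J, Sigma = arch1_asymptotic_variance(0.2, 0.9, n=200_000, rng=0)
print(np.sqrt(np.diag(Sigma)))                   # asymptotic standard deviations
```

For $\theta_0 = (0.2, 0.9)'$, the second standard deviation should be close to the value implied by the fourth column of Table 7.2, namely $0.07911 \times \sqrt{1000} \approx 2.50$.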

In order to assess, in finite samples, the quality of the asymptotic approximation of the variance of the estimator, the following Monte Carlo experiment is conducted. For the value $\theta_0$ of the parameter, and for a given length $n$, $N$ samples are simulated, leading to $N$ estimations $\hat\theta_n^{(i)}$ of $\theta_0$, $i = 1, \dots, N$. We denote by $\bar\theta_n = (\bar\omega_n, \bar\alpha_n)'$ their empirical mean. The root mean squared error (RMSE) of estimation of $\alpha$ is denoted by

$$\mathrm{RMSE}(\alpha) = \left\{\frac{1}{N}\sum_{i=1}^{N}\left(\hat\alpha_n^{(i)} - \bar\alpha_n\right)^2\right\}^{1/2}$$

and can be compared to $\left\{\widehat{\mathrm{Var}}_{as}\left[\sqrt{n}(\hat\alpha_n - \alpha_0)\right]\right\}^{1/2}/\sqrt{n}$, the latter quantity being evaluated independently, by simulation. A similar comparison can obviously be made for the parameter $\omega$. For $\theta_0 = (0.2, 0.9)'$ and $N = 1000$, Table 7.2 displays the results, for different sample lengths $n$.

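Table 7.2 Comparison of the empirical and theoretical asymptotic variances, for the QMLE of the parameter $\alpha_0 = 0.9$ of an ARCH(1), when $\eta_t \sim \mathcal{N}(0, 1)$.

| $n$ | $\bar\alpha_n$ | RMSE($\alpha$) | $\{\widehat{\mathrm{Var}}_{as}[\sqrt{n}(\hat\alpha_n - \alpha_0)]\}^{1/2}/\sqrt{n}$ | $\hat{\mathbb{P}}(\hat\alpha_n > 1)$ |
|---|---|---|---|---|
| 100 | 0.85221 | 0.25742 | 0.25014 | 0.266 |
| 250 | 0.88336 | 0.16355 | 0.15820 | 0.239 |
| 500 | 0.89266 | 0.10659 | 0.11186 | 0.152 |
| 1000 | 0.89804 | 0.08143 | 0.07911 | 0.100 |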

The similarity between columns 3 and 4 is quite satisfactory, even for moderate sample sizes. The last column gives the empirical probability (that is, the relative frequency within the $N$ samples) that $\hat\alpha_n$ is greater than 1 (which is the limiting value for second‐order stationarity). These results show that, even if the mean of the estimations is close to the true value for large $n$, the variability of the estimator remains high. Finally, note that the length $n = 1000$ remains realistic for financial series.
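A reduced version of this Monte Carlo experiment can be sketched as follows. The text only states that the QMLE must be obtained numerically. Here, as a swapped‐in choice of algorithm, the normal equation is solved by Fisher scoring: the empirical counterpart of $J$ replaces the Hessian, and step halving keeps $\omega > 0$ and $\alpha \ge 0$ while decreasing the criterion. The sketch assumes Gaussian $\eta_t$, $\epsilon_0 = \epsilon_1$ and $N = 200$ replications instead of 1 000. All function names are hypothetical.

```python
import numpy as np

def simulate_arch1(omega0, alpha0, n, rng, burn=500):
    """ARCH(1) path with Gaussian eta_t; the first `burn` values are dropped."""
    eta = rng.standard_normal(n + burn)
    eps = np.empty(n + burn)
    prev = 0.0
    for t in range(n + burn):
        eps[t] = np.sqrt(omega0 + alpha0 * prev ** 2) * eta[t]
        prev = eps[t]
    return eps[burn:]

def arch1_qmle(eps, theta_init=(0.1, 0.1), max_iter=200, tol=1e-10):
    """QMLE of (omega, alpha) by Fisher scoring with step halving (a sketch).

    Uses sigma~_t^2 = omega + alpha * eps_{t-1}^2 with eps_0 = eps_1.
    """
    e2 = np.asarray(eps, dtype=float) ** 2
    n = len(e2)
    x = np.column_stack([np.ones(n), np.concatenate([e2[:1], e2[:-1]])])

    def crit(th):
        s2 = x @ th
        return np.mean(e2 / s2 + np.log(s2))

    th = np.asarray(theta_init, dtype=float)
    for _ in range(max_iter):
        s2 = x @ th
        grad = ((1.0 - e2 / s2) / s2) @ x / n          # gradient of l~_n
        info = (x / s2[:, None] ** 2).T @ x / n         # empirical version of J
        step = np.linalg.solve(info, grad)
        lam, f0 = 1.0, crit(th)
        new = th - step
        while new[0] <= 0 or new[1] < 0 or crit(new) > f0:
            lam /= 2.0
            if lam < 1e-12:
                return th                               # no further decrease possible
            new = th - lam * step
        if np.max(np.abs(new - th)) < tol:
            return new
        th = new
    return th

rng = np.random.default_rng(2024)
N, n = 200, 500
alpha_hat = np.array([arch1_qmle(simulate_arch1(0.2, 0.9, n, rng))[1] for _ in range(N)])
mean_alpha = alpha_hat.mean()
rmse_alpha = np.sqrt(np.mean((alpha_hat - mean_alpha) ** 2))  # deviations from the mean
freq_above_1 = np.mean(alpha_hat > 1)
print(mean_alpha, rmse_alpha, freq_above_1)
```

Up to Monte Carlo error, the three printed quantities should be of the same order as the $n = 500$ row of Table 7.2.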

7.1.3 The Non‐stationary ARCH(1)

When the strict stationarity constraint is not satisfied in the ARCH(1) case, that is, when

$$E\log(\alpha_0\eta_t^2) \ge 0, \tag{7.14}$$

one can define an ARCH(1) process starting with initial values. For a given value $\epsilon_0$, we define

$$\begin{cases} \epsilon_t = \sigma_t\eta_t, \\ \sigma_t^2 = \omega_0 + \alpha_0\epsilon_{t-1}^2, \qquad t = 1, 2, \dots, \end{cases} \tag{7.15}$$

where $\omega_0 > 0$ and $\alpha_0 > 0$, with the usual assumptions on the sequence $(\eta_t)$. As already noted, $\sigma_t^2$ converges to infinity almost surely when

$$E\log(\alpha_0\eta_t^2) > 0, \tag{7.16}$$

and only in probability when the inequality (7.14) is an equality (see Corollary 2.1 and Remark 2.3 following it). Is it possible to estimate the coefficients of such a model? The answer is only partly positive: it is possible to consistently estimate the coefficient $\alpha_0$, but the coefficient $\omega_0$ cannot be consistently estimated. The practical impact of this result thus appears to be limited, but because of its theoretical interest, the problem of estimating coefficients of non‐stationary models deserves attention. Consider the QMLE of an ARCH(1), that is to say a measurable solution of

$$\hat\theta_n = (\hat\omega_n, \hat\alpha_n) = \arg\min_{\theta\in\Theta}\frac{1}{n}\sum_{t=1}^{n}\ell_t(\theta), \qquad \ell_t(\theta) = \frac{\epsilon_t^2}{\sigma_t^2(\theta)} + \log\sigma_t^2(\theta),$$

where $\theta = (\omega, \alpha)$, $\Theta$ is a compact set of $(0, \infty)^2$, and $\sigma_t^2(\theta) = \omega + \alpha\epsilon_{t-1}^2$ for $t = 1, \dots, n$ (starting with a given initial value for $\epsilon_0^2$). The almost sure convergence of $\sigma_t^2$ to infinity will be used to show the strong consistency of the QMLE of $\alpha_0$. The following lemma completes Corollary 2.1 and gives the rate of convergence of $\sigma_t^2$ to infinity under condition (7.16).

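This result entails the strong consistency and asymptotic normality of the QMLE of $\alpha_0$.

In the proof of this theorem, it is shown that the score vector satisfies

$$\frac{1}{\sqrt{n}}\sum_{t=1}^{n}\frac{\partial\ell_t(\theta_0)}{\partial\theta} \xrightarrow{\mathcal{L}} \mathcal{N}\left\{0, (E\eta_t^4 - 1)J\right\}, \qquad J = \begin{pmatrix}0 & 0\\ 0 & \alpha_0^{-2}\end{pmatrix}.$$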

In the standard statistical inference framework, the variance

J

of the score vector is (proportional to) the Fisher information. According to the usual interpretation, the form of the matrix

J

shows that, asymptotically and for almost all observations, the variations of the log‐likelihood ![]() are insignificant when

θ

varies from (ω

0, α

0) to (ω

0 + h, α

0) for small

h

. In other words, the limiting log‐likelihood is flat at the point (ω

0, α

0) in the direction of variation of

ω

0

. Thus, minimising this limiting function does not allow

θ

0

to be found. This leads us to think that the QML of

ω

0

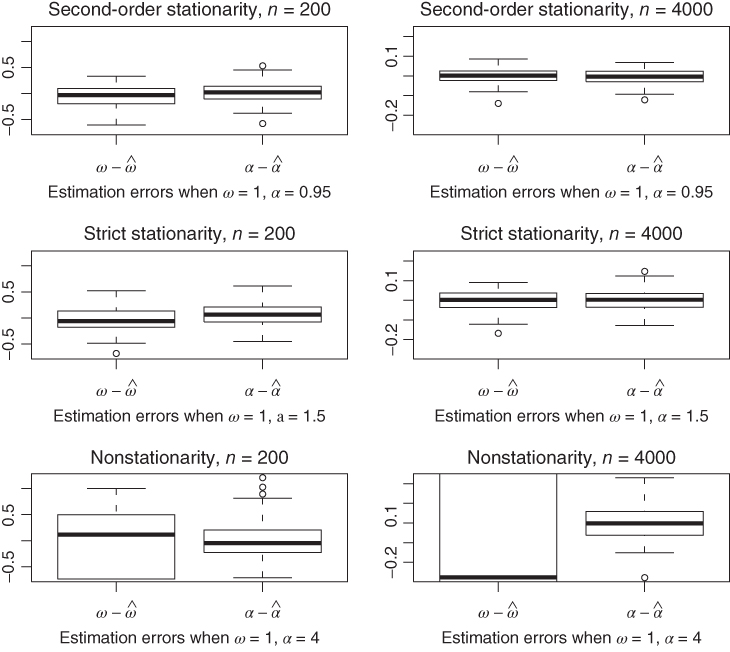

is likely to be inconsistent when the strict stationarity condition is not satisfied. Figure 7.2 displays numerical results illustrating the performance of the QMLE in finite samples. For different values of the parameters, 100 replications of the ARCH(1) model have been generated, for the sample sizes

n = 200 and

n = 4000. The top panels of the figure correspond to a second‐order stationary ARCH(1), with parameter

θ

0 = (1, 0.95). The panels in the middle correspond to a strictly stationary ARCH(1) of infinite variance, with

θ

0 = (1, 1.5). The results obtained for these two cases are similar, confirming that second‐order stationarity is not necessary for estimating an ARCH. The bottom panels, corresponding to the explosive ARCH(1) with parameter

θ

0 = (1, 4), confirm the asymptotic results concerning the estimation of

α

0

. They also illustrate the failure of the QML to estimate

ω

0

under the nonstationarity condition ( 7.16). The results even deteriorate when the sample size increases.


Figure 7.2 Box‐plots of the QML estimation errors for the parameters $\omega_0$ and $\alpha_0$ of an ARCH(1) process, with $\eta_t \sim \mathcal{N}(0, 1)$.
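The mechanism behind the bottom panels can be explored with a short simulation. The sketch below does not reproduce Figure 7.2. It assumes Gaussian $\eta_t$, $\omega_0 = 1$ and $\epsilon_0 = 0$, and all function names are hypothetical. It simulates model (7.15) on the log scale, so that the explosive path ($\alpha_0 = 4$) cannot overflow, and then performs two checks. The first compares $(1/n)\log\epsilon_n^2$ with $E\log(\alpha_0\eta_t^2)$, the exponential growth rate one expects under (7.16). The second measures how much the QML criterion varies over a grid of $\omega$ values with $\alpha = \alpha_0$ held fixed. For the explosive path this variation should be small compared with the second‐order stationary case $\alpha_0 = 0.95$, in line with the flatness argument above.

```python
import numpy as np

def simulate_log_eps2(omega0, alpha0, n, rng):
    """log eps_t^2, t = 1..n, for the ARCH(1) (7.15) started at eps_0 = 0.

    log sigma_t^2 = logaddexp(log omega0, log alpha0 + log eps_{t-1}^2),
    which stays finite even when the process explodes.
    """
    log_eta2 = np.log(rng.standard_normal(n) ** 2)
    log_w, log_a = np.log(omega0), np.log(alpha0)
    out = np.empty(n)
    prev = -np.inf                                    # log eps_0^2 = log 0
    for t in range(n):
        log_s2 = np.logaddexp(log_w, log_a + prev)
        out[t] = log_s2 + log_eta2[t]
        prev = out[t]
    return out

def criterion(omega, alpha, log_e2):
    """(1/n) sum (eps_t^2 / sigma_t^2(theta) + log sigma_t^2(theta)), with eps_0 = 0."""
    lag = np.concatenate([[-np.inf], log_e2[:-1]])
    log_s2 = np.logaddexp(np.log(omega), np.log(alpha) + lag)
    return np.mean(np.exp(log_e2 - log_s2) + log_s2)

rng = np.random.default_rng(7)
n = 20_000
omegas = np.linspace(0.5, 2.0, 16)
growth, spread = {}, {}
for alpha0 in (0.95, 4.0):
    log_e2 = simulate_log_eps2(1.0, alpha0, n, rng)
    growth[alpha0] = log_e2[-1] / n                  # about (1/n) log sigma_n^2
    prof = np.array([criterion(w, alpha0, log_e2) for w in omegas])
    spread[alpha0] = prof.max() - prof.min()         # variation in the omega direction
print(growth, spread)
```

For $\alpha_0 = 4$ and Gaussian $\eta_t$, the expected growth rate is $\log 4 - \gamma_E - \log 2 \approx 0.116$.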

7.2 Estimation of ARMA–GARCH Models by Quasi‐Maximum Likelihood

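In this section, the previous results are extended to cover the situation where the GARCH process is not directly observed, but constitutes the innovation of an observed ARMA process. This framework is relevant because, even for financial series, it is restrictive to assume that the observed series is the realisation of a noise. From a theoretical point of view, it will be seen that the extension to the ARMA–GARCH case is far from trivial. Assume that the observations $X_1, \dots, X_n$ are generated by a strictly stationary non‐anticipative solution of the ARMA(P, Q)‐GARCH(p, q) model

$$\begin{cases} X_t - c_0 = \sum_{i=1}^{P}a_{0i}(X_{t-i} - c_0) + e_t - \sum_{j=1}^{Q}b_{0j}e_{t-j}, \\ e_t = \sqrt{h_t}\,\eta_t, \\ h_t = \omega_0 + \sum_{i=1}^{q}\alpha_{0i}e_{t-i}^2 + \sum_{j=1}^{p}\beta_{0j}h_{t-j}, \end{cases} \tag{7.21}$$

where $(\eta_t)$ and the coefficients $\omega_0$, $\alpha_{0i}$ and $\beta_{0j}$ are defined as in model (7.1). The orders $P, Q, p, q$ are assumed known. The vector of the parameters is denoted by

$$\phi = (\vartheta', \theta')' = (c, a_1, \dots, a_P, b_1, \dots, b_Q, \theta')',$$

where $\theta$ is defined as in equality (7.2). The parameter space is

$$\Phi \subset \mathbb{R}^{P+Q+1} \times (0, +\infty) \times [0, \infty)^{p+q}.$$

The true value of the parameter is denoted by

$$\phi_0 = (\vartheta_0', \theta_0')' = (c_0, a_{01}, \dots, a_{0P}, b_{01}, \dots, b_{0Q}, \theta_0')'.$$

We still employ a Gaussian quasi‐likelihood conditional on initial values. If $q \ge Q$, the initial values are

$$X_0, \dots, X_{1-(q-Q)-P}, \quad \tilde\epsilon_{-q+Q}, \dots, \tilde\epsilon_{1-q}, \quad \tilde\sigma_0^2, \dots, \tilde\sigma_{1-p}^2.$$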

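These values (the last $p$ of which are positive) are assumed to be fixed, but they could depend on the parameter and/or on the observations. For any $\vartheta$, the values of $\tilde\epsilon_t(\vartheta)$, for $t = -q + Q + 1, \dots, n$, and then, for any $\theta$, the values of $\tilde\sigma_t^2(\phi)$, for $t = 1, \dots, n$, can thus be computed from

$$\begin{cases} \tilde\epsilon_t = \tilde\epsilon_t(\vartheta) = X_t - c - \sum_{i=1}^{P}a_i(X_{t-i} - c) + \sum_{j=1}^{Q}b_j\tilde\epsilon_{t-j}, \\ \tilde\sigma_t^2 = \tilde\sigma_t^2(\phi) = \omega + \sum_{i=1}^{q}\alpha_i\tilde\epsilon_{t-i}^2 + \sum_{j=1}^{p}\beta_j\tilde\sigma_{t-j}^2. \end{cases}$$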

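When $q < Q$, the fixed initial values are

$$X_0, \dots, X_{1-P}, \quad \tilde\epsilon_0, \dots, \tilde\epsilon_{1-Q}, \quad \tilde\sigma_0^2, \dots, \tilde\sigma_{1-p}^2.$$

Conditionally on these initial values, the Gaussian log‐likelihood is given, up to an additive constant and the factor $-2/n$, by

$$\tilde l_n(\phi) = n^{-1}\sum_{t=1}^{n}\tilde\ell_t, \qquad \tilde\ell_t = \tilde\ell_t(\phi) = \frac{\tilde\epsilon_t^2}{\tilde\sigma_t^2} + \log\tilde\sigma_t^2.$$

A QMLE is defined as a measurable solution of the equation

$$\hat\phi_n = \arg\min_{\phi\in\Phi}\tilde l_n(\phi).$$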
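For the simplest case, ARMA(1, 1)‐GARCH(1, 1), the two recursions can be run in a single pass. With $q = Q = 1$, the initial values reduce to $X_0$, $\tilde\epsilon_0$ and $\tilde\sigma_0^2$. The sketch below assumes the MA sign convention $e_t - b\,e_{t-1}$, which matches $b_\vartheta(z) = 1 - \sum_j b_j z^j$. It also assumes Gaussian $\eta_t$ in the simulator, and the function names are hypothetical. When the initial values match the simulated start, the residuals $\tilde\epsilon_t(\vartheta_0)$ recover the innovations $e_t$ exactly. When they do not, the discrepancy dies out geometrically, at rate $b$, which illustrates why the choice of initial values does not matter asymptotically.

```python
import numpy as np

def simulate_arma_garch11(phi, n, rng):
    """ARMA(1,1)-GARCH(1,1) path with Gaussian eta_t, started at X_0 = c, e_0 = 0, h_0 = omega."""
    c, a, b, omega, alpha, beta = phi
    eta = rng.standard_normal(n)
    X, e = np.empty(n), np.empty(n)
    x_prev, e_prev, h_prev = c, 0.0, omega
    for t in range(n):
        h = omega + alpha * e_prev ** 2 + beta * h_prev
        e[t] = np.sqrt(h) * eta[t]
        X[t] = c + a * (x_prev - c) + e[t] - b * e_prev
        x_prev, e_prev, h_prev = X[t], e[t], h
    return X, e

def arma_garch11_criterion(phi, X, X0, e0, s2_0):
    """l~_n(phi) and the residuals eps~_t, given the initial values X_0, eps~_0, sigma~_0^2."""
    c, a, b, omega, alpha, beta = phi
    x_prev, e_prev, s2_prev = X0, e0, s2_0
    resid = np.empty(len(X))
    total = 0.0
    for t, x in enumerate(X):
        e = x - c - a * (x_prev - c) + b * e_prev           # ARMA residual eps~_t
        s2 = omega + alpha * e_prev ** 2 + beta * s2_prev    # GARCH recursion on residuals
        total += e * e / s2 + np.log(s2)
        resid[t] = e
        x_prev, e_prev, s2_prev = x, e, s2
    return total / len(X), resid

rng = np.random.default_rng(0)
phi0 = (0.1, 0.5, 0.3, 0.05, 0.1, 0.85)     # (c, a, b, omega, alpha, beta)
X, e = simulate_arma_garch11(phi0, 1000, rng)
crit, res = arma_garch11_criterion(phi0, X, X0=0.1, e0=0.0, s2_0=0.05)
```

Minimising `arma_garch11_criterion` over $\phi$, for instance with a generic optimiser subject to the constraints of $\Phi$, gives a QMLE $\hat\phi_n$ in this special case.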


Strong Consistency

Let $a_\vartheta(z) = 1 - \sum_{i=1}^{P}a_i z^i$ and $b_\vartheta(z) = 1 - \sum_{j=1}^{Q}b_j z^j$. Standard assumptions are made on these AR and MA polynomials, and assumption A1 is modified as follows:

- A7: $\phi_0 \in \Phi$ and $\Phi$ is compact.
- A8: For all $\phi \in \Phi$, $a_\vartheta(z)b_\vartheta(z) = 0$ implies $|z| > 1$.
- A9: $a_{\vartheta_0}(z)$ and $b_{\vartheta_0}(z)$ have no common roots, $a_{0P} \neq 0$ or $b_{0Q} \neq 0$.

Under assumptions A2 and A8, $(X_t)$ is supposed to be the unique strictly stationary non‐anticipative solution of model (7.21). Let $\epsilon_t(\vartheta) = b_\vartheta^{-1}(B)a_\vartheta(B)(X_t - c)$, where $B$ denotes the backshift operator, and $\ell_t(\phi) = \epsilon_t^2(\vartheta)/\sigma_t^2(\phi) + \log\sigma_t^2(\phi)$, where $\sigma_t^2(\phi)$ is the non‐anticipative and ergodic strictly stationary solution of (7.10), with $\epsilon_{t-i}^2$ replaced by $\epsilon_{t-i}^2(\vartheta)$. Note that $e_t = \epsilon_t(\vartheta_0)$ and $h_t = \sigma_t^2(\phi_0)$. The following result is an extension of Theorem 7.1.

Asymptotic Normality When the Moment of Order 4 Exists

So far, the asymptotic results of the QMLE (consistency and asymptotic normality in the pure GARCH case, consistency in the ARMA–GARCH case) have not required any moment assumption on the observed process (for the asymptotic normality in the pure GARCH case, a moment of order 4 is assumed for the iid process, not for ε t ). One might think that this will be the same for establishing the asymptotic normality in the ARMA–GARCH case. The following example shows that this is not the case.

This example shows that it is not possible to extend the result of asymptotic normality obtained in the GARCH case to the ARMA–GARCH models without additional moment assumptions. This is not surprising because for ARMA models (which can be viewed as limits of ARMA–GARCH models when the coefficients $\alpha_{0i}$ and $\beta_{0j}$ tend to 0) the asymptotic normality of the QMLE is shown under second‐order moment assumptions. For an ARMA with infinite‐variance innovations, the rate of convergence of the estimators may be faster than in the standard case, and the asymptotic distribution belongs to the class of α‐stable laws, but is non‐Gaussian in general. We show the asymptotic normality under a moment assumption of order 4. Recall that, by Theorem 2.9, this assumption is equivalent to $\rho\{E(A_{0t} \otimes A_{0t})\} < 1$. We make the following assumptions:

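- A10: $\rho\{E(A_{0t} \otimes A_{0t})\} < 1$ and, for all $\theta \in \Theta$, $\sum_{j=1}^{p}\beta_j < 1$.
- A11: $\phi_0 \in \overset{\circ}{\Phi}$, where $\overset{\circ}{\Phi}$ denotes the interior of $\Phi$.
- A12: There exists no set $\Lambda$ of cardinality 2 such that $\mathbb{P}(\eta_t \in \Lambda) = 1$.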

Assumption A10 implies that $Ee_t^4 < \infty$, and therefore $EX_t^4 < \infty$, and makes assumption A2 superfluous. The identifiability assumption A12 is slightly stronger than the first part of assumption A3 when the distribution of $\eta_t$ is not symmetric. We are now in a position to state conditions ensuring the asymptotic normality of the QMLE of an ARMA–GARCH model.
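The moment condition in A10 can be evaluated exactly, because $A_{0t} = \eta_t^2 A_1 + A_2$ with deterministic $A_1$ and $A_2$. Using $E\eta_t^2 = 1$ and $E\eta_t^4 = \kappa$, this gives $E(A_{0t}\otimes A_{0t}) = \kappa A_1\otimes A_1 + A_1\otimes A_2 + A_2\otimes A_1 + A_2\otimes A_2$. The sketch builds this matrix for a GARCH(p, q) with $q \ge 1$ and returns its spectral radius. The function names are hypothetical. Two sanity checks follow from rank‐one arguments. For a GARCH(1, 1), the spectral radius reduces to $(\alpha + \beta)^2 + (\kappa - 1)\alpha^2$. For an ARCH(1), it reduces to $\kappa\alpha^2$.

```python
import numpy as np

def companion_parts(alpha, beta):
    """Write A_0t = eta_t^2 * A1 + A2 for the GARCH(p, q) model (7.1); needs q >= 1."""
    alpha = np.asarray(alpha, dtype=float)
    beta = np.asarray(beta, dtype=float)
    q, p = len(alpha), len(beta)
    d = p + q
    A1, A2 = np.zeros((d, d)), np.zeros((d, d))
    A1[0, :q], A1[0, q:] = alpha, beta          # eps_t^2 row, multiplied by eta_t^2
    A2[1:q, :q - 1] = np.eye(q - 1)             # shift of the lagged eps^2
    if p > 0:
        A2[q, :q], A2[q, q:] = alpha, beta      # sigma_t^2 row
        A2[q + 1:, q:q + p - 1] = np.eye(p - 1) # shift of the lagged sigma^2
    return A1, A2

def rho_kron(alpha, beta, kappa):
    """Spectral radius of E(A_0t kron A_0t), with E eta^2 = 1 and E eta^4 = kappa."""
    A1, A2 = companion_parts(alpha, beta)
    M = (kappa * np.kron(A1, A1) + np.kron(A1, A2)
         + np.kron(A2, A1) + np.kron(A2, A2))
    return np.max(np.abs(np.linalg.eigvals(M)))

print(rho_kron([0.1], [0.85], 3.0))   # Gaussian eta: fourth moment of eps_t finite if < 1
```

The same function applies unchanged to higher orders, for example `rho_kron([0.05, 0.05], [0.8], kappa)`, where no simple closed form is at hand.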

7.3 Application to Real Data

In this section, we employ the QML method to estimate GARCH(1, 1) models on daily returns of 11 stock market indices, namely the CAC, DAX, DJA, DJI, DJT, DJU, FTSE, Nasdaq, Nikkei, SMI, and S&P 500 indices. The observations cover the period from 2 January 1990 to 22 January 2009 (except for those indices for which the first observation is after 1990). The GARCH(1, 1) model has been chosen because it constitutes the reference model, by far the most commonly used in empirical studies. However, in Chapter 8, we will see that it can be worth considering models with higher orders $p$ and $q$.

Table 7.4 displays the estimators of the parameters $\omega, \alpha, \beta$, together with their estimated standard deviations. The last column gives estimates of $\rho_4 = (\alpha + \beta)^2 + (E\eta_t^4 - 1)\alpha^2$, obtained by replacing the unknown parameters by their estimates and $E\eta_t^4$ by the empirical mean of the fourth‐order moment of the standardised residuals. We have $E\epsilon_t^4 < \infty$ if and only if $\rho_4 < 1$. The estimates of the GARCH coefficients are quite homogeneous over all the series and are similar to those usually obtained in empirical studies of daily returns. The coefficients $\alpha$ are close to 0.1, and the coefficients $\beta$ are close to 0.9, which indicates a strong persistence of the shocks on the volatility. The sum $\alpha + \beta$ is greater than 0.98 for 10 of the 11 series, and greater than 0.96 for all the series. Since $\alpha + \beta < 1$, the assumption of second‐order stationarity cannot be rejected, for any series (see Section 8.1). A fortiori, by Remark 2.6, the strict stationarity cannot be rejected. The existence of moments of order 4, $E\epsilon_t^4 < \infty$, is questionable for all the series because $\hat\rho_4$ is extremely close to 1. Recall, however, that consistency and asymptotic normality of the QMLE do not require any moment on the observed process but do require strict stationarity.

| Index | $\omega$ | $\alpha$ | $\beta$ | $\rho_4$ |
|---|---|---|---|---|
| CAC | 0.033 (0.009) | 0.090 (0.014) | 0.893 (0.015) | 1.0067 |
| DAX | 0.037 (0.014) | 0.093 (0.023) | 0.888 (0.024) | 1.0622 |
| DJA | 0.019 (0.005) | 0.088 (0.014) | 0.894 (0.014) | 0.9981 |
| DJI | 0.017 (0.004) | 0.085 (0.013) | 0.901 (0.013) | 1.0020 |
| DJT | 0.040 (0.013) | 0.089 (0.016) | 0.894 (0.018) | 1.0183 |
| DJU | 0.021 (0.005) | 0.118 (0.016) | 0.865 (0.014) | 1.0152 |
| FTSE | 0.013 (0.004) | 0.091 (0.014) | 0.899 (0.014) | 1.0228 |
| Nasdaq | 0.025 (0.006) | 0.072 (0.009) | 0.922 (0.009) | 1.0021 |
| Nikkei | 0.053 (0.012) | 0.100 (0.013) | 0.880 (0.014) | 0.9985 |
| SMI | 0.049 (0.014) | 0.127 (0.028) | 0.835 (0.029) | 1.0672 |
| S&P 500 | 0.014 (0.004) | 0.084 (0.012) | 0.905 (0.012) | 1.0072 |

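The estimated standard deviations are given in parentheses. In the last column, $\rho_4$ is estimated by $(\hat\alpha + \hat\beta)^2 + (\hat\mu_4 - 1)\hat\alpha^2$, where $\hat\mu_4$ is the empirical mean of the fourth powers of the standardised residuals.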
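The persistence statements and the role of the fourth moment of $\eta_t$ can be checked by direct arithmetic on the rounded entries of Table 7.4. The sketch evaluates $\alpha + \beta$ and the value of $(\alpha + \beta)^2 + (\kappa - 1)\alpha^2$ that Gaussian errors ($\kappa = 3$) would give. It also inverts that expression to obtain the value of $\kappa = E\eta_t^4$ consistent with each reported $\rho_4$. Because the table entries are rounded to three decimals, these implied values are indicative only. The dictionary and helper names are hypothetical.

```python
# (alpha, beta, rho_4) copied from Table 7.4 (rounded values)
TABLE_7_4 = {
    "CAC": (0.090, 0.893, 1.0067), "DAX": (0.093, 0.888, 1.0622),
    "DJA": (0.088, 0.894, 0.9981), "DJI": (0.085, 0.901, 1.0020),
    "DJT": (0.089, 0.894, 1.0183), "DJU": (0.118, 0.865, 1.0152),
    "FTSE": (0.091, 0.899, 1.0228), "Nasdaq": (0.072, 0.922, 1.0021),
    "Nikkei": (0.100, 0.880, 0.9985), "SMI": (0.127, 0.835, 1.0672),
    "S&P 500": (0.084, 0.905, 1.0072),
}

def rho4(alpha, beta, kappa):
    """(alpha + beta)^2 + (kappa - 1) alpha^2, with kappa = E eta_t^4."""
    return (alpha + beta) ** 2 + (kappa - 1.0) * alpha ** 2

def implied_kappa(alpha, beta, rho4_value):
    """Value of E eta_t^4 for which rho4(alpha, beta, kappa) equals rho4_value."""
    return 1.0 + (rho4_value - (alpha + beta) ** 2) / alpha ** 2

for name, (a, b, r) in TABLE_7_4.items():
    print(f"{name:8s} alpha+beta={a + b:.3f} "
          f"rho4 if Gaussian={rho4(a, b, 3.0):.4f} implied E eta^4={implied_kappa(a, b, r):.2f}")
```

With Gaussian errors every series would satisfy $\rho_4 < 1$. The reported values exceed 1 for 9 of the 11 series, which points to standardised residuals with fourth moments larger than 3.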

7.4 Proofs of the Asymptotic Results*

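We denote by $K > 0$ and $\rho \in [0, 1)$ generic constants whose values can vary from line to line. As an example, one can write for $0 < \rho_1 < 1$ and $0 < \rho_2 < 1$, $i_1 \ge 0$, $i_2 \ge 0$,

$$K\rho_1^{i_1} + K\rho_2^{i_2} \le K\rho^{\min(i_1, i_2)}, \qquad \sum_{i=0}^{\infty}K\rho^{i} \le K.$$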

To prove the asymptotic normality of the QMLE, we need the following intermediate result.

7.5 Bibliographical Notes

The asymptotic properties of the QMLE of the ARCH models have been established by Weiss (1986) under the condition that the moment of order 4 exists. In the GARCH(1, 1) case, the asymptotic properties have been established by Lumsdaine (1996) (see also Lee and Hansen 1994) for the local QMLE under the strict stationarity assumption. In Lumsdaine (1996), the conditions on the coefficients $\alpha_1$ and $\beta_1$ make it possible to handle the IGARCH(1, 1) model. They are, however, very restrictive with regard to the iid process: it is assumed that $E|\eta_t|^{32} < \infty$ and that the density of $\eta_t$ has a unique mode and is bounded in a neighbourhood of 0. In Lee and Hansen (1994), the consistency of the global estimator is obtained under the assumption of second‐order stationarity.

Berkes, Horváth, and Kokoszka (2003b) was the first paper to give a rigorous proof of the asymptotic properties of the QMLE in the GARCH(p, q) case under very weak assumptions; see also Berkes and Horváth (2003b, 2004), together with Boussama (1998, 2000). The assumptions given in Berkes, Horváth, and Kokoszka (2003b) were weakened slightly in Francq and Zakoïan (2004). The proofs presented here come from that paper. An extension to non‐iid errors was proposed by Escanciano (2009).

Jensen and Rahbek (2004a,b) have shown that the parameter $\alpha_0$ of an ARCH(1) model, or the parameters $\alpha_0$ and $\beta_0$ of a GARCH(1, 1) model, can be consistently estimated, with a standard Gaussian asymptotic distribution and a standard rate of convergence, even if the parameters are outside the strict stationarity region. They considered a constrained version of the QMLE, in which the intercept $\omega$ is fixed (see Exercises 7.13 and 7.14). These results were misunderstood by a number of researchers and practitioners, who wrongly claimed that the QMLE of the GARCH parameters is consistent and asymptotically normal without any stationarity constraint. We have seen in Section 7.1.3 that the QMLE of $\omega_0$ is inconsistent in the non‐stationary ARCH(1) case. The asymptotic properties of the unconstrained QMLE for non‐stationary GARCH(1,1)‐type models were studied in Francq and Zakoïan (, 2013b), as well as tests of strict stationarity and non‐stationarity.

For ARMA–GARCH models, asymptotic results have been established by Ling and Li (1997, 1998), Ling and McAleer (2003a,b), and Francq and Zakoïan (2004). A comparison of the assumptions used in these papers can be found in the last reference. We refer the reader to Straumann (2005) for a detailed monograph on the estimation of GARCH models, to Francq and Zakoïan (2009a) for a review of the literature, and to Straumann and Mikosch (2006) and Bardet and Wintenberger (2009) for extensions to other conditionally heteroscedastic models. Li, Ling, and McAleer (2002) reviewed the literature on the estimation of ARMA–GARCH models, including in particular the case of nonstationary models.

The proof of the asymptotic normality of the QMLE of ARMA models under the second‐order moment assumption can be found, for instance, in Brockwell and Davis (1991). For ARMA models with infinite variance noise, see Davis, Knight, and Liu (1992), Mikosch et al. (1995), and Kokoszka and Taqqu (1996).

7.6 Exercises

- 7.1 (The distribution of $\eta_t$ is symmetric for GARCH models)

  The aim of this exercise is to show property (7.24).

  - Show the result for $j < 0$.
  - For $j \ge 0$, explain why can be written as for some function $h$.
  - Complete the proof of (7.24).

- 7.2 (Almost sure convergence to zero at an exponential rate)

  Let $(\epsilon_t)$ be a strictly stationary process admitting a moment of order $s > 0$. Show that if $\rho \in (0, 1)$, then $\rho^t\epsilon_t^2 \to 0$ a.s.

- 7.3 (Ergodic theorem for nonintegrable processes)

  Prove the following ergodic theorem. If $(X_t)$ is an ergodic and strictly stationary process and if $EX_1$ exists in $\mathbb{R} \cup \{+\infty\}$, then

  $$\frac{1}{n}\sum_{t=1}^{n}X_t \to EX_1 \quad \text{a.s., as } n \to \infty.$$

  The result is shown in Billingsley (1995, p. 284) for iid variables.

  Hint: Consider the truncated variables $X_t^\kappa = X_t\mathbb{1}_{X_t \le \kappa}$, where $\kappa > 0$ with $\kappa$ tending to $+\infty$.

- 7.4 (Uniform ergodic theorem)

  Let $\{X_t(\theta)\}$ be a process of the form

  $$X_t(\theta) = f(\theta, \eta_t, \eta_{t-1}, \dots), \tag{7.94}$$

  where $(\eta_t)$ is strictly stationary and ergodic and $f$ is continuous in $\theta \in \Theta$, $\Theta$ being a compact subset of $\mathbb{R}^d$.

  - Show that the process $\{\inf_{\theta\in\Theta}X_t(\theta)\}_t$ is strictly stationary and ergodic.
  - Does the property still hold true if $X_t(\theta)$ is not of the form (7.94), but it is assumed that $\{X_t(\theta)\}$ is strictly stationary and ergodic and that $X_t(\theta)$ is a continuous function of $\theta$?

- 7.5 (OLS estimator of a GARCH)

  In the framework of the GARCH(p, q) model (7.1), an OLS estimator of $\theta$ is defined as any measurable solution $\hat\theta_n$ of

  $$\hat\theta_n = \arg\min_{\theta}\tilde Q_n(\theta),$$

  where

  $$\tilde Q_n(\theta) = \frac{1}{n}\sum_{t=1}^{n}\left\{\epsilon_t^2 - \tilde\sigma_t^2(\theta)\right\}^2$$

  and $\tilde\sigma_t^2(\theta)$ is defined by (7.4) with, for instance, initial values given by (7.6) or (7.7). Note that the estimator is unconstrained and that the variable $\tilde\sigma_t^2(\theta)$ can take negative values. Similarly, a constrained OLS estimator is defined by

  $$\hat\theta_n^c = \arg\min_{\theta\in\Theta}\tilde Q_n(\theta).$$

  The aim of this exercise is to show that under the assumptions of Theorem 7.1, and if $E\epsilon_t^4 < \infty$, the constrained and unconstrained OLS estimators are strongly consistent. We consider the theoretical criterion

  $$Q_n(\theta) = \frac{1}{n}\sum_{t=1}^{n}\left\{\epsilon_t^2 - \sigma_t^2(\theta)\right\}^2.$$

  - Show that almost surely as $n \to \infty$.
  - Show that the asymptotic criterion is minimised at $\theta_0$, and that $\theta_0$ is the unique minimum.
  - Prove that almost surely as $n \to \infty$.
  - Show that almost surely as $n \to \infty$.

- 7.6 (The mean of the squares of the normalised residuals is equal to 1)

For a GARCH model, estimated by QML with initial values set to zero, the normalized residuals are defined by

,

t = 1, …, n

. Show that almost surely, for Θ large enough,

,

t = 1, …, n

. Show that almost surely, for Θ large enough,

Hint: Note that for all $c > 0$ and all $\theta \in \Theta$, there exists

such that  for all $t \ge 0$, and consider the function  .
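The scaling argument behind the hint can be checked numerically. This sketch assumes a GARCH(1,1) with initial values $\epsilon_0^2 = \sigma_0^2 = 0$. It verifies two things. First, multiplying ω and α by $c > 0$, with β unchanged, multiplies every conditional variance by $c$. Second, the Gaussian quasi-likelihood criterion, viewed as a function of $c$, equals $m/c + \log c$ plus a constant, where $m$ is the mean of $\epsilon_t^2/\sigma_t^2(\theta)$. It is therefore minimised at $c^* = m$, where the rescaled normalised residuals have mean square exactly 1. The parameter values, simulated data and function names (`garch11_sigma2`, `qml_criterion`) are hypothetical choices for illustration.

```python
import numpy as np


def garch11_sigma2(eps, omega, alpha, beta):
    """sigma_t^2(theta), t = 1..n, for a GARCH(1,1) with eps_0^2 = sigma_0^2 = 0."""
    sigma2 = np.empty(len(eps))
    prev_eps2, prev_sigma2 = 0.0, 0.0  # initial values set to zero
    for t, e in enumerate(eps):
        sigma2[t] = omega + alpha * prev_eps2 + beta * prev_sigma2
        prev_eps2, prev_sigma2 = e ** 2, sigma2[t]
    return sigma2


def qml_criterion(eps, sigma2):
    """Gaussian quasi-likelihood criterion (up to constants), to be minimised."""
    return np.mean(eps ** 2 / sigma2 + np.log(sigma2))


# Simulated data from an illustrative GARCH(1,1) with Gaussian innovations
rng = np.random.default_rng(0)
w0, a0, b0 = 0.1, 0.1, 0.8
eps = np.empty(2000)
prev_eps2, prev_sigma2 = 0.0, w0 / (1 - a0 - b0)
for t in range(len(eps)):
    prev_sigma2 = w0 + a0 * prev_eps2 + b0 * prev_sigma2
    eps[t] = np.sqrt(prev_sigma2) * rng.standard_normal()
    prev_eps2 = eps[t] ** 2

# An arbitrary parameter value theta = (omega, alpha, beta)
omega, alpha, beta = 0.2, 0.15, 0.7
sigma2 = garch11_sigma2(eps, omega, alpha, beta)

# Scaling (omega, alpha) by c scales every sigma_t^2 by c (because initial values are 0)
c = 1.7
scaled = garch11_sigma2(eps, c * omega, c * alpha, beta)

# The criterion in c is m/c + log c + const, minimised at c* = m
c_star = np.mean(eps ** 2 / sigma2)
sigma2_star = garch11_sigma2(eps, c_star * omega, c_star * alpha, beta)
mean_sq_resid = np.mean(eps ** 2 / sigma2_star)  # equals 1 up to rounding
print(c_star, mean_sq_resid)
```

With nonzero fixed initial values the first check would fail, which is why the exercise insists on zero initial values. The requirement that Θ be large enough presumably ensures that the rescaled parameter $(c^*\omega, c^*\alpha, \beta)$ remains admissible.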

- 7.7 ( block‐diagonal)

Show that

have the block‐diagonal form given in Theorem 7.5 when the distribution of $\eta_t$ is symmetric.

- 7.8 (Forms of ℐ and  in the AR(1)‐ARCH(1) case)

We consider the QML estimation of the AR(1)‐ARCH(1) model

assuming that $\omega_0 = 1$ is known and without specifying the distribution of $\eta_t$.

- Give the explicit form of the matrices

in Theorem 7.5 (with an obvious adaptation of the notation, because the parameter here is $(a_0, \alpha_0)$).

- Give the block‐diagonal form of these matrices when the distribution of $\eta_t$ is symmetric, and verify that the asymptotic variance of the estimator of the ARCH parameter

  - does not depend on the AR parameter, and
  - is the same as for the estimator of a pure ARCH (without the AR part).

- Compute Σ when $\alpha_0 = 0$. Is the asymptotic variance of the estimator of $a_0$ the same as that obtained when estimating an AR(1)? Verify the results obtained by simulation in the corresponding column of Table 7.3.
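Simulation checks of the kind mentioned in the last question need a QML estimator of $(a_0, \alpha_0)$. This sketch makes several assumptions. It takes the model to have the standard form $X_t = a_0 X_{t-1} + \epsilon_t$, $\epsilon_t = \sigma_t\eta_t$, $\sigma_t^2 = 1 + \alpha_0\epsilon_{t-1}^2$ (so $\omega_0 = 1$ is known). It simulates with Gaussian $\eta_t$ and replaces numerical optimisation by a crude grid search. The grids, the values $a_0 = 0.5$, $\alpha_0 = 0.4$ and the function name `qml_ar1_arch1` are illustrative. They are not the design used for Table 7.3.

```python
import numpy as np


def qml_ar1_arch1(x, a_grid, alpha_grid):
    """Grid-search QMLE of (a, alpha) in X_t = a X_{t-1} + eps_t, sigma_t^2 = 1 + alpha eps_{t-1}^2."""
    best_crit, best = np.inf, (None, None)
    for a in a_grid:
        e = x[1:] - a * x[:-1]  # residuals eps_t(a), t = 1, ..., n-1
        e2_lag, e2 = e[:-1] ** 2, e[1:] ** 2
        # sigma_t^2(a, alpha): one row per alpha in the grid, one column per t
        sigma2 = 1.0 + np.outer(alpha_grid, e2_lag)
        crit = np.mean(e2 / sigma2 + np.log(sigma2), axis=1)
        k = np.argmin(crit)
        if crit[k] < best_crit:
            best_crit, best = crit[k], (a, alpha_grid[k])
    return best


# Simulate an AR(1)-ARCH(1) path with illustrative values a0 = 0.5, alpha0 = 0.4
rng = np.random.default_rng(2)
n, a0, alpha0 = 3000, 0.5, 0.4
x = np.zeros(n)
e_prev = 0.0
for t in range(1, n):
    e = np.sqrt(1.0 + alpha0 * e_prev ** 2) * rng.standard_normal()
    x[t] = a0 * x[t - 1] + e
    e_prev = e

a_hat, alpha_hat = qml_ar1_arch1(
    x, a_grid=np.linspace(-0.95, 0.95, 191), alpha_grid=np.linspace(0.0, 1.5, 151)
)
print(a_hat, alpha_hat)
```

Repeating the simulation many times, and replacing the Gaussian draws by a standardised non-Gaussian law, gives empirical variances that can be compared with the explicit matrices obtained in the first two questions.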

- 7.9 (A useful result in showing asymptotic normality)

Let $(J_t(\theta))$ be a sequence of random matrices which are functions of a vector of parameters θ. We consider an estimator

which strongly converges to the vector $\theta_0$. Assume that

where J is a matrix. Show that if, for all ε > 0, there exists a neighbourhood $V(\theta_0)$ of $\theta_0$ such that

(7.95)

where ‖ ⋅ ‖ denotes a matrix norm, then

Give an example showing that condition (7.95) is not necessary for the latter convergence to hold in probability.

- 7.10 (A lower bound for the asymptotic variance of the QMLE of an ARCH)

Show that, for the ARCH(q) model, under the assumptions of Theorem 7.2,

in the sense that the difference is a positive semi‐definite matrix.

Hint: Compute

and show that  is a variance matrix.

- 7.11 (A striking property of )

For a GARCH(p, q) model we have, under the assumptions of Theorem 7.2,

The objective of the exercise is to show that

(7.96)

- Show the property in the ARCH case.

Hint: Compute

,

and

.

- In the GARCH case, let

. Show that

- Complete the proof of (7.96).
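For the ARCH case, one identity that is easy to check numerically is $\theta_0'\,\partial\sigma_t^2(\theta_0)/\partial\theta = \sigma_t^2$. It holds because $\sigma_t^2 = \omega_0 + \sum_i \alpha_{0i}\epsilon_{t-i}^2$ is linear in θ. This sketch uses an ARCH(2) with illustrative parameters and Gaussian $\eta_t$. It forms sample counterparts of $J = E(\sigma_t^{-4}\,\partial\sigma_t^2/\partial\theta\,\partial\sigma_t^2/\partial\theta')$, the form of J in Theorem 7.2, and of $\Omega = E(\sigma_t^{-2}\,\partial\sigma_t^2/\partial\theta)$. The name Ω is introduced here only for illustration. The sketch then checks that the identity forces $\hat J\theta_0 = \hat\Omega$ and $\hat\Omega'\hat J^{-1}\hat\Omega = 1$ exactly in the sample. It is not claimed that this reproduces the statement of (7.96) itself.

```python
import numpy as np

# Illustrative ARCH(2) parameter theta0 = (omega, alpha_1, alpha_2), Gaussian eta_t
rng = np.random.default_rng(3)
theta0 = np.array([0.5, 0.3, 0.2])
n = 5000
eps = np.zeros(n)
for t in range(2, n):
    sigma2_t = theta0 @ np.array([1.0, eps[t - 1] ** 2, eps[t - 2] ** 2])
    eps[t] = np.sqrt(sigma2_t) * rng.standard_normal()

# Row t of D is d_t = d sigma_t^2 / d theta = (1, eps_{t-1}^2, eps_{t-2}^2), t = 2, ..., n-1
D = np.column_stack([np.ones(n - 2), eps[1:-1] ** 2, eps[:-2] ** 2])
sigma2 = D @ theta0  # homogeneity: theta0' d_t = sigma_t^2

# Sample J = mean of d_t d_t' / sigma_t^4 and Omega = mean of d_t / sigma_t^2
J_hat = (D / sigma2[:, None] ** 2).T @ D / len(sigma2)
Omega_hat = np.mean(D / sigma2[:, None], axis=0)

print(J_hat @ theta0 - Omega_hat)                     # zero up to rounding
print(Omega_hat @ np.linalg.solve(J_hat, Omega_hat))  # one up to rounding
```

In the GARCH case, $\sigma_t^2$ is no longer linear in θ because of the lagged variances. The hint for the second question is aimed at recovering an analogous relation there.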

- 7.12 (A condition required for the generalised Bartlett formula)

Using (7.24), show that if the distribution of $\eta_t$ is symmetric and if

, then formula (B.13) holds true, that is,

- 7.13 (Constrained QMLE of the parameter $\alpha_0$ of a nonstationary ARCH(1) process)

Jensen and Rahbek (2004a) consider the ARCH(1) model (7.15), in which the parameter $\omega_0 > 0$ is assumed to be known ($\omega_0 = 1$, for instance) and only $\alpha_0$ is unknown. They work with the constrained QMLE of $\alpha_0$ defined by

(7.97)

where

. Assume therefore that $\omega_0 = 1$, and suppose that the nonstationarity condition (7.16) is satisfied.

- Verify that

and that

- Prove that

- Determine the almost sure limit of

- Show that for all

, almost surely

- Prove that if

almost surely (see Exercise 7.14), then

- Does the result change when

and $\omega_0 \neq 1$?

- Discuss the practical usefulness of this result for estimating ARCH models.
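A small simulation helps in exploring this exercise and the next one. This sketch assumes Gaussian $\eta_t$, for which $E\log\eta_t^2 = \psi(1/2) + \log 2 \approx -1.27$. Taking $\alpha_0 = 6$ then gives $E\log(\alpha_0\eta_t^2) \approx 0.52 > 0$, so the process is not strictly stationary. The constrained QMLE is approximated by a grid search over a bounded interval of α, with ω held fixed in the criterion. The `omega` argument of the hypothetical function `jr_qmle` is the value used in the criterion. It need not equal the true $\omega_0$ passed to the equally hypothetical `simulate_arch1`, which lets one look informally at the questions about $\omega_0 \neq 1$. The sample size, grid and parameter values are arbitrary. Because $\epsilon_t^2$ grows geometrically in this regime, much longer samples would overflow double-precision arithmetic.

```python
import numpy as np


def simulate_arch1(n, omega0, alpha0, rng):
    """ARCH(1) path with sigma_t^2 = omega0 + alpha0 * eps_{t-1}^2, eps_0 = 0, Gaussian eta_t."""
    eps = np.empty(n)
    prev = 0.0
    for t in range(n):
        eps[t] = np.sqrt(omega0 + alpha0 * prev ** 2) * rng.standard_normal()
        prev = eps[t]
    return eps


def jr_qmle(eps, alpha_grid, omega=1.0):
    """Grid approximation of the QMLE of alpha, omega being held fixed in the criterion."""
    e2_lag, e2 = eps[:-1] ** 2, eps[1:] ** 2
    sigma2 = omega + np.outer(alpha_grid, e2_lag)  # sigma_t^2(alpha), one row per alpha
    crit = np.mean(e2 / sigma2 + np.log(sigma2), axis=1)
    return alpha_grid[np.argmin(crit)]


rng = np.random.default_rng(4)
alpha_grid = np.linspace(0.01, 15.0, 1500)
eps = simulate_arch1(500, omega0=1.0, alpha0=6.0, rng=rng)
alpha_hat = jr_qmle(eps, alpha_grid, omega=1.0)
print(np.log(eps[-1] ** 2), alpha_hat)  # log eps_t^2 has drifted far from 0
```

Calling `simulate_arch1` with a different `omega0` while keeping `omega=1.0` in `jr_qmle` gives a quick numerical check to set beside the analytical answer.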

- 7.14 (Strong consistency of Jensen and Rahbek's estimator)

We consider the framework of Exercise 7.13 and follow the lines of the proof of (7.19).

- Show that

converges almost surely to $\alpha_0$ when $\omega_0 = 1$.

- Does the result change if

is replaced by  and if $\omega$ and $\omega_0$ are arbitrary positive numbers? Does it entail the convergence result (7.19)?