Now let's get back to the task of writing a neural network and thinking of it as a mathematical expression represented as a graph. Recall that the code looks something like this:

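```go
package main

import (
	"fmt"

	G "gorgonia.org/gorgonia"
	"gorgonia.org/tensor"
)

// Float is the element type used for every node in the graph.
var Float = tensor.Float64

// N is the number of images per batch (an illustrative value).
const N = 100

func main() {
	g := G.NewGraph()

	// Input: N images, each flattened to 784 (28 × 28) values.
	x := G.NewMatrix(g, Float, G.WithName("x"), G.WithShape(N, 784))

	// First layer: weights drawn from a Glorot uniform distribution, zero biases.
	w := G.NewMatrix(g, Float, G.WithName("w"), G.WithShape(784, 800),
		G.WithInit(G.GlorotU(1.0)))
	b := G.NewMatrix(g, Float, G.WithName("b"), G.WithShape(N, 800),
		G.WithInit(G.Zeroes()))

	xw, _ := G.Mul(x, w)     // (N, 784) × (784, 800) → (N, 800)
	xwb, _ := G.Add(xw, b)   // add the biases
	act, _ := G.Sigmoid(xwb) // non-linearity

	// Second layer: 800 hidden units to 10 outputs.
	w2 := G.NewMatrix(g, Float, G.WithName("w2"), G.WithShape(800, 10),
		G.WithInit(G.GlorotU(1.0)))
	b2 := G.NewMatrix(g, Float, G.WithName("b2"), G.WithShape(N, 10),
		G.WithInit(G.Zeroes()))

	xw2, _ := G.Mul(act, w2) // (N, 800) × (800, 10) → (N, 10)
	xwb2, _ := G.Add(xw2, b2)
	sm, _ := G.SoftMax(xwb2) // each row sums to 1

	// Go rejects unused variables, so report the output shape: (N, 10).
	fmt.Println(sm.Shape())
}
```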

Now let's go through this code.

First, we create a new expression graph with g := G.NewGraph(). An expression graph is a container that holds a mathematical expression. Why would we want an expression graph? Because a neural network is itself a mathematical expression, and in Gorgonia that expression is held in the *gorgonia.ExprGraph object that NewGraph returns.

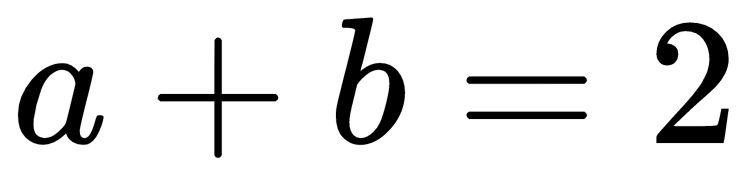

Mathematical expressions are only interesting if we use variables. $1 + 1 = 2$ is quite an uninteresting expression, because you can't do much with it. The only thing you can do with it is evaluate it and see whether it returns true or false.

$1 + a = 2$ is slightly more interesting. But, then again, a can only be 1.

Consider, however, the expression $a + b = 2$. With two variables, it suddenly becomes a lot more interesting. The values that a and b can take depend on one another, and there is a whole range of pairs of numbers that can fit into a and b.

Recall that each layer of a neural network is just a mathematical expression that reads like this: $\sigma(xw + b)$, where $\sigma$ is a non-linearity such as the sigmoid. In this case, x, w, and b are variables, so we create them. Note that Gorgonia treats variables the way a programming language does: you have to tell the system what each variable represents.

In Go, you would do that by typing var x Foo, which tells the Go compiler that x is of type Foo. In Gorgonia, mathematical variables are declared using NewMatrix, NewVector, NewScalar, and NewTensor. x := G.NewMatrix(g, Float, G.WithName("x"), G.WithShape(N, 784)) simply says that x is a matrix in expression graph g, is named x, and has a shape of (N, 784).

Here, readers may recognize 784 as a familiar number: it is 28 × 28, the number of pixels in an MNIST image. What this tells us is that x represents the input, which is N images. x, therefore, is a matrix of N rows, where each row represents a single flattened image (784 floating-point values).
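The following sketch shows how N square images could be turned into the (N, 784) input matrix described above. It assumes row-major flattening (pixel (r, c) lands at index r*28 + c), which the text does not specify; flatten and batch are hypothetical helpers in plain Go, not part of Gorgonia.

```go
package main

import "fmt"

const side = 28 // MNIST images are 28×28 pixels

// flatten turns one 28×28 image into a row of 784 values,
// reading pixels row by row (row-major order).
func flatten(img [side][side]float64) []float64 {
	row := make([]float64, 0, side*side)
	for r := 0; r < side; r++ {
		row = append(row, img[r][:]...)
	}
	return row
}

// batch stacks N flattened images into an N×784 matrix.
func batch(imgs [][side][side]float64) [][]float64 {
	out := make([][]float64, len(imgs))
	for i, img := range imgs {
		out[i] = flatten(img)
	}
	return out
}

func main() {
	var img [side][side]float64
	img[1][2] = 1 // a single lit pixel at row 1, column 2
	x := batch([][side][side]float64{img, img, img})
	fmt.Println(len(x), len(x[0])) // 3 784
	fmt.Println(x[0][1*side+2])    // 1
}
```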

The eagle-eyed reader will notice that w and b have extra options that the declaration of x lacks. NewMatrix simply declares the variable in the expression graph; no value is associated with it. This leaves us free to decide when a value is attached to the variable. For the weight matrix, however, we want the equation to start with some initial values. G.WithInit(G.GlorotU(1.0)) is a construction option that populates the weight matrix with values drawn from a Glorot uniform distribution with a gain of 1.0 (G.Uniform, by contrast, takes explicit lower and upper bounds rather than a gain). If you imagine yourself coding in a language built specifically for neural networks, it would look something like this: var w Matrix(784, 800) = Uniform(1.0).

Following that, we simply write out the mathematical equation. $xw$ is a matrix multiplication between $x$ and $w$; hence, xw, _ := G.Mul(x, w). At this point, it should be clarified that we are merely describing the computation that is supposed to happen; it has not happened yet. In this way, it is not unlike writing a program: writing code is not the same as running it.
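The difference between describing a computation and running it can be shown with a toy expression tree in plain Go. This is a hypothetical, scalar-only sketch (the types expr, variable, add and mul are invented for illustration and are not how Gorgonia is implemented): building y performs no arithmetic, and numbers only flow once values are bound and eval is called.

```go
package main

import "fmt"

// expr is a node in a tiny, hypothetical expression graph: it describes a
// computation but does nothing until eval is called.
type expr interface {
	eval(env map[string]float64) float64
}

type variable string
type add struct{ l, r expr }
type mul struct{ l, r expr }

func (v variable) eval(env map[string]float64) float64 { return env[string(v)] }
func (a add) eval(env map[string]float64) float64 { return a.l.eval(env) + a.r.eval(env) }
func (m mul) eval(env map[string]float64) float64 { return m.l.eval(env) * m.r.eval(env) }

func main() {
	// Describing x*w + b: no arithmetic happens on this line.
	y := add{mul{variable("x"), variable("w")}, variable("b")}

	// Running it: values are bound to the variables and the tree is evaluated.
	fmt.Println(y.eval(map[string]float64{"x": 2, "w": 3, "b": 1})) // 7
}
```

The same description can be evaluated many times with different bindings, which is the property that lets a graph be built once and then fed batch after batch.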

G.Mul and most operations in Gorgonia actually return an error. For the purposes of this demonstration, we're ignoring any errors that may arise from symbolically multiplying x and w. What could possibly go wrong with simple multiplication? Well, we're dealing with matrix multiplication, so the shapes must have matching inner dimensions. An (N, 784) matrix can only be multiplied by a (784, M) matrix, which yields an (N, M) matrix. If the second matrix does not have 784 rows, an error is returned. So, in real production code, error handling is a must.
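The shape rule can be captured in a few lines. This plain-Go sketch (the shape type and mulShape function are hypothetical, not Gorgonia's API) returns the shape of a matrix product, or an error when the inner dimensions disagree, mirroring the kind of failure G.Mul reports.

```go
package main

import "fmt"

// shape is a (rows, cols) pair.
type shape [2]int

// mulShape returns the shape of a×b, or an error if the inner
// dimensions disagree: (n, k) × (k, m) → (n, m).
func mulShape(a, b shape) (shape, error) {
	if a[1] != b[0] {
		return shape{}, fmt.Errorf("shape mismatch: %v × %v: inner dimensions %d and %d differ", a, b, a[1], b[0])
	}
	return shape{a[0], b[1]}, nil
}

func main() {
	const N = 100
	s, err := mulShape(shape{N, 784}, shape{784, 800})
	fmt.Println(s, err) // [100 800] <nil>

	// Multiplying the input directly by the second layer's weights is invalid.
	_, err = mulShape(shape{N, 784}, shape{800, 10})
	fmt.Println(err)
}
```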

Speaking of must, Gorgonia comes with a utility function called G.Must. Taking a cue from the text/template and html/template packages in the standard library, G.Must panics when an error occurs. To use it, simply write this: xw := G.Must(G.Mul(x, w)).
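The Must pattern itself is easy to reproduce. The sketch below is a generic, plain-Go version (it needs Go 1.18 or later for type parameters); Must and divide are hypothetical names for illustration, while G.Must in Gorgonia is specific to graph nodes. The f(g()) call form works because divide's two results match Must's two parameters.

```go
package main

import (
	"errors"
	"fmt"
)

// Must returns v if err is nil and panics otherwise, in the spirit of
// template.Must.
func Must[T any](v T, err error) T {
	if err != nil {
		panic(err)
	}
	return v
}

// divide is a hypothetical operation that can fail.
func divide(a, b float64) (float64, error) {
	if b == 0 {
		return 0, errors.New("division by zero")
	}
	return a / b, nil
}

func main() {
	fmt.Println(Must(divide(6, 3))) // 2

	defer func() { fmt.Println("recovered:", recover()) }()
	Must(divide(1, 0)) // panics with "division by zero"
}
```

Must trades explicit error handling for brevity, so it suits setup code where an error means a programming mistake (such as mismatched shapes) rather than a recoverable condition.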

After the inputs are multiplied by the weights, we add the biases using G.Add(xw, b). Again, errors may occur, but in this example, we're eliding the error checks.

Lastly, we take the result and apply a non-linearity, the sigmoid function, with G.Sigmoid(xwb). This completes the layer. If you follow the shapes through, its output is an (N, 800) matrix.

The completed layer is then used as the input for the next layer. The next layer has a similar layout to the first, except that instead of a sigmoid non-linearity, G.SoftMax is applied. This ensures that each row of the resulting matrix sums to 1, so each row can be read as a probability distribution over the 10 outputs.