- Hadoop Beginner's Guide

- Table of Contents

- Hadoop Beginner's Guide

- Credits

- About the Author

- About the Reviewers

- www.PacktPub.com

- Preface

- 1. What It's All About

- 2. Getting Hadoop Up and Running

- Hadoop on a local Ubuntu host

- Time for action – checking the prerequisites

- Time for action – downloading Hadoop

- Time for action – setting up SSH

- Time for action – using Hadoop to calculate Pi

- Time for action – configuring the pseudo-distributed mode

- Time for action – changing the base HDFS directory

- Time for action – formatting the NameNode

- Time for action – starting Hadoop

- Time for action – using HDFS

- Time for action – WordCount, the Hello World of MapReduce

- Using Elastic MapReduce

- Time for action – WordCount on EMR using the management console

- Comparison of local versus EMR Hadoop

- Summary

- 3. Understanding MapReduce

- Key/value pairs

- The Hadoop Java API for MapReduce

- Writing MapReduce programs

- Time for action – setting up the classpath

- Time for action – implementing WordCount

- Time for action – building a JAR file

- Time for action – running WordCount on a local Hadoop cluster

- Time for action – running WordCount on EMR

- Time for action – WordCount the easy way

- Walking through a run of WordCount

- Startup

- Splitting the input

- Task assignment

- Task startup

- Ongoing JobTracker monitoring

- Mapper input

- Mapper execution

- Mapper output and reduce input

- Partitioning

- The optional partition function

- Reducer input

- Reducer execution

- Reducer output

- Shutdown

- That's all there is to it!

- Apart from the combiner…maybe

- Time for action – WordCount with a combiner

- Time for action – fixing WordCount to work with a combiner

- Hadoop-specific data types

- Time for action – using the Writable wrapper classes

- Input/output

- Summary

- 4. Developing MapReduce Programs

- Using languages other than Java with Hadoop

- Time for action – implementing WordCount using Streaming

- Analyzing a large dataset

- Time for action – summarizing the UFO data

- Time for action – summarizing the shape data

- Time for action – correlating of sighting duration to UFO shape

- Time for action – performing the shape/time analysis from the command line

- Time for action – using ChainMapper for field validation/analysis

- Time for action – using the Distributed Cache to improve location output

- Counters, status, and other output

- Time for action – creating counters, task states, and writing log output

- Summary

- 5. Advanced MapReduce Techniques

- Simple, advanced, and in-between

- Joins

- Time for action – reduce-side join using MultipleInputs

- Graph algorithms

- Time for action – representing the graph

- Time for action – creating the source code

- Time for action – the first run

- Time for action – the second run

- Time for action – the third run

- Time for action – the fourth and last run

- Using language-independent data structures

- Time for action – getting and installing Avro

- Time for action – defining the schema

- Time for action – creating the source Avro data with Ruby

- Time for action – consuming the Avro data with Java

- Time for action – generating shape summaries in MapReduce

- Time for action – examining the output data with Ruby

- Time for action – examining the output data with Java

- Summary

- 6. When Things Break

- Failure

- Time for action – killing a DataNode process

- Time for action – the replication factor in action

- Time for action – intentionally causing missing blocks

- Time for action – killing a TaskTracker process

- Time for action – killing the JobTracker

- Time for action – killing the NameNode process

- What just happened?

- Starting a replacement NameNode

- The role of the NameNode in more detail

- File systems, files, blocks, and nodes

- The single most important piece of data in the cluster – fsimage

- DataNode startup

- Safe mode

- SecondaryNameNode

- So what to do when the NameNode process has a critical failure?

- BackupNode/CheckpointNode and NameNode HA

- Hardware failure

- Host failure

- Host corruption

- The risk of correlated failures

- Task failure due to software

- What just happened?

- Time for action – causing task failure

- Time for action – handling dirty data by using skip mode

- Summary

- 7. Keeping Things Running

- A note on EMR

- Hadoop configuration properties

- Time for action – browsing default properties

- Setting up a cluster

- Time for action – examining the default rack configuration

- Time for action – adding a rack awareness script

- Cluster access control

- Time for action – demonstrating the default security

- Managing the NameNode

- Time for action – adding an additional fsimage location

- Time for action – swapping to a new NameNode host

- Managing HDFS

- MapReduce management

- Time for action – changing job priorities and killing a job

- Scaling

- Summary

- 8. A Relational View on Data with Hive

- Overview of Hive

- Setting up Hive

- Time for action – installing Hive

- Using Hive

- Time for action – creating a table for the UFO data

- Time for action – inserting the UFO data

- Time for action – validating the table

- Time for action – redefining the table with the correct column separator

- Time for action – creating a table from an existing file

- Time for action – performing a join

- Time for action – using views

- Time for action – exporting query output

- Time for action – making a partitioned UFO sighting table

- Time for action – adding a new User Defined Function (UDF)

- Hive on Amazon Web Services

- Time for action – running UFO analysis on EMR

- Summary

- 9. Working with Relational Databases

- Common data paths

- Setting up MySQL

- Time for action – installing and setting up MySQL

- Time for action – configuring MySQL to allow remote connections

- Time for action – setting up the employee database

- Getting data into Hadoop

- Time for action – downloading and configuring Sqoop

- Time for action – exporting data from MySQL to HDFS

- Time for action – exporting data from MySQL into Hive

- Time for action – a more selective import

- Time for action – using a type mapping

- Time for action – importing data from a raw query

- Getting data out of Hadoop

- Time for action – importing data from Hadoop into MySQL

- Time for action – importing Hive data into MySQL

- Time for action – fixing the mapping and re-running the export

- AWS considerations

- Summary

- 10. Data Collection with Flume

- A note about AWS

- Data data everywhere...

- Time for action – getting web server data into Hadoop

- Introducing Apache Flume

- Time for action – installing and configuring Flume

- Time for action – capturing network traffic in a log file

- Time for action – logging to the console

- Time for action – capturing the output of a command to a flat file

- Time for action – capturing a remote file in a local flat file

- Time for action – writing network traffic onto HDFS

- Time for action – adding timestamps

- Time for action – multi level Flume networks

- Time for action – writing to multiple sinks

- The bigger picture

- Summary

- 11. Where to Go Next

- A. Pop Quiz Answers

- Index

Regardless of these limitations, let's demonstrate that, in the right situations, we can use Sqoop to directly export data stored in Hive.

- Remove any existing data in the employee table:

$ echo "truncate employees" | mysql –u hadoopuser –p hadooptestYou will receive the following response:

Query OK, 0 rows affected (0.01 sec) - Check the contents of the Hive warehouse for the employee table:

$ hadoop fs –ls /user/hive/warehouse/employeesYou will receive the following response:

Found 1 items … /user/hive/warehouse/employees/part-m-00000

- Perform the Sqoop export:

sqoop export --connect jdbc:mysql://10.0.0.100/hadooptest --username hadoopuser –P --table employees --export-dir /user/hive/warehouse/employees --input-fields-terminated-by '�01' --input-lines-terminated-by ' '

Firstly, we truncated the employees table in MySQL to remove any existing data and then confirmed the employee table data was where we expected it to be.

The

Sqoop export command is like before; the only changes are the different source location for the data and the addition of explicit field and line terminators. Recall that Hive, by default, uses ASCII code 001 and

for its field and line terminators, respectively (also recall, though, that we have previously imported files into Hive with other separators, so this is something that always needs to be checked).

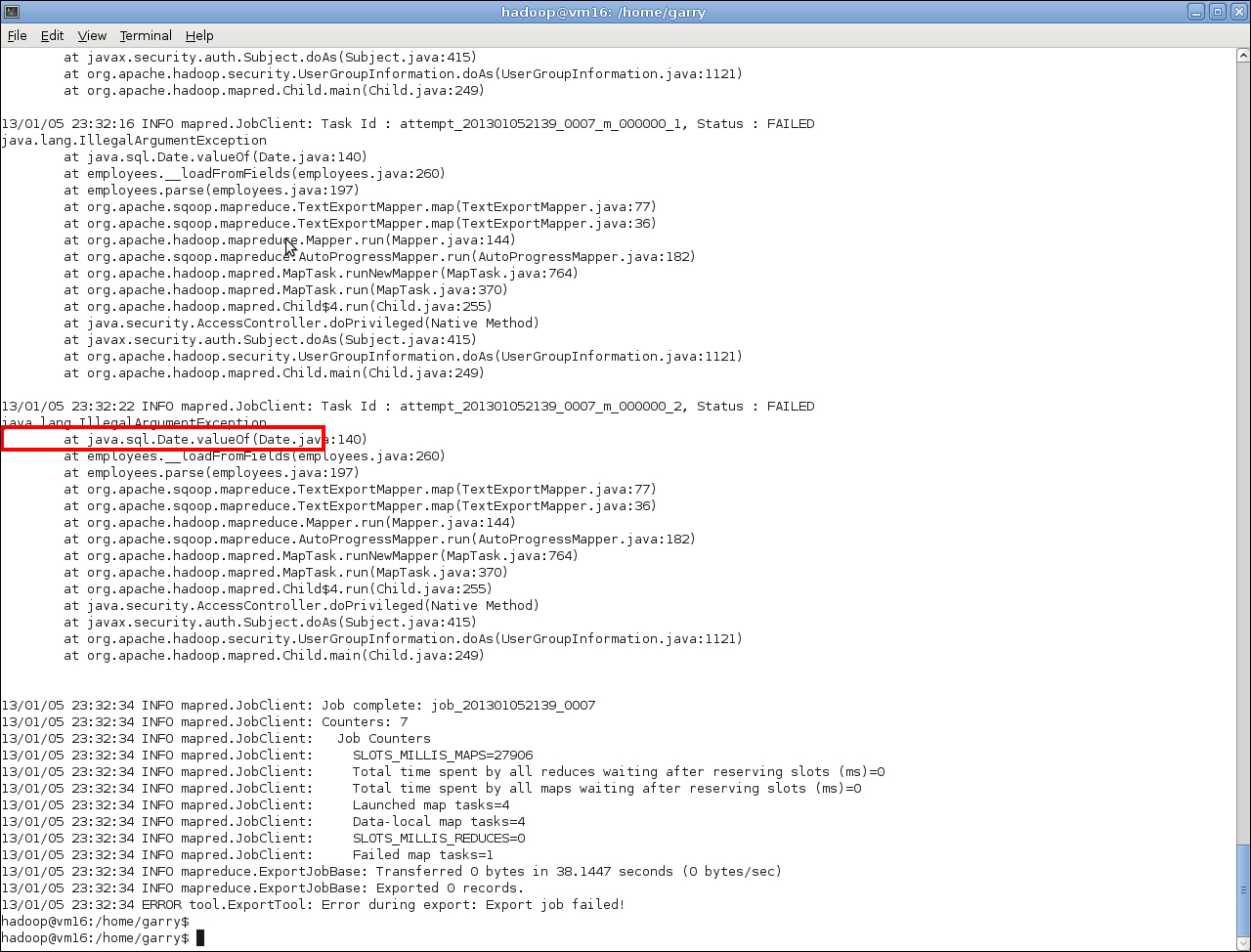

We execute the Sqoop command and watch it fail due to Java IllegalArgumentExceptions

when trying to create instances of java.sql.Date.

We are now hitting the reverse of the problem we encountered earlier; the original type in the source MySQL table had a datatype not supported by Hive, and we converted the data to match the available type of TIMESTAMP. When exporting data back again, however, we are now trying to create a DATE using a TIMESTAMP value, which is not possible without some conversion.

The lesson here is that our earlier approach of doing a one-way conversion only worked for as long as we only had data flowing in one direction. As soon as we need bi-directional data transfer, mismatched types between Hive and the relational store add complexity and require the insertion of conversion routines.

-

No Comment