In this section, we'll set up learning and optimization functions, compile the model, fit it to training and testing data, and then actually run the model and see an animation indicating the effects on loss and accuracy.

In the following screenshot, we are compiling our model with loss, optimizer, and metrics:

The loss function is a mathematical function that tells optimizer how well it's doing. An optimizer function is a mathematical program that searches the available parameters in order to minimize the loss function. The metrics parameter are outputs from your machine learning model that should be human readable so that you can understand how well your model is running. Now, these loss and optimizer parameters are laden with math. By and large, you can approach this as a cookbook. When you are running a machine learning model with Keras, you should effectively choose adam (it's the default). In terms of a loss function, when you're working with classification problems, such as the MNIST digits, you should use categorical cross-entropy. This cookbook-type formula should serve you well.

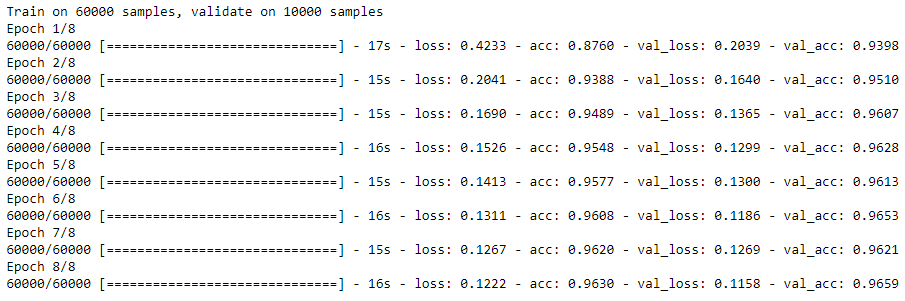

Now, we are going to prepare to fit the model with our x training data—which consists of the actual MNIST digit images—and the y training parameter, which consists of the zero to nine categorical output labels. One new concept we have here is batch_size. This is the number of images per execution loop. Generally, this is limited by the available memory, but smaller batch sizes (32 to 64) generally perform better. And how about this strange word: epoch. Epochs simply refer to the number of loops. For example, when we say eight epochs, what we mean is that the machine learning model will loop over the training data eight times and will use the testing data to see how accurate the model has become eight times. As a model repeatedly looks at the same data, it improves in accuracy, as you can see in the following screenshot:

Finally, we come to the validation data, also known as the testing data. This is actually used to compute the accuracy. At the end of each epoch, the model is partially trained, and then the testing data is run through the model generating a set of trial predictions, which are used to score the accuracy. Machine learning involves an awful lot of waiting on the part of humans. We'll go ahead and skip the progress of each epoch; you'll get plenty of opportunities to watch these progress bars grow on your own when you run these samples.

Now, let's talk a little bit about the preceding output. As the progress bar grows, you can see the number of sample images it's running through. But there's also the loss function and the metrics parameter; here, we're using accuracy. So, the loss function that feeds back into the learner, and this is really how machine learning learns; it's trying to minimize that loss by iteratively setting the numerical parameters inside the model in order to get that loss number to go down. The accuracy is there so that you can understand what's going on. In this case, the accuracy represents how often the model guesses the right digit. So, just in terms of thinking of this as a cookbook, categorical cross-entropy is the loss function you effectively always want to use for a classification problem like this, and adam is the learning algorithm that is the most sensible default to select; accuracy is a great output metrics that you can use to see how well your model's running.