Now, let's have a quick look at the Java code doing the convolution, and then build the Java application with the six filter types we have already seen, along with some different images of course.

This is the main class, EdgeDetection:

package ramo.klevis.ml;

import javax.imageio.ImageIO;

import java.awt.*;

import java.awt.image.BufferedImage;

import java.io.File;

import java.io.IOException;

import java.util.HashMap;

public class EdgeDetection {

We'll start by defining the six filters, with their values, that we saw in the previous section:

public static final String HORIZONTAL_FILTER = "Horizontal Filter";

public static final String VERTICAL_FILTER = "Vertical Filter";

public static final String SOBEL_FILTER_VERTICAL = "Sobel Vertical Filter";

public static final String SOBEL_FILTER_HORIZONTAL = "Sobel Horizontal Filter";

public static final String SCHARR_FILTER_VETICAL = "Scharr Vertical Filter";

public static final String SCHARR_FILTER_HORIZONTAL = "Scharr Horizontal Filter";

private static final double[][] FILTER_VERTICAL = {{1, 0, -1}, {1, 0, -1}, {1, 0, -1}};

private static final double[][] FILTER_HORIZONTAL = {{1, 1, 1}, {0, 0, 0}, {-1, -1, -1}};

private static final double[][] FILTER_SOBEL_V = {{1, 0, -1}, {2, 0, -2}, {1, 0, -1}};

private static final double[][] FILTER_SOBEL_H = {{1, 2, 1}, {0, 0, 0}, {-1, -2, -1}};

private static final double[][] FILTER_SCHARR_V = {{3, 0, -3}, {10, 0, -10}, {3, 0, -3}};

private static final double[][] FILTER_SCHARR_H = {{3, 10, 3}, {0, 0, 0}, {-3, -10, -3}};

Let's define our main method, detectEdges():

private final HashMap<String, double[][]> filterMap;

public EdgeDetection() {

filterMap = buildFilterMap();

}

public File detectEdges(BufferedImage bufferedImage, String selectedFilter) throws IOException {

double[][][] image = transformImageToArray(bufferedImage);

double[][] filter = filterMap.get(selectedFilter);

double[][] convolvedPixels = applyConvolution(bufferedImage.getWidth(),

bufferedImage.getHeight(), image, filter);

return createImageFromConvolutionMatrix(bufferedImage, convolvedPixels);

}

detectEdges is exposed to the graphical user interface, in order to detect edges, and it takes two inputs: the colored image, bufferedImage, and the filter selected by the user, selectedFilter. It transforms this into a three-dimensional matrix using the transformImageToArray() function. We transform it into a three-dimensional matrix because we have an RGB-colored image.

For each of the colors—red, green, and blue—we build a two-dimensional matrix:

private double[][][] transformImageToArray(BufferedImage bufferedImage) {

int width = bufferedImage.getWidth();

int height = bufferedImage.getHeight();

double[][][] image = new double[3][height][width];

for (int i = 0; i < height; i++) {

for (int j = 0; j < width; j++) {

Color color = new Color(bufferedImage.getRGB(j, i));

image[0][i][j] = color.getRed();

image[1][i][j] = color.getGreen();

image[2][i][j] = color.getBlue();

}

}

return image;

}

Sometimes, the third dimension is called a soul of the channel, or the channels. In this case, we have three channels, but with convolution, we'll see that it's not that uncommon to see quite high numbers of channels.

We're ready to apply the convolution:

private double[][] applyConvolution(int width, int height, double[][][] image, double[][] filter) {

Convolution convolution = new Convolution();

double[][] redConv = convolution.convolutionType2(image[0], height, width, filter, 3, 3, 1);

double[][] greenConv = convolution.convolutionType2(image[1], height, width, filter, 3, 3, 1);

double[][] blueConv = convolution.convolutionType2(image[2], height, width, filter, 3, 3, 1);

double[][] finalConv = new double[redConv.length][redConv[0].length];

for (int i = 0; i < redConv.length; i++) {

for (int j = 0; j < redConv[i].length; j++) {

finalConv[i][j] = redConv[i][j] + greenConv[i][j] + blueConv[i][j];

}

}

return finalConv;

}

Notice that we're applying a convolution separately for each of the basic colors:

- With convolution.convolutionType2(image[0], height, width, filter, 3, 3, 1);, we apply the two-dimensional matrix of red

- With convolution.convolutionType2(image[1], height, width, filter, 3, 3, 1);, we apply the two-dimensional matrix of green

- With convolution.convolutionType2(image[2], height, width, filter, 3, 3, 1);, we apply the two-dimensional matrix of blue

Then, with double[][], we get back three two-dimensional matrices of the three colors, which means they are convolved. The final convolved matrix, double[][] finalConv, will be the addition of redConv[i][j] + greenConv[i][j] + blueConv[i][j];. We'll go into more detail when we build the application, but for now, the reason why we add this together is because we aren't interested in a color any more, or not in the original form at least, but we are interested in the edge. So, as we will see, in the output image, the high-level features such as the edge, will be black and white because we are adding the three color convolutions together.

Now we have double[][] convolvedPixels, the two-dimensional convolved pixels defined in detectEdges(), and we need to show it in createImageFromConvolutionMatrix():

private File createImageFromConvolutionMatrix(BufferedImage originalImage, double[][] imageRGB) throws IOException {

BufferedImage writeBackImage = new BufferedImage(originalImage.getWidth(), originalImage.getHeight(), BufferedImage.TYPE_INT_RGB);

for (int i = 0; i < imageRGB.length; i++) {

for (int j = 0; j < imageRGB[i].length; j++) {

Color color = new Color(fixOutOfRangeRGBValues(imageRGB[i][j]),

fixOutOfRangeRGBValues(imageRGB[i][j]),

fixOutOfRangeRGBValues(imageRGB[i][j]));

writeBackImage.setRGB(j, i, color.getRGB());

}

}

File outputFile = new File("EdgeDetection/edgesTmp.png");

ImageIO.write(writeBackImage, "png", outputFile);

return outputFile;

}

First, we need to transform these pixels into an image. We do that using fixOutOfRangeRGBValues(imageRGB[i][j]), fixOutOfRangeRGBValues(imageRGB[i][j]));.

The only thing we want to see right now is the method, fixOutOfRangeRGBValues:

private int fixOutOfRangeRGBValues(double value) {

if (value < 0.0) {

value = -value;

}

if (value > 255) {

return 255;

} else {

return (int) value;

}

}

This takes the absolute value of the pixel, because, as we saw, sometimes we have negative values when the difference isn't from black to white, but actually from white to black. For us, it's not important since we want to detect only the edges, so we take the absolute value, with values that are greater than 255, as just 255 as the maximum, since Java and other similar languages, such as C#, can't handle more than 255 in the RGB format. We simply write it as edge, .png file EdgeDetection/edgesTmp.png.

Now, let's see this application with a number of samples.

Let's try a horizontal filter:

This edge is quite narrow this image has sufficient pixels:

Let's try with the Vertical Filter, which gives us that looks like an edge:

Let's try a Vertical Filter on a more complex image. As you can see in the following screenshot, all the vertical lines are detected:

Now, let's see the same image with a Horizontal Filter:

The horizontal filter didn't detect any of the vertical edges, but it actually detected the horizontal ones.

Let's see what the Sobel Horizontal Filter does:

It simply added a bit more light, and this is because adding more weight means you make these edges a bit wider.

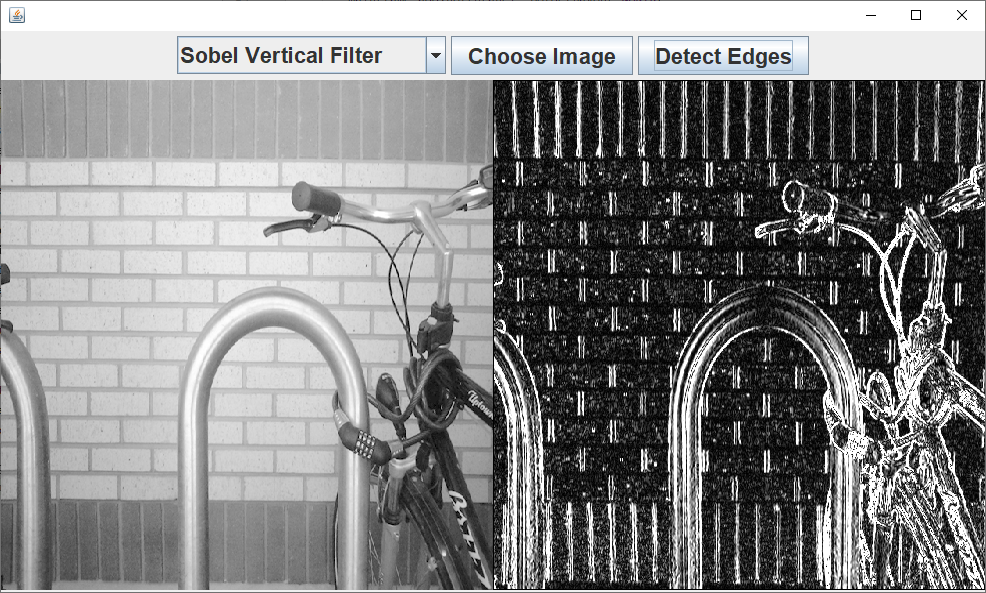

Let's now look at the Sobel Vertical Filter:

Again, this should be brighter.

And here's the Scharr Vertical Filter:

Not surprisingly, the Scharr Vertical Filter adds more weight, so we see more bright edges. The edges are wider and are more sensitive to the color changes from one side to the other—the horizontal filter wouldn't be any different.

In our color image, of a butterfly, the horizontal filter will be something like this:

Let's see the Sobel Horizontal Filter:

It's a bit brighter.

And let's see what the equivalent Scharr Horizontal Filter does:

It's also quite a bit brighter.

Let's also try the Scharr Vertical Filter:

Feel free to try it on your own images, because it won't be strange if you find a filter that actually performs better—sometimes, the results of the filters depend on the images.

The question now is how to find the best filter for our neural network. Is it the Sobel one, or maybe the Scharr, which is sensitive to the changes, or maybe a very simple filter, such as the vertical or horizontal one?

The answer, of course, isn't straightforward, and, as we mentioned, it depends partly on the images, their color, and low levels. So why don't we just let the neural network choose the filter? Aren't the neural networks the best at predicting things? The neural network will have to learn which filter is the best for the problem it's trying to predict.

Basically, the neural network will learn the classical ways we saw in the hidden layers of the neurons—in the dense layers. This will be exactly the same, just the operation is not the simple multiplication operation; it will be convolution multiplication. But these are just normal weights that the neural network has to learn:

Instead of giving the values, allow the neural network to work to find these weights.

This is a fundamental concept that enables deep neural networks to detect more specialized features, such as edge detection, and even more high-level features, such as eyes, the wheels of cars, and faces. And we'll see that the deeper you go with convolution layers, the more high-level features you detect.