Inference in the linear regression context aims to draw conclusions about the true relationship in the population from the sample data. This includes hypothesis tests about the significance of the overall relationship or the values of particular coefficients, as well as confidence interval estimates.

The key ingredient for statistical inference is a test statistic with a known distribution. Assuming the null hypothesis is true, we can use this distribution to compute the probability of observing a value of the statistic at least as extreme as the one in the sample, familiar as the p-value. If the p-value drops below a significance threshold (typically five percent), then we reject the hypothesis because it makes the actual sample value very unlikely. At the same time, we accept that the significance threshold is the probability of being wrong when we reject what is, in fact, a correct hypothesis.
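As a minimal sketch of this decision rule, assume the test statistic follows a standard normal distribution under the null hypothesis (the large-sample case; with small samples under normality the t distribution would take its place). The function names `two_sided_p_value` and `reject_null` are illustrative, not taken from any library.

```python
from statistics import NormalDist


def two_sided_p_value(z: float) -> float:
    """Probability under a standard normal null of a statistic at least as extreme as |z|."""
    std_normal = NormalDist(mu=0.0, sigma=1.0)
    return 2.0 * (1.0 - std_normal.cdf(abs(z)))


def reject_null(z: float, alpha: float = 0.05) -> bool:
    """Reject the null hypothesis when the p-value falls below the significance threshold."""
    return two_sided_p_value(z) < alpha


if __name__ == "__main__":
    for z in (0.5, 1.96, 2.5):
        print(f"z={z:4.2f}  p={two_sided_p_value(z):.4f}  reject={reject_null(z)}")
```

A statistic of 1.96 sits right at the five percent boundary, which is why that number reappears in the confidence-interval rule of thumb.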

In addition to the five GMT assumptions, the classical linear model assumes normality: the population error is normally distributed and independent of the input variables. This assumption implies that the output variable is normally distributed, conditional on the input variables. This strong assumption permits the derivation of the exact distribution of the coefficients, which in turn implies the exact distributions of the test statistics required for exact hypothesis tests in small samples. This assumption often fails (asset returns, for instance, are not normally distributed), but, fortunately, the methods used under normality are also approximately valid in large samples.
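A small Monte Carlo sketch can make the large-sample claim concrete. It assumes a simple regression with one standard-normal input and centered exponential errors, a skewed, clearly non-normal choice made purely for illustration, and checks how often the normal-based 95% interval $\hat{\beta}_1 \pm 1.96 \cdot \operatorname{se}(\hat{\beta}_1)$ contains the true slope. The sample size, true coefficients, and seed are arbitrary assumptions.

```python
import math
import random


def slope_interval_covers(n: int, beta0: float, beta1: float, rng: random.Random) -> bool:
    """Simulate one sample and check whether b1 +/- 1.96 * se(b1) contains the true slope."""
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Centered exponential errors: mean 0, variance 1, strongly right-skewed (not normal),
    # drawn independently of the input.
    errors = [rng.expovariate(1.0) - 1.0 for _ in range(n)]
    ys = [beta0 + beta1 * x + e for x, e in zip(xs, errors)]

    # Closed-form OLS for a single input (p = 1).
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    b1 = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    b0 = y_bar - b1 * x_bar

    # Unbiased error-variance estimate with N - p - 1 = n - 2 degrees of freedom.
    rss = sum((y - b0 - b1 * x) ** 2 for x, y in zip(xs, ys))
    se_b1 = math.sqrt(rss / (n - 2) / sxx)
    return abs(b1 - beta1) <= 1.96 * se_b1


def coverage(n: int = 200, reps: int = 2000, seed: int = 42) -> float:
    """Fraction of simulated samples whose normal-based 95% interval covers the true slope."""
    rng = random.Random(seed)
    hits = sum(slope_interval_covers(n, 1.0, 0.5, rng) for _ in range(reps))
    return hits / reps


if __name__ == "__main__":
    print(f"empirical coverage: {coverage():.3f}")
```

Even though the errors are far from normal, the empirical coverage should sit close to the nominal 95% at this sample size; shrinking `n` is a quick way to see the approximation degrade.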

We have the following distributional characteristics and test statistics, approximately under GMT assumptions 1–5, and exactly when normality holds:

- The parameter estimates follow a multivariate normal distribution:

.

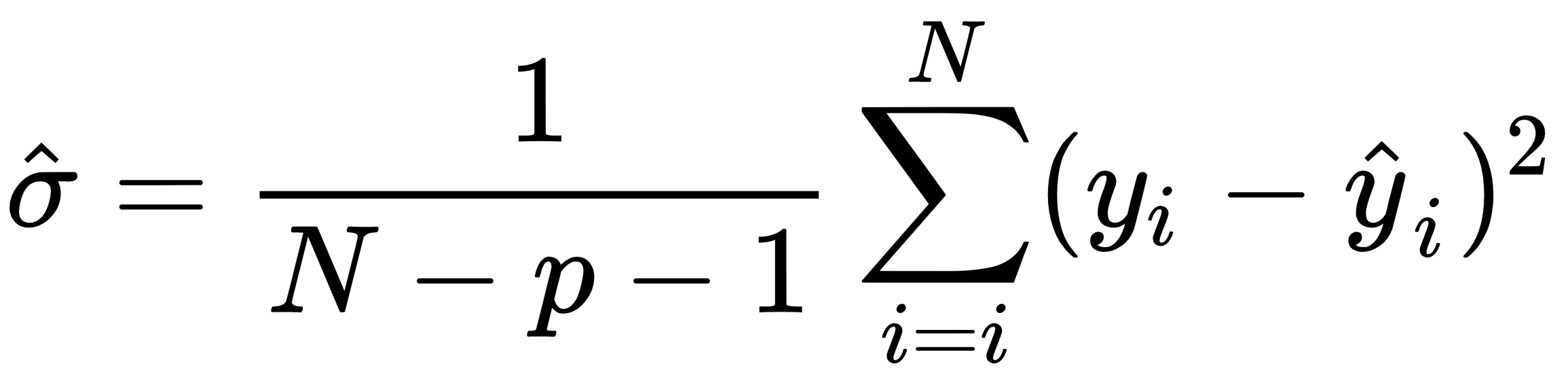

- Under GMT 1–5, the parameter estimates are already unbiased, and we can get an unbiased estimate of $\sigma^2$, the constant error variance, using

  $$\hat{\sigma}^2 = \frac{1}{N-p-1}\sum_{i=1}^{N}\left(y_i - \hat{y}_i\right)^2$$

. - The t statistic for a hypothesis tests about an individual coefficient

is

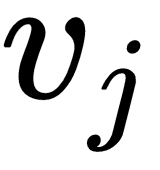

is  and follows a t distribution with N-p-1 degrees of freedom where

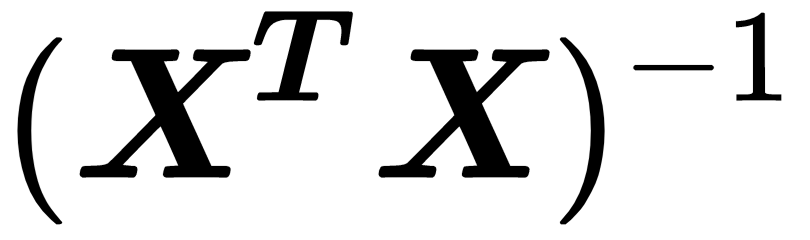

and follows a t distribution with N-p-1 degrees of freedom where  is the j's element of the diagonal of

is the j's element of the diagonal of  .

- The t distribution converges to the normal distribution as the degrees of freedom grow, and since the 97.5% quantile of the standard normal distribution is approximately 1.96, a useful rule of thumb for a 95% confidence interval around a parameter estimate is

  $$\hat{\beta}_j \pm 1.96 \cdot \hat{\sigma}\sqrt{v_j}$$

  An interval that includes zero implies that we can't reject the null hypothesis that the true parameter is zero, that is, that the corresponding variable is irrelevant for the model.

- The F statistic allows for tests of restrictions on several parameters, including whether the entire regression is significant. It measures the change (reduction) in the RSS that results from adding variables to the model. With $q$ restrictions, and $\text{RSS}_r$ and $\text{RSS}_{ur}$ denoting the RSS of the restricted and unrestricted models,

  $$F = \frac{(\text{RSS}_r - \text{RSS}_{ur})/q}{\text{RSS}_{ur}/(N-p-1)}$$

- Finally, the Lagrange Multiplier (LM) test is an alternative to the F test for testing multiple restrictions.
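To make these formulas concrete, here is a from-scratch sketch in plain Python (no NumPy), assuming a small dense design matrix and the classical homoskedastic error model described above. The helper names `invert` and `ols_inference` are hypothetical. The sketch computes $\hat{\beta}$, $\hat{\sigma}^2$, the $v_j$, the t statistics, and the 1.96 rule-of-thumb intervals. For the test that all slope coefficients are zero, it also computes the F statistic and the $N \cdot R^2$ form of the LM statistic, which for this particular test reduces to $N$ times the model's own $R^2$.

```python
import math


def invert(matrix):
    """Invert a small square matrix with Gauss-Jordan elimination and partial pivoting."""
    n = len(matrix)
    # Augment [A | I] and reduce the left block to the identity.
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("X'X is singular; check for perfectly collinear inputs")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def ols_inference(features, y, z_crit=1.96):
    """OLS with classical standard errors, t statistics, CIs, and overall F and LM statistics."""
    n, p = len(y), len(features[0])
    X = [[1.0] + [float(v) for v in row] for row in features]  # prepend the intercept column
    k = p + 1
    xtx = [[sum(X[i][a] * X[i][b] for i in range(n)) for b in range(k)] for a in range(k)]
    xty = [sum(X[i][a] * y[i] for i in range(n)) for a in range(k)]
    xtx_inv = invert(xtx)
    beta = [sum(xtx_inv[a][b] * xty[b] for b in range(k)) for a in range(k)]

    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))
    dof = n - p - 1
    sigma2 = rss / dof  # unbiased estimate of the error variance
    se = [math.sqrt(sigma2 * xtx_inv[j][j]) for j in range(k)]  # v_j: diagonal of (X'X)^-1
    t = [b / s for b, s in zip(beta, se)]
    ci = [(b - z_crit * s, b + z_crit * s) for b, s in zip(beta, se)]

    # Restricted model: intercept only, so its RSS equals the total sum of squares.
    y_bar = sum(y) / n
    rss_restricted = sum((yi - y_bar) ** 2 for yi in y)
    q = p  # restrictions: every slope coefficient is zero
    f_stat = ((rss_restricted - rss) / q) / (rss / dof)
    r2 = 1.0 - rss / rss_restricted
    lm_stat = n * r2  # N * R^2; compare with a chi-squared distribution with q dof
    return {"beta": beta, "se": se, "t": t, "ci": ci, "sigma2": sigma2,
            "f": f_stat, "lm": lm_stat, "dof": dof}


if __name__ == "__main__":
    x = [[1], [2], [3], [4], [5]]
    y = [2, 4, 5, 4, 5]
    res = ols_inference(x, y)
    for name, b, s, tt, (lo, hi) in zip(["const", "x1"], res["beta"], res["se"],
                                         res["t"], res["ci"]):
        print(f"{name}: beta={b:.3f} se={s:.3f} t={tt:.3f} 95% CI=({lo:.3f}, {hi:.3f})")
    print(f"F={res['f']:.3f}  LM={res['lm']:.3f}  dof={res['dof']}")
```

With a single input there is only one restriction, so the squared slope t statistic equals the F statistic. With only five observations the 1.96 rule understates the interval width; passing `z_crit` equal to the 97.5% quantile of the t distribution with 3 degrees of freedom (about 3.18) gives the exact small-sample interval under normality.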