The Shark-ML library implements kNN classification in the NearestNeighborModel class. An object of this class can be initialized with different nearest neighbor search algorithms. The two main types are brute-force search and search based on space-partitioning trees. In this sample, we use the TreeNearestNeighbors algorithm because it performs better on medium-sized datasets. The following code block shows how to use the kNN algorithm with the Shark-ML library:

void KNNClassification(const ClassificationDataset& train,
                       const ClassificationDataset& test,
                       unsigned int num_classes) {
  // build the KD-tree over the training inputs
  KDTree<RealVector> tree(train.inputs());
  // the neighbor search algorithm uses the training dataset and the tree
  TreeNearestNeighbors<RealVector, unsigned int> nn_alg(train, &tree);
  // number of neighbors that vote for each prediction
  const unsigned int k = 5;
  NearestNeighborModel<RealVector, unsigned int> knn(&nn_alg, k);

  // estimate accuracy
  ZeroOneLoss<unsigned int> loss;
  Data<unsigned int> predictions = knn(test.inputs());
  double accuracy = 1. - loss.eval(test.labels(), predictions);

  // process results
  for (std::size_t i = 0; i != test.numberOfElements(); i++) {
    // predicted class label for the i-th test sample
    auto cluster_idx = predictions.element(i);
    auto element = test.inputs().element(i);
    ...
  }
}

The first step was to create an object of the KDTree type, which defines the KD-tree space partitioning of our training samples. Then, we initialized an object of the TreeNearestNeighbors class, which takes the training dataset and a pointer to the previously created tree. We also set the k parameter of the kNN algorithm to 5 and initialized an object of the NearestNeighborModel class with the algorithm instance and the k parameter.
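To make the roles of the k parameter and the neighbor search more concrete, the following is a minimal brute-force sketch in plain C++ that does not use Shark-ML at all. The names Point, SquaredDistance, and KnnPredict are hypothetical, and resolving ties in favor of the smallest label is an assumption of this sketch, not documented Shark-ML behavior. An exact tree-based search is meant to return the same neighbors without scanning every training sample.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <map>
#include <utility>
#include <vector>

// Hypothetical sample type for this sketch (not a Shark-ML type).
using Point = std::vector<double>;

// Squared Euclidean distance; enough for ranking neighbors by closeness.
double SquaredDistance(const Point& a, const Point& b) {
  assert(a.size() == b.size());
  double sum = 0.0;
  for (std::size_t i = 0; i < a.size(); ++i) {
    const double d = a[i] - b[i];
    sum += d * d;
  }
  return sum;
}

// Hypothetical helper: brute-force kNN classification by majority vote.
// Assumption of this sketch: ties go to the smallest label.
unsigned int KnnPredict(const std::vector<Point>& train_inputs,
                        const std::vector<unsigned int>& train_labels,
                        const Point& query, std::size_t k) {
  assert(!train_inputs.empty());
  assert(train_inputs.size() == train_labels.size());
  assert(k > 0);
  k = std::min(k, train_inputs.size());

  // Pair the distance from the query to every training sample with its label.
  std::vector<std::pair<double, unsigned int>> dist_label;
  dist_label.reserve(train_inputs.size());
  for (std::size_t i = 0; i < train_inputs.size(); ++i) {
    dist_label.emplace_back(SquaredDistance(train_inputs[i], query),
                            train_labels[i]);
  }

  // Move the k closest samples to the front of the vector.
  std::partial_sort(dist_label.begin(),
                    dist_label.begin() + static_cast<std::ptrdiff_t>(k),
                    dist_label.end());

  // Count the votes of the k nearest neighbors.
  std::map<unsigned int, std::size_t> votes;
  for (std::size_t i = 0; i < k; ++i) {
    ++votes[dist_label[i].second];
  }

  // Pick the label with the most votes (labels are visited in ascending order).
  unsigned int best_label = votes.begin()->first;
  std::size_t best_count = 0;
  for (const auto& kv : votes) {
    if (kv.second > best_count) {
      best_label = kv.first;
      best_count = kv.second;
    }
  }
  return best_label;
}
```

Calling KnnPredict once per test sample costs time proportional to the size of the training set, which is the cost that a space-partitioning tree is intended to reduce.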

This model doesn't have a separate training method because the kNN algorithm keeps all the training data and uses it directly at evaluation time. Building the KD-tree can, therefore, be interpreted as the training step in this case, because the tree only indexes the training data and doesn't change it. So, after we initialized the object that implements the kNN algorithm, we can use it as a functor to classify the set of test samples. This API technique is the same for all classification models in the Shark-ML library. To estimate the accuracy, we also used an object of the ZeroOneLoss type.
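The accuracy computation can be sketched without Shark-ML as follows. This is a minimal illustration that assumes the zero-one loss is reported as the fraction of mismatched labels, which is what the expression 1. - loss.eval(...) in the listing suggests. The ZeroOneLossMean and Accuracy functions are hypothetical names introduced only for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical helper: mean zero-one loss, that is, the fraction of
// predictions that differ from the true labels.
double ZeroOneLossMean(const std::vector<unsigned int>& labels,
                       const std::vector<unsigned int>& predictions) {
  assert(labels.size() == predictions.size());
  assert(!labels.empty());
  std::size_t errors = 0;
  for (std::size_t i = 0; i < labels.size(); ++i) {
    if (labels[i] != predictions[i]) {
      ++errors;  // each misclassified sample costs 1, each correct one 0
    }
  }
  return static_cast<double>(errors) / static_cast<double>(labels.size());
}

// Accuracy is the complement of the mean zero-one loss.
double Accuracy(const std::vector<unsigned int>& labels,
                const std::vector<unsigned int>& predictions) {
  return 1.0 - ZeroOneLossMean(labels, predictions);
}
```

For example, with four test samples and one misclassification, the loss in this sketch is 0.25 and the accuracy is 0.75.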

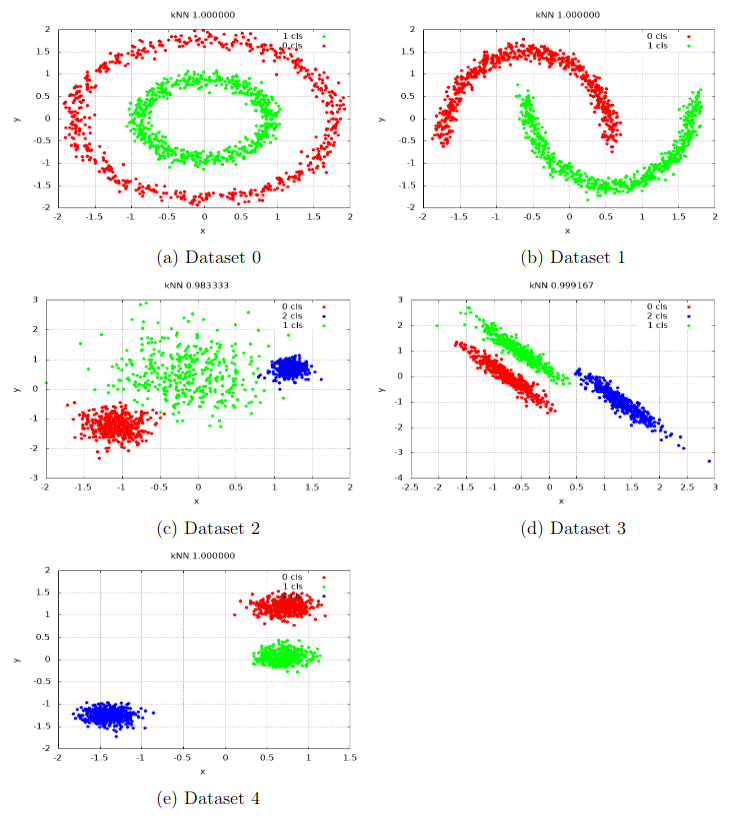

The following screenshot shows the results of applying the Shark-ML implementation of the kNN algorithm to our datasets:

You can see that the Shark-ML implementation of the kNN algorithm also classified all of the datasets correctly, without a significant number of errors.