Chapter 3

Voice in the User Interface

Andrew Breen, Hung H. Bui, Richard Crouch, Kevin Farrell, Friedrich Faubel, Roberto Gemello, William F. Ganong III, Tim Haulick, Ronald M. Kaplan, Charles L. Ortiz, Peter F. Patel-Schneider, Holger Quast, Adwait Ratnaparkhi, Vlad Sejnoha, Jiaying Shen, Peter Stubley and Paul van Mulbregt

Nuance Communications, Inc.

3.1 Introduction

Voice recognition and synthesis, in conjunction with natural language understanding, are now widely viewed as essential aspects of modern mobile user interfaces (UIs). In recent years, these technologies have evolved from optional ‘add-ons’, which facilitated text entry and supported limited command and control, to the defining aspects of a wide range of mainstream mobile consumer devices, for example in the form of voice-driven smartphone virtual assistants. Some commentators have even likened the recent proliferation of voice recognition and natural language understanding in the UI as the “third revolution” in user interfaces, following the introduction of the graphical UI controlled by a mouse, and the touch screen, as the first and second respectively.

The newfound prominence of these technologies is attributable to two primary factors: their rapidly improving performance, and their ability to overcome the inherent structural limitations of the prevalent ‘shrunken desktop’ mobile UI by accurately deducing user intent from spoken input.

The explosive growth in the use of mobile devices of every sort has been accompanied by an equally precipitous increase in ‘content’, functionality, services, and applications available to the mobile user. This wealth of information is becoming increasingly difficult to organize, find, and manipulate within the visual mobile desktop, with its hierarchies of folders, dozens if not hundreds of application icons, application screens, and menus.

Often, performing a single action with a touchscreen device requires multiple steps. For example, the simple act of transferring funds between a savings and checking accounts using a typical mobile banking application can require the user to traverse a dozen application screens.

The usability problem is further exacerbated by the fact that there exists great variability in the particular UIs of different devices. There are now many mobile device “form factors”, from tablets with large screens and virtual keyboards to in-car interfaces intended for eyes and hands-busy operation, television sets which may have neither a keyboard nor a convenient pointing device, as well as “wearable” devices (e.g., smart eyeglasses and watches). Users are increasingly attempting to interact with similar services – making use of search, email, social media, maps and navigation, playing music and video – through these very dissimilar interfaces.

In this context, voice recognition (VR) and natural language understanding (NLU) represent a powerful and natural control mechanism which is able to cut through the layers of the visual hierarchies, intermediate application screens, or Web pages. Natural language utterances encode a lot of information compactly. Saying, “Send a text to Ron, I'm running ten minutes late” implicitly specifies which application should be launched, and who the message is to be sent to, as well as the message to send, obviating the need to provide all the information explicitly and in separate steps. Similarly, instructing a TV to “Play the Sopranos episode saved from last night” is preferable to traversing the deep menu structure prevalent in conventional interfaces. These capabilities allow the creation of a new UI: a virtual assistant (VA), which interacts with a user in a conversational manner and enables a wide range of functionalities.

In the example above, the user does not need first to locate the email application icon in order to begin the interaction. Using voice and natural language thus makes it possible to find and manipulate resources – whether or not they are visible on the device screen, and whether they are resident on the device or in the Cloud – effectively expanding the boundary of the traditional interface by transparently incorporating other services.

By understanding the user's intent, preferences, and interaction history, an interface incorporating voice and natural language can resolve search queries by directly navigating to Web destinations deemed useful by the user, bypassing intermediate search engine results pages. For example, a particular user's product query might result in a direct display of the relevant page of her or his preferred online shopping site.

Alternately, such a system may be able to answer some queries directly by extracting the desired information from either structured or unstructured data sources, constructing an answer by applying natural language generation (NLG), and responding via speech synthesis.

Finally, actions which are difficult to specify in point-and-click interfaces become readily expressible in a voice interface, such as, for example, setting up a notification which is conditioned on some other event: “Tell me when I'm near a coffee shop.”

There are also other means of reducing the number of steps required to fulfill a user's intent. It is possible for users to speak their request naturally to a device without even picking it up or turning it on. In the so-called “seamless wake-up” mode, a device listens continuously for significant events, using energy-efficient algorithms residing on digital signal processors (DSP). When an interesting input is detected, the device activates additional processing modules in order to confirm that the event was a valid command spoken by the device's owner (using biometrics to confirm his or her identity), and it then takes the desired action.

Using natural language in this manner presupposes voice recognition which is accurate for a wide population of users and robust in noisy environments. Voice recognition performance has improved to a remarkable degree over the past several years, thanks to: an ever more powerful computational foundation (including chip architectures specialized for voice recognition); fast and increasingly ubiquitous connectivity, which brings access to Cloud-based computing to even the smallest mobile platforms; the development of novel algorithms and modeling techniques (including a recent resurgence of neural-network-based models); and the utilization of massive data sets to train powerful statistical models.

Voice recognition also benefits from increasingly sophisticated signal acquisition techniques, such as the use of steerable multi-microphone beamforming and noise cancellation algorithms to achieve high accuracy in noisy environments. Such processing is especially valuable in car interiors and living rooms, which present the special challenges of high ambient noise, multiple talkers and, frequently, background entertainment soundtracks.

The recent rate of progress on extracting meaning from natural utterances has been equally impressive. The most successful approaches blend three complementary approaches:

- machine learning, which discovers patterns from data;

- explicit linguistic “structural” models; and

- explicit forms of knowledge representation (“ontologies”) which encode known relationships and entities a priori.

As is the case for voice recognition, these algorithms are adaptive, and they are able to learn from each interaction.

Terse utterances can on their own be highly ambiguous, but can nonetheless convey a lot of information because human listeners apply context in resolving such inputs. Similarly, extracting the correct meaning algorithmically requires the application of a world model and a representation of the interaction context and history, as well as potentially other forms of information provided by other sensors and metadata. In cases where such information is insufficient to disambiguate the input, voice and natural language interfaces may engage in a dialog with the user, eliciting clarifying information.

Dialog, or conversation management, has evolved from early forms of “system initiative” which restricted users to only answering questions posed by an application (either visually, or via synthetic speech), to more flexible “mixed initiative” variants which allow users to provide relevant information proactively. The most advanced approaches apply formal reasoning – the traditional province of Artificial Intelligence (AI) – to eliminate the need to pre-define every possible interaction, and to infer goals and plans dynamically.

Whereas early AI proved to be brittle, today's state of the art systems rely on more flexible and robust approaches that do well in the face of ambiguity and produce approximate solutions where an exact answer might not be possible. The goal of such advanced systems is to successfully handle so-called “meta-tasks” – for example, requests such as “Book a table at Zingari's after my last meeting and let Tom and Brian know to meet me there”, rather than requiring users to perform a sequence of the underlying “atomic” tasks, such as checking calendars and making a reservation.

Thus, our broad view of the ‘voice interface’ is that it is, in fact, an integral part of an intelligent system which:

- interacts with users via multiple modalities;

- understands language;

- can converse and perform reasoning;

- uses context and user preferences;

- possess specialized knowledge;

- solves high-value tasks;

- is robust in realistic environments.

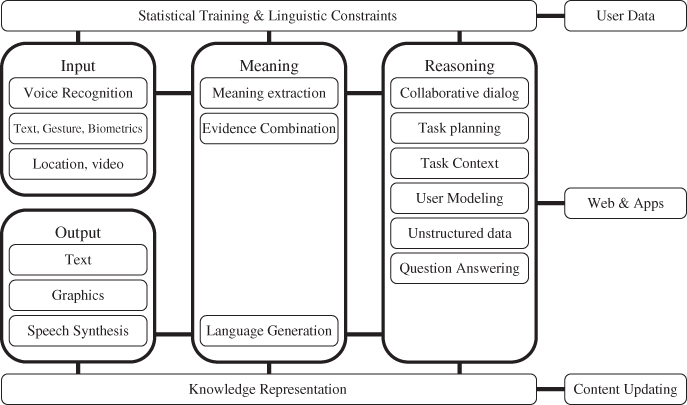

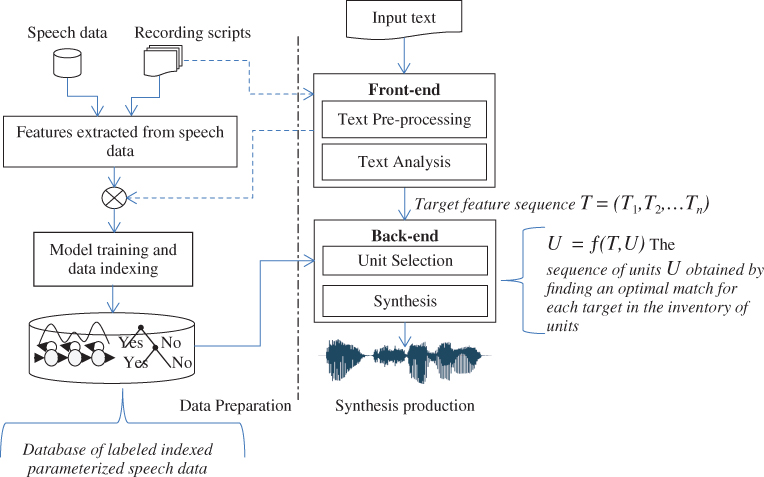

The elements of such a system, shown in Figure 3.1, are typically distributed across client devices and the cloud.

Figure 3.1 Intelligent voice interface architecture.

The reason for doing so includes optimizing computation, service availability, and latency, as well as providing users with a consistent experience across multiple clients with dissimilar characteristics and capabilities.

A distributed architecture further enables the aggregation of user data from multiple devices, which can be used to continually improve server- as well as device-specific recognition and NLU models. Furthermore, saving the interaction histories in a central repository means that users can seamlessly start an interaction on one device and complete it on another.

The following sections describe these concepts and the underlying technologies in detail.

3.2 Voice Recognition

3.2.1 Nature of Speech

Speech is uniquely human, optimized to allow people to communicate complex thoughts and feelings (apparently) effortlessly. Thus the ‘speech channel’ is heavily optimized for the task of human-to-human communication. The atomic linguistic building blocks of spoken utterances are called phonemes. These are the smallest units that, if changed, can alter the meaning of a word or an utterance. The physical manifestations of phonemes are “phones”; but a speech signal is not simply a sequence of concatenated sounds, like Morse code. We produce speech by moving our articulators (tongue, jaw, lips) in an incredibly fast and well-choreographed dance that creates a rapidly shifting resonance structure. Our vocal cords open and close between 100–300 times a second, producing a signal called the fundamental frequency or F0, which excites vocal tract resonances, resulting in a high bandwidth sound (e.g. 0–10 kHz).

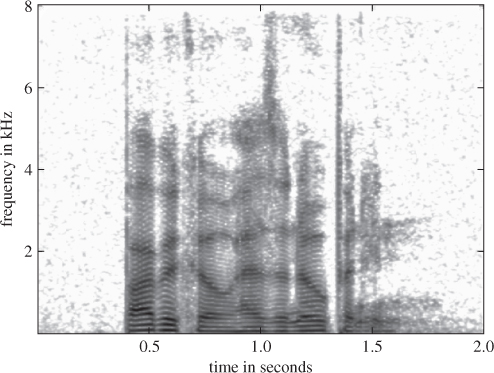

At other times, the resonances are excited by turbulent noise created at constrictions in the vocal tract, such as the sound of [s]. The acoustic expression of a phoneme is not fixed but, rather, the realization is influenced by both the preceding as well as the anticipated subsequent phoneme – a phenomenon called coarticulation. Additional variability is introduced when speakers adjust their speech to the current situation and the needs of their listeners. The resulting speech signal reflects these moving articulators and sound sources in a complex and rapidly varying signal. Figure 3.2 shows a speech spectrogram of a short phrase.

Figure 3.2 A speech spectrogram of the phrase “Barbacco has an opening”. The X-axis shows time, the Y-axis, frequency. Darkness shows the amount of energy in a frequency region.

Advances in speech recognition accuracy and performance are the result of thousands of person-years of scientific and engineering effort. Hence state-of-the-art recognizers include many carefully optimized, highly engineered components. From about 1990 to 2010, most state-of-the-art systems were fairly similar, and showed gradual, incremental improvements. In the following we will describe the fundamental components of a “canonical” voice recognition system, and briefly mention some recent developments.

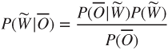

The problem solved by canonical speech recognizers is typically characterized by Bayes' rule:

That is, the goal of speech recognition is to find the highest probability sequence of words, ![]() , given the set of acoustic observations,

, given the set of acoustic observations, ![]() . Using Bayes' rule, we get:

. Using Bayes' rule, we get:

Note that ![]() does not depend on the word sequence

does not depend on the word sequence ![]() , so we want to find:

, so we want to find:

We evaluate ![]()

![]() using an acoustic model (AM), and

using an acoustic model (AM), and ![]() (

(![]() ) using a language model (LM).

) using a language model (LM).

Thus, the goal of most speech recognizers is to find the sequence of words with the highest joint probability of producing the acoustic observation, given the structure of the language.

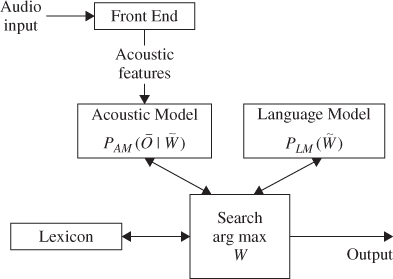

It turns out that the system diagram for a canonical speech recognition system maps well onto this equation, as shown in Figure 3.3.

Figure 3.3 Components of a canonical speech recognition system.

The evaluation of acoustic probabilities is handled by the acoustic front-end and an acoustic model; the evaluation of probabilities of word sequences is handled by a language model; and the code which finds the best scoring word sequence is called the search component. Although these modules are logically separate, their application in speech recognition is highly interdependent.

3.2.2 Acoustic Model and Front-end

- Front-end: Incoming speech is digitized and transformed to a sequence of vectors which capture the overall spectrum of the input by an “acoustic front-end”. For years, the standard front-end was based on using a vector of mel-frequency cepstral coefficients (MFCC) to represent each “frame” of speech (of about 25 msec) [1]. This representation was chosen to represent the whole spectral envelope of a frame, but to suppress harmonics of the fundamental frequency. In recent years, other representations have become popular [2] (see below).

- Acoustic model: In a canonical system, speech is modeled as a sequence of words, and words as sequences of phonemes. However, as mentioned above, the acoustic expression of a phoneme is very dependent on the sounds and words around them, as a result of coarticulation. Although the context dependency can span several phonemes or syllables, many systems approximate phonemes using “triphones”, i.e., phonemes conditioned by their left and right phonetic context. Thus, a sequence of words is represented as a sequence of triphones. There are many possible triphones (e.g.

), and many of them occur rarely. Therefore the standard technique is to cluster them, using decision trees, [3], then create models for the clusters rather than the individual triphones.

), and many of them occur rarely. Therefore the standard technique is to cluster them, using decision trees, [3], then create models for the clusters rather than the individual triphones.

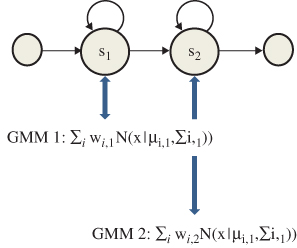

The acoustic features observed when a word contains a particular triphone are modeled as a Hidden Markov Model (HMM), [4] – see Figure 3.4. Hidden Markov models are simple finite state machines (FSMs), with states, transitions, and probabilities associated with the transitions. Also, each state is associated with a probability density function (PDF) over possible front-end vectors.

Figure 3.4 A simple HMM, consisting of states, probability distributions, and PDFs. The PDFs are Gaussian mixture models, which evaluate the probability of an input frame, given an HMM state.

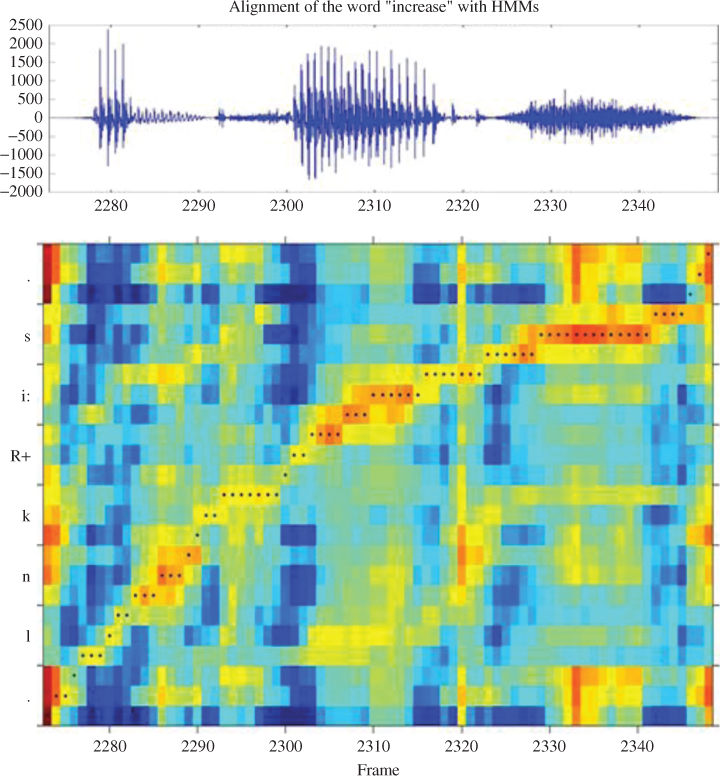

The probability density function is usually represented as a Gaussian Mixture Model (GMM). GMMs are well studied, easily trained PDFs which can approximate arbitrary PDF shapes well. A GMM is a weighted sum of Gaussians; each Gaussian can be written as:

Where ![]() is an input vector,

is an input vector, ![]() is a vector of means, and

is a vector of means, and ![]() is the covariance matrix.

is the covariance matrix. ![]() and

and ![]() are vectors of length

are vectors of length ![]() , and

, and ![]() is a square matrix of dimension

is a square matrix of dimension ![]() and each GMM is a simple weighted sum of Gaussians, i.e.,

and each GMM is a simple weighted sum of Gaussians, i.e.,

3.2.3 Aligning Speech to HMMs

In the speech stream, phonemes have different durations, so it's necessary to find an alignment between input frames and states of the HMM. That is, given input speech frames, ![]() and a sequence of HMM states

and a sequence of HMM states ![]() , an alignment

, an alignment ![]() maps the frames monotonically into the states of the HMMs. So the system needs to find the optimal (i.e. highest probability) alignment A between the frames

maps the frames monotonically into the states of the HMMs. So the system needs to find the optimal (i.e. highest probability) alignment A between the frames ![]() and the HMM states.

and the HMM states.

This is often done using a form of the Viterbi algorithm [5].

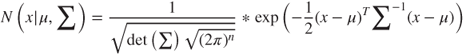

For each hypothesized word sequence, the system looks up the phonemes that make up the pronunciation of each word from the lexicon, and then looks up the triphone for each phoneme in context, using decision trees. Then, given the sequence of triphones, the system looks up the sequence of HMM states. The acoustic probability of that hypothesis is the probability of the optimal alignment of these states with the input. An example of such an alignment is shown in Figure 3.5.

Figure 3.5 Viterbi alignment of a speech signal (x axis); against a sequence of HMMs (y axis); lighter areas indicate higher probabilities as assessed by the HMM for the given frame; dotted line shows alignment.

3.2.4 Language Model

The language model computes the probability of various word sequences and helps the recognition system propose the most likely interpretation of input utterances. There are two fundamentally different types of language models used in voice recognition systems: grammar-based language models, and stochastic language models.

Grammar-based language models allow some word sequences, but not others. Often, these grammars are application-specific, and support utterances relevant to some specific task, such as making a restaurant reservation or issuing a computer command. These grammars specify the exact word sequences which the user is supposed to utter in order to instruct the system. For example, a grammar for a reservation system might recognize sequences like “find a Chinese restaurant nearby”, “reservation for two at seven”, or “show me the menu”. The same grammar would probably not recognize sequences like “pepperoni pizza”, “economic analysis of the restaurant business”, or “colorless green ideas sleep furiously”.

The set of word-sequences recognized by a grammar is described by a formal grammar, e.g. a finite-state machine, or a context-free grammar. Often, these grammars are written in formalism like Speech Recognition Grammar Specification (SRGS) (see [6]). While it is easy to construct simple example grammars, writing a grammar that covers all the ways a user might like to specify an input can be difficult. Thus, one might say “nearby Chinese restaurant”, “please find a nearby Chinese restaurant”, “I'd like to eat Chinese”, or “Where can I find some dim sum?” All these sentences mean the same thing (to a restaurant ordering app), but writing a grammar that can cover all the choices can be onerous because of users' creative word choices.

Stochastic language models (originally used for free-text dictation) estimate the probability of any word sequence (but some are much more likely than others.) Thus, “Chinese restaurant” would have a reasonable probability; “restaurant Chinese” would have a somewhat smaller probability; and “nearby restaurant Chinese a find” would have a much lower probability. Attempts to write grammar-based language models to cover all the ways a user could generate English text have not been successful; so for general dictation applications, stochastic language models have been preferred. It turns out that it is much easier to make a robust, application-specific language model by using a stochastic LM, followed by NLU processing even for quite specific applications.

The job of stochastic language modeling is to calculate an approximation to ![]() , which is, by definition:

, which is, by definition:

A surprising development in speech recognition was that a simple approximation (the trigram approximation) works very well:

The trigram approximation holds that the likelihood of the next word in a sentence depends only on the previous two words, (and an n-gram language model is the obvious generalization for longer spans). Scientifically and linguistically, this is inaccurate: many language phenomena span more than two words [7]! Yet it turns out that this approximation works very well in speech recognition [8].

3.2.5 Search: Solving Crosswords at 1000 Words a Second

The task of finding the jointly optimal word sequence to describe an acoustic observation is rather like solving a crossword, where the acoustic scores constrain the columns, and LM scores constrain the rows. However, there are too many possible word sequences to evaluate directly (a 100,000 word vocabulary generates ![]() ten-word sentences). The goal of the search component is to identify the correct hypothesis, and evaluate as few other hypotheses as possible. It does this using a number of heuristic techniques. One particularly important technique is called a beam search, which processes input frames one by one, and keeps “alive” a set of hypotheses with scores near that of the best hypothesis.

ten-word sentences). The goal of the search component is to identify the correct hypothesis, and evaluate as few other hypotheses as possible. It does this using a number of heuristic techniques. One particularly important technique is called a beam search, which processes input frames one by one, and keeps “alive” a set of hypotheses with scores near that of the best hypothesis.

How much computation is required? For a large vocabulary task, on a typical frame, the search component might have very roughly 1000 active hypotheses. Updating the scores for an active hypothesis involves extending the alignment by computing acoustic model (GMM) scores for the current HMM state of that hypothesis, and the next state, and then updating the alignment. If the hypothesis is at the end of a word, (about 20 word-end hypotheses per frame), then the system also needs to look up LM scores for potential next words (about 100 new words per word ending). Thus, we need about 2000 GMM, 1000 alignment computations, and 2000 LM lookups, per frame. At a typical frame rate of 100 Hz, we are computing about 200 k GMMs, 200 k LM lookups, and 100 k alignment updates per second.

3.2.6 Training Acoustic and Language Models

The HMMs used in acoustic models are created from large datasets by an elaborate training process. Speech data is transcribed, and provided to a training algorithm which utilizes a maximum likelihood objective function. This algorithm estimates acoustic model parameters so as to maximize the likelihood of observing the training data, given the transcriptions. The heart of this process bootstraps an initial approximate acoustic model into an improved version, by aligning the training speech against the transcriptions and re-training the HMMs. This process is repeated many times to produce Gaussian mixture models which score the training data as high likelihood.

However, the goal of speech recognition is not to represent the most likely sequence of acoustic states, but rather to give correct word sequence hypotheses higher probabilities than incorrect hypotheses. Hence, various forms of discriminative training have been developed, which adjust the acoustic models so as to decrease various measures related to the recognition error rate [9–11].

The resulting acoustic models routinely include thousands of states, hundreds of thousands of mixture model components, and millions of parameters. Canonical systems used “supervised” training, i.e. used both speech and the associated transcriptions for training. As speech data sets have grown, there has been substantial effort to find training schemes using un-transcribed or “lightly labeled” data.

Stochastic language models are trained from large text datasets containing billions of words. Large text databases are collected from the World Wide Web, from specialized text databases, and from deployed voice recognition applications. The underlying training algorithm is much simpler than that used in acoustic training (it is basically a form of counting), but finding good data, weighting data carefully, and handling unobserved word sequences require considerable engineering skill. The resulting language models often include hundreds of thousands to billions of n-grams, and have billions of parameters.

3.2.7 Adapting Acoustic and Language Models for Speaker Dependent Recognition

People speak differently. The words they pick, and the way they say words is influenced by their anatomy, accent, education, and the intentional style of speaking (e.g., dictating a formal document vs. an informal SMS message).

The resulting differences in pronunciation may confuse a speaker-independent voice recognition system, which may not have encountered training exemplars with these particular combinations of characteristics. In contrast, a speaker dependent system which models a single speaker might achieve a higher accuracy than a speaker-independent system. However, users are not inclined to record thousands of hours of speech in order to train a voice recognition system, and it is thus highly desirable to produce speaker-dependent acoustic and language models by adapting speaker-independent models utilizing limited data from a particular user.

There are many kinds of adaptation for acoustic models. Early work often used MAP (maximum ![]() posteriori) training, which modifies the means and variances of the GMMs used by the HMMs. MAP adaptation is generally “data hungry”, since it needs to utilize training examples for most of the GMMs used in the system. More data-efficient alternatives modify GMM parameters for whole classes of triphones (e.g. MLLR, maximum likelihood linear regression [12]). Transformations can be applied either to the models or to the input features. While “canonical” adaptation is “supervised” (i.e. uses speech data with transcriptions), some forms of adaptation now are unsupervised, using the input speech data and recognition hypotheses without a person checking the correctness of the transcriptions.

posteriori) training, which modifies the means and variances of the GMMs used by the HMMs. MAP adaptation is generally “data hungry”, since it needs to utilize training examples for most of the GMMs used in the system. More data-efficient alternatives modify GMM parameters for whole classes of triphones (e.g. MLLR, maximum likelihood linear regression [12]). Transformations can be applied either to the models or to the input features. While “canonical” adaptation is “supervised” (i.e. uses speech data with transcriptions), some forms of adaptation now are unsupervised, using the input speech data and recognition hypotheses without a person checking the correctness of the transcriptions.

Language models are also adapted for users or tasks. Adaptation can modify either single parameters (i.e. adjust the counts which model data for a particular n-gram, analogous to MAP acoustic adaptation), or can effectively adapt clusters of parameters (analogous to MLLR). For instance, when building a language model for a new domain, one can use interpolation weights to combine n-gram statistics from different corpora.

3.2.8 Alternatives to the “Canonical” System

In the previous paragraphs, we have outlined the basics of a voice recognition system. Many alternatives have been proposed; we mention a few prominent alternatives in Table 3.1.

Table 3.1 Alternative voice recognition options

| Technique | Explanation | Reference |

| Combining AM and LM scores | ||

| Weighting AM vs. LM | Instead of the formula in equation II, with |

[8] |

| Alternative front ends | ||

| PLP | Perceptual linear predictive analysis: find a linear predictive model for the input speech, using a perceptual weighting. | [2] |

| RASTA | “Relative spectra”: a front end designed to filter out slow variations in channel and background noise characteristics. | [13] |

| Delta and double delta | Given a frame based front-end, extend the vector to include differences and second order differences between frames around the frame in question. | [14] |

| Dimensionality reduction | Heteroscedastic linear discriminant analysis (HLDA): given MFCC or other speech features, along with delta and double delta features, or “stacked frames” (i.e. a range of frames), using a linear transformation to decrease the dimension of the front end feature. | [15] |

| Acoustic modeling | ||

| Approximations to full covariance | Using the full covariance matrixes in HMM requires substantial computation and training data. Earlier versions of HMM used simpler versions of covariance matrixes such as “single variance”, diagonal covariance. | [16] |

| Vocal Tract Length Normalization | VTLN is a speaker-dependent technique which modifies acoustic features in order to take into account differences in the length of speakers' vocal tracts. | [17] |

| Discriminative training | ||

| MMIE | Maximum Mutual Information Estimation: a training method which adjusts parameters of an acoustic model so as to maximize the probability of a correct word sequence vs. all other word sequences. | [9] |

| MCE | Minimum classification error: a training method which adjusts parameters of an acoustic model to minimize the number of words incorrectly recognized. | [10] |

| MPE | Minimum phone error: a training method which adjusts parameters of an acoustic model to minimize “phone errors”, i.e., the number of phonemes incorrectly recognized. | [11] |

| LM | ||

| Back-off | In a classic n-gram model, it is very important to predict not only the probability of observed n-grams, but also n-grams that do not appear in the training corpus. | [18] |

| Exponential models | Exponential models (also known as maximum entropy models) estimate the probability of word sequences by multiplying many different probability estimates and other functions and weigh these estimates in the log domain. They can include longer range features than n-gram models. | [19] |

| Neural Net LMs | Neural network language models extend exponential models by allowing the indicator functions to be determined automatically via non-linear transformations of input data. | [20] |

| System organization | ||

| FSTs | In order to reduce redundant computation, it is desirable to represent the phonetic decision trees which map phonemes to triphones, the phoneme sequences that make up words, and grammars as finite state machines (called weighted finite state transducers, WFSTs), then combine them into a large FSM and optimize this FSM. Compiling all the information into a more uniform data structure helps with efficiency, but can have problems with dynamic grammars or vocabulary lists. | [21] |

3.2.9 Performance

Speech recognition accuracy has steadily increased in performance during the past several decades. When early dictation systems were introduced in the late 1980s, a few early adopters eagerly embraced them and used them successfully. Many others found the error rate too high and decided that speech recognition “wasn't really ready yet”. Speech recognition performance had a bit of a breakthrough in popular consciousness in 2010, when David Pogue, the New York times technology reporter, reported error rates on general dictation of less than 1% [22]. Most speakers do not yet show nearly this level of performance, but performance has steadily increased each year through a combination of better algorithms, more computation, and utilization of ever-larger training corpora. In fact, on one specialized task, recognizing overlapping speech from multiple speakers, a voice recognition system was able to perform better than human listeners [23].

Over the past decade, the authors' experience has been that the average word error rate on a large vocabulary dictation task has decreased by approximately18% per year. This has meant that each year, the proportion of the user population that experiences acceptable performance without any system training has increased steadily. This progress has allowed us to take on challenging applications such as voice search, but also more challenging environments, such as in-car voice control. Finally, improving accuracy has meant that voice recognition has now become a viable front end for sophisticated natural language processing, giving rise to a whole new class of interfaces.

3.3 Deep Neural Networks for Voice Recognition

The pattern of steady improvements of “canonical” voice recognition systems has been disrupted in the last few years by the introduction of deep neural nets (DNNs), which are a form of artificial neural networks (ANN). ANNs are computational models, inspired by the brain, that are capable of machine learning and pattern recognition. They may be viewed as systems of interconnected “neurons” that can compute values from inputs by feeding information through the network.

Like other machine learning methods, neural networks have been used to solve a wide variety of tasks that are difficult to solve using ordinary rule-based programming, including computer vision and voice recognition.

In the field of voice recognition, ANNs were popular during late 1980s and early 1990s. These early, relatively simple ANN models did not significantly outperform the successful combination of HMMs with acoustic models based on GMMs. Researchers achieved some success using artificial neural networks with a single layer of nonlinear hidden units to predict HMM states from windows of acoustic coefficients [24].

At that time, however, neither the hardware nor the learning algorithms were adequate for training neural networks with many hidden layers on large amounts of data, and the performance benefits of using neural networks with a single hidden layer and context-independent phonemes as output were not sufficient to seriously challenge GMMs. As a result, the main practical contribution of neural networks at that time was to provide extra features in tandem with GMMs, or “bottleneck” systems that used ANN to extract additional features for GMMs. ANN were used with some success in voice recognition systems and commercial products only in a limited number of cases [25].

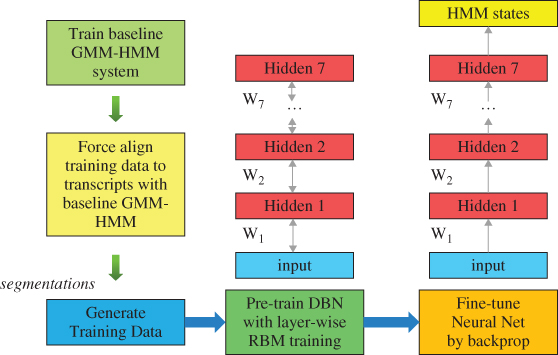

Up until a few years ago, most state of the art speech recognition systems were thus based on HMMs that used GMMs to model the HMM emission distributions. It was not until recently that new research demonstrated that hybrid acoustic models utilizing more complex DNNs, trained in a manner that was less likely to get “stuck” in a local optimum, could drastically improve performance on a small-scale phone recognition task [26]. These results were later extended to a large vocabulary voice search task [27, 28]. Since then, several groups have achieved dramatic gains due to the use of deep neural network acoustic models on large vocabulary continuous speech recognition (LVCSR) tasks [27]. Following this trend, systems using DNNs are quickly becoming the new state-of-the-art technique in voice recognition.

In practice, DNNs used for voice recognition are multi-layer perceptron neural networks with up to 5–9 layers of 1000–2000 units each. While the ANNs used in the 1990s output the context independent phonemes, DNNs use a very large number of tied state triphones (like GMMs). A comparison between the two models is shown in Figure 3.6.

Figure 3.6 A standard ANN used for ASR in the 1990s vs. a DNN used today.

DNNs are usually pre-trained with the Restricted Boltzmann Machine algorithm and fine-tuned with standard back-propagation. The segmentations are usually produced by existing GMM-HMM systems. The DNN training scheme, shown in Figure 3.7, consists of a number of distinct phases.

Figure 3.7 DNN training.

At run-time, a DNN is a standard feed-forward neural network with many layers of sigmoidal units and a top-most layer of softmax units. It can be executed efficiently both on conventional and parallel hardware.

DNN can be used for ASR in two ways:

- Using DNN to extract features for GMM (bottleneck features). This can be done by inserting a bottleneck layer in the DNN and using the activation of the units in that layer as features for GMM.

- Using DNN outputs (tied triphones probabilities) directly in the decoder (DNN-HMM hybrid model).

The first method enables quick improvements to existing GMM based ASR systems, with error rate reductions of 10–15%, but the second method yields larger improvements, usually resulting in an error reduction of 20–30% compared to state-of-the-art GMM systems.

Three main factors were responsible for the recent resurgence of neural networks as high-quality acoustic models:

- The use of deeper networks makes them more powerful, hence deep neural networks (DNN) instead of shallow neural networks.

- Initializing the weights appropriately and using much faster hardware makes it possible to train deep neural networks effectively: DNN are pre-trained with the Restricted Boltzmann Machine algorithm and fine-tuned with standard back-propagation; GPUs are used to speed-up the training.

- Using a larger number of context-dependent output units instead of context-independent phonemes. A very large output layer that accommodates the large number of HMM tied triphone states greatly improves DNN performances. Importantly, this choice keeps the decoding algorithm largely unchanged.

Other important findings which emerged in the DNN training recipes [27] include:

- DNNs work significantly better on filter-bank outputs than on MFCCs. In fact they are able to deal with correlated input features and prefer to use raw features than pre-transformed features.

- DNNs are more speaker-insensitive than GMM. In fact, speaker-dependent methods provide little improvement over speaker-independent DNNs.

- DNNs work well for noisy speech, subsuming many de-noising pre-processing methods.

- Using standard logistic neurons is reasonable but probably not optimal. Other units, like rectified linear units, seem very promising.

- The same methods can be used for applications other than acoustic modeling.

- The DNN architecture can be used for multi-task (e.g. multi-lingual) learning in several different ways and DNNs are far more effective than GMMs at leveraging data from one task to improve performance on related tasks.

3.4 Hardware Optimization

The algorithms described in the previous sections require quite substantial computational resources. Chip-makers increasingly recognize the importance of speech-based interfaces, so they are developing specialized processor architectures optimized for voice and NLU processing, as well as other input sensors.

Modern users complement their desktop computers and televisions with mobile devices, (laptops, tablets, smartphones, GPS devices), where battery life is often a limiting factor. These devices have become more complex, combining multiple functionalities into one single form factor, as vendors participate in an “arms race” to provide the next “must-have” bestselling device. Although users expect increased functionality, they have not changed their expectations for battery life: a laptop computer needs several hours of battery life; a smart phone should last a whole day without recharging. But the battery is part of the device, impacting both weight and size.

3.4.1 Lower Power Wake-up Computation

This has led to a need to reduce power consumption on mobile devices. Software can disable temporarily unused capabilities (Bluetooth, Wi-Fi, Camera, GPS, microphones) and quickly re-enable them when needed. Devices may even put themselves into various low-power modes, with the system able to respond to fewer and fewer actions. Think of Energy Star compliant TVs and other devices, but with more than just three (On-Off-Standby) states. How, then, does the system “wake up”? A physical action by the user, such as pressing a power key on the device, is the most common means today.

However, devices today have a variety of sensors that can also be used for this purpose. An infrared sensor can detect a signal from a remote control. A light sensor can trigger when the device is pulled out of a pocket. A motion sensor can detect movement. A camera can detect people. A microphone voice wakeup can detect voice activity or a particular phrase.

This is accomplished through low-power, digital signal processing (DSP)-based “wake-up-word” recognition, which allows users to speak to devices without having first to turn them on, further reducing the number of steps separating user intent and desired outcome. For instance, the Intel-inspired Ultrabook is integrating these capabilities, responding to “Hello Dragon” by waking up and listening to a users' command or taking dictation.

The security aspect starts to loom large here. A TV responds to its known signals, regardless of the origin of the signal. Anyone who has the remote control is able to operate it – or, indeed, anyone with a compatible remote control. While a living room typically has at most one TV in it, a meeting room may have 20 people, all with mobile phones. For a person to attempt to wake up his or her phone and actually wake up someone else's would be most unwelcome! Hence, there needs to be an element of security through personalization. The motion sensor may only respond to certain motions. The camera sensor may only respond to certain user(s), a technology known as “Facial Recognition”, and the voice wakeup may only respond to a particular phrase as spoken by a particular user – “Voice Biometrics” technology.

3.4.2 Hardware Optimization for Specific Computations

These sensors all draw power, especially if they are always active, and when the algorithms are run on the main CPU they incur a substantial drain on the battery. Having a full audio system running, with multiple microphones, acoustic echo cancellation and beam forming, draws significant amounts of power. Manufacturers are thus developing specialized hardware for these sensor tasks to reduce this power load, or are relying on DSPs, typically running at a much lower clock speed than the main CPU, say at 10 MHz, rather than 1–2 GHz.

The probability density function (pdf) associated with a single n-dimensional Gaussian Model is shown above in equation (1.4) The overall pdf of a Gaussian Mixture Model is a weighted sum of such pdfs, and some systems may have 100,000 or more of these pdfs, potentially needing to be evaluated 100 times a second. Algorithmic optimizations (only computing “likely” pdfs) and model approximations (such as assuming the covariance matrix is diagonal) are applied to reduce the computational load. The advent of SIMD (single-instruction, multiple data) hardware was a big breakthrough, as it enabled these linear algebra computations to be done for four or eight features at a time.

The most recent advance has been the use of Graphical Processing Units (GPUs). Initially GPUs were used to accelerate 3D computer graphics (particularly for games), which make extensive use of linear algebra. GPUs help with the pdfs such as those described above, but have proved particularly effective in the computation of DNNs.

As discussed above, DNNs have multiple layers of nodes, and each layer of nodes is the output from a mostly linear process applied to the layer of nodes immediately below it. Layers with 1000 nodes are common, with 5–10 layers, hence applying the DNN effectively requires the calculation of 5–10 matrix-vector multiplies, where each matrix is on the order of ![]() , and this occurs many times per second. Training the DNN is even more computationally expensive. Recent research has shown that training on a very small amount of data could take three months, but that using a GPU cut the time to three days, a 30-fold reduction in time [28].

, and this occurs many times per second. Training the DNN is even more computationally expensive. Recent research has shown that training on a very small amount of data could take three months, but that using a GPU cut the time to three days, a 30-fold reduction in time [28].

3.5 Signal Enhancement Techniques for Robust Voice Recognition

In real-world speech recognition applications, the desired speech signal is typically mixed acoustically with many interfering signals, such as background noise, loudspeaker output, competing speech, or reverberation. This is especially true in situations when the microphone system is far from the user's lips – for instance, in vehicles or home applications. In the worst case, the interfering signals can even dominate the desired signal, severely degrading the performance of a voice recognizer. With speech becoming more and more important as an efficient and essential instrument for user-machine interaction, noise robustness in adverse environments is a key element of successful speech dialog systems.

3.5.1 Robust Voice Recognition

Noise robustness can be achieved by modifying the voice recognition process, or by using a dedicated speech enhancement front end. Today's systems typically use a combination of both.

State-of-the-art techniques in robust voice recognition include using noise robust features such as MFCCs or neural networks, and training the acoustic models with noisy speech data that is representative of the kinds of noise present in normal use of the application. However, due to the large diversity of acoustic environments, it is impossible to cover all possible noise situations during training. Several approaches have been developed for rapidly adapting the parameters of the acoustic models to the noise conditions which are momentarily present in the input signal. These techniques have been successfully applied – for instance to enable robust distant-talk in varying reverberant environments.

Speech enhancement algorithms can be roughly grouped into single- and multi-channel methods. Due to the specific statistical properties of the various noise sources and environments, there is no universal solution that works well for all signals and interferers. Depending on the application, the speech enhancement front-end therefore often combines different methods. Most common is the combination of single-channel noise suppression with multi-channel techniques such as noise cancellation and spatial filtering.

3.5.2 Single-channel Noise Suppression

Single-channel noise suppression techniques are commonly based on the principle of spectral weighting. In this method, the signal is initially decomposed into overlapping data blocks with a duration of about 20–30 milliseconds. Each of these blocks is then transformed to the frequency or subband domain using either a Short-Term Fourier Transform (STFT) or a suitable analysis filterbank. Next, the spectral components of the noisy speech signal are weighted by attenuation factors, which are calculated as a function of the estimated instantaneous signal-to-noise ratio (SNR) in the frequency band or subband. This function is chosen so that spectral components with a low SNR are attenuated, while those with a high SNR are not. The goal is to create a best estimate of the spectral coefficients of the clean speech signal. Given the enhanced spectral coefficients, a clean time domain signal can be synthesized and passed to the recognizer. Alternatively, the feature extraction can be performed directly on the enhanced spectral coefficients, which avoids the transformation back into the time-domain.

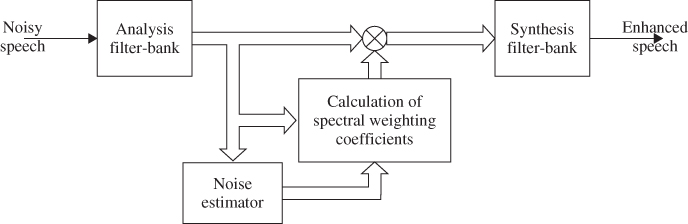

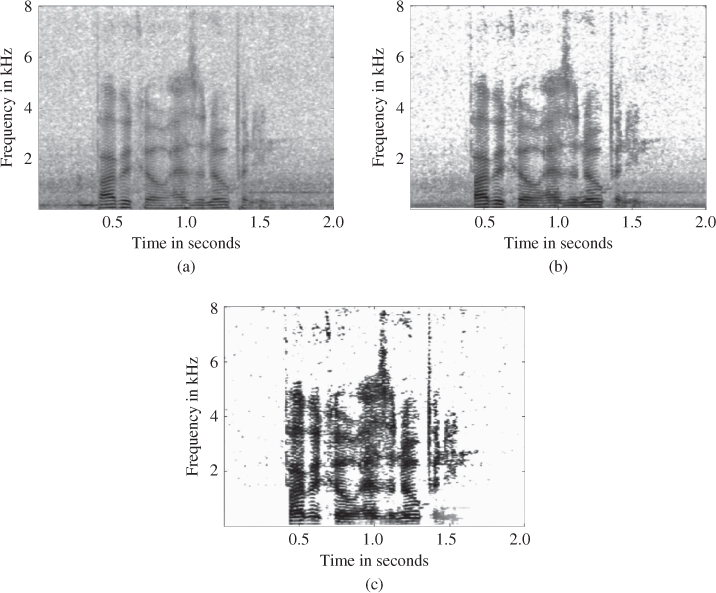

A large variety of linear and non-linear algorithms have been developed for calculating the spectral weighting function. These algorithms mainly differ in the underlying optimization criteria, as well as in the assumptions about the statistical characteristics of speech and noise. The most common examples for weighting functions are spectral subtraction, the Wiener filter, and the minimum mean-square error (MMSE) estimator [29]. The single-channel noise suppression scheme is illustrated by the generalized block diagram in figure 3.8. Figure 3.9 shows a spectrogram of the noisy phrase ‘Barbacco has an opening’ and the spectrogram of the enhanced signal after applying the depicted spectral weighting coefficients.

Figure 3.8 Block diagram of a single-channel noise suppression scheme based on spectral weighting.

Figure 3.9 Time-frequency analysis of the noisy (a) and enhanced speech signal (b); time-frequency plot of the attenuation factor applied to the noisy speech signal (c).

Single-channel noise suppression algorithms work well for stationary background noises like fan noise from an air-conditioning system, a PC fan, or driving noise in a vehicle, but they are not very suitable for non-stationary interferers like speech and music. In a single-channel system, the background noise can mostly be tracked in speech pauses only, due to the typically high frequency overlap of speech and interference in the noisy speech signal. This limits the single-channel noise reduction schemes mainly to slowly time-varying background noises which do not significantly change during speech activity.

Several optimizations have been proposed to overcome this restriction. These include model-based approaches which utilize an explicit clean speech model, or typical spatio-temporal features of speech and specific interferers in order to segregate speech from non-stationary noise. Efficient methods have been developed to reduce fan, wind-buffets in convertibles [30], and impulsive road or babble-noise.

Another drawback of single-channel noise suppression is the inherent speech distortion of the spectral weighting techniques, which significantly worsens at lower signal-to-noise ratios. Due to the SNR-dependent attenuation of the approach, more and more components of the desired speech signal are suppressed as the background noise increases. This increasing speech distortion degrades recognizer performance.

3.5.3 Multi-channel Noise Suppression

Unlike single-channel noise suppression, multi-channel approaches can provide low speech distortion and a high effectiveness against non-stationary interferers. Drawbacks are an increased computational complexity, and that additional microphones or input channels are required. Multi-channel approaches can be grouped into noise-cancellation techniques which use a separate noise reference channel, and spatial filtering techniques such as beamforming (discussed below).

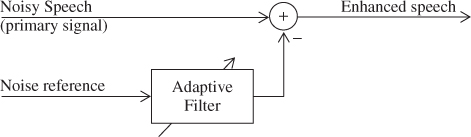

3.5.4 Noise Cancellation

Adaptive noise cancellation [31] can be applied if a correlated noise reference is available. That means that the noise signals in the primary channel (i.e. the microphone) and in the reference channel are linear transforms of a single noise source. An adaptive filter is used to identify the transfer function that maps the reference signal to the noise component in the primary signal. By filtering the reference signal with the transfer function, an estimate for the noise component in the primary channel is calculated. The noise estimate is afterwards subtracted from the primary signal to get an enhanced speech signal. The principle of adaptive noise cancellation is illustrated in figure 3.10. As signal and noise components superpose linearly at the microphone, the subtraction of signal components does not lead to any speech distortion, provided that uncorrelated noise components and crosstalk of the desired speech signal into the reference channel are sufficiently small.

Figure 3.10 Basic structure of a noise canceller.

The effectiveness of noise cancellation is, in fact, highly dependent on the availability of a suitable noise reference which is, in turn, application-specific. Noise cancellation techniques are successfully applied in mobile phones. Here, the reference microphone is typically placed as far as possible from the primary microphone – typically on the top or the rear of the phone – to reduce leakage of the speech signal into the reference channel. Noise cancellation techniques are, on the other hand, less effective in reducing background noise in vehicle applications, due to diffuse characteristics of the dominating wind and tire noise. As a result, the correlation rapidly drops if the primary and reference microphone are separated more than a few centimeters, which makes it practically impossible to avoid considerable leakage of the speech signal into the reference channel.

3.5.5 Acoustic Echo Cancellation

A classic application for noise cancellation is the removal of interfering loudspeaker signals, which is typically referred to as Acoustic Echo Cancellation (AEC) [32]. This method was originally developed to remove the echo of the far end subscriber in hands-free telephone conversations. In voice recognition, AEC is used to remove the prompt output of the speech dialog system or the stereo entertainment signal from a TV or mobile application.

Analogously to the noise canceller described above, the electric loudspeaker reference is filtered by an adaptive filter to get an estimate of the loudspeaker component in the microphone signal. The adaptive filter has to model the room transfer function, including the loudspeaker and microphone transfer functions. As the acoustic environment can change quickly – for instance due to movements of persons in the room – fast tracking capabilities of the adaptive filter are of major importance to remove loudspeaker components effectively.

Due to its robustness and simplicity, the so-called Normalized Least Mean Square (NLMS) algorithm [33] is widely used to adjust the filter coefficients of the adaptive filter. A drawback of the algorithm is that it converges slowly if the interference has a high spectral dynamic, as in the case of speech or music. For this reason, the NLMS is often implemented in the frequency or subband domain. As the spectral dynamic in the individual frequency subbands is much lower than over the complete frequency range, the tracking behavior is significantly improved. Another advantage of working in the subband domain is that AEC and spectral weighting techniques like noise reduction can be efficiently combined.

3.5.6 Beamforming

When speech is captured with an array of microphones, the resulting multi-channel signal also contains spatial information about the sound sources. This facilitates spatial filtering techniques such as beamforming, which extract the signal from a desired direction while attenuating noise and reverberation from other directions. The application of adaptive filtering techniques [34] allows adjusting the spatial characteristics of the beamformer to the actual sound-field, thus enabling effective suppression, even of moving sound sources. The directionality of such an adaptive beamformer, however, depends on the number of microphones, which are often limited in practical devices to 2–3 due to cost reasons.

To improve the directionality the adaptive beamformer can be combined with a so called spatial post-filter [35]. The spatial post-filter is based on the spectral weighting techniques also applied for noise reduction, but uses a spatial noise estimate of the adaptive beamformer. Although spatial filtering can significantly reduce interfering noise or competing speakers, it can also be harmful if the speaker is not at the specified location. This makes it imperative to have a robust speaker localization system, especially in the mobile context, where the direction of arrival changes if the user moves or tilts a device.

A simple approach is to perform the localization acoustically, by simply selecting the direction in which the sound level is strongest [36]. This works reasonably well if there is a single speaker at a relatively close distance to the device, such as when using a tablet. A more challenging scenario arises in the context of smart TVs, or smart home appliances in general, where there may be several competing speakers at a larger distance. Such conditions prevent acoustic source localization from working reliably. In this situation, it may be preferable to track the users visually with the help of a camera and to focus on the speaker that is, for instance, facing the device. An alternative is to use gestures to indicate who the device should listen to.

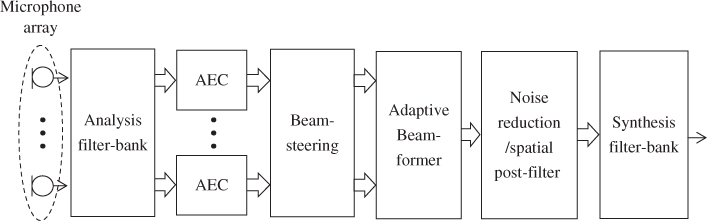

As mentioned above, the speech enhancement front-end often combines several techniques to cope effectively with complex acoustic environments. Figure 3.11 shows an example of a speech enhancement front end enabling far-talk operation of a TV. In this system, acoustic echo cancellation is applied to remove the multi-channel entertainment signal, while beamforming and noise reduction are used to suppress interfering sources such as family members and background noises.

Figure 3.11 Combined speech enhancement front-end for far-talk operation of a TV.

3.6 Voice Biometrics

3.6.1 Introduction

Many voice-driven applications on a mobile device need to verify a user's identity. This is sometimes for security purposes (e.g. allowing a user to make a financial transaction) or to make sure that a spoken command was issued by the device's owner.

Voice biometrics recognizes a person based on a sample of their voice. The primary use of voice biometrics in commercial applications is speaker verification. Here, a claimed identity is validated by comparing the characteristics of voice samples obtained during a registration phase and validation phase. Voice biometrics is also used for speaker identification where a voice sample is matched to one of a set of registered users. Finally, in cases where a recording may include voice data from multiple people, such as during a conversation between an agent and customer, “speaker diarization” extracts the voice data corresponding to each speaker. All of these technologies can play a role in human-machine interaction and, particularly, when security is a requirement.

Voice biometrics will be a key component of mobile user interfaces. Traditional security methods have involved tedious measures based on personal identification numbers, passwords, tokens, etc., which are particularly awkward when interacting with a mobile device. Voice biometrics provides a much more natural and convenient method of validating the identity of a user. This has numerous applications, including everyday activities such as accessing email and waking up a mobile device. For “seamless wakeup”, not only must the phrase be correct, but it must be spoken by the owner of the device. This can preserve battery life and prevent unauthorized device access. Other applications include transaction validation for mobile banking and purchase authorization.

Research dedicated to developing and improving technologies for performing speaker verification, identification, and diarization has been making progress for the last 50 years. Whereas early technologies focused mainly on template-based approaches, such as Dynamic Time Warping (DTW) [37], these have evolved towards statistical models such as the GMM (discussed above in Section 1.5.2 [39]). More recent speaker recognition technologies have used the GMM as an initial step towards modeling the voice of a user, but then apply further processing in the form of Nuisance Attribute Projection (NAP) [40], Joint Factor Analysis (JFA) [41], and Total Factor Analysis (TFA) [42]. The TFA approach, which yields a compact representation of a speaker's voice known as an I-vector (or Identity vector) represents the current state of the art in voice biometrics.

3.6.2 Existing Challenges to Voice Biometrics

One of the primary challenges in voice biometrics has been to minimize the error rate increase attributed to mismatch in the recording device between registration and validation. This can occur, for example, when a person registers their voice with a mobile phone, and then validates a web transaction using their personal computer. In this case, there will be an increase in the error rate due to the mismatch in the microphone and channel used for these different recordings. This specific topic has received a great deal of attention in the research community, and has been addressed successfully with the NAP, JFA, and TFA approaches. However, new scenarios are being considered for voice biometrics that will warrant further research. Another challenge is “voice aging”. This refers to the degradation in verification accuracy as the time elapse between registration and validation increases [43]. Model adaptation is one potential solution to this problem, where the model created during registration is adapted with data from validation sessions. Of course, this is applicable only if the user accesses the system on a regular basis.

Another challenge to voice biometrics technology is to maintain acceptable levels of accuracy with minimal audio data. This is a common requirement for commercial applications. In the case of “text-dependent” speaker verification – where the same phrase must be used for registration and validation – two to three seconds (or ten syllables) can often be enough for reliable accuracy. Some applications, however, such as using a wakeup word on a mobile device, require shorter utterances for validating a user.

Whereas leveraging the temporal information and using customized background modeling improve accuracy, this topic remains a challenge. Similarly, with “text-independent” speaker verification – where a user can speak any phrase during registration or validation – typically 30–60 seconds of speech is sufficient for reasonable accuracy. However, speaker verification and identification capabilities are often desired, with much shorter utterances, such as when issuing voice commands to a mobile device, having a brief conversation with a call-center agent, etc. The National Institute of Standards and Technology (NIST) has sponsored numerous speaker recognition evaluations that have included verification of short utterances [44], and this is still an active research area.

3.6.3 New Areas of Research in Voice Biometrics

Voice biometrics technologies have advanced significantly since their inception; however a number of areas require further investigation. Countermeasures for “spoofing” attacks (orchestrated with recording playback, voice splicing, voice transformation, and text-to-speech technology) provide new challenges. A number of such attacks have been recently covered in an international speech conference [45]. Ongoing work assesses the risk of these attacks and attempts to thwart them. This can be accomplished through improved liveness detection strategies, along with algorithms for detecting synthesized speech.

Voice biometrics represents an up-and-coming area for interactive voice systems. Whereas speech recognition, natural language understanding, and text-to-speech have had more deployment history, voice biometrics technology is rapidly growing in the commercial and Government sectors. Voice biometrics provides a convenient means of validating an identity or locating a user among an enrolled population, which can reduce identity theft, fraudulent account access, and security threats. The recent algorithmic advances in the voice biometrics field, as described in this section, increase the number of use-cases and will facilitate adoption of this technology.

3.7 Speech Synthesis

Many mobile applications not only recognize and act on a user's spoken input, but also present spoken information to the user, via text-to-speech synthesis (TTS). TTS has a rich history [46], and many elements have become standardized. As shown in Figure 3.12, TTS has two components, front-end (FE) and back-end (BE) processing. Front-end processing derives information from an analysis of the text. Back-end processing renders this information into audio in two stages:

- First, it searches an indexed knowledge base of pre-analyzed speech data, and finds the indexed data which most closely matches the information provided by the front-end (unit selection).

- Second, this information is used by a speech synthesizer to generate synthetic speech.

Figure 3.12 Speech synthesis architecture.

The pre-analyzed data may be stored as encoded speech or as a set of parameters used to drive a model of speech production or both.

In Figure 3.12, the front-end is subdivided into two constituent parts: text pre-processing and text analysis. Text pre-processing is needed in “real world” applications, where a TTS system is expected to interpret a wide variety of data formats and content, ranging from short, highly stylized dialogue prompts to long, structurally complex prose. Text pre-processing is application-specific, e.g. the pre-processing required to read out customer and product information extracted from a database will differ substantially from an application designed to read back news stories taken from an RSS feed. Also, a document may contain mark-up designed to aid visualization in a browser or on a page, e.g., titles, sub-headings. A pre-processor must re-interpret this information in a way which leads to rendered speech expressing the document's structure.

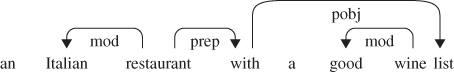

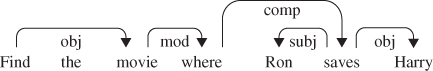

Text analysis falls into four processing activities: tokenization and normalization, syntactic analysis, prosody prediction and grapheme-to-phoneme conversion. Tokenization aids in the appropriate interpretation of orthography. For example, a telephone number is readily recognizable when written, and has a regular prosodic structure when spoken. During tokenization graphemes are grouped into tokens, where a token is defined as a sequence of characters belonging to a defined class. A digit is an example of a simple token, while a telephone number would be considered a complex token. Tokenization is particularly challenging in writing systems such as Chinese, where sentences are written as sequences of characters without white-space between words.

Text normalization is the process of converting orthography into an expanded standardized representation (e.g. $5.00 would be expanded into “five dollars”) and is a precursor to further syntactic analysis. Syntactic analysis typically includes part of speech and robust syntactic structure determination. Together, these processes aid in the selection of phonetic pronunciations and in the prediction of prosodic structure [47].

Prosody may be defined as the rhythm, stress and intonation of speech, and is fundamental to the communication of a speaker's intentions (e.g., questions, statements, imperatives) and emotional state [48]. In tone languages, there is also a relationship between word meaning and specific intonation patterns. The prosody prediction component expresses the prosodic realization of the underlying meaning and structure encoded within the text using symbolic information (e.g., stress patterns, intonation and breath groups) and sometimes parametric information (e.g., pitch, amplitude and duration trajectories). Parametric information may be quantized and used as a feature in the selection process, or directly within a parametric synthesizer, or both.

In most languages, the relationship between graphemes (i.e., letters) and the representation of sounds (the phonemes) is complex. In order to simplify the selection of the correct sounds, TTS systems first convert the grapheme sequence into a phonetic sequence which more closely represents the sounds to be spoken. TTS systems typically employ a combination of large pronunciation lexica and grapheme-to-phoneme (G2P) rules to convert the input into a sequence of phonemes. A pronunciation lexicon contains hundreds of thousands of entries (typically morphemes, but also full-form words) each consisting of phonetic representations of pronunciations of the word, but sometimes also other information such as part-of-speech. Pronunciations may be taken directly from the lexicon, or derived through a morphological parse of the word in combination with lexical lookup. No lexicon can be complete, as new words are continually being introduced into a language. G2Ps use phonological rules to generate pronunciations for out-of-vocabulary words.

The final stage in generating a phonemic sequence is post lexical processing, where common continuous speech production affects such liaison, assimilation, deletion and vowel reduction are applied to the phoneme sequence [49]. Speaker-specific transforms may also be applied to convert the canonical pronunciation stored in the lexicon or produced by the G2P rules into idiomatic pronunciations.

As previously stated, the back-end consists of two stages: unit selection and synthesis. There are two widely adopted forms of synthesis the most widely adopted of which is concatenative synthesis, whereby the selected sound fragments indexed by the units are optionally joined. Signal processing such as Pitch Synchronous Overlap and Add (PSOLA) may be used as an aid to smoothing the joins and to offer greater prosodic control at the cost of some signal degradation [47]. Parametric synthesis – for example, the widely used “HMM synthesis” approach – uses frames of spectrum and excitation parameters to drive a parametric speech synthesizer [50].

Table 3.2 highlights the differences between concatenation and parametric methods. As can be seen, concatenation offers the best fidelity at the cost of flexibility and size, while parametric synthesis offers considerable flexibility at a much smaller size but at the cost of fidelity. As a result, parametric solutions are typically deployed in embedded applications where memory is a limiting factor.

Table 3.2 Differences between concatenation and parametric methods

| Category | Concatenation synthesis | Parametric synthesis |

| Speech quality | Uneven quality, highly natural at best. Typically offers good segmental quality, but may suffer from poor prosody. | Consistent speech quality, but with a synthetic “processed” characteristic. |

| Corpus-size | Quality is critically dependent upon the size of the sound database | Works well when trained on a small amount of data |

| Signal manipulation | Minimal to none | Signal manipulation by default. Suitable for speaker and style adaptation. |

| Basic Unit topology | Waveforms | Speech parameters |

| System footprint | Simple coding of the speech inventory leads to a large system footprint | Heavy modeling of the speech signal results in a small system footprint. Systems are resilient to reduction in system footprint. |

| Generation quality | Quality is variable depending upon the length of continuous speech selected from the unit inventory. For example, limit domain systems, which tend to return long stretches of stored speech during selection, typically produce very natural synthesis. | Smooth and stable, more predictable behavior with respect to previously unseen contexts. |

| Corpus-quality | Need accurately labeled data | Tolerant towards labeling mistakes |

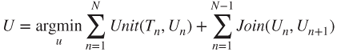

Unit selection [51, 52] attempts to find an optimal sequence of units ![]() from the pre-generated database which describes a target sequence

from the pre-generated database which describes a target sequence ![]() of features produced by the front-end for an analyzed sentence (Figure 3.12). Two heuristically derived cost functions are used to constrain the search and selection. These are unit costs (how closely unit features in the database match those of an element in the target sequence) and join costs (how well adjacent units match). Typically dynamic programming is used to construct a globally optimal sequence of units which minimizes the unit and join costs.

of features produced by the front-end for an analyzed sentence (Figure 3.12). Two heuristically derived cost functions are used to constrain the search and selection. These are unit costs (how closely unit features in the database match those of an element in the target sequence) and join costs (how well adjacent units match). Typically dynamic programming is used to construct a globally optimal sequence of units which minimizes the unit and join costs.

In HMM selection, the target sequence ![]() is used to construct an HMM from the concatenation of context clustered triphone HMMs. An optimal sequence of parameter vectors can be derived to maximize the following:

is used to construct an HMM from the concatenation of context clustered triphone HMMs. An optimal sequence of parameter vectors can be derived to maximize the following:

Where ![]() is the parameter vector sequence to be optimized,

is the parameter vector sequence to be optimized, ![]() is an HMM and

is an HMM and ![]() is the length of the sequence. In contrast to unit selection methods, which determine optimality based on local unit costs and join costs, statistical methods attempt to construct an optimal sequence which avoids abrupt step changes between states through a consideration of 2nd order features [50]. While still not widely deployed, there is also an emerging trend to hybridize these two approaches [53]. Hybrid methods use the state sequence to simultaneously generate candidate parametric and unit sequences. The decision on which method to select is made at each state, based on phonological rules for the language and an appreciation of the modeling power of the parametric solution.

is the length of the sequence. In contrast to unit selection methods, which determine optimality based on local unit costs and join costs, statistical methods attempt to construct an optimal sequence which avoids abrupt step changes between states through a consideration of 2nd order features [50]. While still not widely deployed, there is also an emerging trend to hybridize these two approaches [53]. Hybrid methods use the state sequence to simultaneously generate candidate parametric and unit sequences. The decision on which method to select is made at each state, based on phonological rules for the language and an appreciation of the modeling power of the parametric solution.

There are two fundamental challenges to generating natural synthetic speech. The first challenge is representation, which is the ability of the FE to identify and robustly extract features which closely correlate with characteristics observed in spoken language, and the companion of this, which is the ability to identify and robustly label speech data with the same features. A database of speech indexed with too few features will lead to poor unit discrimination, while an FE which can only generate a subset of the indexed features will lead to units in the database never being considered for training or selection. In other words, the expressive power of the FE must match the expressive power of the indexing.

The second challenge is sparsity, that is sufficient sound examples must exist to adequately represent the expressive power of the features produce by the FE. In concatenation synthesis, sparsity means that the system is forced to select a poorly matching sound, simply because it cannot find a good approximation. In HMM synthesis, sparsity results in poorly trained models. The audible effects of sparsity increase as the styles of speech become increasingly expressive. Sparsity can, to some extent, be mitigated through more powerful speech synthesis models, which are capable of generating synthetic sounds from high-level features. Recently, techniques such as CAT (Cluster Adaptive Training, [54]) and DNN (Deep Neural Networks [Zen et al., 2013 [55]]) are being used to make the best use of the available training data by avoiding fragmentation that compounds the effects of sparsity.

As suggested by Table 3.2, the commercial success of concatenation is due to the fact that high fidelity synthesis is possible, provided care is taken to control the recording style and to ensuring sufficient sound coverage in key application domains, when constructing the speech unit database. Surprisingly good results can be achieved with relatively simple FE analysis and simple BE synthesis. However, technologically, such approaches are likely to be an evolutionary cul-de-sac. While many of the traditional markets are well served by these systems, they are expensive and time-consuming to produce.

The growing commercial desire for highly expressive personalized agents is driving a trend towards trainable systems, both in the FE, where statistical classifiers are replacing rule-based analysis methods, and in the BE, where statistical selection and hybridized parametric systems are promising flexibility combined with fidelity [53]. The desire to synthesize complex texts such as news and Wikipedia entries more naturally is forcing developers to consider increasingly complex text analysis through the inclusion of semantic and pragmatics knowledge in the FE, and consequently, increasingly complex statistical mappings between these abstract concepts and their acoustic realization in the BE [54].

3.8 Natural Language Understanding