CHAPTER 2

Designing Effective Security Metrics

In Chapter 1 I discussed the basics of security measurement, including why some of the security metrics currently used in the industry are insufficient for helping you to understand your security activities. This chapter explores how you can choose more useful security metrics and proposes an approach adapted from empirical software engineering, the Goal-Question-Metric (GQM) method, to create useful security metrics.

Choosing Good Metrics

The security metrics literature often devotes space to defining metrics and discussing what characteristics make a metric good or bad. More often than not, books and articles about security metrics state that good metrics can be expressed only in numbers, and if a metric cannot be expressed in numbers, it is bad by definition. This implies that if you cannot measure something numerically, then you cannot measure it, analyze it, or understand it at all.

Holders of this opinion often invoke a quotation from the nineteenth-century scientist William Thomson, a.k.a. Lord Kelvin, who said that unless you can express your measurements in numbers, your knowledge is poor, unsatisfying, and unscientific. Many books and articles championing IT security metrics cite Kelvin to support their preference for quantitative measurement. My response when people quote Kelvin to me is to ask them to rephrase their support of quantitative metrics in the form of a number. I have yet to meet anyone who can provide me with quantitative evidence that shows why numbers make better metrics and provide more satisfactory knowledge than other forms of measurement. Instead, the person will tell me stories, recount anecdotes, and cite the opinions of others. At that point, depending on my mood, I will decide whether or not to hold the person to that adopted standard and make the case that they don’t know what they are talking about. If I’m irritated, I might remind them that Lord Kelvin also believed in the existence of the ether and said X-rays would prove to be a hoax, but I usually try not to be a jerk about it. As an academic, I relied on a blend of quantitative and qualitative methods in my Ph.D. research, which means I often have a different perspective on measurement and research, and on questions and answers, than physicists or engineers.

As will be sometimes painfully clear in this book, this multimethod approach to inquiry has carried over significantly into my perspective regarding IT security metrics. I believe not only that nonquantitative approaches to measurement are possible in our world, but that they are necessary and vital, because security is inherently a social process as much as a technical one. The debate between the merits of quantitative and qualitative research and, more generally, between those of the hard sciences and the social sciences, has been ongoing for decades and is well beyond the scope of this book.

I must respectfully disagree with those in the security metrics field who discount nonquantitative metrics out of hand. I find it ironic that the evidence presented against qualitative measurement is itself qualitative. It is ironic because the argument itself shows how people use empirical data: raw facts or numbers do us little good. Instead, we engage evidence so that we can interpret it, and it is the interpretation of the data rather than the data itself that provides us value.

From a security perspective, understanding how the CISO thinks about security or what the e-mail administrator believes is the best way to approach a problem is just as important as quantitatively analyzing data produced by logs or tools. Measuring and improving IT security is about asking the right questions to reduce uncertainty and improve operations, not about arbitrarily deciding which questions and answers quite literally count or do not count.

Security metrics should be about choosing the best methods to determine what you need to know about security so that you can understand and improve your operational processes, within the resource constraints you face. Measuring a complex phenomenon such as your IT security requires an equally sophisticated and complex approach. Oversimplification of both the problems and the solutions will introduce more risk rather than removing it.

In Chapter 12, I talk about the characteristics of organizations in high-risk environments and how maintaining an appreciation for complexity is a secret to their successful operations. For now, understand that an appreciation of complexity means realizing that you cannot solve or even measure everything. Your metrics should be about choosing what you will measure and what you will improve while appreciating that you may not know what you may not know. It is not bad to use a few key metrics or to decide that certain metrics are outside of the organization’s resources at the time (real qualitative measurement is often more expensive and difficult to do correctly). But if you fall into the trap of always choosing simple or easily obtained answers, this becomes a serious risk for your security.

When metrics are limited or restricted to simplistic categories such as good or bad, people may ignore some measurement methods that would be useful to them simply because some expert said that they were not valuable. Worse, if you believe that only numbers make for good metrics, you may be tempted to numerically label things that have no business being expressed quantitatively. At the end of the day, you must decide what you need to know, regardless of what is recommended by others who are not as familiar with your security environment and challenges. Measurement is always a local activity, performed within the context of individual and organizational understanding. Few arbitrary limits should be placed on how you go about achieving your understanding of security operations if those efforts are rational and methodical. A definition is always a good place to start.

Defining Metrics and Measurement

I define metric broadly to mean some standard of measurement. I particularly like this definition because it is meaningless unless it is combined with an understanding of the word measurement. Recall that metrics are a result and measurement is an activity. Measurement is defined as the act of judging or estimating the qualities of something, including both physical and nonphysical qualities, through comparison to something else. Usually the things being measured are not compared to one another directly, but to some accepted standard of measurement, which circles back around to the original definition of metric. Thus metrics are standards of measurement, and measurement is the comparison of things, usually against standards. Often these standards are expressed in numerical units that provide standard metrics for qualities such as length, weight, or quantity. But metrics don’t have to be expressed in this way.

Measurement allows us to do more than count and compute things. Remember that measurement did not originate in scientific inquiry, but rather in social relationships between individuals and groups of people. The division of hunt, harvest, or spoils based upon social status and the equivalencies necessary for trade and barter are measurement practices that significantly predate metrics being used for scientific analysis.

In addition to allowing for the rational analysis of security systems and activities, metrics can provide social and organizational benefits:

Measurement allows us to predict things. The inferential statistical analysis of security data can compare samples and populations, providing generalizations beyond the immediate data.

Measurement allows us to move beyond subjective language and individual experience by providing a common framework for observation and comparison.

Measurement helps us deal with disagreement and error, because it allows us to standardize our criteria and values and then assess our results against these agreed-upon baselines.

Measurement promotes fairness by requiring everyone to adhere to the same accepted standards, whatever those standards may be.

Measurement allows us to refine our descriptions of things as our metrics become more sophisticated; over time, the distinctions we can make become more precise.

Nothing Either Good or Bad, but Thinking Makes It So

As you develop your security metrics, you should be less concerned with what makes a metric intrinsically good or bad and much more concerned with how you develop measurement projects that provide value and organizational benefits to your security program. This means taking the time to develop metrics that are based on your unique requirements and not relying on “out-of-the-box” metrics that you apply without thinking about what the measurement is supposed to achieve.

Most security programs today already collect more data than they analyze, and metrics that generate unexamined data just add more to the pile. These are the types of metrics I would consider intrinsically bad, because they add no value to the security program and may even produce additional uncertainty and risk. What makes a metric good has less to do with the innate qualities of the metric and more to do with how you approach the measurement. If you want to know whether or not your metric is good, consider your answers to three basic questions.

Do You Understand the Metric?

Recall the general risk assessment matrix from Chapter 1, placed in the context of the preceding definitions. Can it be described in terms of a security metric? Sure it can. Building a risk matrix is an act of judging or estimating the qualities of something through comparison to something else, to some standard of measurement. The risk matrix is an instrument for the measurement.

But what exactly is the something being measured and what is the standard to which that something is compared? Here is where things get tricky. Based on how the matrix is described and used throughout the security world, the something being measured would be the risk to whatever system or organization is the target of the assessment. The standard of measurement therefore would be the combined risk score that determines where in the matrix the risk falls, be it very high, very low, or somewhere in between. But this is not accurate, because the data that has gone into the construction of the matrix does not directly involve the system itself or the threats to the system (some of which may only be the subject of speculation), or the realization of probable loss (which cannot be fully known until after a security incident has occurred). Instead, the data used to build the matrix consists of the statements of the people who (hopefully) understand the system in question and have enough expertise and experience to estimate the risk to the system. These statements do not measure actual risk, but rather what people think the risk may be (or at least what they are willing to say they think).

The problem is not that the risk assessment is bad measurement, but that the way many security professionals using the matrix have defined it guarantees that it will not be used effectively. When you use a tool improperly, it tends to give you poor results. We know that a risk assessment involves human judgment and cannot be completely accurate. The proper approach to the resulting uncertainties is to define, understand, and reduce them.

There are accepted methods for increasing the accuracy of judgment and prediction under uncertainty, including expert calibration and training to make estimates more precise and to leverage large bodies of past event data on which to base future extrapolations. These techniques require expertise and work on the part of the organization measuring the risk.

IT security capabilities are often too immature to effectively handle all the variables. Instead, risk assessments result in a bad mix of turning opinions into numbers (because 1–100 looks more credible than high/medium/low), treating estimates as facts (because you can’t tell your boss that your made-up numbers may also be wrong), and then rationalizing away failure by saying that the assessment was qualitative so no one should have expected accuracy to begin with. Poor results do not mean the risk matrix is flawed any more than a disappointing attempt at using a hammer as a can-opener means the hammer is flawed. Improper understanding of a problem increases the probability that you will choose the wrong tool.

When selecting metrics, be sure that you have given adequate thought to what you are trying to accomplish, and this includes more than just the immediate thing that you are trying to measure. You should take into account a number of considerations:

The underlying reason for the measurement. Metrics designed to understand security are different from metrics designed to respond to a request for metrics.

The audience for the results of the metrics. Do not assume that everyone thinks of security metrics (or security itself, for that matter) in the same way that you do.

The qualities or characteristics of your security program that you are trying to judge. It might be easier to measure an increase in attempted insider attacks over a given period of time, but those metrics will probably not explain the conditions that gave rise to the increase.

The data. You should be able to articulate what observations you made as part of your metrics, and how you made them. Are you actually observing the quality or characteristic you are trying to measure? If not, what are you observing, and does that impact your analysis and decisions based on the metrics?

Do You Use the Metric?

I have been involved in delivering security consulting reports to customers for well over a decade, and most of these reports described the results of extensive and detailed measurements of the security vulnerabilities identified in customer networks. These metrics are sought after and usually well received by customers who hope to understand more about their security posture. Most of these customers do something with at least some of the resulting data, but experience has taught me that few customers actually use all the information that they contracted for. The same holds true for many other security-related data sources. Robust logging, monitoring, and event capture are all touted as important features by security product vendors, and security managers now have ready sources for metrics data being piped in from any number of systems. How is all this data used?

I am certainly not saying that you must use every single bit of security data that you collect, in real time, to have a mature measurement program. Measurements will need to be classified and prioritized, just like any other business information asset. But from a security metrics perspective, the point of capturing data is to reduce your uncertainty about aspects of your security activities.

Having no information regarding an element of security represents a certain state of uncertainty in that you don’t know about that element. But collecting metrics data on the element means that now, technically, you do know about that element because you have been making observations regarding it. If you use the data, you eliminate some of your uncertainty about that element. However, when you do not use the metrics data you are collecting, you actually add to your uncertainty and maybe your risk. In Chapter 1 I described how security metrics data is potentially discoverable during litigation. How much worse is a breach when it turns out that you actually knew about the vulnerability that led to the damage and loss in question because it had been identified on two previous network scans but was never remediated?

There are many reasons to collect security data that you may not use immediately, forensics being at the top. In the event of a breach, you want to be able to reconstruct the events leading up to it. Most organizations collect data to be able to reconstruct the past. Many organizations also implement defined records and document retention policies to provide a balance between the risks of not keeping enough information on hand and those of keeping too much. In the current environment of compliance and e-discovery, it is unwise to keep any information for longer than you need to do so. And if you are not using the information, why keep it at all? Your metrics should follow the same logic, and you should understand all the metrics that you have defined for your security program, why they were selected, and how they are used. The metrics catalog should be regularly reviewed, and if it turns out that some metrics are not utilized or acted upon, you should consider why you are even measuring those aspects of your security program.

Do You Gain Insight or Value from the Metric?

Security metrics are local. While a global set of security metrics with cross-industry adoption would enable companies to compare their performance in a standardized way similar to what occurs in other industries, we are not there yet. Today’s security metrics are about individual organizations and enterprises making observations regarding their own environments and attempting to measure those environments accordingly. But there is nothing inherently wrong with this situation, and it has a lot to do with the immaturity of the security industry in general.

Today local metrics are more valuable, but when enough companies have robust local security measurement data, the industry will be ready to improve and mature as a whole by sharing data for mutual benefit. In many ways, the whole current metrics push is indicative that this may be beginning to develop. Your organization likely has security concerns that you need to understand better to make improvements and increase the value of your operations. While it would be nice to know what your main competitor is doing to address its security concerns, this knowledge is currently a luxury. If it turned out the competitor’s security was worse than your own, you probably wouldn’t consider that justification for lowering your own posture, although you might feel a bit better about what you’ve done. Until an accepted standard of security performance metrics is available, what your peers and competitors are doing doesn’t really matter. You have to do what is necessary to protect your corporate interests and justify your security infrastructure against your own tolerance for risk and reward.

The local nature of security metrics is exactly the reason why blanket categorization of these metrics does not work. Assuming that you understand the metrics you have chosen, including the limitations they may impose on your knowledge, and assuming that you use the metrics that you select, the only real question is whether or not those metrics are giving you more insight than you had before you started using them. You may be collecting hard, quantitative data regarding system vulnerabilities and using that information to track remediation efforts over time. Or you may be using social media to conduct informal opinion surveys of users’ security attitudes and behaviors in the workplace. To state that either of these (or any of the myriad other ways that we can acquire information) is better or worse than the other is inappropriate. What matters is that you can assess the value that you get out of the metric and that the value you get is proportionate to the effort that you put into measuring to begin with.

What Do You Want to Know?

Many factors influence how useful a metric will be for a particular organization or purpose. Beneath these factors lies a more fundamental question: What are you trying to understand about your security environment and operations? Your answers will often depend on other, related questions about the nature of your enterprise. What kind of organization are you? What are your corporate goals? What is your business model? What information assets are more or less valuable to you? Surprisingly, figuring out what the organization wants or needs to know is often a neglected step in setting up a security metrics program. Rather than being driven by questions, metrics are often chosen because they are simple or easy to accomplish, or because someone else says they are important. The result is that the metrics end up defining the problems and driving the questions. If you have not specifically considered and defined what you want to know through the use of security metrics, everything becomes exploratory, and you will have a much more difficult time assessing how effective your efforts to gain that knowledge have been.

To Count or Not to Count

I have already covered the complementary nature of quantitative and qualitative metrics, as well as my arguments with those who believe anything not expressed numerically is a bad metric. Some aspects of security make for excellent quantitative data sources, and this data is also usually the most easily available, cheapest, and least ambiguous data regarding the security environment. In fact, we almost certainly do not leverage this data enough in our security reviews and assessments, and this may explain why the security metrics literature has swung to the side of overemphasizing quantitative metrics as best practice.

In the context of the various benefits of measurement mentioned earlier in the chapter, quantitative data allows for more precise and standardized comparison and even predictive power, with less reliance on the subjective language and interpretations of people doing the measuring. Numbers possess an unmistakable power to persuade, which is probably why we try to turn so many things into numbers. But it is important to remember that numbers must be interpreted just like any other data. They do not speak for themselves but instead must be reconciled with the standards of measurement to which they are associated.

Consider temperature as an example. Say your local weather forecast tells you that tomorrow will be twice as warm as it was today. If you are in the United Kingdom and today it was 10° Celsius, which is a little chilly, then tomorrow is looking to be quite a pleasant day. But in the United States this statement means that today’s mild 50° Fahrenheit will give way to a brutal 100° scorcher tomorrow. And if we’re speaking in Kelvin, then you should enjoy today’s 283° weather while it lasts because tomorrow we are all going to be roasted alive.
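The arithmetic behind this example can be checked with a short sketch. The function names are illustrative and do not come from the text; the conversion formulas are the standard ones. The sketch doubles today's reading in each scale and converts every result back to Celsius so the three forecasts can be compared directly.

```python
# Illustrative sketch: "twice as warm" depends on the scale the number lives in.
# Function names are hypothetical; the conversion formulas are the standard ones.

def c_to_f(celsius):
    return celsius * 9 / 5 + 32

def f_to_c(fahrenheit):
    return (fahrenheit - 32) * 5 / 9

def c_to_k(celsius):
    return celsius + 273.15

def k_to_c(kelvin):
    return kelvin - 273.15

def twice_as_warm(today_celsius):
    """Double today's reading in each scale; report every result in Celsius."""
    return {
        "celsius": today_celsius * 2,
        "fahrenheit": f_to_c(c_to_f(today_celsius) * 2),
        "kelvin": k_to_c(c_to_k(today_celsius) * 2),
    }

forecast = twice_as_warm(10.0)
for scale, tomorrow_c in forecast.items():
    print(f"doubled in {scale}: about {tomorrow_c:.1f} degrees Celsius tomorrow")
```

Starting from 10° Celsius, the same "twice as warm" statement yields roughly 20°, 38°, or 293° Celsius, depending on which scale the doubling is applied to. The number alone says little until the standard of measurement is known.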

Numbers taken out of context can be as misleading and as confusing as any uninformed opinion. Security metrics already suffer from these distortions at times. The example of the vendor-sponsored Internet security report in Chapter 1, which correlated a rise in vulnerabilities with a decline in security, shows how a lack of specificity regarding the scales or standards of data can make your findings less credible. Just because you have an unquestioned quantitative measurement about some state of your security program does not mean that the measurement is meaningful. A 100 percent increase in the number of security incidents over a month holds different implications if you had one incident last month or if you had 100 incidents. Numbers may not lie, but the people who use them are under no such restrictions.
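The incident example can be made concrete with a minimal sketch. The function name and the returned fields are hypothetical, chosen only to show why a percentage should travel with the counts behind it.

```python
# Illustrative sketch: the same percent change can describe very different situations.
# The function name and dictionary keys are hypothetical.

def describe_change(previous, current):
    """Return the percent change together with the raw counts that give it context."""
    if previous == 0:
        raise ValueError("percent change is undefined when the previous count is zero")
    return {
        "previous": previous,
        "current": current,
        "absolute": current - previous,
        "percent": (current - previous) / previous * 100,
    }

small = describe_change(1, 2)      # one incident last month, two this month
large = describe_change(100, 200)  # 100 incidents last month, 200 this month

for change in (small, large):
    print(f"{change['percent']:.0f}% increase "
          f"({change['previous']} -> {change['current']}, "
          f"{change['absolute']} more incidents)")
```

Both cases report a 100 percent increase, but one reflects a single additional incident and the other reflects 100 more. Reporting the baseline alongside the percentage keeps the scale visible.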

Again the problem is often definitional. Some will argue that qualitative metrics are not even possible because by definition a metric is expressed in numbers. That’s not true, but I understand the argument. Definitions are the way that we standardize the meaning of words, the way we measure that meaning if you will. If you have never considered another meaning to a word, then that usage will make no sense to you, regardless of any sense it may make to others. You may recognize the word but not the context.

If your definition of a metric is an easily attainable number that reflects a state of affairs, then much of what I’m going to propose is not going to seem like measurement. But if you apply a definition of metrics that says they are standard expressions of the act of comparing things, then what I’m proposing may seem perfectly valid. The question is how married you are to your own definitions. We all face the prospect sometimes of being trapped by our preconceptions.

One way to avoid these traps is to contextualize your metrics with the tried-and-true 5 Ws (and one H) formula: who, what, when, where, why, and how? If you can describe the security knowledge that you want to obtain in terms of these simple questions, it becomes much easier to decide whether quantitative or qualitative metrics are your best bet.

Who, What, When, Where?

If you accept that most of your desired security knowledge will involve knowing issues of who, what, when, where, why, and how in relation to your security program and environment, then you can likely address two-thirds of your knowledge with quantitative metrics. Identities, activities, events, and locations are all highly adaptable to numbers and counting, and can yield very useful data:

Who? Which users have access to sensitive information? Who in the organization consistently chooses weak passwords?

What? What ratio of the company’s systems is not configured according to company security policy? Is the security training and awareness program effective?

When? How often does management review the company’s security strategy? Are security incidents more likely to occur during or outside of normal business hours?

Where? Which organizational units have the fewest security policy violations per month? What is the most common source of reconnaissance scans against the corporate network perimeter?

Most diagnostic and operational information regarding security can be obtained using metrics like these, with quantified data that can be analyzed, compared, and even generalized in some cases.
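As a rough illustration of how questions like these turn into counts and ratios, consider the following sketch. All of the records, field names, and the 09:00 to 17:00 weekday definition of business hours are assumptions made for the example; a real program would draw this data from its own inventory and incident-tracking systems, and the "where" question is simplified here to incidents per organizational unit.

```python
from collections import Counter
from datetime import datetime

# Hypothetical sample data, invented for illustration only.
systems = [
    {"host": "web01", "compliant": True},
    {"host": "web02", "compliant": False},
    {"host": "db01", "compliant": True},
    {"host": "mail01", "compliant": False},
]
incidents = [
    {"unit": "finance", "detected": datetime(2024, 3, 4, 10, 15)},     # Monday morning
    {"unit": "finance", "detected": datetime(2024, 3, 5, 23, 40)},     # Tuesday night
    {"unit": "engineering", "detected": datetime(2024, 3, 9, 14, 5)},  # Saturday
]

def noncompliance_ratio(records):
    """What? Share of systems not configured according to policy."""
    return sum(1 for r in records if not r["compliant"]) / len(records)

def in_business_hours(moment, start_hour=9, end_hour=17):
    """When? Assumes a Monday-to-Friday, 09:00-17:00 working week."""
    return moment.weekday() < 5 and start_hour <= moment.hour < end_hour

def incidents_by_unit(records):
    """Where? Count incidents per organizational unit."""
    return Counter(r["unit"] for r in records)

ratio = noncompliance_ratio(systems)
during = sum(1 for i in incidents if in_business_hours(i["detected"]))
by_unit = incidents_by_unit(incidents)

print(f"non-compliant systems: {ratio:.0%}")
print(f"incidents during business hours: {during} of {len(incidents)}")
print(f"incidents by unit: {dict(by_unit)}")
```

Each function answers one narrowly worded question, which is what makes these metrics easy to automate and compare. None of them says anything about why the systems are misconfigured or how the incidents came about.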

These metrics make up the backbone of a robust security measurement program, assuming that you understand the metrics you choose, that you use them, and that they provide you with insight that makes for more effective decision making. Metrics can often be automated as well, making collection and analysis easier. And because of the relatively unambiguous nature of the questions, the answers can be made equally unambiguous and objective. I’d say every security manager in the industry has some set of metrics that answer who/what/when/where questions. But this leaves a third of our security insight unaccounted for.

How and Why?

If having the facts was all we needed to make decisions, life would probably be a lot less complicated. From criminal investigations, to business school case studies, to historical documentaries, people do not satisfy themselves with just the facts. Facts give us the dots, but we must still connect them if we want to understand anything in our world.

The history of human science is one of collecting data not for the purposes of knowing who, what, when, and where, but because, at the end of the day, we are really interested in the how and why. Not every IT security decision depends upon understanding the answers to these two remaining questions, but if we do not make an attempt to understand them in some cases, we accept by default that our security will always have blind spots and risks into which we have no visibility.

Security technologies and controls are complex systems, and understanding how they impact security at a systems level involves more than just simple metrics. So do efforts to understand security as a psychological instead of a technical process, one in which people make choices based on whether or not they feel safe taking a particular action. These characteristics become far more interpretive:

How? What are the most expensive bottlenecks in our current patch management process? Which user workflows are most closely aligned with the company’s e-discovery strategy?

Why? What is the root cause of the increase in virus infections over the past 12 months? Has the economic downturn made the organization more susceptible to insider threats?

Understanding people and the organizations they create together socially means exploring such things as ethical and behavioral norms, personal motivations, and even individual experiences (commonly known as “stories”). It’s enough to make a hardcore objectivist engineer’s skin crawl. But qualitative measurement techniques are designed specifically to get at this data in rigorous and verifiable ways. I will be spending much more time in coming chapters describing methods and techniques for qualitative metrics, but for now I will leave it at this: Quantitative metrics can give you a lot of information that you can use to support your security decisions. But you won’t fully understand your security environment and its effectiveness until you measure and explore the hows and whys that exist behind the numbers.

Observe!

A legitimate concern of skeptics of qualitative metrics is that the data collected from this type of measurement does not reflect what is actually going on. Asking people about whether or not their system has current virus signatures on a survey, for example, is not the same as assessing the virus signatures to ensure they are up to date. The former leaves a lot of room for guessing, confusion, and misinformation on the part of the person responding to the question. This same concern is equally legitimate when it comes to quantitative metrics, which also produce data that may not reflect what is actually going on.

Where qualitative data may prove inaccurate, quantitative data often proves incomplete. You can set up 50 different quantitative security metrics in the data center, ranging from badge reader access statistics, to login information, to the time reporting data of the operations staff, but these are not the same as knowing the people and the environment that make up that data center. The data will not tell you about culture or interpersonal quirks, perhaps that a few especially security-savvy staffers carry the load for the rest, or that security incident handling differs by business unit based on social networks rather than company policy. These insights might be common knowledge among the staff, but you’ll never know about them if you don’t ask the right questions. Observation includes listening to people, and security pros have a lot of experience and insights to offer (most are just waiting for someone to ask them what they think). Metrics are about decision support, and any information that helps a decision-maker is valuable—anyone can blindly follow numbers.

My point here is that the main challenge of metrics is not whether we can make them quantitative as often as possible, but whether we can make them empirical as often as possible. Empirical metrics, put simply, are based on direct observation and experience. Empirical data is produced when the metric uses methods that rely on our senses, whether as a result of actually looking at (or listening to, or touching) the thing being measured (for instance, measuring configuration errors by reviewing the configuration files and counting them up), or by experiment (changing a security process and observing whether that change affects the outcome of the process). One of my favorite examples of an empirical security metric came during a business impact analysis at a client. As we were asking a system administrator how he knew some of his machines were business critical he explained that, if a particular server’s purpose was not documented or known, he would unplug it. He measured criticality based on how quickly the users of the machine freaked out. I don’t recommend this as a best practice security metric, but it certainly has the potential to generate a lot of empirical data.

A lot of critics of qualitative metrics make the mistake of assuming that qualitative means “not empirical,” but this is actually wrong and shows a lack of understanding of real qualitative research methods. Empirical qualitative measurement is exactly like its quantitative cousin in that it is based on observation and experience. Where the two differ substantially is regarding what is actually being observed.

At the risk of generalizing, where quantitative metrics gather data in regard to anything that can be counted, qualitative metrics focus on measuring the activities, behaviors, and responses of people. Of course, people can be counted, too, but qualitative measurement seeks to understand how and why people do what they do and not just the mechanics of those activities. Qualitative security metrics are concerned with issues of organizational behavior, culture, and politics and with the interactions between people in what, as technical as it may be, is fundamentally a social environment. And to measure these security attributes requires empirical data and methodical techniques.

To return once again to the example of the “qualitative” risk assessment, you cannot say that this activity empirically measures the organization’s risks, because those are not observed. But these assessments do collect empirical data every time they ask someone to offer a judgment regarding what that risk may be. The secret is always to remember what it is you are really looking at.

GQM for Better Security Metrics

Up to this point, I have emphasized that, in selecting IT security metrics, it is more important to know what you are trying to accomplish and to let this drive your measurement efforts than to let the metrics decide this for you. Starting with metrics is akin to hiring a general contractor to start building your house before you have engaged the architect. This reflects a common complaint in security (and in IT generally): our infrastructures and systems often do not quite align with higher level business strategies.

As you consider developing your security metrics program, it would be nice to have a way to build that alignment in up front, so that you can always be reasonably sure that you are measuring what you should be measuring to meet your specific objectives. Luckily, there is a great way to do just that—one that comes out of the field of empirical software engineering called the Goal-Question-Metric (GQM) method.

What is GQM?

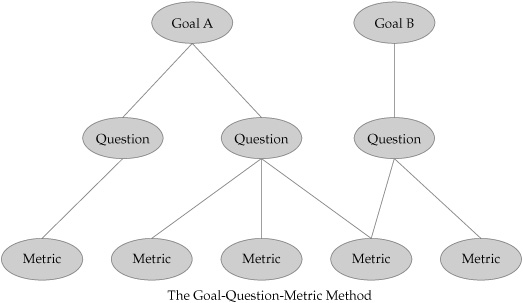

GQM is a simple, three-step process for developing security metrics. The first step in the process involves defining specific goals that the organization hopes to achieve. These goals are not measurement goals, but objectives that measurement is supposed to help achieve. The goals are then translated into even more specific questions that must be answered before assessing whether the organization has achieved or is achieving the goals. Finally, these questions are answered by identifying and developing appropriate metrics and collecting empirical data associated with the measurements. The method ensures that the resulting metrics data remains explicitly aligned with the higher level goals and objectives of the measurement sponsors. Figure 2-1 illustrates the basic GQM method.

Figure 2-1. The GQM method provides direct alignment between metrics and goals. Note that metrics may be shared between goals and questions.
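One way to picture the structure in Figure 2-1 is as a small data model. The sketch below is illustrative only: the class names (`Goal`, `Question`, `Metric`), the helper `shared_metrics`, and the sample goal text are assumptions made for this example and are not part of the GQM method itself. It shows that every metric traces back to a goal through a question, and that one metric can serve more than one goal.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Metric:
    name: str


@dataclass
class Question:
    text: str
    metrics: list[Metric] = field(default_factory=list)


@dataclass
class Goal:
    statement: str
    questions: list[Question] = field(default_factory=list)

    def metrics(self) -> set[Metric]:
        """Every metric that traces back to this goal through a question."""
        return {m for q in self.questions for m in q.metrics}


def shared_metrics(goals: list[Goal]) -> set[Metric]:
    """Metrics that support more than one goal."""
    seen: set[Metric] = set()
    shared: set[Metric] = set()
    for goal in goals:
        for metric in goal.metrics():
            if metric in seen:
                shared.add(metric)
            seen.add(metric)
    return shared


# Hypothetical example data.
violations = Metric("Reported policy violations (12 months)")
actions = Metric("Enforcement actions taken (12 months)")

enforcement = Goal(
    "Increase enforcement of the security policy",
    [Question("What is the current level of enforcement?", [violations, actions])],
)
visibility = Goal(
    "Increase visibility into policy violations",
    [Question("How many violations are reported?", [violations])],
)
```

Calling `shared_metrics([enforcement, visibility])` on this sample data returns only the violations metric, since it is the one that both goals depend on.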

Background

The GQM method traces its roots back through software engineering practices into the 1970s, primarily through the academic and industry research conducted by Victor Basili of the University of Maryland. Originally developed to support NASA, GQM was designed to move testing for software defects from the qualitative and subjective state it was then in to an empirical model in which defects would be measured against defined goals and objectives that could then be linked to results.

It may be difficult to believe today that software design and testing was ever non-empirical, but every scientific and technical discipline goes through phases of maturing sophistication. IT security is no different, which is part of the reason this book and others like it are written. But I digress. In developing GQM, Basili and his successors built a simple and elegant framework for aligning software metrics with software goals. Since it was first proposed, GQM has been studied and used to improve software measurement and testing in many environments. And yet, somewhat amazingly, GQM has not suffered any significant methodology bloat or major modification in the nearly three decades that it has been in use. Part of the reason may be that GQM was born and has lived in a primarily academic environment and for whatever reason was not widely adopted by consultants with a vested interest in making something simple and open into something complex and proprietary. But another reason is that very simplicity itself. GQM is immediately intuitive and functional, and any attempt to improve on what it offers would seem to be an attempt to gild the lily.

Benefits and Requirements

Using GQM to build your security metrics provides at least three important benefits to a security measurement program:

Metrics are designed from the top down, starting with goals and objectives, rather than from the bottom up.

Measurement activities are inherently constrained and bounded by the goals set for the project, reducing the chances that the project loses focus or suffers from “scope creep.”

Metrics are customized to the unique needs and requirements of the organization, which are reflected in the goals that the organization sets for its security measurement activities.

Achieving the benefits of the GQM method does, however, place certain demands on the organization implementing it. Chief among these demands is the requirement that the organization make the effort to define properly the goals and objectives against which they want to measure. If you are exploring IT security metrics, the first requirement in your efforts should be to understand what you are trying to accomplish. Do you want to have more visibility into your security operations or posture? Are you trying to ensure that you will pass next month’s audit against some regulatory requirement? Different goals will naturally involve measuring different aspects of the security program. In some cases, overlap will occur, as some metrics answer multiple questions and some questions support more than one goal, as illustrated in Figure 2-1. But if the goal is not stated, or is vague and unclear, any attempt at measurement becomes problematic.

GQM also encourages a project-oriented measurement activity structure. Goals are specific and bounded, as opposed to broad and open-ended, and must relate back to some system, process, or characteristic of your security program if they are to be measurable and verifiable. Measurement projects allow you to stay focused and in control of the measurement activities you undertake. But these smaller component projects do not have to stand alone and should not. Metrics created through GQM result in catalogs that can be shared and reused across measurement projects over time, and the data analysis and results of individual measurement projects become the building blocks for broad and ongoing security improvement capabilities. I will discuss how GQM supports the larger security improvement program in later chapters, but for now let us concentrate on using the methods to produce solid security metrics.

Setting Goals

Goals give GQM measurements their power, so setting appropriate goals becomes the most important part of the metrics process. But it is not always easy to develop good goals. Effective goals require us to move from abstract ideas to specific commitments. “I’m going to be a better person” is all well and good, but “I’m going to spend ten percent of my free time and income helping people less fortunate than myself” is a different goal entirely. The latter goal provides a set of assumptions and commitments that can be measured and verified. Who’s to say whether or not I failed to meet the former?

Many of the goals I see in my security work involve some variation of the goal of being a better person. I see customers setting goals to “improve our security,” “protect sensitive information more effectively,” or “reduce our vulnerabilities,” and then moving on to the methods and activities they think they need to meet those goals. Later these organizations may find that they cannot effectively articulate their success or the value of their efforts, or that a goal proved so open-ended that it has morphed through several iterations and now has little in common with the original objectives that drove those efforts. As we work toward better metrics and improved security, we cannot escape the fact that we need to work on creating good security goals first. And good goals under the GQM method share several common characteristics.

Good Goals Are Specific

The difference between a dream and a goal is that dreams are open-ended. Goals involve nailing down the details. The more you define the attributes and milestones of your goal, the better that goal will be. Making your goal specific also makes it easier to measure your results. Keeping the goal too general or vague reduces the value of your accomplishments even if you succeed.

General success makes it very difficult to tie what you actually did to what you committed to do, or to figure out which of your successes overcame which of your mistakes to get you across the finish line. Success could have simply been a product of dumb luck or other coincidences that had nothing to do with your actions.

The same holds true for failure. Without specific goals, you run a high risk of seeing your goal misinterpreted, or even hijacked, as situations and circumstances change. Goals need to be flexible, but flexibility should be about consciously altering known quantities and not about completely changing course midstream because your goal could be interpreted in several different ways.

Good Goals Are Limited

As the specifics of your goal show you how complex even simple problems can become, it pays to limit what you try to accomplish in a single effort. We often hear two competing pieces of advice coming from the common wisdom. We are told that we shouldn’t limit ourselves. Limit yourself artificially and you never know what you could have achieved. But at the same time, paradoxically, we are also told that we should know our limits. Extend your capabilities too far and you risk failure and even disaster. So how do we reconcile the two? These sayings actually reflect two aspects of the same problem.

Good goals are limited in the sense that they involve a bounded scope of accomplishment that is also well understood. Limiting a goal does not mean making the goal so easily achieved that it is no longer challenging. Instead, good goals have defined boundaries, which may include a business unit, a particular system, or a concept such as worm defense or compliance with an industry regulation. You do not have to have all the answers, but a good goal will at least have clearly defined the problem space in which those answers exist.

Limiting your goals does not mean that they lack strategic scope, but rather that strategy is embedded in clear hooks at the boundaries that allow goals to be chained into a series of interrelated tactical activities that becomes greater than the sum of its parts. If a goal is too strategic, details become lost in the grand picture. But as any builder of systems can tell you, if you lose control of the details you lose control of the whole.

Good Goals Are Meaningful

The worst goals you can imagine do not mean anything. Actually, the absolute worst goals are not only meaningless, but also known to be meaningless by most or all of the people involved. When a goal is meaningless, it negatively impacts everything involved. Objectives are not achieved, decisions regarding the goal are uninformed, and participant morale suffers. Two primary ways that you can ensure that your goals have meaning are to construct them so they are both attainable and verifiable.

Attainable An attainable goal can actually be met. Attainable goals are not open-ended but are developed in the context of a particular project or activity that has a beginning and an ending. At the end of the activity, whether you measure its duration in terms of time or in terms of some other criteria such as project milestones, you assess the activity against your stated goal. Attainable goals also involve deciding how much you want to attempt, your level of commitment, and your tolerance for risk of failure. Attainability involves striking the delicate balance between attempting too little and attempting too much. Developing attainable goals often requires that you do some research to decide where these limits currently exist, and then incorporate those insights into a goal’s overall limits and boundaries.

Verifiable In verifying our goals, we decide up front what criteria will be used to indicate our success or failure at achieving the goals. To make our goals meaningful we must be able to show not only that we have attained some end, but whether we in fact did or did not attain it. Depending on your goals, verification can be accomplished through positive indicators that prove the goals were achieved, such as a predetermined increase in the number of users who have formally reviewed and acknowledged the corporate security policy. Or verification can be accomplished through refutation by predefining criteria that indicate the goal was not achieved, such as a failed audit. Verification ensures that everyone knows exactly where they stand with regard to the goal, and it keeps all involved individuals honest about how much was accomplished.

Measurement is implicit in the concept of verification. While some goals may be straightforward (you either pass the audit or you do not), most goals will involve gathering necessary data to help you understand how well the goal was achieved or by how much it was missed. GQM addresses measurement against set goals directly, as you shall see.

Good Goals Have a Context

Few goals are made in a vacuum. Even my New Year’s resolution to lose ten pounds involves multiple circumstances including how bloated I’m feeling after holiday season gorging, my wife’s off-the-cuff reminder that I’m due for a physical, and my watching a neighbor take his new racing cycle out for a 50-mile ride (showoff...).

When we set goals in an organizational context such as IT security, we are also reacting to various situations and circumstances. Perhaps we suffered a security breach recently, or internal audit is knocking on our door for an annual review, or our peer on the network side just published an internal case study on her success rate against virus outbreaks (showoff...). Effective goals recognize and address the contexts in which they are attempted, from the stakeholders involved and the desired outcomes, to the unique environment in which the goal is being attempted. These are often considerations that we undertake almost unconsciously, knowing the lay of the land in which we operate, but a good goal will have made at least some of these considerations explicit.

Good Goals Are Documented

After you have put the effort into designing effective goals, it makes sense to formalize them. A good goal will demand a level of documentation that captures and organizes all the salient attributes and parameters involved. If your goal doesn’t seem to be something that you need to write down (“we’re going to implement a data loss prevention strategy...”), it is probably not a well-constructed goal. Documenting your goals also serves as an easy way to capture and solidify the support of multiple stakeholders. Putting a goal into writing and requiring individuals responsible for assigning as well as achieving the goal to review and sign off on its details allows for negotiation and debate before the project begins, instead of recriminations and rationalizations that might occur after it ends.

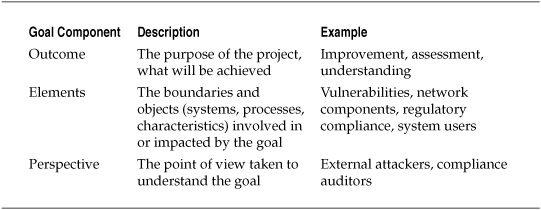

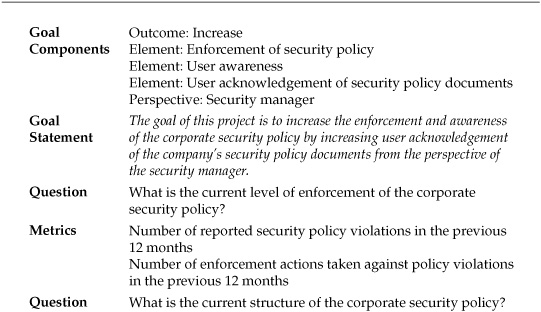

The GQM method includes a basic template concept for articulating the goals of a security measurement or improvement project quickly and succinctly. Specific information is captured regarding the goal, including explicitly defining the basic attributes and criteria for success. The resulting information is incorporated into the template and used to create a basic statement of the goal. These components are shown in Table 2-1.

Table 2-1. Goal Template for the GQM Method

After the components of the goal are defined, the template provides for the easy creation of a brief goal statement that captures the pertinent information necessary to begin working on the activity.

Let’s use the example of a security manager considering a project to improve user compliance with corporate security policies that are not effectively disseminated or enforced by the organization. The goal components for the activity could be broken down as follows:

Outcome: Increase

Element: Enforcement of the corporate security policy

Element: User awareness

Element: User acknowledgement of security policy documents

Perspective: Security manager

These components can then be combined into a simple, yet comprehensive statement: The goal of this project is to increase the enforcement and awareness of the corporate security policy by increasing user acknowledgement of the company’s security policy documents from the perspective of the security manager.
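The step from components to statement can be sketched in a few lines. This is a minimal illustration, assuming a hypothetical `GoalComponents` record and a simple sentence pattern chosen for this example. It produces a mechanical variant of the statement, not the hand-edited wording given above, and it is not a template defined by the GQM method.

```python
from dataclasses import dataclass


@dataclass
class GoalComponents:
    outcome: str            # e.g. "Increase"
    elements: list[str]     # what the outcome applies to
    perspective: str        # whose viewpoint the goal reflects


def join_elements(elements: list[str]) -> str:
    """Join items as 'a', 'a and b', or 'a, b, and c'."""
    if not elements:
        raise ValueError("a goal needs at least one element")
    if len(elements) == 1:
        return elements[0]
    if len(elements) == 2:
        return f"{elements[0]} and {elements[1]}"
    return ", ".join(elements[:-1]) + f", and {elements[-1]}"


def goal_statement(goal: GoalComponents) -> str:
    """Combine the components into a one-sentence goal statement."""
    return (
        f"The goal of this project is to {goal.outcome.lower()} "
        f"{join_elements(goal.elements)} "
        f"from the perspective of the {goal.perspective.lower()}."
    )


policy_goal = GoalComponents(
    outcome="Increase",
    elements=[
        "enforcement of the corporate security policy",
        "user awareness",
        "user acknowledgement of security policy documents",
    ],
    perspective="Security manager",
)
print(goal_statement(policy_goal))
```

A generated sentence like this is a starting point for discussion; stakeholders would still be expected to edit the wording before signing off on it.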

Constructing goal statements this way forces the stakeholders involved to keep their goals limited, specific, and meaningful. The short format of the statement also makes it much easier to communicate and evaluate the goal, and the natural constraints imposed by limiting the number of attributes and targets reduce the likelihood that multiple goals will become conflated and confused. Multiple goals, such as those for complex projects, are effectively parsed into subcomponents that can be addressed and evaluated individually.

Asking Questions

Developing and documenting good goals is critical to effective security measurement in general, and to the GQM method in particular, but it is just the first step toward effective metrics. Although the goal statements produced by the GQM template enable stakeholders to share and review their goals easily, these documented goals do not contain enough information to allow stakeholders to evaluate whether or not the goal was successfully achieved.

Goal statements are conceptual in nature. They do not define how the attributes and targets of the goal will be operationally addressed. To develop that information, individual goals are translated into a series of questions that enable the components of the goal to be achieved or evaluated for success. These questions articulate the goal and the measurement project in terms of what objects or activities must be observed and what data must be collected to address the individual components of the goal statement.

Using the example of the security policy improvement project, how would you translate the goal statement into operational questions? Several questions are already implied by examining the goal components:

What is the current level of enforcement of the corporate security policy?

What is the current structure of the corporate security policy?

Do employees read and understand the corporate security policy?

Is enforcement of the security policy increasing?

Through the development of operational questions, the goal of the security improvement project can now be expressed in terms of tangible characteristics of processes, systems, and individuals that can be evaluated and measured. These questions remain tightly integrated with the overall goal of the project and ensure that any resulting data and conclusions remain aligned with the original intent of the stakeholders involved. GQM-derived questions also provide an intuitive second-order analysis of the resources that will be required to meet the goal by outlining the sources of data and resources necessary to provide adequate answers to the questions. The security manager in our example should immediately recognize that these questions mean she will need to understand specific details of the security policy and identify any data sources to which she does not have direct access.

Assigning Metrics

After questions have been developed to define the goal operationally, the goal can begin to be characterized at a data level, and metrics can be assigned that will provide answers. A key strength of GQM is that, by this point, designing metrics becomes much more intuitive, because only certain measurements will produce the data necessary to answer the very specific questions that the goal has produced. Many metrics are potentially able to answer these questions, and more emphasis can be placed on evaluating the feasibility of adopting certain metrics based on how difficult data may be to collect or how detailed the data needs to be. The questions also help the project stakeholders choose appropriate quantitative or qualitative measurement and analysis techniques in a way that is driven by the goal and not subject to arbitrary judgments about the metrics themselves.

Our intrepid security manager knows her goal and knows a few of the questions that she must ask to evaluate whether or not the project is achieving the goal. Now she uses those questions to develop a set of metrics by which she can measure achievement.

What Is the Current Level of Enforcement of the Corporate Security Policy?

Metrics supporting this question will involve data regarding how often security policies are violated within the company and how often the company takes action against these violations:

Number of reported security policy violations in the previous 12 months

Number of enforcement actions taken against policy violations in the previous 12 months

If there are fewer enforcement actions taken than there are violations, the policy is not being enforced in all situations. If there are no reported violations, this could mean that no one is violating the policy, but it more likely indicates that, not only is the policy not being enforced, but the company has little visibility even into how often employees are violating the policies. In this case, the goal of increasing enforcement may even develop a dependency on another goal—that of increasing the visibility into security policy violations, spawning another measurement project.
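The reasoning in the preceding paragraph can be written down as a small decision rule. The function name `enforcement_level` and the wording of its return values are hypothetical, chosen for this sketch. The point is only that the zero-violations case is handled as a visibility question before any ratio is computed.

```python
def enforcement_level(violations: int, actions: int) -> str:
    """Interpret the two 12-month counts from the metrics above."""
    if violations < 0 or actions < 0:
        raise ValueError("counts cannot be negative")
    if violations == 0:
        # Zero reports more likely signals poor visibility than perfect compliance.
        return "no reported violations: check visibility into violations"
    ratio = actions / violations
    if ratio < 1:
        return f"partial enforcement: {ratio:.0%} of reported violations acted on"
    return "enforcement actions match or exceed reported violations"


# Hypothetical counts for the previous 12 months.
print(enforcement_level(violations=40, actions=10))
print(enforcement_level(violations=0, actions=0))
```

Note that a ratio of one or more does not prove that every violation was addressed, because several actions may relate to a single violation. Answering that would require linking each action to the violation it addresses.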

What Is the Current Structure of the Corporate Security Policy?

This question involves data different from measuring the frequency of an event. Understanding the structure of the security policy means measuring aspects of the policy infrastructure:

Number of documents that make up the corporate security policy

Format(s) of security policy documents (hard copy, HTML, PDF)

Location(s) of security policy documents (content management system, static web page, three-ring binder)

Types of policy acknowledgement mechanisms (e-mail notification of users, electronic acknowledgement of policy access or review, hard copy signoff sheet)

Length of time since the last security policy review by management

The company’s security policy may exist as a single document or as a set of documents that define policies, guidelines, procedures, and even configurations. Knowing the structure of the security policy aids decision-makers by identifying ways to make employee acknowledgment of the policy more efficient and the policy more enforceable.

Do Employees Read and Understand the Corporate Security Policy?

Measuring human understanding and behavior gets interesting and touches on many of the points made in this chapter. Understanding cannot really be observed directly unless you are a neuroscientist studying brain activity, and even then the results are open to interpretation and not particularly useful to our security manager. (Requiring brain scans of all employees will probably not lead to an acceptable return on investment for the policy project.) Instead, we measure understanding by observing how people behave and respond and comparing that data to what we agree is appropriate for someone who understood:

Ratio of employee job descriptions that specify responsibility for following the corporate security policy

Number of security policy awareness or training activities conducted in the previous 12 months

Ratio of employees who have formally acknowledged the corporate security policy in the previous 12 months

Results of a user survey asking how familiar users are with the policy and how appropriate and usable the policy is judged to be

Metrics of this kind can also provide good opportunities to explore alternative data sources and to combine observations of activities and processes with those of human responses for comparative purposes.
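As a rough illustration of such a comparison, the sketch below sets an activity-based metric (the acknowledgement ratio) beside a response-based one (survey familiarity). Everything specific here is an assumption made for the example: the function names, the 1-to-5 survey scale, the cutoff of 4 for "familiar," and the sample numbers.

```python
def acknowledgement_ratio(acknowledged: int, employees: int) -> float:
    """Share of employees who formally acknowledged the policy."""
    if employees <= 0 or not 0 <= acknowledged <= employees:
        raise ValueError("expected 0 <= acknowledged <= employees, employees > 0")
    return acknowledged / employees


def familiarity_share(responses: list[int], familiar_at: int = 4) -> float:
    """Share of survey responses at or above the assumed 'familiar' score."""
    if not responses:
        raise ValueError("no survey responses")
    return sum(1 for r in responses if r >= familiar_at) / len(responses)


# Hypothetical data: 180 of 200 employees acknowledged the policy,
# and ten survey respondents rated their familiarity from 1 to 5.
acknowledged = acknowledgement_ratio(180, 200)
familiar = familiarity_share([5, 4, 2, 1, 3, 4, 2, 2, 1, 3])
gap = acknowledged - familiar
print(f"acknowledged {acknowledged:.0%}, familiar {familiar:.0%}, gap {gap:.0%}")
```

A wide gap between the two figures would suggest that formal acknowledgement is not translating into familiarity, which is the kind of finding that neither metric reveals on its own.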

Is Enforcement of the Security Policy Increasing?

The questions and metrics so far have provided data that supports increasing security policy enforcement by describing the current environment. Without developing a sound baseline of performance, there can be no credible or verifiable way of judging whether the project is meeting or has met the goal. After the current performance baseline has been established, it becomes possible to consider metrics to define improvement or progress:

Increase in security policy enforcement actions over baseline (expressed as either a raw count or a percentage, as appropriate)

Increase in awareness of corporate security policy (number of awareness activities, number of user acknowledgements of the policy)

Increase in efficiency of the security policy process (increased policy reviews, reduction in the number of policy documents or locations)

Improved response from surveyed users on policy familiarity and usability

Using the data provided by these metrics, the security manager can analyze the effects of decisions or activities undertaken over the course of the project, describe how well the project achieved the goal, and produce conclusions and insights that can lead to more measurement and ongoing improvement over repeated activities.
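Expressing an increase over baseline as a raw count or as a percentage is simple arithmetic, with one caveat: a percentage is undefined when the baseline is zero. The helper below is a minimal sketch with a hypothetical name, `change_over_baseline`, and made-up sample values.

```python
from typing import Optional


def change_over_baseline(baseline: float, current: float) -> tuple[float, Optional[float]]:
    """Return (raw change, percentage change).

    The percentage is None when the baseline is zero, because a
    relative change cannot be computed from a zero starting point.
    """
    raw = current - baseline
    pct = None if baseline == 0 else raw / baseline * 100
    return raw, pct


# Hypothetical enforcement-action counts: 10 at baseline, 25 after the project.
raw, pct = change_over_baseline(baseline=10, current=25)
print(raw, pct)
```

When the baseline is zero, as it might be for an organization that has never recorded an enforcement action, only the raw count is meaningful, so the choice between the two forms depends on the baseline itself.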

Putting It All Together

Capturing and documenting GQM data for security measurement and improvement activities can be accomplished by expanding upon the GQM template for goal creation (Table 2-1). The full template includes the goal statement and associated goal components along with the questions and metrics necessary for fully implementing the project. This template can then be used as the baseline project charter and documentation. Table 2-2 shows the fully completed GQM template for the security policy enforcement project.

The Metrics Catalog

The GQM method results in a set of specific, documented metrics for a particular measurement project. These metrics are also tied directly to well-understood goals and questions regarding specific systems, processes, and characteristics of an IT security environment. Another strength of GQM is that the outputs of the methodology are naturally suited to the creation of metrics catalogs that can be reused over time and shared across projects as well as security and business organizations and stakeholders.

As seen in Figure 2-1, different goals and questions can rely on the same metrics for the data they need. As the metrics program becomes larger and more sophisticated, the structure and results of preceding measurement projects become invaluable in the brainstorming process that leads to the creation of new goals and projects. The new goal might be the direct result of the findings of a previous project. (In the security policy example, for instance, it was possible that the project would reveal not only that policies were not enforced but that violations were not even being reported, a situation requiring exploration.) New measurement projects can also result as security personnel become more comfortable using GQM to develop their metrics and project sponsors become more impressed with the results. In these cases, previous project goals and questions can act as inspiration for new metrics or as easily modified templates to apply to other scenarios.

Table 2-2. GQM Project Definition Template (Security Policy Enforcement)

Managing a metrics catalog does not require any special tools, although you can get as sophisticated as you want. Simple capture of GQM templates for each measurement project in a central archive for use by the security staff is one way of ensuring that everyone’s work can be reused and recycled. More sophisticated approaches to metrics cataloging might include building databases that permit more robust links between goals and metrics. Collaboration technologies such as wikis are also a good fit for the metrics catalog, because they can be set up to allow metrics users to add content, comment on experiences with measurement projects, and dynamically grow the metrics program around a central repository of security-related data.
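As a rough illustration of the "links between goals and metrics" idea, the sketch below builds a small in-memory catalog that maps each metric back to every goal that relies on it. It is a minimal sketch, not a recommended tool: the function names, the goal names, and the question wording are my own assumptions, and a real catalog would probably live in a database or wiki rather than a dictionary.

```python
from collections import defaultdict


def build_catalog(projects):
    """Map each metric to the sorted list of goals that rely on it.

    `projects` maps a goal name to a dict of {question: [metric, ...]}.
    """
    catalog = defaultdict(set)
    for goal, questions in projects.items():
        for metrics in questions.values():
            for metric in metrics:
                catalog[metric].add(goal)
    return {metric: sorted(goals) for metric, goals in catalog.items()}


def shared_metrics(catalog):
    """Return metrics used by more than one goal, candidates for reuse."""
    return sorted(m for m, goals in catalog.items() if len(goals) > 1)


# Hypothetical projects; the goal and question wording is illustrative only.
projects = {
    "Policy enforcement": {
        "Are users aware of the policy?": [
            "Number of user acknowledgements of the policy",
            "Number of awareness activities",
        ],
    },
    "Policy process efficiency": {
        "Is the policy easy to find and use?": [
            "Number of policy documents or locations",
            "Number of user acknowledgements of the policy",
        ],
    },
}

catalog = build_catalog(projects)
```

Here `shared_metrics(catalog)` returns the one metric that serves both goals, which is the reuse pattern Figure 2-1 describes.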

More Security Uses for GQM

I have already outlined how you might use GQM to develop goal-driven metrics for a particular project involving security policy enforcement. GQM is applicable to just about any situation in which you want to measure the security environment against some set of goals or objectives. The only limits are the ability of the organization to define specific goals and to commit resources to measurement projects. I will discuss detailed security measurement projects, including what to do after you have collected your metrics data, in later chapters. For now, we’ll look at how GQM lets you build defined goals, questions, and metrics for a number of security measurement problems.

Measuring Security Operations

Measuring the day-to-day systems and activities that make up our security and data protection programs is perhaps the most ubiquitous activity of security professionals. We measure things so that we know what is going on, to determine whether immediate fires must be extinguished, and to demonstrate that we are earning our keep. GQM provides a way to structure and standardize operational security measurements. In many cases, this sort of data is already being collected, but applying GQM to the problem ensures that metrics do not end up “orphans” that are unconnected to, or unaligned with, specific security goals.

If you have metrics for which you collect data, but they are not tied to specific objectives, GQM can provide the basis for a “ground-up” thought exercise as you ask yourself what the data actually supports. If you can’t answer that question, even the most “common sense” data starts to look suspect.
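The ground-up exercise can start with something as simple as listing which collected metrics are not attached to any goal. The sketch below assumes you can write down two things: the metrics you currently collect and a mapping of goals to the metrics they use. All names in it are hypothetical.

```python
def find_orphans(collected, goal_metrics):
    """Return collected metrics that no goal currently relies on."""
    linked = set()
    for metrics in goal_metrics.values():
        linked.update(metrics)
    return sorted(set(collected) - linked)


# Hypothetical inventory of what is being collected today.
collected = [
    "Firewall log volume",
    "Number of awareness activities",
    "Patch count",
]
# Hypothetical goal-to-metric mapping taken from existing GQM templates.
goal_metrics = {
    "Policy enforcement": ["Number of awareness activities"],
}

orphans = find_orphans(collected, goal_metrics)
```

An orphan is not automatically useless. It is simply a metric for which the question "what does this data support?" has not yet been answered.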

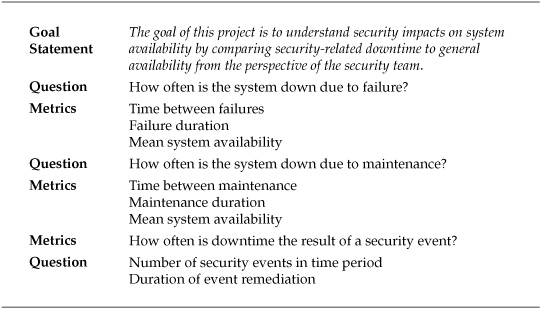

Example: Security-Related Downtime

Understanding how long your systems are up and available to users is a common IT metric. Understanding how security impacts availability is also important, particularly when you need to compare security to other IT challenges. Table 2-3 illustrates an example project for measuring security-related downtime.

Table 2-3. GQM Project for Security-Related Downtime

This scenario demonstrates the importance of the perspective component of the GQM template. For the security team, understanding how much impact on general availability results from security-related issues would be important. But from the perspective of a system user, downtime is downtime. Users usually don’t care that they are grounded as a result of a security problem, a misconfiguration, or the fact that Bob accidentally unplugged the wrong box—they just want the system back up.
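The two perspectives can be computed from the same outage records. The sketch below is a simplified illustration under my own assumptions: each outage is logged with a duration and a flag saying whether it was security related, and `period_minutes` is the total scheduled service time for the period. The record layout and the sample figures are hypothetical and are not taken from Table 2-3.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outage:
    system: str
    minutes: float
    security_related: bool


def downtime_summary(outages, period_minutes):
    """Summarize downtime from the user and the security perspectives."""
    total = sum(o.minutes for o in outages)
    security = sum(o.minutes for o in outages if o.security_related)
    return {
        # User perspective: downtime is downtime, whatever the cause.
        "availability": 1 - total / period_minutes,
        # Security perspective: how much of the downtime security caused.
        "security_minutes": security,
        "security_share": security / total if total else 0.0,
    }


# Hypothetical outage log for one 30-day period (43,200 minutes).
outages = [
    Outage("mail", 120, security_related=True),
    Outage("mail", 60, security_related=False),
    Outage("mail", 20, security_related=False),
]

summary = downtime_summary(outages, period_minutes=30 * 24 * 60)
```

The availability figure is what a user would care about, while the security share is what lets the security team compare its impact against other IT causes.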

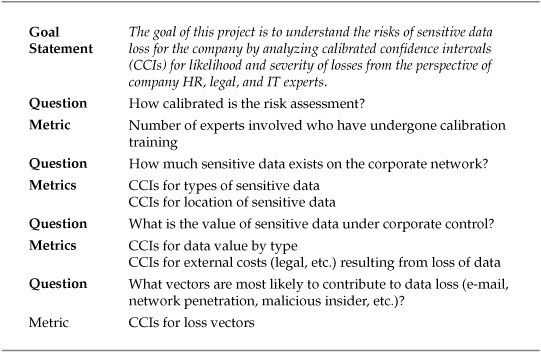

General Risk Assessment for Data Loss Prevention

I spent a bit of time in this chapter and the last critiquing general risk assessments as a measurement tool. But I do not believe that these assessments are as completely useless as some critics would contend. The challenge is to make them better; so it makes sense to adapt GQM to the challenge as a way of getting some closure on my arguments. A simplified example of a GQM project involving general risk assessment for data loss prevention (DLP) is illustrated in Table 2-4.

Confidence intervals and the calibration of expert judgments are analytical techniques that allow you to move away from the less-precise ranking scales (low–high, 1–10) often employed in security risk assessments. Later chapters describe in detail how to apply these techniques to security measurement projects.
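As a small preview, one simple way to check calibration is to ask an expert for interval estimates on quantities whose true values are known, and then count how often the true value lands inside the stated interval. The sketch below assumes the expert was asked for 90 percent confidence intervals, so a well-calibrated expert would score close to 0.9. The function name and all the figures are hypothetical.

```python
def hit_rate(intervals, actuals):
    """Fraction of actual values that fall inside the stated intervals."""
    if len(intervals) != len(actuals):
        raise ValueError("intervals and actuals must have the same length")
    if not intervals:
        raise ValueError("at least one estimate is required")
    hits = sum(
        1
        for (low, high), actual in zip(intervals, actuals)
        if low <= actual <= high
    )
    return hits / len(intervals)


# Hypothetical 90% confidence intervals from one expert, with known answers.
intervals = [(10, 20), (100, 200), (1, 5), (50, 60), (0, 1)]
actuals = [15, 250, 3, 70, 0.5]

rate = hit_rate(intervals, actuals)
```

With these figures, three of the five true values fall inside the stated ranges. A rate that far below 0.9 would suggest the intervals are too narrow, although five questions are far too few to draw a firm conclusion.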

Measuring Compliance to a Regulation or Standard

Metrics for daily operations are somewhat easier to grasp and are usually directly supported by information produced either by the systems under management or through well-understood metrics such as uptime or throughput. Measuring other environmental factors, such as regulatory compliance, challenges security managers to create metrics for something conceptual that cannot be directly observed (“compliance”) by identifying empirical measurements they can use to find answers. In the case of regulatory controls, this can be accomplished by understanding the requirements promulgated under a particular regulatory framework and extrapolating compliance by measuring how well those requirements are met.

Table 2-4. GQM Project for General DLP Risk Assessment

Compliance to Health Insurance Portability and Accountability Act Using NIST SP 800-66 Guidance

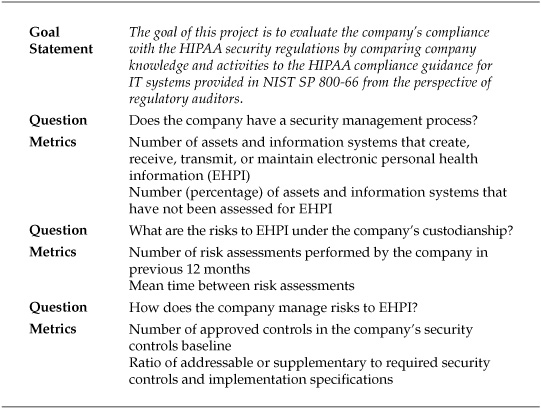

The Health Insurance Portability and Accountability Act (HIPAA) is a U.S. law that mandates, among other things, how personally identifiable healthcare information must be protected by healthcare entities covered under the law. Enforced through a series of regulations, including specific regulatory requirements for IT security, HIPAA requires covered entities to undertake a number of activities to achieve compliance. The U.S. National Institute of Standards and Technology (NIST) has developed a special publication, SP 800-66, that provides guidance for meeting these compliance requirements in language that is easier to understand than the formal legal jargon found in the law and accompanying regulations. A possible GQM project for HIPAA compliance is illustrated in Table 2-5.

Table 2-5. GQM Project for HIPAA Compliance Using NIST SP 800-66

HIPAA and NIST SP 800-66 have too many requirements to complete the entire template in Table 2-5. But the structure of GQM would allow you to create a complete template for the entire SP 800-66 guidance. Or you could choose to divide HIPAA requirements into smaller subprojects based on different aspects of the regulation (policy requirements versus technology requirements, for instance). The flexibility of GQM allows for either method to result in a metrics catalog that is tightly aligned with the overall goals in a formally documented way.
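To illustrate the subproject idea, the sketch below scores compliance separately for each group of requirements. It is only a sketch under stated assumptions: the requirement identifiers are invented placeholders, not real SP 800-66 or HIPAA citations, and treating each requirement as simply met or not met is a simplification you may not want in practice.

```python
from collections import defaultdict


def compliance_by_group(requirements):
    """Return the fraction of requirements met within each group.

    `requirements` is an iterable of (group, requirement_id, met) tuples.
    """
    met = defaultdict(int)
    total = defaultdict(int)
    for group, _requirement_id, is_met in requirements:
        total[group] += 1
        if is_met:
            met[group] += 1
    return {group: met[group] / total[group] for group in total}


# Placeholder identifiers; these are NOT real SP 800-66 requirement numbers.
requirements = [
    ("policy", "POL-1", True),
    ("policy", "POL-2", False),
    ("technology", "TECH-1", True),
    ("technology", "TECH-2", True),
    ("technology", "TECH-3", True),
    ("technology", "TECH-4", False),
]

scores = compliance_by_group(requirements)
```

Keeping the groups separate preserves the link back to each subproject's goal, instead of collapsing everything into a single compliance number.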

Measuring People and Culture

To close out these introductory examples, let’s explore how you can use GQM to create metrics for elements of your security environment that you may have previously thought were relatively unmeasurable, such as people, behavior, or motivation.

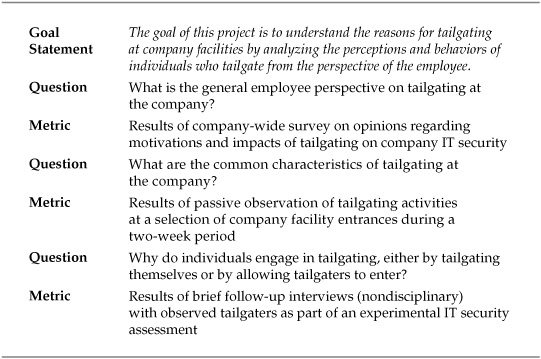

Measuring Tailgating Behavior and Motivation

My security experiences include physical IT security assessments, and in these situations I’ve observed a lot of tailgating (people using a secured entrance without authenticating by following another person who authenticates properly). If weak passwords are one of the most common logical banes of the security manager’s existence, people tailgating into facilities has to be the physical counterpart. I’ve tailgated into sensitive buildings while passing and reading large “Don’t Allow Tailgaters!” signs, as my accommodating new friend and I crossed the threshold. But I’ve always found it curious that, when asked why this occurs, organizations tend to throw up their hands. “It’s just something you have to deal with,” is a common reply. “Who knows why people do it?” is another. So the problem gets written off as, if not unsolvable, then at least not measurable, and effort goes into finding better technical solutions or making the sign I read going in even larger (and maybe neon). As a social scientist, that strikes me as deliberately ignoring a lot of available empirical data.

Table 2-6 offers a possible GQM project for reclaiming some of that unknown information.

This project, of course, requires a bit of unorthodox thinking. Some of my clients have been reluctant to confront tailgaters at the time of the infraction, because this can be perceived as a disciplinary action or an interrogation. Yet at the same time, organizations recognize that if they cannot control their physical perimeters, they cannot hope to achieve effective information security.

Part of the problem, one that is not addressed in this project, is that most organizations have not measured the loss associated with physical breaches of IT security (another opportunity for metrics excellence!), so the full extent of the problem is unclear. If the organization knew it was losing hundreds of thousands of dollars due to physical breaches, it might decide it was worth confronting a few people on why they are behaving in this way. And this type of experiment need not be hostile. Often, in academic research, a small reward is given to survey or experiment participants. Tailgaters in this project could be assured that the interview is not disciplinary in nature, and then given a $10 gift card as proof that their input is valued, even if the infraction is not.

Table 2-6. GQM Project for Analyzing Tailgating Behaviors

My physical security clients recognized that their awareness campaigns were usually fairly ineffective (yet often expensive—neon signs do not grow on trees, after all). Understanding the real motivations for a person’s behavior can provide insights into how to manage that behavior more successfully and can potentially improve the efficiency and return on investment of the security program in the process.

Applying GQM to Your Own Security Measurements

The GQM model does not relieve security professionals of their responsibility to understand what they are trying to accomplish. It is not a magic black box that will spit out good metrics from garbage inputs. Instead, GQM provides a logical and structured process for thinking about security, translating those thoughts into requirements, and then developing the data necessary both to document and meet requirements. GQM is a conceptual tool that reminds me of mind-mapping software. It does not give you ideas, but it helps you organize and structure your ideas in a way that allows them to be more valuable and productive.

You might try to apply GQM to some of your current security projects to determine whether it enhances your perspective on what you are trying to accomplish. At the least, GQM should help you to translate your goals into measurement activities and data in a systematic way and to document that process so that your projects are more precise and success is easier to evaluate. The metrics you create using GQM are the first step in, and the engine that drives, a larger framework for IT security improvement, which is discussed in the next two chapters.

Summary

Debates exist within the IT security metrics community as to what constitutes a “good” metric, and many measurement proponents believe that only quantitative metrics are suitable or adequate for measuring security. But measurement has a number of definitions, and not all of them depend on using numbers. Measurement provides social as well as scientific benefits and can be defined as the judging of the qualities of a thing against accepted standards that may or may not be quantitative.

Rather than deciding whether a metric is good or bad, quantitative or qualitative, security professionals should be more concerned with whether their metrics meet the following goals:

They are well understood.

They are used.

They provide value and insight.

Arguments over quantitative versus qualitative metrics tend to ignore the fact that numbers also require interpretation and standards, and can be as misleading as any subjective statement of opinion when not properly presented or understood. These different types of measurement also address different questions. Who, what, when, and where questions can be more easily answered using quantitative metrics than questions of how and why.

When evaluating your security metrics program, begin by looking at the questions that you want to answer and then choose the best metrics (within your resource limits) to provide data and insight. These metrics, whether qualitative or quantitative, should be supported by empirical data, based upon direct observation of the phenomena at hand. This may require you to rethink what you first believed you were observing.