Databases Versus File Systems

It ain’t so much the things we don’t know that get us in trouble. It’s the things we know that ain’t so.

—Artemus Ward (William Graham Sumner), American Writer and Humorist, 1834-1867

Perfecting oneself is as much unlearning as it is learning

—Edsgar Dijkstra

If you already have a background in data processing with traditional file systems, the first things to unlearn are

(0) Databases are not file sets.

(1) Tables are not files.

(2) Rows are not records.

(3) Columns are not fields.

(4) Values in RDBMS are scalar, not structured (arrays, lists, meta-data).

Do not feel ashamed of getting stuck in a conceptual rut; every new technology has this problem.

The US standard railroad gauge (distance between the rails) is 4 ft, 8.5 in. This gauge is used because the English built railroads to that gauge and US railroads were built by English expatriates.

Why did the English build railroads to that gauge? Because the first rail lines were built by the same people who built the pre-railroad tramways, and that’s the gauge they used. Why did those wheelwrights use that gauge then? Because the people who built the horse-drawn trams used the same jigs and tools that they used for building wagons, which used that wheel spacing.

Why did the wagons use that odd wheel spacing? For the practical reason that any other spacing would break an axle on some of the old, long distance roads, because this is the measure of the old wheel ruts.

So who built these old rutted roads? The first long distance roads in Europe were built by Imperial Rome for their legions and used ever since. The initial ruts were first made by Roman war chariots, which were of uniform military issue. The Imperial Roman chariots were made to be just wide enough to accommodate the back-ends of two war horses (this example is originally due to Professor Tom O’Hare, Germanic Languages, University of Texas at Austin; email: [email protected]).

This story does not end there, however. Look at a NASA Space Shuttle and the two big booster rockets attached to the sides of the main fuel tank. These are solid rocket boosters or SRBs. The SRBs are made by Thiokol at their factory at Utah. The engineers who designed the SRBs might have preferred to make them a bit fatter, but the SRBs had to be shipped by train from the factory to the launch site in Florida. The railroad line from the factory runs through a tunnel in the mountains and the SRBs have to fit through that tunnel. The tunnel is slightly wider than the railroad track. So, the major design feature of what is arguably the world’s most advanced transportation system was determined by the width of a horse’s ass.

In a similar fashion, modern data processing began with punch cards (Hollerith cards if you are really old) used by the Bureau of the Census. Their original size was that of a US dollar bill. This was set by their inventor, Herman Hollerith, because he could get furniture to store the cards from the US Treasury Department, just across the street. Likewise, physical constraints limited each card to 80 columns of holes in which to record a symbol.

The influence of the punch card lingered on long after the invention of magnetic tapes and disk for data storage. This is why early video display terminals were 80 columns across. Even today, files which were migrated from cards to magnetic tape files or disk storage still use 80 column physical records.

But the influence was not just on the physical side of data processing. The methods for handling data from the prior media were imitated in the new media.

Data processing first consisted of sorting and merging decks of punch cards (later, sequential magnetic tape files) in a series of distinct steps. The result of each step feed into the next step in the process. Think of the assembly line in a factory.

Databases and RDBMS in particular are nothing like the file systems that came with COBOL, FORTRAN, C, BASIC, PL/I, Java, or any of the procedural and OO programming languages. We used to say that SQL means “Scarcely Qualifies as a Language” because it has no I/O of its own. SQL depends on a host language to get and receive data to and from end users.

1.1 The Schema Statement

Programming languages are usually based on some underlying model; if you understand the model, the language makes much more sense. For example, FORTRAN is based on algebra. This does not mean that FORTRAN is exactly like algebra. But if you know algebra, FORTRAN does not look all that strange to you the way that LISP or APL would. You can write an expression in an assignment statement or make a good guess as to the names of library functions you have never seen before.

Likewise, COBOL is based on English narratives of business processes. The design of COBOL files (and almost every other early programming language) was derived from paper forms. The most primitive form of a file is a sequence of records that are ordered within the file and referenced by physical position.

You open a file (think file folder or in-basket on your desk) and then read a first record (think of the first paper form on the stack), followed by a series of next records (process the stack of paperwork, one paper form at a time) until you come to the last record to raise the end-of-file condition (put the file folder in the out-basket). Notice the work flow:

1. The records (paper forms) have to physically exist to be processed. Files are not virtual by nature. In fact, this mindset is best expressed by a quote from Samuel Goldwyn “a verbal contract ain’t worth the paper it is written on!”

2. You navigate among these records and perform actions, one record at a time. You can go backward or forward in the stack but nowhere else.

3. The actions you take on one file (think of a clerk with rubber stamps) have no effect on other files that are not in the same program. The files are like file folders in another in-basket.

4. Only programs (the clerk processing the paperwork) can change files. The in-basket will not pick up a rubber stamp and mark the papers by itself.

The model for SQL is data kept in abstract sets, not in physical files. The “unit of work” in SQL is the whole schema, not individual tables. This is a totally different model of work! Sets are those mathematical abstractions you studied in school. Sets are not ordered and the members of a set are all of the same type. When you do an operation on a set, the action happens “all at once” to the entire membership. That is, if I ask for the subset of odd numbers from the set of positive integers, I get all them back as a single set. I do not build the set of odd numbers by sequentially inspecting one element at a time. I define odd numbers with a rule—“If the remainder is ± 1 when you divide the number by 2, it is odd”—that could test any integer and classify it. Parallel processing is one of many, many advantages of having a set-oriented model. In RDBMS, everything happens all at once.

The Data Declaration Language (DDL) in SQL is what defines and controls access to the database content and maintains the integrity of that data for all programs that access the database. Data in a file is passive. It has no meaning until a program reads it. In COBOL, each program has a DATA DIVISION; in FORTRAN, each program has the FORMAT/READ statements; in Pascal, there is a RECORD declaration that serves the same purpose. Pick your non-SQL language.

These constructs provide a template or parsing rules to overlay upon the records in the file, split them into fields and get the data into the host program. Each program can split up the sequence of characters in a record anyway it wishes name and name the fields as it wished. This can lead to “job security” programming; I worked in a shop in the 1970’s where one programmer would pick a theme (nations of the world, flowers, etc.) and name his fields “Afghanistan” or “Chrysanthemum” or worse. Nobody could read his code, so we could not fire him.

Likewise, the Data Control Language (DCL) controls access to the schema objects that a user can create. Standard SQL divides the database users into USER and ADMIN roles. These schema objects require ADMIN privileges to be created, altered, or dropped (CREATE, ALTER, DROP, etc.). Those with USER privileges can invoke the schema objects with UPDATE, DELETE, INSERT, SELECT, and other statements that work with the data but not the structure of the schema.

I do not spend much time on DCL in this book, since I deal with the USER (programmer), but the basic model is that the ADMIN level can grant or revoke privileges on schema objects to USERs. This USER can also grant or revoke his privileges to other users at lower levels, if his admin allowed it. If this chain of privileges is broken at any level, the users at lower levels no longer have those privileges. The principle is that access privileges flow downhill.

There is no such access model in a file system. Traditionally, access control is external to a file system at the operating system level. Neither SQL nor file systems can be concerned with security; encryption, elaborate passwords, etc. are all external to the data.

There is a CREATE SCHEMA statement defined in the standards which brings an entire schema into existence all at once. In practice, each product has very different utility programs to allocate physical storage and define a schema. Much of the proprietary syntax is concerned with physical storage allocations.

A schema must have a name and a default character set, usually ASCII or a simple Latin alphabet as defined in the ISO Standards. There is an optional AUTHORIZATION clause that holds a < schema authorization identifier > for security. After that the schema is a list of schema elements:

< schema element > ::=

< domain definition > | < table definition > | < view definition >

| < grant statement > | < assertion definition >

| < character set definition >

| < collation definition > | < translation definition >

A schema is the skeleton of an SQL database; it defines the structures of the schema objects and the rules under which they operate. The data is the meat on that skeleton.

The only data structure in SQL is the table. Tables can be persistent (base tables), used for working storage (temporary tables), or virtual (VIEWs, common table expressions, and derived tables). The differences among these types are in implementation, not performance. One advantage of having only one data structure is that the results of all operations are also tables—you never have to convert structures, write special operators, or deal with any irregularity in the language.

The < grant statement > has to do with limiting access by users to only certain schema elements. The < assertion definition > is still not widely implemented yet, but it is like a constraint that applies to the schema as a whole. Finally, the < character set definition >, < collation definition >, and < translation definition > deal with the display of data. We are not really concerned with any of these schema objects; they are usually set in place by the DBA (Database Administrator) for the users and we mere programmers do not get to change them.

Conceptually, a table is a set of zero or more rows, and a row is a set of one or more columns. This hierarchy is important; actions apply at the schema, table, row, or column level. For example, the DELETE FROM statement removes rows, not columns, and leaves the base table in the schema. You cannot delete a column from a row.

Each column has a specific data type and constraints that make up an implementation of an abstract domain. The way a table is physically implemented does not matter, because you access it only with SQL. The database engine handles all the details for you and you never worry about the internals as you would with a physical file. In fact, almost no two SQL products use the same internal structures.

There are two common conceptual errors made by programmers who are accustomed to file systems or PCs. The first is thinking that a table is a file; the second is thinking that a table is a spreadsheet. Tables do not behave like either one of these, and you will get surprises if you do not understand the basic concepts.

It is easy to imagine that a table is a file, a row is a record, and a column is a field. This is familiar and when data moves from SQL to the host language, it has to be converted into host language data types and data structures to be displayed and used. The host languages have file systems built into them.

The big differences between working with a file system and working with SQL are in the way SQL fits into a host program. Using a file system, your programs must open and close files individually. In SQL, the whole schema is connected to or disconnected from the program as a single unit. The host program might not be authorized to see or manipulate all of the tables and other schema objects, but that is established as part of the connection.

The program defines fields within a file, whereas SQL defines its columns in the schema. FORTRAN uses the FORMAT and READ statements to get data from a file. Likewise, a COBOL program uses a Data Division to define the fields and a READ to fetch it. And so on for every 3GL’s programming, the concept is the same, though the syntax and options vary.

A file system lets you reference the same data by a different name in each program. If a file’s layout changes, you must rewrite all the programs that use that file. When a file is empty, it looks exactly like all other empty files. When you try to read an empty file, the EOF (end of file) flag pops up and the program takes some action. Column names and data types in a table are defined within the database schema. Within reasonable limits, the tables can be changed without the knowledge of the host program.

The host program only worries about transferring the values to its own variables from the database. Remember the empty set from your high school math class? It is still a valid set. When a table is empty, it still has columns but has zero rows. There is no EOF flag to signal an exception, because there is no final record.

Another major difference is that tables and columns can have constraints attached to them. A constraint is a rule that defines what must be true about the database after each transaction. In this sense, a database is more like a collection of objects than a traditional passive file system.

A table is not a spreadsheet, even though they look very much alike when you view them on a screen or in a printout. In a spreadsheet you can access a row, a column, a cell, or a collection of cells by navigating with a cursor. A table has no concept of navigation. Cells in a spreadsheet can store instructions and not just data. There is no real difference between a row and a column in a spreadsheet; you could flip them around completely and still get valid results. This is not true for an SQL table.

The only underlying commonality is that a spreadsheet is also a declarative programming language. It just happens to be a nonlinear language.

The schema model is that the data is separate from the programs using it. The database system maintains the integrity of that data. This has a lot of implications. There must be universal names for data elements; a uniform set of data element rules enforced by the “active storage management” system are part of the database.

Data in files has no integrity constraints, default values, or relationships among other files or even in the same file.

Another conceptual difference is that a file is usually data that deals with a whole business process. A file has to have enough data in itself to support applications for that one business process. Files tend to be “mixed” data which can be described by the name of the business process, such as “The Payroll file” or something like that. Tables can be either entities or relationships within a business process. This means that the data which was held in one file is often put into several tables. Tables tend to be “pure” data which can be described by single words. The payroll would now have separate tables for Personnel, Projects, and the relationship between those two things, timecards.

1.2 Tables as Entities

An entity is a physical or conceptual “thing” which has meaning by itself. A person, a sale, or a product would be an example. In a relational database, an entity is defined by its attributes, which are shown as values in columns in rows in a table.

To remind users that tables are sets of entities, ISO-11179 Standard likes to use collective or plural nouns that describe the set of those entities for the names of tables. Thus “Employee” is a bad name because it is singular; “Employees” is a better name because it is plural; “Personnel” is best because it is collective. Imagine the characters of DILBERT!

If you have tables with exactly the same structure, then they are sets of the same kind of elements. But you should have only one set for each kind of data element! Files, on the other hand, were physically separate units of storage which could be alike—each tape or disk file represents a step in the PROCEDURE, such as moving from raw data, to edited data, and finally to archived data. In SQL, this should be a status flag in a table.

1.3 Tables as Relationships

A relationship is shown in a table by columns which reference one or more entity tables. It can include attributes of the relationships.

Without the entities, the relationship has no meaning, but the relationship can have attributes of its own. For example, a show business contract might have an agent, an employer, and a talent. The method of payment (percentage of sales, foreign royalties, etc.) is an attribute of the contract itself and not of any of the three parties. This means that a column can have a REFERENCE to other tables. Files and fields do not do that.

1.3.1 E-R Diagrams

Peter Chen’s 1976 paper in ACM Transactions on Database Systems introduced the most popular variant of the idea of Entity-Relationship modeling and diagramming. The advantage of the simple version of this diagramming technique is that it is fast, easy, and can be read without special training. This makes it a good mind tool for explaining an RDBMS schema to users … or yourself.

The symbols are rectangles for entities, diamonds for relationships, and lines to connect them. The rules are easy to understand; each relationship connects to its entities; no two entities connect directly to each other; and no two relationships directly connect to each other.

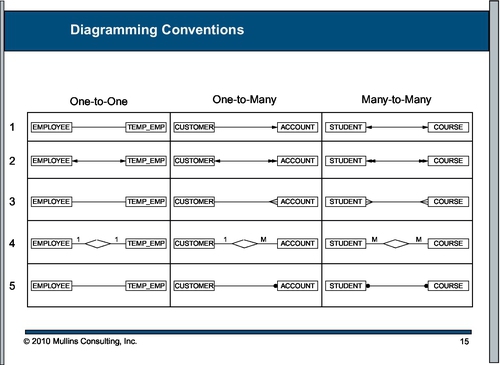

The connectors explain the relationship. Initially, we use straight lines just to show something is there. After that, we can add terminator symbols to the lines. This is easier to show with a diagram than with a narrative.

Cardinality constraints are expressed with graphic symbols for the minimal and maximal elements.

◆ A vertical line means one member of the entity set has to be involved (think one).

◆ A circle means no member of the entity set has to be involved (think zero).

◆ A “crow’s foot” means many members of the entity set have to be involved (think of a small child holding up bunch of finger, saying “many!”). This is used in Barker’s Notation, SSADM, and Information Engineering.

This diagram says that “at least one or more authors write zero or more books” in the data model. And yes, you can be listed as an author without doing anything on a particular book. In the book business, “Gloria Glamor’s True Story” as told to Ghenta Ghostwriter will list both Gloria and Ghenta as authors.

This diagram says “one or more authors must write exactly one book, no more no less” in the notation.

There are many E-R diagramming tools with different graphic conventions, free and commercial, but my point is that even a simple “pen and paper” tool helps. The “slash-circle-crows-foot” maps easily into SQL’s REFERENCES clauses declarative referential integrity (DRI) constraints.

Chen proposed the following “rules of thumb” for mapping natural language descriptions into ER diagrams:

1.4 Rows Versus Records

To reiterate, rows are not records. A record is defined in the application program which reads it; a row is named and defined in the DDL of the database schema and not by a program at all. The names of the fields are in the READ or INPUT statements of the application. Likewise, the PHYSICAL order of the fields in the READ statement is vital (“READ a,b,c;” is not the same as “READ c, a, b;”; but “SELECT a,b,c FROM Foobar;” is the same data as “SELECT c, a, b FROM Foobar;” in SQL).

All empty files look alike; they are a directory entry in the operating system with a name and a length of zero bytes of storage. But empty tables still have columns, constraints, security privileges, and other structures, even though they have no rows.

This is in keeping with the set theoretical model, in which the empty set is a perfectly good set. The difference between SQL’s set model and standard mathematical set theory is that set theory has only one empty set, but in SQL each table has a different structure, so they cannot be used in places where nonempty versions of themselves could not be used.

Another characteristic of rows in a table is that they are all alike in structure and they are all the “same kind of thing” in the model. In a file system, records can vary in size, data types, and structure by having flags in the data stream that tell the program reading the data how to interpret it. The most common examples are Pascal’s variant record, C’s struct syntax, and COBOL’s OCCURS clause.

The OCCURS keyword in COBOL and the VARIANT records in Pascal have a number which tells the program how many time a subrecord structure is to be repeated in the current record.

Unions in “C” are not variant records but variant mappings for the same physical memory. For example:

union x {int ival; char j[4];} mystuff;

defines mystuff to be either an integer (which are 4 bytes on most C compilers, but this code is nonportable) or an array of 4 bytes, depending on whether you say mystuff.ival or mystuff.j[0];

But even more than that, files often contained records which were summaries of subsets of the other records—so-called control break reports. There is no requirement that the records in a file be related in any way—they are literally a stream of binary data whose meaning is assigned by the program reading them.

1.5 Columns Versus Fields

A field within a record is defined by the application program that reads it. A column in a row in a table is defined by the database schema. The data types in a column are always scalar.

The order of the application program variables in the READ or INPUT statements is important because the values are read into the program variables in that order. In SQL, columns are referenced only by their names. Yes, there are shorthands like the SELECT * clause and INSERT INTO < table name > statements which expand into a list of column names in the physical order in which the column names appear within their table declaration, but these are shorthands which resolve to named lists.

The use of NULLs in SQL is also unique to the language. Fields do not support a missing data marker as part of the field, record, or file itself. Nor do fields have constraints which can be added to them in the record, like the DEFAULT and CHECK() clauses in SQL.

Files are pretty passive creatures and will take whatever an application program throws at them without much objection. Files are also independent of each other simply because they are connected to one application program at a time and therefore have no idea what other files look like.

A database actively seeks to maintain the correctness of all its data. The methods used are triggers, constraints, and DRI.

DRI says, in effect, that data in one table has a particular relationship with data in a second (possibly the same) table. It is also possible to have the database change itself via referential actions associated with the DRI.

For example, a business rule might be that we do not sell products which are not in inventory.

This rule would be enforced by a REFERENCES clause on the Orders table which references the Inventory table and a referential action of ON DELETE CASCADE. Triggers are a more general way of doing much the same thing as DRI. A trigger is a block of procedural code which is executed before, after, or instead of an INSERT INTO or UPDATE statement. You can do anything with a trigger that you can do with DRI and more.

However, there are problems with triggers. While there is a standard syntax for them since the SQL-92 standard, most vendors have not implemented it. What they have is very proprietary syntax instead. Secondly, a trigger cannot pass information to the optimizer like DRI. In the example in this section, I know that for every product number in the Orders table, I have that same product number in the Inventory table. The optimizer can use that information in setting up EXISTS() predicates and JOINs in the queries. There is no reasonable way to parse procedural trigger code to determine this relationship.

The CREATE ASSERTION statement in SQL-92 will allow the database to enforce conditions on the entire database as a whole. An ASSERTION is like a CHECK() clause, but the difference is subtle. A CHECK() clause is executed when there are rows in the table to which it is attached.

If the table is empty, then all CHECK() clauses are effectively TRUE. Thus, if we wanted to be sure that the Inventory table is never empty, and we wrote:

CREATE TABLE Inventory ( . . . CONSTRAINT inventory_not_empty CHECK ((SELECT COUNT(*) FROM Inventory) > 0), . . . );

it would not work. However, we could write:

CREATE ASSERTION Inventory_not_empty CHECK ((SELECT COUNT(*) FROM Inventory) > 0);

and we would get the desired results. The assertion is checked at the schema level and not at the table level.

I tell students that most of the work in SQL is in the DDL and not the DML. Unlike a field, a column can have all of these constraints on your data and keep them in one place, one way, and one time.