In the first part of this chapter, we are going to learn how to troubleshoot various Impala issues in different categories. We will use Impala logging to understand more about Impala execution, query processing, and possible issues. The objective of this chapter is to give you the critical information you need about Impala troubleshooting and log analysis, so that you can manage the Impala cluster effectively and keep it useful for your team and yourself. Let's start with troubleshooting various problems while managing the Impala cluster.

Impala runs on DataNodes in a distributed clustered environment. So when we consider the potential issues with Impala, we also need to think about the problems within the platform itself that can impact Impala. In this section, we will cover most of these issues along with query, connectivity, and HDFS-specific issues.

If you find that Impala is not performing as expected, and you want to make sure it is configured correctly, it is best to check the Impala configuration. With Impala installed using Cloudera Manager, you can use the Impala debug web server at port 25000 to check the Impala configuration. Here is a small list describing what you could see in the Impala debug web server:

In Chapter 5, Impala Administration and Performance Improvements, we learned that enabling "block locality" helps Impala process queries faster. However, "block locality" may not be configured properly, in which case you are not taking advantage of it. To find out, check the logs for the following message:

Unknown disk id. This will negatively affect performance. Check your hdfs settings to enable block location metadata

If you see the preceding log message, it means that tracking block locality is not enabled. Therefore, configure it correctly as described in Chapter 5, Impala Administration and Performance Improvements.

We also saw in Chapter 5, Impala Administration and Performance Improvements, that native checksumming improves performance. If the following message appears in the logs, native checksumming is not enabled and needs to be configured as described in that chapter:

Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
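Checking for these two messages by hand becomes tedious on a busy cluster. The following is a minimal sketch, not an official tool, that scans log lines for the two messages quoted above. The function name `find_config_warnings` and the idea of passing the log path on the command line are assumptions made for illustration; the location of your Impala log files depends on your installation.

```python
import sys
from typing import Dict, Iterable, List

# Substrings taken from the two log messages quoted above.
KNOWN_WARNINGS: Dict[str, str] = {
    "Unknown disk id": "block locality tracking is not enabled",
    "Unable to load native-hadoop library": "native checksumming is not enabled",
}


def find_config_warnings(lines: Iterable[str]) -> List[str]:
    """Return one diagnosis for each known warning found in the log lines."""
    found: List[str] = []
    for line in lines:
        for marker, diagnosis in KNOWN_WARNINGS.items():
            if marker in line and diagnosis not in found:
                found.append(diagnosis)
    return found


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: pass the path of an Impala log file as the argument.
    with open(sys.argv[1], errors="replace") as log_file:
        for diagnosis in find_config_warnings(log_file):
            print(diagnosis)
```

An empty result only means that neither message was found in the file you scanned; it does not prove that both features are configured correctly.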

In this section, we will cover various connectivity scenarios and learn what could go wrong in each and how to troubleshoot them.

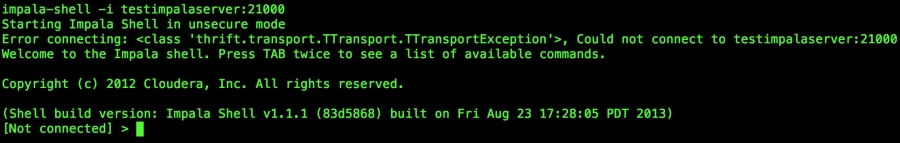

When you start the Impala shell, it tries to connect either to the host passed with the -i option or, by default, to the Impala daemon running on the local machine. If the connection cannot be established, you will see a connection error as shown in the preceding screenshot.

To troubleshoot the preceding connection problem, you can try the following options:

- Check if the hostname is correct and a connection between both machines is working. You can use `ping` or another similar utility to check the connectivity between machines.

- Make sure that the machine where the Impala shell is running can resolve the Impala hostname, and the default port 21000 (or the other configured port) is open for connectivity.

- Make sure that the firewall configuration is not blocking the connection.

- Check whether the respective process is running on the Impala daemon host machine. You can use Cloudera Manager or the `ps` command to get more information about the Impala process.
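The first two checks in this list can be scripted. The sketch below uses only Python's standard `socket` module; the helper names `can_resolve` and `port_is_open` are hypothetical, and the default port of 21000 is the one mentioned above. It cannot tell you why a port is closed (firewall, stopped daemon, wrong host), only that a TCP connection could not be made.

```python
import socket


def can_resolve(hostname: str) -> bool:
    """Return True if the hostname resolves to at least one address."""
    try:
        socket.getaddrinfo(hostname, None)
        return True
    except socket.gaierror:
        return False


def port_is_open(hostname: str, port: int = 21000, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to hostname:port succeeds."""
    try:
        with socket.create_connection((hostname, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and resolution failures.
        return False
```

Run it from the machine where the Impala shell is started, for example `port_is_open("impala-host.example.com")` with your own daemon hostname in place of the placeholder.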

Third-party applications connect to Impala through an ODBC or JDBC driver installed on the machine that is trying to reach the Impala server. The connection may fail for various reasons, which are given as follows:

- ODBC uses port 21000 by default and JDBC uses port 21050 to connect to Impala; make sure that incoming connections to these ports are working. Cloudera ODBC connector 2.0 and 2.5 use port 21050 when connecting to Impala.

- If your Impala cluster environment is secured through Kerberos or another security mechanism, use appropriate settings in the ODBC/JDBC configuration. In some cases, you may need to contact the application vendor to receive information about the problem.

- After connecting, you may find that some functions do not work over the ODBC/JDBC connection. Not all functions are supported, so you may be trying to use one that is not available through ODBC/JDBC.

- JDBC connectivity requires a specific Java Runtime depending on the JDBC version. Make sure that the machine making the JDBC connection to the Impala server has a compatible Java Runtime installed.

The first query-specific issue is a bad query. When you run a bad query in the Impala shell, the query interpreter reports what is wrong with it; when you execute a statement through an API, a detailed error is written to the log file. Besides a bad query, you may also experience the following issues:

- You might use an unsupported statement or clause in your query, which will cause a problem in query execution.

- Using an unsupported data type or a bad data transformation is another prime reason for such issues and the resulting error or log will be helpful to troubleshoot what went wrong.

- Sometimes the query is localized. This means that it is not distributed on other nodes. The problem could be that either the current node could not connect to the other nodes due to connectivity issues, or the Impala daemon is not running there. You will have to troubleshoot this issue by using general connectivity troubleshooting methods between two machines. Also, make sure Impala daemons are running with proper configuration.

- Queries could return wrong or limited results. This is possible if metadata is not refreshed in the Impala cluster. Using the `REFRESH` statement, you can sync Hive metadata to solve this problem. Also, make sure that Impala daemons are running on all the nodes.

- If you find that `JOIN` operations are failing, it is very likely that you are hitting a memory limitation. Check the Impala logs for `Out of Memory` errors to confirm this. Because a `JOIN` operation spans multiple tables, it requires a comparatively large amount of memory, so adding more memory could solve this problem.

- Your query performance could be slow. In the previous chapter, we discussed various ways to find the trouble and then expedite the query performance.

- Sometimes, when a query fails in Impala and you could not find a reason, try running the same query in Hive to see if it works there or not. If it works in Hive, it could be an Impala-specific configuration or a limitation issue.
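For the stale-metadata case in the list above, the `REFRESH` statement can be issued non-interactively. The following is a small sketch that only builds the `impala-shell` command line, using its `-i` (daemon address) and `-q` (query) options. The function name `build_refresh_command` and the table name used in the note below are hypothetical.

```python
from typing import List


def build_refresh_command(table: str, impalad: str = "localhost:21000") -> List[str]:
    """Build an impala-shell command line that runs REFRESH for one table."""
    return ["impala-shell", "-i", impalad, "-q", f"REFRESH {table}"]
```

On a machine where `impala-shell` is installed, the result could be passed to `subprocess.run(build_refresh_command("sales_db.orders"), check=True)`. Returning the command as a list rather than a single string avoids shell quoting problems with the query text.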

During the Impala installation process, an Impala username and group are created. Impala runs under this username and accesses system resources within this group. If you delete this user or group, or modify its access, Impala may behave erratically or nondeterministically. Starting Impala under the root user also affects execution, because it disables direct reading. So if you suddenly experience such issues, check the Impala user access settings and make sure that Impala is running as configured.
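A quick way to confirm that the account and group still exist is to query the local user database. The sketch below uses Python's standard `pwd` and `grp` modules (Unix only). The default names `impala` for both the user and the group are an assumption; substitute whatever names your installation created.

```python
import grp
import pwd
from typing import Dict


def account_report(user: str = "impala", group: str = "impala") -> Dict[str, bool]:
    """Report whether the user and group exist and whether the user is in the group."""
    report = {"user_exists": False, "group_exists": False, "user_in_group": False}
    try:
        user_entry = pwd.getpwnam(user)
        report["user_exists"] = True
    except KeyError:
        user_entry = None
    try:
        group_entry = grp.getgrnam(group)
        report["group_exists"] = True
    except KeyError:
        group_entry = None
    if user_entry is not None and group_entry is not None:
        # Membership holds if it is the primary group or the user is listed as a member.
        report["user_in_group"] = (
            user_entry.pw_gid == group_entry.gr_gid or user in group_entry.gr_mem
        )
    return report
```

This only inspects the account database on the machine where it runs, so it would need to be run on each Impala host. It does not check file permissions or which user the daemon process is actually running as.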

In this section, I will explain a few platform-specific issues so that, in the event that Impala execution is sporadic or not working at all, you can troubleshoot the problem and find the appropriate resolution.

Impala has two main services, Impala daemon and statestore, and both these services are configured to use internal and external ports for effective communication. This is described in the following table:

| Component | Port | Type | Service description |
|---|---|---|---|
| Impalad | 21000 | External | Frontend port to communicate with the Impala shell |
| Impalad | 21050 | External | Frontend port for ODBC 2.0 |
| Impalad | 22000 | Internal | Backend port for Impala daemons to communicate with each other |
| Impalad | 23000 | Internal | Backend port to get updates from the statestore |
| Impalad | 25000 | External | Impala web interface for monitoring and troubleshooting |
| Statestored | 24000 | Internal | Statestore listens for registration/unregistration |
| Statestored | 25010 | External | Statestore web interface for monitoring and troubleshooting |

Remember that if any of the preceding ports is misconfigured or blocked, you will experience various problems, so make sure that the port configuration is correct.
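The port table can be kept as data and used to check a host in one pass. The following sketch transcribes the default ports listed above; the names `PortEntry`, `ports_for`, and `unreachable_ports` are made up for this example, and a cluster with non-default ports would need the list adjusted.

```python
import socket
from typing import List, NamedTuple, Optional


class PortEntry(NamedTuple):
    component: str
    port: int
    kind: str  # "External" or "Internal"


# Default ports, transcribed from the table above.
IMPALA_PORTS: List[PortEntry] = [
    PortEntry("Impalad", 21000, "External"),
    PortEntry("Impalad", 21050, "External"),
    PortEntry("Impalad", 22000, "Internal"),
    PortEntry("Impalad", 23000, "Internal"),
    PortEntry("Impalad", 25000, "External"),
    PortEntry("Statestored", 24000, "Internal"),
    PortEntry("Statestored", 25010, "External"),
]


def ports_for(component: str, kind: Optional[str] = None) -> List[int]:
    """Return the ports of one component, optionally filtered by type."""
    return [
        entry.port
        for entry in IMPALA_PORTS
        if entry.component == component and (kind is None or entry.kind == kind)
    ]


def unreachable_ports(host: str, ports: List[int], timeout: float = 2.0) -> List[int]:
    """Return the ports on host that do not accept a TCP connection."""
    blocked: List[int] = []
    for port in ports:
        try:
            with socket.create_connection((host, port), timeout=timeout):
                pass
        except OSError:
            blocked.append(port)
    return blocked
```

For example, `unreachable_ports("impala-host.example.com", ports_for("Impalad", "External"))` would list the external daemon ports that cannot be reached from the current machine; the hostname is a placeholder. Internal ports are best checked from another node inside the cluster, since they may be intentionally closed to outside clients.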

Impala runs on DataNodes, which depend on the NameNode in the Hadoop environment. Various HDFS-specific issues such as permission to read or write data on HDFS, space limitation, memory swapping, or latency could impact the Impala execution. Any of these issues could introduce instability in HDFS or impact the whole cluster, depending on how serious the problem is. In this situation, you would need to work with your Hadoop administrator to resolve these problems and get Impala up and running.

Impala can load and query various kinds of datafiles stored on Hadoop. Sometimes you may receive an error while reading these datafiles, or a query request may fail. Most probably this is because either the file format is not supported, or Impala can only query that format and cannot process CREATE or INSERT requests for it. In the following table, you can see which file formats are supported and whether Impala can read and query those files:

| File type | Format type | Compression type | CREATE and INSERT supported? | Query supported? |
|---|---|---|---|---|
| Text | Unstructured | LZO | Yes | Yes |
| Avro | Structured | Snappy, GZIP, deflate, BZIP2 | No | Query only (use Hive to load file) |
| RCFile | Structured | Snappy, GZIP, deflate, BZIP2 | | Query only (use Hive to load file) |
| SequenceFile | Structured | Snappy, GZIP, deflate, BZIP2 | | Query only (use Hive to load file) |
| Parquet | Structured | Snappy, GZIP | Yes | Yes |
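When a script needs to decide whether to load data through Impala or through Hive, the table can be encoded as a simple lookup. This is an illustrative sketch only: the names `FormatSupport`, `FORMAT_SUPPORT`, and `advise` are hypothetical, and the CREATE/INSERT value is stored as `None` for RCFile and SequenceFile because that cell is blank in the table above.

```python
from typing import Dict, NamedTuple, Optional


class FormatSupport(NamedTuple):
    create_insert: Optional[bool]  # None where the table leaves the cell blank
    query: bool


# Transcribed from the file format table above.
FORMAT_SUPPORT: Dict[str, FormatSupport] = {
    "text": FormatSupport(True, True),
    "avro": FormatSupport(False, True),
    "rcfile": FormatSupport(None, True),
    "sequencefile": FormatSupport(None, True),
    "parquet": FormatSupport(True, True),
}


def advise(file_type: str) -> str:
    """Summarize what the table says about a file type."""
    support = FORMAT_SUPPORT.get(file_type.lower())
    if support is None:
        return "not listed in the table; the format may be unsupported"
    if support.create_insert:
        return "query and CREATE/INSERT supported"
    # The table marks these formats as query only.
    return "query only; use Hive to load data"
```

The lookup reflects only what the table states for the Impala version covered in this chapter, so it should be checked against the documentation for the release you are running.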