Chapter 9: A review of deep learning approaches in glove-based gesture classification

Emmanuel Ayodeleᵃ; Syed Ali Raza Zaidiᵃ; Zhiqiang Zhangᵃ; Jane Scottᵇ; Des McLernonᵃ

ᵃ School of Electronic and Electrical Engineering, University of Leeds, Leeds, United Kingdom

ᵇ School of Architecture, Planning and Landscape, Newcastle University, Newcastle, United Kingdom

Abstract

Data gloves are the optimal data acquisition devices in hand-based gesture classification. Gesture classification involves the interpretation of the acquired raw data into defined gestures by applying machine learning techniques. Recently, the application of deep learning algorithms has improved the accuracy obtained in glove-based gesture classification. This chapter will review these deep learning approaches. In particular, we analyze their current performance, advantages over classical machine learning algorithms and limitations in certain classification scenarios. Furthermore, we present other deep learning approaches that may outperform current algorithms. This chapter will provide readers with an all-encompassing review that will enable a clear understanding of the current trends in glove-based gesture classification and provide new ideas for further research.

Keywords

Data-glove; Deep learning; Gesture classification; Wearable technology

1: Introduction

Hand gestures are an important part of nonverbal communication and form an integral part of our interactions with the environment. Notably, sign languages are systems of hand gestures used by millions of deaf and hard-of-hearing people. However, sign language users often have difficulty communicating with the wider community, because most people neither understand nor use sign language. Gesture recognition and classification platforms can help by translating these gestures for those who do not understand sign language (Yang et al., 2016). In addition, hand gesture recognition can aid in monitoring the progress of patients who are recovering from stroke or living with rheumatoid arthritis (Watson, 1993). Using a gesture classification system, healthcare professionals can remotely monitor several patients at lower cost and in less time than with the traditional method of physically examining the joints of the hand. Furthermore, hand gesture classification is a vital tool in human-computer interaction: gestures can be used to control equipment in the workplace and to replace traditional input devices such as a mouse or keyboard in virtual reality applications (Iannizzotto et al., 2001; Conn and Sharma, 2016).

There are two major approaches to the classification of hand gestures. The first is the vision-based approach, which uses cameras to capture the pose and movement of the hand and algorithms to process the recorded images (Kuzmanic and Zanchi, 2007). Although this approach is popular, it is computationally intensive, because images or videos must undergo significant preprocessing to extract features such as color, pixel values, and hand shape (Rautaray and Agrawal, 2015). In addition, privacy concerns limit the widespread application of this approach: users are reluctant to accept cameras in their personal space, particularly in applications that require constant monitoring of the hands (Caine et al., 2006). Camera-based approaches also restrict the user’s movement to the camera’s field of view. In applications where users perform their day-to-day activities (e.g., progress monitoring), multiple cameras are required to track the user’s movement continuously, which significantly increases the cost of the system.

In contrast, the glove-based approach involves the use of data gloves that record the flexion of the finger joints. This method is less computationally intensive because the glove’s sensory data is more easily processed than recorded images. In particular, the sensory data of a glove is simply the intensity of light (fiber-optic sensors), electrical resistance/capacitance (conductive strain sensors), or 3-dimensional positional coordinates (inertial sensors) (5DT, 2020; CyberGlove II, 2020; Lin et al., 2014). This means that researchers can classify the sensory data with little or no preprocessing. Moreover, a data glove allows continuous recording of the hand gestures without restricting the movement of the user. Furthermore, data gloves can be easily constructed with cheap off-the-shelf components such as bend sensors and a textile glove, which acts as a support structure. These advantages motivate a review of data glove-based gesture classification.

Gesture classification is the prediction of the hand gesture from the glove’s sensory data. For a simple set of gestures, such as opening and closing the fist, the data can be classified easily, because the difference between the two gestures is visible in the data and the gestures are linearly separable. However, for a more complex set of gestures, such as sign language, where some gestures are nearly identical, machine learning is required to classify them accurately. In addition, dynamic gestures such as signed sentences generally require machine learning algorithms for classification.

Therefore, this chapter presents a rigorous review of glove-based gesture classification with machine learning. The application of machine learning to camera-based hand gesture classification has been reviewed (Rautaray and Agrawal, 2015), but to the best of our knowledge, glove-based gesture classification has not been reviewed since Watson’s 1993 study (Watson, 1993). This chapter therefore aims to provide a one-stop destination for researchers interested in glove-based gesture classification. Moreover, we review the application of deep learning in glove-based gesture classification, a nascent field in which significant work has been published only within the last 2 years. Finally, we highlight the advantages of deep learning algorithms over classical machine learning algorithms and discuss the limitations that have slowed the growth of research in this field.

This chapter is structured as follows: Section 2 describes data gloves, their design, history, and sensing mechanism; Section 3 discusses gesture taxonomies; Section 4 describes classical machine learning and deep learning algorithms and their applications in glove-based gesture classification; Section 5 discusses the results of this review and postulates ideas for further research; and finally conclusions are presented in Section 6.

2: Data gloves

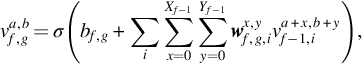

A data glove is a wearable device that is worn on a user’s hand with the intent of measuring the motion at specified joints in the hand. As shown in Fig. 1, the design of data gloves involves embedding strain or inertial sensors in a textile glove. These sensors are placed near the measured joints for increased accuracy. In addition, processing and power supply units are embedded to form an integrated wearable system.

2.1: Early and commercial data gloves

The first data glove, the “Sayre Glove,” was developed in 1977 at the Electronic Visualization Laboratory of the University of Illinois at Chicago and utilized elementary fiber-optic sensors (Sturman and Zeltzer, 1994). These sensors consisted of flexible tubes that transmitted light between their two ends. The intensity of the light passing through a tube decreased as the tube was bent by flexion at the finger joint, and this intensity was measured by the voltage of a photocell placed at one end of the tube. The photocell voltage was found to correlate strongly with the angle of flexion. Other early data gloves, made in the early 1980s, include the “Digital Entry Data” glove and the “Super Glove,” which used bend sensors and printed resistive inks, respectively (Dipietro et al., 2008).

Recent commercial data gloves include the “Cyberglove,” “5DT Data Glove,” and “Didjiglove.” The Cyberglove, developed at Stanford University, consists of 18 or 22 piezoresistive sensors. The 18-sensor model measures only the metacarpophalangeal (MCP) and proximal interphalangeal (PIP) joints, while the 22-sensor model measures the MCP, PIP, and distal interphalangeal (DIP) joints (CyberGlove II, 2020). In addition, both models measure abduction, adduction, and wrist movements. The 5DT glove measures movement at the joints using fiber-optic sensors (5DT, 2020), which measure the angle of flexion through its correlation with the attenuation of light. The basic model uses only one sensor per finger: a single sensor measures the overall flexion at the metacarpophalangeal (MP) and interphalangeal (IP) joints of the thumb, and for each of the other fingers, a single sensor measures the overall flexion at the MCP and PIP joints. An upgraded version of the glove uses additional sensors to measure abduction and adduction between the fingers. The Didjiglove employs capacitive sensors to measure the flexion at the MCP and PIP joints (Dipietro et al., 2008). These capacitive sensors comprise two comb-shaped conductive layers separated by a dielectric. Although recent data gloves are more accurate than early ones, the core design of embedding strain sensors in a textile glove has been retained. It is therefore useful to discuss the sensing mechanisms of the popular strain sensors used in these data gloves.

2.2: Sensing mechanism in data gloves

Data gloves can be categorized based on their sensing mechanism. The three main types of sensors used in data gloves are fiber-optic sensors, conductive strain sensors, and inertial sensors. This section reviews their operating principles, advantages, and disadvantages.

2.2.1: Fiber-optic sensors

Fiber-optic sensors measure strain through the attenuation of light transmitted along the fiber (Lau et al., 2013). They are known for very accurate measurements because the attenuation correlates consistently with the bending angle of the fiber. However, their main disadvantage is that they require a light source, which increases the weight and size of the data glove.

Enhanced configurations of fiber-optic sensors have been utilized in more recent data gloves. In particular, fiber Bragg grating (FBG) sensors were implemented in a data glove to measure the flexion at the interphalangeal joints (da Silva et al., 2011). FBG sensors measure strain through changes in the wavelength of the reflected Bragg signal. However, FBG sensors are also very sensitive to temperature, as the following equation shows by relating changes in the Bragg wavelength to changes in temperature and strain:

$$\frac{\Delta\lambda_B}{\lambda_B} = (1 - \rho_e)\,\Delta\epsilon + (\alpha_t + \xi_t)\,\Delta T$$

where λB is the Bragg wavelength and ΔλB, ΔT, and Δϵ represent the change in the Bragg wavelength, temperature, and strain, respectively. In addition, ρe, ξt, and αt are, respectively, the photoelastic, thermo-optic, and thermal expansion coefficients of the fiber core. Despite their high accuracy in measuring joint angles, the use of FBG sensors in real-world applications is restricted by this high sensitivity to temperature changes.
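To make the temperature cross-sensitivity concrete, the sketch below evaluates the standard linear FBG model, ΔλB/λB = (1 − ρe)Δϵ + (αt + ξt)ΔT, and asks how large a temperature change would produce the same wavelength shift as a given strain. The coefficient values (λB = 1550 nm, ρe = 0.22, αt = 0.55 × 10⁻⁶ /°C, ξt = 8.6 × 10⁻⁶ /°C) are assumed, typical figures for a silica fiber, not values reported for the glove of da Silva et al. (2011).

```python
# Assumed, typical coefficients for a silica FBG (not from the cited glove).
LAMBDA_B_NM = 1550.0   # nominal Bragg wavelength in nm
RHO_E = 0.22           # effective photoelastic coefficient (dimensionless)
ALPHA_T = 0.55e-6      # thermal expansion coefficient, 1/deg C
XI_T = 8.6e-6          # thermo-optic coefficient, 1/deg C


def bragg_shift_nm(strain, delta_t):
    """Bragg wavelength shift in nm for a strain change (dimensionless)
    and a temperature change (deg C), using the linear FBG model."""
    relative_shift = (1.0 - RHO_E) * strain + (ALPHA_T + XI_T) * delta_t
    return LAMBDA_B_NM * relative_shift


# Shift caused by 100 microstrain of bending at constant temperature
strain_shift = bragg_shift_nm(100e-6, 0.0)
# Shift caused by a 1 deg C temperature change with no strain
per_degree_shift = bragg_shift_nm(0.0, 1.0)
# Temperature change that would be indistinguishable from that strain
equivalent_dt = strain_shift / per_degree_shift

print(f"strain-induced shift: {strain_shift * 1e3:.1f} pm")
print(f"temperature shift: {per_degree_shift * 1e3:.2f} pm per deg C")
print(f"equivalent temperature change: {equivalent_dt:.1f} deg C")
```

With these assumed coefficients, a temperature drift of roughly 8.5 °C shifts the Bragg wavelength as much as 100 microstrain of bending, which illustrates why temperature compensation matters for FBG-based gloves.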

2.2.2: Conductive strain sensors

Data gloves have also been fabricated using conductive strain sensors (Chen et al., 2016). These strain sensors are formed by embedding conductive nanomaterials in flexible textile polymers through coating, wet spinning, or knitting. Their sensing mechanism relies on the change in their electrical resistance or capacitance under the strain produced by flexion of the joints in the hand. The resulting data gloves are textile-based, accurate, and lightweight.
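Because a conductive strain sensor reports a resistance (or capacitance) rather than an angle, each sensor is typically calibrated against known joint angles before use. The sketch below assumes an approximately linear resistance-angle relationship and fits it by least squares with NumPy’s `polyfit`; the calibration readings are hypothetical.

```python
import numpy as np

# Hypothetical calibration readings: sensor resistance (kOhm) recorded while
# the finger is held at known flexion angles (degrees), e.g., set with a
# goniometer. Real sensors are noisier and may respond nonlinearly.
angles_deg = np.array([0.0, 30.0, 60.0, 90.0])
resistance_kohm = np.array([10.0, 11.5, 13.0, 14.5])

# Least-squares fit of angle = slope * resistance + intercept
slope, intercept = np.polyfit(resistance_kohm, angles_deg, deg=1)


def resistance_to_angle(r_kohm):
    """Map raw resistance readings (kOhm) to estimated joint angles (degrees)."""
    return slope * np.asarray(r_kohm) + intercept


# Relative resistance change over the calibrated range, a quick sensitivity check
relative_change = (resistance_kohm[-1] - resistance_kohm[0]) / resistance_kohm[0]
print(f"angle at 12.25 kOhm: {float(resistance_to_angle(12.25)):.1f} deg")
print(f"relative resistance change over 0-90 deg: {relative_change:.0%}")
```

If the sensor’s response is clearly nonlinear, a higher `deg` in `polyfit` or a lookup table over more calibration points is a straightforward extension of the same idea.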

Notably, a conductive strain sensor was formed by coating spandex and silk fibers with graphite flakes using a Mayer rod (Zhang et al., 2016). Another textile strain sensor was developed by coating Lycra fabric with polypyrrole (Wu et al., 2005). Moreover, multifilament yarns formed from conductive and textile fibers can be knitted into textile strain sensors (Atalay et al., 2014). Conductive strain sensors have also been created from coaxial fibers with a core-shell structure, in which a flexible shell wraps a conductive core; these are fabricated either by injecting conductive nanomaterials into a textile fiber or by wet spinning (Tang et al., 2018).

2.2.3: Inertial sensors

Inertial sensors in data gloves comprise gyroscopes and accelerometers that track the position and orientation of the hand joints (Lin et al., 2014; Hsiao et al., 2015). They are more useful than other sensors for tracking dynamic gestures that involve wrist movement, because the wrist has more degrees of freedom than the interphalangeal joints. However, they are less flexible than other sensors and tend to make the data glove bulky.
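The chapter does not specify how gyroscope and accelerometer readings are combined. One common technique is a complementary filter, sketched below for a single pitch angle: integrating the gyroscope is smooth over short intervals but drifts with sensor bias, while the accelerometer’s gravity reference is noisy but drift-free, so the filter blends the two. The weight `alpha = 0.98`, the 100 Hz sample rate, and the simulated readings are assumptions for illustration.

```python
import math


def accel_pitch(ax, ay, az):
    """Pitch angle (rad) implied by the gravity direction seen by the accelerometer."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_filter(samples, dt, alpha=0.98):
    """Fuse gyroscope and accelerometer readings into one pitch estimate (rad).

    samples: iterable of (gyro_rate_rad_per_s, ax, ay, az) tuples
    alpha:   weight given to the integrated gyroscope angle (assumed value)
    """
    pitch = 0.0
    for gyro_rate, ax, ay, az in samples:
        gyro_estimate = pitch + gyro_rate * dt     # smooth, but drifts with gyro bias
        accel_estimate = accel_pitch(ax, ay, az)   # noisy, but has no drift
        pitch = alpha * gyro_estimate + (1.0 - alpha) * accel_estimate
    return pitch


# Simulated stationary hand segment tilted by 30 degrees: the accelerometer
# sees only gravity (in units of g) and the gyroscope reports a small bias.
tilt = math.radians(30.0)
samples = [(0.01, -math.sin(tilt), 0.0, math.cos(tilt))] * 1000  # 10 s at 100 Hz
estimate = complementary_filter(samples, dt=0.01)
print(f"estimated pitch: {math.degrees(estimate):.2f} deg")
```

Integrating the biased gyroscope alone over the same 10 s would accumulate about 0.1 rad (roughly 5.7°) of error, whereas the blended estimate settles within a fraction of a degree of the true 30° tilt.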

3: Gesture taxonomies

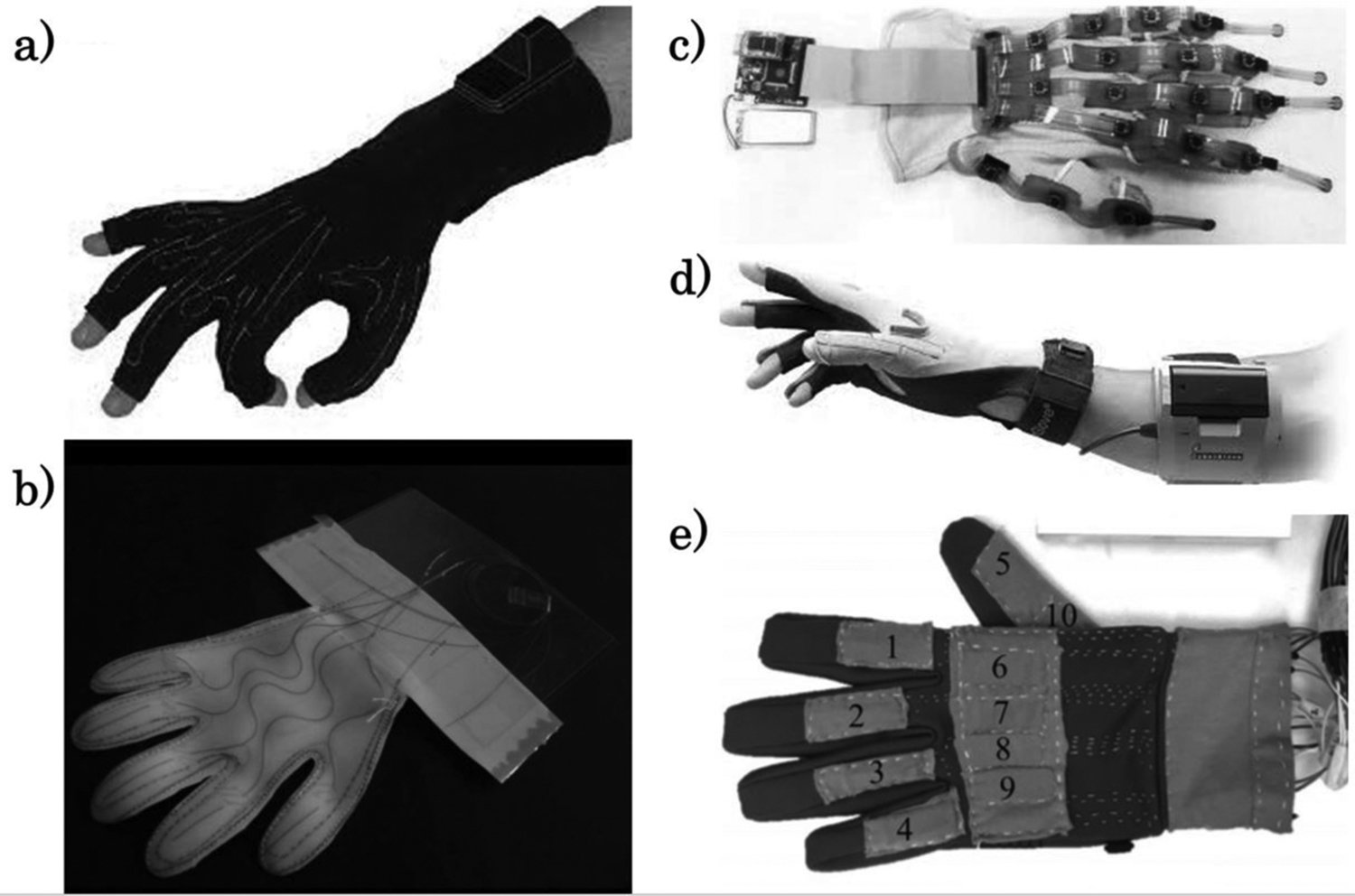

Gestures are a very important method of communication. For example, a “thumbs up” (G12 in Fig. 2B) can signify approval to the recipient, while a “thumbs down” can signify disapproval (Morris, 1979). A gesture taxonomy is a list of gestures that defines what each gesture represents. Such definitions are important because a gesture can have different meanings across cultures and geographical boundaries. In particular, the thumbs up gesture, which denotes approval in most parts of the world, is seen as derogatory in the Middle East (Axtell and Fornwald, 1991). Gestures can be divided into two primary categories: static and dynamic. In static gestures, the joints of the hand are stationary, whereas dynamic gestures involve motion at the joints of the hand. For example, a wave of the hand is a dynamic gesture, while a “thumbs up” is a static gesture.

As illustrated in Fig. 2A, sign languages are gesture taxonomies whose gestures can be translated into letters or words and their respective meanings. For example, one sign language taxonomy may consist of static gestures that translate to letters, while another may consist of dynamic gestures that represent full sentences. Other taxonomies contain gestures that represent the activities performed by the “expressor.” For example, the grasp taxonomy proposed by Schlesinger depicts several hand postures that correspond to the shape of the grasped object (Heumer et al., 2007; Schwarz and Taylor, 1955). A gesture taxonomy can also list dynamic gestures that convey the activities performed by the user, such as writing or drinking a cup of coffee.

In human-computer interaction (HCI), hand gesture taxonomies have various applications. They are used as input commands to control robotic equipment in workstations and to aid doctors in performing teleoperation (Jhang et al., 2017; Fang et al., 2015). They have also enabled natural interaction with virtual objects in virtual reality applications (Weissmann and Salomon, 1999). In particular, virtual rehabilitation programs contain several target gestures that the patient tries to achieve, enabling healthcare professionals to measure the progress of the patient’s rehabilitation efficiently (Jack et al., 2001).

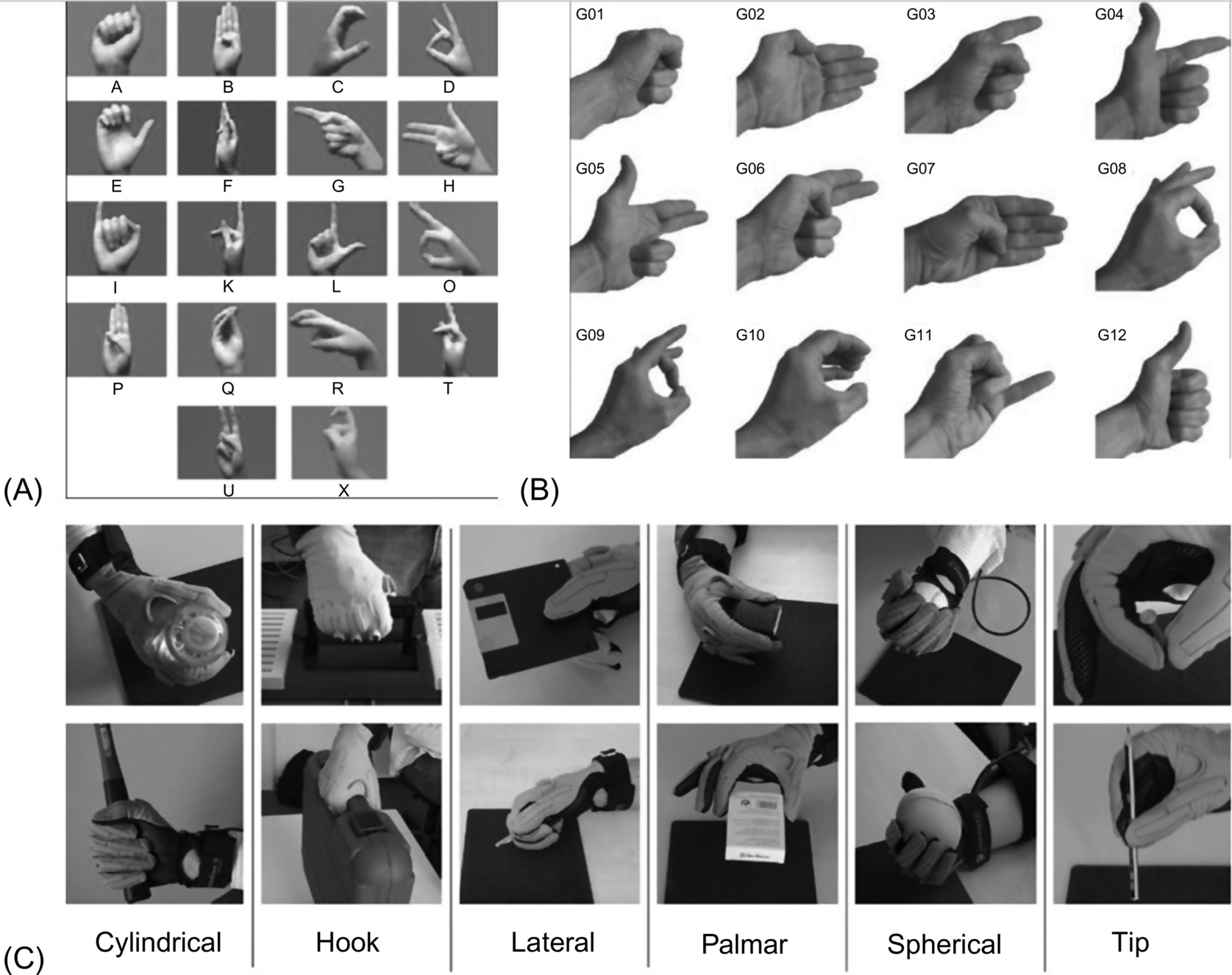

4: Gesture classification

Gesture classification aims to accurately predict the gesture performed by the user from the sensory data acquired by the glove. For a taxonomy with a small number of distinct gestures, this can be done by manually inspecting the data (Chen et al., 2016) or by using simple linear algorithms (Lu et al., 2012). However, machine learning is required to classify a more complex taxonomy of gestures, especially gestures that are closely related. We define closely related gestures as gestures whose data values cannot be linearly separated. Fig. 3 shows a two-dimensional Sammon mapping of a self-organizing map (SOM) of a gesture data set, illustrating the difficulty of linearly separating the different gestures. In particular, the tip, palmar, and cylindrical grasps cannot be linearly separated. This data set exemplifies the relevance of machine learning in gesture classification, because machine learning algorithms can classify such closely related gestures with high accuracy.

4.1: Classical machine learning algorithms

Machine learning algorithms can be differentiated by their type of learning. Supervised learning occurs when correct input-output pairs are provided to the algorithm during training, while unsupervised learning requires the algorithm to determine clusters of similar input data because no target output is provided (Rautaray and Agrawal, 2015). In this section, we summarize the theoretical background of the classical machine learning algorithms most popular in glove-based gesture classification.

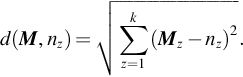

4.1.1: K-nearest neighbor

k-NN is a nonparametric pattern recognition technique that classifies an input sample according to the most common class among its k nearest neighbors in the training data. The neighbors are found with a similarity function, which can be computed as a distance or correlation metric (Altman, 1992). Typically, the Euclidean distance is used; however, other distance metrics such as the Manhattan distance can also be utilized. The probability p(M, cj) that an input sample M belongs to class cj can be computed as:

$$p(M, c_j) = \frac{1}{k}\sum_{n_z \in N_k(M)} V(n_z, c_j)$$

where N_k(M) is the set of the k training samples nearest to M, nz is one of these neighbors, and V(nz, cj) equals 1 if nz belongs to class cj and 0 otherwise. The Euclidean distance d(M, nz) between M and a neighbor nz, both D-dimensional feature vectors, can be calculated as:

$$d(M, n_z) = \sqrt{\sum_{i=1}^{D} \left(M_i - n_{z,i}\right)^2}$$
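The following sketch implements the k-NN rule described above in NumPy: it computes the Euclidean distance from a query M to every training sample, takes the k nearest, and estimates p(M, cj) as the fraction of those neighbors that belong to class cj (the 0/1 indicator V(nz, cj) averaged over the neighbors). The two-feature “flex sensor” data and the class names are hypothetical.

```python
import numpy as np


def knn_class_probabilities(M, X_train, y_train, k=3):
    """Estimate p(M, c_j) for every class c_j from the k nearest neighbours of M."""
    # Euclidean distance d(M, n_z) from the query to every training sample
    distances = np.sqrt(np.sum((X_train - M) ** 2, axis=1))
    nearest_labels = y_train[np.argsort(distances)[:k]]
    # Average of the indicator V(n_z, c_j) over the k neighbours
    return {str(c): float(np.mean(nearest_labels == c)) for c in np.unique(y_train)}


def knn_predict(M, X_train, y_train, k=3):
    """Assign M to the most common class among its k nearest neighbours."""
    probs = knn_class_probabilities(M, X_train, y_train, k)
    return max(probs, key=probs.get)


# Hypothetical normalized readings from two flex sensors
X_train = np.array([[0.10, 0.20], [0.20, 0.10], [0.15, 0.15],
                    [0.90, 0.80], [0.80, 0.90], [0.85, 0.85]])
y_train = np.array(["open", "open", "open", "fist", "fist", "fist"])

query = np.array([0.70, 0.75])
print(knn_class_probabilities(query, X_train, y_train, k=3))
print(knn_predict(query, X_train, y_train, k=3))
```

Swapping the distance line for `np.sum(np.abs(X_train - M), axis=1)` gives the Manhattan-distance variant mentioned in the text.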

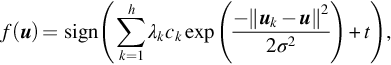

4.1.2: Support vector machine (SVM)

Traditionally, SVM was used for the linear classification of data. However, a linear kernel limits its accuracy in nonlinear classification tasks. The SVM algorithm was therefore extended with a Gaussian kernel, which implicitly maps the data into an infinite-dimensional feature space in which the classes can become more separable. The decision function for Gaussian SVM classification of an unknown pattern u can be represented as:

$$f(u) = \operatorname{sgn}\left(\sum_{k} \lambda_k c_k \exp\left(-\frac{\lVert u - u_k \rVert^2}{2\sigma^2}\right) + t\right)$$

where ck is the class label for the k-th support vector uk, λk is the Lagrange multiplier, σ is the width of the Gaussian kernel, and t is the bias (Cortes and Vapnik, 1995).
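The sketch below evaluates the Gaussian-kernel decision function f(u) = sgn(Σk λk ck K(uk, u) + t), with K(uk, u) = exp(−‖u − uk‖²/(2σ²)), for a fixed set of support vectors. The support vectors, multipliers λk, bias t, and width σ are hand-picked hypothetical values; in practice they are obtained by solving the SVM training (dual) optimization problem, which is not shown here.

```python
import numpy as np


def gaussian_kernel(a, b, sigma):
    """K(a, b) = exp(-||a - b||^2 / (2 sigma^2))."""
    return np.exp(-np.sum((a - b) ** 2) / (2.0 * sigma ** 2))


def svm_decision(u, support_vectors, labels, multipliers, bias, sigma):
    """Return +1 or -1: the sign of sum_k lambda_k c_k K(u_k, u) + t."""
    score = bias
    for u_k, c_k, lam_k in zip(support_vectors, labels, multipliers):
        score += lam_k * c_k * gaussian_kernel(u_k, u, sigma)
    return 1 if score >= 0 else -1


# Hypothetical trained model: one support vector per class
support_vectors = np.array([[0.2, 0.2], [0.8, 0.8]])
labels = np.array([1, -1])          # c_k: +1 = "open hand", -1 = "fist"
multipliers = np.array([1.0, 1.0])  # lambda_k
bias = 0.0                          # t
sigma = 0.3

print(svm_decision(np.array([0.30, 0.25]), support_vectors, labels, multipliers, bias, sigma))
print(svm_decision(np.array([0.75, 0.90]), support_vectors, labels, multipliers, bias, sigma))
```

Because the kernel decays with distance, each support vector mainly influences nearby inputs; a smaller σ makes the decision boundary more flexible but more prone to overfitting.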

4.1.3: Decision tree

Decision tree is a supervised learning technique that splits classification into a sequence of decisions that determine the class of the signal. The output of the algorithm is a tree whose internal decision nodes branch according to tests on the input features and whose leaf nodes assign the classes (Yang et al., 2016). The size of the tree is commonly controlled by specifying the maximum number of splits.
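The chapter does not state which splitting criterion the cited studies used. As one common choice, the sketch below shows how a CART-style tree picks a single split: it tries every feature and threshold and keeps the test with the lowest weighted Gini impurity. A full tree applies this search recursively to each child node until a stopping rule, such as the maximum number of splits, is reached. The data and labels are hypothetical.

```python
import numpy as np


def gini(labels):
    """Gini impurity of a set of class labels (0 for a pure node)."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def best_split(X, y):
    """Return (feature, threshold, weighted_gini) of the best test 'x[feature] <= threshold'."""
    best = (None, None, np.inf)
    n_samples, n_features = X.shape
    for f in range(n_features):
        for threshold in np.unique(X[:, f]):
            left = y[X[:, f] <= threshold]
            right = y[X[:, f] > threshold]
            if len(left) == 0 or len(right) == 0:
                continue  # a split must send samples both ways
            score = (len(left) * gini(left) + len(right) * gini(right)) / n_samples
            if score < best[2]:
                best = (f, threshold, score)
    return best


# Hypothetical readings: feature 0 = index finger flex, feature 1 = thumb flex
X = np.array([[0.1, 0.5], [0.2, 0.4], [0.8, 0.5], [0.9, 0.6]])
y = np.array(["open", "open", "fist", "fist"])
feature, threshold, impurity = best_split(X, y)
print(f"split on feature {feature} at {threshold}: weighted Gini = {impurity:.2f}")
```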

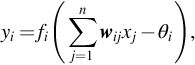

4.1.4: Artificial neural network (ANN)

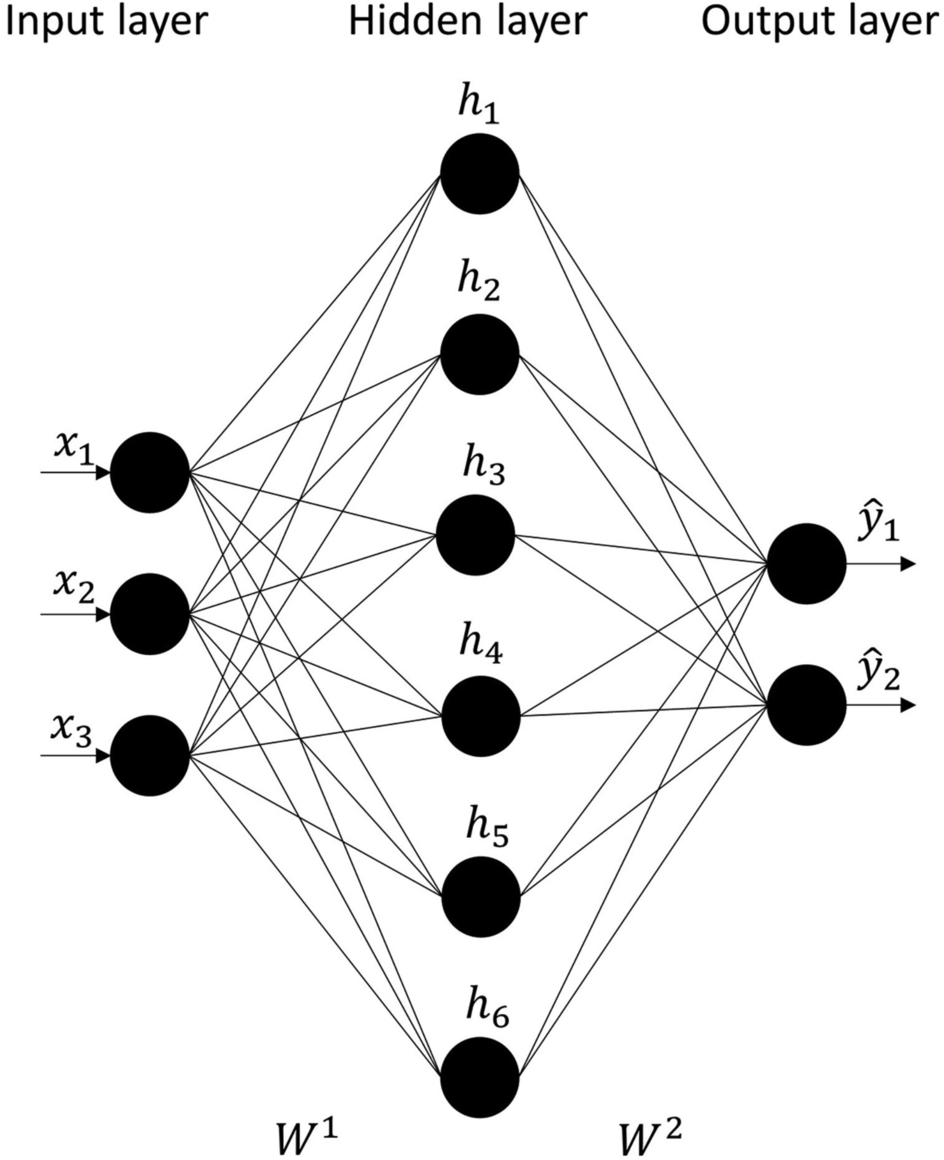

ANN is a biologically inspired machine learning algorithm. It consists of input, hidden, and output layers of neurons. These artificial neurons simulate neurons in the brain: each receives inputs, processes them using an activation function, and produces an output. The output of the ith neuron can be calculated as:

$$y_i = f_i\left(\sum_{j} w_{ij}\, x_j - \theta_i\right)$$

where fi is the transfer function, yi is the output of neuron i, xj is the jth input to the neuron, wij is the connection weight between input j and neuron i, and θi is the bias (threshold) of the neuron (Neto et al., 2013). Traditionally, the transfer function is Gaussian, sigmoid, or Heaviside. ANNs are trained by adjusting the connection weights, which can be achieved by algorithms such as backpropagation or reinforcement learning. Learning algorithms differ mainly in their weight-adjustment rules, such as the Hebbian rule and the delta rule. Fig. 4 shows a feedforward neural network (FFNN), the simplest form of ANN, in which information flows in a single direction from the input layer to the output layer and no output is fed back into the network.
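The neuron equation yi = fi(Σj wij xj − θi) vectorizes naturally over a whole layer. The sketch below computes one layer as f(W x − θ) and chains layers into a feedforward pass. The sigmoid transfer function, the random weights, and the layer sizes (5 sensor inputs, 8 hidden neurons, 3 gesture outputs) are assumptions for illustration, and training (e.g., backpropagation) is not shown.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def layer_forward(x, W, theta, f=sigmoid):
    """y_i = f(sum_j w_ij x_j - theta_i) for all neurons i of one layer.

    W has shape (n_neurons, n_inputs); theta has shape (n_neurons,).
    """
    return f(W @ x - theta)


def ffnn_forward(x, layers):
    """Feedforward pass: each layer's output is the next layer's input; nothing is fed back."""
    for W, theta in layers:
        x = layer_forward(x, W, theta)
    return x


rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(8, 5)), np.zeros(8)),  # 5 sensor inputs -> 8 hidden neurons
    (rng.normal(size=(3, 8)), np.zeros(3)),  # 8 hidden neurons -> 3 gesture outputs
]
sensor_reading = np.array([0.1, 0.9, 0.8, 0.7, 0.2])  # hypothetical normalized flex values
outputs = ffnn_forward(sensor_reading, layers)
print(outputs, "-> predicted gesture index:", int(np.argmax(outputs)))
```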

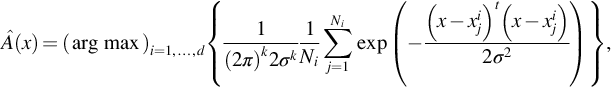

4.1.5: Probabilistic neural network (PNN)

PNNs are neural networks that use probability density functions to determine the likelihood that an input sample belongs to a class. They consist of four layers: an input layer, with one neuron per input feature, fully connected to the next layer; a hidden (pattern) layer of Gaussian functions centered on the training samples; a summation layer that averages the outputs of the pattern neurons of each class to estimate the probability density of the input sample for that class; and an output layer that uses Bayes’ rule to determine the class of the input sample (Specht, 1990). The estimated density of category Ai at an input x is computed as:

$$f_{A_i}(x) = \frac{1}{(2\pi)^{k/2}\sigma^{k}}\,\frac{1}{N_i}\sum_{j=1}^{N_i}\exp\left(-\frac{(x - x_{ij})^{\top}(x - x_{ij})}{2\sigma^{2}}\right)$$

where σ is the smoothing parameter, k is the size of the measurement space, Ni is the total number of training patterns in category Ai, and xij is the jth training pattern from category Ai.
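A minimal NumPy sketch of the PNN: the pattern layer evaluates a Gaussian kernel on every training pattern, the summation layer averages them per class and applies the normalization 1/((2π)^(k/2) σ^k), and the output layer picks the class with the largest estimated density, which is the Bayes decision under the assumption of equal class priors and misclassification costs. The smoothing parameter σ = 0.1 and the training patterns are hypothetical.

```python
import numpy as np


def pnn_class_densities(x, train_by_class, sigma=0.1):
    """Estimate f_{A_i}(x) for each class A_i with Gaussian Parzen windows.

    train_by_class maps a class label to an (N_i, k) array of training patterns.
    """
    k = x.shape[0]
    norm = 1.0 / ((2.0 * np.pi) ** (k / 2.0) * sigma ** k)
    densities = {}
    for label, patterns in train_by_class.items():
        sq_dist = np.sum((patterns - x) ** 2, axis=1)       # pattern layer
        kernels = np.exp(-sq_dist / (2.0 * sigma ** 2))
        densities[label] = norm * float(np.mean(kernels))   # summation layer
    return densities


def pnn_predict(x, train_by_class, sigma=0.1):
    """Output layer: Bayes decision assuming equal priors and misclassification costs."""
    densities = pnn_class_densities(x, train_by_class, sigma)
    return max(densities, key=densities.get)


train_by_class = {
    "open": np.array([[0.10, 0.20], [0.20, 0.10]]),
    "fist": np.array([[0.90, 0.80], [0.80, 0.90]]),
}
print(pnn_predict(np.array([0.85, 0.80]), train_by_class))
print(pnn_predict(np.array([0.15, 0.20]), train_by_class))
```

Unequal priors or costs can be handled by multiplying each class density by the corresponding prior and cost before taking the maximum.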

4.2: Glove-based gesture classification with classical machine learning algorithms

Several classical machine learning algorithms have been explored for classifying gestures from data acquired by a data glove. In an early study, an ANN was used to classify five gestures from American Sign Language (ASL) (Beale and Edwards, 1990). Thereafter, an ANN trained with backpropagation was implemented to recognize a taxonomy of forty-two gestures from Japanese Kana (Murakami and Taguchi, 1991) acquired by a VPL data glove. Backpropagation computes the gradient of the classification error with respect to the network weights, which gradient descent then uses to adjust the weights. The network consisted of 16 nodes in the input layer, representing 10 bend sensors and 6 positional sensors; 150 nodes in the hidden layer; and 10 nodes in the output layer, representing the 10 dynamic gestures. Moreover, the input data was augmented and filtered to improve the accuracy of the network. The network achieved 96% accuracy when the data was filtered and augmented, and 80% accuracy without augmentation and filtering.

Furthermore, a radial basis function (RBF) network was employed to classify twenty static gestures from five users of a Cyberglove (Weissmann and Salomon, 1999). The network was trained with four users and validated with the fifth user. The average accuracy of the network was 88% during cross validation. In contrast, when the validation user was included in the training set, the average accuracy was 98.3%. This study illustrates the difference in the accuracy of machine learning algorithms between “unseen” and “seen” experiments. In unseen experiments, the data in the validation set is not included in the training set, while in seen experiments, some or all of the validation data is included in the training data set. We observed that machine learning algorithms are less accurate in classifying unseen data. In glove-based gesture classification in particular, this reduced accuracy can be attributed to differences in hand dimensions between the unseen users in the validation data set and the users in the training data set. However, the gap between unseen and seen accuracies can be reduced by training the algorithm with data from a large number of users.
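Weissmann and Salomon trained on four users and validated on the fifth. A common way to generalize this kind of unseen evaluation is leave-one-subject-out splitting, in which each participant is held out in turn; the sketch below produces such splits from per-sample participant IDs (the IDs are hypothetical). A random split over individual samples would instead tend to place data from every participant in both sets, producing a seen experiment.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for unseen-user testing.

    All samples of the held-out participant go to the test set, so the classifier
    is always evaluated on a hand it never saw during training.
    """
    for held_out in sorted(set(subject_ids)):
        train_idx = [i for i, s in enumerate(subject_ids) if s != held_out]
        test_idx = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train_idx, test_idx


# Hypothetical participant ID for each recorded gesture sample
subject_ids = ["u1", "u1", "u2", "u2", "u3", "u3", "u4", "u5"]
for held_out, train_idx, test_idx in leave_one_subject_out(subject_ids):
    print(held_out, "train:", train_idx, "test:", test_idx)
```

Averaging the accuracy over all folds estimates performance on new users, which can then be compared with the accuracy obtained when the test users also contribute training data.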

Furthermore, a feedforward ANN was utilized in classifying gestures for a VR driving application (Xu, 2006). Three hundred gestures were acquired from five participants with the Cyberglove. The gestures were split into 200 gestures for the training set and 100 gestures for the validation set. The average accuracy was 98%. This high accuracy was obtained because this was a seen experiment. In contrast, when data from three new (unseen) participants were used as a validation set, the recognition accuracy reduced to 92%.

In Luzanin’s study (Luzanin and Plancak, 2014), a PNN was used to classify twelve static gestures acquired with the 5DT Data Glove. Clustering algorithms were used to reduce the size of the training data while maintaining the performance of the PNN and the representativeness of the actual input data. These clustering algorithms were the K-means, X-means, and Expectation Maximization (EM) algorithms. The classification accuracies for the seen experiment were 93.4%, 96.18%, and 95.98% for the K-means, X-means, and EM algorithms, respectively. For an unseen validation user, the accuracies were 63.05%, 52.48%, and 77.14%, respectively.

Two ANNs connected in series were employed in gesture classification for human-robot control (Neto et al., 2013). As depicted in Fig. 5, the first ANN classified static communicative gestures, while the second ANN classified the noncommunicative gestures that occur in the transitions between communicative gestures in the continuous data. The data was acquired with a Cyberglove II containing 22 sensors. Therefore, in both ANNs, the input layer and the hidden layer each comprised 44 neurons, representing two frames per sensor. Classification accuracy was up to 99.8% for 10 gestures and 96.3% for a taxonomy of 30 gestures. Because the aim of the study was to accurately recognize static gestures within continuous data, the authors limited the validation data set to seen data, which explains the high accuracy.

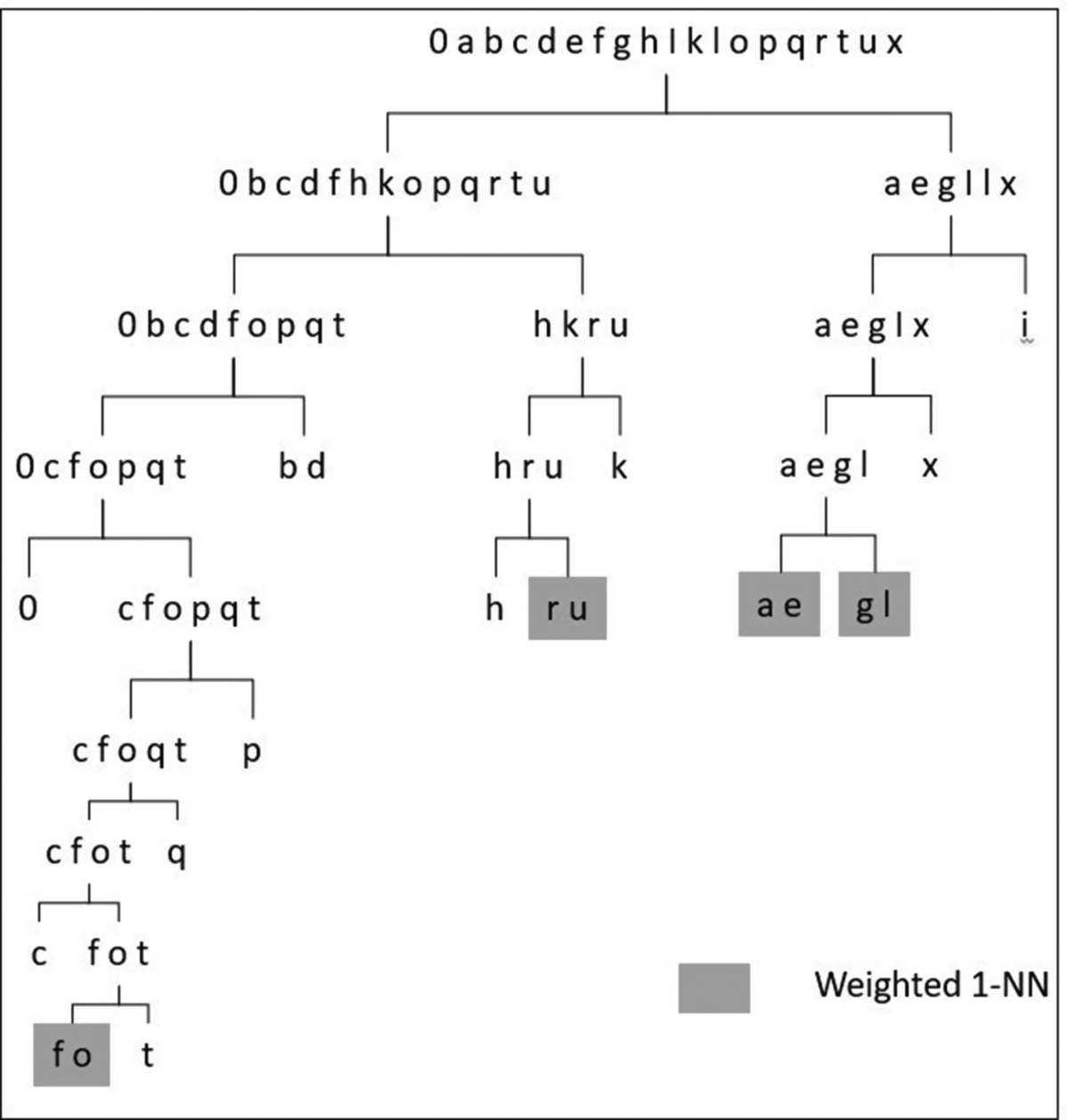

A multiclassifier approach was used by Ibarguren et al. (2010) to classify gestures acquired by a 5DT Data Glove. The gestures were eighteen ASL letters that do not require positional measurements of the wrist. As shown in Fig. 6, the gestures were classified using a combination of decision tree and k-NN algorithms. A clustering method based on Euclidean distance generated a decision tree, and k-NN (k = 1) was then used to classify the letters at the lowest-level nodes. The letters at these nodes, such as a/e or f/o, are very similar, and the 1-NN classifier provides a more accurate classification of them. In addition, a segmentation layer separates the recorded gestures from the real-time continuous data before classification. Experimental results show a 99.49% segmentation accuracy and a 94.61% classification accuracy.

A self-organizing map (SOM) was used to classify 10 static gestures acquired with a 5DT Data Glove (Jin et al., 2011). SOM is an unsupervised machine learning algorithm that aims to model the input data into a discretized lower dimension map. Training data was acquired from six participants, and the algorithm was validated with 10 participants that included two participants from the training data. The algorithm performed well with a 94.29% accuracy.

In addition, data from a custom IMU data glove were used with an Extreme Learning Machine (ELM) algorithm to classify 10 static gestures (Lu et al., 2016). Two feature sets, of 45 and 54 features, were extracted from the input gesture data. The 45-feature set comprised the yaw, pitch, and roll angles of the five fingers, while the 54-feature set comprised these 45 finger features plus the yaw, pitch, and roll angles of the palm, forearm, and upper arm. The ELM algorithm was chosen because of its low computational burden and reduced reliance on manual tuning: its input weights and hidden-layer biases are generated randomly, and only the output weights are learned. A modified ELM algorithm proposed by Huang et al. (2011), which employs a kernel method, was also used in the study. The original ELM, the ELM-kernel, and SVM algorithms were compared using both feature sets. With the 54-feature set, the classification accuracies were 68.05%, 89.59%, and 83.65% for the ELM, ELM-kernel, and SVM algorithms, respectively, while with the 45-feature set, they were 84.40%, 85.51%, and 81.09%, respectively, highlighting the superiority of the ELM-kernel algorithm for gesture classification.
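The basic ELM idea fits in a few lines: draw the input weights and hidden biases at random, compute the hidden-layer output matrix H, and obtain the output weights in closed form as β = H⁺Y, where H⁺ is the Moore-Penrose pseudoinverse. This sketch uses a tanh activation, 20 hidden neurons, and synthetic 45-feature data; these are assumptions for illustration, not the configuration of Lu et al. (2016), and the kernel variant is not shown.

```python
import numpy as np


def elm_train(X, Y, n_hidden=20, seed=0):
    """Basic ELM: random hidden layer, closed-form output weights.

    X: (n_samples, n_features) inputs; Y: (n_samples, n_classes) one-hot targets.
    """
    rng = np.random.default_rng(seed)
    W = rng.normal(size=(X.shape[1], n_hidden))  # random input weights, never trained
    b = rng.normal(size=n_hidden)                # random hidden biases, never trained
    H = np.tanh(X @ W + b)                       # hidden-layer output matrix
    beta = np.linalg.pinv(H) @ Y                 # least-squares output weights
    return W, b, beta


def elm_predict(X, W, b, beta):
    """Return the index of the highest-scoring class for each row of X."""
    return np.argmax(np.tanh(X @ W + b) @ beta, axis=1)


# Synthetic stand-in for 45 orientation features (not real glove data)
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(0.0, 0.1, size=(20, 45)),   # gesture 0
               rng.normal(0.5, 0.1, size=(20, 45))])  # gesture 1
labels = np.array([0] * 20 + [1] * 20)
Y = np.eye(2)[labels]                                  # one-hot targets

W, b, beta = elm_train(X, Y, n_hidden=20)
train_accuracy = np.mean(elm_predict(X, W, b, beta) == labels)
print(f"training accuracy: {train_accuracy:.2f}")
```

The only “training” step is a single pseudoinverse, which is the source of the low computational burden noted above.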

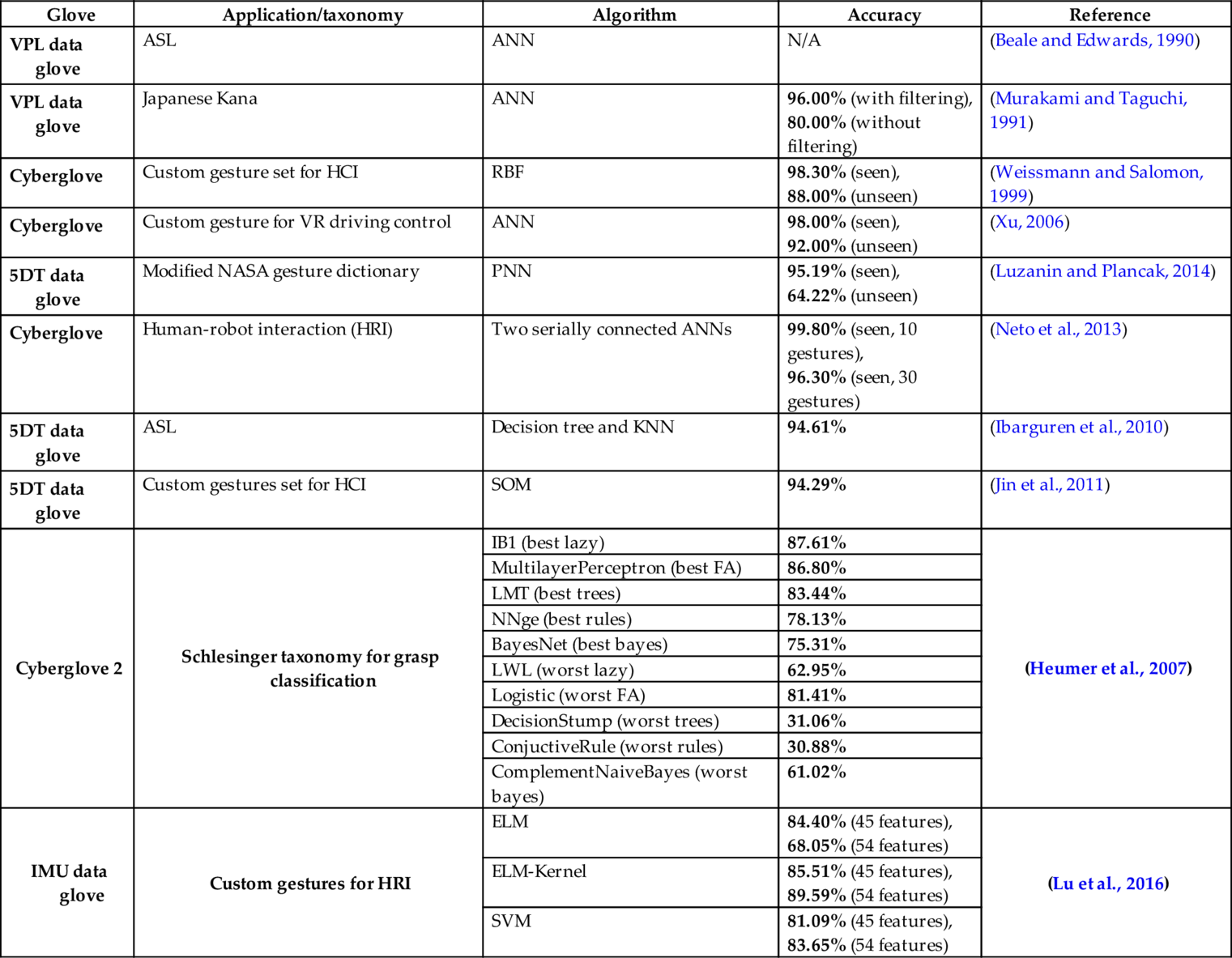

A more comprehensive comparison of classical machine learning algorithms for gesture classification was presented by Heumer et al. (2007). A Cyberglove was used to acquire grasp types based on Schlesinger’s taxonomy. Subsequently, 28 classical ML algorithms, obtained from a software package (Witten et al., 2005), were used to classify the data in six classification scenarios comprising seen and unseen experiments. The algorithms were grouped into five categories: rule sets, trees, function approximators, lazy learners, and probabilistic methods. Rule algorithms classify the gestures by a set of logical rules, while tree algorithms (such as decision trees) classify gestures based on a hierarchy of binary decisions. Function approximators are supervised learning algorithms that derive an approximate function between the input data and the output class. Probabilistic algorithms build a probability model of each class and then determine (using a method such as Bayes’ theorem) the probability that each input sample belongs to a specific class. Lazy learners defer processing of the training data until a classification request is received; the class of the queried item is then determined from the classes of the closest training items under the specified distance metric. The results show that function approximators performed well, with minimum and maximum accuracies of 81.41% and 86.8%, respectively. Although the best classifier was a lazy classifier with an accuracy of 87.61%, the average accuracy of lazy classifiers was 78.77%. By contrast, Bayesian, tree-based, and rule-based classifiers performed poorly. Bayesian classifiers had maximum and minimum accuracies of 75.31% and 61.02%, respectively; tree-based classifiers had a maximum accuracy of 83.44% and a minimum accuracy of 31.06%; and rule-based classifiers had a maximum accuracy of 78.13% and a minimum accuracy of 30.88%.
The best and worst classifiers in each category are listed in Table 1.

Table 1

| Glove | Application/taxonomy | Algorithm | Accuracy | Reference |
|---|---|---|---|---|
| VPL data glove | ASL | ANN | N/A | (Beale and Edwards, 1990) |
| VPL data glove | Japanese Kana | ANN | 96.00% (with filtering), 80.00% (without filtering) | (Murakami and Taguchi, 1991) |
| Cyberglove | Custom gesture set for HCI | RBF | 98.30% (seen), 88.00% (unseen) | (Weissmann and Salomon, 1999) |
| Cyberglove | Custom gestures for VR driving control | ANN | 98.00% (seen), 92.00% (unseen) | (Xu, 2006) |
| 5DT data glove | Modified NASA gesture dictionary | PNN | 95.19% (seen), 64.22% (unseen) | (Luzanin and Plancak, 2014) |
| Cyberglove | Human-robot interaction (HRI) | Two serially connected ANNs | 99.80% (seen, 10 gestures), 96.30% (seen, 30 gestures) | (Neto et al., 2013) |
| 5DT data glove | ASL | Decision tree and k-NN | 94.61% | (Ibarguren et al., 2010) |
| 5DT data glove | Custom gesture set for HCI | SOM | 94.29% | (Jin et al., 2011) |
| Cyberglove 2 | Schlesinger taxonomy for grasp classification | IB1 (best lazy) | 87.61% | (Heumer et al., 2007) |
| | | MultilayerPerceptron (best FA) | 86.80% | |
| | | LMT (best trees) | 83.44% | |
| | | NNge (best rules) | 78.13% | |
| | | BayesNet (best Bayes) | 75.31% | |
| | | LWL (worst lazy) | 62.95% | |
| | | Logistic (worst FA) | 81.41% | |
| | | DecisionStump (worst trees) | 31.06% | |
| | | ConjunctiveRule (worst rules) | 30.88% | |
| | | ComplementNaiveBayes (worst Bayes) | 61.02% | |
| IMU data glove | Custom gestures for HRI | ELM | 84.40% (45 features), 68.05% (54 features) | (Lu et al., 2016) |
| | | ELM-Kernel | 85.51% (45 features), 89.59% (54 features) | |
| | | SVM | 81.09% (45 features), 83.65% (54 features) | |

4.3: Deep learning

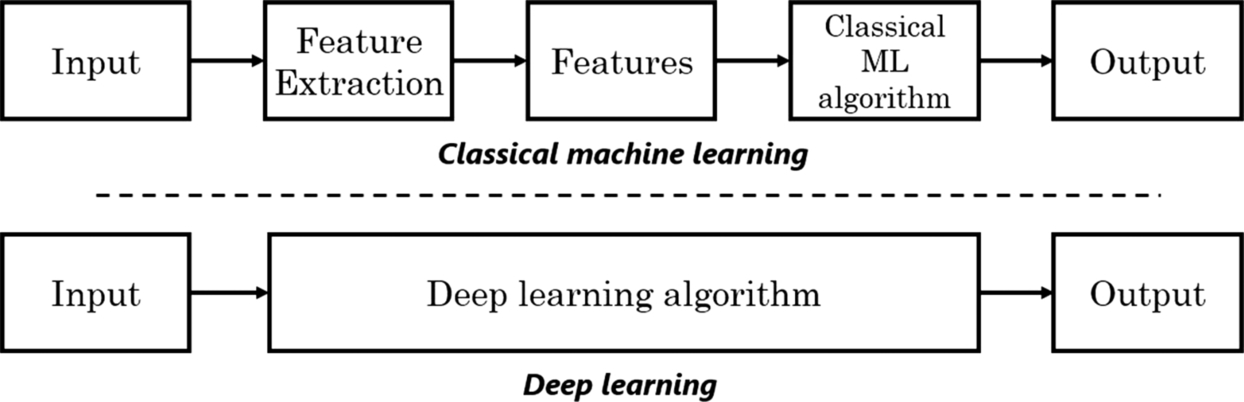

Deep learning (DL) is a class of machine learning whose algorithms consist of neural networks with several hidden layers. Examples of popular deep learning algorithms are the Deep Belief Network (DBN), Deep Boltzmann Machine (DBM), Recurrent Neural Network (RNN), and Convolutional Neural Network (CNN) (LeCun et al., 2015). The advantage of deep learning algorithms over classical machine learning algorithms is their ability to extract features from the input data automatically, avoiding the bias that comes with manual feature extraction in classical machine learning, as illustrated in Fig. 7. However, DL algorithms require significantly more data and computational resources than classical machine learning algorithms.

4.3.1: Convolutional neural network (CNN)

CNN is one of the most popular architectures used in DL applications. It became popular after AlexNet (a CNN) won the ImageNet Large Scale Visual Recognition Challenge (ILSVRC) 2012, a prominent computer vision classification competition. Since then, CNNs have been employed in a wide variety of applications, ranging from image classification in computer vision to the classification of physiological signals (e.g., ECG, EMG), and have performed excellently (Yao et al., 2020; Qin et al., 2019; Goodfellow et al., 2016; Krizhevsky et al., 2012).

A typical convolutional neural network is a feed-forward deep neural network with stacks of convolutional and pooling layers followed by one or more fully connected layers. In the convolutional layers, features are extracted by convolving the input data with filters (kernels) whose weights and biases are adjusted during training. The value of the unit at position (a,b) in the gth feature map of the fth convolutional layer can be described as:

$$v_{fg}^{ab} = \sigma\left(b_{fg} + \sum_{m}\sum_{x=0}^{X-1}\sum_{y=0}^{Y-1} w_{fgm}^{xy}\, v_{(f-1)m}^{(a+x)(b+y)}\right)$$

where $b_{fg}$ is the feature map’s bias, $m$ indexes the feature maps of layer $f-1$ connected to the current feature map, $w_{fgm}^{xy}$ is the weight at position (x,y) of the kernel applied to the mth feature map, and X and Y are the kernel’s height and width, respectively. σ(‧) is a nonlinear activation function such as the rectified linear unit (ReLU), sigmoid, or tanh. A pooling layer is used to reduce the sensitivity of the feature maps to minor changes in the input data. This is achieved by summarizing each spatial region with an aggregate of neighboring outputs. Although earlier studies used average pooling, max pooling has recently become very popular. Finally, the fully connected layers classify the input signal based on the features extracted by the previous layers.
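As an illustration of these two operations, the sketch below computes one output feature map from the feature maps of the previous layer: the value at position (a,b) is the activation σ applied to the bias plus the kernel-weighted sum over the X by Y neighborhood of every input map, and the result is then reduced by non-overlapping max pooling. It is a plain-Python sketch under our own assumptions (stride 1, no padding, ReLU as σ, and an unflipped kernel as in most CNN libraries); the function names are hypothetical.

```python
def relu(z):
    return max(0.0, z)

def conv2d_feature_map(inputs, kernels, bias, activation=relu):
    """Compute one output feature map from the feature maps of the previous layer.

    inputs:  list of M input feature maps, each an H x W list of lists
    kernels: list of M kernels (one per input map), each an X x Y list of lists
    bias:    scalar bias of this output feature map
    'Valid' convolution with stride 1; the kernel is not flipped
    (cross-correlation), as is usual in CNN libraries.
    """
    X, Y = len(kernels[0]), len(kernels[0][0])
    H, W = len(inputs[0]), len(inputs[0][0])
    out = []
    for a in range(H - X + 1):
        row = []
        for b in range(W - Y + 1):
            total = bias
            for fmap, w in zip(inputs, kernels):      # sum over input feature maps
                for x in range(X):
                    for y in range(Y):
                        total += w[x][y] * fmap[a + x][b + y]
            row.append(activation(total))
        out.append(row)
    return out

def max_pool(fmap, size=2):
    """Non-overlapping max pooling with a size x size window (partial windows dropped)."""
    return [
        [max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
         for j in range(0, len(fmap[0]) - size + 1, size)]
        for i in range(0, len(fmap) - size + 1, size)
    ]

image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
averaging_kernel = [[0.25, 0.25], [0.25, 0.25]]
feature_map = conv2d_feature_map([image], [averaging_kernel], bias=0.0)
print(feature_map)
print(max_pool(feature_map))
```

Because the pooled value keeps only the largest response in each region, a small shift of the input pattern within that region leaves the output unchanged, which is the reduced sensitivity described above.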

4.3.2: Recurrent neural network (RNN)

RNN is a deep learning algorithm that feeds its hidden state back as an input at the next time step, giving it an internal temporal memory. This memory enables RNNs to process dynamic input sequences and has allowed them to outperform many other machine learning algorithms in sequence prediction tasks in applications such as speech recognition and computer vision. A popular example of an RNN is long short-term memory (LSTM). LSTM has outperformed standard RNNs because its gated memory cells allow errors to be backpropagated through many time steps, largely avoiding the vanishing and exploding error problems. This enables LSTM to learn dependencies spanning several thousand previous time steps (Schmidhuber, 2015).
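The gating mechanism can be sketched for a single LSTM unit with scalar input and state. The sketch follows the commonly used formulation with input, forget and output gates; the parameter names and the constant example weights are hypothetical and chosen only to make the code run. The key line is the additive cell update `c = f * c_prev + i * g`, which is what lets error signals pass through many time steps, and the returned hidden state `h` is the value fed back at the next step.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM (scalar input, hidden state and cell state).

    p is a dict of hypothetical weights: for each gate in ('i', 'f', 'o', 'g')
    it holds an input weight p['w' + gate], a recurrent weight p['u' + gate]
    and a bias p['b' + gate].
    """
    def pre(gate):
        return p['w' + gate] * x + p['u' + gate] * h_prev + p['b' + gate]

    i = sigmoid(pre('i'))      # input gate: how much new information to write
    f = sigmoid(pre('f'))      # forget gate: how much of the old cell state to keep
    o = sigmoid(pre('o'))      # output gate: how much of the cell state to expose
    g = math.tanh(pre('g'))    # candidate cell value
    c = f * c_prev + i * g     # additive cell update
    h = o * math.tanh(c)       # new hidden state, fed back at the next time step
    return h, c

def run_lstm(sequence, p):
    """Feed a sequence through the LSTM unit and return every hidden state."""
    h, c = 0.0, 0.0
    states = []
    for x in sequence:
        h, c = lstm_step(x, h, c, p)
        states.append(h)
    return states

params = {k: 0.5 for k in ('wi', 'ui', 'bi', 'wf', 'uf', 'bf',
                           'wo', 'uo', 'bo', 'wg', 'ug', 'bg')}
print(run_lstm([0.1, 0.4, -0.3, 0.8], params))
```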

4.4: Glove-based gesture classification using deep learning

In this section, we review the applications that have used deep learning in glove-based gesture classification. Notably, a simple CNN was used to classify dynamic sign language gestures from data obtained with an IMU data glove (Fang et al., 2019). The CNN consisted of a convolutional layer, a batch normalization layer, and a fully connected layer; the authors omitted a pooling layer because they considered it redundant in this architecture. The performance of the algorithm was compared with that of an LSTM method and a PCA-SVM method. The CNN had the highest accuracy at 99.6%, while the LSTM and PCA-SVM algorithms had accuracies of 82.0% and 80.8%, respectively.
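For readers unfamiliar with batch normalization, the sketch below shows its training-time computation: each feature is normalized with the mean and variance of the current mini-batch and then scaled and shifted by learnable parameters (here `gamma` and `beta`). This is the standard formulation, not code from Fang et al. (2019); the function name and the `eps` value are our assumptions.

```python
import math

def batch_norm(batch, gamma, beta, eps=1e-5):
    """Training-mode batch normalization.

    batch:       list of N samples, each a list of D features
    gamma, beta: lists of D learnable scale and shift parameters
    Each feature is normalized with statistics computed over the N samples.
    """
    n, d = len(batch), len(batch[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        column = [sample[j] for sample in batch]
        mu = sum(column) / n
        var = sum((v - mu) ** 2 for v in column) / n   # biased (population) variance
        for i, v in enumerate(column):
            out[i][j] = gamma[j] * (v - mu) / math.sqrt(var + eps) + beta[j]
    return out

batch = [[1.0, 100.0], [2.0, 200.0], [3.0, 300.0]]
normalized = batch_norm(batch, gamma=[1.0, 1.0], beta=[0.0, 0.0])
print(normalized)
```

After normalization, both features have roughly zero mean and unit variance despite their very different scales. At inference time, frameworks typically replace the batch statistics with running averages collected during training, so that a single sample can be normalized.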

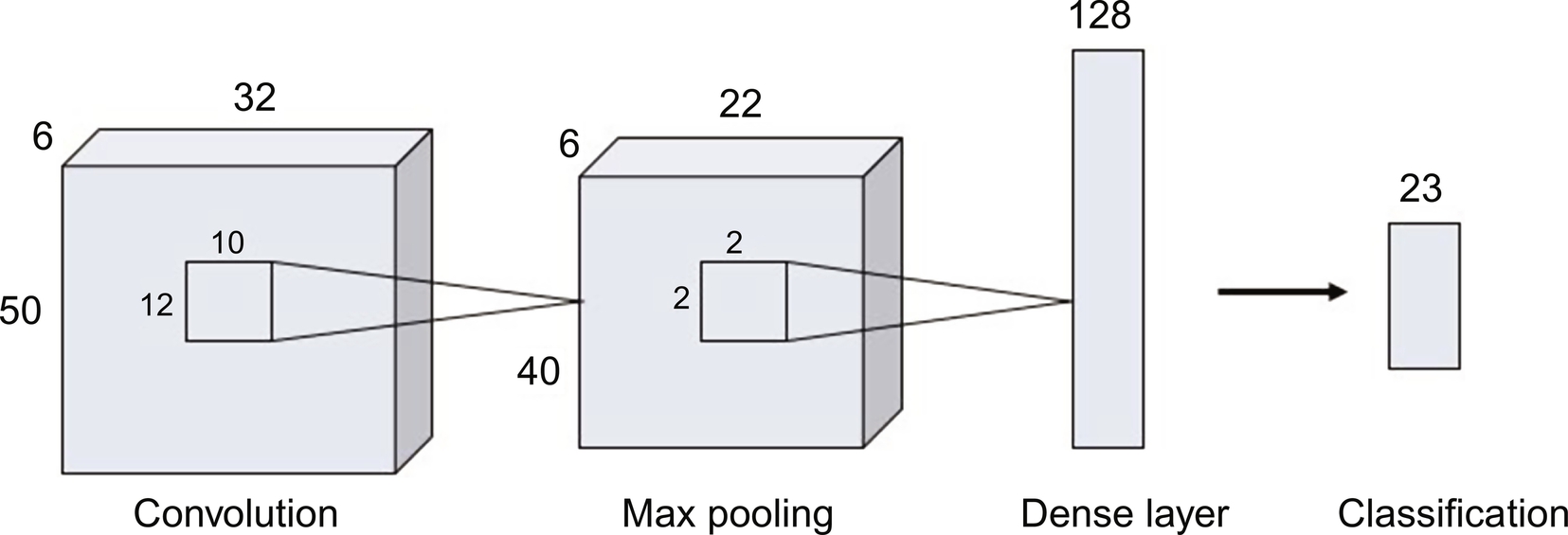

Furthermore, the light CNN architecture shown in Fig. 8 was implemented for real-time gesture recognition with a custom IMU data glove (Diliberti et al., 2019). The CNN used a configuration very similar to that of AlexNet. However, the authors performed a series of experiments to determine the optimal implementation of the network for their application, reducing the depth and width of the network while meeting their goal of at least 98% accuracy. The depth was reduced by removing layers from the network, and the width by reducing the number of neurons in the layers. The depth percentage was measured as a percentage of the original number of layers, while the width factor, WF, was expressed as:

$$WF = \frac{Neu_{old}}{Neu_{new}}$$

where $Neu_{old}$ and $Neu_{new}$ are the original and new numbers of neurons, respectively, in the layers of the network. The optimal architecture, with an accuracy of 98.03%, was found to have a depth percentage of 20% and a width reduction factor of 4.
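The reduction rules can be sketched in a few lines of Python. Here we take WF to be Neu_old / Neu_new, the reading consistent with a width reduction factor of 4 shrinking each layer to a quarter of its neurons. Which layers are removed when the depth is reduced is not specified in the text, so the rule of keeping the first layers, the function names and the 10-layer, 256-neuron example network are purely hypothetical.

```python
def width_factor(neu_old, neu_new):
    """WF = Neu_old / Neu_new: the factor by which a layer's neuron count is reduced."""
    return neu_old / neu_new

def reduce_network(widths, depth_percentage, wf):
    """Shrink a network described by its per-layer widths.

    depth_percentage: share of the original layers to keep (0-100); this sketch
        hypothetically keeps the first layers, and always at least one.
    wf: width factor; each kept layer's width is divided by wf.
    """
    n_keep = max(1, round(len(widths) * depth_percentage / 100))
    return [max(1, int(w // wf)) for w in widths[:n_keep]]

# Hypothetical 10-layer network with 256 neurons per layer
original = [256] * 10
print(reduce_network(original, depth_percentage=20, wf=4))  # [64, 64]
print(width_factor(256, 64))                                # 4.0
```

In a search such as the one described above, each candidate pair of depth percentage and WF would be trained and evaluated, and the smallest network still reaching the accuracy target would be kept.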

However, CNNs were outperformed by other deep learning algorithms in some studies. In particular, an LSTM algorithm performed better than a CNN in classifying dynamic gestures acquired with a data glove (Simão et al., 2019). The LSTM classification accuracy was 96.5% for seen users and 89.1% for unseen users, while the CNN achieved 81.9% and 54.7% for seen and unseen users, respectively. However, when a smaller percentage of the users is used for testing, the CNN performs comparably to the LSTM and even outperforms it in some of these scenarios. This may be because the advantage of the LSTM's memory becomes more apparent as the number of test users increases.

Furthermore, a deep neural network (DNN) was used to classify hand gestures acquired with a passive RFID data glove (Kantareddy et al., 2019). The DNN consisted of three fully connected hidden layers with 64, 128, and 32 neurons, respectively. Its performance was compared with that of a CNN, an SVM, and a random forest classifier (RFC). The CNN consisted of three 1D convolutional layers with 64, 128, and 64 filters, respectively, while the RFC had 10 trees with an average depth of 14.1 and an average of 244.6 nodes. The DNN achieved an accuracy of 99%, while the RFC, CNN, and SVM achieved 98%, 97%, and 86%, respectively. The DNN's advantage over the CNN was attributed to the DNN combining global information from the whole input, whereas the CNN extracted only local information.
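The sketch below shows the forward pass of a fully connected network with the reported hidden-layer sizes of 64, 128 and 32 neurons. The input dimension (12 features), the number of gesture classes (5), the ReLU and softmax activations, the random initialization and all function names are our assumptions for illustration; the weights are untrained, so the output probabilities carry no meaning until the network is fitted to data.

```python
import math
import random

def dense(x, weights, biases):
    """Fully connected layer: y_j = sum_i W[j][i] * x_i + b_j."""
    return [sum(xi * wji for xi, wji in zip(x, w_row)) + b
            for w_row, b in zip(weights, biases)]

def relu(v):
    return [max(0.0, z) for z in v]

def softmax(v):
    m = max(v)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in v]
    total = sum(exps)
    return [e / total for e in exps]

def init_layer(n_in, n_out, rng):
    """Random (untrained) weights scaled by 1/sqrt(n_in); zero biases."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def build_dnn(n_inputs, n_classes, hidden=(64, 128, 32), seed=0):
    rng = random.Random(seed)
    sizes = [n_inputs, *hidden, n_classes]
    return [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]

def predict(layers, x):
    """ReLU on the hidden layers, softmax on the output layer."""
    for weights, biases in layers[:-1]:
        x = relu(dense(x, weights, biases))
    weights, biases = layers[-1]
    return softmax(dense(x, weights, biases))

# Hypothetical: 12 input features from the glove, 5 gesture classes
net = build_dnn(n_inputs=12, n_classes=5)
probs = predict(net, [0.5] * 12)
print(probs)
```

Every output neuron of each layer sees the whole input vector, which is the "global information" contrasted above with the local receptive fields of a convolutional layer.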

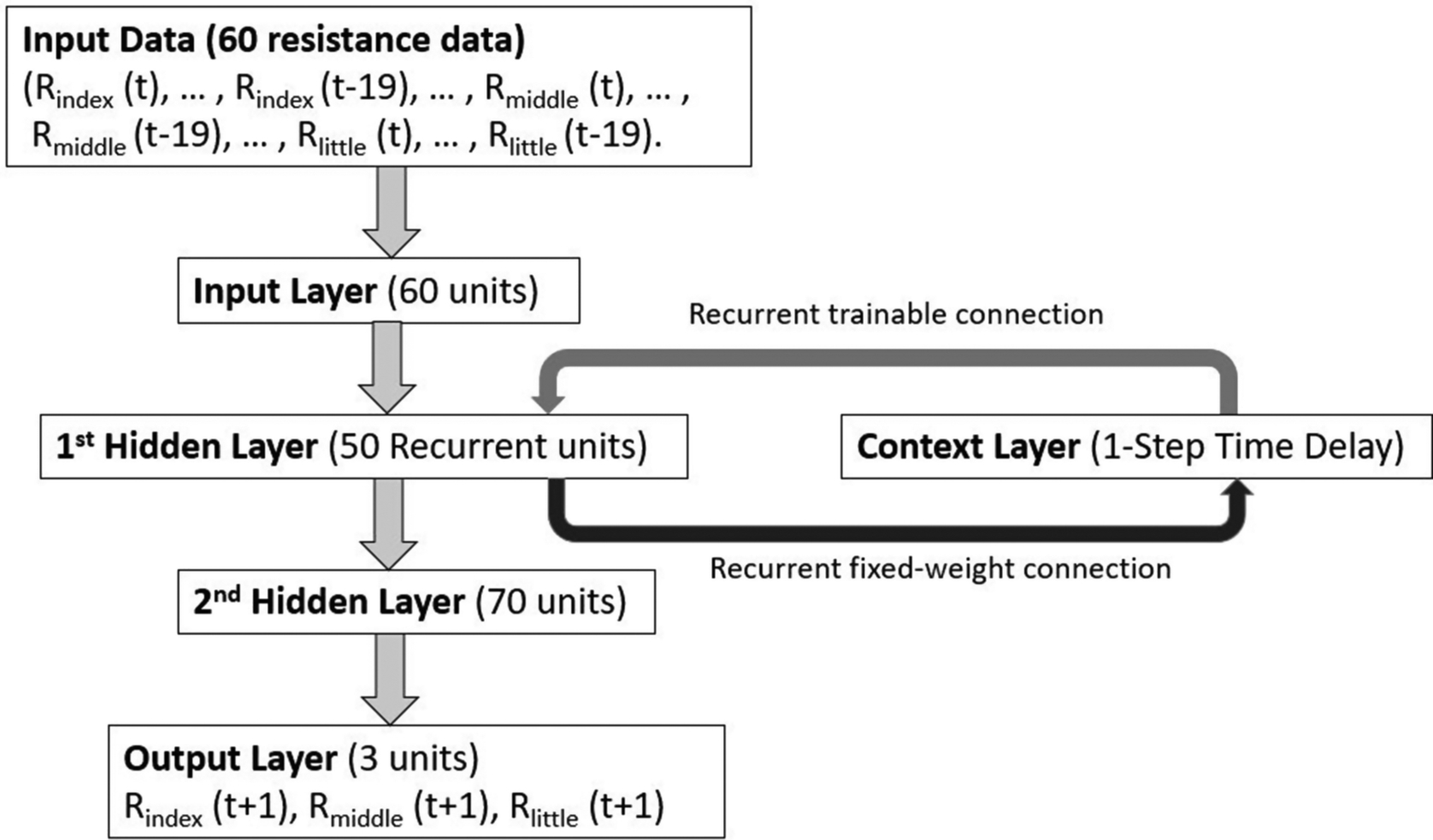

Deep learning has also been used to predict hand gestures. This involves predicting the gesture the user will perform within a specific upcoming time frame, which can improve human-computer interaction by increasing the speed of gesture classification. Notably, an RNN was used to predict hand gestures because of its ability to learn the temporal properties of continuous data (Kanokoda et al., 2019). The performance of the RNN shown in Fig. 9 was compared with that of a time-delay neural network (TDNN) and a multiple linear regression (MLR) algorithm. Although TDNNs are proven algorithms in gesture prediction, they are limited to learning short-range dependencies and can only operate within fixed-size temporal windows (Sak et al., 2014). The results showed that the RNN outperformed both TDNN and MLR, with classification accuracies of 90.8% and 74.0% when predicting the next 100 ms and 300 ms of gestures, respectively.
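Framing gesture prediction as supervised learning requires pairing each window of past sensor samples with the sample a fixed horizon ahead. The sketch below builds such pairs. The 100 Hz sampling rate (under which 100 ms and 300 ms correspond to 10 and 30 samples), the window length and the function names are assumptions for illustration, not details reported by Kanokoda et al. (2019).

```python
def horizon_in_samples(horizon_ms, sampling_rate_hz):
    """Convert a prediction horizon in milliseconds to a number of samples."""
    return round(horizon_ms * sampling_rate_hz / 1000)

def make_prediction_pairs(signal, window, horizon):
    """Pair each window of past samples with the sample `horizon` steps after it.

    signal:  list of sensor readings (one value, or one list of values, per step)
    window:  number of past samples given to the model
    horizon: how many samples after the last input the target lies
    """
    pairs = []
    for end in range(window, len(signal) - horizon + 1):
        past = signal[end - window:end]        # samples end-window .. end-1
        target = signal[end - 1 + horizon]     # `horizon` steps after the last input
        pairs.append((past, target))
    return pairs

# Assumed 100 Hz glove: a 100 ms horizon is 10 samples, 300 ms is 30 samples
short_horizon = horizon_in_samples(100, 100)
long_horizon = horizon_in_samples(300, 100)
pairs = make_prediction_pairs(list(range(10)), window=3, horizon=2)
print(short_horizon, long_horizon, pairs[0], pairs[-1])
```

A longer horizon leaves more time for the gesture to change, which is consistent with the lower accuracies reported for 300 ms than for 100 ms.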

5: Discussion and future trends

In this chapter, we have reviewed the applications of several machine learning algorithms in glove-based gesture classification, and we have shown that machine learning algorithms perform excellently in classifying hand gestures. However, the classification accuracy drops in unseen experiments, in which the validation data set is made up of users who were not included in the training set. Seen experiments represent applications in which the glove system will be used by known users, while unseen experiments represent commercial applications in which a new user can use the glove system without retraining the algorithm. The disparity between the accuracy in seen and unseen experiments is exemplified in Luzanin’s study (Luzanin and Plancak, 2014), where the classification accuracy dropped from 95.19% in a seen experiment to 64.22% in an unseen experiment. This phenomenon can be explained mainly by the inadequate number of users in the training data set. In particular, most studies have fewer than 10 participants in both the training and validation data sets. This magnifies the effect of differences in hand dimensions on the classification accuracy of the algorithm, because the training data sets do not provide a representative sample of hand dimensions.
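The two protocols differ only in how recordings are assigned to the training and validation sets. The sketch below contrasts a random split, in which the same users appear on both sides (seen), with a leave-one-user-out split, in which every validation user is absent from training (unseen). The function names, the 20% validation fraction and the toy data set of four users are illustrative assumptions.

```python
import random

def seen_split(samples, test_fraction=0.2, seed=0):
    """Random split: the same users can appear in both training and validation sets."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def leave_one_user_out(samples):
    """Yield (held_out_user, train, test) folds; each user is tested once and never trained on."""
    users = sorted({s["user"] for s in samples})
    for user in users:
        train = [s for s in samples if s["user"] != user]
        test = [s for s in samples if s["user"] == user]
        yield user, train, test

# Toy data set: 4 hypothetical users x 5 recordings each
samples = [{"user": u, "gesture": g} for u in "ABCD" for g in range(5)]
train, test = seen_split(samples)
for user, train_fold, test_fold in leave_one_user_out(samples):
    # No user in the validation fold was seen during training
    assert not {s["user"] for s in train_fold} & {s["user"] for s in test_fold}
```

With only four users, each unseen fold trains on three hands, which illustrates why a small participant pool gives the model little variation in hand dimensions to generalize from.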

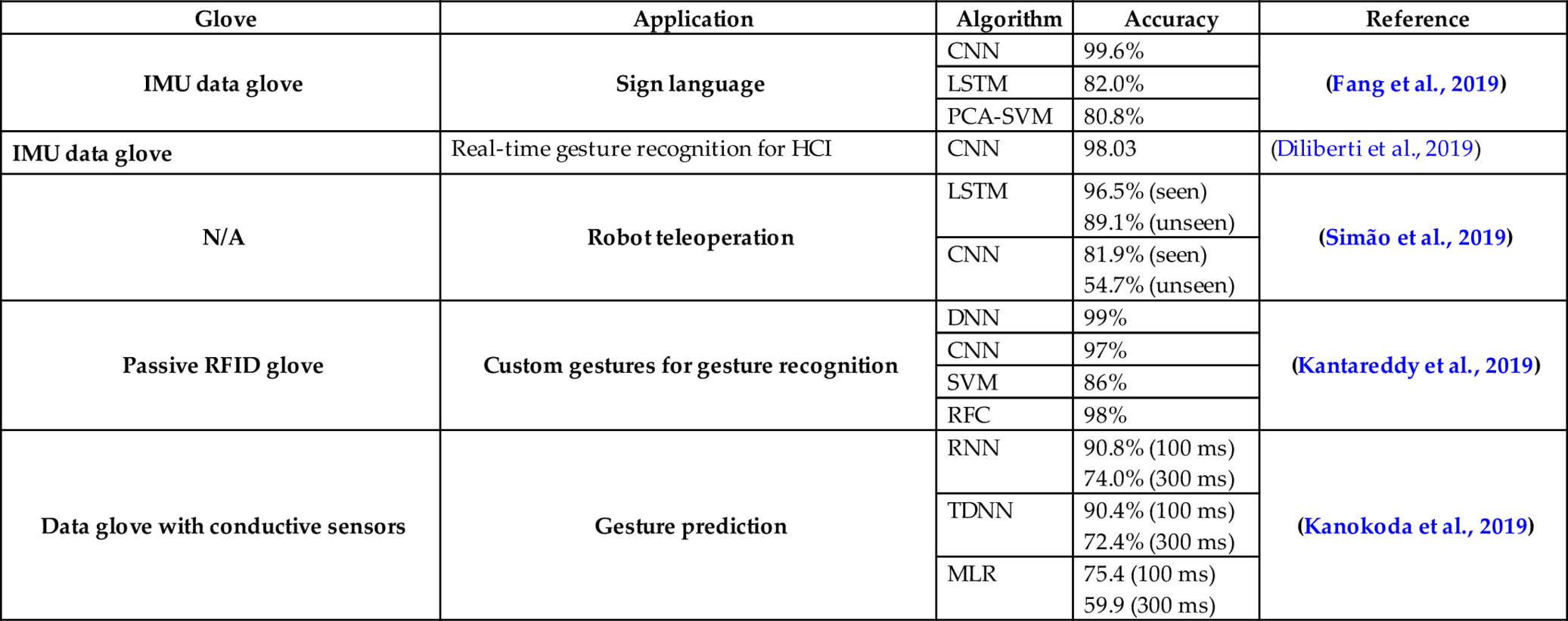

In addition, we analyzed the performance of deep learning algorithms on gesture classification. Although the number of studies applying deep learning to glove-based gesture classification is small, we observed that deep learning algorithms performed better than classical ML algorithms. In particular, CNN outperformed PCA-SVM by 18.8 percentage points in classifying ASL gestures (Fang et al., 2019), and a DNN algorithm outperformed an SVM algorithm by 13 percentage points (Kantareddy et al., 2019). These results show significant increases in classification accuracy by deep learning algorithms, which can increase the commercial viability of data gloves in gesture classification applications. However, the limited number of studies makes it impossible to select the best-performing deep learning algorithm, although we observe that CNN and LSTM are the most prominent among the studies reviewed, as illustrated in Table 2.

Table 2

| Glove | Application | Algorithm | Accuracy | Reference |
|---|---|---|---|---|
| IMU data glove | Sign language | CNN | 99.6% | (Fang et al., 2019) |
| | | LSTM | 82.0% | |
| | | PCA-SVM | 80.8% | |
| IMU data glove | Real-time gesture recognition for HCI | CNN | 98.03% | (Diliberti et al., 2019) |
| N/A | Robot teleoperation | LSTM | 96.5% (seen), 89.1% (unseen) | (Simão et al., 2019) |
| | | CNN | 81.9% (seen), 54.7% (unseen) | |
| Passive RFID glove | Custom gestures for gesture recognition | DNN | 99% | (Kantareddy et al., 2019) |
| | | CNN | 97% | |
| | | SVM | 86% | |
| | | RFC | 98% | |
| Data glove with conductive sensors | Gesture prediction | RNN | 90.8% (100 ms), 74.0% (300 ms) | (Kanokoda et al., 2019) |
| | | TDNN | 90.4% (100 ms), 72.4% (300 ms) | |
| | | MLR | 75.4% (100 ms), 59.9% (300 ms) | |

A limitation to the use of deep learning algorithms in glove-based gesture classification is the lack of public data sets on which to evaluate the algorithms. This restricts researchers to designing their own experiments with a small number of participants. Public data sets would greatly increase contributions to the field, as researchers could concentrate on developing novel deep learning algorithms to classify the data accurately. Moreover, public data sets enable several deep learning algorithms to be compared on the same data, thereby helping to identify the best-performing algorithms.

Another potential research area is the application of deep learning to more hand gesture classification scenarios. Because only a small number of studies have used deep learning, none of the studies reviewed applies it to scenarios such as grasp classification and other custom taxonomies. Research in these scenarios will reveal the performance and limitations of deep learning algorithms there.

Furthermore, the limited number of studies indicates that this research area is still new and could be very fertile. Notably, hybrid deep learning models such as CNN-RNN and CNN-LSTM have shown excellent performance in the classification of surface electromyography (sEMG) signals (Hu et al., 2018; Wu et al., 2018). In addition, popular deep learning algorithms such as the Deep Boltzmann Machine and generative adversarial networks (GANs) have shown very high classification accuracy in camera-based gesture recognition (Rastgoo et al., 2018; Zhang and Shi, 2017). These algorithms have not been implemented in glove-based gesture classification and represent a research gap with the potential to significantly increase classification accuracy in glove-based applications.

Therefore, we propose that researchers use novel deep learning algorithms such as CNN-LSTM in future glove-based gesture classification studies. We recommend CNN-LSTM because our review has shown that CNNs and LSTMs provide the highest classification accuracy in glove-based gesture classification studies, and a hybrid of the two should combine robust feature extraction with better sequence prediction, especially in the classification of dynamic gestures in activity classification scenarios. Furthermore, we recommend that these studies include at least 10 participants to provide a larger data set for the algorithm.
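To indicate what such a hybrid involves, the sketch below chains a one-dimensional convolution (local feature extraction over short windows of glove samples) with a single-layer LSTM (modeling the resulting feature sequence) and a softmax classifier on the final hidden state. It is a minimal, untrained forward pass under our own assumptions: the layer sizes, kernel length, omitted biases, random initialization and helper names are hypothetical, and a practical implementation would use a deep learning framework and train the weights.

```python
import math
import random

def rand_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def conv1d(seq, kernels, k):
    """Valid 1D convolution over time + ReLU.

    seq: T x C samples; kernels: F filters, each a flat list of k*C weights.
    """
    out = []
    for t in range(len(seq) - k + 1):
        patch = [v for step in seq[t:t + k] for v in step]   # flatten k time steps
        out.append([max(0.0, z) for z in matvec(kernels, patch)])
    return out

def lstm(seq, W, U, hidden):
    """Single-layer LSTM; W and U stack the i, f, o, g gate weights (4*hidden rows)."""
    h, c = [0.0] * hidden, [0.0] * hidden
    for x in seq:
        z = [a + b for a, b in zip(matvec(W, x), matvec(U, h))]
        i = [sigmoid(v) for v in z[0:hidden]]
        f = [sigmoid(v) for v in z[hidden:2 * hidden]]
        o = [sigmoid(v) for v in z[2 * hidden:3 * hidden]]
        g = [math.tanh(v) for v in z[3 * hidden:]]
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
    return h

def cnn_lstm_predict(seq, n_classes, n_filters=8, k=3, hidden=16, seed=0):
    rng = random.Random(seed)
    channels = len(seq[0])
    kernels = rand_matrix(n_filters, k * channels, rng)
    W = rand_matrix(4 * hidden, n_filters, rng)
    U = rand_matrix(4 * hidden, hidden, rng)
    V = rand_matrix(n_classes, hidden, rng)
    features = conv1d(seq, kernels, k)      # CNN stage: local features per window
    h_last = lstm(features, W, U, hidden)   # LSTM stage: temporal dependencies
    logits = matvec(V, h_last)              # classify from the last hidden state
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical input: 20 time steps from a 5-channel glove, 4 gesture classes
rng = random.Random(1)
sequence = [[rng.random() for _ in range(5)] for _ in range(20)]
probs = cnn_lstm_predict(sequence, n_classes=4)
print(probs)
```

The division of labor mirrors the rationale above: the convolution summarizes each short window, and the LSTM carries information across the whole gesture.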

6: Conclusion

In this study, we have provided an extensive review of classical machine learning and deep learning algorithms implemented in glove-based gesture classification. We have also shown that deep learning algorithms perform better than classical machine learning algorithms. Moreover, we have identified the limitations restricting the application of deep learning algorithms and proposed solutions. Furthermore, we have highlighted potential research areas that may increase the commercial viability of glove-based gesture classification. Finally, we recommend CNN-LSTM for future glove-based classification studies because of its accurate feature extraction and sequence prediction capabilities.