1.5. WHAT TO RESEARCH

Few discussions of research funding or of research program planning and execution fail to include comments about what really ought to be researched. Management hierarchies in government agencies and industry constantly talk about the need to focus research programs better so that research will meet agency and organizational needs. Users in production departments, operational personnel in agencies, and consumers often complain that the research program lacks relevance and that research results are not timely.

Let us take the case of a research laboratory where sponsors, though quite satisfied with the research output of the laboratory, nonetheless provided these kinds of comments about the research program:

Research takes too long.

Our need to solve the alternative fuel problem is now, not three years from now. We just can't wait for years for researchers to study the problem.

We need answers more quickly than researchers provide them.

The research program is too esoteric. We need solutions that are practical.

Researchers study the problem to death to find a 100 percent solution. What is wrong with a quicker solution that is not quite 100 percent?

This problem seems to go on forever. Five years ago I worked at the Department of the Interior. We thoroughly studied the problem of land disposal of hazardous toxic waste. I thought we solved the problem or at least put the issues to bed. When asked whose bed and what the results were, the sponsor did not know.

We always hear about your previous accomplishments. How about the future? What can we expect from you next year and the year after? Be specific.

First and foremost, R&D managers need to understand the sponsor's perspective and then develop a strategy for effective communication. Sponsors of this kind typically fund applied research, whose focus is rather "specific," "commercial," and "product-oriented." Questions such as those quoted above are therefore to some degree understandable, and the R&D manager or the principal investigator (PI) need not respond defensively. For basic research, however, the issues are likely to be of a different nature.

How, then, should one respond? One could take each question and provide extensive documentation to refute the sponsor's assertion. For example, one could show that studying and solving the alternative fuel problem, which was created through decades of neglect, would take time: solutions, especially cost-effective and environmentally safe ones, may well take three years or longer to find. One could also ignore the sponsor's assertions and go on with the research, on the grounds that the sponsor is unlikely to find another researcher who could do the work any faster.

Another approach available to an R&D manager is a two-part strategy:

First, empathize with the sponsor's needs and respond to them genuinely. In practice, this means providing interim solutions for critical problems to the degree possible, while explaining to the sponsor the limitations and uncertainties involved.

Second, educate the sponsor about the nature of the research enterprise. Focus on why it is in his or her best interest to follow a systematic, though time-consuming, process of research and development so that the solutions developed are scientifically valid, are appropriate to the problem at hand, and offer a genuine advantage over the existing technology. This could involve undertaking a mix of research activities ranging from basic research that might take three to five years to applied research that might yield some solutions within one to two years.

What to research is also affected by what our adversaries or competitors are doing. Some governmental agencies (for example, the Department of Defense) and some industries (for example, high technology) often are concerned about being surprised by a technological development by an adversary or competitor. This is simply because the payoff or effectiveness of the defense establishment of a nation, or profitability of an industry, depends on its own capabilities and also on the capabilities of its adversaries or competitors. New technological developments of an adversary or a competitor can have a profound effect on the security of a nation and on the competitive success of an enterprise.

Other questions related to what to research often include the following:

How should user needs be considered?

Who are the real users?

How should a comprehensive and responsive research program be formulated?

How should the tradeoffs between long-range research needs and short-range or immediate requirements be made?

Many approaches to formulating research programs have been proposed. For example, Merten and Ryu (1983, pp. 24–25) have proposed dividing an industrial laboratory's research activities into five categories:

Background research

Exploratory research

Development of existing commercial activities

Technical services

Schmitt (1985) has discussed generic versus targeted research and market-driven versus technology-driven research. Shanklin and Ryans (1984) contend that high-technology companies can make a successful transition from being innovation-driven to being market-driven by linking R&D and marketing efforts.

A considerable literature is available on R&D project selection. The appropriate approach will clearly vary with the needs of the organization.

Two criteria seem most important in deciding what to research: (1) What will advance the science? (2) What do the customers of our research need? Once we have answered those questions, we need to ask: What are the prospects for a solution?

Other considerations, however, may override these criteria, particularly in solving very specific problems. In oil exploration, for example, safety may be a top research priority. Such problems may have to be solved regardless of cost because the organization cannot responsibly ignore them. Research needed to protect human health and the environment from improper disposal of hazardous waste falls into the same category.

One of the most difficult problems is deciding when to abandon a problem that does not seem to be solvable. There is always the hope that a few more months of work will solve it. Yet one usually has some sense of what is likely to happen. If one researcher is sure that the problem can be solved and no one else is so convinced, it is necessary to determine whether that researcher is a "genius" or a "neurotic." People do become attached to hopeless causes, and when that happens they may exhibit such symptoms as extreme tension and an inability to be self-critical. Managers must be sensitive to clues that optimism about a project is unjustified. Because it is important to stop such a project without destroying the scientist's motivation, some suggested approaches follow.

A manager may agree to give the scientist short deadlines and establish mutually agreed-upon milestones to ascertain whether tangible progress toward the goal is being made. If the project indeed is hopeless, lack of project progress during the milestone review would reveal the problem. In most cases, the scientist would, on his or her own initiative, agree to drop the project.

Should the scientist still request to continue the project, the manager should consider allowing the scientist to spend some time (say 20 percent) on the project and again establish agreed-upon milestones to review progress. If results again are not very promising and the scientist still perseveres and wants to continue, two options are possible. One, the manager may direct that the project be stopped. The other possibility is to still allow the scientist to spend some time on the project but strip away all support, such as for laboratory equipment, computer expenses, and technicians. In time the project will fade away.

The manager, however, should not be too surprised when researchers pursuing theories or projects that seemed unpromising or unproductive in their early stages end up producing valuable results. It is good for all concerned, especially the manager, to keep in mind that predictions about the success or failure of research projects are notoriously unreliable. Two examples come to mind, one from fundamental research and the other from applied research.

Astrophysicist S. Chandrasekhar was working on the theory of black holes and white dwarfs. He sought to calculate what would happen in the collapse of larger stars when they burn out. He theorized that if the mass of a star was more than 1.4 times that of the sun, the dense matter resulting from the collapse could not withstand the pressure and thus would keep on shrinking. He wrote that such a star "cannot pass into the white dwarf stage." His paper on this theory was rejected by the Astrophysical Journal, of which he was later to become a well-respected editor.

As reported in the New York Times (October 20, 1983), Sir Arthur Eddington, rejecting Dr. Chandrasekhar's theory, stated that "there should be a law of nature to prevent the star from behaving in this absurd way." Other scientists urged Chandrasekhar to drop his research because it did not seem very promising. Dr. Chandrasekhar persisted and in 1983 won the Nobel Prize for his discovery. His research led to the recognition of a state even denser than that of a white dwarf: the neutron star. The so-called Chandrasekhar limit has become one of the foundations of modern astrophysics.

As another example, a group of researchers developing a complex environmental impact analysis system and associated relational databases chose to pursue the project using a higher-level computer language instead of the traditional FORTRAN. They also wanted to experiment with an operating system developed by Bell Laboratories. Management attitudes ranged from enthusiastic support through tepid support and opposition to outright hostility. The less technically knowledgeable managers were opposed, and the further removed they were from the research group, the more opposed they were to continuing the project. Thanks to the researchers' creativity and to a degree of management support and acquiescence, the project was allowed to continue in parallel with other activities. On completion, it became one of the most successful and most widely used systems in the agency. It received the agency's highest R&D achievement award and became an archetype for future systems development research.

No single approach to categorizing or organizing research and to identifying the research needs of an agency or an industrial enterprise is likely to satisfy the complex and, at times, unique needs of an organization. We therefore propose a two-tier model for identifying "what to research," intended to provide a flexible, systematic framework for integrating requirements that at times seem to conflict with each other. The model consists of an economic index model and a portfolio model. It may apply more readily to mission-oriented research than to scientific institutional or academic research. The two tiers are discussed below.

1.5.1. Economic Index Model

Under this model, research needs are those whose fulfillment would improve the operating or manufacturing efficiency of the organization or enterprise. The emphasis is on building a "better mousetrap" that reduces the cost of doing things. Inputs for identifying such needs come from users, operating units, and scientists, as well as from examining competitors' products and operations.

1.5.2. Portfolio Model

Under this model, normative, comparative, and forecasted research needs are considered. Normative needs are those of the user (a user being the primary or follow-on beneficiary of the research product). Comparative needs relate to research needs derived from reviewing comparable organizations, competitive product lines, and related enterprises. Forecasted research needs focus on trend analysis in terms of consumer or organization needs derived from new requirements, changed consumer behavior, new technological developments, new regulations (e.g., environmental, health, and safety regulations), and new operational requirements. Often the effectiveness of a commercial enterprise or of a national defense effort depends not only on how well the organization itself does but also on how well the organization does in comparison with its competitor or adversary. Consequently, it is necessary to have effective intelligence concerning the portfolio of a competitor in order to focus properly on comparative and forecasted research needs.

Once research needs have been defined using these two models, some of the resulting projects will essentially modify, adapt, or adopt existing scientific knowledge and will correspond to applied research and development; others will fill technology gaps and will correspond to basic or fundamental research.

Inevitably, there are more projects to be researched than there are funds available. This is a normal and healthy situation. For deciding which projects to select among competing requirements, we suggest a model derived from the work of Keeney and Raiffa (1993), which takes into account multiple objectives, preferences, and value tradeoffs. The main problem in using such an approach is the tendency of many technical users to quantify items that do not lend themselves to quantification.

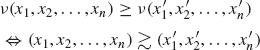

In developing a policy (at higher levels) or in making specific project choices among competing demands (at lower levels), the decision-maker can assign utility values to the consequences associated with each path of the tree instead of quantifying each payoff explicitly. The payoffs are captured conceptually by associating with each path of the tree a consequence that completely describes the implications of that path. It must be emphasized that not all payoffs are in common units, and many are incommensurate. The choice between two alternatives can be described mathematically as follows (Keeney and Raiffa, 1993, p. 6):

$$a' \succ a'' \iff \sum_{j} P(c_j \mid a')\,U(c_j) > \sum_{j} P(c_j \mid a'')\,U(c_j)$$

where a′ and a″ represent choices, c_j the possible consequences, P probabilities, and U utilities; the symbol ≻ reads "is preferred to," and ⇔ reads "if and only if."
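The expected-utility comparison of two choices can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: each choice is represented as a hypothetical list of (probability, utility) pairs, one per possible consequence, and the utilities are assumed to lie on a 0-to-1 scale. The function names `expected_utility` and `prefer` are invented for the example.

```python
# Minimal sketch: compare two choices by expected utility.
# Each choice is a hypothetical list of (probability, utility) pairs, one pair
# per possible consequence; utilities are on an assumed 0-to-1 scale.

def expected_utility(lottery):
    """Probability-weighted sum of the utilities of one choice's consequences."""
    if abs(sum(p for p, _ in lottery) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * u for p, u in lottery)


def prefer(a1, a2, tol=1e-12):
    """Return 'a1' or 'a2' (whichever has higher expected utility) or 'indifferent'."""
    eu1, eu2 = expected_utility(a1), expected_utility(a2)
    if abs(eu1 - eu2) <= tol:
        return "indifferent"
    return "a1" if eu1 > eu2 else "a2"


# Hypothetical choices: a1 is a risky project, a2 a safer one.
a1 = [(0.3, 1.0), (0.7, 0.2)]  # expected utility 0.44
a2 = [(0.9, 0.5), (0.1, 0.1)]  # expected utility 0.46
print(prefer(a1, a2))  # → a2
```

Note that the risky project has the better best case, yet the safer one wins on expected utility; the numbers are invented purely to show how the comparison works.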

Utility numbers are assigned to consequences even though some aspects of a choice are not in common units or are subjective in nature. The choice then becomes a multiattribute value problem, which can be handled informally or explicitly by formalizing the preference structure mathematically. Formally (Keeney and Raiffa, 1993, p. 68):

$$v(x') \ge v(x'') \iff x' \succsim x''$$

where v is the value function, which may serve as the objective of the decision-maker; x′ and x″ are points in the consequence space; and the symbol ≿ reads "preferred to or indifferent to."
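One common way to make a value function v operational is an additive form, v(x) = Σ w_i v_i(x_i). The sketch below assumes that form, which is generally justified only when the attributes are mutually preferentially independent. The attributes, weights, cost scale, and project data are all hypothetical. In keeping with the caution about over-quantification, the judgment-based attributes are simply scored on [0, 1] rather than derived from measurements.

```python
# Minimal sketch of an additive multiattribute value function (an assumed form).
# Hypothetical attributes and weights; the weights sum to 1.
WEIGHTS = {"scientific_advance": 0.4, "user_need": 0.4, "cost": 0.2}


def v_cost(cost_k):
    """Map cost in thousands of dollars linearly onto [0, 1]; cheaper is better.
    The 0-1000 range is an assumed scale."""
    return max(0.0, min(1.0, 1.0 - cost_k / 1000.0))


def value(project):
    """Additive value v(x) = sum_i w_i * v_i(x_i) of one project.
    Judgment-based attributes are assumed to be pre-scored on [0, 1]."""
    return (WEIGHTS["scientific_advance"] * project["scientific_advance"]
            + WEIGHTS["user_need"] * project["user_need"]
            + WEIGHTS["cost"] * v_cost(project["cost_k"]))


def weakly_preferred(x1, x2):
    """x1 is preferred or indifferent to x2 iff v(x1) >= v(x2)."""
    return value(x1) >= value(x2)


# Hypothetical candidate projects.
projects = {
    "P1": {"scientific_advance": 0.9, "user_need": 0.3, "cost_k": 600},
    "P2": {"scientific_advance": 0.4, "user_need": 0.8, "cost_k": 200},
}
ranking = sorted(projects, key=lambda name: value(projects[name]), reverse=True)
print(ranking)  # → ['P2', 'P1']
```

Here P1 scores 0.56 and P2 scores 0.64, so P2 ranks first. Changing the weights, which encode the decision-maker's value tradeoffs, can reverse that order.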

After the decision-maker structures the problem and assigns probabilities and utilities, an optimal strategy that maximizes expected utility can be determined. When a comparison involves unquantifiable elements, or elements in different units, a value tradeoff approach can be used either informally, that is, based on the decision-maker's judgment, or explicitly, using mathematical formulation.
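Determining the strategy that maximizes expected utility amounts to "rolling back" the decision tree. Each chance node takes the probability-weighted average of its branches, and each decision node takes its best branch. The Python sketch below illustrates this under stated assumptions: the node classes, the function names, and the example tree and its numbers are hypothetical, and utilities are assumed to have already been assigned to the end of each path.

```python
from dataclasses import dataclass
from typing import List, Tuple, Union


@dataclass
class Leaf:
    utility: float  # utility of the consequence at the end of a path


@dataclass
class Chance:
    branches: List[Tuple[float, "Node"]]  # (probability, subtree) pairs


@dataclass
class Decision:
    options: List[Tuple[str, "Node"]]  # (label, subtree) pairs


Node = Union[Leaf, Chance, Decision]


def rollback(node: Node) -> float:
    """Expected utility of a subtree, assuming optimal choices at later decisions."""
    if isinstance(node, Leaf):
        return node.utility
    if isinstance(node, Chance):
        # Chance node: probability-weighted average of the branch values.
        return sum(p * rollback(sub) for p, sub in node.branches)
    # Decision node: the decision-maker takes the best option.
    return max(rollback(sub) for _, sub in node.options)


def best_option(decision: Decision) -> Tuple[str, float]:
    """Label and expected utility of the best option at a decision node."""
    label, sub = max(decision.options, key=lambda opt: rollback(opt[1]))
    return label, rollback(sub)


# Hypothetical tree: fund a basic project (with a follow-on decision if it
# succeeds) or an applied project.
tree = Decision([
    ("fund basic", Chance([
        (0.4, Decision([("scale up", Leaf(0.9)), ("license", Leaf(0.7))])),
        (0.6, Leaf(0.1)),
    ])),
    ("fund applied", Chance([(0.8, Leaf(0.5)), (0.2, Leaf(0.2))])),
])
print(best_option(tree))
```

In this invented tree, the basic project rolls back to about 0.42 (0.4 × 0.9 + 0.6 × 0.1) and the applied project to about 0.44, so the applied project is chosen. The nested decision shows that later choices are resolved before earlier ones.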

After the decision-maker has completed the individual analysis and ranked the policy alternatives or projects, a group analysis can further prioritize them. A modified Delphi technique (Jain et al., 1980) is suggested for this purpose.
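The steps of the modified Delphi technique are not detailed here. As a hedged illustration only, the sketch below summarizes one anonymous ranking round the way many Delphi variants do: by each project's median rank, with the interquartile range (IQR) as a measure of panel disagreement. Projects whose IQR exceeds an assumed threshold are flagged for discussion in the next round. The panel, the projects, the `spread_threshold` value, and the name `delphi_round` are hypothetical, and the code is not the procedure of Jain et al. It assumes every panelist ranks every project, and it requires Python 3.8 or later for `statistics.quantiles`.

```python
from statistics import median, quantiles


def delphi_round(rankings, spread_threshold=1.0):
    """Summarize one round of anonymous panel rankings (hypothetical helper).

    rankings: dict mapping panelist -> list of project names, best first.
    Returns (projects ordered by median rank,
             projects whose IQR exceeds spread_threshold,
             dict project -> (median rank, IQR)).
    """
    ranks = {}
    for order in rankings.values():
        for position, project in enumerate(order, start=1):
            ranks.setdefault(project, []).append(position)
    summary = {}
    for project, rs in ranks.items():
        q1, _, q3 = quantiles(rs, n=4, method="inclusive")
        summary[project] = (median(rs), q3 - q1)
    ordered = sorted(summary, key=lambda p: summary[p][0])  # lower median = higher priority
    flagged = [p for p in ordered if summary[p][1] > spread_threshold]
    return ordered, flagged, summary


# Hypothetical panel of four reviewers ranking three projects.
panel = {
    "A": ["P1", "P2", "P3"],
    "B": ["P1", "P3", "P2"],
    "C": ["P2", "P1", "P3"],
    "D": ["P3", "P1", "P2"],
}
ordered, flagged, summary = delphi_round(panel)
print(ordered, flagged)
```

Here P1 has a median rank of 1.5 and little disagreement. P2 and P3 tie at 2.5 but show a wider spread, so both would be fed back to the panel for another round.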

After research project selection and prioritization, an overall analysis of the research portfolio should be made. The research project portfolio should contain both basic and applied research. The mix would depend on the following:

Technology of the organization

Size of the organization

Research facilities

It should be noted that the distinction between basic and applied research can become rather blurred. What is basic research to one organization can be applied research to another, and what is basic one year can be applied the next. Also, under the same general project title, different emphases during project execution can change the nature of the research. As will be discussed below, to maximize R&D organizational effectiveness, scientists and work groups should be involved in a mix of basic and applied research.