Chapter 3. Testing APIs

In Chapter 2 we explained APIs of different flavors and the value that they bring to your architecture. Now that we know about APIs and want to incorporate them into our ecosystem, it is crucial to understand how to build a quality product. When creating any sort of product, such as a mouthguard 1 or a building, the only way to verify that it works correctly is to test it. These products are stretched, hit, pushed, and pulled in all manner of ways, and simulations are run to see how the product behaves under different scenarios. This is testing, and testing gives us confidence in the product that is being produced.

The testing of APIs in software development is no different.

Creating a well-crafted and tested product gives us the confidence to ship an API and know that it will respond correctly for our consumer. As a consumer, there is nothing worse than using an API that does not work as specified in the documentation. As discussed in “Specifying REST APIs”, an API should not return anything that deviates from its documented results. It is also infuriating when an API introduces breaking changes or causes timeouts because results take too long to retrieve. These types of issues drive customers away and are entirely preventable by creating quality tests around the API service. Any API should be able to handle a variety of scenarios, including sending useful feedback to users who provide bad input, being secure, and returning results within a specified SLA (Service Level Agreement).

This chapter will introduce the different types of testing that should be applied to any API. We will highlight the positives and the negatives of each type of testing to understand where the most time should be invested. Throughout the chapter we will reference recommended resources for readers seeking more in-depth, specialist knowledge about a subject area.

Scenario for this chapter

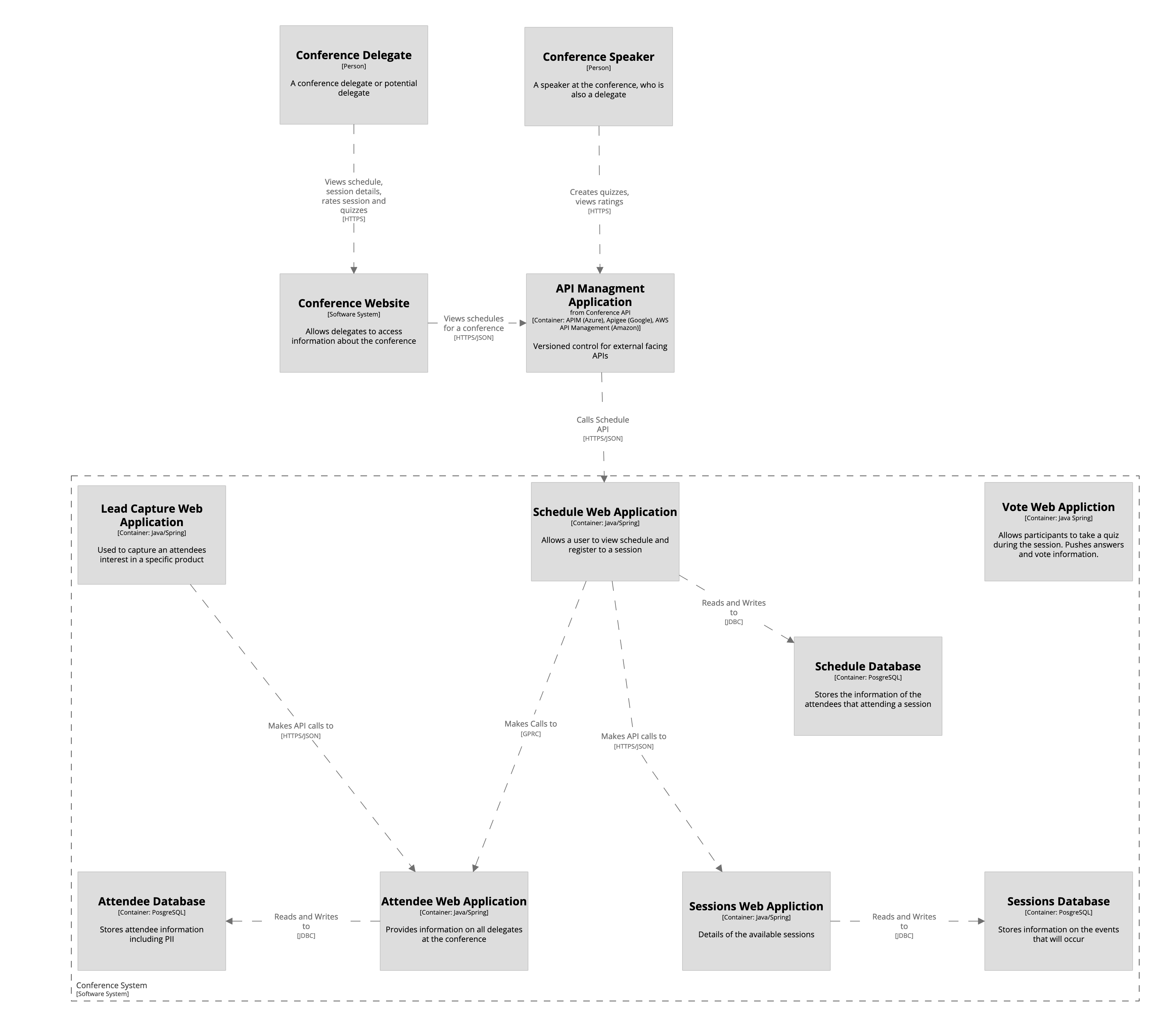

For this chapter let’s imagine a new greenfield project has been created. This is the Schedule service, part of our Conference API System. In each section the Schedule service will be referenced to demonstrate the testing practices that were applied during its development. While we are designing a microservice in this project, the information presented should not be thought of as applying only to a microservice design. It applies to the development of any module, whether a service like our Schedule service or a module of code within a monolithic application.

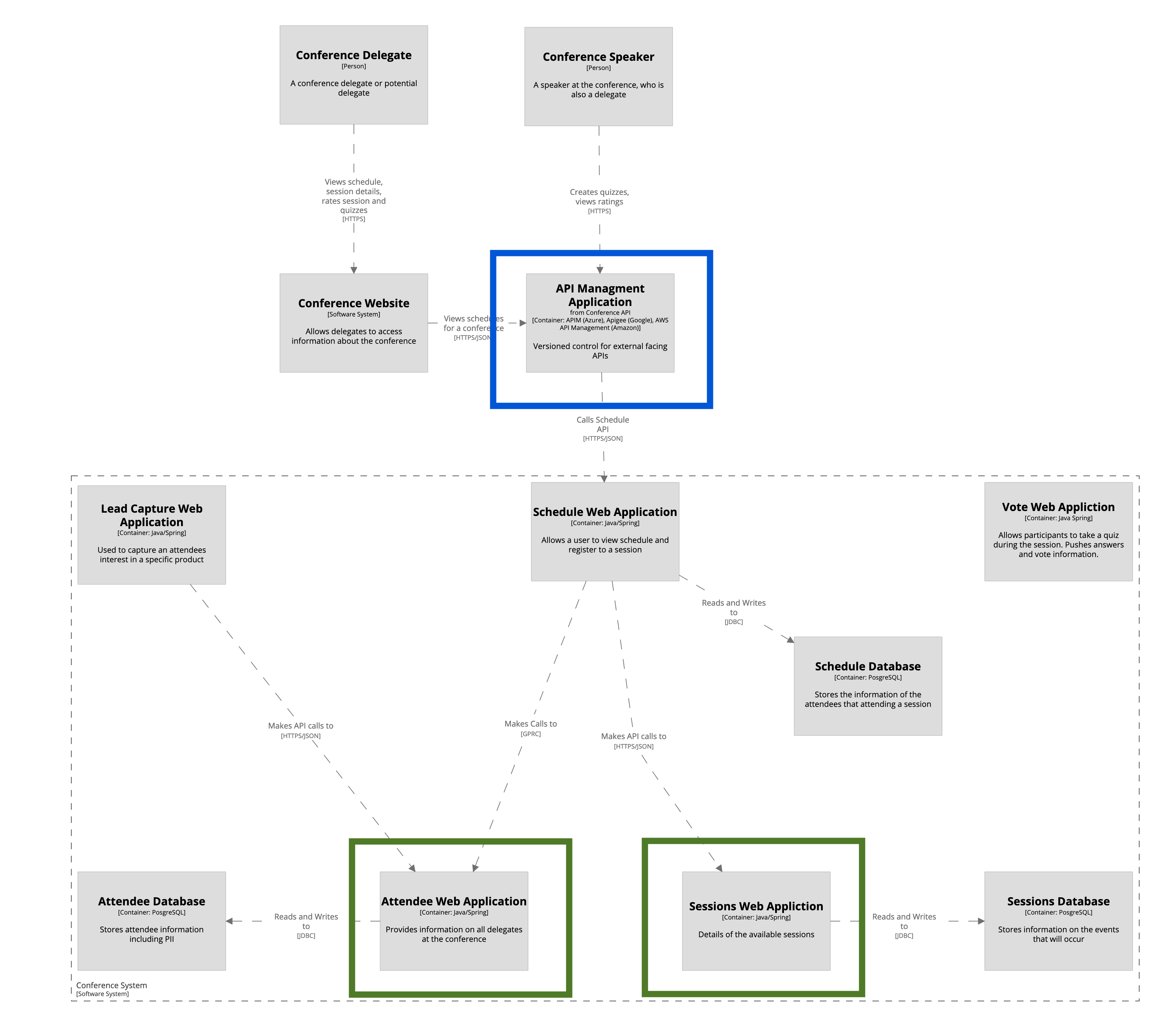

Figure 3-1 shows the Schedule service, and you can see that it connects to the Sessions service and the Attendee service.

Figure 3-1. Conference system

The Sessions application is being migrated from SOAP to a RESTful JSON service. This needs to be factored in as part of the build for the Schedule service, as the two are integrated. The Schedule service is going to be called externally, meaning that it will be internet facing. The Schedule service is called by the API Management Application, and those calls could be initiated by the Conference website or by third parties.

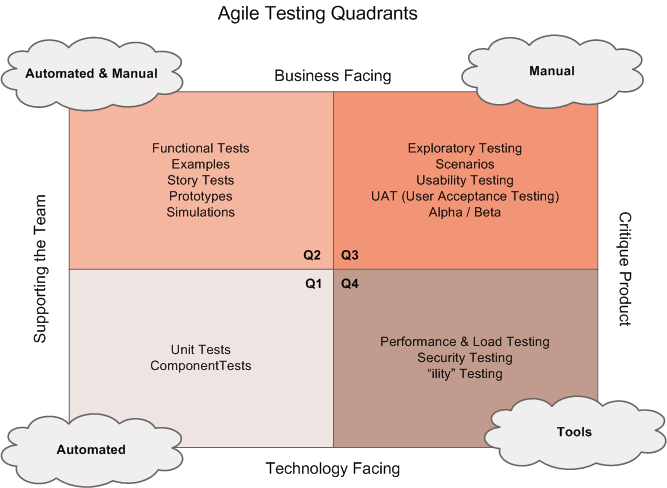

The Testing Quadrant

Before implementing tests and researching what testing tools and frameworks are available, it is important to understand the strategies that can be used when testing a product. The testing quadrant was first introduced by Brian Marick in his blog series on agile testing and was popularized in the book Agile Testing by Lisa Crispin and Janet Gregory (Addison-Wesley). The testing quadrant brings together Technology and the Business when testing the service that is being built. Technology cares that the service has been built correctly: that the pieces respond as expected, are fault tolerant, and continue to behave under abnormal circumstances. The Business cares that the right service is being developed. In the case of the Conference System, does the service show all the conference talks available? Will the conference system send a push notification before the next talk?

There is a distinction between the priorities from each perspective and the Testing Quadrant brings these together to create a nice collaborative approach to develop testing. The popular image of the test quadrant is:

The testing quadrant does not depict any order. This is a common source of confusion that Lisa describes in one of her blog posts. The four quadrants can be generally described as follows:

- Q1: Unit and component tests for technology. What has been created works as it should. Automated testing.

- Q2: Tests with the business to ensure what is being built is serving its purpose. A combination of manual and automated testing.

- Q3: Testing for the business. What should this system be and what should it do? Exploratory testing and checking that expectations are fulfilled. Manual testing.

- Q4: The system will work as expected from a technical standpoint, including aspects such as security, SLA integrity, and handling spike loads.

Again, the quadrants do not need to be fulfilled in any order. If our conference system was looking at selling tickets, which is a system that must handle large traffic spikes, it may be best to start at Q4.

The testing quadrant is a fantastic area to delve into and it is recommended that the reader look into this area more. When building APIs you will look at each of the quadrants, though in this chapter the primary focus will be on automating the testing.

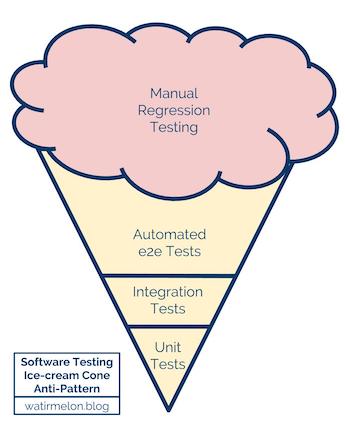

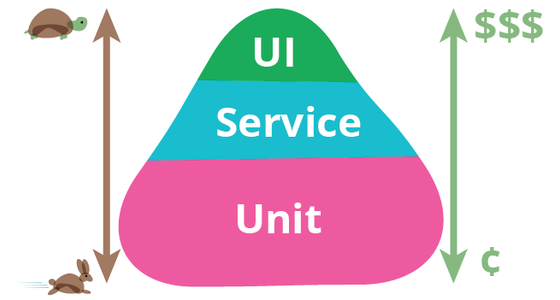

The Testing Pyramid

The testing pyramid was first introduced in the book Succeeding with Agile by Mike Cohn. This pyramid conveys how much time should be spent on each testing area, its difficulty, and the value it returns. If you search online for images of a testing pyramid, thousands will appear; they consist of different blocks, colours, and arrows, and some even have clouds at the top. However, the testing pyramid at its core has remained unchanged: unit tests as its foundation, service tests as the middle block, and UI tests at the peak.

Figure 3-3 shows a testing pyramid which comes from Martin Fowler’s online post https://martinfowler.com/bliki/TestPyramid.html

Figure 3-3. Martin Fowler Testing Pyramid

First, let’s examine the test pyramid, and then we can start to delve into what exactly a unit, service, and UI test is.

When we look at this image we see intuitive icons that allow us to understand where we should dedicate our time and energy in creating tests for a product, in our case for the Schedule service.

Unit tests are at the bottom of the test pyramid and most importantly they form the foundation of this pyramid. The diagram highlights that they have the lowest cost in terms of development time to create and maintain and also they should run quickly. Unit tests are testing small, isolated units of your code to ensure that your defined unit is running as expected.

Service tests are next in the pyramid, with a higher development and maintenance cost than unit tests and a slower run time. The reason for the increased maintenance cost is that service tests are more complex, as multiple units are tested together to verify that they integrate correctly. This complexity also means that they run more slowly than unit tests. The increased cost and decreased speed mean that the returned value decreases, which explains why there are fewer service tests than unit tests.

Finally we have UI tests, which are at the peak of the pyramid. These are the most complex tests, so they cost the most to create and maintain and also run the most slowly. The UI tests verify that an entire module is working together with all its integrations. This high cost and low speed is why UI tests are at the peak of the pyramid, where the benefit relative to cost diminishes.

This does not mean that one type of test has more value than another. When building a service it is important to have all of these types of test. We do not want a service that only has unit tests or just UI tests.

The testing pyramid is a guide to the proportions of each type of testing that should be done.

The reason that we need a testing pyramid is that, from the high-level standpoint of an architect or a project owner, UI tests are the most tangible. Having something tangible makes these tests feel the safest, and it is tempting to believe that giving someone a list of step-by-step tests to follow will catch all the bugs. This gives the false sense of security that these higher-level tests provide better testing than unit tests, which are not in an architect’s control. This fallacy gives rise to the ice cream cone, which is the opposite of a testing pyramid. For a robust argument on this, please read Steve Smith’s blog post “End-To-End Testing Considered Harmful”.

Here we have an image of the anti-pattern testing ice cream cone https://alisterbscott.com/kb/testing-pyramids/.

As can be seen, the emphasis here is on high-level manual and end-to-end testing over lower-level, more isolated unit tests. This is exactly what you do not want when looking at your own services.

Unit Testing

The foundation of the testing pyramid is unit testing, and we have outlined some characteristics that unit tests should have: they should be fast, numerous, and cheap to maintain. The first question that might be raised by an architect is why we are spending time looking at unit tests in a discussion on APIs. Unit tests verify logic in small, isolated parts. This gives the developer who is building the unit the knowledge that this piece has been built “correctly” and is doing what it is supposed to do. When building an API it must be solid for the customer, and it is essential that all these foundational units are working as expected.

At this point unit tests have been talked about, but only in the context of their purpose.

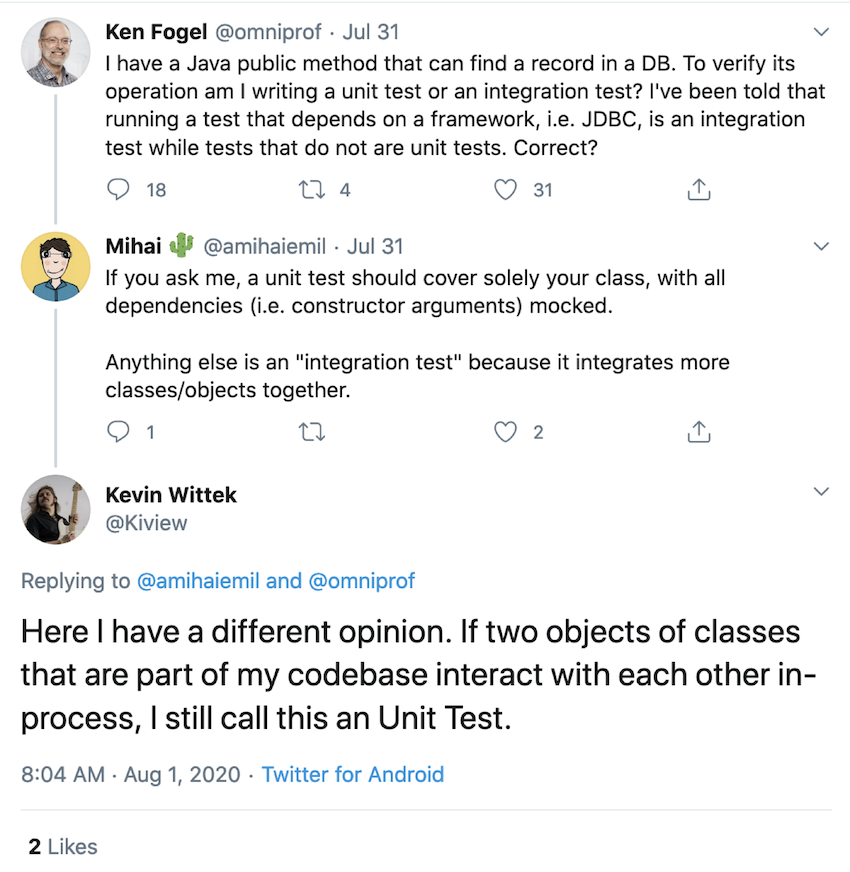

A Unit test should firstly be isolated and it should test a single unit. However, what is the unit?

The typical example of a Unit in Object Oriented (OO) languages is a Class though some make unit tests per public method of a Class. For a declarative language a unit may be defined as a Function or some Logic.

A unit need not be defined exactly as one of these; however, you will need to define a unit for your case. To give a concrete example of this blurred line, here we see Kevin Wittek defining a unit test to be anything that is interprocess.

Figure 3-5. Twitter discussion on Unit Tests

Let us look at the Schedule service that we are building. Here, since we are using Java, we shall define a unit as a Class. The code is functional in style and will be commented to explain what it does if you are not familiar with the language.

Before we write the implementation let us look at what we want this unit to do.

Test Driven Development

Here, we’ll test the class. We want to decorate the Session that we get from the Session Service with additional information on how it fits in the Schedule.

    public class SessionDecorator {}

The criteria that we wish to fulfill (i.e., what we’ll be testing) are the following:

- Given a ConferenceSession is to be decorated, When a request to the database is performed and a corresponding schedule exists, Then a ConferenceSchedule will be returned without errors or warnings.

- Given a ConferenceSession is to be decorated, When a request to the database is performed and no corresponding schedule exists, Then a NoSuchElementException will be thrown.

- Given a ConferenceSession with no id value, Then an IllegalArgumentException will be thrown.

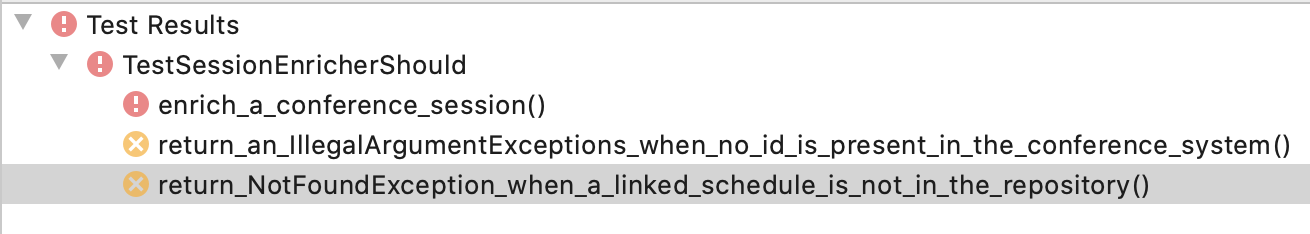

We can write the tests for this simple class before we write the implementation. This is known as TDD (Test Driven Development). The TDD process is defined as:

- Write Test: Write the test that you wish your unit to fulfill.

- Run all the Tests: Your code should compile and run; however, your tests should not pass.

- Write the code to make the test pass.

- Run all the Tests: Validates that your code is working and nothing is broken.

- Refactor code: Tidy up the code.

- Repeat.

The process is known as Red, Green, Refactor.

This simple iterative process was discovered/re-discovered by Kent Beck. To learn more about this area, refer to his book Test-Driven Development: By Example (Addison-Wesley).

In our example we are using JUnit, which is an xUnit framework for the Java language. The same book by Kent Beck is also a good resource for learning more about xUnit frameworks.

Here we see a sample test for a positive outcome. This example uses stubs for the test. A stub is an object used for a test that can return hard coded responses.

    @Test
    void decorate_a_conference_session() {
        // Given a ConferenceSession is to be decorated
        final ConferenceSession conferenceSession = ConferenceSession.builder()
                .id("session-123456")
                .name("Continuous Delivery in Java")
                .description("Continuous delivery adds enormous value to the "
                        + "business and the entire software delivery lifecycle, "
                        + "but adopting this practice means mastering new skills "
                        + "typically outside of a developer’s comfort zone")
                .speakers(Collections.singletonList("dan-12345"))
                .build();

        // When a request to the database is performed and a corresponding schedule exists
        final DatabaseSchedule foundSchedule = DatabaseSchedule.builder()
                .id("schedule-98765")
                .sessionId("session-123456")
                .startDateTime(LocalDateTime.now())
                .endDateTime(LocalDateTime.now().plusHours(4))
                .build();
        final StubScheduleRepository stubScheduleRepository = new StubScheduleRepository(foundSchedule);

        // Then a ConferenceSchedule will be returned without errors or warnings
        final SessionDecorator sessionDecorator = new SessionDecorator(stubScheduleRepository);
        final ConferenceSchedule returnedConferenceSchedule = sessionDecorator.decorateSession(conferenceSession);
        final ConferenceSchedule expectedResult = ConferenceSchedule.builder()
                .id(foundSchedule.getId())
                .name(conferenceSession.getName())
                .description(conferenceSession.getDescription())
                .startDateTime(foundSchedule.getStartDateTime())
                .endDateTime(foundSchedule.getEndDateTime())
                .build();
        Assertions.assertEquals(expectedResult, returnedConferenceSchedule);
    }
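The test above relies on a StubScheduleRepository whose source is not shown in this section. As a rough sketch only, a hand-written stub could look like the following. The ScheduleRepository interface and the cut-down DatabaseSchedule record are assumptions made for illustration; the real types in the book's repository are richer and use Spring Data.

```java
import java.util.List;

// Simplified stand-in for the persisted schedule (assumed shape, not the real class).
record DatabaseSchedule(String id, String sessionId) {}

// Assumed repository contract with the one method the tests call.
interface ScheduleRepository {
    List<DatabaseSchedule> findAllBySessionId(String sessionId);
}

// A stub returns a hard-coded response regardless of how it is called.
class StubScheduleRepository implements ScheduleRepository {
    private final DatabaseSchedule cannedSchedule;

    StubScheduleRepository(DatabaseSchedule cannedSchedule) {
        this.cannedSchedule = cannedSchedule;
    }

    @Override
    public List<DatabaseSchedule> findAllBySessionId(String sessionId) {
        // No lookup logic: the canned value is always returned.
        return List.of(cannedSchedule);
    }
}
```

Because the stub ignores its argument, the test stays focused on what the unit does with the returned data (state verification) rather than on how the repository was called.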

We next present a test that uses mocks. Mocks are pre-programmed objects that work on behavior verification.

    @Test
    void return_NotFoundException_when_a_linked_schedule_is_not_in_the_repository() {
        // Given a ConferenceSession is to be decorated
        final ConferenceSession conferenceSession = ConferenceSession.builder()
                .id("session-123456")
                .name("Continuous Delivery in Java")
                .description("Continuous delivery adds enormous value to the "
                        + "business and the entire software delivery lifecycle, "
                        + "but adopting this practice means mastering new skills "
                        + "typically outside of a developer’s comfort zone")
                .speakers(Collections.singletonList("dan-12345"))
                .build();

        // When a request to the database is performed and no corresponding schedule exists
        when(mockScheduleRepository.findAllBySessionId(anyString()))
                .thenReturn(Collections.emptyList());

        // Then a NoSuchElementException will be thrown
        final SessionDecorator sessionDecorator = new SessionDecorator(mockScheduleRepository);
        Assertions.assertThrows(NoSuchElementException.class,
                () -> sessionDecorator.decorateSession(conferenceSession));
    }

Please refer to the book’s GitHub repository for further tests.

Classicist and Mockist

In these tests we show the use of mocks, stubs, and dummies. This is a highly exaggerated case to show some of the test objects available. The tests shown have both a Classicist and a Mockist approach. 2 The Classicist approach uses real objects, like the stub example, while the Mockist approach uses mock objects instead of real objects. Is there a purist way to go? No, there is no single approach to take, and both have their pros and cons. To learn a lot more on this, please read the excellent article by Martin Fowler titled “Mocks Aren’t Stubs”.

Now let’s create the method, though without writing any implementation, and run the test suite again.
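To make behavior verification concrete, here is a minimal sketch of a hand-rolled mock that needs no mocking library. RecordingScheduleRepository and ScheduleLookup are hypothetical names invented for this illustration, and the repository is reduced to returning plain strings; a library such as the one used in the test above automates the same recording and checking.

```java
import java.util.ArrayList;
import java.util.List;

// Assumed, simplified repository contract for illustration.
interface ScheduleRepository {
    List<String> findAllBySessionId(String sessionId);
}

// Hand-rolled mock: it records every call so a test can verify behavior.
class RecordingScheduleRepository implements ScheduleRepository {
    final List<String> requestedSessionIds = new ArrayList<>();

    @Override
    public List<String> findAllBySessionId(String sessionId) {
        requestedSessionIds.add(sessionId);
        return List.of(); // pre-programmed answer: no schedule exists
    }
}

// Minimal unit under test that collaborates with the repository.
class ScheduleLookup {
    private final ScheduleRepository repository;

    ScheduleLookup(ScheduleRepository repository) {
        this.repository = repository;
    }

    boolean hasSchedule(String sessionId) {
        return !repository.findAllBySessionId(sessionId).isEmpty();
    }
}
```

A test using this mock would assert not only on the result of hasSchedule but also on requestedSessionIds, that is, on which interactions took place. That second assertion is what separates a mock from a stub.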

    public class SessionDecorator {
        public ConferenceSchedule decorateSession(ConferenceSession session) {
            throw new RuntimeException("Not Implemented");
        }
    }

From this we will see that no tests pass.

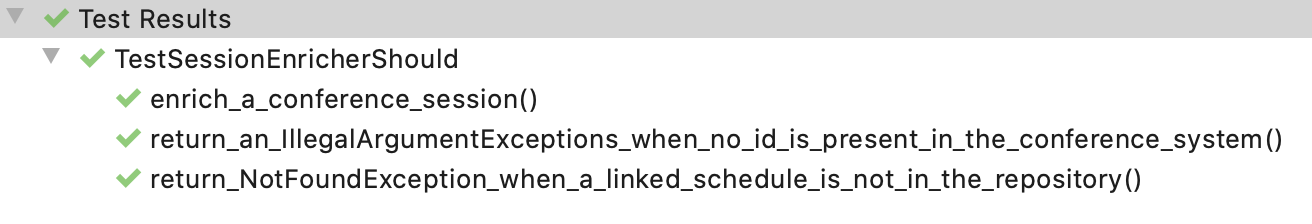

Let us create the implementation to make our tests pass.

    public ConferenceSchedule decorateSession(ConferenceSession session) {
        if (Objects.isNull(session.getId())) {
            throw new IllegalArgumentException();
        }
        final List<DatabaseSchedule> allBySessionId = scheduleRepository.findAllBySessionId(session.getId());
        if (allBySessionId.isEmpty()) {
            throw new NoSuchElementException();
        } else {
            final DatabaseSchedule databaseSchedule = allBySessionId.get(0);
            final ConferenceSchedule conferenceSchedule = ConferenceSchedule.builder()
                    .id(databaseSchedule.getId())
                    .name(session.getName())
                    .description(session.getDescription())
                    .startDateTime(databaseSchedule.getStartDateTime())
                    .endDateTime(databaseSchedule.getEndDateTime())
                    .build();
            return conferenceSchedule;
        }
    }

Excellent, the tests now pass.

The code has got a bit messy so let’s refactor it:

    public ConferenceSchedule decorateSession(ConferenceSession session) {
        Preconditions.checkArgument(Objects.nonNull(session.getId()));
        final List<DatabaseSchedule> allBySessionId = scheduleRepository.findAllBySessionId(session.getId());
        if (allBySessionId.isEmpty()) {
            throw new NoSuchElementException();
        }
        final DatabaseSchedule databaseSchedule = allBySessionId.get(0);
        return ConferenceSchedule.builder()
                .id(databaseSchedule.getId())
                .name(session.getName())
                .description(session.getDescription())
                .startDateTime(databaseSchedule.getStartDateTime())
                .endDateTime(databaseSchedule.getEndDateTime())
                .build();
    }

Finally, the tests should be run again to ensure that nothing has been broken by the refactor.

We are now confident in our implementation.

Note

When first performing the refactor the Author made a mistake which broke one of the tests. Even with such a small and simple piece of code mistakes can happen. Tests make refactoring safe and we have immediately gained value from having them.

This example should give you a flavour of the Test Driven Development process. When building applications the value of TDD has historically spoken for itself. Studies have shown that developers who use TDD spend less time debugging and, on greenfield projects, build something more solid.

For more information on Test Driven Development in industry and the value it gives, we recommend these two papers:

- An Initial Investigation of Test Driven Development in Industry

- Realizing quality improvement through test driven development

Unit testing is a valuable way to build a system and using TDD gives you a firm foundation for your testing pyramid. These unit tests give you isolated testing of your defined unit and coverage of your application. Being fast to run and cheap to maintain they give you a fast development cycle and reassurance your units are doing what you expect.

Tip

As an Architect it is important that foundations of the modules being built use these fundamental testing techniques. If TDD is not being applied then it is worth allowing developers to invest time learning to apply this process to develop better modules and in the long term allow teams to iterate faster with confidence.

Service Testing

Service tests are more wide ranging in what they consist of. In this section, we will be exploring what is a Service test, the value that they bring and pros and cons. Service tests consist of integration tests and testing your application across different boundaries.

There are two boundaries that exist. The first is between your module (a service, if you are building a microservice) and an external component. The other is between multiple units internal to your module. It is important to keep these distinctions and to keep these boundaries separated in your testing.

This is why we will have two definitions for our service tests.

- Component tests test our module and do not include external services (if they are needed, they are mocked or stubbed).

- Integration tests verify egress communication across a module boundary (e.g., DB service, external service, other module).

Component tests

Component testing to see how our module works with multiple units is really valuable. It allows validation that the modules work as expected and our service can return the correct response from incoming consumer requests.

As component tests do not include external dependencies, any that are required will be mocked or stubbed. This is useful if you want to force a module to fail and trigger issues such as client errors and internal service errors which can help verify that responses are generated correctly.
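As a minimal sketch of forcing a failure through a stubbed dependency, the following wires a small endpoint-like component to a supplier that stands in for an external dependency. ScheduleEndpoint, Response, and the status codes chosen are hypothetical and only illustrate the idea; the real Schedule service is built on Spring.

```java
import java.util.List;
import java.util.function.Supplier;

// Simplified response type: an HTTP-like status plus a body (assumed shape).
record Response(int status, String body) {}

// Component under test: maps the outcome of its dependency to a response.
class ScheduleEndpoint {
    private final Supplier<List<String>> sessionsSource;

    ScheduleEndpoint(Supplier<List<String>> sessionsSource) {
        this.sessionsSource = sessionsSource;
    }

    Response getSchedules() {
        try {
            List<String> sessions = sessionsSource.get();
            return new Response(200, String.join(",", sessions));
        } catch (RuntimeException e) {
            // The exception message is deliberately not echoed to the consumer.
            return new Response(500, "Internal error");
        }
    }
}
```

A component test can pass in a supplier that returns data to check the success path, and one that throws to confirm that the generated error response is well formed and reveals nothing about the underlying failure.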

Introducing REST-Assured

REST-Assured is a tool that will be useful for our component tests when sending incoming requests to our Schedule service. It is a DSL that uses Given-When-Then semantics and produces clear, readable tests.

REST-Assured allows us to construct requests and to closely control the payloads that are sent to the controller. It is possible to pass specific headers and query params to the URL. REST-Assured then allows us to inspect the result and interrogate exactly what the response is. This includes the HTTP Status code, headers and response body.

REST-Assured has a variety of language implementations so should not just be seen as a Java tool. There are other implementations that employ a similar style for testing.

Security component test example

In our example, the components that are being tested are the units that handle incoming requests to our Schedule service. When a client makes a request to the endpoint “/schedules”, it is important that it is routed to the correct controller, which performs the business logic for the request. There are further criteria to validate, such as ensuring that no internal information is leaked if a consumer probes our service; this is where component testing is useful. Another key area to validate is security. In our Schedule service there is a security requirement that all authorized clients can make a request to see the schedules; however, only specific authorized end users are able to create a new schedule. Again, a selection of units is grouped together to ensure that a request to our service allows an authorized user to see the schedules.

For our Schedule service a controller has been created that will take requests from an authenticated user:

    @GetMapping
    public Mono<ValueEntity<ConferenceSession>> getSchedules(@AuthenticationPrincipal JwtAuthenticationToken principal) {
        if (!READ_SCHEDULE_CLAIM.equals(principal.getToken().getClaimAsString("scope"))) {
            return Mono.error(new AccessDeniedException("Not required Authorization"));
        }
        return sessionService.getAllSessions()
                .flatMap(sessionDecorator::decorateSession)
                .collectList()
                .map(conferenceSchedules -> new ValueEntity(conferenceSchedules));
    }

Note

This security check uses Spring Security. The Spring Security library can express this check in a neater fashion; however, this more verbose method has been chosen for the purpose of illustration.

This controller was written using TDD; however, the authenticated principal passed in was created in the unit test. This is why it is important to verify that this controller is secured and that the Spring Security framework is working with our component. Component tests will verify that the controller fulfills the following criteria:

- Requests will not reach the controller if no authenticated principal is provided.

- Requests that have an incorrect scope will return a status of 403.

- Requests that are successful have a response of 200.

Here we show the second test case in the list above. Please refer to the GitHub repository for the other test cases.

    @Test
    void return_a_forbidden_response_with_missing_read_scope_supplied() {
        given()
                .webTestClient(webTestClient.mutateWith(mockJwt().jwt(jwt -> jwt.claim("scope", "schedules:WRITE"))))
        .when()
                .get("/schedules")
        .then()
                .status(HttpStatus.FORBIDDEN);
    }

What can be seen here is that the tests assert the expected behavior that was laid out. This essential testing gives us confidence that the endpoint is secure and will not leak data to unauthorized parties.
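The three criteria can also be read as a small decision table, sketched here in plain Java without Spring Security. Two details are assumptions rather than facts from the service: the value "schedules:READ" for the read scope (only the constant name READ_SCHEDULE_CLAIM appears in the controller) and the use of 401 for a request with no authenticated principal.

```java
import java.util.Optional;

// Hypothetical summary of the access rules the component tests verify.
class ScheduleAccessPolicy {
    // Assumed value; the controller only shows the constant's name.
    static final String READ_SCHEDULE_CLAIM = "schedules:READ";

    // An empty Optional models a request with no authenticated principal.
    static int statusFor(Optional<String> scope) {
        if (scope.isEmpty()) {
            return 401; // assumed status: rejected before reaching the controller
        }
        if (!READ_SCHEDULE_CLAIM.equals(scope.get())) {
            return 403; // authenticated, but the scope is not sufficient
        }
        return 200;
    }
}
```

Each branch corresponds to one component test, which keeps the test list and the security requirement easy to compare side by side.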

Component testing shows us the value of the units working together and can confirm whether the units work together as expected.

Integration tests

Integration tests in our definition are testing across boundaries between the module being developed and any external modules.

The Schedule service talks to three external services: the PostgreSQL database, a RESTful Sessions service, and a gRPC Attendee service.

All of the code that is used to communicate with these boundaries needs to be tested.

For the Sessions service, the SessionsService class is used to communicate between our service and the RESTful service.

For the Attendee service, the AttendeeService class is used to communicate between our service and the gRPC service.

For the PostgreSQL database, the ScheduleRepository class is used, which relies on Spring Data to create the connection between our application and our database.

The external boundaries have been identified, and the next step is to establish what should be tested and verified across each one. Each boundary will have its own set of tests, and each one should be tested in its own environment. It is not advisable to have one test file that is used for all boundaries. This section of the book will give in-depth coverage of testing the Sessions and Attendee services and will also mention the database connection used by the Schedule service.

External Service integration

A common requirement for a project is to integrate with another service or module.

The validation that should be performed is to confirm that when communicating across the boundary that the interaction is correct with this external service. For this external service integration the case that will be examined is the Schedule service communicating with the Sessions service.

How do we know that an external service integration is validated?

- Is the interaction being made correctly? For a RESTful service, for example, this may mean specifying the correct URL or sending the correct payload body.

- Is the data being sent in the correct format?

- Can the unit that is interacting with the external service handle the responses that will be returned?
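These checks can be sketched against a boundary unit whose transport is injected, so a test can observe the outgoing URL and control the reply. Everything here is a simplified assumption for illustration: the SessionsClient shown is not the book's SessionsService class, the "/sessions" path is hypothetical, and the body is a comma-separated string rather than JSON.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Function;

// Simplified HTTP reply (assumed shape).
record HttpReply(int status, String body) {}

// Unit that talks across the boundary; the transport maps a URL to a reply.
class SessionsClient {
    private final String baseUrl;
    private final Function<String, HttpReply> transport;

    SessionsClient(String baseUrl, Function<String, HttpReply> transport) {
        this.baseUrl = baseUrl;
        this.transport = transport;
    }

    List<String> sessionNames() {
        HttpReply reply = transport.apply(baseUrl + "/sessions");
        if (reply.status() == 404) {
            return List.of(); // nothing found is treated as an empty result
        }
        if (reply.status() != 200) {
            throw new IllegalStateException("Sessions service returned " + reply.status());
        }
        return reply.body().isBlank() ? List.of() : List.of(reply.body().split(","));
    }
}
```

A test supplies a fake transport, asserts on the URL it received, and then checks how the client copes with a 200, a 404, and a 500 reply.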

Ideally, we would have a list of interactions with an external service and be able to validate these without having to speak to the actual service itself. If the Schedule service speaks to the actual Sessions service then this would be part of End to End testing which is later on in “UI Testing”. So, how can we validate that the two services can talk to each other?

Options for testing with an external service

The first and most obvious solution is to use what was learned in “Introduction to REST” and to look at an API specification that a Sessions service produces. By looking at the API specification it is possible to see all the endpoints and the requests and responses that are available to us. This also means that if an API first approach is taken to a system (“Specifying REST APIs”) an OpenAPI spec will be available for use.

Introduction to stubbing with WireMock

Another option is to create a stub server of the Sessions service API that can be run locally as part of a test. It is possible to use a tool such as WireMock to facilitate this stubbing.

By using WireMock it is possible to construct a stub server directly in test code or to create mappings files, which are JSON files that describe an API interaction. WireMock can be run as a standalone process, so it does not limit you to a programming language or to running it only for testing. It is possible to run a stub server and use it in an environment as a replacement for a real service. An example case and mappings file is shown towards the end of “Why use API Contracts”.

The process of using WireMock is to stand up the stub server for your tests to represent an external service. The WireMock server is then fed the interactions that it should respond to. So when representing the Sessions service, a mapping will be fed to it that effectively states: when a request is made to this endpoint (e.g., “/sessions”), respond with this body. If no matches are made, an error is returned.
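The matching idea can be sketched in a few lines of plain Java. This is not WireMock's API; StubServer, register, and handle are hypothetical names, and the 404 reply for an unmatched request is an assumption standing in for "an error is returned".

```java
import java.util.HashMap;
import java.util.Map;

// Simplified canned response (assumed shape).
record StubResponse(int status, String body) {}

// In-memory imitation of a stub server's mapping table.
class StubServer {
    private final Map<String, StubResponse> mappings = new HashMap<>();

    // "When METHOD PATH is requested, respond with this."
    void register(String method, String path, StubResponse response) {
        mappings.put(method + " " + path, response);
    }

    StubResponse handle(String method, String path) {
        // Unmatched requests get an error instead of a made-up answer.
        return mappings.getOrDefault(method + " " + path,
                new StubResponse(404, "No matching stub mapping"));
    }
}
```

Returning an error for anything that was not registered is a useful property: a test fails loudly when the code under test makes a call nobody anticipated.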

However, there is an issue here that immediately causes concern: actually writing the stub code. As the developers wish to have a stubbed external service, they need to create it by hand based on their understanding of how the Sessions service should work. What if an interaction is incorrectly defined, for example because the expected response is written incorrectly?

There is a solution to this if the API service is already available. WireMock can be used to capture real requests and responses by proxying the requests through it. More information on how to do this is available in the WireMock documentation. These captured requests and responses are saved as mapping files and can then be used with WireMock for testing, or as a stub service for cases such as a dev test environment where actual requests to an API may cost actual money.
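The record-and-replay idea can be illustrated with a small sketch. It is not WireMock's recording feature; RecordingProxy and toStub are hypothetical names, and requests and responses are reduced to plain strings.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Sits between the caller and the real service, saving each exchange.
class RecordingProxy implements Function<String, String> {
    private final Function<String, String> realService;
    private final Map<String, String> recorded = new LinkedHashMap<>();

    RecordingProxy(Function<String, String> realService) {
        this.realService = realService;
    }

    @Override
    public String apply(String request) {
        String response = realService.apply(request); // forward to the real service
        recorded.put(request, response);              // capture the mapping
        return response;
    }

    // Replays captured exchanges without contacting the real service.
    Function<String, String> toStub() {
        Map<String, String> snapshot = Map.copyOf(recorded);
        return request -> snapshot.getOrDefault(request, "404 no recorded mapping");
    }
}
```

Once a few real exchanges have passed through the proxy, the stub produced by toStub answers those same requests on its own, which is the property that makes captured mappings attractive for environments where calling the real API is slow or costly.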

By capturing real API requests, the concerns of writing mappings by hand are averted, as the mappings are generated instead. This does not solve the issue when a new API interaction is being defined or an existing interaction is extended; in those cases the solution is again to write the new interaction by hand or to extend the stub server.

Stubbing is a common practice and something that the authors have used in the past, as it works and provides value. As long as the team is aware of the limitations, these are fair techniques to use, and they provide invaluable testing across the external boundary.

There is another technique to use for testing with external services, though first let’s walk through a typical scenario for a new project that many readers will be familiar with or looking to undertake: A new project is created and two teams want or need to integrate their products together. They decide on the core interactions that they need and write a document with a list of endpoints for the new RESTful service. Payloads are defined of what is sent and what will be received. After time passes the two teams deploy the first iteration and they find that some or all of the interactions are not working.

Classic examples of why interactions are not working include:

- The URLs do not match the document, as someone has decided on a new and “better” convention.

- The client of the service has mistyped a property name.

- The response is expected as an object but gets returned as an array of objects, as this will make it “future proof”.

An API-first approach has been tried in this example, and all parties have tried to do the right thing and make the best product. However, there is nothing to hold the services to account on the agreement made in the document. The producer of the API needs to uphold what it will respond with, and the consumer needs to validate that it is calling the API correctly.

In a real-life situation, what is being described in this document is at best a handshake agreement of what each party will do. What is desired is something that looks more like a contract!

The good news is that API contracts do exist.

API Contracts

As a consumer of an API it has always been a good idea to test with a test stub service (like the WireMock services just described), and as a producer of an API there is huge value in being able to know how your service is going to be called.

API contracts are a written definition of an interaction between two entities, a Consumer and a Producer. A Producer responds to API requests; it is producing data (e.g., a RESTful web service). A Consumer requests data from an API (e.g., a web client or a terminal shell).

Producers are also known as Providers. In this book the word Producer will be used, though the reader should be aware that they are interchangeable and provider is the term of choice in some contract frameworks.

When looking at the C4 model of the interactions of our Schedule service, it is valuable to see that the UI is calling the service through the API Management solution and that the service is requesting data from both the Attendee service and the Sessions service.

Figure 3-6. Producer and Consumer elements of schedule service highlighted

Therefore we can say that the Schedule service is a Producer for the API Management Application and it is a Consumer of the Sessions service and Attendee service.

Why use API Contracts

The beauty of defining an interaction with a contract is that it is possible to generate tests and stubs from this. Automated tests and stub services are what give us the ability to perform local testing without reaching out to the actual service, though still allow us to verify an interaction.
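To make the idea concrete, here is a minimal sketch in plain Java of how a single definition can serve both sides. It is not how any contract framework is implemented; the class `ContractSketch` and its methods `toStub` and `isFulfilledBy` are hypothetical names invented for illustration, and the contract is reduced to an HTTP method, a path and an expected status.

```java
import java.util.function.BiFunction;

/** Hypothetical, heavily simplified contract: one request mapped to one response status. */
final class ContractSketch {
    private final String method;
    private final String path;
    private final int status;

    ContractSketch(String method, String path, int status) {
        this.method = method;
        this.path = path;
        this.status = status;
    }

    /** Consumer side: a stub that answers with the agreed status, or 404 for any other request. */
    BiFunction<String, String, Integer> toStub() {
        return (m, p) -> method.equals(m) && path.equals(p) ? status : 404;
    }

    /** Producer side: send the agreed request to the producer and check the agreed status. */
    boolean isFulfilledBy(BiFunction<String, String, Integer> producer) {
        return producer.apply(method, path) == status;
    }

    public static void main(String[] args) {
        ContractSketch modifySession = new ContractSketch("PUT", "/sessions/session-id-1", 204);

        // The consumer tests against the stub instead of the real service.
        System.out.println(modifySession.toStub().apply("PUT", "/sessions/session-id-1"));

        // The producer build fails if the real implementation drifts from the contract.
        System.out.println(modifySession.isFulfilledBy((m, p) -> 200));
    }
}
```

The point of the sketch is that the stub and the test are derived from the same definition, so neither side can drift without the other noticing.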

To understand how tests can be auto generated and stub servers can be created, it is important to see an API contract. As the Schedule service is being written in Java, the contract framework used in our example is Spring Cloud Contracts. The section “API Contract Frameworks” discusses other API contract frameworks.

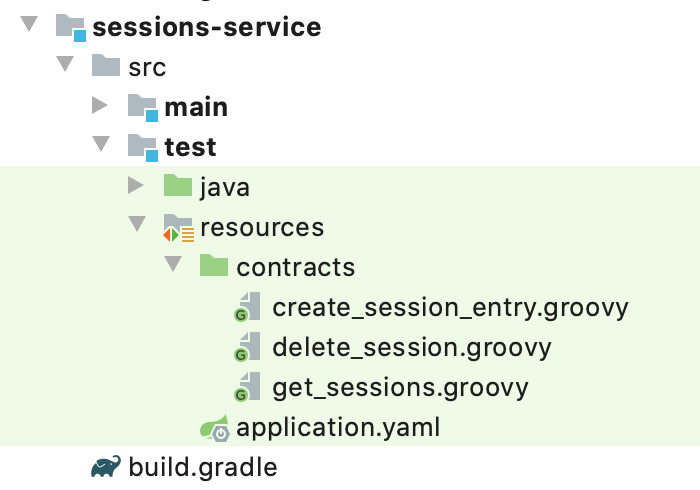

The Schedule service is communicating with the Sessions Service and it was decided as part of the build that the Sessions service would use API Contracts. We can see from the image the three contracts. These contracts are the Get sessions as a GET, a Create Session as a POST and a Remove Session as a DELETE.

Figure 3-7. Contracts of sessions service

The piece of functionality that the sessions service is lacking is the ability to modify a session. This is a candidate for submitting a contract for this new interaction with the Sessions service.

Here is the contract for the new interaction. It shows how the Producer shall respond and how the Consumer should interact with the new PUT endpoint.

import org.springframework.cloud.contract.spec.Contract

Contract.make {
    // (1) Request entity
    request {
        // (2) Description of what the contract does
        description("""Modify a Schedule Resource""")
        // (3) The HTTP Method that the consumer will call
        method PUT()
        // (4) The URL endpoint that will be hit
        urlPath '/sessions/session-id-1'
        // (5) The Headers that should be passed by the caller
        headers {
            // (6) Header of ContentType with value 'application/json'
            contentType('application/json')
        }
        // (7) The Body of the request
        body(
            // (8) A name property
            name: "An Introduction to Contracts",
            // (9) A description property
            description: "A beginners guide to Contracts",
            // (10) A speaker id array property
            speakerIds: [
                // (11) An array of speaker ids
                "bryant-1", "auburn-1"
            ]
        )
    }
    // (12) Response entity
    response {
        // (13) The status from producer should be NO CONTENT, 204
        status NO_CONTENT()
    }
}

This should hopefully look familiar as it has the look and feel of a generic Request and Response that you see in HTTP interactions. This is a real benefit as it makes writing and reading contracts simple for people not familiar with Groovy.

Let us go through this contract step by step and explain what is happening.

The first part, the most outer definition, is merely stating that we are defining a contract:

Contract.make {
}

The two entities are the request and the response so let us first check the request we have defined in the contract.

In this request it is shown what the Consumer should send to the Producer.

The response is next and shows what the Producer should respond with.

Looking at the contract as a whole, what is being said is: when a PUT request is sent to /sessions/session-id-1 with a Content-Type header equal to application/json, and the body of the request has a name property that matches “An Introduction to Contracts” and a description that equals “A beginners guide to Contracts”, a response with the status 204 is returned.

We now have a full definition of a contract and, to reiterate, this is a definition of an interaction and NOT behavior. The contract states that the Producer has to return a 204 status code (when successful); it does not care how the producer does this in the background. If this were a legal contract and you were told to pay a fine, the court would not care how you procure the money (hopefully legally) so long as you pay the fine issued. This is a defined interaction: the court will get the money, and for an API the consumer will get the defined response.

One thing you may have noticed is hardcoded values, such as the id of session-id-1, enforced by the contract. This works fine, as from the definition we can easily tell what the contract is intended to do. However, we can make the contract more generic by using regular expressions for defined properties. Here we see the same contract in a more generic manner.

    // (12) Response entity
    response {
        // (13) The status from producer should be NO CONTENT, 204
        status NO_CONTENT()
    }

As can be seen in the annotated code the values can be generated or inferred by the testing framework.

For a Consumer: when a PUT request is sent to /sessions/{ID} with a Content-Type header equal to application/json, and the body of the request has a name property that is a non-blank string and a description that is a non-blank string, a response with the status 204 is returned.

For a Producer: when a PUT request is sent to /sessions/session-id-1 with a Content-Type header equal to application/json, and the body of the request has a name property that is a non-blank string and a description that is a non-blank string, a response with the status 204 is returned.

It is not suggested that all contracts be as generic as possible. Hardcoded values are not bad; however, generic values can be useful for the generated contract tests.

As this contract is something that the producer (Sessions service) should fulfill, it is possible to generate tests from it. This is logical, as the interaction is entirely defined. The generated test from this contract is the following:
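The two readings above (a pattern for the Consumer, a concrete value for the Producer) can be pictured with a small Java sketch. This is an illustration only and not the framework's implementation: the class `DynamicValueSketch` and its method names are hypothetical, and the path pattern is an assumption chosen to match the example.

```java
import java.util.regex.Pattern;

/** Hypothetical model of a contract value with a consumer-side pattern and a producer-side value. */
final class DynamicValueSketch {
    private final Pattern consumerPattern;
    private final String producerValue;

    DynamicValueSketch(String consumerRegex, String producerValue) {
        this.consumerPattern = Pattern.compile(consumerRegex);
        this.producerValue = producerValue;
        // The concrete value used in the producer test must itself be acceptable to the stub.
        if (!acceptsFromConsumer(producerValue)) {
            throw new IllegalArgumentException("producer value must satisfy the consumer pattern");
        }
    }

    /** Stub side: would a request carrying this value be accepted? */
    boolean acceptsFromConsumer(String actual) {
        return consumerPattern.matcher(actual).matches();
    }

    /** Test side: the concrete value sent to the real producer. */
    String valueForProducerTest() {
        return producerValue;
    }

    public static void main(String[] args) {
        DynamicValueSketch path =
                new DynamicValueSketch("/sessions/[a-zA-Z0-9-]+", "/sessions/session-id-1");
        System.out.println(path.acceptsFromConsumer("/sessions/any-other-id"));
        System.out.println(path.valueForProducerTest());
    }
}
```

Keeping both sides in one definition is what lets a generic stub and a deterministic producer test come from the same contract.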

@SuppressWarnings("rawtypes")
public class ContractVerifierTest extends BaseContract {

    @Test
    public void validate_modify_session() throws Exception {
        // given:
        WebTestClientRequestSpecification request = given()
                .header("Content-Type", "application/json")
                .body("{\"name\":\"KJSKASVJSGXRLXYKVSXY\","
                        + "\"description\":\"LXYIOGALHTTSXMUCRIPY\","
                        + "\"speakerIds\":[\"FQJEPDMOAPIMDUPGFCKZ\"]}");

        // when:
        WebTestClientResponse response = given().spec(request)
                .put("/sessions/session-id-1");

        // then:
        assertThat(response.statusCode()).isEqualTo(204);
    }
}

This generated test is testing the interaction against our producer. A real request is sent to our service against the defined endpoint and with random non blank strings for the name and description and speaker ids, just as defined in the contract. This should emphasize why it may not always be desired to have a more generic contract as the values are used for the generated test.

Eagle-eyed readers may have noticed the extends BaseContract in the test. This is required in the setup of Spring Cloud Contracts to define how to configure the producer so that it can receive the request sent by the generated test. This makes sense, as a contract can create a test but has no knowledge of how to start the service.

@SpringBootTest
public abstract class BaseContract {

    @Autowired
    private ApplicationContext context;

    @BeforeEach
    void setUp() {
        RestAssuredWebTestClient.applicationContextSetup(context);
    }
}

This base contract is an implementation detail of this specific framework and is shown only to complete the story of the generated test. The finer points of this framework will not be discussed any further.

These generated tests must be fulfilled by the producer as part of the build to ensure that no contracts fail. This is key when developing an API, as it ensures that defined interactions continue to be fulfilled and that values and properties do not change by accident.

The final part of this story is the generated stub service that can be used by the consumer for testing.

So what is generated?

{
  "id": "bfd7dd8b-1551-4fc8-b530-231613a201a4",
  "request": {
    "urlPathPattern": "/sessions/[a-zA-Z0-9-]+",
    "method": "PUT",
    "headers": {
      "Content-Type": {
        "matches": "application/json.*"
      }
    },
    "bodyPatterns": [
      { "matchesJsonPath": "$[?(@.['name'] =~ /^\\s*\\S[\\S\\s]*/)]" },
      { "matchesJsonPath": "$[?(@.['description'] =~ /^\\s*\\S[\\S\\s]*/)]" },
      { "matchesJsonPath": "$.['speakerIds'][?(@ =~ /^\\s*\\S[\\S\\s]*/)]" }
    ]
  },
  "response": {
    "status": 204,
    "transformers": ["response-template"]
  },
  "uuid": "bfd7dd8b-1551-4fc8-b530-231613a201a4"
}

A mapping file is created that can be used by WireMock. These stub servers are extremely valuable to stand up as individual processes, not just for testing but also to run in place of the actual service, so that there is a working system while the actual service is still being built.

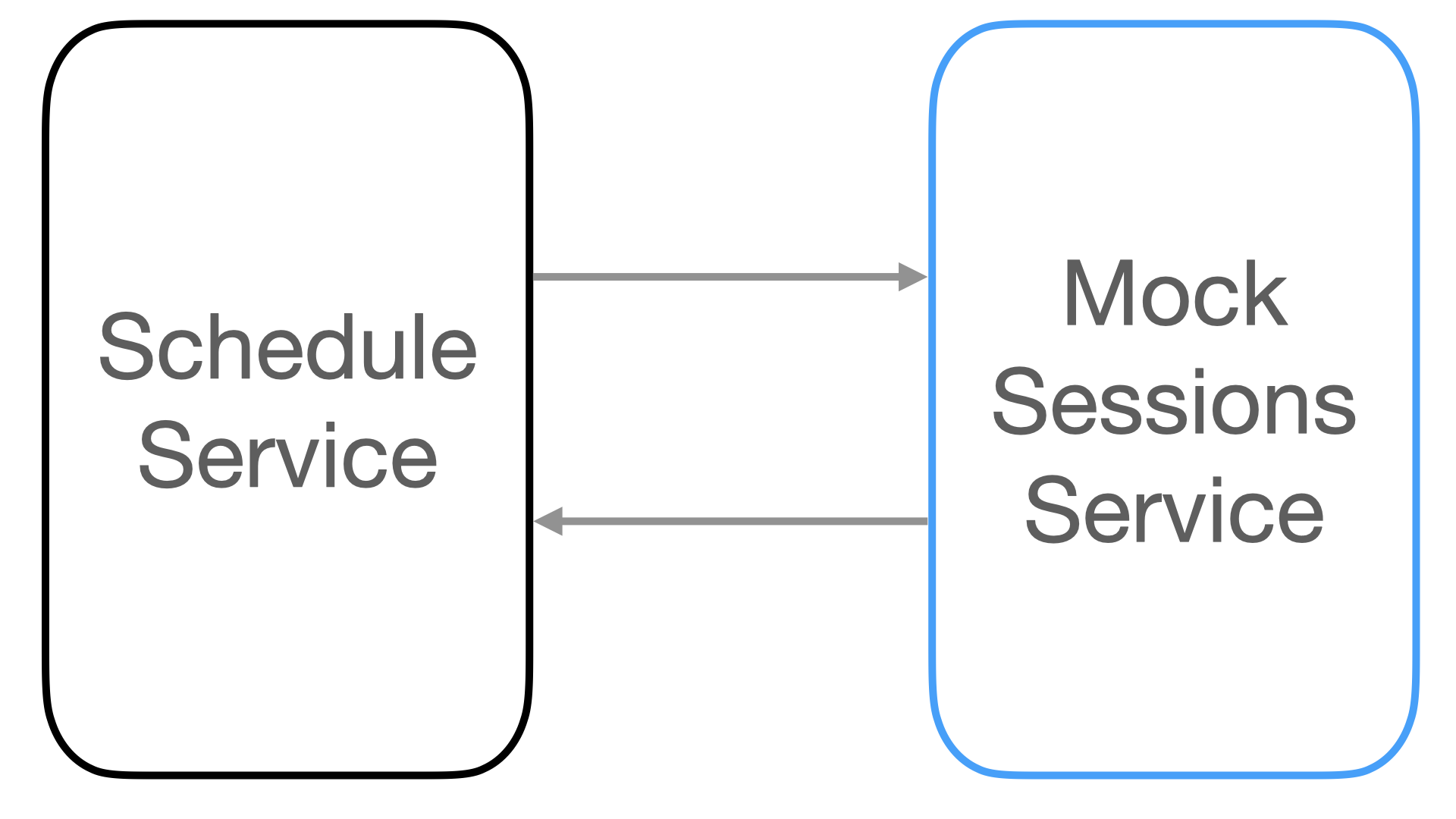

Figure 3-8. Schedule and mock Sessions service
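The matching rules in the generated mapping can be approximated in a few lines of plain Java. The sketch below is a simplification for illustration and is not WireMock code: `StubMatcherSketch` and `respond` are hypothetical names, the request body is assumed to be already parsed into a `Map`, and the 404 for unmatched requests is a choice made for this sketch.

```java
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

/** Hypothetical approximation of the generated stub's matching rules. */
final class StubMatcherSketch {
    private static final Pattern PATH = Pattern.compile("/sessions/[a-zA-Z0-9-]+");
    private static final Pattern CONTENT_TYPE = Pattern.compile("application/json.*");
    private static final Pattern NON_BLANK = Pattern.compile("^\\s*\\S[\\S\\s]*");

    /** Returns 204 when every rule matches, and 404 otherwise. */
    static int respond(String method, String path, String contentType, Map<String, Object> body) {
        boolean matches = "PUT".equals(method)
                && PATH.matcher(path).matches()
                && contentType != null
                && CONTENT_TYPE.matcher(contentType).matches()
                && isNonBlank(body.get("name"))
                && isNonBlank(body.get("description"))
                && hasNonBlankElement(body.get("speakerIds"));
        return matches ? 204 : 404;
    }

    private static boolean isNonBlank(Object value) {
        return value instanceof String && NON_BLANK.matcher((String) value).matches();
    }

    /** speakerIds must be a list containing at least one non-blank string. */
    private static boolean hasNonBlankElement(Object value) {
        if (!(value instanceof List)) {
            return false;
        }
        for (Object element : (List<?>) value) {
            if (isNonBlank(element)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        Map<String, Object> body = Map.of(
                "name", "An Introduction to Contracts",
                "description", "A beginners guide to Contracts",
                "speakerIds", List.of("bryant-1", "auburn-1"));
        System.out.println(respond("PUT", "/sessions/any-id", "application/json", body));
    }
}
```

Because the stub only checks shapes and patterns, a consumer can send any session id and any non-blank values, which is exactly what the more generic contract allows.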

Tip

The Authors have used these generated stub servers to run demos for stakeholders while the producer had not been deployed.

When used for testing, as it is here, we can see what happens when a bad request is made. In this example a request is made against the stub service with the speaker id property being a single value and not an array. The failing test and the mismatch report returned by the stub server are shown next.

@ExtendWith(SpringExtension.class)
@AutoConfigureStubRunner(
        repositoryRoot = "stubs://file://location/of/sessions-service/build/stubs",
        ids = "com.masteringapi:sessions-service:+:stubs",
        stubsMode = StubRunnerProperties.StubsMode.REMOTE)
class TestSessionsServiceShould {

    @StubRunnerPort("sessions-service")
    int producerPort;

    @Test
    void connect_to_put() {
        final WebTestClient webTestClient = WebTestClient.bindToServer()
                .baseUrl("http://localhost:" + producerPort)
                .build();

        webTestClient.put()
                .uri("/sessions/session-id-1")
                .contentType(MediaType.APPLICATION_JSON)
                // speakerIds is deliberately sent as a single value rather than an array
                .bodyValue("{"
                        + "\"name\": \"A name\","
                        + "\"description\": \"A description\","
                        + "\"speakerIds\": \"singular-speaker-id\","
                        + "}")
                .exchange()
                .expectStatus().isNoContent();
    }
}

--------------------------------------------------------------------------------
| Closest stub                          | Request                              |
--------------------------------------------------------------------------------
                                        |
PUT                                     | PUT
/sessions/[a-zA-Z0-9-]+                 | /sessions/session-id-1
                                        |
Content-Type [matches] :                | Content-Type:
application/json.*                      | application/json
                                        |
$[?(@.['name']                          |
=~ /^\s*\S[\S\s]*/)]                    | {"name": "A name","description": "A              <<<<< Body does not match
                                        | description","speakerIds": "singular-speaker-id",}
$[?(@.['description']                   |
=~ /^\s*\S[\S\s]*/)]                    | {"name": "A name","description": "A              <<<<< Body does not match
                                        | description","speakerIds": "singular-speaker-id",}
$.['speakerIds'][?(@                    |
=~ /^\s*\S[\S\s]*/)]                    | {"name": "A name","description": "A              <<<<< Body does not match
                                        | description","speakerIds": "singular-speaker-id",}
                                        |
--------------------------------------------------------------------------------

API Contracts are valuable for both Consumers and Producers. By defining an interaction both usable tests and stub servers can be generated to validate integrations.

Using contracts promotes Acceptance Test Driven Development (ATDD). ATDD is where different teams can collaborate and come to an agreement on a set of criteria that will solve a problem. Here the problem being solved is the API interaction.

Note

It is tempting to use contracts for scenario tests, e.g., first perform a create session, then use the get session to check that the behavior is correct in the auto generated tests. This should be avoided. Frameworks do support this, though they also discourage it. A producer should verify behavior in component and unit tests and not in API contracts; contracts are not designed for behavior testing but for testing API interactions.

API Contracts Development Methodologies

Now that we understand how contracts work and the value that they provide to the consumer and the producer, we need to know how to use them as part of the development process.

There are two main Contract Development Methodologies:

- Producer Contract testing
- Consumer Driven Contracts

Each methodology has a specific purpose, but they can be used in conjunction with each other. A similar example is that of the Classicist and Mockist approaches mentioned in unit testing; both methodologies can be used together.

Producer Contracts

Producer contract testing is when a producer defines its own contracts. If you are starting out on an API program and want to introduce contracts to a project, then this is a great place to start. New contracts can be created for the producer to ensure that the service fulfils the interaction criteria and will continue to fulfil them. If consumers are complaining that a producer’s API is breaking on new releases, then introducing contracts can help with this issue.

The other common reason for using Producer contract testing is when there is a large audience for your API. This means an API that is being used outside your immediate organization and by unknown users, i.e., external third parties. An API with a wide public audience needs to maintain its integrity, and although it is updated and improved, immediate and individual feedback will not be applied. A concrete example in the real world is the Microsoft Graph API, which can be used to look at the users registered in an Active Directory tenant. A consumer may find it preferable to have an additional field for preferred name on the Users endpoint of the API. This can be sent to Microsoft as a suggestion; however, it is not likely to be changed, and even if the suggestion were seen as a good idea it would be unlikely to happen quickly. It is something that would have to be weighed up and considered. Is this something that will be useful for others? Is it a backwards compatible change? How does this change the interactions across the rest of the service?

Here we take the same approach with our Schedule API. We do not want consumers suggesting changes to the API that only benefit them. It is external facing and needs to be very solid; most importantly, the structure of this API cannot suddenly change. Breaking changes involve versioning, which can be read about in “API Versioning”.
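One of the questions above, whether a proposed change is backwards compatible, can be given a rough first answer mechanically. The following Java sketch uses a deliberately narrow assumption: a change is treated as compatible when every field consumers can read today is still present afterwards. Real compatibility also depends on types, semantics and request handling, which this ignores. `CompatibilitySketch` and the field names are hypothetical and are not taken from any real API.

```java
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical, field-level compatibility check between two versions of a response. */
final class CompatibilitySketch {

    /** Fields that exist in the current response but are missing from the proposed one. */
    static Set<String> removedFields(Set<String> current, Set<String> proposed) {
        Set<String> removed = new TreeSet<>(current);
        removed.removeAll(proposed);
        return removed;
    }

    /** Adding fields is treated as safe; removing or renaming an existing field is not. */
    static boolean isBackwardsCompatible(Set<String> current, Set<String> proposed) {
        return removedFields(current, proposed).isEmpty();
    }

    public static void main(String[] args) {
        Set<String> current = Set.of("id", "name");

        // An additional field leaves existing consumers untouched.
        System.out.println(isBackwardsCompatible(current, Set.of("id", "name", "preferredName")));

        // Renaming "name" removes a field that existing consumers depend on.
        System.out.println(removedFields(current, Set.of("id", "displayName")));
    }
}
```

A check like this does not replace contract tests, but it shows why an added field is usually an easier conversation than a renamed one.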

Consumer Driven Contracts

Consumer Driven Contracts (CDC) is when a consumer drives out the functionality that they wish to see. Consumers submit contracts to the producer for new API functionality and the producer will choose to accept or reject the contract.

CDC is very much an interactive and social process. The owners of the applications that are consumers and producers should be within reach of each other. Recall the contract we defined earlier in “API Contracts”, where the Schedule service is consuming from the Sessions service. A new API interaction is desired and a contract is then defined. This contract is submitted as a pull request into the Sessions service. The maintainers of the Sessions service can then look at the PR and start reviewing this new interaction. At this point a discussion takes place about the new functionality to ensure that it is something that the Sessions service should and will fulfill and that the contract is correct. While this contract is for a PUT request, a discussion can take place about whether it should in fact be a PATCH request. This is where a good part of the value of contracts comes from: the discussion between both parties about what the problem is, and the use of a contract to assert that this is what the two parties accept and agree to as a solution. Once the contract is agreed, the producer (Sessions service) accepts the contract as part of the project and can start fulfilling it.

As we’ve seen, contracts are required to pass as part of the build. Therefore the next release of the Sessions service must fulfill this contract. Stubs can be generated from the contracts, which the consumer can use for local testing. Both services can be developed independently of each other just by using contracts.

Contracts methodology overview

These methodologies should give an overview of how to use contracts as part of the development process. They should not be taken as gospel, as variations do exist on the exact steps. In one variation, the consumer writing the contract also creates a basic implementation of the producer code to fulfil the contract. In another, the consumer uses TDD to drive out the functionality they require and then creates the contract before submitting the pull request. The exact process that is put in place may vary by team; once the core concepts and patterns of CDC are understood, the exact process is an implementation detail.

If starting out on a journey to add contracts it should be noted that there is a cost to it. This cost is the setup time to incorporate contracts into a project and also the cost of writing the contracts. It is worth looking at tooling that can create contracts for you based on an OpenAPI Specification. At the time of writing there are a few projects that are available, though none are actively maintained so it is difficult to recommend any.

API Contracts Storage and Publishing

Having seen how contracts work and the methodologies for incorporating them into the development process, the next question becomes where contracts are stored and how they should be published.

There are a few options for storing and publishing contracts and these again depend on the setup that is available to you and your organization.

Most commonly, contracts are stored alongside the Producer code in version control (e.g., git). They can then be published alongside the Producer build into an artifact repository such as Artifactory. The contracts are then easily available to the Producer as part of the auto generated tests. Consumers that use the service can retrieve the contracts to generate stubs and can also submit new contracts for the project. The Producer has control over the contracts in the project and can ensure that undesired changes are not made and that unwanted contracts are not added. The downside to this approach is that in a large organization it can be difficult to find all the API services that use contracts.

Another option is to store all the contracts in a centralized location. A single location for all contracts is very useful, as it also serves as a place to see other API interactions that are available. This central location would typically be a git repository, though if well organized it could also be a folder structure. The downside to this approach is that unless it is organized and set up correctly, it is possible and likely that contracts get pushed into a module that the producer has no intention of fulfilling.

Yet another option for storing contracts is to use a broker. The PACT contract framework has a broker product that can be used as a central location to host contracts. A broker can show all contracts that have been validated by the producer, as the producer will publish which contracts have been fulfilled. A broker can also see who is using a contract in order to produce a network diagram, can integrate with CI/CD pipelines and can provide even more valuable information. With the PACT Broker a producer is able to pull in submitted contracts to fulfill; the broker does not pull them in automatically. A broker seems to solve the problems of the previous suggestions; however, there is a setup cost and only the PACT framework has the PACT Broker product.

There are positives and negatives to each approach to storing contracts, and it is tempting to think that a broker is essential to an API contract testing program. When starting out in the world of contracts, following the standard of keeping contracts alongside a producer is a great approach. It is possible to move to a broker later, and it should be noted that many users of contracts never use a broker and stick to the basics of keeping contracts alongside the Producer.

API Contract Frameworks

We’ve explained what contracts are, how to use them as part of a project development and how they are stored and distributed. The next step is to choose a contract framework.

The good news is that there are multiple options to choose from. When choosing, it is valuable to look at the languages that are supported, the documentation and, most importantly, whether the framework is going to continue to be developed by the project maintainers. It is always possible to write your own; however, these frameworks handle setups that are common in industry.

As of writing the most popular API contract testing frameworks are:

- Pact.io
- Spring Cloud Contracts

Both frameworks support a range of languages, though Pact has a large amount of native support in languages, while Spring Cloud Contracts uses a Docker container to support non-JVM languages.

Pact at its core embraces Consumer Driven Contracts and has been written for this purpose, whereas Spring Cloud Contracts began more aimed at Producer Contract testing. Both frameworks support both testing methodologies.

As Pact firmly embraces Consumer Driven Contracts, the consumer can define the contract which is a very nice way to develop an API.

It should be noted Spring Cloud Contracts does have partial support for the PACT Broker as it can read the PACT specification.

There is no clear-cut rule about which framework is better. Unfortunately, as is always the case in software, the answer is: it depends.

Please note that each contract framework has its own suggestions about publishing and storing contracts. Frameworks can support multiple different ways of storing and publishing so it is important to read the documentation to make an informed decision.

Checklist: Should we adopt API Contracts

| Decision | Do we need to adopt API contracts? |
|---|---|
| Discussion Points | Do we have APIs that keep breaking compatibility? Are producers making API changes that they shouldn’t? Do we need to enforce consistency for our APIs? Are consumers finding it hard to integrate with our APIs? Are consumers using real instances of services for tests? Do the teams work closely together, and is there dialogue between producers and consumers? Are the resources available to introduce contracts? |
| Recommendations | Contracts should be added for external facing APIs that are being used by customers. Breaking compatibility of APIs for a paying customer is not acceptable. This is a simple and effective starting point. Contracts are not a silver bullet; teams need to work together for a successful contracts program. There is a cost involved in setting up contracts and maintaining them. In the long term, contracts can allow consumers to integrate more rapidly once a successful contracts program has been established. |

Introduction to Testcontainers

It is time to turn our attention to the Attendees Service, which uses gRPC, as we wish to integrate with it and test this boundary. Here the Attendees Service team has created a simple Docker image that contains a basic version of the Attendees Service that is a glorified stub. The Stub Attendees Service can be used to confirm that the Schedule service can make requests and receive a result. As this is gRPC, a lot of this is generated for us; the proto files are effectively our contracts as they define the interactions and message formats. To learn more, please read “Alternative API Formats”. The important part here is testing across this boundary, and Testcontainers can help launch this stub service to verify the interaction.

Testcontainers is a library that integrates with your testing framework to orchestrate containers. It manages ephemeral instances, in our case the Stub Attendees Service, which can be brought up and torn down for the lifetime of our test.

Let’s look at our integration test between the Schedule service and the Stub Attendees Service. The strategy will be to start the container, read the connection details of the Stub Attendees Service and then connect to it for the test. Here is the test setup for Testcontainers:

@SpringBootTest(classes = TestAttendeesServiceShould.DefaultConfig.class)
class TestAttendeesServiceShould {

    private static GenericContainer container = new GenericContainer(
            DockerImageName.parse("docker.io/library/attendees-server:0.0.1-SNAPSHOT"))
            .withExposedPorts(9090);

    @DynamicPropertySource
    static void grpcClientProperties(DynamicPropertyRegistry registry) {
        container.start();
        registry.add("grpc.client.attendeesService.address",
                () -> "static://" + container.getHost() + ":" + container.getFirstMappedPort().toString());
        registry.add("grpc.client.attendeesService.negotiationType", () -> "PLAINTEXT");
    }

    @Autowired
    AttendeesService attendeesService;

    @BeforeEach
    void setUp() {
        Assertions.assertTrue(container.isRunning());
    }

    // test methods follow

Let us describe some of the setup of this test:

- The service is using a plaintext connection.
- The Spring annotation @SpringBootTest is used to help set up the testing.
- The AttendeesService is autowired in; this is the class under test.
- The @DynamicPropertySource contains the configuration of the connection to the Attendees Service. This is required because, once the container has started, it needs to be interrogated so that information about the container can be supplied as property values. This is not the most idiomatic way to perform this task; however, it shows very explicitly what is happening.

With all the setup clarified we can now look at our tests.

A validation that the test container has started is performed in the @BeforeEach section of the test. This is not required; however, it has been left in as a sanity check to confirm that the container is running.

Here we see a test that validates that it is possible to get a list of attendees. Again it is important to note that this is a Stub Attendees Service and the response from this service has been hard coded to return the name “James”.

@Test
void return_an_attendee() {
    final AttendeeResponse attendees = attendeesService.getAttendees();
    final Attendee jamesAttendee = attendees.getAttendees(0);
    Assertions.assertEquals("James", jamesAttendee.getGivenName());
}

This is a sample of integration tests that are validating across a boundary. Again it is important to acknowledge that just one boundary integration is being validated and not any other components of the service.
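The lifecycle used above (start an ephemeral service, read its connection details, call it, tear it down) can be sketched without Docker for readers who want to experiment with the pattern. The example below is not Testcontainers and not gRPC: it uses the JDK's built-in `HttpServer` over plain HTTP as a stand-in for the container, and `EphemeralStubSketch`, `startStub`, `fetchFirstAttendee` and the `/attendees` path are hypothetical names chosen for illustration.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical stand-in for a containerized stub, built on the JDK's HttpServer. */
final class EphemeralStubSketch {
    private static final String HOST = "127.0.0.1";

    /** Starts a throwaway stub on a free port; it always answers with the hard-coded name "James". */
    static HttpServer startStub() throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress(InetAddress.getByName(HOST), 0), 0);
        server.createContext("/attendees", exchange -> {
            byte[] body = "James".getBytes(StandardCharsets.UTF_8);
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream out = exchange.getResponseBody()) {
                out.write(body);
            }
        });
        server.start();
        return server;
    }

    /** Runs the whole lifecycle: start, read the assigned port, call the stub, stop. */
    static String fetchFirstAttendee() {
        HttpServer stub = null;
        try {
            stub = startStub();
            // The port is only known after start-up, just like a mapped container port.
            int port = stub.getAddress().getPort();
            URI uri = URI.create("http://" + HOST + ":" + port + "/attendees");
            HttpResponse<String> response = HttpClient.newHttpClient()
                    .send(HttpRequest.newBuilder(uri).GET().build(), HttpResponse.BodyHandlers.ofString());
            return response.body();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            if (stub != null) {
                stub.stop(0);
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(fetchFirstAttendee());
    }
}
```

The important detail carried over from the container-based test is that the client never assumes a fixed port; it asks the running instance where it is listening.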

Other dockerized services

The Testcontainers setup has shown a boundary being tested using Docker images, and this same setup has been used in the Schedule service to test a database connection. The options for testing a database integration boundary are to mock out the database, to use an in-memory database such as H2, or to run a local version of the database using Testcontainers.

Testcontainers is a really powerful tool and should be considered when testing boundaries between any external services. Other common external boundaries that benefit from using Testcontainers include Kafka, Redis, NGINX.

Service testing Summary

We have covered a lot on service testing which includes testing your module and external modules. In case of integration testing we looked at integrating with a RESTful API, gRPC service and suggested how to test a database. All this is being performed without leaving the development environment. With service testing we can clearly see how the Schedules service we are building is able to work with its ingress and egress points, what we do not know is how it works as a whole when the other parts of the Conference System are deployed. Next we look at the UI testing portion of the test pyramid to help us answer this question.

Note

It is important to reiterate that though Service testing has been the largest section of this chapter Unit tests should still be the foundation of any module being developed. APIs have a lot of options for testing at a service level and we’ve presented these options so you can learn what might work best for your use case.

UI Testing

UI tests for an API seem a bit of an oxymoron. What we are designing is an API for other applications to call our system. If it is a UI that calls our system, fine. If it is a server side service or a cURL request that calls our system, that is fine as well. The common misconception is that UI testing involves some sort of Web UI like a Single Page Application (SPA). However, in reality the UI can be cURL, Postman or an SPA.

So, what exactly is UI testing? The UI part of the testing pyramid can mean a few things, and there are many interpretations. For some it means testing with a Web UI against a partially stubbed out backend service and ensuring that the UI works; for others it means end-to-end testing that the whole system works; and another meaning is to run a set of critical stories end to end, driven through the UI. In reality it is some or all of these, and it is up to you to understand what it is that you need. Across these examples of a UI test, a general observation is that multiple things are interacting together and all of them sound complex. This is why these tests are at the top of the testing pyramid. They are difficult to create and maintain and also take time to run.

The return for these tests is verifying the interactions from your UI and having validation that from an outside perspective the service is running correctly.

Having a full UI-driven test is really important for confidence and certainty that your module is working as expected. Let us reiterate the point: UI tests are complicated and should not be the dominant test of your API. It is incorrect to assume there is more value in testing the system as a whole than in testing the parts that make up the system. This is a cheap investment fallacy; please have a look at the excellently written article about this topic by Steve Smith.

With UI tests, what we are looking to test is that the module being built works correctly in its domain. When it deploys, it should work with the other services that it calls and work as part of the system. With our UI test we put our newly developed or updated service into the mix with real instances of the rest of the system, as we are looking to ensure that we have the correct behavior. It is okay to stub out some entities of a system, such as an external provider like AWS S3, as you really do not want your tests to rely on an external entity where the network can go down.

When starting to UI test, define the boundary of your service and what should be tested. If we look at the Sessions service we can see that the service is being triggered through the Schedule service. This is a good point to ask: is a test for the UI required? Or does a Kafka cluster (which is not used in this instance) need to be spun up? The answer to both questions is no. There is no benefit to this. Being able to define your boundary is useful because it stops you from trying to launch everything at once, which makes testing more difficult. It could be said that in this case it is fine to bring everything up, as this could be done with Docker Compose and run on most people’s laptops. However, in industry, where you may have hundreds or thousands of applications, this does not make sense, especially when half of them may not talk to your system.

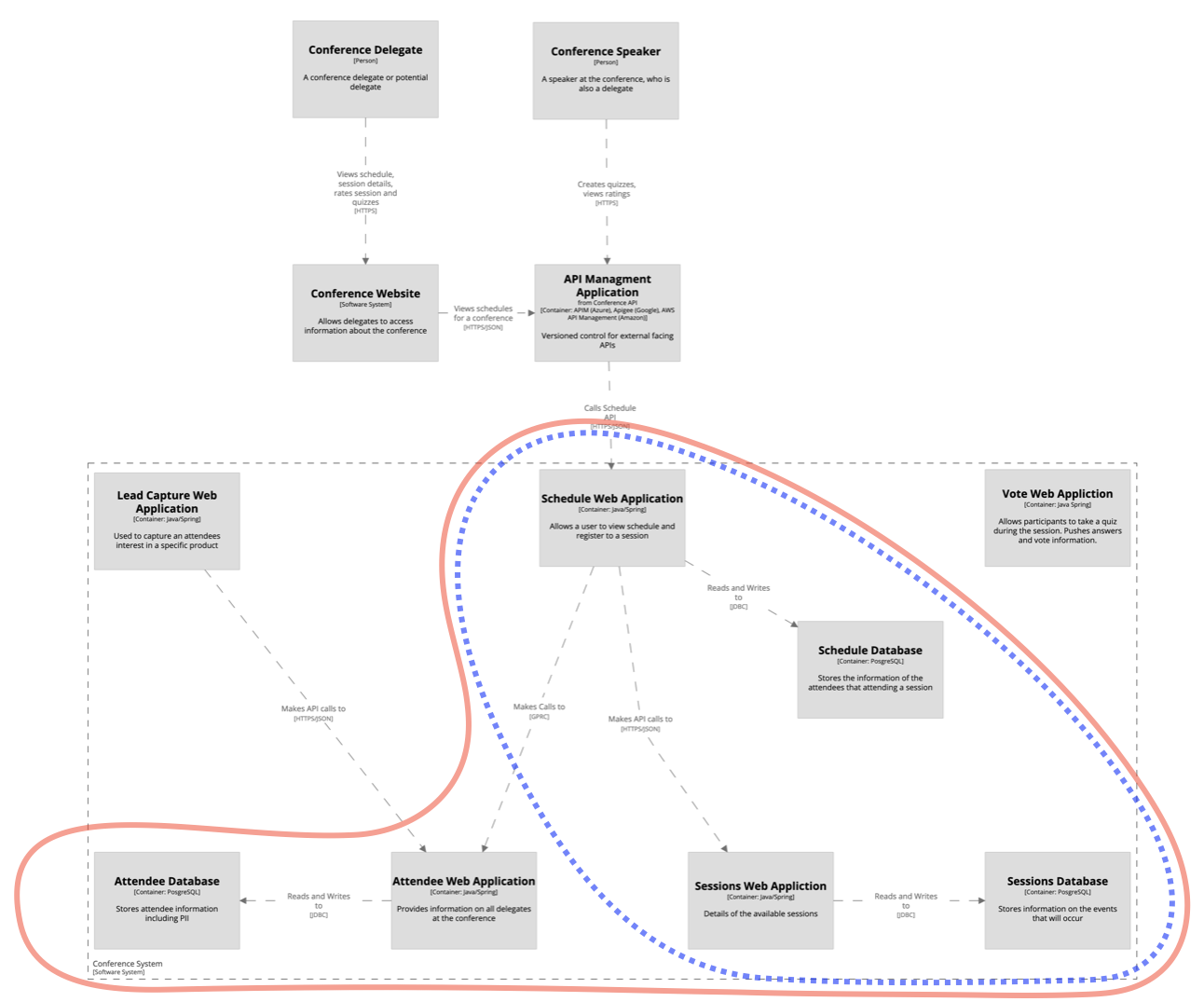

Figure 3-9. Test boundaries for the Schedule service

The visual of our conference system in Figure 3-9 shows the boundaries that we expect to use for a valid UI test. The two boundaries are:

- The dotted line, which contains the Sessions and Schedule services with their associated databases.
- The solid line, which contains the Attendees Service on top of all the services in the dotted line.

How should we test the Sessions Service and the Schedule service? The Sessions service is consumed by a WebUI (conference website) through the API Management layer and so it makes sense to add some UI tests to verify this behavior.

In our case we have a WebApp that is directly in our control. This WebUI is part of our overall domain, so it is easy for us to test using a tool like Selenium. Selenium is a tool that drives a browser and can run tests such as entering data, clicking buttons and generally mimicking a user's journey through a WebApp. As was mentioned, there is a cost to these types of tests, especially in setting them up. Is there a way to avoid a UI test in this instance?

Subcutaneous Tests

Subcutaneous tests are tests that run just below the UI. For example, instead of filling out a form and pressing the submit button on the UI, a subcutaneous test makes the call directly from a web client, e.g., cURL. Martin Fowler discusses using these types of tests “when you want to test end-to-end behavior, but it’s difficult to test through the UI itself”.

Using this technique is a great way to reduce the cost of setting up a UI driven test and still be able to get the full functionality of testing through your system.

If subcutaneous tests are so good, then why do they not replace UI driven tests? There are things that a UI may need or use that are not required when running against the API directly via a web client, such as CORS or CSRF. When a test is driven through the UI, the requests and stories are created using realistic payloads. This is sometimes forgotten with subcutaneous tests, where a value such as name will be set to “d” instead of a realistic value such as “Daniel”. Realistic payloads provide value, as it is all too common to find cases where a test passes in development but then in production a consumer sends a payload that is far bigger than was tested with and it breaks some validation rule.
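As a rough sketch of what a subcutaneous test can look like, the Python example below posts a session directly to an HTTP endpoint instead of filling in a form. Everything specific here is an assumption made for illustration: the `/sessions` route, the `title` and `speaker` fields, the 50-character validation rule and the tiny in-process server that stands in for the real Sessions service. Note that the payload uses realistic values rather than single-character placeholders.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class SessionsHandler(BaseHTTPRequestHandler):
    """Stand-in for the real Sessions service; the /sessions route is hypothetical."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        # A simple validation rule of the kind a realistic payload can trip over.
        valid = self.path == "/sessions" and 0 < len(payload.get("speaker", "")) <= 50
        body = json.dumps(payload if valid else {"error": "invalid session"}).encode()
        self.send_response(201 if valid else 400)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def post_session(base_url, payload):
    """Call the API just below the UI, as the form's submit button would."""
    request = urllib.request.Request(
        f"{base_url}/sessions",
        data=json.dumps(payload).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status, json.loads(response.read())


def run_subcutaneous_test():
    server = HTTPServer(("127.0.0.1", 0), SessionsHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        base_url = f"http://127.0.0.1:{server.server_port}"
        # Realistic values rather than single-character placeholders.
        payload = {"title": "Testing APIs in Practice", "speaker": "Daniel"}
        return post_session(base_url, payload)
    finally:
        server.shutdown()
        server.server_close()
```

Against a real deployment, only `post_session` and the assertions on its result would be needed; the embedded server exists so that the sketch runs on its own.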

Warning

If you are building an external facing API and you have multiple third parties that are consuming it, don’t try and copy the third party UI to replicate how their UI works. Doing so will mean that huge amounts of time will be spent trying to replicate something out of your domain.

Behavior Driven Development

Behavior Driven Development (BDD) is a development methodology where a behavior is defined that a system should fulfil. Like TDD, in BDD a test is written first and then the application will fulfil the behavior. Behavior should drive the implementation.

BDD is discussed in this UI section because it is normally done at the top level of the system, or on groupings of components or services.

A common misconception about BDD is that it is about creating automated test stories. Test stories are tests in a defined order that read a bit like a narrative, hence the name. However, BDD is actually about communication between developers, business users and QA/testers, discussing how a service should behave. A common language should be used between the parties so that no software tools are required.

BDD is about writing tests as user stories. These user stories consist of scenarios.

TITLE: The Schedule being used by an Attendee

Scenario 1:
    Given an Attendee requests a Schedule
    When they are registered for the day
    Then a schedule will be returned to them

Scenario 2:
    Given an Attendee requests a Schedule
    When there is no conference scheduled on that day
    Then no schedule is returned

These scenarios can be documented and run through by a tester. These user stories should be viewed as living documentation. If the tester runs through the user stories and they fail, then either the service is broken or something has changed and the story needs to be updated.

Think of the user stories as the business requirements that are being verified.

As mentioned, a common language should be used between all the parties, which makes it possible to create a DSL (Domain Specific Language) or use an existing, well-made one. A side effect is that tools need to be developed to read this DSL, and only then is it possible to have automated tests defining how a service should behave. An example of a tool for BDD is Cucumber, where a DSL is used to generate automated tests.
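To make the idea of a tool reading the DSL concrete, here is a deliberately small Python sketch. It is not how Cucumber works internally and uses no BDD library; it simply maps the exact wording of each step in the Schedule scenarios to a function. The `step` decorator, `run_scenario` and `find_schedule` (a stand-in for a call to the Schedule service, returning made-up sessions) are hypothetical names for this illustration.

```python
STEPS = {}  # maps the exact wording of a step to the function that runs it


def step(text):
    """Register a function as the implementation of one scenario line."""
    def register(func):
        STEPS[text] = func
        return func
    return register


def find_schedule(registered, conference_on_day):
    """Hypothetical stand-in for a call to the Schedule service."""
    if registered and conference_on_day:
        return ["09:00 Keynote", "10:00 Testing APIs"]
    return None


@step("Given an Attendee requests a Schedule")
def attendee_requests(ctx):
    ctx["requested"] = True


@step("When they are registered for the day")
def registered_for_day(ctx):
    ctx["schedule"] = find_schedule(registered=True, conference_on_day=True)


@step("When there is no conference scheduled on that day")
def no_conference(ctx):
    ctx["schedule"] = find_schedule(registered=True, conference_on_day=False)


@step("Then a schedule will be returned to them")
def schedule_returned(ctx):
    assert ctx["schedule"] is not None


@step("Then no schedule is returned")
def no_schedule(ctx):
    assert ctx["schedule"] is None


def run_scenario(text):
    """Run each non-empty line of a scenario; an unknown step raises KeyError."""
    ctx = {}
    for line in text.strip().splitlines():
        line = line.strip()
        if line:
            STEPS[line](ctx)
    return ctx
```

Passing the three lines of Scenario 1 to `run_scenario` executes the matching functions in order, and a failed `Then` assertion signals that either the service or the story needs attention. Real tools add parameterized steps, reporting and much more.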

UI test summary

UI testing adds good value at the top of the pyramid, giving us confidence in our system. The cost to build and maintain these tests is greater than for the other tests, but this should not discourage you from using them, as long as they are concentrated on the core journeys of the user and not on edge cases. UI tests are not just for testing a UI; most UI frameworks make it possible to add unit tests to a WebApp, for example with Jasmine for JavaScript.

Testing during the building of your application

When building your application you have the opportunity to verify that your module is backwards compatible and has a valid OpenAPI Specification. Whether the module you are changing is driven specification first or code first, the OpenAPI spec can be obtained and verified. It is then possible to add a check to a GitHub Action, a Jenkins build pipeline or any other build tool.

We covered OpenAPI diff tools and what they provide in Chapter 2, “OpenAPI Specification and Versioning”.
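As a simplified illustration of the kind of check a build step could run, the Python sketch below compares two OpenAPI documents that have already been loaded into dictionaries and lists the operations that were removed. It is intentionally narrow and is not a substitute for a real diff tool: it ignores parameters, schemas and responses. The function names are hypothetical.

```python
# Operation keys that may appear under a path item in an OpenAPI 3 document.
HTTP_METHODS = {"get", "put", "post", "delete", "patch", "head", "options", "trace"}


def operations(spec):
    """Collect 'METHOD /path' strings for every operation in a spec dictionary."""
    return {
        f"{method.upper()} {path}"
        for path, item in spec.get("paths", {}).items()
        for method in item
        if method in HTTP_METHODS
    }


def removed_operations(old_spec, new_spec):
    """Return, sorted, the operations present in old_spec but missing from new_spec."""
    return sorted(operations(old_spec) - operations(new_spec))


def check_backwards_compatible(old_spec, new_spec):
    """Fail the build (non-zero exit) if any operation has been removed."""
    removed = removed_operations(old_spec, new_spec)
    if removed:
        raise SystemExit("Breaking change, removed: " + ", ".join(removed))
```

A pipeline could load the previously published spec and the newly generated one, call `check_backwards_compatible`, and let the non-zero exit stop the build when an operation has disappeared.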

Can you test too much?

Testing gives us confidence, and though it is recommended that more time be spent on writing tests than on business logic, there is a point where too much testing can be done. If too much time is spent working on tests then the module will never be delivered. A balance is always needed: enough test coverage to provide confidence, without delaying delivery. Be smart about what should be tested and what is irrelevant; creating tests that duplicate scenarios, for example, is a waste of resources.

An architect must be able to recognize where testing becomes excessive for an API, module or application, as its value for customers and the business is only realized when running in production.

Summary

In this chapter we have covered the core requirements to test an API, including what should be tested and where time should be dedicated. Key takeaways are as follows:

- Stick to the fundamentals of unit testing and use TDD for the core of the application.

- Perform service tests on your component and isolate the integrations to validate incoming and outgoing traffic.

- Finally, use UI tests to validate that your modules all integrate together, verifying this using journeys of core interactions.

This should deliver a solid product for your customers.

While we’ve given you lots of information, ideas and techniques for building a quality API, this is by no means an exhaustive list of tools available. We encourage you to do some research on frameworks and libraries that you may want to use, to ensure you are making an informed decision.

However, no matter how much testing is done upfront, nothing is as good as seeing how an application actually runs in production. To learn more about testing in production, see Chapter 5.

1 The authors’ friend owns Titan Mouthguards. One of the authors was on the receiving end of hearing about the arduous process for testing the integrity of the product. A mouthguard must go through simulations, stress testing and other such integrity tests. No one wants a mouthguard where most or all of the testing takes place on the rugby field!

2 The Classicist is also known as the Chicago School and Mockist as the London School.