As the name suggests, parallel LINQ is an extension of the previous LINQ capabilities provided in previous versions of .NET.

In the first solution (Parallel LINQ), Microsoft expert Stephen Toub explains the reasons for this approach in Patterns Of Parallel Programming (available at https://www.microsoft.com/en-us/download/details.aspx?id=19222):

"A significant majority of the work in many applications and algorithms is done through loop control constructs. Loops, after all, often enable the application to execute a set of instructions over and over, applying logic to discrete entities, whether those entities are integral values, such as in the case of a for loop, or sets of data, such as in the case of a for each loop.

Many languages have built-in control constructs for these kinds of loops, Microsoft Visual C#® and Microsoft Visual Basic® being among them, the former with for and foreach keywords, and the latter with For and For Each keywords. For problems that may be considered delightfully parallel, the entities to be processed by individual iterations of the loops may execute concurrently: thus, we need a mechanism to enable such parallel processing."

One of these mechanisms is the AsParallel() method, applicable to expressions that imply the resources we've seen in relation to LINQ and Generic collections. Let's explore this in detail in an example (in this case, the sample will be linked to CPU-bound code).

Our demo will have a simple UI, and we're going to calculate prime numbers between 1 and 3,000,000.

I'll start by creating the prime calculation algorithm as an extension method of the int type, with the following code:

public static class BaseTypeExtensions

{

public static bool IsPrime(this int n)

{

if (n <= 1) return false;

if ((n & 1) == 0)

{

if (n == 2) return true;

else return false;

}

for (int i = 3; (i * i) <= n; i += 2)

{

if ((n % i) == 0) return false;

}

return n != 1;

}

}Now, we'll use three buttons to compare different behaviors: without parallelism, with parallelism, and with parallelism using ordered results.

Previously, we defined some basic values:

Stopwatch watch = new Stopwatch(); IEnumerable <int> numbers = Enumerable.Range(1, 3000000); string strLabel = "Time Elapsed: ";

The two important elements here are Stopwatch, to measure the time elapsed, and the initial collection of numbers, which we are going to generate using the static Range method of the Enumerable class, from 1 to 3,000,000.

The code in the first button is pretty straightforward:

private void btnGeneratePrimes_Click(object sender, EventArgs e)

{

watch.Restart();

var query = numbers.Where(n => n.IsPrime());

var primes = query.ToList();

watch.Stop();

label1.Text = strLabel + watch.ElapsedMilliseconds.ToString("0,000")+ " ms.";

listBox1.DataSource = primes.ToList();

}But, in the second button, we're including the AsParallel construct we mentioned earlier. It's quite similar to the previous one, but we indicate that before getting any results, we want the numbers collection to be treated in parallel.

When you execute the sample (the elapsed time values will vary slightly depending on the machine you're using), this second method is considerably faster than the previous one.

This means that the code has used all cores available in the machine to perform the task (the where method next to AsParallel).

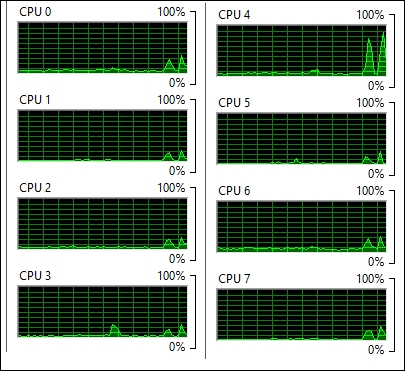

You have a way to prove this immediately: just open the Task Manager and select the Performance tab. In there (if you're using Windows 10 like me), you have to open Resource Monitor to view the activities of all the CPUs present in your machine.

At runtime, the difference becomes evident: in the first event handler, only one CPU appears to be working. If you do this with the parallel method, you'll see that there is an activity (probably in all CPUs if it's not configured in some other way).

This happens with the third option as well, (more about it soon), which uses the AsOrdered() clause. In my box (with eight cores), the resulting window shows the following output:

So, we're really using parallelism, with a very simple addition to our code! The difference in the results becomes evident (as an average, it's about one-third of the time with respect to the synchronous option).

But we still have a problem. If you take a look at the output of the second Listbox control, at some point, you'll see that the list is not ordered, as it happened in the first case. This is normal, since we're using several cores to run the results and the code adds these results in the order in which they are received from the eight cores (in my case).

This order will vary depending on the number of cores, the speed, and other factors difficult to foresee. So, if we really need the results ordered, just as in the first case, we can use the AsOrdered() method, applied right next to the AsParallel() indication.

In this way, the resulting code is fairly the same as in the second method, but the results are ordered now, just with a (usually negligible) delay.

In the next screenshot, I'm moving to prime number 18973 just to show the different way in which Listboxes were filled:

If there are no other processes consuming CPU, successive executions of these methods will offer slightly different results, but the variations will be minimal (actually, sometimes, you'll see that the AsOrdered() method appears to run faster than the non ordered one, but that's only because of the CPU activity).

In general, if you need to really evaluate the execution time, you should perform the benchmarks several times and vary some of the initial conditions.

Dealing with other issues appears to be an excellent way to use all the resources available in our machine, but other considerations may lead us to modify this code. For example, if our application should behave correctly under stress conditions, or we should respect the possible execution of other applications and the process to parallelize is much heavier than this one, it could be wise to use a feature called Parallelization Degree.

With this feature, we can establish the number of cores to use in our application by code, leaving the rest for other machines' applications and services.

We can use this code to include this feature in another button, which will use only a limited number of cores this time. But how do we determine the number of cores? A reasonable solution would be to use only half of the cores available in the system, leaving the other half free.

Fortunately, there's an easy way to find out this number (no need to use Platform/Invoke, Registry values, or WMI): the Environment class has static properties that allow simple access to certain useful values directly: in this case, the ProcessorCount property returns the number of cores. So we can write the following (I'm showing only the modified line):

var query = numbers.AsParallel().AsOrdered() .WithDegreeOfParallelism(Environment.ProcessorCount/2) .Where(n => n.IsPrime());

In this case, in my machine, I'll be using only four cores, which should show a gain in the performance although not as much as when using all cores (I've changed the numbers collection to 5,000.000 in order to better appreciate these values. Refer to the screenshot):

Another case that we should consider in our code in when the user, for whatever reason, wants to have the ability to cancel the process at a given moment.

The solution for this, as mentioned earlier in this section, is the cancellation feature. It is performed using token, which you pass to the process in its definition, and can be later used to force the cancelation (and the subsequent detention of the process).

For code brevity, we'll use a trick: extend again the int type so that it admits this token feature. We can write simple extension code, as follows:

public static bool IsPrime(this int n, Cancellation TokenSource cs)

{

if (n == 1000) cs.Cancel();

return IsPrime(n);

}As you can see, we now have an overload of IsPrime, which calls the basic implementation only while n is distinct to 1000. As we reach the thousandth integer, the Cancel method of the CancellationTokenSource instance is called.

The behavior of this depends on the possible previous configuration values of this class. As shown in the next screenshot, several values allow us to manipulate and find out related information, such as whether it can be really canceled, whether the cancelation has been requested, and even a low-level value WaitHandle, which is signaled when the token is canceled.

This WaitHandle property is another object that provides access to the native operating system handle for this thread and has properties and methods to release all resources held by the current WaitHandle property (the Close method) or to block the current thread until WaitHandle receives a signal:

Obviously, in this case, the process is a bit more complex, since we need to catch the exception launched by the token and act accordingly:

private void btnPrimesWithCancel_Click(object sender, EventArgs e)

{

List<int> primes;

using (var cs = newCancellationTokenSource())

{

watch.Restart();

var query = numbers.AsParallel().AsOrdered()

.WithCancellation(cs.Token)

.WithDegreeOfParallelism(Environment.ProcessorCount / 2)

.Where(n => n.IsPrime(cs));

try

{

primes = query.ToList();

}

catch (OperationCanceledException oc)

{

string msg1 = "Query cancelled.";

string msg2 = "Cancel Requested: " +

oc.CancellationToken.IsCancellationRequested.ToString();

listBox5.Items.Add(msg1);

listBox5.Items.Add(msg2);

}

}

watch.Stop();

lblCancel.Text = strLabel + watch.ElapsedMilliseconds.ToString("0,000") + " ms.";

}Note the use of WithCancellation(cs.Token) inside the query and also that the entire process in embedded in a using structure in order to guarantee the release of resources after the process ends.

Besides, instead of using another mechanism, we add a cancelation message to the corresponding Listbox control, indicating whether the token was really canceled. You can see this in the next screenshot (also, note that the time elapsed is considerably less than the rest of cases):

Nevertheless, there are some occasions in which the use of parallelism in this form might not be recommendable or is limited, such as when using operators, such as Take or SkipWhile, and also for the indexed versions of Select or ElementAt. In other circumstances, the overhead generated might be big, such as when using Join, Union, or GroupBy.