A common mistake we observe is relying on what debuggers report without double-checking. Present-day debuggers, like WinDbg or GDB, are symbol-driven; they do not possess much of the semantic knowledge that a human debugger has. Also, it is better to report more than less: what is irrelevant can be skipped over by a skilled memory analyst, but what looks suspicious for the problem at hand should be double-checked to see whether it is coincidental. One example we consider here is the Coincidental Symbolic Information pattern (Volume 1, page 390).

An application is frequently crashing. The process memory dump file shows only one thread left inside, without any exception handling frames. In order to hypothesize about the probable cause, the raw stack data of that thread is analyzed. It shows a few C++ STL calls with a custom smart pointer class and memory allocator, like this:

app!std::vector<SmartPtr<ClassA>, std::allocator<SmartPtr<ClassA> > >::operator[]+

The WinDbg !analyze -v command output points to this code:

FOLLOWUP_IP:
app!std::bad_alloc::~bad_alloc <PERF> (app+0x0)+0
00400000 4d dec ebp

Raw stack data contains a few symbolic references to the bad_alloc destructor too:

[...]
0012f9c0 00000100
0012f9c4 00400100 app!std::bad_alloc::~bad_alloc <PERF> (app+0x100)
0012f9c8 00000000
0012f9cc 0012f9b4
0012f9d0 00484488 app!_NULL_IMPORT_DESCRIPTOR+0x1984
0012f9d4 0012fa8c
0012f9d8 7c828290 ntdll!_except_handler3
0012f9dc 0012fa3c
0012f9e0 7c82b04a ntdll!RtlImageNtHeaderEx+0xee
0012f9e4 00482f08 app!_NULL_IMPORT_DESCRIPTOR+0x404
0012f9e8 00151ed0
0012f9ec 00484c1e app!_NULL_IMPORT_DESCRIPTOR+0x211a
0012f9f0 00000100
0012f9f4 00400100 app!std::bad_alloc::~bad_alloc <PERF> (app+0x100)
[...]

By linking these three pieces together, an engineer hypothesized that the cause of the failure was memory allocation. However, careful analysis reveals all of them to be coincidental symbolic information and renders the hypothesis much less plausible:

The address of app!std::bad_alloc::~bad_alloc is 00400000, which coincides with the start of the main application module:

0:000> lm a 00400000
start    end      module name
00400000 004c4000 app (no symbols)

As a consequence, its assembly language code makes no sense:

0:000> u 00400000
app:
00400000 4d dec ebp
00400001 5a pop edx
00400002 90 nop
00400003 0003 add byte ptr [ebx],al
00400005 0000 add byte ptr [eax],al
00400007 000400 add byte ptr [eax+eax],al
0040000a 0000 add byte ptr [eax],al
0040000c ff ???

All std::vector references are in fact fragments of a UNICODE string that can be dumped using the du command:

[...]
0012ef14 00430056 app!std::vector<SmartPtr<ClassA>, std::allocator<SmartPtr<ClassA> > >::operator[]+0x16
0012ef18 00300038
0012ef1c 0043002e app!std::vector<SmartPtr<ClassA>, std::allocator<SmartPtr<ClassA> > >::size+0x1
[...]
0:000> du 0012ef14 l6
0012ef14 "VC80.C"
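The same check can be done by hand or offline. The following is a minimal Python sketch, not part of the original analysis, that packs the three stack values shown above as little-endian 32-bit integers and decodes the bytes as UTF-16LE. The function name `dwords_to_utf16` is made up for this illustration.

```python
import struct

def dwords_to_utf16(dwords):
    """Pack 32-bit stack values as little-endian bytes and decode them as UTF-16LE."""
    raw = struct.pack("<%dI" % len(dwords), *dwords)
    return raw.decode("utf-16-le")

# Values copied from the raw stack fragment at 0012ef14 .. 0012ef1c.
stack_values = [0x00430056, 0x00300038, 0x0043002E]

text = dwords_to_utf16(stack_values)
print(text)
```

Each value holds two 16-bit characters, which is why six characters come out of three stack slots.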

Raw stack data references to bad_alloc destructor are still module addresses in disguise, 00400100 or app+0x100, with nonsense assembly code:

0:000> u 00400100
app+0x100:
00400100 50 push eax
00400101 45 inc ebp
00400102 0000 add byte ptr [eax],al
00400104 4c dec esp
00400105 010500571aac add dword ptr ds:[0AC1A5700h],eax
0040010b 4a dec edx
0040010c 0000 add byte ptr [eax],al
0040010e 0000 add byte ptr [eax],al
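One way to see why the disassembly is nonsense is to read the opcode bytes as data. This is an inference from the bytes shown in the two listings above, not something the original text states: the bytes at 00400000 begin with the "MZ" signature of a DOS header, and the bytes at 00400100 begin with the "PE\0\0" signature followed by the COFF machine value 0x014c (32-bit x86). A small Python sketch under that reading, with a hypothetical helper name:

```python
import struct

IMAGE_FILE_MACHINE_I386 = 0x014C  # COFF machine value for 32-bit x86

def classify_module_bytes(data):
    """Name the PE structure that the given bytes appear to start, if any."""
    if data[:2] == b"MZ":
        return "DOS (MZ) header"
    if data[:4] == b"PE\x00\x00":
        # The 16-bit Machine field directly follows the 4-byte PE signature.
        (machine,) = struct.unpack_from("<H", data, 4)
        if machine == IMAGE_FILE_MACHINE_I386:
            return "PE signature, machine i386"
        return "PE signature"
    return "no known header signature"

# Opcode bytes copied from the disassembly listings at 00400000 and 00400100.
at_module_start = bytes.fromhex("4d 5a 90 00 03 00 00 00 04 00 00 00 ff")
at_offset_0x100 = bytes.fromhex("50 45 00 00 4c 01 05 00 57 1a ac 4a")

print(classify_module_bytes(at_module_start))
print(classify_module_bytes(at_offset_0x100))
```

If the bytes at a supposed function address classify as module headers, the symbol attached to that address is very likely coincidental.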

Yet another common mistake is not looking past the first evidence found: for example, not looking further to prove or disprove a hypothesis after finding a pattern. Let me illustrate this with a complete memory dump from a frozen system. Careful analysis of wait chains[1] revealed a thread owning a mutant and blocking other threads from many processes:

THREAD 882e8730 Cid 0f64.2ce0 Teb: 7ff76000 Win32Thread: 00000000 WAIT: (Unknown) UserMode Non-Alertable
    89a76e08 SynchronizationEvent
IRP List:
    883fbba0: (0006,0220) Flags: 00000830 Mdl: 00000000
Not impersonating
DeviceMap e1003880
Owning Process 89e264e8 Image: ProcessA.exe
Attached Process N/A Image: N/A
Wait Start TickCount 5090720 Ticks: 1981028 (0:08:35:53.562)
Context Switch Count 8376
UserTime 00:00:00.000
KernelTime 00:00:00.015
Win32 Start Address 0x010d22a3
LPC Server thread working on message Id 10d22a3
Start Address kernel32!BaseThreadStartThunk (0x77e617ec)
Stack Init b6e8b000 Current b6e8ac60 Base b6e8b000 Limit b6e88000 Call 0
Priority 10 BasePriority 10 PriorityDecrement 0
ChildEBP RetAddr
b6e8ac78 8083d26e nt!KiSwapContext+0x26
b6e8aca4 8083dc5e nt!KiSwapThread+0x2e5
b6e8acec 8092cd88 nt!KeWaitForSingleObject+0x346
b6e8ad50 8083387f nt!NtWaitForSingleObject+0x9a
b6e8ad50 7c82860c nt!KiFastCallEntry+0xfc (TrapFrame @ b6e8ad64)
0408f910 7c827d29 ntdll!KiFastSystemCallRet
0408f914 77e61d1e ntdll!ZwWaitForSingleObject+0xc
0408f984 77e61c8d kernel32!WaitForSingleObjectEx+0xac
0408f998 027f0808 kernel32!WaitForSingleObject+0x12
0408f9bc 027588d4 DllA!DriverB_DependentFunction+0x86
[...]
0408ffec 00000000 kernel32!BaseThreadStart+0x34

So did we find a culprit component, DllA, or not? Could this blockage have resulted from earlier problems? We search the stack trace collection (Volume 1, page 409) for any other anomalous activity (Semantic Split, Volume 3, page 120), and we do indeed find a recurrent stack trace pattern across the process landscape:

THREAD 89edadb0 Cid 09fc.1050 Teb: 7ff54000 Win32Thread: 00000000 WAIT: (Unknown) KernelMode Non-Alertable
    8a38b82c SynchronizationEvent
IRP List:
    893a7470: [...]
[...] Ticks: 3366871 (0:14:36:47.359)
Context Switch Count 121867
UserTime 00:00:25.093
KernelTime 00:00:01.968
Win32 Start Address MSVCR71!_threadstartex (0x7c3494f6)
Start Address kernel32!BaseThreadStartThunk (0x77e617ec)
Stack Init f3a9c000 Current f3a9b548 Base f3a9c000 Limit f3a99000 Call 0
Priority 8 BasePriority 8 PriorityDecrement 0
ChildEBP RetAddr
f3a9b560 8083d26e nt!KiSwapContext+0x26
f3a9b58c 8083dc5e nt!KiSwapThread+0x2e5
f3a9b5d4 f6866255 nt!KeWaitForSingleObject+0x346
f3a9b5f4 f68663e3 DriverA!Block+0x1b
f3a9b604 f5ba05f1 DriverA!BlockWithTracker+0x19
f3a9b634 f5b9ba24 DriverB!DoRequest+0xc2
f3a9b724 f5b9b702 DriverB!QueryInfo+0x392
[...]

0: kd> !irp 893a7470
[...]
\Driver\DriverB
Args: 0000015c 00000024 00120003 111af32c

We know that DllA!DriverB_DependentFunction will not work if the DriverB device doesn't function (for example, remote file system access without network access). The thread 89edadb0 had been waiting for more than 14 hours, whereas the originally found thread 882e8730 had been waiting for less than 9 hours. This suggests looking into DriverB / DriverA functions first.
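The comparison of wait times can be reproduced from the raw Ticks values. The sketch below is only an illustration: it assumes a tick interval of 15.625 ms, which is not stated in the text but reproduces both durations printed by the debugger for this dump (other systems may use a different interval). The helper name `wait_duration` is hypothetical.

```python
from datetime import timedelta

# Assumption: 15.625 ms per tick, chosen because it matches both outputs above.
TICK_MICROSECONDS = 15625

def wait_duration(ticks, tick_us=TICK_MICROSECONDS):
    """Convert a Ticks value from !thread output into a timedelta."""
    return timedelta(microseconds=ticks * tick_us)

# Thread address -> Ticks, copied from the two !thread outputs.
waits = {"882e8730": 1981028, "89edadb0": 3366871}

for thread, ticks in sorted(waits.items(), key=lambda item: item[1], reverse=True):
    print(thread, wait_duration(ticks))

# The thread that has waited longest is the better candidate for the original problem.
longest = max(waits, key=waits.get)
print("longest waiting thread:", longest)
```

Sorting all blocked threads by wait time in this way gives a rough ordering of events, with the longest waiters pointing at the earliest blockage.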

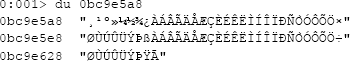

One of the common mistakes, especially during a rush to provide analysis results, is overlooking UNICODE or ASCII fragments on thread stacks and mistakenly assuming that the symbolic references found there have some significance:

0:001> dds 0bc9e5a8 0bc9e5d4
0bc9e5a8 00b900b8
0bc9e5ac 00bb00ba
0bc9e5b0 00bd00bc
0bc9e5b4 00bf00be ApplicationA!stdext::unchecked_uninitialized_fill_n<std::map<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> >,std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> >,std::less<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > >,std::allocator<std::pair<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > const ,std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > > > > * *,unsigned int,std::map<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> >,std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> >,std::less<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > >,std::allocator<std::pair<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > const ,std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > > > > *,std::allocator<std::map<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> >,std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> >,std::less<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > >,std::allocator<std::pair<std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > const ,std::basic_string<unsigned short,std::char_traits<unsigned short>,std::allocator<unsigned short> > > > > *> >+0x1e
0bc9e5b8 00c100c0 ApplicationA!EnumData+0x670
0bc9e5bc 00c300c2 ApplicationA!CloneData+0xe2
0bc9e5c0 00c500c4 ApplicationA!LoadData+0x134
0bc9e5c4 00c700c6 ApplicationA!decompress+0x1ca6
0bc9e5c8 00c900c8 ApplicationA!_TT??_R2?AVout_of_rangestd+0x10c
0bc9e5cc 00cb00ca ApplicationA!AppHandle <PERF> (ApplicationA+0xd00ca)
0bc9e5d0 00cd00cc
0bc9e5d4 00cf00ce

We can see, and also double-check from disassembly using the u/ub WinDbg commands, that the function names are coincidental (Volume 1, page 390). It just so happened that the ApplicationA module spans an address range that includes the UNICODE fragment values from 00bf00be to 00cb00ca (values having the pattern 00xx00xx):

0:001> lm m ApplicationA
start    end      module name
00be0000 00cb8000 ApplicationA (export symbols) ApplicationA.exe
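The coincidence can be checked mechanically. The following Python sketch, offered only as an illustration, flags stack values that both have the 00xx00xx shape and fall inside the module range reported by lm. It assumes the "end" address printed by lm is exclusive; the function name is invented for this example.

```python
def looks_like_utf16_pair(value):
    """True when a 32-bit value has the 00xx00xx shape with both xx bytes non-zero."""
    high_bytes_clear = (value & 0xFF00FF00) == 0
    low_char = value & 0xFF
    high_char = (value >> 16) & 0xFF
    return high_bytes_clear and low_char != 0 and high_char != 0

# Module range from "lm m ApplicationA" (end treated as exclusive, an assumption).
MODULE_START, MODULE_END = 0x00BE0000, 0x00CB8000

# Values copied from the dds output for 0bc9e5a8 .. 0bc9e5d4.
stack_values = [
    0x00B900B8, 0x00BB00BA, 0x00BD00BC, 0x00BF00BE,
    0x00C100C0, 0x00C300C2, 0x00C500C4, 0x00C700C6,
    0x00C900C8, 0x00CB00CA, 0x00CD00CC, 0x00CF00CE,
]

coincidental = [
    value for value in stack_values
    if looks_like_utf16_pair(value) and MODULE_START <= value < MODULE_END
]

for value in coincidental:
    print("%08x is inside the module but looks like a UNICODE fragment" % value)
```

The flagged values are the same slots that the debugger decorated with symbol names, while the neighbouring values of identical shape outside the module range were left bare.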

One common question is how to determine a service name from a kernel memory dump where PEB information is not available (!peb WinDbg command). For example, there are plenty of svchost.exe processes running and one has a handle leak. We suggest using the following empirical data:

Look at driver modules on stack traces (e.g. termdd)

Look at the relative position of svchost.exe in the list of processes that reflects service startup dependency (!process 0 0)

Execution residue (Volume 2, page 239) and string pointers on thread raw stacks (WinDbg script, Volume 1, page 236)

Process handle table (usually available for the current process, in my experience)

The number of threads and distribution of modules on thread stack traces (might require reference stack traces, Volume 1, page 707)

IRP information (e.g. a driver, device and file objects), for example:

THREAD fffffa800c21fbb0 Cid 0264.4ba4 Teb: 000007fffff92000 Win32Thread: fffff900c2001d50 WAIT: (WrQueue) UserMode Alertable
    fffffa800673f330 QueueObject
IRP List:
    fffffa800c388010: (0006,0478) Flags: 00060070 Mdl: 00000000
Not impersonating
DeviceMap fffff88000006160
Owning Process fffffa8006796c10 Image: svchost.exe
Attached Process N/A Image: N/A
Wait Start TickCount 30553196 Ticks: 1359 (0:00:00:21.200)
Context Switch Count 175424 LargeStack
UserTime 00:00:05.834
KernelTime 00:00:32.541
Win32 Start Address 0x0000000077a77cb0
Stack Init fffffa60154c6db0 Current fffffa60154c6820
Base fffffa60154c7000 Limit fffffa60154bf000 Call 0
Priority 10 BasePriority 8 PriorityDecrement 0 IoPriority 2 PagePriority 5
Child-SP RetAddr Call Site
fffffa60`154c6860 fffff800`01ab20fa nt!KiSwapContext+0x7f
fffffa60`154c69a0 fffff800`01ab55a4 nt!KiSwapThread+0x13a
fffffa60`154c6a10 fffff800`01d17427 nt!KeRemoveQueueEx+0x4b4
fffffa60`154c6ac0 fffff800`01ae465b nt!IoRemoveIoCompletion+0x47
fffffa60`154c6b40 fffff800`01aaf933 nt!NtWaitForWorkViaWorkerFactory+0x1fe
fffffa60`154c6c20 00000000`77aa857a nt!KiSystemServiceCopyEnd+0x13 (TrapFrame @ fffffa60`154c6c20)
00000000`04e7fb58 00000000`00000000 0x77aa857a

3: kd> !irp fffffa800c388010
Irp is active with 6 stacks 6 is current (= 0xfffffa800c388248)
No Mdl: System buffer=fffffa800871b210: Thread fffffa800c21fbb0: Irp stack trace.
cmd flg cl Device File Completion-Context
[ 0, 0] 0 0 00000000 00000000 00000000-00000000
    Args: 00000000 00000000 00000000 00000000
[ 0, 0] 0 0 00000000 00000000 00000000-00000000
    Args: 00000000 00000000 00000000 00000000
[ 0, 0] 0 0 00000000 00000000 00000000-00000000
    Args: 00000000 00000000 00000000 00000000
[ 0, 0] 0 0 00000000 00000000 00000000-00000000
    Args: 00000000 00000000 00000000 00000000
[ 0, 0] 0 0 00000000 00000000 00000000-00000000
    Args: 00000000 00000000 00000000 00000000
>[ e, 0] 5 1 fffffa8006018060 fffffa8007bf0e60 00000000-00000000 pending
    \Driver\dpdr
    Args: 00000100 00000000 00090004 00000000

3: kd> !fileobj fffffa8007bf0e60
TSCLIENTSCARD14
Device Object: 0xfffffa8006018060 \Driver\dpdr
Vpb is NULL
Access: Read Write SharedRead SharedWrite
Flags: 0x44000
    Cleanup Complete
    Handle Created
FsContext: 0xfffff8801807c010 FsContext2: 0xfffff8801807c370
CurrentByteOffset: 0
Cache Data:
    Section Object Pointers: fffffa800c50fdc8
    Shared Cache Map: 00000000

Previously we wrote on how to get a 32-bit stack trace from a 32-bit process thread on an x64 system (Volume 3, page 43). There are situations when we are interested in all such stack traces, for example, from a complete memory dump. We wrote a script that extracts both 64-bit and WOW64 32-bit stack traces:

.load wow64exts
!for_each_thread "!thread @#Thread 1f;.thread /w @#Thread; .reload; kb 256; .effmach AMD64"

Here is a WinDbg example output fragment for the thread fffffa801f3a3bb0 from a very long debugger log file:

[...]
Setting context for owner process...
.process /p /r fffffa8013177c10
THREAD fffffa801f3a3bb0 Cid 4b4c.5fec Teb: 000000007efaa000 Win32Thread: fffff900c1efad50 WAIT: (UserRequest) UserMode Non-Alertable
    fffffa8021ce4590 NotificationEvent
    fffffa801f3a3c68 NotificationTimer
Not impersonating
DeviceMap fffff8801b551720
Owning Process fffffa8013177c10 Image: application.exe
Attached Process N/A Image: N/A
Wait Start TickCount 14066428 Ticks: 301 (0:00:00:04.695)
Context Switch Count 248 LargeStack
UserTime 00:00:00.000
KernelTime 00:00:00.000
Win32 Start Address mscorwks!Thread::intermediateThreadProc (0x00000000733853b3)
Stack Init fffffa60190e5db0 Current fffffa60190e5940
Base fffffa60190e6000 Limit fffffa60190df000 Call 0
Priority 11 BasePriority 10 PriorityDecrement 0 IoPriority 2 PagePriority 5
Child-SP RetAddr Call Site
fffffa60`190e5980 fffff800`01cba0fa nt!KiSwapContext+0x7f
fffffa60`190e5ac0 fffff800`01caedab nt!KiSwapThread+0x13a
fffffa60`190e5b30 fffff800`01f1d608 nt!KeWaitForSingleObject+0x2cb
fffffa60`190e5bc0 fffff800`01cb7973 nt!NtWaitForSingleObject+0x98
fffffa60`190e5c20 00000000`75183d09 nt!KiSystemServiceCopyEnd+0x13 (TrapFrame @ fffffa60`190e5c20)
00000000`069ef118 00000000`75183b06 wow64cpu!CpupSyscallStub+0x9
00000000`069ef120 00000000`74f8ab46 wow64cpu!Thunk0ArgReloadState+0x1a
00000000`069ef190 00000000`74f8a14c wow64!RunCpuSimulation+0xa
00000000`069ef1c0 00000000`771605a8 wow64!Wow64LdrpInitialize+0x4b4
00000000`069ef720 00000000`771168de ntdll! ?? ::FNODOBFM::`string'+0x20aa1
00000000`069ef7d0 00000000`00000000 ntdll!LdrInitializeThunk+0xe
.process /p /r 0
Implicit thread is now fffffa80`1f3a3bb0
WARNING: WOW context retrieval requires switching to the thread's process context.
Use .process /p fffffa80`1f6b2990 to switch back.
Implicit process is now fffffa80`13177c10
x86 context set
Loading Kernel Symbols
Loading User Symbols
Loading unloaded module list
Loading Wow64 Symbols
ChildEBP RetAddr
06aefc68 76921270 ntdll_772b0000!ZwWaitForSingleObject+0x15
06aefcd8 7328c639 kernel32!WaitForSingleObjectEx+0xbe
06aefd1c 7328c56f mscorwks!PEImage::LoadImage+0x1af
06aefd6c 7328c58e mscorwks!CLREvent::WaitEx+0x117
06aefd80 733770fb mscorwks!CLREvent::Wait+0x17
06aefe00 73377589 mscorwks!ThreadpoolMgr::SafeWait+0x73
06aefe64 733853f9 mscorwks!ThreadpoolMgr::WorkerThreadStart+0x11c
06aeff88 7699eccb mscorwks!Thread::intermediateThreadProc+0x49
06aeff94 7732d24d kernel32!BaseThreadInitThunk+0xe
06aeffd4 7732d45f ntdll_772b0000!__RtlUserThreadStart+0x23
06aeffec 00000000 ntdll_772b0000!_RtlUserThreadStart+0x1b
Effective machine: x64 (AMD64)
[...]
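Because the resulting log is very long, some post-processing helps. The sketch below is a hypothetical helper, not part of the original script: it splits a saved log into per-thread sections at each THREAD line and reports the threads whose 64-bit trace passes through wow64cpu or wow64 modules. The sample log is abbreviated and assembled for illustration from lines shown in this chapter; the first thread comes from the earlier svchost.exe example only to provide a 64-bit-only case.

```python
import re

# Matches the "THREAD <address>" line that starts each !thread section.
THREAD_RE = re.compile(r"^[ \t]*THREAD[ \t]+([0-9a-f]+)", re.MULTILINE)

def split_by_thread(log_text):
    """Map each thread address to the slice of the log that starts at its THREAD line."""
    matches = list(THREAD_RE.finditer(log_text))
    sections = {}
    for i, match in enumerate(matches):
        end = matches[i + 1].start() if i + 1 < len(matches) else len(log_text)
        sections[match.group(1)] = log_text[match.start():end]
    return sections

def is_wow64_thread(section):
    """Heuristic: the 64-bit trace of a WOW64 thread contains wow64cpu or wow64 frames."""
    return "wow64cpu!" in section or "wow64!" in section

# Abbreviated sample assembled for illustration (see the note above).
SAMPLE_LOG = """\
THREAD fffffa800c21fbb0 Cid 0264.4ba4 Teb: 000007fffff92000
fffffa60`154c6860 fffff800`01ab20fa nt!KiSwapContext+0x7f
fffffa60`154c6b40 fffff800`01aaf933 nt!NtWaitForWorkViaWorkerFactory+0x1fe
THREAD fffffa801f3a3bb0 Cid 4b4c.5fec Teb: 000000007efaa000
fffffa60`190e5980 fffff800`01cba0fa nt!KiSwapContext+0x7f
00000000`069ef118 00000000`75183b06 wow64cpu!CpupSyscallStub+0x9
00000000`069ef190 00000000`74f8a14c wow64!RunCpuSimulation+0xa
06aefc68 76921270 ntdll_772b0000!ZwWaitForSingleObject+0x15
"""

sections = split_by_thread(SAMPLE_LOG)
wow64_threads = [addr for addr, text in sections.items() if is_wow64_thread(text)]
print(wow64_threads)
```

Only for the threads reported here is the 32-bit trace that follows the 64-bit one expected to be meaningful.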

The forthcoming CARE[2] and STARE[3] online systems additionally aim to identify software behaviour patterns via debugger log and trace analysis and to suggest possible software troubleshooting patterns. This work started in October 2006 with the identification of computer memory patterns[4] and later continued with software trace patterns[5]. Bringing all of them under a unified linked framework seems quite natural to the author.

Adding AI. Analysis Improvement.

There is a need to provide audit services for memory dump and software trace analysis[6]. One mind is good but two are better, especially if the second is a pattern-driven AI. Here are possible problem scenarios:

Problem: You are not satisfied with a crash report.

Problem: Your critical issue is escalated to the VP level. Engineers analyze memory dumps and software traces. No definite conclusion so far. You want to be sure that nothing has been omitted from the analysis.

Problem: You analyze a system dump or a software trace. You need a second pair of eyes but don't want to send your memory dump due to your company security policies.

100% CPU consumption was reported for one system and a complete memory dump was generated. Unfortunately, it was very inconsistent (Volume 1, page 269):

0: kd> !process 0 0

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

Unable to get program counter

GetContextState failed, 0xD0000147

Unable to read selector for PCR for processor 0

**** NT ACTIVE PROCESS DUMP ****

PROCESS 8b57f648 SessionId: none Cid: 0004 Peb: 00000000 ParentCid: 0000

DirBase: bffd0020 ObjectTable: e1000e10 HandleCount: 3801.

Image: System

[...]

PROCESS 8a33fd88 SessionId: 4294963440 Cid: 1508 Peb: 7ffdb000 ParentCid: 3a74

DirBase: bffd2760 ObjectTable: e653c110 HandleCount: 1664628019.

Image: explorer.exe

[...]

PROCESS 87bd9d88 SessionId: 4294963440 Cid: 3088 Peb: 7ffda000 ParentCid: 1508

DirBase: bffd23e0 ObjectTable: e4e73d30 HandleCount: 1717711416.

Image: iexplore.exe

[...]

PROCESS 88c741a0 SessionId: 0 Cid: 46b0 Peb: 7ffd9000 ParentCid: 01f8

DirBase: bffd2ac0 ObjectTable: e8b60c58 HandleCount: 1415935346.

Image: csrss.exe

[...]

!process 0 ff command was looping through the same system thread forever. Fortunately, !running WinDbg command was functional:

0: kd> !running

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

Unable to get program counter

System Processors 3 (affinity mask)

Idle Processors 0

Prcbs Current Next

0 ffdff120 888ab360 ................

1 f7727120 880d1db0 ................

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

Curiously !ready command showed a different thread running on the same processor 0 before infinitely looping (it was aborted):

0: kd> !ready

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

GetContextState failed, 0xD0000147

Unable to get program counter

Processor 0: Ready Threads at priority 6

THREAD 88fe2b30 Cid 3b8c.232c Teb: 7ffdf000 Win32Thread: bc6b38f0

RUNNING on processor 0

TYPE mismatch for thread object at ffdffaf0

TYPE mismatch for thread object at ffdffaf0

TYPE mismatch for thread object at ffdffaf0

TYPE mismatch for thread object at ffdffaf0

TYPE mismatch for thread object at ffdffaf0

TYPE mismatch for thread object at ffdffaf0

[...]

Both "running" threads were showing signs of a spiking thread (Volume 1, page 305):

0: kd> !thread 88fe2b30 1f
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
Unable to get program counter
THREAD 88fe2b30 Cid 3b8c.232c Teb: 7ffdf000 Win32Thread: bc6b38f0 RUNNING on processor 0
Not impersonating
DeviceMap e3899900
Owning Process 8862ead8 Image: ApplicationA.exe
Attached Process N/A Image: N/A
ffdf0000: Unable to get shared data
Wait Start TickCount 1980369
Context Switch Count 239076 LargeStack
UserTime 00:01:33.187
KernelTime 00:01:49.734
Win32 Start Address 0x0066c181
Start Address 0x77e617f8
Stack Init b97bfbd0 Current b97bf85c Base b97c0000 Limit b97b9000 Call b97bfbd8
Priority 8 BasePriority 8 PriorityDecrement 0
Unable to get context for thread running on processor 0, HRESULT 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
GetContextState failed, 0x80004002
0: kd> !thread 888ab360 1f
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
Unable to get program counter
THREAD 888ab360 Cid 2a3c.4260 Teb: 7ffde000 Win32Thread: bc190570 WAIT: (Unknown) UserMode Non-Alertable
    88e4d8d8 SynchronizationEvent
Not impersonating
DeviceMap e62a50e0
Owning Process 8a1a5d88 Image: ApplicationA.exe
Attached Process N/A Image: N/A
Wait Start TickCount 1979505
Context Switch Count 167668 LargeStack
UserTime 00:01:03.468
KernelTime 00:01:21.875
Win32 Start Address ApplicationA (0x0066c181)
Start Address kernel32!BaseProcessStartThunk (0x77e617f8)
Stack Init ba884000 Current ba883bac Base ba884000 Limit ba87d000 Call 0
Priority 10 BasePriority 10 PriorityDecrement 0
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
ChildEBP RetAddr
ba883c14 bf8a1305 win32k!RGNOBJ::UpdateUserRgn+0x5d
ba883c38 bf8a2a1a win32k!xxxSendMessage+0x1b
ba883c64 bf8a2ac3 win32k!xxxUpdateWindow2+0x79
ba883c84 bf8a1a6a win32k!xxxInternalUpdateWindow+0x6f
ba883cc8 bf8a291b win32k!xxxInternalInvalidate+0x148
ba883cf4 bf858314 win32k!xxxRedrawWindow+0x103
ba883d4c 8088b41c win32k!NtUserRedrawWindow+0xac
ba883d4c 7c82860c nt!KiFastCallEntry+0xfc (TrapFrame @ ba883d64)
0012fd10 7739b82a ntdll!KiFastSystemCallRet
0012fd98 78a3ed73 USER32!UserCallWinProcCheckWow+0x5c
0012fdb8 78a3f68b mfc90u!CWnd::DefWindowProcW+0x44
0012fdd4 78a3e29a mfc90u!CWnd::WindowProc+0x3b
0012fe58 78585f1a mfc90u!AfxCallWndProc+0xa3
7739ceb8 c25d008b MSVCR90!_msize+0xf8
WARNING: Frame IP not in any known module. Following frames may be wrong.
7739cec0 9090f8eb 0xc25d008b
7739cec4 8b909090 0x9090f8eb
7739cec8 ec8b55ff 0x8b909090
7739cecc e8084d8b 0xec8b55ff
7739ced0 ffffe838 0xe8084d8b
7739ced4 0374c085 0xffffe838
7739ced8 5d78408b 0x374c085
7739cedc 900004c2 0x5d78408b
7739cee0 90909090 0x900004c2
7739cee4 8b55ff8b 0x90909090
7739cee8 18a164ec 0x8b55ff8b
7739ceec 83000000 0x18a164ec
7739cef0 0f004078 0x83000000
7739cef4 fe87d484 0xf004078
7739cef8 087d83ff 0xfe87d484
7739cefc 2c830f20 0x87d83ff
7739cf00 64ffff94 0x2c830f20
7739cf04 0018158b 0x64ffff94
7739cf08 828b0000 0x18158b
7739cf0c 00000000 0x828b0000
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147
GetContextState failed, 0xD0000147

We see that the two threads belong to two different process instances of ApplicationA.

The previously published script to dump the raw stack of all threads (Volume 1, page 231) dumps only the 64-bit raw stack from 64-bit memory dumps of WOW64 processes (a 32-bit process saved in a 64-bit dump). In order to dump the WOW64 32-bit raw stack from such 64-bit dumps we need another script. We were able to create such a script after we found the location of the 32-bit TEB pointer (WOW64 TEB32) inside the 64-bit TEB structure:

0:000> .load wow64exts
0:000> !teb
Wow64 TEB32 at 000000007efdd000
Wow64 TEB at 000000007efdb000
    ExceptionList: 000000007efdd000
    StackBase: 000000000008fd20
    StackLimit: 0000000000082000
    SubSystemTib: 0000000000000000
    FiberData: 0000000000001e00
    ArbitraryUserPointer: 0000000000000000
    Self: 000000007efdb000
    EnvironmentPointer: 0000000000000000
    ClientId: 0000000000000f34 . 0000000000000290
    RpcHandle: 0000000000000000
    Tls Storage: 0000000000000000
    PEB Address: 000000007efdf000
    LastErrorValue: 0
    LastStatusValue: 0
    Count Owned Locks: 0
    HardErrorMode: 0
0:000:x86> !wow64exts.info
PEB32: 0x7efde000
PEB64: 0x7efdf000
Wow64 information for current thread:
TEB32: 0x7efdd000
TEB64: 0x7efdb000
32 bit, StackBase : 0x1a0000
        StackLimit : 0x190000
        Deallocation: 0xa0000
64 bit, StackBase : 0x8fd20
        StackLimit : 0x82000
        Deallocation: 0x50000
0:000:x86> dd 000000007efdd000 L4
7efdd000 0019fa84 001a0000 00190000 00000000

The ExceptionList field of the 64-bit TEB holds the address of TEB32, and the 32-bit stack base and limit are stored at offsets 4 and 8 from that address. The script should therefore look like this:

~*e r? $t1 = ((ntdll!_NT_TIB *)@$teb)->ExceptionList; !wow64exts.info; dds poi(@$t1+8) poi(@$t1+4)

Before running it against a freshly opened user dump we need to execute the following commands first after setting our symbols right:

.load wow64exts; .effmach x86
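To make the offsets used by the script explicit, here is a small Python sketch that interprets the four doublewords from the dd output above as the start of a 32-bit NT_TIB (ExceptionList, StackBase, StackLimit, SubSystemTib) and derives the range that dds would dump. The class and function names are invented for this illustration.

```python
from typing import NamedTuple, Sequence, Tuple

class Tib32(NamedTuple):
    """First four fields of the 32-bit NT_TIB at the start of TEB32."""
    exception_list: int   # offset 0x0
    stack_base: int       # offset 0x4, highest stack address
    stack_limit: int      # offset 0x8, lowest committed stack address
    subsystem_tib: int    # offset 0xC

def raw_stack_range(dwords: Sequence[int]) -> Tuple[int, int]:
    """Return (start, end) as used by: dds poi(@$t1+8) poi(@$t1+4)."""
    tib = Tib32(*dwords[:4])
    return tib.stack_limit, tib.stack_base

# Doublewords copied from "dd 000000007efdd000 L4".
teb32_dwords = [0x0019FA84, 0x001A0000, 0x00190000, 0x00000000]

start, end = raw_stack_range(teb32_dwords)
print("dds %08x %08x  (%#x bytes)" % (start, end, end - start))
```

The derived range agrees with the 32-bit StackLimit and StackBase values printed by !wow64exts.info for the same thread.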

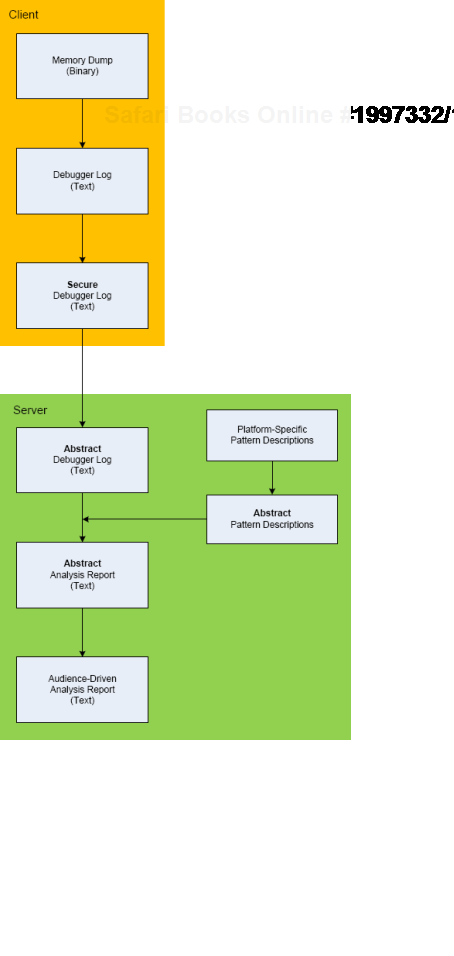

Here is the description of the high-level architecture of the CARE project (Crash Analysis Report Environment)[7]. As a reminder, the main idea of the project is to process memory dumps on a client and save debugger logs[8]. They can be sent to a server for pattern-driven analysis of software behaviour. Textual logs can also be inspected by a client security team before sending. Certain sensitive information can be excluded or modified to have generic meaning according to built-in processing rules such as renaming (for example, of server names and folders). Before processing, verified secured logs are converted to abstract debugger logs. Abstracting the platform-specific debugger log format allows reuse of the same architecture for different computer platforms. We call it CIA (Computer Independent Architecture). Do not confuse it with ICA (Independent Computer Architecture); the CIA acronym is more appropriate for memory analysis (like the similar MAFIA acronym, Memory Analysis Forensics and Intelligence Architecture). These abstract logs are checked for various patterns (in abstracted form) using abstract debugger commands[9], and an abstract report is generated according to various checklists. Abstract reports are then converted to structured reports for the required audience level. Abstract memory analysis pattern descriptions are prepared from platform-specific pattern descriptions. In certain architectural component deployment configurations both the client and server parts can reside on the same machine. Here's the simple diagram depicting the flow of processing:

[1] http://www.dumpanalysis.org/blog/index.php/2009/02/17/wait-chain-patterns/

[2] http://www.dumpanalysis.org/care

[3] http://www.dumpanalysis.org/blog/index.php/2010/01/18/plans-for-the-year-of-dump-analysis/

[4] http://www.dumpanalysis.org/blog/index.php/crash-dump-analysis-patterns/

[5] http://www.dumpanalysis.org/blog/index.php/trace-analysis-patterns/

[6] Please visit DumpAnalysis.com (Memory Dump Analysis Services)

[7] http://www.dumpanalysis.org/care

[8] http://www.dumpanalysis.org/blog/index.php/2008/02/18/debuggerlog-analyzer-inception/

[9] http://www.dumpanalysis.org/blog/index.php/2008/11/10/abstract-debugging-commands-adc-initiative/