Research methods for studying multilingual information management: an empirical investigation

Abstract

This chapter presents the findings of a study undertaken to apply the devised research framework and model and to investigate the adoption of ICT by translators. The analysis of the data collected over the two stages of the study provides a comprehensive view of the information and technology-related needs of this community of practice.


“Cross-disciplinary research, […] requires familiarity with measuring techniques in more than one discipline.”

(Oppenheim, 1992, p. 7)

7.1. Research approaches

7.2. Selecting a suitable approach

7.3. How to explore ICT adoption and use

7.3.1. Questionnaire design considerations

7.3.2. Instruments and structure of the questionnaire

7.3.2.1. Section A: Translator profile

7.3.2.2. Section B: Information Technology usage

7.3.2.3. Section C: Internet usage

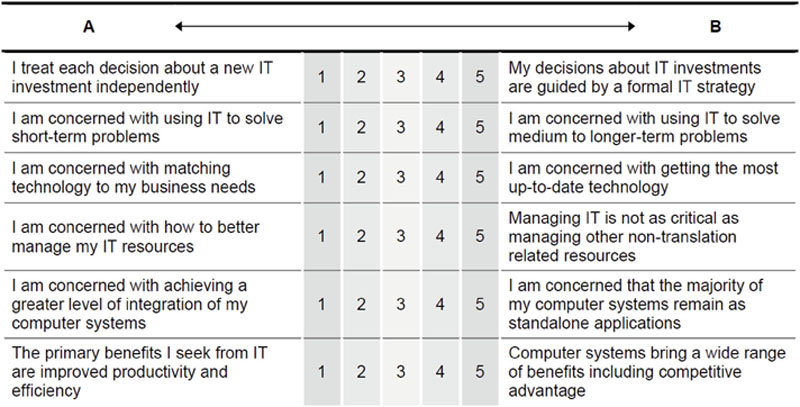

7.3.2.4. Section D: IT strategy

7.3.3. Questionnaire refinement

7.4. How to analyse organisational impacts and evaluate ICT sophistication

7.4.1. Online questionnaire design considerations

7.4.1.1. An online survey

7.4.2. Instruments and structure of the online questionnaire

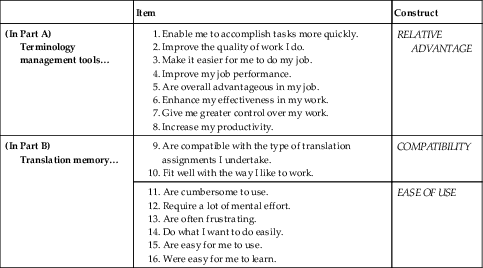

7.4.2.1. CAT tools: terminology management tools and translation memory

Table 7.1

Items for Question 1: using terminology management tools/translation memory

(In Part A) Terminology management tools… / (In Part B) Translation memory…

| Item | Construct |
|---|---|
| 1. Enable me to accomplish tasks more quickly. | RELATIVE ADVANTAGE |
| 2. Improve the quality of work I do. | RELATIVE ADVANTAGE |
| 3. Make it easier for me to do my job. | RELATIVE ADVANTAGE |
| 4. Improve my job performance. | RELATIVE ADVANTAGE |
| 5. Are overall advantageous in my job. | RELATIVE ADVANTAGE |
| 6. Enhance my effectiveness in my work. | RELATIVE ADVANTAGE |
| 7. Give me greater control over my work. | RELATIVE ADVANTAGE |
| 8. Increase my productivity. | RELATIVE ADVANTAGE |
| 9. Are compatible with the type of translation assignments I undertake. | COMPATIBILITY |
| 10. Fit well with the way I like to work. | COMPATIBILITY |
| 11. Are cumbersome to use. | EASE OF USE |
| 12. Require a lot of mental effort. | EASE OF USE |
| 13. Are often frustrating. | EASE OF USE |
| 14. Do what I want to do easily. | EASE OF USE |
| 15. Are easy for me to use. | EASE OF USE |
| 16. Were easy for me to learn. | EASE OF USE |
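Table 7.1 groups the sixteen items under three constructs, and items 11 to 13 are worded negatively: agreeing with them signals low ease of use. If item ratings are averaged into construct scores, those three items would need reverse-coding first. The sketch below is a minimal illustration under stated assumptions: it assumes a 7-point agreement scale (the scale is not given in this section), scores one respondent's answers for one tool (Part A or Part B), and the names `CONSTRUCTS`, `REVERSE_CODED` and `construct_scores` are hypothetical.

```python
from statistics import fmean

# Item-to-construct mapping taken from Table 7.1.
CONSTRUCTS = {
    "RELATIVE ADVANTAGE": range(1, 9),  # items 1-8
    "COMPATIBILITY": range(9, 11),      # items 9-10
    "EASE OF USE": range(11, 17),       # items 11-16
}

# Items 11-13 ("cumbersome", "mental effort", "frustrating") are negatively
# worded: strong agreement means LOW ease of use, so they are reverse-coded.
REVERSE_CODED = {11, 12, 13}

SCALE_MAX = 7  # assumption: 1 = strongly disagree ... 7 = strongly agree


def construct_scores(responses: dict[int, int], scale_max: int = SCALE_MAX) -> dict[str, float]:
    """Average one respondent's item ratings (for one tool) into construct scores."""
    scores = {}
    for construct, items in CONSTRUCTS.items():
        values = []
        for item in items:
            rating = responses[item]
            if not 1 <= rating <= scale_max:
                raise ValueError(f"item {item}: rating {rating} outside 1..{scale_max}")
            if item in REVERSE_CODED:
                rating = scale_max + 1 - rating  # e.g. 7 -> 1, 2 -> 6
            values.append(rating)
        scores[construct] = fmean(values)
    return scores


# Hypothetical respondent: agrees (6) with every positively worded item and
# disagrees (2) with the three negatively worded ones.
answers = {item: 6 for item in range(1, 17)}
answers.update({11: 2, 12: 2, 13: 2})
print(construct_scores(answers))
# {'RELATIVE ADVANTAGE': 6.0, 'COMPATIBILITY': 6.0, 'EASE OF USE': 6.0}
```

Without reverse-coding, this consistently positive respondent would receive an ease-of-use score of 4.0 instead of 6.0, which is why the direction of each item matters before any averaging or factor analysis.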

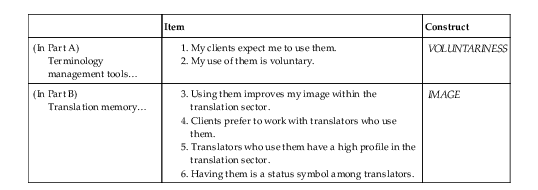

Table 7.2

Items for Question 2: terminology management tools/translation memory and the translation sector

(In Part A) Terminology management tools… / (In Part B) Translation memory…

| Item | Construct |
|---|---|
| 1. My clients expect me to use them. | VOLUNTARINESS |
| 2. My use of them is voluntary. | VOLUNTARINESS |
| 3. Using them improves my image within the translation sector. | IMAGE |
| 4. Clients prefer to work with translators who use them. | IMAGE |
| 5. Translators who use them have a high profile in the translation sector. | IMAGE |
| 6. Having them is a status symbol among translators. | IMAGE |

Table 7.3

Items for Question 3: learning about terminology management tools/translation memory

(In Part A) Terminology management tools… / (In Part B) Translation memory…

| Item | Construct |
|---|---|
| 1. I have seen how other translators use them. | VISIBILITY |
| 2. Many freelance translators use them. | VISIBILITY |
| 3. Before deciding whether to use them, I was able to try them out fully. | TRIALABILITY |
| 4. I was permitted to use them on a trial basis long enough to see what they could do. | TRIALABILITY |
| 5. I had ample opportunity to try them out before buying. | TRIALABILITY |
| 6. I would have no difficulty telling others about what they can do. | RESULT DEMONSTRABILITY |
| 7. I believe I could communicate to others the advantages and disadvantages of using them. | RESULT DEMONSTRABILITY |
| 8. The benefits of using them are apparent to me. | RESULT DEMONSTRABILITY |

Table 7.4

Items for impacts of terminology management tools/translation memory

Impacts of terminology management tools on… / Impacts of translation memory on…

| Item |
|---|
| Translators’ turnover |
| Size of translators’ customer base |
| Quality of translators’ translations |
| Translators’ productivity |
| Volume of work translators undertake |
| Number of clients translators have |
| Volume of work offered to translators by clients |
| Prices translators charge for work they undertake |

7.4.2.2. Translation business characteristics

7.4.3. Online survey trial and piloting of the questionnaire

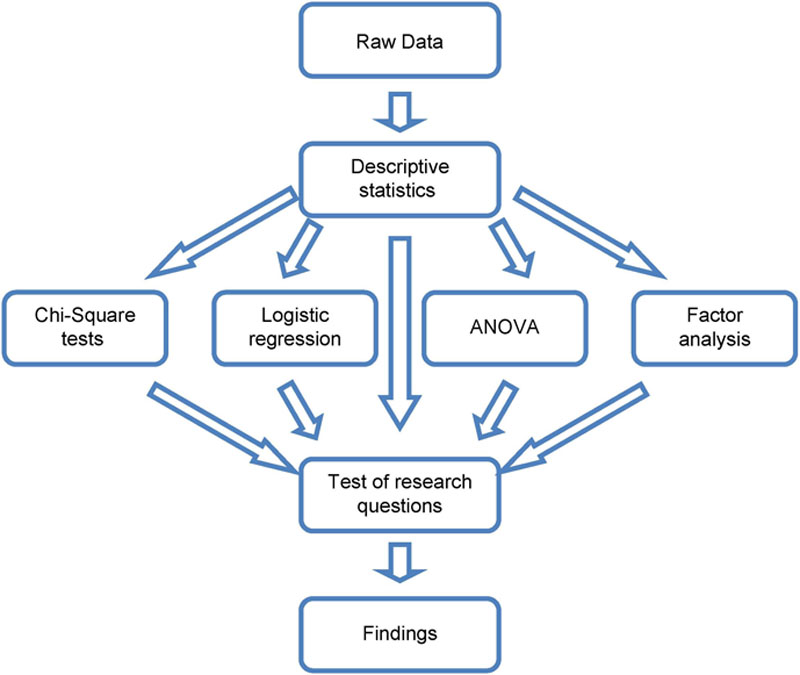

7.5. The data analysis scheme

7.5.1. A quantitative data analysis approach

7.5.1.1. Addressing non-response bias and generalisation of results

7.5.1.2. Exploring relationships between variables: chi-square, logistic regression and discriminant analysis
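Chi-square is the first of the techniques named here for exploring relationships between variables. As a hedged illustration of the Pearson test of independence, the sketch below computes the statistic and its degrees of freedom for a contingency table of counts, plus an upper-tail p-value for the 1-degree-of-freedom case using the identity P(X > x) = erfc(sqrt(x/2)). The function names are hypothetical, and the counts (tool adoption against a hypothetical two-level profile variable) are invented for illustration, not data from this study.

```python
import math


def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    if any(t == 0 for t in row_totals + col_totals):
        raise ValueError("every row and column total must be non-zero")
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence: (row total x column total) / N
            expected = row_totals[i] * col_totals[j] / grand_total
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def p_value_df1(stat):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    # With 1 df, X = Z**2, so P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(stat / 2))


# Hypothetical counts: rows = TM adopters / non-adopters,
# columns = two levels of a hypothetical profile variable.
observed = [[30, 20],
            [10, 40]]
stat, dof = chi_square_independence(observed)
print(round(stat, 2), dof)  # 16.67 1
print(p_value_df1(stat) < 0.05)  # True
```

For tables larger than 2 × 2 a general chi-square distribution function is needed for the p-value, for example from a statistics package. Note also that `scipy.stats.chi2_contingency` applies Yates' continuity correction to 2 × 2 tables by default (`correction=True`), so its statistic will be smaller than the uncorrected value computed here.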

7.5.1.3. Using factor analysis to measure the perceptions of CAT tools

7.5.1.4. Using ANOVA to compare CAT tool perceptions between adopters and non-adopters
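A one-way ANOVA tests whether the mean of a perception score (for example, a construct or factor score) differs between groups such as adopters and non-adopters by more than within-group variation would suggest. The sketch below computes the F statistic and its degrees of freedom; it assumes each group is a list of numeric scores, and the function name and sample values are hypothetical rather than taken from this study.

```python
from statistics import fmean


def one_way_anova(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA over the given groups."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k:
        raise ValueError("need at least two groups and more observations than groups")
    group_means = [fmean(g) for g in groups]
    grand_mean = fmean(x for g in groups for x in g)
    # Between-group sum of squares: spread of group means around the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-group sum of squares: spread of scores around their own group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    if ss_within == 0:
        raise ValueError("no within-group variation; F is undefined")
    df_between = k - 1
    df_within = n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical ease-of-use scores, not data from this study.
adopters = [5, 6, 7]
non_adopters = [3, 4, 5]
print(one_way_anova(adopters, non_adopters))  # (6.0, 1, 4)
```

With only two groups, the F test is equivalent to an independent-samples t-test (F = t²). In practice, `scipy.stats.f_oneway` returns the same F statistic together with its p-value.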

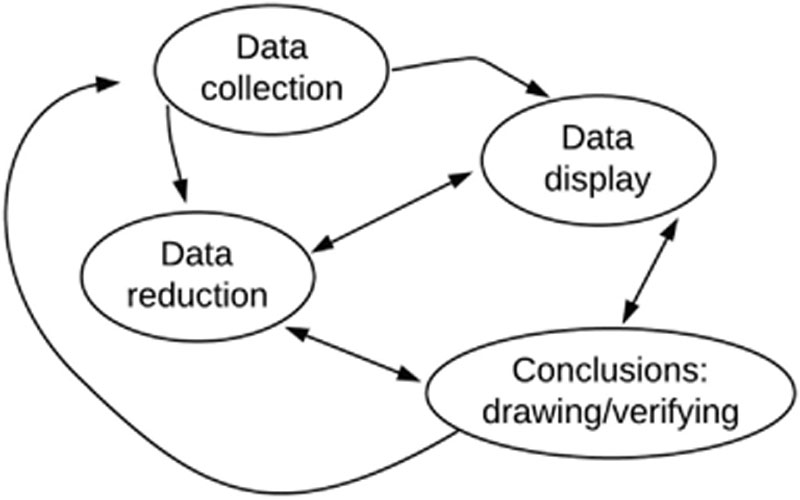

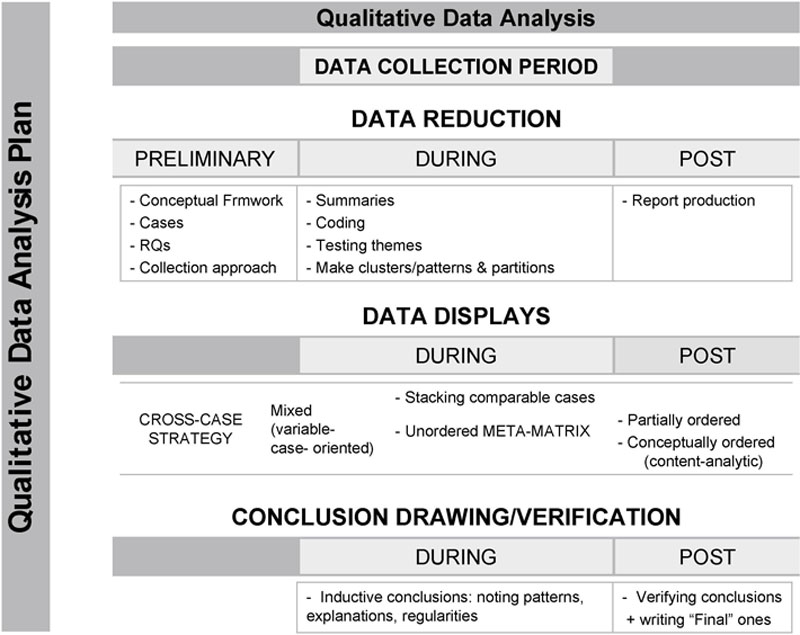

7.5.2. A qualitative data analysis approach