3

A Global Technique

The English mathematician Ada Lovelace (Figure 3.1), together with her contemporary Charles Babbage (1791–1871), helped to design an “Analytical Engine”, considered to be the ancestor of the computer [CHE 17]. She wrote about the machine:

The Analytical Engine has no claim to create something by itself. It can do whatever we can tell it to do. It can follow an analysis: but it does not have the ability to imagine analytical relationships or truths. Its role is to help us do what we already know how to do… (cited in [LIG 87], author’s translation)

Figure 3.1. Ada Lovelace (1815–1852) by Alfred-Edward Chalon, 1840, watercolor (Source: Science Museum, London)

Lovelace wrote a sequence of mathematical operations to make the machine work, nowadays considered the first computer program (in the 1980s, computer scientists named a programming language, Ada, after her). The development of digital simulation provides proof of the relevance of some of her intuitions. In the notes she wrote to accompany the translation of Charles Babbage’s texts, she stated:

Handling abstract symbols (will) allow us to see the relationships of the nature of problems in a new light and to have a better understanding of them (quoted by [CHE 17], author’s translation).

This is what digital simulation does nowadays, ever more efficiently. It benefits from developments in IT and from the increasing performance of computing resources, which results from the doubling of:

- calculation speeds every 18 months or so (Moore’s law);

- storage capacities every 13 months or so (Kryder’s law);

- information transmission speeds approximately every 21 months (Nielsen’s law)1.
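To give a feel for what these doubling periods imply, the following minimal sketch converts each of them into a growth factor over ten years. The function name `growth_factor` and the ten-year horizon are illustrative assumptions; only the approximate doubling periods come from the text.

```python
def growth_factor(doubling_months: float, years: float) -> float:
    """Factor by which a quantity grows if it doubles every `doubling_months`."""
    return 2.0 ** (12.0 * years / doubling_months)


# Approximate doubling periods quoted in the text, in months.
LAWS = {
    "Moore (calculation speed)": 18,
    "Kryder (storage capacity)": 13,
    "Nielsen (transmission speed)": 21,
}

if __name__ == "__main__":
    for name, months in LAWS.items():
        factor = growth_factor(months, years=10)
        print(f"{name}: multiplied by about {factor:.0f} in 10 years")
```

Under these assumptions, a doubling every 18 months corresponds to roughly a hundredfold increase per decade, which is why such laws are described as exponential.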

Digital simulation is flourishing in the 21st century in a global context, and mastering it carries high stakes in terms of scientific and economic supremacy.

3.1 A very generalized technique

Developing a digital simulation tool consists in putting lines of code end to end, written in a computer programming language often known only to developers! The Arabic term al-jabr refers to splicing and reuniting. This word has given its name to algebra, a branch of mathematics whose concepts are useful for simulation and algorithms. It appears in one of the first treatises of the discipline, attributed to the Uzbek mathematician Al-Khwârizmî (790–850).

Algebra is the result of an intellectual construction initiated more than four thousand years ago by the Babylonian and Egyptian civilizations and to which the Indian, Chinese, Arab and European civilizations have contributed. Each of them has made its mark, theoretical or algorithmic: a simple object like the Asian abacus is one!

Nowadays, many innovations in computer science and applied mathematics serve the industrial practice of numerical simulation and are taking place in a globalized context. Teams of researchers work in networks, in universities or laboratories open to the world. Organized by scientific proximity and convergence of interests, they constantly integrate research results relating to computation algorithms, data processing and storage. They exchange information with different communities, academic or industrial: developers of digital techniques, publishers of calculation codes, simulation users.

No single simulation code can cover all the needs of the users and disciplines that rely on simulation. The market open to this technique is large and, since the early 2000s, has seen a trend towards concentration among simulation tool publishers. One recent example is the acquisition in 2016 by the German group SIEMENS of the publisher CD-adapco, which distributes, among other things, simulation software for fluid mechanics. Based on a finite volume method, similar to the finite element method, this software is used by many industrial users. The transaction exceeded those that the general public has become accustomed to with the transfer of football stars: nearly a billion dollars [HUM 16]!

The example of the American publisher ANSYS Inc.2 shows how the market for digital simulation tools has been structured in recent years, by following the needs of users and proposing various solutions. ANSYS Inc. was created in 1970 by John Swanson, an American engineer and entrepreneur, who initiated the development of the ANSYS code, based on finite element technology and applied to mechanics. The company’s headquarters are located in Canonsburg, Pennsylvania, in the northeastern United States. The users of the code belong to the academic world (research centers or university laboratories) or are industrialists from different sectors. Together with its most important customers, the publisher periodically defines its development axes: for example, multi-physical simulations, driven by industrial needs and fostered by the algorithmic innovations proposed by numerical scientists. Every year, ANSYS Inc. organizes several conferences in different parts of the world in which many users meet to discuss their practices and offer useful feedback to improve its products. The company has acquired other calculation codes, such as the fluid mechanics codes CFX (in 2003) and FLUENT (in 2006) – and the algorithmic techniques they implement. By buying their distributors, sometimes competitors, ANSYS Inc. has opened up to new fields of application and new customers.

To date, the main publishers of calculation codes for the industry are American and European. The tools they offer meet many of the needs expressed by engineers in numerical simulation, mechanical engineering and other emerging disciplines, such as electromagnetism (Table 3.1).

Table 3.1. Main publishers of calculation codes used in the industry, in alphabetical order (Source: www.wikipedia.fr)

| Publisher (Country) | Tools marketed | Scope of application |
| --- | --- | --- |
| ANSYS Inc. (United States) | ANSYS Multi-physics, ANSYS CFX/Fluent | Multi-physics |
| COMSOL (Sweden) | COMSOL-Multiphysics | Generalist physical simulation |
| DASSAULT Systèmes (France) | CATIA, ABAQUS | Design & generalist scientific calculation |
| ESI Group (France) | Virtual Prototyping software package | Multi-disciplinary |
| MSC-Software (United States) | NASTRAN | Mechanics & materials |
| SIEMENS (Germany) | SAMCEF, STAR-CCM+ | Fluid & structural dynamics |

For manufacturers facing an international market, the consequences of the globalization of tools are multiple. Some industrial programs provide for some form of technology transfer. With common simulation tools, a customer may want to develop its own counter-expertise when purchasing a product – and may want to learn how to design it using numerical simulation. In this context, the advantage that an exporting company can maintain lies in the mastery of the technique and in the skills of the people who understand it, practice it, improve it – most of the time by being associated with its development – and carry out its qualification. Computer simulation has become a general and strategic technique, a character it began to acquire in the 1950s.

3.2 A strategic technique

Among others, the English mathematician Alan Turing (Figure 3.2) contributed to the theoretical foundations of computer science and modern computer calculation. During the Second World War, Alan Turing brought to the Allies his knowledge of data encryption methods, making it possible to decipher the codes of the German Enigma machine, used to transmit secret information between the armies of the Third Reich. While it is difficult to quantify the importance of this work in the course of this terrible world conflict, modern historians agree on the major role played by the mathematician during this period.

Figure 3.2. Alan Turing (1912–1954)

COMMENT ON FIGURE 3.2.– Alan Turing’s fate was tragic. After making a major contribution to his country’s war effort, he was convicted in 1952 for violating public morals. His story was partially told in the film The Imitation Game [TYL 14], in which the British actor Benedict Cumberbatch brings Alan Turing back to life. The film also highlights the question of difference and social norms, the question of freedom and the form of power that knowledge provides – in particular, the question of making choices that involve lives. It also recalls the role played by the English mathematician Joan Clarke (1917–1996), collaborator and friend of Alan Turing, in the design of the machine developed to decode German messages.

Turing and his team managed to decipher Enigma with the help of a computing machine. It was also the Second World War that saw the birth of computer simulation as we know it today, with the “Manhattan” Project [JOF 89, KEL 07]. The design of the atomic bomb is the heir to the discoveries on the properties of matter made before the outbreak of the world conflict. In the 1930s, many European scientists contributed to the control of nuclear fission, which releases a significant amount of energy by splitting heavy atomic nuclei.

Some anticipated that this energy could be used for military purposes. In August 1939, Hungarian physicists Leo Szilard (1898–1964), Edward Teller (1908–2003) and Eugene Wigner (1902–1995) wrote a letter signed by Albert Einstein. The scientists wanted to bring recent progress in nuclear physics, and its possible consequences, to the attention of American President Franklin Roosevelt (1882–1945). One of their fears was that Germany would acquire an atomic weapon: the financial and industrial power of the United States seemed to be the only one capable of winning an inescapable arms race, under way in Europe since the discoveries on the atom.

The United States entered the conflict in December 1941. In October of the same year, President Roosevelt had approved a major scientific, technical and military program to develop “extremely powerful bombs of a new type”. The project was led by American General Leslie Groves (1896–1970), and its scientific component was entrusted to American physicist Robert Oppenheimer (1904–1967). He benefited from the contribution of many eminent scientists, many of whom had fled Nazi persecution before the war began in order to seek refuge in the United Kingdom and the United States. In 1943, the project was reinforced by a British scientific mission in which many eminent physicists participated: the Danish Niels Bohr, the British James Chadwick (1891–1974), the Austrian Otto Frisch (1904–1979) and the German Klaus Fuchs (1911–1988). It was later discovered that the latter was also an undercover agent of the Soviet secret service. His information enabled the USSR to catch up with the USA in controlling military nuclear energy. The Soviet Union acquired the atomic weapon in 1949: from then on, the balance of American and Soviet nuclear forces contributed, according to some, to world peace [HAR 16].

Computer simulations helped physicists understand the chain reaction. Studying the neutron scattering process in fissionable matter, Hungarian physicist John von Neumann (1903–1957) and Polish mathematician Stanislaw Ulam (1909–1984) did not have the means to carry out laboratory experiments. They used theoretical models, based on an idea by the Italian physicist Enrico Fermi (1901–1954). Each time a neutron collided with an atom, they left it to chance to decide whether the particle was absorbed or bounced off, and what its energy was after the shock. By representing a large set of particles, they reproduced neutron dynamics with an algorithm. Because their models were random, the simulation methods they developed were named Monte-Carlo after a famous casino in the Principality of Monaco – probabilistic methods still use this name today! ENIAC (Figure 3.3) assisted them in this task.
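The principle of letting chance decide the outcome of each collision can be illustrated with a deliberately simplified sketch. It is not the historical algorithm: the one-dimensional slab, the exponential free-flight law, the absorption probability and the energy-loss rule are all assumptions chosen for illustration, as are the names `simulate_neutron` and `run`.

```python
import random
from collections import Counter


def simulate_neutron(thickness, mean_free_path, p_absorb, rng):
    """Follow one neutron through a 1D slab and return (fate, energy)."""
    x = 0.0          # position: the neutron enters at the left face
    direction = 1.0  # direction cosine: +1 means straight to the right
    energy = 1.0     # energy in arbitrary units (illustrative)
    while True:
        # Free flight: the distance to the next collision is drawn at random.
        x += direction * rng.expovariate(1.0 / mean_free_path)
        if x < 0.0:
            return "reflected", energy
        if x > thickness:
            return "transmitted", energy
        # Collision: chance decides between absorption and scattering.
        if rng.random() < p_absorb:
            return "absorbed", 0.0
        direction = rng.uniform(-1.0, 1.0)  # bounce in a random direction
        energy *= rng.uniform(0.5, 1.0)     # assumed random energy loss


def run(n, thickness=5.0, mean_free_path=1.0, p_absorb=0.3, seed=0):
    """Simulate n independent neutrons and return the fraction of each fate."""
    rng = random.Random(seed)
    fates = Counter(
        simulate_neutron(thickness, mean_free_path, p_absorb, rng)[0]
        for _ in range(n)
    )
    return {f: fates[f] / n for f in ("absorbed", "reflected", "transmitted")}


if __name__ == "__main__":
    print(run(100_000))
```

The statistical nature of the method shows in the fact that the fractions only stabilize when a large number of particles is simulated, which is why such calculations called for a machine.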

Figure 3.3. Two women wiring the right side of ENIAC with a new program (Source: U.S. Army Photo)

COMMENT ON FIGURE 3.3.– ENIAC is the acronym for “Electronic Numerical Integrator and Computer”. It was the first general-purpose electronic computer, designed and built between 1943 and 1946 by American engineers John Mauchly (1907–1980) and John Eckert (1919–1995) at the University of Pennsylvania. Originally intended for ballistic trajectory calculation, it was used in the Manhattan project for feasibility studies on the American atomic bomb. After the Second World War and until the end of the 1950s, it was used intensively for scientific computing for various applications of interest to the American army.

The Manhattan project identified the technical and scientific challenges of controlling the chain reaction, producing fissionable material and designing a weapon. On July 16, 1945, the first atomic bomb exploded in the New Mexico desert.

On August 6 and 9, 1945, two bombs were dropped on the Japanese cities of Hiroshima and Nagasaki: destroyed in an instant, damaged for decades [IMA 89]. The destructive power of States with atomic weapons has made humanity aware that it holds an unprecedented and at the same time appalling power. For some, it paradoxically contributes to global peace: humanity has learned to live with bombs that it does not use. Developed – and, since then, never used in war – by the States that possess them, atomic weapons assert their technical and diplomatic supremacy and contribute to subtle3 geopolitical balances:

Humanity has succeeded in meeting the nuclear challenge (and) it may well be the greatest political and moral achievement of all time… Nuclear weapons have changed the fundamental nature of war and politics. As long as humans are able to enrich uranium and plutonium, their survival will require that they prioritize the prevention of nuclear war over the interests of a particular nation [HAR 18].

The nuclear powers to date are the United States, Russia, France, India, Pakistan, China and the United Kingdom – and supposedly Israel and North Korea. The French nuclear program was launched in the middle of the Cold War, when French statesman Pierre Mendès-France (1907–1982) approved by decree France’s objective of developing a deterrent force for political and diplomatic purposes, affirming the country’s military independence on the international scene. The management of the program was originally entrusted to the French physicist Yves Rocard (1903–1992). The first atmospheric test of the French nuclear bomb took place in 1960 in Reggane, in the Algerian Sahara. Having become a tool considered sufficiently reliable by the beginning of the 21st century, numerical simulation has allowed some States, such as France or the United States, to stop their underground tests [BER 03]. France’s last nuclear test was decided by French President Jacques Chirac and took place on the Polynesian atoll of Fangataufa in 1996. Between these two dates, about fifty atmospheric tests were carried out by France.

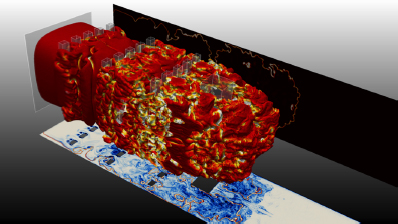

Numerical modeling is nowadays a strategic tool in the field of defense, using different underwater or air explosion simulation codes: a technique that few countries possess and master. Initially developed for military purposes, these tools are also widely used to meet the safety needs of civil installations or constructions (Figure 3.4).

Figure 3.4. Simulation of an accidental explosion in a building [VERM 17]. For a color version of this figure, see www.iste.co.uk/sigrist/simulation1.zip

3.3 Hercules of the calculation

Computing power also accompanies the technical and economic supremacy of certain States and especially of certain companies, such as those of high technology and digital technology. It also allows scientists to perform simulations of phenomena that were previously inaccessible to modeling within a reasonable time frame. Computing on a computer makes it possible to understand the real world, at various scales – sometimes inaccessible to experimentation (such as the infinitely small, at the heart of matter, or the infinitely large, at the heart of the universe).

3.3.1 High-performance computing

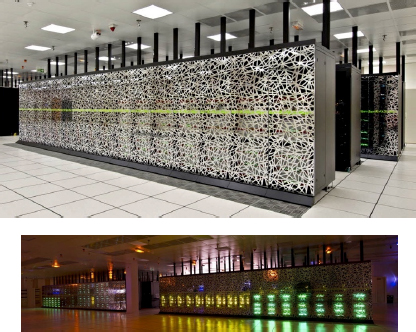

To this end, high-performance computing, using the power offered by real supercomputers (Figure 3.5), and massively parallel algorithms (Box 3.1), is developing very rapidly. It is driven both by the needs of certain scientific and industrial sectors, and by the offers of machine and software manufacturers.

Figure 3.5. The French supercomputer CURIE (Source: © Cadam/CEA)

COMMENT ON FIGURE 3.5.– CURIE is a supercomputer funded by the French public body GENCI. It is operated by the CEA in its Très Grand Centre de Calcul (TGCC) (very large computing center) in Bruyères-le-Châtel, in the Paris region. It offers European researchers a very wide range of applications in all scientific fields, from plasma and high-energy physics, chemistry and nanotechnology, to energy and sustainable development.

One of the measures of computing power is the processing speed of elementary operations. Computer scientists estimate it in terms of the number of floating point operations per second – referred to as flop/s. Current speeds are measured in Tflop/s: 1 Tflop/s represents an execution speed of one million million operations in one second. In order to picture this figure, let us take the example of distances. In one second, a car moving at 100 km/h travels about 28 m; light does not bother with material contingencies and covers nearly 300 million meters. This is a considerable distance on our scale, but it is still more than three thousand times shorter than a million million meters!
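The comparison of distances can be checked with a few lines of arithmetic. The sketch below is only an illustration: the variable and function names are arbitrary choices, and the speed of light is taken at its exact SI value.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact SI value
TERA = 1e12                             # one million million


def metres_per_second(speed_km_per_h):
    """Convert a speed from km/h to m/s."""
    return speed_km_per_h * 1000.0 / 3600.0


car_distance = metres_per_second(100.0) * 1.0  # metres covered in 1 s
light_distance = SPEED_OF_LIGHT_M_PER_S * 1.0  # metres covered in 1 s
ratio = TERA / light_distance                  # 1e12 m compared with light

if __name__ == "__main__":
    print(f"car:   {car_distance:.1f} m in one second")
    print(f"light: {light_distance:.3e} m in one second")
    print(f"1e12 m is about {ratio:.0f} times the distance covered by light")
```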

3.3.2 Race to computing power

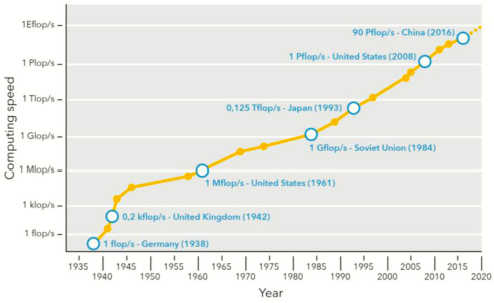

The increase in computing capacity is due to the possibility of integrating transistors with increasing density on the same material surface. Thus, between the first computers designed in the 1940s and the supercomputers of the 2020s, the increase in computing power is spectacular, as shown in Figure 3.7. The scale used on this graph is logarithmic, in powers of 10, and it can be seen that between 1955 and 2015 the computing power roughly follows a straight line, which means that the increase is exponential!

A real race for computing equipment has been under way for several decades, and is a sign of the technical and scientific competition between the leading economic powers. For example, www.top500.org lists every six months the world’s five hundred most powerful computers. In September 2017, the United States announced its intention to exceed a speed of 1 Eflop/s by 2021 with the Aurora supercomputer project [TRA 17]… and in June 2018, it took back the lead in the ranking, previously held by China, with the 200 Pflop/s of the Summit computer [RUS 18]. Europe does not want to lag behind: in December 2017, it declared it would join the race to the exascale with the Mont Blanc 2020 project [OLD 18].

Stéphane Requena, Director of Innovation at GENCI*, comments on these figures:

In less than a century, computing power has seen a spectacular increase, unprecedented in the history of technology: from flop/s in 1935 to Pflop/s in 2008, a multiplication by one million billion, or 15 orders of magnitude in powers of ten, has taken place in just over seventy years!

In order to get the idea, it should be recalled that the power delivered by one kilogram of dry wood burned in one hour is about 10 kW and that of a nuclear power plant 1000 MW. From fire control, to steam and to atomic control, human-designed energy production facilities have increased by five orders of magnitude in powers of ten over tens of thousands of years, with major qualitative and quantitative leaps over the past two centuries.
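These orders of magnitude can be turned into an average doubling time. The short sketch below assumes steady exponential growth between the two dates quoted (1 flop/s in 1935, 1 Pflop/s in 2008), which is a simplification; the function name `doubling_time_years` is an illustrative choice.

```python
import math


def doubling_time_years(factor, years):
    """Average doubling time for a quantity multiplied by `factor` in `years`."""
    return years * math.log(2.0) / math.log(factor)


# Computing power: from 1 flop/s (1935) to 1 Pflop/s (2008).
computing_orders = math.log10(1e15 / 1.0)
computing_doubling = doubling_time_years(1e15, 2008 - 1935)

# Energy production: from about 10 kW (wood fire) to 1000 MW (nuclear plant).
energy_orders = math.log10(1000e6 / 10e3)

if __name__ == "__main__":
    print(f"computing: {computing_orders:.0f} orders of magnitude")
    print(f"average doubling time: {12 * computing_doubling:.1f} months")
    print(f"energy: {energy_orders:.0f} orders of magnitude")
```

Under this assumption, the average doubling time comes out at a little under 18 months, consistent with the pace usually associated with Moore's law.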

Figure 3.7. Race to computing power (units: 1k for 10³, 1M (Mega) for 10⁶ or one million, 1G (Giga) for 10⁹ or one billion, 1T (Tera) for 10¹² or one thousand billion, 1P (Peta) for 10¹⁵ or one million billion and 1E (Exa) for 10¹⁸ or one billion billion) (Source: www.top500.org, www.wikipedia.fr)

The data published by the site www.top500.org show that:

- – the world’s leading supercomputer manufacturers are American (IBM, CRAY, HPE), Japanese (FUJITSU), Chinese (LENOVO) and French (BULL/ATOS);

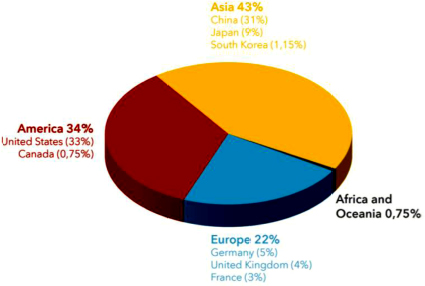

- – the computing powers are very logically grouped in Asia, America and Europe, in the most industrialized countries (Figure 3.8). The world’s leading supercomputers are dedicated to scientific research and mainly use the Linux operating system (Table 3.2).

The use of supercomputers covers various fields:

- in China, the Sunway TaihuLight and Tianhe-2 are used in centers dedicated to high-performance computing. The country has acquired some of the most powerful machines in the world today. This massive Chinese investment in HPC is recent (in the early 2000s, the www.top500.org site listed no Chinese computers). It demonstrates the country’s desire to become a major player in many areas. Defense, energy and advanced scientific fields (including digital): the uses of these computing resources are varied and serve China’s military and economic ambitions;

- in the United States, the main computing power is distributed in generalist research centers (Oak Ridge National Laboratory, Lawrence Livermore National Laboratory, Argonne National Laboratory, Los Alamos National Laboratory4). The latter are partly the heirs of American investments in armaments and atomic energy control in the middle of the last century, against the backdrop of the Second World War and the Cold War. Nowadays, computing resources are used in a variety of ways, from scientific research to industrial applications, with defense and energy at the forefront (with the Energy Research Scientific Computing Center5). Numerical simulation supports all the latest studies and techniques in this field;

- in Japan, the Joint Center for Advanced High Performance Computing and the Advanced Institute for Computational Science were created in 2010. Their computing power is mainly assigned to research and development in various scientific fields: energy, environment, climate, high-tech (computing, automation, robotics, digital, etc.) or biotech6;

- in Switzerland, the Piz Daint supercomputer has the particularity of combining conventional processors and graphics processors. It is mainly used for general scientific applications (astrophysics, particle physics, neuroscience, engineering, climate, health)7.

The computing power installed in 2017 on these 500 supercomputers offers a cumulative speed of 750,000 Tflop/s spread over 45,000 cores (for comparison, Facebook’s computer is ranked 31st, with 5 Pflop/s). If all humans had a laptop with standard 2012 performance, which is far from being the case, they would together have less than 1% of this computing power!

Figure 3.8. Distribution of computing power by continent (June 2017 figures, see www.top500.org for updated data) (Source: www.top500.org). For a color version of this figure, see www.iste.co.uk/sigrist/simulation1.zip

In addition, this computing power requires an electrical capacity of about 750 MW (Table 3.2). It should be noted that this represents about half of the power delivered in France by onshore and offshore wind turbines8. Several figures characterize a supercomputer: the number of cores (N) and the computing speed (R). The required electrical power (P) gives an indicator of the energy cost of the technique: by way of illustration, the Sunway TaihuLight supercomputer, which draws about 15 MW, consumes on the order of €15 million of electricity per year (the annual operating costs of 1 MW of computing power are estimated at €1 million).

Decision-makers in the industrial world want to gain a concrete understanding of how digital simulation improves the profitability and competitiveness of their companies. The return on investment in computing power and digital simulation is one of the criteria on which their decision is based. The latter remains difficult to establish, for two main reasons:

- in order to evaluate it, it is necessary to take into account many capital expenditures (skills, tools and machines) contributing to the deployment of the simulation;

- product improvement is not entirely attributable to digital simulation; it is also the result of other factors (human know-how, organization of production chains, raw material prices, etc.).

Table 3.2. Ranking of the world’s top 10 supercomputers (June 2018 figures, see www.top500.org for updated data) (Data: www.top500.org)

| Name | Country/Site | N | R (Tflop/s) | P (kW) |
| --- | --- | --- | --- | --- |
| Summit | UNITED STATES/Oak Ridge National Laboratory | 2,282,544 | 187,659 | 8,806 |
| Sunway TaihuLight | CHINA/National Supercomputing Center in Wuxi | 10,649,600 | 125,436 | 15,371 |
| Sierra | UNITED STATES/Lawrence Livermore National Laboratory | 1,572,480 | 119,193 | - |
| Tianhe-2 | CHINA/National Super Computer Center in Guangzhou | 4,981,760 | 100,678 | 18,482 |
| AI Bridging Cloud Infrastructure | JAPAN/National Institute of Advanced Industrial Science and Technology | 3,361,760 | 32,576 | 1,649 |
| Piz Daint | SWITZERLAND/Swiss National Supercomputing Center | 361,760 | 25,326 | 2,272 |
| Titan | UNITED STATES/Oak Ridge National Laboratory | 560,640 | 27,113 | 8,209 |
| Sequoia | UNITED STATES/Lawrence Livermore National Laboratory | 1,572,864 | 20,133 | 7,890 |
| Trinity | UNITED STATES/Los Alamos National Laboratory | 979,978 | 14,137 | 3,844 |
| Cori | UNITED STATES/National Energy Research Scientific Computing Center | 622,336 | 27,881 | 3,939 |
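The figures of Table 3.2 can be combined into two simple indicators: the computing speed obtained per watt, and a rough annual operating cost based on the estimate of €1 million per MW and per year quoted in the text. The sketch below copies R and P as printed in the table; the function names and the treatment of the missing value for Sierra are illustrative assumptions.

```python
# (name, R in Tflop/s, P in kW or None when not listed), as printed in Table 3.2
TABLE_3_2 = [
    ("Summit", 187_659, 8_806),
    ("Sunway TaihuLight", 125_436, 15_371),
    ("Sierra", 119_193, None),
    ("Tianhe-2", 100_678, 18_482),
    ("AI Bridging Cloud Infrastructure", 32_576, 1_649),
    ("Piz Daint", 25_326, 2_272),
    ("Titan", 27_113, 8_209),
    ("Sequoia", 20_133, 7_890),
    ("Trinity", 14_137, 3_844),
    ("Cori", 27_881, 3_939),
]

EUR_PER_MW_YEAR = 1_000_000  # operating cost estimate quoted in the text


def gflops_per_watt(r_tflops, p_kw):
    """Energy efficiency: 1 Tflop/s per kW equals 1 Gflop/s per W."""
    return r_tflops / p_kw


def annual_cost_eur(p_kw):
    """Rough annual operating cost for a machine drawing `p_kw` kilowatts."""
    return p_kw * EUR_PER_MW_YEAR / 1000


if __name__ == "__main__":
    for name, r, p in TABLE_3_2:
        if p is None:
            print(f"{name}: power not listed")
            continue
        print(f"{name}: {gflops_per_watt(r, p):.1f} Gflop/s per W, "
              f"about EUR {annual_cost_eur(p):,.0f} per year")
```

Such indicators are only as reliable as the underlying figures, and www.top500.org should be consulted for updated data.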

A study conducted with about 100 North American organizations found that a dollar invested in HPC can bring in forty times more [JOS 15]. CERFACS*, the French research institute dedicated to scientific computing, considers the figure likely to be lower: “The return on investment in numerical simulation is around ten to one, taking into account various companies ranging from large high-tech groups to older industrial sectors” [www.cerfacs.fr].

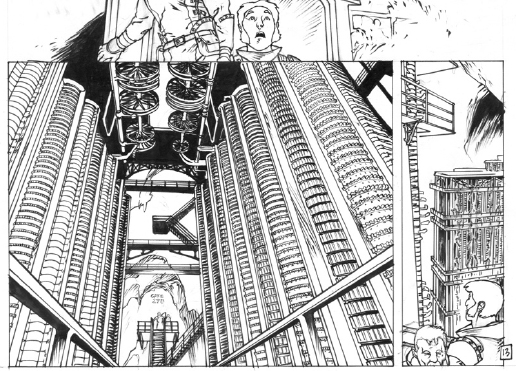

Inspired by the characters of Ada Lovelace and Charles Babbage, comic book authors have created a story featuring their analytical engine (Figure 3.9). The plot takes place at a time when Jules Verne’s heroes are designing futuristic machines. A ship of unusual dimensions, with a metal hull, is piloted by a supercomputer that the protagonists of this story discover in the bowels of the ship [MEI 04]. Beyond fiction, the current means of HPC computing, and perhaps tomorrow the quantum computer (Box 3.2), have made a reality of the dream of Ada Lovelace, and of all those who have contributed to developing this technique!

Figure 3.9. Ada Lovelace’s analytical engine as seen by comic book authors (Source: © Thibaud de Rochebrune, www.t2rbd.free.fr)

- 1 In 1965, Gordon Moore, co-founder of Intel Corporation, set out the law that bears his name – and which is still valid today. Mark Kryder and Jakob Nielsen are, respectively, American and Danish engineers who worked in the IT industry. They set out similar laws on the growth of data storage capacities and information transmission speeds, respectively.

- 2 www.ansys.com.

- 3 The purpose developed here concerns strategic nuclear weapons, developed for deterrence purposes. The design of tactical nuclear weapons for the battlefield, on the other hand, seems to pose more risks to humanity [BOR 19, MIZ 18].

- 4 www.ornl.gov; www.llnl.gov; www.anl.gov; www.lanl.gov.

- 5 www.nersc.gov.

- 6 www.jcahpc.jp; www.riken.jp.

- 7 www.cscs.ch.

- 8 According to RTE data, the installed capacity of wind power in France is 1580 MW in 2017 (www.clients.rte-france.com) – barely the equivalent of a nuclear unit!

- 9 Abacus is referred to as “Abaqus” in Latin. It is also the name of a numerical simulation software in mechanics. Marketed by the French publisher Dassault Systèmes, it is one of the most widely used in the industry.