CHAPTER

13

Logical Replication

Adatabase, as the name implies, is a place to store data, but as DBAs, we are often most interested in the physical properties and implementation of the database. For example, we may want to create a backup of the database, create a standby database, or maintain a specific file of an unplugged, pluggable database (PDB). We know that if we understand these mechanisms well, when required, we can create a one-to-one copy of the database or restore the database to the exact same state as before—bit-for-bit. This is a physical copy, as the physical structure of the database is maintained. If we then keep this copy in sync with the source by continuously recovering the archive logs, for example, we call it a physical replication.

Developers—and their applications—tend to view the database as tables, rows, and columns, and should they want the data to appear somewhere else, they copy these structures, and their contents, again. They are not concerned with datafiles or archive logs, and as long as they see the same data reproduced, they are happy. This is often referred to as a logical copy, as only the logical structures, or data, are retained in the process. A logical replication, then, keeps such a target in sync with the source via a mechanism such as SQL statement replay.

Physical replication is more robust, because if our source and targets are identical at the bit level, bit-for-bit, then we can be 100-percent sure that the data is also identical. It follows then that in this context we also don’t need to be nervous about different character sets, length semantics, or cross-version feature support—or putting the data into a non-Oracle database.

The other approach, logical replication, offers a higher degree of flexibility, however, and there are far fewer limitations as to where we can put the data. We are interested in the changes made to the “source” data, and so long as we get an initial copy of this data, followed by all subsequent changes, we will have an identical copy at the target.

We can identify three major use cases in which the benefits of this method outweigh the disadvantages of its complexity:

![]()

We can enable databases with mismatched versions to be paired up. In this way, we can keep a copy of the database in another location, running on a different version and/or platform. A common use case for this is migration, which enables us to perform near-zero-time downtime upgrades or migrations. We create a copy in a new database, keep it in sync, and then switch users over to this new database.

![]()

We can copy the data to a different database. This database might be Oracle or some other RDBMS flavor, as required by the application, and the data may be complete or perhaps just a subset. This approach is commonly used to offload reporting from a source database to a secondary, less critical, one or from Oracle to a database such as MySQL, thereby saving on Oracle Database licensing costs. We can also develop distributed applications based on such replication configurations, giving each location its own copy of the data, which is then synchronized with all the others.

![]()

Once we work at the data level, we are not limited to keeping the data as-is. For example, we can treat the changes as a stream of events—in other words, see it as a continuous flow of changes made to the tables. This can in turn feed stream-oriented processing flows, using frameworks such as Apache Kafka, Apache NiFi, or Apache Samza, or it can simply be written to data stores, such as Hadoop, where it can be duly processed by custom logic. This opens an additional dimension of data for analysis: evolution over time. Analysts are often interested in how data has changed chronologically. For example, they want to see how a customer has added and removed items from a shopping cart before completing her purchase. So even if a database retains only details of the final order—the final state—the flow of changes leading up to that point is useful as it depicts how the shopping cart contents changed during the checkout process, which can give insight into customer behavior and the website’s effectiveness.

NOTE

Active Data Guard also targets the simple report offloading use case; it is, however, limited to read-only queries and cannot, for example, add indexes, materialized views, or summary tables. It is also limited to an Oracle Database Enterprise Edition as a target. On the other hand, it retains the simpler and more robust physical replication 100-percent copy method.

These use cases for logical replication are not exclusive to multitenant, nor are they in fact even Oracle-specific. However, multitenant is a major architectural change and not all logical replication features and products support it.

Oracle LogMiner

Oracle LogMiner is not a replication solution per se; instead, it extracts changes from the redo logs and displays them in the v$logmnr_contents view.

Other replication products (unless they implement a trigger-based approach) work on the same basis, collecting all the changes as they happen in the redo stream. So it is interesting to play with LogMiner and observe how database changes show up there, because it allows us to see and understand the various challenges and requirements facing the logical replication products. For example, changes are recorded row-by-row, meaning that a large update changing 1000 rows shows as 1000 separate update statements, each affecting exactly one row.

A number of third-party products actually make use of LogMiner, through the exposed PL/SQL API and v$ view. This works fine for basic operations, but it easily breaks for more complicated operations such as those dealing with large objects (LOBs).

Other products implement their own version of the log miner, completely independent of the Oracle code. These are much harder to implement than using LogMiner, but they enable more flexibility, are not limited only to the information that Oracle makes available in the v$ view, and are more efficient since they avoid calls to the database. The older “classic” version of the GoldenGate extract falls in this category.

Yet another approach is to use triggers, although this technique is less favored, because it increases load on the source database and does not scale well in terms of performance. From an administration point of view, it is also harder to maintain when DDL changes happen.

Obsolete Features

The list of logical replication features and functionality provided by Oracle is surprisingly long, although this has been extensively pruned since the introduction of Oracle Database 12c. For the sake of completeness, let’s quickly review features provided for logical replication in Oracle Database 11g, which were not updated to support PDBs.

Oracle CDC

Oracle Change Data Capture, or CDC, was a trigger- or redo log–based logical replication mechanism that was introduced in Oracle Database 9i. It provided a list of all changes, in the form of an event/log table stored in the database itself. But, as was already announced with the earlier Oracle Database 11gR2 release, Oracle CDC is no longer included in future versions and has been omitted from 12c.

A number of third-party tools used Oracle CDC in the past as a quick way to support Oracle as a source in logical replication, especially those sorts of applications for which the Oracle Database was not the primary database of interest.

Oracle Streams

Oracle Streams, introduced in Oracle 9iR2, is a redo log–based replication mechanism, which means that it reads the changes recorded in redo logs, instead of relying on changes captured by triggers defined on the table, thus putting no additional load on the database as a result. It then sends the source database changes, which have been harvested, downstream to a different Oracle Database.

Although still available in 12c, Streams is now deprecated. However, unlike Oracle CDC, which never gained significant popularity, Streams was adopted by many DBAs and organizations, and thus Oracle has been slower to retire this option.

Nevertheless, Oracle Streams doesn’t support any of the new features introduced in Oracle Database 12c, which means that PDBs are not supported by it and never will be.

Oracle Advanced Replication

Another casualty of the Oracle Database 12c “lay offs” was Advanced Replication, which is a replication method based on updateable materialized views. This feature was formally deprecated in Oracle Database 12.1.

Oracle GoldenGate

It is clear that Oracle regards Oracle GoldenGate as the future of its replication offering. This is the product that is intended to solve all our requirements for logical replication, and that’s why Oracle Streams, Oracle CDC, and Advanced Replication are no longer available.

It is true that Oracle GoldenGate is a superior product in many respects, because it is more robust, scales better, and supports databases in addition to Oracle, as both source and targets. On the other hand, it is expensive, whereas Streams and CDC were included for free with the Enterprise Edition. It also lacks support for some Oracle features, but this has rapidly improved since it was re-engineered to use elements of the Streams code for redo extraction.

Last but not least, it was created by a company that was later acquired by Oracle, so the user interface is very different from what we were used to with Streams and CDC. As a product, it is not particularly easy to learn or use, although the new GoldenGate Management Studio GUI is a huge step forward.

Multitenant Support in Oracle GoldenGate

In GoldenGate, only the new, integrated extract supports multitenant databases. The source extract process is defined at the CDB level and connects to the database as a common user. It can extract data for one or multiple PDBs.

On the target side, each replicat process connects to a single PDB. So if we replicate multiple PDBs on the source, we have to use multiple replicat processes on the target.

Let’s set up a simple replication for a multitenant database and examine the differences that multitenant brings.

Topology in the Example

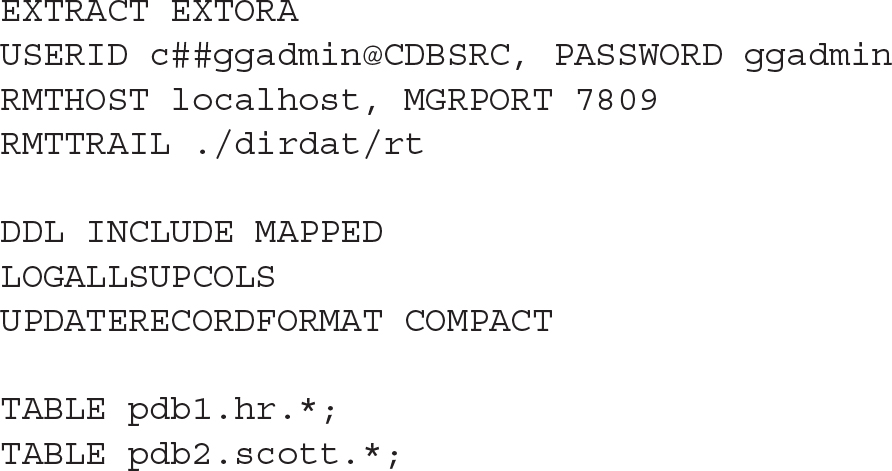

In this example configuration, the source database has multiple PDBs, but we are interested only in PDB1 and PDB2. On the target database, we have prepared PDBT1 and PDBT2 to hold the data, although there is, of course, no need to have all the PDBs in the same target database, since each has its own replicat process.

![]()

On PDB1 we will replicate tables from the HR sample schema to PDBT1; on PDB2 we will replicate the SCOTT schema to PDBT2:

Source Database Setup

First of all, we have to tell the database that it’s OK to use features that require a GoldenGate license, which assumes that we have actually purchased this license. A parameter, enable_goldengate_replication, was added in 11.2.0.4, and GoldenGate checks that this is set, or otherwise errors out, refusing to register the extract. The parameter also enables functionality such as disabling triggers on the target during replication.

In 11.2.0.3 and earlier, these features were free to use (included in the database license), before Oracle decided to disallow other third-party tools from using them, by virtue of requiring a GoldenGate license to enable them.

Run this SQL as a common user:

![]()

Every Oracle GoldenGate replication needs a connection to the source database—that is, to the CDB, to be more precise. For this we must grant a couple of additional privileges:

![]()

Every logical replication also needs to have basic supplemental logging enabled, because a row can be chained or migrated—that is, the database can store a row in multiple pieces. Minimal supplemental logging adds information that allows LogMiner, or the logical replication tools, to stitch these pieces together, and this is set at the PDB level:

![]()

Table Supplemental Logging

Logical replication also needs supplemental logging on primary keys enabled to ensure that primary key information is always written to the redo log. This is necessary for every logical replication tool, because Oracle writes only changed values to redo, by default. That is OK for recovery or physical standbys, as they identify rows by rowid (data block and row position in the block), but logical replication does not preserve rowids and thus needs a primary key, or similar, to uniquely identify the row affected.

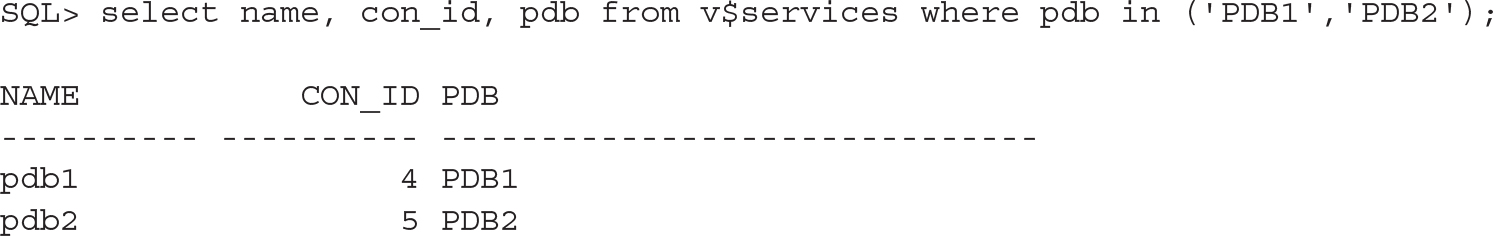

In our example, we want to replicate an entire schema, so we use SCHEMATRANDATA to set it for all tables in the schema—it would be TRANDATA if we wanted to set it for a table only. We do this through the GoldenGate Software Command Interface (GGSCI), the command interface between users and Oracle GoldenGate functional components.

![]()

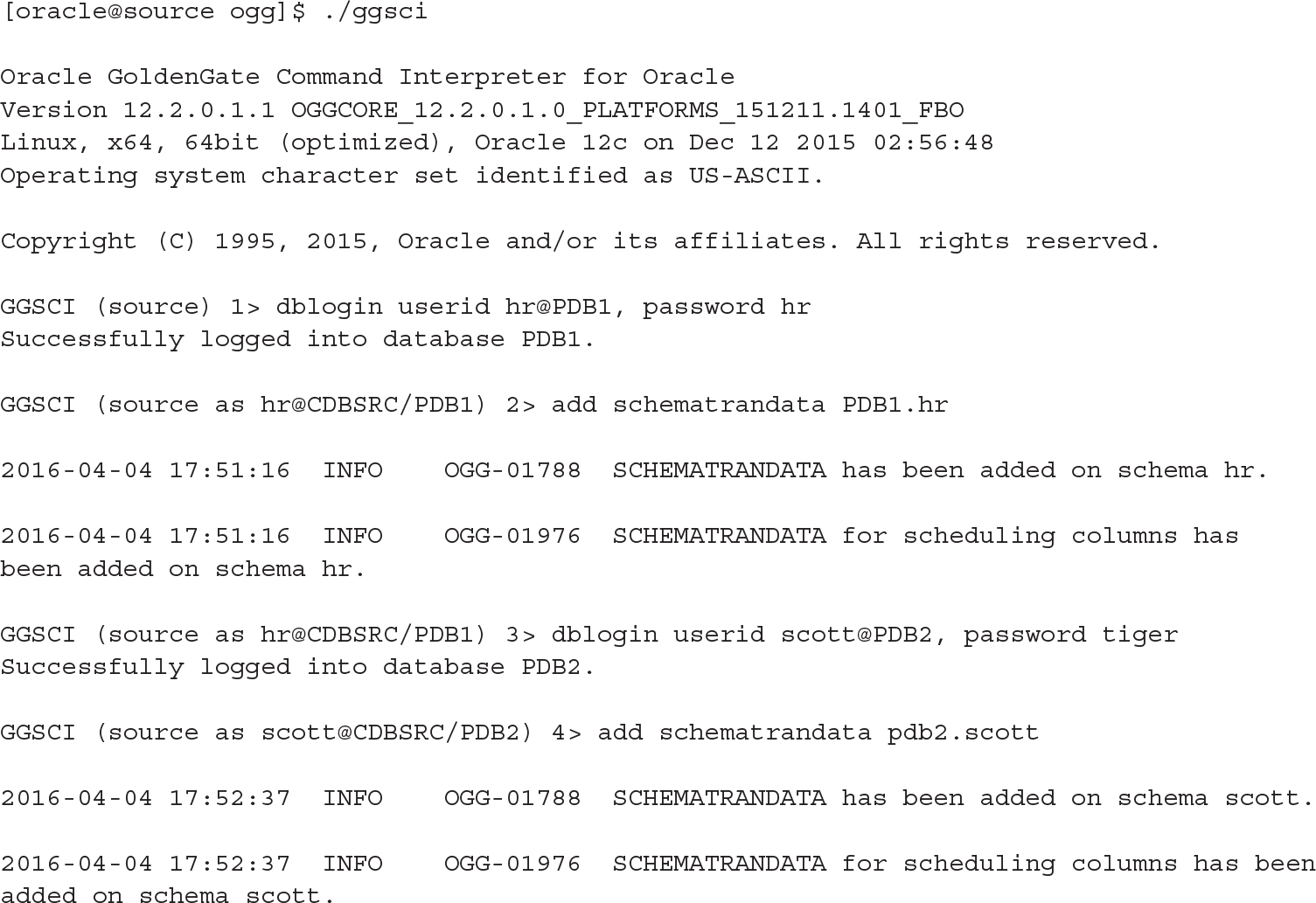

Configure and Start Manager

The next step is simple: let GGSCI create all necessary directories and start the manager processes.

![]()

Next, we configure the manager parameter file:

![]()

![]()

Then, in the editor, we simply enter the following:

![]()

![]()

Back in GGSCI, we can now start the manager:

![]()

![]()

And we also need to repeat these steps for the target server.

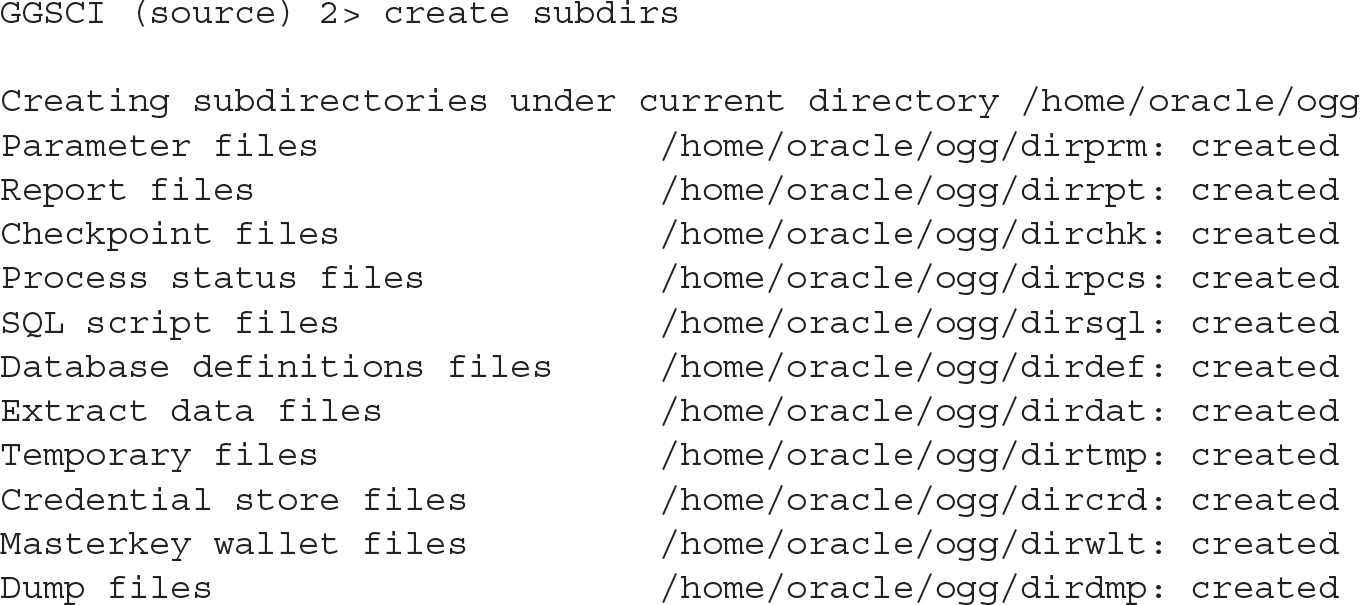

Extract Configuration File

Now we configure the extract process to read from the source database redo:

![]()

![]()

In our example, we will specify only the basic settings:

![]()

Because this is not a GoldenGate handbook, we have simply referred to the original product documentation for explanation on the settings as well as the steps to encrypt the password. However, notice the second and the last two lines, where you can see that the extract process connects to the container database (CDB) as a common user. In addition, we specify the tables to be replicated in a single configuration file, specifying the names with three parts—PDB, schema, and table.

Now let’s register the extract process just defined and configure the remote trail:

![]()

Set Up the Target Database

Let’s now move on to the target database. Unlike the extract, a replicat process connects to one specific target PDB. We have two PDBs replicated, so we have to create users in both of these databases. First of all, we must enable the “magic parameter” as we did for the source database, as follows:

![]()

Configure Parameter Files for Replicat Processes

Now let’s configure two replicat processes—one for each PDB:

![]()

And here’s the second one:

![]()

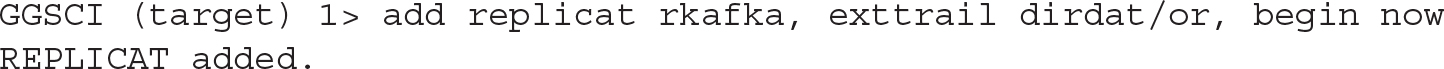

Now we can register the replicat processes:

![]()

Initial Extract

As with every replication, we must provision the source data to the target to create a baseline, so they match as of a specific starting point. Let’s set this starting point to the current database system change number (SCN):

![]()

We use GoldenGate’s initial extract for the provisioning, which means we ask GoldenGate to load the initial data as a set of inserts, obtained by a select into the source database.

NOTE

We have manually created empty tables at the target databases prior to this—for example, using metadata only Data Pump import.

![]()

![]()

Let’s use two extracts, as an example to demonstrate that we are not limited to a single extract in a CDB:

![]()

And similarly, for the second extract:

![]()

![]()

We use the SCN in the predicate for the tables, effectively turning this into a flashback query:

![]()

The TABLE clause can also accept a VIEW. This does not matter for regular extract processing, although a VIEW generates no redo, so no changes will ever be captured. However, for an initial extract, in which data is queried using a select, the data would be extracted, and replicat would fail, because it can’t insert into that VIEW. If it could, we would otherwise end up with primary key violations or duplicate data in the underlying table.

Let’s configure the replicat processes now:

![]()

![]()

There is nothing special about the configuration in this file:

![]()

Again, the second file differs from the first only in the replicat and filenames, as well as login and tables:

![]()

Finally, we can register the processes:

![]()

And then also on the target:

![]()

Run the Initial Extract

Now we run the provisioning load:

![]()

We verify that both ran successfully, and through to completion:

![]()

In the detailed report, which is too long to list here, we verify there were no errors:

![]()

Obviously, we need to perform the same check for the three other processes.

Start Extract and Replicats

Now, with the data loaded, we are at the place where can start the replication. Note, however, that we could actually start the extract much sooner, because only the replicats have to wait for all the data to be loaded:

![]()

![]()

Finally, we check that the processes are up and running correctly:

![]()

Big Data Adapters

Oracle GoldenGate now supports various Big Data or event streaming targets, and these adapters are available for Hadoop HDFS, HBase, Hive, Flume, Kafka, and Spark. Collectively, this is marketed as Oracle GoldenGate for Big Data. A complete example would span many pages, so let’s succinctly summarize how to use these Big Data adapters.

On the source side, nothing really changes, and we still have to define an extract process to get the data. For the target side, there is still a replicat process, but it needs to be pointed to a Java class and parameter file.

The easiest way is to start with the example for Kafka (Apache Kafka is fast, scalable, and durable publish-subscribe messaging, rethought as a distributed commit log, and distributed by design) provided in AdapterExamples/big-data/kafka directory in the Big Data adapters installation. We won’t go into detail on Kafka here; we recommend that you visit the Apache Kafka project web page for more details, documentation, and use cases. Here, we set up a very basic example.

We can use the example replicat definition right away, just changing the tables to be included in the replication:

![]()

The kafka.props file referenced here is where all the Kafka-specific settings are stored. Again, we can start off with the supplied example, although we will want to change at least the topic (stream of events describing changes on a table; in this example we put all source tables to a single event stream) and schema topic (event stream where replicat puts Avro schemas for the replicated tables) names, and possibly expand the classpath to include Avro libraries (if we go with the Avro format, as set in the example file):

![]()

This configuration in turn references one more configuration file: the description of the Kafka server we want to connect to. The only reference we need to change in this custom_kafka_producer.properties file is the first line—bootstrap servers (Kafka servers that provide information on the Kafka cluster configuration; in the simple case of a standalone server, just specify this server):

![]()

As discussed earlier, it is a simple process to register the replicat, as follows:

![]()

There is much more to be configured and set up for various use cases; see Oracle Golden Gate for Big Data documentation.

Oracle XStream

As we mentioned previously, Oracle GoldenGate now uses the integrated extract. This code, which sits in the Oracle executable itself, is based on LogMiner and Oracle Streams original code, further developed to satisfy the needs of Oracle GoldenGate and to accommodate all the new features.

However, since the Oracle GoldenGate extract and the Oracle Database executables are separate processes, Oracle had to introduce an API to enable them to talk to each other.

Oracle decided to make this API public and called it XStream. This API consists of PL/SQL packages and v$ views for management. For the actual processing of changes, there are Oracle Call Interface functions for C, along with a Java API. Logically, the API is split in two: one for data coming out of the database (“extract” in GoldenGate, XStream Out) and one for data coming into the database (“replicat” in GoldenGate, XStream In).

Oracle also decided that since all the code for GoldenGate for Oracle source databases is contained within XStream, we need an Oracle GoldenGate license to use it. This relegates the use of XStream to special use cases that GoldenGate does not directly support, meaning that it is anything but a “limited, but free” solution like the original Streams offering was.

The XStream API is indeed an API, meaning it’s intended for application programmers, not for database administrators. You need to write a C or Java program to make use of it.

Logical Standby

In Chapter 11, we covered Oracle Data Guard physical standby, ignoring at that time the fact that there is also another type available: the often forgotten logical standby.

Conceptually, this is similar to Streams or GoldenGate, in that redo is mined for changes, and these changes are then applied to the target using SQL statements. This implies similar limitations to those for Streams and GoldenGate, including limited datatype support and no duplicate rows in tables. Unlike Streams, however, logical standby is not deprecated, and unlike GoldenGate, it does not require any additional licensing. In reality, though, even logical standby is destined to be replaced by GoldenGate—with one notable use case exception, as you will see.

One special aspect of a logical standby to be aware of is that it is instantiated from a physical standby—that is, its setup begins with the same steps used for a physical standby, including the selection of PDBs to include in the standby, and the same steps also apply for including PDBs created later. In having been generated from a physical standby, it is always built at the CDB level and starts out with all PDBs, with one notable exception: application containers are skipped. Generally, we can’t convert a logical standby back to a physical one, because the two are no longer one-to-one copies of each other. However, in the special case of an upgrade (see the next section), it is indeed possible.

This instantiation from a physical standby is both a curse and a blessing. On the positive side of the ledger, it is very easy to do it right and to be 100-percent sure that all the source data is initially copied correctly to the target. On the other hand, though, it imposes the same restrictions that a physical standby does: it requires the same Oracle versions (when the logical standby is created) and platform compatibility.

Use in Upgrade

The unique feature of a logical standby, and the use case that has become its point of difference, is its function in a rolling upgrade. In this scenario, a logical standby is automatically converted from a physical standby. Oracle is then upgraded on this logical standby and it becomes the new primary, and then the other database in the configuration is upgraded. This makes the upgrade much simpler than it would have been with GoldenGate—we don’t need to rebuild any database after the upgrade is completed, because logical standby can convert back to physical standby in this case.

Oracle Database 12.1 introduced the DBMS_ROLLING package that, along with Active Data Guard, enables automation of such an upgrade process and can be especially beneficial if there are multiple standby databases in the configuration.

As for a multitenant database, not much is different, although in this special case, application containers are supported. Note, however, that an upgrade of an application container cannot happen at the same time a rolling upgrade is performed, and vice versa.

To ensure that there is no data loss during this process, all PDBs must be plugged in and opened when the transient logical standby becomes the new primary.

Other Third-Party Options

As mentioned, other companies provide alternatives to Oracle GoldenGate. In general, they lag behind Oracle GoldenGate in terms of features and performance, but they provide competitive alternatives by majoring on ease-of-use and price.

Dbvisit Replicate

Dbvisit Replicate, not to be confused with Dbvisit Standby (which is similar to physical standby), is a logical replication tool that uses its own implementation of a redo parsing engine. Only Oracle Database as source is supported.

PDBs are supported. The mine process connects to a single PDB at the source and replicates this sole PDB. Replication of multiple or all PDBs at the same time using a single replication is not supported as of the time of writing.

Although not as feature-rich as Oracle GoldenGate, Dbvisit Replicate’s strength lies in its ease-of-use. As a point of comparison, the example we went through earlier to configure GoldenGate requires significantly less manual work. In fact, most of the steps are performed automatically by a single script that the Replicate Setup Wizard creates, after asking a handful of simple questions about your databases and the tables/schemas you want replicated. Unfortunately, there is no GUI available at this point in time.

Replicate also supports Event Streaming mode, producing a stream of changes, similar to the output Oracle CDC produced. Using this functionality, or published APIs, it supports for example Apache Kafka and Apache NiFi targets.

Dell SharePlex

Dell SharePlex, known as Quest SharePlex before Dell acquired Quest Software, is another alternative to Oracle GoldenGate. Again, it is more cost-effective than GoldenGate, but it also supports only the Oracle Database as a source.

Since late 2014, SharePlex has supported PDBs, and each of the PDBs requires its own configuration and capture process.

The configuration is text-based, but the SharePlex Manager does offer a basic GUI for configuration and monitoring.

Summary

We have only briefly touched on the merits of logical replication. To be fair, it is a topic that warrants a book in its own right, and indeed, there are already multiple books on Oracle GoldenGate alone. With the advent of Big Data and event streaming, these tools are even more important to consider in our environments.

Oracle Multitenant is the direction that the Oracle Database is going in, so all logical replication tools need to support it. Unfortunately, this has become a stumbling block for many of the features we were familiar with prior to 12c, and the Oracle-recommended replacement is an expensive one.