CHAPTER

3

Oracle VM Architecture

Discussing the Oracle VM architecture is difficult without also including the Xen architecture, the underlying technology. In this chapter, you learn about the different components of both. By understanding how the components work, you can administer, tune, and size the virtual environments within the Oracle VM system more effectively.

Oracle VM Architecture

Oracle VM is a virtualization system that consists of both industry-standard, open-source components (mainly the Xen Hypervisor) and Oracle enhancements. Oracle does not use the stock Xen Hypervisor. Oracle has performed significant modifications and contributes to the open-source Xen Hypervisor development. In addition to Xen utilities, Oracle provides its own utilities and products to enhance and optimize Oracle VM. Oracle also continues to acquire new companies that provide new technology to improve Oracle VM.

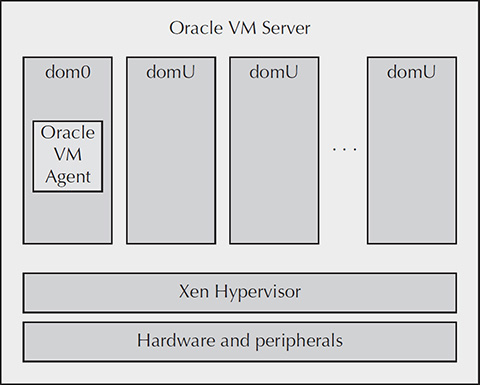

As discussed in previous chapters, the Oracle VM system is made up of two components: the Oracle VM Manager, which can be installed on either a standalone server or a virtual machine, and the Oracle VM Server, as shown in Figure 3-1.

FIGURE 3-1. Oracle VM architecture

The Oracle VM Server consists of a bare-metal installation of the Xen Hypervisor, which is a lightweight hypervisor that sits between the virtual machines and the hardware. By default, a special virtual machine called dom0 is installed. This virtual machine has special access to communicate with the hypervisor. As you will see later, dom0 plays a vital role in controlling all other virtual machines.

Oracle VM allows for multiple hardware systems to provide virtualization services beyond what a single server can provide. Oracle VM does this by creating a set of servers that work together in a server pool. A group of servers providing services that far exceed the capabilities of a single server is known as a server farm or data center. In addition, Oracle has vastly increased the amount of resources that can be utilized by an Oracle VM Server and allocated to a single guest virtual machine. This continues to increase with each new release.

Servers and Server Pools

A server pool is made up of one or more virtual machine servers. Traditionally, a server pool master was required; beginning with OVM 3.4, however, the server pool master has been deprecated. The OVM Manager now communicates directly with the OVM Agent on each server in the server pool. When using more than one server in a server pool, it is best practice to configure it as a clustered server pool.

The server pool master (OVM 3.3 and earlier) controls the server pool, and the virtual machine server supports virtual machines. The server pool is a collection of systems that are designed to provide virtualization services and that serve specific purposes. A server pool is defined by a single Oracle VM Manager and shares some common resources, such as storage. By sharing storage, the load of running virtual machines (VM guests) can be easily shared among the virtual servers that are members of the server pool. Without a shared disk, a server pool can exist on only one server.

Server Pool Shared Storage

You can create a virtualization environment made up of multiple host servers as a single server pool or as multiple server pools. How you configure them is determined by several factors. As mentioned earlier, a shared disk subsystem is required. This shared disk system must have some type of hardware that can share disk storage safely—typically either SAN or NAS storage. When SAN storage is used, the disks are configured with the OCFS2 filesystem. Because OCFS2, which stands for Oracle Cluster File System 2, is a clustered filesystem, the sharing among VM servers functions properly.

When using Network Attached Storage (NAS) for Oracle VM guest storage repositories, you have two options: NAS can present its storage as either NFS or iSCSI. If NAS presents Network File System (NFS) storage, the Oracle VM Server can use that storage directly because NFS is inherently shared. If the storage is presented as iSCSI, then Oracle VM will format it as OCFS2, just like SAN storage. A clustered filesystem is required because of the shared nature of the VM server pool. When using NFS as a repository source, Oracle VM will use the distributed lock manager (DLM) from OCFS2 to handle file locking for clustered server pools. This locking service is called dm-nfs.

If you use a single host for the VM Server, you can use local storage. In a single-server environment, there is no need to share the VM guest storage. However, in a single-host environment, neither load balancing nor high availability is possible. By default, the Oracle VM Server installation uses the remainder of the hard drive to create a single, large OCFS2 partition for /OVS, where the virtual machines are stored.
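The storage rules described above can be summarized in a short sketch. This is an illustrative model only, not an Oracle VM API: the `StorageKind` enum, the `repository_plan` function, and the dictionary keys are hypothetical names chosen for this example.

```python
from enum import Enum


class StorageKind(Enum):
    SAN = "san"
    ISCSI = "iscsi"
    NFS = "nfs"
    LOCAL = "local"


def repository_plan(kind: StorageKind, server_count: int) -> dict:
    """Return filesystem and sharing facts for a repository, following the text."""
    if server_count < 1:
        raise ValueError("a server pool needs at least one server")
    if kind is StorageKind.LOCAL and server_count > 1:
        # Without a shared disk, a server pool can exist on only one server.
        raise ValueError("local storage supports a single-server pool only")
    if kind is StorageKind.NFS:
        # NFS is inherently shared; dm-nfs supplies locking for clustered pools.
        return {"filesystem": "nfs", "shared": True, "locking": "dm-nfs"}
    if kind is StorageKind.LOCAL:
        # The default /OVS partition is OCFS2, but nothing else shares it.
        return {"filesystem": "ocfs2", "shared": False, "locking": None}
    # SAN and iSCSI storage are both formatted as OCFS2, which handles
    # its own cluster locking ("ocfs2" is just a label used in this sketch).
    return {"filesystem": "ocfs2", "shared": True, "locking": "ocfs2"}
```

For example, `repository_plan(StorageKind.NFS, 4)` reports NFS with dm-nfs locking, while asking for local storage with two servers raises an error, which mirrors the single-host restriction.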

Server Pool Requirements

In addition to shared storage, you must create the server pool on hardware that has the same architecture and supports the same hardware virtualization features. You cannot place a system with hardware virtualization into a server pool with a system that does not support hardware virtualization features. You can have up to 16 server pools controlled or owned by a single Oracle VM Manager, and each server pool can use servers from completely different manufacturers or that are completely different models.
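As a rough illustration of these requirements, the following sketch checks that every server in a proposed pool shares one architecture and one level of hardware virtualization support. The `Server` class and both helper functions are hypothetical names for this example; only the limit of 16 pools comes from the text.

```python
from dataclasses import dataclass

MAX_POOLS_PER_MANAGER = 16  # limit quoted in the text


@dataclass(frozen=True)
class Server:
    name: str
    architecture: str   # for example "x86_64"
    hvm_capable: bool   # True if hardware virtualization is supported


def pool_is_consistent(servers: list[Server]) -> bool:
    """True if all servers share one architecture and one HVM capability."""
    if not servers:
        return False
    first = servers[0]
    return all(
        s.architecture == first.architecture and s.hvm_capable == first.hvm_capable
        for s in servers
    )


def can_add_pool(existing_pools: int) -> bool:
    """True if the manager still has room for another server pool."""
    return existing_pools < MAX_POOLS_PER_MANAGER
```

Note that the check deliberately ignores manufacturer and model, because the text allows those to differ.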

Although not required, it is a good idea to create a server pool of the same speed and number of processors per system. This setup allows for better load balancing. Mixing various performance levels of VM servers can skew load balancing. Therefore, creating a server pool of similarly configured systems is a good idea.

Always consider the requirements of the largest VM. In order for High Availability (HA) to work (or for live migration), you need another server with sufficient resources. If a virtual machine has been granted 32 vCPUs, and only one server in the pool has that many vCPUs, then the virtual machine cannot be restarted or moved. This is not an argument for using larger servers in the pool as much as it is an argument for using horizontal scaling. Databases can use Real Application Clusters (RAC), and middleware can use its own clustering to keep vCPU and RAM requirements per VM reasonable.
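The sizing rule for the largest VM can be expressed as a simple check. This is a minimal sketch under a simplifying assumption: it compares a VM against each server's total CPU and RAM rather than its free capacity. The `Host`, `Vm`, and `failover_targets` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Host:
    name: str
    cpus: int
    ram_gb: int


@dataclass(frozen=True)
class Vm:
    name: str
    vcpus: int
    ram_gb: int
    host: str  # name of the server currently running the VM


def failover_targets(vm: Vm, hosts: list[Host]) -> list[str]:
    """Names of servers, other than the current one, large enough for the VM."""
    return [
        h.name
        for h in hosts
        if h.name != vm.host and h.cpus >= vm.vcpus and h.ram_gb >= vm.ram_gb
    ]
```

With one 32-CPU server and two 16-CPU servers, a 32-vCPU VM on the large server has no failover targets, whereas an 8-vCPU VM can move to either of the smaller servers. An empty result is the situation the text warns about.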

Configuring the Server Pool

You can create server pools based on both the available hardware and the type of business use. If no shared storage is available, you can create individual server pools. If the hardware is diverse, you can create individual server pools. You can also create server pools based on how the hardware and the VM guests will be used. The recommended approach, however, is to create one or more VM Server farms that can support the entire environment.

If the VM Server farm needs to support VMs for many different departments, you might find it beneficial to create a separate server pool for each group of virtual machines. This setup creates both a physical and logical separation of systems. In other cases, where each group only has a few virtual machines, sharing all of the virtual machines in one server pool might be beneficial. Planning and architecting an Oracle VM environment is covered in more detail in the next chapter.

The primary factor when designing server pools is normalizing the loads on the pool. For example, you should mix virtual machines with high CPU use but low disk I/O with virtual machines that need high disk I/O but low CPU. You can also design a server pool to handle one type of load (for example, a compute cluster). In this case, servers are provisioned with a lot of CPU and memory but with inexpensive Network Attached Storage instead of expensive SAN resources.
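To make the idea of normalizing loads concrete, here is a small greedy placement sketch. It is not how Oracle VM places guests; it is an assumed heuristic for illustration, and the function name, the load figures, and the server names are all hypothetical. Each VM is described by a (CPU, disk I/O) demand expressed as a fraction of one server.

```python
def place_vms(
    vms: dict[str, tuple[float, float]], server_names: list[str]
) -> dict[str, list[str]]:
    """Greedy placement: put each VM where the busiest resource stays lowest."""
    load = {s: [0.0, 0.0] for s in server_names}  # [cpu, io] per server
    placement: dict[str, list[str]] = {s: [] for s in server_names}
    # Place the most demanding VMs first; ties keep their original order.
    for name, (cpu, io) in sorted(vms.items(), key=lambda kv: -max(kv[1])):
        best = min(
            server_names,
            key=lambda s: max(load[s][0] + cpu, load[s][1] + io),
        )
        load[best][0] += cpu
        load[best][1] += io
        placement[best].append(name)
    return placement
```

Given two I/O-heavy database VMs and two CPU-heavy application VMs on two servers, the heuristic pairs one of each kind on every server, which is the mix the paragraph above recommends.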

The Oracle VM server pool is made up of one or more virtual machine servers, one or more utility servers, and a single server pool master (OVM 3.3 and earlier). Let’s look at these server roles in more detail.

Oracle VM Server The virtual machine server is the core of the Oracle VM server pool. The virtual machine server is the Oracle VM component that hosts the virtual machines. The Oracle VM Server is responsible for running one or more virtual machines and is installed on bare metal, leaving only a very small layer of software between the hardware and the virtual machines. This is known as the hypervisor. Included with the Oracle VM Server is a small Linux OS, which is used to assist with managing the hypervisor and virtual machines. This special Linux system is called domain 0 or dom0.

The terms domain, guest, VM guest, and virtual machine are sometimes used interchangeably, but there are slight differences in meaning. The domain is the set of resources on which the virtual machine runs. These resources were defined when the domain was created and include CPU, memory, and disk. The term guest or VM guest defines the virtual machine that is running inside the domain. A guest can be Linux, Solaris, or Windows and can be fully virtualized or paravirtualized. A virtual machine is the OS and application software that runs within the guest. Visit the Oracle virtualization website at http://www.oracle.com/us/technologies/virtualization/oraclevm/overview/index.html for the most up-to-date support information.

Dom0 is a small Linux distribution that contains the Oracle VM Agent. In addition, dom0 is visible to the underlying shared storage that is used for the VMs. Dom0 is also used to manage the network connections and all of the virtual machine and server configuration files. Because it serves a special purpose, you should not modify or use it for purposes other than managing the virtual machines.

In addition to the dom0 virtual machine, the VM Server also supports one or more virtual machines or domains. These are known as domU or user domains. The different domains and how they run are covered in more detail in the “Xen Architecture” section of this chapter. The Oracle VM domains are illustrated in Figure 3-2.

FIGURE 3-2. Oracle VM domains

The VM Server’s main responsibility is to host virtual machines. Many of the management responsibilities and support functions are handled by the Oracle VM Agent.

Oracle VM Agent The Oracle VM Agent is used to manage the VM Servers that are part of the Oracle VM system. The Oracle VM Agent communicates with the Oracle VM Manager and manages the virtual machines that run on the VM Server. The VM Agent consists of two components: the server pool master and the virtual machine server. These two components exist in the Oracle VM Agent but don’t necessarily run on all VM servers.

Server Pool Master (OVM 3.3 and Earlier) The server that currently has the server pool master role is responsible for coordinating the actions of the server pool. The server pool master receives requests from the Oracle VM Manager and performs actions such as starting VMs and performing load balancing. The VM Manager communicates with the server pool master, and the server pool master then communicates with the virtual machine servers via the VM Agents. As mentioned earlier, you can have only one server pool master in a server pool. In addition, the server pool master has been deprecated in OVM 3.4. Now the OVM Manager communicates with the OVM Agent on all virtual machine servers.

Virtual Machine Server The virtual machine server is responsible for controlling the VM Server virtual machines. It performs operations such as starting and stopping the virtual machines. It also collects performance information from the virtual machines and the underlying host operating systems. The virtual machine server controls the virtual machines. Because the virtual machines are actually part of the Oracle-enhanced Xen Hypervisor, this topic is covered in the “Xen Architecture” section, later in this chapter.

Oracle VM Manager

The Oracle VM Manager is an enhancement that provides a web-based graphical user interface (GUI) where you can configure and manage Oracle VM Servers and virtual machines. The Oracle VM Manager is a standalone application that you can install on a Linux system. In addition, Oracle provides another way to manage virtual machines via an add-on to Oracle Enterprise Manager (OEM) Cloud Control. Both options are covered in this book.

The Oracle VM Manager allows you to perform all aspects of managing, creating, and deploying virtual machines with Oracle VM. In addition, the Oracle VM Manager provides the ability to monitor a large number of virtual machines and easily determine the status of those machines. This monitoring is limited to the status of the virtual machines.

When using OEM Cloud Control, you have the additional advantage of using the enterprise-wide system-monitoring capabilities of this application as well. Within OEM Cloud Control, not only are you able to manage virtual machines through the VM Manager screens, but also you can add each virtual machine as a host target, as well as any applications that it might be running. In addition, OEM management packs can provide more extensive monitoring and alerting functions.

The VM Manager can perform a number of tasks, among which is virtual machine lifecycle management. Lifecycle management refers to the lifecycle of the virtual machine as it changes states. The simplest of these states are creation, power on, power off, and deletion. Many other states and actions can occur within a virtual machine. The full range of lifecycle management states is covered in Chapter 4. The VM Manager is shown in Figure 3-3.

FIGURE 3-3. The VM Manager
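The four simplest lifecycle states named above can be pictured as a small state machine. The state names and the set of allowed transitions below are assumptions made for illustration; the full set of states is the subject of Chapter 4.

```python
from enum import Enum


class VmState(Enum):
    CREATED = "created"
    RUNNING = "running"   # powered on
    STOPPED = "stopped"   # powered off
    DELETED = "deleted"


# Assumed transitions for this sketch: a VM must be powered off before deletion.
ALLOWED = {
    VmState.CREATED: {VmState.RUNNING, VmState.DELETED},
    VmState.RUNNING: {VmState.STOPPED},
    VmState.STOPPED: {VmState.RUNNING, VmState.DELETED},
    VmState.DELETED: set(),
}


def transition(current: VmState, target: VmState) -> VmState:
    """Return the new state, or raise if the change is not allowed."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target
```

Modeling the lifecycle this way makes invalid requests, such as deleting a running VM, fail early rather than silently.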

Users and Roles

A user account is required to access and use the Oracle VM Manager. Users can be assigned the following roles, each having a different level of privilege:

User The user role is granted permission to create and manage virtual machines. In addition, the user role has permission to import resources.

Manager The manager role has all of the privileges of the user role. In addition, the manager role has permission to manage server pools, servers, and resources.

Administrator The administrator role has all of the privileges of the manager role. In addition, the administrator role is responsible for managing user accounts, importing resources, and approving imported resources.

Administering users is done via the ovm_admin command-line utility.
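Because each role includes all the privileges of the role below it, the three roles form a simple hierarchy. The sketch below models that with sets; the privilege labels are hypothetical names derived from the descriptions above, not identifiers used by Oracle VM Manager.

```python
# Privilege labels are illustrative, taken from the role descriptions in the text.
USER_PRIVS = frozenset({"create_vm", "manage_vm", "import_resources"})
MANAGER_PRIVS = USER_PRIVS | {
    "manage_server_pools",
    "manage_servers",
    "manage_resources",
}
ADMIN_PRIVS = MANAGER_PRIVS | {"manage_users", "approve_imported_resources"}

ROLE_PRIVS = {
    "user": USER_PRIVS,
    "manager": MANAGER_PRIVS,
    "administrator": ADMIN_PRIVS,
}


def can(role: str, privilege: str) -> bool:
    """True if the given role carries the given privilege."""
    return privilege in ROLE_PRIVS[role]
```

Building each set from the previous one keeps the inheritance explicit: anything a user can do, a manager and an administrator can do as well.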

Management Methods

You have several options for managing Oracle VM, including the Oracle VM Manager GUI tool and the Oracle Enterprise Manager Cloud Control add-in for Oracle VM. You can also use an Oracle VM command-line tool from Oracle. Finally, you can use the Xen tools built into the Oracle VM Server. Which tool is right for you varies based on what you are trying to do.

For monitoring the Oracle VM system, a graphical tool is often the most efficient and easiest to use. You can quickly see the state of the system and determine if there are problems. You can sort and group the virtual machines by their server pool and determine the current resource consumption on the underlying hardware easily. In addition, performing tasks such as creating virtual machines is very straightforward with the assistance of wizards.

Xen Architecture

The core of the Oracle VM system is the software that runs the virtual machines. This software is the Xen virtualization system, which consists of the Xen Hypervisor and support software. Xen is a virtual machine monitor for x86, x86_64, Intel Itanium, and PowerPC architectures (Oracle only supports the x86_64 architecture). At the core of the Xen virtualization system is the Xen Hypervisor.

The Xen Hypervisor is the operating system that runs on the bare-metal server. The guest OSs are on top of the Xen Hypervisor. The Xen Hypervisor, after booting, immediately loads one virtual machine, dom0, which is nothing more than a standard virtual machine but with privileges to access and control the physical hardware. Each guest runs its own operating system, independent of the Xen Hypervisor. This OS is not a further layer of a single operating system, but a distinct operating system being executed by the Xen Hypervisor. The guest OSs consist of a single dom0 guest and zero or more domU guests. In Xen terminology, a guest operating system is called a domain.

Dom0

The first domain to be started is domain 0, or dom0. This domain has the following special privileges and capabilities:

Boots first and automatically with the hypervisor

Has special management privileges

Has direct access to the hardware

Can see and manage the storage where the virtual machine images are stored

Contains network and I/O drivers used by the domU systems

The Oracle VM dom0 is a Just enough OS (JeOS) Oracle Enterprise Linux (OEL) operating system with the utilities and applications necessary to manage the Oracle VM environment. The Oracle VM Server installation media installs dom0, which is a 32-bit OEL system including the Oracle VM Agent. The 32-bit system is installed even on 64-bit hardware.

Within this small Linux OS, dom0 contains two special drivers, known as backend drivers: the backend network driver and the backend I/O or block driver. You can see both in Figure 3-4. The backend drivers are called netback and blkback; their counterparts in the domU guests are the netfront and blkfront frontend drivers.

FIGURE 3-4. Backend drivers

The network backend driver (netback) communicates directly with the hardware and takes requests from the domU guests and processes them via one of the network bridges that have been created. The block backend driver (blkback) communicates with the local storage and takes I/O requests from the domU systems and processes them. For this reason, dom0 must be up and running before the guest virtual machines can start.

DomU

All of the other guest virtual machines are known as domU or user domain guests. If they are paravirtualized guests, they are known as domU PV guests. Hardware virtualized guests are known as domU HVM guests. Access to the I/O and network is handled slightly differently, depending on whether the guest is a paravirtualized or a hardware virtualized system.

PV Network and I/O

The paravirtualized guest has a network and a block PV driver that communicates with the network and block drivers on the dom0 system via shared memory that resides in the hypervisor. The PV driver on the domU system shares this memory with the PV driver on the dom0 system. The dom0 system then receives a request from domU via an event channel that exists between the two domains. Here are a few examples for a PV read and write.

DomU Read Operation A domU read operation uses the event channel to signal the PV block driver on dom0, which fulfills the I/O operation. Here are the basic steps to perform a read:

1. An I/O request is made to the PV block driver on the domU system.

2. The guest PV block driver issues an interrupt through the event channel to the dom0 PV block driver, requesting the data.

3. The dom0 PV block driver receives the request and reads the data from disk.

4. The dom0 PV block driver places the data in memory in the hypervisor that is shared between dom0 and the domU guest.

5. The dom0 PV block driver issues an interrupt to the domU PV block driver via the event channel.

6. The domU PV block driver retrieves the data from the memory shared with the dom0 PV block driver.

7. The domU PV block driver returns the data to the calling process within the guest.

This process is illustrated in Figure 3-5.

FIGURE 3-5. A domU read operation

Even though this process seems complex, it is an efficient way to perform I/O in a paravirtualized environment. Until the newer hardware virtualization enhancements were introduced, paravirtualization provided the best performance possible in a virtualized environment.
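The seven read steps above can be traced in a short single-process simulation. This is only a conceptual sketch: a dictionary stands in for the shared memory, a `deque` stands in for the event channel, and the class and function names are hypothetical. Real PV drivers run in separate domains and kernels.

```python
from collections import deque


class Dom0BlockBackend:
    """Stands in for the dom0 PV block driver."""

    def __init__(self, disk: dict[int, bytes]):
        self.disk = disk  # block number -> contents

    def handle(self, event: dict, shared_page: dict, channel: deque) -> None:
        # Step 3: receive the request and read the data from disk.
        data = self.disk[event["block"]]
        # Step 4: place the data in the memory shared with the guest.
        shared_page["data"] = data
        # Step 5: signal the guest driver through the event channel.
        channel.append({"type": "read_done", "block": event["block"]})


def domu_read(block: int, backend: Dom0BlockBackend) -> bytes:
    """Run steps 1 through 7 of the read path in order."""
    shared_page: dict = {}    # memory shared between dom0 and the domU guest
    channel: deque = deque()  # event channel between the two domains
    # Steps 1-2: the guest driver receives the I/O request and signals dom0.
    channel.append({"type": "read", "block": block})
    backend.handle(channel.popleft(), shared_page, channel)
    # Step 6: on the completion event, retrieve the data from shared memory.
    done = channel.popleft()
    if done["type"] != "read_done":
        raise RuntimeError("unexpected event on channel")
    # Step 7: return the data to the calling process.
    return shared_page["data"]
```

The point of the model is that the data itself never travels over the event channel; the channel carries only notifications, and the payload moves through shared memory.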

DomU Write Operation The domU PV write operation is similar to the PV read operation. A domU write operation uses the event channel to signal the PV block driver on dom0, which then fulfills the I/O operation. Here are the basic steps to perform a write operation:

1. A write request is made to the PV block driver on the domU system.

2. The domU PV block driver places the data in memory in the hypervisor that is shared between the domU guest and dom0.

3. The guest PV block driver issues an interrupt through the event channel to the dom0 PV block driver, requesting the data be written out.

4. The dom0 PV block driver retrieves the data from the memory that is shared with the domU PV block driver.

5. The dom0 PV block driver receives the request and writes the data to disk.

6. The dom0 PV block driver issues an interrupt to the domU PV block driver via the event channel.

7. The domU PV block driver returns the success code to the calling process.

This process is illustrated in Figure 3-6.

FIGURE 3-6. A domU write operation

Like the PV read operation, although this operation is somewhat complex, it is also efficient.
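The write path can be sketched the same way. The main difference from a read is ordering: the guest places the data in shared memory before signaling dom0. As before, this is a conceptual single-process model with hypothetical names, and the use of 0 as the success code is an assumption.

```python
from collections import deque


def dom0_write_handler(
    event: dict, shared_page: dict, disk: dict, channel: deque
) -> None:
    """Stands in for the dom0 PV block driver handling a write."""
    # Step 4: retrieve the data from the memory shared with the guest.
    data = shared_page["data"]
    # Step 5: write the data to disk.
    disk[event["block"]] = data
    # Step 6: signal completion to the guest driver (0 is an assumed success code).
    channel.append({"type": "write_done", "status": 0})


def domu_write(block: int, data: bytes, disk: dict) -> int:
    """Run steps 1 through 7 of the write path in order."""
    shared_page: dict = {}    # memory shared between the domU guest and dom0
    channel: deque = deque()  # event channel between the two domains
    # Steps 1-2: accept the write request and stage the data in shared memory.
    shared_page["data"] = data
    # Step 3: only then signal dom0 that data is waiting to be written.
    channel.append({"type": "write", "block": block})
    dom0_write_handler(channel.popleft(), shared_page, disk, channel)
    # Step 7: return the success code to the calling process.
    return channel.popleft()["status"]
```

Staging the data first means that by the time dom0 sees the event, everything it needs is already in shared memory.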

HVM Network and I/O

The HVM guest does not have the network and block PV drivers. Instead, a process (daemon) is started on the dom0 system for each domU guest. This daemon intercepts the network and I/O requests and performs them on behalf of the domU guest. This daemon is the Qemu-DM daemon, and it looks for calls to the disk or network and intercepts them. These calls are then processed in dom0 and eventually returned to the domU system that issued the request.

With hardware acceleration, HVM guests can access hardware at near-native speed. This is accomplished by moving the work of intercepting I/O and network operations, previously done through a software interface, into hardware. Although the technology is still evolving, such operations have been shown to run at nearly the speed of direct OS-to-hardware interaction.

Hypervisor Operations

The Xen Hypervisor handles other operations that the OS normally performs, such as memory and CPU operations. When an operation such as a memory access is performed in the virtual guest, the hypervisor intercepts it and processes it there. The guest has a pagetable that maps the virtual memory to physical memory. The guest believes that it owns the memory, but it is retranslated to point to the actual physical memory via the hypervisor.

Here is where the introduction of new hardware has really made today’s virtualization possible. With the Intel VT and AMD-V architectures, the CPUs have added features to assist with some of the most common instructions, such as the virtual-to-physical translations. This advance allows a virtualized guest to perform at almost the same level as a system installed directly on the underlying hardware.

DomU-to-Dom0 Interaction

Because of the interaction between domU and dom0, several communication channels are created between the two. In a PV environment, a communication channel is created between dom0 and each domU, and a shared memory channel is created for each domU that is used for the backend drivers.

In an HVM environment, the Qemu-DM daemon handles the interception of system calls that are made. Each domU has a Qemu-DM daemon, which allows for the use of network and I/O requests from the virtual machine.

Networking

With Oracle VM/Xen, each physical network interface card in the underlying server has one bridge, called a xenbr, that acts like a virtual switch. Within the domain, a virtual interface card connects to the bridge, which then allows connectivity to the outside world. Multiple domains can share the same Xen bridge, and a domain can be connected to multiple bridges. The default is to map one xenbr to each physical interface, but the recommended practice is to use trunking/bonding to present multiple physical NICs as a single xenbr.

The bridges and Ethernet cards are visible to the dom0 system and can be modified there if needed. When the guest domain is created, a Xen bridge is selected. You can modify this later and/or add additional bridges to the guest domain. These additional bridges will appear as additional network devices, as shown in Figure 3-7.

FIGURE 3-7. Xen networking
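The relationships among physical NICs, Xen bridges, and guest domains can be captured in a small data model. This is a sketch for orientation only; the class, the function names, and the NIC and domain names (`eth0`, `vm1`, and so on) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class XenBridge:
    name: str                  # for example "xenbr0"
    physical_nics: list[str]   # one NIC by default, several when bonded
    attached: list[str] = field(default_factory=list)  # connected domains


def attach_domain(bridge: XenBridge, domain: str) -> None:
    """Connect a domain's virtual interface to the bridge."""
    if domain not in bridge.attached:
        bridge.attached.append(domain)


def guest_devices(domain: str, bridges: list[XenBridge]) -> list[str]:
    """Bridges a domain is connected to; each appears as one network device."""
    return [b.name for b in bridges if domain in b.attached]
```

In this model, a bridge backed by two bonded NICs can be shared by several domains, and a domain attached to two bridges sees two network devices.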

Hardware Virtual Machine (HVM) vs. Paravirtualized Virtual Machine (PVM)

In this chapter and throughout the book, you will learn about the differences between fully virtualized and paravirtualized systems. Much debate remains over which is better to use. The fully virtualized system currently has the advantage in that you do not need to modify the OS to use this form of virtualization. In addition, both Intel and AMD have put great effort into optimizing for this type of virtualization.

Prior to the introduction of virtualization acceleration, using paravirtualization was much more efficient. Because the kernel and device drivers were aware that they were part of a virtualized environment, they were able to perform their functions more efficiently by not duplicating operations that would have to be redone at the dom0 layer. Paravirtualization, therefore, has always been seen as more efficient.

With the introduction of the hardware assist technology, however, fully virtualized systems now have an advantage. Many of the traps that required software emulation are now handled by the hardware, making full virtualization more efficient and perhaps better performing than paravirtualization. Work is now being done to provide hardware assist technology to paravirtualization as well. The next generation of hardware and software might create a fully hardware-assisted paravirtualized environment that performs best of all.

At the current stage of technology, both paravirtualization and hardware-virtualized machine (HVM) are high performing and efficient. Choosing which to use most likely depends on your environment and your preferences. We recommend and run both paravirtualized and HVM (and now PVHVM) guests. Both choices are good ones.

Xen Hypervisor or Virtual Machine Monitor (VMM)

The Xen Hypervisor is the lowest, innermost layer of the Xen virtualization system. The hypervisor layer communicates with the hardware and performs various functions necessary to create and maintain the virtual machines; the most basic and important of these functions is the scheduling and allocation of CPU and memory resources. This area is also where the most activity has occurred in recent years in terms of improving the performance and capacity of virtual host systems. The hypervisor abstracts the hardware for the virtual machines, thus tricking the virtual machines into thinking that they are controlling the hardware, when they are in fact operating on a software layer. In addition, the hypervisor schedules and controls the virtual machines. The hypervisor is also known as the virtual machine monitor (VMM).

The hypervisor has two layers. The bottommost layer is the hardware or physical layer. This layer communicates with the CPUs and memory. The top layer is the virtual machine monitor, or VMM. The hypervisor is used to manage the virtual machines by abstracting the CPU and memory resources, but other hardware resources, such as network and I/O, actually use dom0.

Type 1 Hypervisors

There are two types of hypervisors. The type 1 hypervisor is installed on, and runs directly on, the hardware. This hypervisor is also known as a bare-metal hypervisor. Many of the hypervisors on the market today (including Oracle VM) are type 1 hypervisors. This also includes products such as VMware, Microsoft Hyper-V, and others. The Oracle Sun Logical Domains (now known as Oracle VM Server for SPARC) is also considered a type 1 hypervisor.

Type 2 Hypervisors

The type 2 hypervisor is known as a hosted hypervisor. A hosted hypervisor runs on top of an operating system and allows you to create virtual machines within its private environment. To the virtual environment, the virtual machine looks like any other virtual machine, but it is far removed from the hardware and is purely a software product. Type 2 hypervisors include VMware Server and VMware Workstation. The Oracle VM Solaris 10 container is considered a type 2 hypervisor as well.

Hypervisor Functionality

In a fully virtualized environment, the Xen Hypervisor (or VMM) uses a number of traps to intercept specific instructions that would normally be used to execute instructions on the hardware. The hypervisor traps and translates these instructions into virtualized instructions. The hypervisor looks for these instructions to be executed, and when it discovers them, it emulates the instruction in software. This happens at a very high rate and can cause significant overhead.

In a paravirtualized environment, the Xen-aware guest kernel knows it is virtualized and makes modified system calls to the hypervisor directly. This requires many kernel modifications but provides a more efficient way to perform the necessary OS functions. The paravirtualized environment is efficient and high performing.

The primary example of this is in memory management. The HVM environment believes that it is a normal OS, so it has its own pagetable and virtual-to-physical translation. In this case, the virtual-to-physical translation refers to virtual memory, not virtualization. The virtualized OS believes it has its own memory and addresses. For example, the virtualized environment might think it has 2GB of physical memory.

The pagetable contains the references between the virtualized system’s virtual memory and its (virtualized) physical memory; however, the hypervisor really translates its (virtualized) physical memory into the actual physical memory. Thus, the virtual-to-physical translation call is trapped (intercepted) and run in software by the hypervisor, which translates the memory call into the actual (hardware) memory address. This is probably the most-used system operation.

This is also where the hardware assist provides the biggest boost in performance. Now, instead of being trapped and emulated in software by the hypervisor, this operation is handled by the hardware. Thus, the most commonly used instructions that the hypervisor would otherwise trap run directly in hardware, which allows for almost native performance.
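The two-stage translation described above can be illustrated with a pair of lookup tables. This is a simplified sketch: real pagetables are multi-level structures walked by hardware, and the page numbers used here are arbitrary values chosen for the example.

```python
PAGE_SIZE = 4096


def translate(addr: int, table: dict[int, int]) -> int:
    """Map an address through a page-number lookup table, keeping the offset."""
    page, offset = divmod(addr, PAGE_SIZE)
    return table[page] * PAGE_SIZE + offset


# Stage 1, owned by the guest OS: guest virtual page -> guest "physical" page.
guest_pagetable = {0: 5, 1: 2}
# Stage 2, owned by the hypervisor: guest "physical" page -> machine page.
hypervisor_map = {5: 900, 2: 731}


def guest_virtual_to_machine(addr: int) -> int:
    """Apply the guest's translation, then the hypervisor's."""
    guest_physical = translate(addr, guest_pagetable)
    return translate(guest_physical, hypervisor_map)
```

The guest only ever sees the result of the first stage and believes it is a real physical address. The second stage is the part that is emulated in software without hardware assist and performed by the CPU with it.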

Features of Oracle VM

Oracle VM is a full-featured product. It is a fully functional virtualization environment that comes with an easy-to-use management console as well as a command-line interface. Here are some of the features of Oracle VM:

Guest support Oracle VM supports many guests. The number of guests you can support on a single server is limited only by the memory and CPU resources available on that server.

Live migration Oracle VM supports the ability to perform live migrations between different hosts in a server pool. This allows for both High Availability and load balancing.

Pause/resume The pause/resume function provides the ability to manage resources in the server pool by quickly stopping and restarting virtual machines as needed.

Templates The ability to obtain and utilize templates allows administrators to prepackage virtual systems that meet specific needs. The ability to download preinstalled templates from Oracle gives administrators an easy path to provide prepackaged applications.

These features make Oracle VM an optimal platform for virtualization.

Hardware Support for Oracle VM

Although the Xen architecture supports several platforms, Oracle has chosen to focus on the Intel/AMD x86_64 architecture. Since the Oracle acquisition of Sun Microsystems, Oracle has rebranded some of the virtualization technologies built into the Sun hardware and Solaris operating system as Oracle VM. The Sun virtualization technology is not covered in this book. For the purposes of this book, Oracle VM refers only to the x86 virtualization technology.

As with most software products, the Oracle VM documentation provides a minimum hardware requirement. This minimum is usually very low and does not allow for even basic usage of the product. Therefore, the hardware requirements provided in Table 3-1 include both the Oracle minimum requirements and the minimum requirements recommended by the authors.

TABLE 3-1. Oracle VM Actual Minimum Requirements

The choice of hardware depends mostly on the type of virtual machines you intend to run as well as the number of machines. This is covered in much more detail later in the book.

Summary

This chapter provided some insight into the Oracle VM architecture. By understanding the architecture, you will find it is easier to understand the factors that influence performance and functionality. The beginning part of the chapter covered the components of the Oracle VM system—the Oracle VM Server, the Oracle VM Manager, and the Agent, the latter of which is a key component of the system.

Because of Oracle VM’s use of the Xen Hypervisor, this chapter also covered the architecture of the Xen virtualization environment and the Xen Hypervisor. The Xen virtualization system is an open-source project that is heavily influenced by Oracle (since Oracle relies on it). This chapter provided an overview of the Xen system and Xen Hypervisor, as well as detailed some of the hardware requirements necessary to run Oracle VM and Xen. Although Xen runs on a number of different platforms, Oracle VM only supports the x86_64 environment at this time.

This book is about the Oracle VM products based on Xen technology and does not cover the hardware virtualization products available with the Oracle line of products.

The next chapter covers virtual machine lifecycle management. Lifecycle management is the progression of the various states that the virtual machine can exist in—from creation to destruction. Within lifecycle management, you will also study the various states of the lifecycle of virtual machines.