Chapter 9: Fuzzy Matching Programming

Understand Data Values Distribution

Data comes in all forms, shapes, sizes, and complexities. Stored in files and data sets, SAS users across industries know all too well that data can be, and often is, problematic and plagued with a variety of issues. When unique and reliable identifiers are available, users routinely are able to match records from two or more data sets using merge, join, and/or hash programming techniques without problem. But, when data originating from multiple sources contain duplicate observations, duplicate and/or unreliable keys, missing values, invalid values, capitalization and punctuation issues, inconsistent matching variables, and imprecise text identifiers, the matching process is often compromised. These types of problems are common and are often found in files and data sets containing a misspelled customer name, mailing address, or email address, where one or more characters are transposed or incorrectly recorded.

When data issues like these exist, SAS users should do everything possible to identify and standardize any and all data irregularities before attempting to search, match, and join data. To assist in this time-consuming and costly process, users often apply special-purpose programming techniques including the application of one or more of the following SAS functions to resolve key identifier issues and to successfully search, merge, and join less than perfect or messy data:

● the family of CAT functions

● various data cleaning techniques

● user-defined validation techniques

● approximate string matching techniques

● an assortment of constructive programming techniques to standardize, combine, and transform data sets together

● the application of the SOUNDEX (for phonetic matching) algorithm

● the SPEDIS, COMPLEV, and COMPGED functionsthe use of SAS and Perl regular expression functions often offers a more compact solution to complicated string manipulation tasks such as when performing text matching. If you want to learn more, a great resource is Ron Cody’s book, SAS Functions by Example, Second Edition.

The examples presented in this chapter use three transaction data sets, Customers_with_messy_data, Manufacturers_with_messy_data, and Products_with_messy_data. The internal accounts department is sending us these transaction data sets so that we can add the data and all its content to each of our production data sets: Customers, Manufacturers, and Products. But, before adding the transaction data sets to our production data sets, our organization’s protocol requires us to first verify the cleanliness and accuracy of each data set.

After careful inspection, we find that each transaction data set contains data issues, including the existence of spelling errors, punctuation inconsistencies, and invalid values. Our analysis concludes the following information:

● Customers_with_messy_data (Figure 9.1) consists of 3 observations, a data structure of three variables: Custnum, a numeric variable; and Custname and Custcity, character variables. Several data issues are found, including spelling errors, punctuation inconsistencies, and invalid values.

● Manufacturers_with_messy_data (Figure 9.2) contains 2 observations and a data structure consisting of four variables: Manunum, a numeric variable; and Manuname, Manucity, and Manustat, character variables. Several data issues are found including, spelling errors, punctuation inconsistencies, and invalid values.

● Products_with_messy_data (Figure 9.3) contains 4 observations and a data structure consisting of five variables: Prodnum, Manunum, and Prodcost, numeric variables; and Prodname and Prodtype, character variables. Several data issues are found, including spelling errors, punctuation inconsistencies, and invalid values.

Figure 9.1: Customers_with_messy_data data set

Figure 9.2: Manufacturers_with_messy_data data set

Figure 9.3: Products_with_messy_data data set

To enable readers to work with these three tables (or data sets), I have included DATA steps so you can copy and paste the code and datalines to recreate the data sets for the purpose of following along with the examples.

Customers Table

data Customers;

input @1 Custnum 4.

@6 Custname $25.

@32 Custcity $20.;

datalines;

1901 Pacific Beach Metropolis Pacific Baech

2001 Solana Beach High Tech Solana Baech

2101 El Cajon Analytics Center La Kahone

;

run;

Manufacturers Table

data Manufacturers;

input @1 Manunum 4.

@6 Manuname $25.

@32 Manucity $20.

@52 Manustate $2.;

datalines;

800 21st Century Analytics Co Spring Valley ca

900 Absolute Best Apps Inc Spring Valley XA

;

run;

proc print data=Manufacturers;

run;

Products Table

data Products;

input @1 Prodnum 4.

@6 Prodname $25.

@32 Manunum 3.

@36 Prodtype $15.

@52 Prodcost 8.2;

format Prodcost Dollar10.2;

datalines;

5005 Analytics Software 500 Softwear 49900

5006 Storytelling Software 500 Softwares 39900

5007 Fuzzy Matching Software 500 Softwara 39900

5008 AI Software 500 Softwares 39900

;

run;

proc print data=Products;

run;

If you suspect that your data sets and files contain data issues and/or you want to learn how to prevent data issues such as spelling and punctuation errors, invalid values, and value inconsistencies from creeping into your production data sets, the steps shown in Figure 9.4 should be adhered to.

Figure 9.4: 6-step Fuzzy Matching Process

This first step determines whether any variables exist for matching purposes. Using PROC CONTENTS, PROC DATASETS, or metadata Dictionary tables, the contents of each transaction data set and a sampling of values are examined in greater detail to assess the severity of data issues that exist, as well as the distribution of data values for categorical variables.

PROC CONTENTS Code

PROC CONTENTS DATA=Customers_with_Messy_Data;

RUN;

PROC CONTENTS DATA=Manufacturers_with_Messy_Data;

RUN;

PROC CONTENTS DATA=Products_with_Messy_Data;

RUN;

Results

To derive a more accurate picture of the data sources, users should conduct some level of data analysis by identifying missing values, outliers, invalid values, minimum and maximum values, averages, value ranges, duplicate observations, distribution of values, and the number of distinct values a categorical variable contains. This important step provides an understanding of the data, while leveraging the data cleaning and standardizing activities that will be performed later. One of the first things data wranglers will want to do is explore the data using the SAS FREQ procedure, or an equivalent approach like Excel Pivot Tables.

PROC FREQ Code

PROC FREQ DATA=Customers_with_Messy_Data;

TABLES _ALL_ / NOCUM NOPERCENT MISSING;

RUN;

After reviewing the results, an assortment of data issues is found including data accuracy, inconsistent values, validation issues such as data type and range of values and capitalization versus mixed case, and incomplete (partial) data issues, as shown in the results below.

Results

Determining the number of distinct values a categorical variable has is critical to the fuzzy matching process. Acquiring this information helps everyone become more involved and have a better understanding of the number of distinct variable levels, the unique values, and the number of occurrences for developing data-driven programming constructs and elements (see Chapter 10, “Tuning for Performance and Efficiency”). The following SAS code provides us with the number of BY-group levels for each variable of interest we see in Figure 9.7.

PROC FREQ Code

TITLE "By-group NLevels in Customers_with_Messy_Data";

PROC FREQ DATA=Customers_with_Messy_Data NLEVELS;

RUN;

TITLE "By-group NLevels in Manufacturers_with_Messy_Data";

PROC FREQ DATA=Manufacturers_with_Messy_Data NLEVELS;

RUN;

TITLE "By-group NLevels in Products_with_Messy_Data";

PROC FREQ DATA=Products_with_Messy_Data NLEVELS;

RUN;

Results

Data cleaning, also called data cleansing or data scrubbing, is the process of identifying and fixing data quality issues including missing values, invalid character and numeric values, outlier values, value ranges, duplicate observations, and other anomalies found in data sets. SAS provides many powerful ways to perform data cleaning tasks. If you want to learn more, a great resource is Ron Cody’s book, Cody’s Data Cleaning Techniques Using SAS, Third Edition.

Use SAS Functions to Modify Data

SAS functions are an essential component of the Base SAS software. Representing a variety of built-in and callable routines, functions serve as the “work horses” in the SAS software providing users with “ready-to-use” tools designed to ease the burden of writing and testing often lengthy and complex code for a variety of programming tasks. The advantage of using SAS functions is evidenced by their relative ease of use, and their ability to provide a more efficient, robust, and scalable approach to simplifying a process or programming task.

It is sometimes necessary to concatenate fields when matching files, because the fields could be concatenated in one file while separate in another. SAS functions span many functional categories, and this paper focuses on those that are integral to the fuzzy matching process. The following is a list of alternative methods of concatenating strings and/or variables together.

● Use the STRIP function to eliminate leading and trailing blanks, and then concatenate the stripped fields using the concatenation operator, and insert blanks between the stripped fields.

● Use one of the following CAT functions to concatenate fields:

◦ CAT, the simplest of concatenation functions, joins two or more strings and/or variables together, end-to-end producing the same results as with the concatenation operator.

◦ CATQ is similar to the CATX function, but the CATQ function adds quotation marks to any concatenated string or variable.

◦ CATS removes leading and trailing blanks and concatenates two or more strings and/or variables together.

◦ CATT removes trailing blanks and concatenates two or more strings and/or variables together.

◦ CATX, perhaps the most robust CAT function, removes leading and trailing blanks and concatenates two or more strings and/or variables together with a delimiter between each.

Explore Data Issues with PROC FORMAT and PROC SQL

Problems with inaccurately entered data often necessitate time-consuming validation activities. A popular technique used by many to identify data issues is to use the FORMAT procedure. In the next example, a user-defined format called, $Prodtype_Validation, is created using PROC FORMAT, and a PROC SQL query is used to identify and display data issues associated with the values found in the Prodtype variable.

PROC FORMAT and PROC SQL Query

PROC FORMAT LIBRARY=WORK;

VALUE $Prodtype_Validation

'Laptop' = 'Laptop'

'Phone' = 'Phone'

'Software' = 'Software'

'Workstation' = 'Workstation'

Other = 'ERROR - Invalid Prodtype';

RUN;

PROC SQL;

TITLE "Validation Report for Products_with_messy_data Prodtype Variable";

SELECT Prodnum,

Prodname,

Manunum,

Prodtype,

Prodtype FORMAT=$Prodtype_Validation.,

Prodcost

FROM Products_with_messy_data;

QUIT;

Results

Once the invalid Prodtype categories are identified with the validation report, users have the option of using one or more data cleaning techniques to manually correct the data, automate the process, or apply fuzzy matching techniques to correct (or handle) each invalid product type category.

Here are a few additional things to consider using during the data cleaning process.

Add Categories, if Available, to the Start of the Name

Adding categories can help eliminate matches that might occur if two businesses in the same general geographic area have the same name. For example: “Johnson’s Best” could describe a hardware store, a restaurant, or another type of business. By adding a variable that contains the industry type (e.g., Agriculture, Bank, Restaurant, Hospital, Hotel, etc.) could prevent mismatches from occurring.

Remove Special or Extraneous Characters

Punctuation can differ even when names or titles are the same. Therefore, consider removing the following characters: ‘ “ & ? – from product names, company names, addresses, and other character values. For example, “Del Mar’s Tech Center” and “Del Mar Tech Center” most likely refers to the same customer name in the Customers data set even though the former contains an apostrophe and the latter does not.

Put All Characters in Uppercase Notation and Remove Leading Blanks

Different databases could have different standards for capitalization, and some character strings can be copied in with leading blanks. As found in our example data sets the value contained in the Title variable can be stored as all lowercase, uppercase, or in mixed-case which can impact the success of traditional merge and join matching techniques. Consequently, to remedy the issues associated with case and leading blanks, consider using the STRIP function to remove leading and trailing blanks along with the UPCASE function to convert all character values to uppercase.

Remove Words that Might or Might not Appear in Key Fields

Commonly used words in language, referred to as stop words, are frequently ignored by many search and retrieval processes. Stop words are classified as irrelevant and, as a result, are inserted into stop lists and are ignored. Examples include The, .com, Inc, LTD, LLC, DIVISION, CORP, CORPORATION, CO., and COMPANY. Some database tables might include these, while others might not.

Choose a Standard for Addresses and Phone Numbers

Address values can present a challenge when analyzing and processing data sources. To help alleviate comparison issues, decide whether to use Avenue or Ave, Road or Rd, Street or St, etc, and then convert the address values accordingly or create a user-defined lookup process using PROC FORMAT to match the standard values.

Phone numbers could be standardized to remove “,“ and “–“ to convert them to digits and validated using the ALLDIGITS() function (and similar functions) as well as for the valid number of digits.

Normalize ZIP Codes when Matching Addresses and Use Geocodes when Available

Another useful technique is to remove the last 4 digits of 9-digit ZIP codes, because some files might have only 5-digit ZIP codes. Since some files might have ZIP codes as numeric fields, and other files might have ZIP codes as character fields, be sure to include leading zeros. For example, ZIP codes with a leading zero, as in 04101, would appear in a numeric field as 4101 requiring the leading zero to be inserted along with the specification of a Z5. informat and format being assigned to the ZIP code variable.

If working with US ZIP codes, make sure they are all numeric. This may not apply for other countries. One common mistake to watch for is when state codes or abbreviations, such as Canada, with abbreviation CA, are put in as the state CA (California) instead of the country CA (Canada). Since Canada has an alphanumeric 6-character ZIP code, this, hopefully, will be caught when checking for numeric ZIP codes.

If the user has access to geocodes, or if they are in the input data, geocodes can provide a further level of validation in addition to the ZIP codes.

Specify the DUPOUT=, NODUPRECS, or NODUPKEYS Options

A popular and frequently used procedure, PROC SORT, identifies and removes duplicate observations from a data set. By specifying one or more of the SORT procedure’s three options: DUPOUT=, NODUPRECS, and NODUPKEYS, users are able to control how duplicate observations are identified and removed.

PROC SORT’s DUPOUT= option is often used to identify duplicate observations before removing them from a data set. A DUPOUT= option, often specified when a data set is too large for visual inspection, can be used with the NODUPKEYS or NODUPRECS options to review a data set that contains duplicate keys or duplicate observations. In the next example, the DUPOUT=, OUT= and NODUPKEY options are specified to identify duplicate keys. The NODUPKEY option removes observations that have the same key values, so that only one remains in the output data set. The PROC SORT is followed by PROC SQL queries so the results can be displayed and examined.

PROC SORT and PROC SQL Code

PROC SORT DATA=Products_with_Messy_Data /* Input data set */

DUPOUT=Products_Dupout_NoDupkey /* Data set containing dups */

OUT=Products_Sorted_Cleaned_NoDupkey /* Data set with dups removed */

NODUPKEY;

BY Prodname; /* Key */

RUN;

PROC SQL;

TITLE “Observations Slated for Removal”;

SELECT *

FROM Products_Dupout_NoDupkey;

TITLE “Cleaned Movies Data Set”;

SELECT *

FROM Movies_Sorted_Cleaned_NoDupkey;

QUIT;

The NODUPKEY option retains only one observation from any group of observations with duplicate keys. When observations with identical key values are not adjacent to each other, users may first need to specify the NODUPKEY or NODUPKEYS option and sort the data set by all the variables (BY _ALL_ ;) to ensure the observations are in the correct order to remove all duplicates (SAS Usage Note 1566, 2000).

Although the removal of duplicates using PROC SORT is a popular technique among many SAS users, an element of care should be given to using this method when processing large data sets. Since sort operations can often be CPU-intensive, it is recommended to compare PROC SORT to procedures like PROC SQL with the SELECT DISTINCT keyword and/or SAS PROC SUMMARY with the CLASS statement to determine the performance impact of one method versus another.

Data transformations can be necessary to compare files. Data set structures are sometimes not in the desired format and might need to be converted from wide to long or long to wide, and might need to be reconciled by having their variables grouped in different ways. When a data set’s structure and data are transformed, it is typically recommended that a new data set be created from the original one.

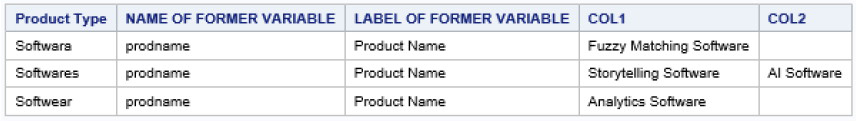

PROC TRANSPOSE is handy for restructuring data in a data set, and is typically used in preparation for special types of processing like array processing. In its simplest form, data can be transformed with or without grouping. In the next example, the Products data set is first sorted in ascending order by the variable Prodtype then the sorted data set is transposed using the Prodtype variable as the BY-group variable. The results show the product names transformed within each product type.

PROC TRANSPOSE Code

PROC SORT DATA=Products_with_Messy_Data

OUT=work.Products_Sorted;

BY Prodtype; /* BY-Group to Transpose */

RUN;

PROC TRANSPOSE DATA=work.Products_Sorted

OUT=work.Products_Transposed;

VAR Prodname; /* Variable to Transpose */

BY Prodtype; /* BY-Group to Transpose */

RUN;

PROC SQL;

SELECT *

FROM work.Products_Transposed;

QUIT;

Results

In an age of endless spreadsheets, text files, apps, and relational database management systems (RDBMS), it’s unusual to find a single spreadsheet, CSV file, table, or data set that contains all the data needed to answer an organization’s questions. Today’s data exists in many forms and all too often involves matching two or more data sources to create a combined file. The matching process typically involves combining two or more data sets, spreadsheets, and/or files possessing a shared, common and reliable, identifier (or key) to create a single data set, spreadsheet, and/or file. The matching process, illustrated in Figure 9.5, shows two tables, Manufacturers and Products, with a key, Manunum, to combine (or join) the two tables together.

Figure 9.5: Matching Process

Since we are trying to match entries that have an exact match, we can save processing time by immediately eliminating the observations (or rows) with missing key information. This can be accomplished in a number of ways, including constructing PROC SQL join queries to bypass processing observations with missing manufacturer and/or product information. Once missing observations with missing keys are eliminated, the focus can then be turned to processing observations that have exact matches on name, address, company name, and as with our example data sets, the Manunum variable.

PROC SQL Code

PROC SQL;

CREATE TABLE Manufacturers_Products_Matches AS

SELECT M.Manunum,

M.Manuname,

M.Manucity,

M.Manustat,

P.Prodnum,

P.Prodname,

P.Prodtype,

P.Prodcost

FROM Manufacturers M,

Products P

WHERE M.Manunum = P.Manunum

AND P.Prodname NE “”;

TITLE Matched Observations;

SELECT * FROM Manufacturers_Products_Matches;

QUIT;

Results

As we’ve seen, when a shared and reliable key exists between input data sources, the matching process is fairly uncomplicated. But when a shared key associated with the various input data sources is nonexistent, inexact, or unreliable, the matching process often becomes more involved and problematic. Stephen Sloan and Dan Hoicowitz suggest that special processes are needed to successfully match the names and addresses from different files when they are similar, but not exactly the same. In a constructive and systematic way, Lafler and Sloan, describe their six-step approach to cleaning data and performing fuzzy matching processes.

Once the data has been cleaned and transformed, a variety of fuzzy matching techniques are available for use. These techniques are designed to be used in a systematic way when a reliable key between data sources is nonexistent, inexact, or unreliable.

Fuzzy matching techniques are available with most, if not all, the leading software languages including R, Python, Java, and others. SAS offers four techniques to help make fuzzy matching easier and more effective: the SOUNDEX algorithm and the SPEDIS, COMPLEV, and COMPGED functions.

Soundex Algorithm

The Soundex (phonetic matching) algorithm involves matching files on words that sound alike. As one of the earliest fuzzy matching techniques, Soundex was invented and patented by Margaret K. Odell and Robert C. Russell in 1918 and 1922 to help match surnames that sound alike. It is limited to finding phonetic matches and adheres to the following rules when performing a search:

● Ignores case (case insensitive)

● Ignores embedded blanks and punctuation marks

● Is better at finding English-sounding names

Although the Soundex algorithm does a fairly good job with English-sounding names, it frequently falls short when dealing with the multitude of data sources found in today’s world economy where English and non-English sounding names are commonplace. It also has been known to miss similar-sounding surnames like Rogers and Rodgers while matching dissimilar surnames such as Smith, Snthe, and Schmitt.

So, how does the Soundex algorithm work? As implemented, SAS determines whether a name (or a variable’s contents) sounds like another by converting each word to a code. The value assigned to the code consists of the first letter in the word followed by one or more digits. Vowels, A, E, I, O, and U, along with H, W, Y, and non-alphabetical characters do not receive a coded value and are ignored. Double letters (e.g., ‘TT’) are assigned a single code value for both letters. The codes derived from each word conform to the letters and values are found in Table 9.1.

Table 9.1: Soundex Algorithm Rules

|

Letter |

Value |

|

B, P, F, V |

1 |

|

C, S, G, J, K, Q, X, Z |

2 |

|

D, T |

3 |

|

L |

4 |

|

M, N |

5 |

|

R |

6 |

The general syntax of the Soundex algorithm takes the form of:

Variable =* “character-string”

The following example illustrates how the customer city, “Pacific Beach”, is assigned a Soundex value. P has a value of 1 but is retained as P, A is ignored, C is assigned a value of 2, I is ignored, F is assigned a value of 1, I is ignored, C is assigned a value of 2, B is assigned a value of 1, E is ignored, A is ignored, C is assigned a value of 2, and H is ignored. The converted code of P21212 for “Pacific Beach” is then matched with any other customer city name that has the same assigned code.

In the next example, the Soundex algorithm is illustrated using the =* operator in a PROC SQL step with a WHERE clause to find a similar sounding customer city of “Solana Beach”, in the Customers_with_messy_data data set.

PROC SQL Code with SOUNDEX Algorithm

PROC SQL;

SELECT *

FROM Customers_with_messy_data

WHERE Custcity =* "Solana Beach";

QUIT;

The result from the SOUNDEX algorithm selected “Solana Baech” which has the same derived Soundex value as “Solana Beach”.

Results

SPEDIS Function

The SPEDIS, or Spelling Distance, function measures how close one word is to another word when it comes to spelling. By translating a keyword into a query, the SPEDIS function returns a value that can be used to determine the spelling distance between two words. Because the SPEDIS function evaluates numerous scenarios, users may experience varying performance issues in comparison to other fuzzy matching techniques such as the COMPLEV and COMPGED functions, particularly when using it with long strings.

The SPEDIS function evaluates query and keyword arguments returning nonnegative spelling distance values. A derived value of zero indicates an exact match. Generally, derived values are less than 100, but never greater than 200. It is recommended that when using the SPEDIS function for matching tasks to specify spelling distance values greater than zero and in increments of 10 (e.g., 10, 20, etc.).

So, how does the SPEDIS function work? As implemented, the SPEDIS function determines whether two names (or variables’ contents) are alike by computing an asymmetric spelling distance between two words. Before the SPEDIS function returns the distance between a query and a keyword, it removes trailing blanks. A derived value of 0 is returned if the keyword exactly matches the query. SPEDIS assigns costs (or penalty points) for each operation that is required to convert the keyword to the query. For example, when the first letter incorrectly matches then more points are assigned than when other letters do not match. Once the total costs have been computed, the resulting value represents a percentage of the length of the first argument. Table 9.2 illustrates the costs corresponding to the operations performed by the SPEDIS function.

Table 9.2: SPEDIS Cost Rules

|

Operation |

Cost |

Description |

|

|

Match |

0 |

No change |

|

|

Singlet |

25 |

Delete one of the double letters |

|

|

Doublet |

50 |

Double a letter |

|

|

Swap |

50 |

Reverse the order of two consecutive letters |

|

|

Truncate |

50 |

Delete a letter from the end |

|

|

Append |

35 |

Add a letter to the end |

|

|

Delete |

50 |

Delete a letter from the middle |

|

|

Insert |

100 |

Insert a letter in the middle |

|

|

Replace |

100 |

Replace a letter in the middle |

|

|

Firstdel |

100 |

Delete the first letter |

|

|

Firstins |

200 |

Insert a letter at the beginning |

|

|

Firstrep |

200 |

Replace the first letter |

|

The general syntax of the SPEDIS function takes the form of:

SPEDIS (query, keyword)

In this example, a simple PROC SQL query with a WHERE clause and CALCULATED keyword is specified to capture and show the observations derived by the SPEDIS function for finding exact matches for the customer city, “Solana Beach”.

PROC SQL Code with SPEDIS Function

PROC SQL;

TITLE “SPEDIS Function Matches”;

SELECT *,

SPEDIS(Custcity,“Solana Beach”) AS Spedis_Value

FROM Customers_with_messy_data

WHERE CALCULATED Spedis_Value LE 10;

QUIT;

The result from the SOUNDEX algorithm example is displayed below. The observation associated with “Solana Baech” was selected as a match for “Solana Beach” and derived a SPEDIS value of 4. This result is the same as what was selected by the SOUNDEX algorithm.

Results

In the next example, a PROC SQL query with a WHERE clause and CALCULATED keyword is specified to capture and display the observations derived by the SPEDIS function for the manufacturer state, “CA”, in the Manufacturers_with_messy_data data set.

PROC SQL Code with SPEDIS Function

PROC SQL;

TITLE "SPEDIS Function Matches";

SELECT *,

SPEDIS(Manustat,"CA") AS Spedis_Value

FROM Manufacturers_with_messy_data

WHERE CALCULATED Spedis_Value GE 0;

QUIT;

Results

The result from the SOUNDEX algorithm example is displayed below. The observations associated with “ca” and “XA” were selected for “CA” with the derived SPEDIS values of 150 and 100, respectively.

COMPLEV Function

The COMPLEV, or Levenshtein Edit Distance, function is another fuzzy matching SAS technique. COMPLEV counts the minimum number of single-character insert, delete, or replace operations needed to determine how close two strings are. Unlike the SPEDIS function and COMPGED function (discussed in the next section), the COMPLEV function

assigns a score for each operation and returns a value indicating the number of operations. The general syntax of the COMPLEV function takes the form of:

COMPLEV ( string-1, string-2 <, cutoff-value> <, modifier> )

Required Arguments:

string-1 specifies a character variable, constant or expression.

string-2 specifies a character variable, constant or expression.

Optional Arguments:

cutoff-value specifies a numeric variable, constant or expression. If the actual Levenshtein edit distance is greater than the value of cutoff, the value that is returned is equal to the value of cutoff.

modifier specifies a value that alters the action of the COMPLEV function. Valid modifier values are:

● i or I Ignores the case in string-1 and string-2.

● l or L Removes leading blanks before comparing the values in string-1 or string-2.

● n or N Ignores quotation marks around string-1 or string-2.

● : (colon) Truncates the longer of string-1 or string-2 to the length of the shorter string.

In the example below, the COMPLEV function determines the best possible match for the Custcity value, “Solana Beach”, in the Customers_with_messy_data data set. The COMPLEV_Number column displays the number of operations that have been performed. The lower the value the better the match (e.g., 0 = Best match, 1 = Next Best match, etc.). The search argument of, “Solana Baech”, produces a derived COMPLEV_Number value of 2.

PROC SQL Code with COMPLEV Function

PROC SQL;

SELECT *,

COMPLEV(Custcity,"Solana Beach") AS COMPLEV_Number

FROM Customers_with_messy_data

ORDER BY Custnum;

QUIT;

The output below shows the derived values for the Levenshtein Edit Distance for different spelling variations for, “Solana Beach”, in the column, Custcity.

Results

In the next example, the COMPLEV function’s computed value is limited to 1 or less using a WHERE clause. The results show the observation associated with the product type, “Software”.

PROC SQL Code with COMPLEV Function

PROC SQL;

SELECT *,

COMPLEV(Prodtype,"Software") AS COMPLEV_Number

FROM Products_with_messy_data

WHERE CALCULATED COMPLEV_Number LE 1

ORDER BY Prodname;

QUIT;

Results

In the next example, the COMPLEV function has a modifier value of “INL” to ignore the case, remove leading blanks, and ignore quotation marks around string-1 and string-2 and a value for the COMPLEV_Score of 0 (perfect match). The results show the observation associated with the Manustat, “CA” in the argument for string-1 matches the value of “ca” in the argument for string-2.

PROC SQL Code with COMPLEV Function and Arguments

PROC SQL;

TITLE "COMPLEV Function Matches";

SELECT *,

COMPLEV(Manustat,"CA",”INL”) AS COMPLEV_Score

FROM Manufacturers_with_messy_data

WHERE CALCULATED COMPLEV_Score = 0;

QUIT;

Results

COMPGED Function

The COMPGED function is another fuzzy matching technique that is facilitated as a SAS function. It works by computing a Generalized Edit Distance (GED) score when comparing two text strings. The Generalized Edit Distance score is a generalization of the Levenshtein edit distance, which is a measure of dissimilarity between two strings where the edit distance derived as the number of operations (deletions, insertions, or replacements) of a single character to transform string-1 into string-2. In Sloan and Hoicowitz’s paper, “Fuzzy Matching: Where Is It Appropriate and How Is It Done? SAS Can Help,” they describe using the COMPGED function to match data sets with unreliable identifiers (or keys), the higher the GED score the less likely the two strings match. Conversely, for the greatest likelihood of a match with the COMPGED function users should seek the lowest derived score from evaluating all the possible ways of comparing the two strings, string-1 with string-2.

The COMPGED function returns values that are multiples of 10, e.g., 20, 100, 200, etc. Based on experience, a COMPGED score of 100 or less often translates to valid matches for the comparison that they are performing. The COMPGED function compares two character strings, along with optional parameters to control case-sensitive scenarios, leading blanks, the use of quotation marks, and the handling of strings with unequal lengths. The general syntax of the COMPGED function takes the form of:

COMPGED ( string-1, string-2 <, cutoff-value> <, modifier> )

Required Arguments:

string-1 specifies a character variable, constant or expression.

string-2 specifies a character variable, constant or expression.

Optional Arguments:

cutoff-value specifies a numeric variable, constant or expression. If the actual generalized edit distance is greater than the value of cutoff, the value that is returned is equal to the value of cutoff.

modifier specifies a value that alters the action of the COMPGED function. Valid modifier values are:

● i or I Ignores the case in string-1 and string-2.

● l or L Removes leading blanks before comparing the values in string-1 or string-2.

● n or N Ignores quotation marks around string-1 or string-2.

● : (colon) Truncates the longer of string-1 or string-2 to the length of the shorter string.

Table 9.3 shows the different point values that COMPGED assigns for changes from one character string to another.

Table 9.3: COMPGED Scoring Algorithm

|

Operation |

Default Cost in Units |

Description of Operation |

|

APPEND |

50 |

When the output string is longer than the input string, add any one character to the end of the output string without moving the pointer. |

|

BLANK |

10 |

Do any of the following: |

|

Add one space character to the end of the output string without moving the pointer. |

||

|

When the character at the pointer is a space character, advance the pointer by one position without changing the output string. |

||

|

When the character at the pointer is a space character, add one space character to the end of the output string, and advance the pointer by one position. |

||

|

If the cost for BLANK is set to zero by the COMPCOST function, the COMPGED function removes all space characters from both strings before doing the comparison. |

||

|

DELETE |

100 |

Advance the pointer by one position without changing the output string. |

|

DOUBLE |

20 |

Add the character at the pointer to the end of the output string without moving the pointer. |

|

FDELETE |

200 |

When the output string is empty, advance the pointer by one position without changing the output string. |

|

FINSERT |

200 |

When the pointer is in position one, add any one character to the end of the output string without moving the pointer. |

|

FREPLACE |

200 |

When the pointer is in position one and the output string is empty, add any one character to the end of the output string, and advance the pointer by one position. |

|

INSERT |

100 |

Add any one character to the end of the output string without moving the pointer. |

|

MATCH |

0 |

Copy the character at the pointer from the input string to the end of the output string, and advance the pointer by one position. |

|

PUNCTUATION |

30 |

Do any of the following: |

|

Add one punctuation character to the end of the output string without moving the pointer. |

||

|

When the character at the pointer is a punctuation character, advance the pointer by one position without changing the output string. |

||

|

When the character at the pointer is a punctuation character, add one punctuation character to the end of the output string, and advance the pointer by one position. |

||

|

REPLACE |

100 |

Add any one character to the end of the output string, and advance the pointer by one position. |

|

SINGLE |

20 |

When the character at the pointer is the same as the character that follows in the input string, advance the pointer by one position without changing the output string. |

|

SWAP |

20 |

Copy the character that follows the pointer from the input string to the output string. Then copy the character at the pointer from the input string to the output string. Advance the pointer two positions. |

|

TRUNCATE |

10 |

When the output string is shorter than the input string, advance the pointer by one position without changing the output string. |

Although the COMPGED function provides users with a robust and comprehensive approach to performing searches and/or matches, some users still resort to using traditional data transformation methods. In the example below, traditional WHERE clause logic with the UPCASE function is specified to perform a concatenation of two queries by indicating the matching criteria. As the results show, the WHERE clause permits both data sets to be concatenated, but the results fail to collapse (or consolidate) the values for the categorical variable, Prodtype, to the value, “Software”.

PROC SQL Code with Traditional WHERE clause Logic

PROC SQL;

SELECT *

FROM Products

WHERE UPCASE(Prodtype) IN ("SOFTWARE","SOFTWEAR","SOFTWARA","SOFTWARES")

OUTER UNION CORRESPONDING

SELECT *

FROM Products_with_messy_data

WHERE UPCASE(Prodtype) IN ("SOFTWARE","SOFTWEAR","SOFTWARA","SOFTWARES");

QUIT;

Results

To address the shortcomings of the preceding example, the next example specifies a UNION set operator, a CASE expression, and a WHERE clause with an UPCASE function to concatenate and consolidate “Software” products from the Products and Products_with_messy_data data sets. As the code and results suggest, a UNION set operator and a WHERE clause concatenates and consolidates both data sets on “Software” products.

PROC SQL Code with CASE Expressions and WHERE clause Logic

PROC SQL;

CREATE TABLE Products_Concatenated AS

SELECT Prodnum, Prodname, Manunum, Prodcost,

CASE

WHEN UPCASE(Prodtype) IN ("SOFTWARE","SOFTWEAR","SOFTWARA","SOFTWARES")

THEN "Software"

ELSE "ERROR – Prodtype Unknown"

END AS Prodtype

FROM Products

WHERE UPCASE(Prodtype) IN ("SOFTWARE","SOFTWEAR","SOFTWARA","SOFTWARES")

UNION

SELECT Prodnum, Prodname, Manunum, Prodcost,

CASE

WHEN UPCASE(Prodtype) IN ("SOFTWARE","SOFTWEAR","SOFTWARA","SOFTWARES")

THEN "Software"

ELSE "ERROR – Prodtype Unknown"

END AS Prodtype

FROM Products_with_messy_data

WHERE UPCASE(Prodtype) IN ("SOFTWARE","SOFTWEAR","SOFTWARA","SOFTWARES");

SELECT * FROM Products_Concatenated;

QUIT;

Results

In the next example, the COMPGED function is specified to determine the best possible match for the Custcity value, “Solana Beach”, found in the Customers_with_messy_data data set. As the code and results suggest, the lower the value of the COMPGED_Score the better the match (e.g., 0 = Best match, 10 = Next Best match, etc.). The search argument, “Solana Baech”, produces a derived COMPGED_Score of 20.

PROC SQL Code with COMPGED Function

PROC SQL;

SELECT *,

COMPGED(Custcity,"Solana Beach") AS COMPGED_Score

FROM Customers_with_messy_data

ORDER BY Custnum;

QUIT;

The results show the derived COMPGED_Score values for the spelling variation of “Solana Baech” in the column Custcity.

Results

In the next example, the COMPGED function is specified to determine the best possible match for the Prodtype value, “Software”, in the Products_with_messy_data data set. As the code and Figure 20 suggests, the lower the value of the COMPGED_Score the better the match (e.g., 0 = Best match, 10 = Next Best match, etc.). The search argument, “Software”, produces derived scores for COMPGED_Score of 50, 100 and 200.

PROC SQL Code with COMPGED Function

PROC SQL;

SELECT *,

COMPGED(Prodtype,"Software") AS COMPGED_Score

FROM Products_with_messy_data

ORDER BY Prodname;

QUIT;

The results show the derived COMPGED_Score values for the different spelling variations associated with “Software” in the column Prodtype.

Results

The next example specifies a CASE expression, a COMPGED function with the “INL” argument, a UNION set operator, and a WHERE clause to restrict the results of the concatenated and consolidated “Software” Products and Products_with_messy_data data sets to a COMPGED score of no more than 200. As the code and results suggest, the specification of the UNION set operator with the WHERE clause concatenates and consolidates “Software” products from both data sets.

PROC SQL Code with CASE Expression, COMPGED Function, and WHERE clause Logic

PROC SQL;

CREATE TABLE Products_Concatenated AS

SELECT Prodnum, Prodname, Manunum, Prodcost,

CASE

WHEN COMPGED(Prodtype,"Software","INL") LE 200 THEN "Software"

ELSE "ERROR – Prodtype Unknown"

END AS Prodtype

FROM Products

WHERE COMPGED(Prodtype,"Software","INL") LE 200

UNION

SELECT Prodnum, Prodname, Manunum, Prodcost,

CASE

WHEN COMPGED(Prodtype,"Software","INL") LE 200 THEN "Software"

ELSE "ERROR – Prodtype Unknown"

END AS Prodtype

FROM Products_with_messy_data

WHERE COMPGED(Prodtype,"Software","INL") LE 200;

SELECT * FROM Products_Concatenated;

QUIT;

Results

CALL COMPCOST Routine

Readers are also able to set the costs for each operation performed by the COMPGED function using the CALL COMPCOST routine. In the next example, the default costs for each operation performed by the COMPGED function are displayed. But using a DATA step, we can assign our own costs for any operation. In the DATA step, we execute the CALL COMPCOST routine to assign costs to three operations : Insert, Delete, and Replace. Finally, we display the results using PROC SQL.

CALL COMPCOST and PROC SQL Code

PROC SQL;

SELECT *,

COMPGED(Custcity,"Solana Beach") AS COMPGED_Score

FROM Customers_with_messy_data

ORDER BY Custnum;

QUIT;

data compcost;

set Customers_with_messy_data;

if _n_=1 then CALL COMPCOST('insert=',10,'delete=',11,'replace=', 12);

Compged_Score = COMPGED(Custcity,"Solana Beach");

run;

PROC SQL;

SELECT *

FROM compcost

ORDER BY Custnum;

QUIT;

Results

As you can see, the derived costs are different in each output result. The first set of results illustrates the default costs derived for operations performed with the COMPGED function. The second set of results illustrates the revised costs defined with the CALL COMPCOST routine and performed with the COMPGED function.

Use the Lower Score

For those fuzzy matching techniques that are not commutative (it matters which data set is placed first and which is placed second), use the lower score that results from the different sequences.

Validation

As can be seen when comparing the SOUNDEX and SPEDIS methods, and when looking at the results of COMPLEV and COMPGED, these methods worked well on a test data set that was designed to illustrate the results. It should be noted that the COMPLEV function is best used when comparing simple strings where data sizes and/or speed of comparison is important, such as when working with large data sets. It should also be noted that the COMPGED function generally requires more processing time to complete because of its more exhaustive and thorough capabilities.

Stephen Sloan and Dan Hoicowitz conducted research on 50,000 business names to manually identify fuzzy matches using the SAS COMPGED function. The intent of their study was to identify false negatives by looking at an alphabetic sort of the business names. From the extracted test files the researchers identified false positives. Finally, the conditions that were specified in the COMPGED function were repeated until the false positives and false negatives were significantly reduced. These conditions then became part of the fuzzy matching process and achieved improved results efficiently and on demand.

When data originating from multiple sources contains duplicate observations, duplicate and/or unreliable keys, missing values, invalid values, capitalization and punctuation issues, inconsistent matching variables, and imprecise text identifiers, the matching process is often compromised by unreliable and/or unpredictable results. This chapter demonstrates a six-step approach including identifying, cleaning and standardizing data irregularities, conducting data transformations, and utilizing special-purpose programming techniques such as the application of SAS functions, the SOUNDEX algorithm, the SPEDIS function, approximate string matching functions including COMPLEV and COMPGED, and an assortment of constructive programming techniques to standardize and combine data sets together when the matching columns are unreliable or less than perfect.