CHAPTER 2

.NET Practice Areas

This chapter is about identifying the sources of better .NET practices. The focus is on the areas of .NET development and general software development that provide an opportunity to discover or learn about better practices. Think of this chapter as providing a large-scale map that gives you the basic geography. Broadly speaking, the sources of better practices include:

- Understanding problems, issues, defects, or breakdowns

- Looking at the interconnected events and behaviors of development

- Finding sources of appropriate patterns, conventions, and guidance

- Applying tools and technologies

- Exploring new ideas, innovations, and research

There are .NET practice areas at the forefront of establishing new and widely used tools and technologies for better practices. The list includes automated testing, continuous integration, and code analysis. These practice areas are so important that this book devotes a chapter to each topic:

- Automated testing is covered in Chapter 8.

- Continuous integration is covered in Chapter 10.

- Code analysis is covered in Chapter 12.

This chapter brings up .NET practice areas that are important and warrant further exploration. Hopefully, you get enough information to plan and prepare a deeper investigation into the .NET practice areas that are beyond the scope of this book. There are resources and references listed in Appendix A.

There is more to selecting, implementing, and monitoring practices than can be explained in one chapter. In subsequent chapters, the significance of focusing on results, quantifying value, and strategy is discussed, as follows:

- Delivering, accomplishing, and showing positive outcomes is covered in Chapter 3.

- Quantifying the value of adopting better practices is covered in Chapter 4.

- Appreciating the strategic implications of better practices is covered in Chapter 5.

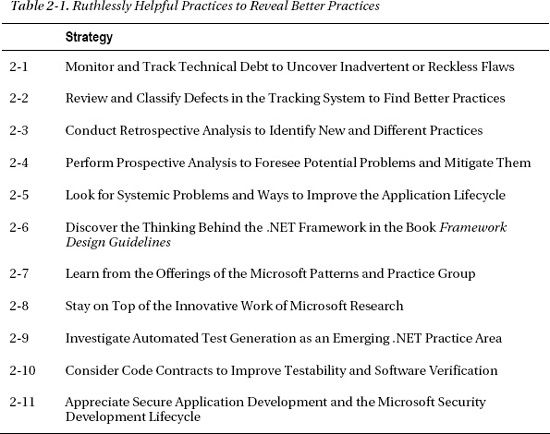

The discussion of each area covered in this chapter draws out ways to uncover better practices in that area. Where it does, the recommended practice is highlighted and stated directly. All of the recommended practices in this chapter are summarized in Table 2-1. These are ruthlessly helpful practices that reveal new and different practices; they should uncover practices that are specific, realistic, practical, and helpful to you, your team, and your organization.

Internal Sources

The problems, issues, defects, or breakdowns that your current team, project, or organization is experiencing are a gold mine of new and different practices. These internal sources are usually a lot more valuable than external sources because a suggested practice is

- Undeniably relevant: The practice is important; it is of interest

- Well-timed: The practice is urgently needed because the issues are recent

- Acceptable: The practice is fitting or home-grown because it matches the situation

Better practices that derive from internal sources face less opposition and fewer debates because the need is backed up by the value of the source itself. For example, if the source is a defect tracking system, then the better practice is as important as reducing defects is to the organization. This significantly lowers the barriers to adoption. The discussion can then focus on the effectiveness of the new and different practice. After some time, the practice is measurable because the internal source ought to show the improvement that comes from the better practice. If the defect tracking system is the source, then a better practice lowers the number of defects in some measurable way. Specific practices that relate to quantifying value with internal sources are presented and discussed in Chapter 4.

____________________

1 Krzysztof Cwalina and Brad Abrams, Framework Design Guidelines: Conventions, Idioms, and Patterns for Reusable .NET Libraries, 2nd Edition (Upper Saddle River, NJ: Addison-Wesley Professional, 2008).

Technical Debt

The “technical debt” metaphor was created by Ward Cunningham as a way to understand and track the intentional and unintentional design and coding shortcomings of software.2 We take on technical debt when we oversimplify a design or recklessly create a flawed design. We take on technical debt when we wisely or unwisely write code that does not work as well as it should. Taking this metaphor from the financial world, debt is one of the ways to finance an organization. It can provide the cash needed to make payroll. It provides the organization an opportunity to buy things like furniture and equipment. If the payments on interest and principal are well managed then the organization can properly handle the costs of financial debt. The same is true for technical debt. With technical debt, the “interest payments” are the added effort and frustration caused by the inadequacies of the current design and source code. In this metaphor, the “principal payments” are the effort needed to go back and remediate or improve the design and source code.

Some projects take on technical debt intelligently and deliberately. On these projects the technical debt often serves a useful purpose, and the consequence of the debt is understood and appreciated. On other projects the technical debt is recklessly accumulated, usually without regard to the consequences. Examining how your project accumulates and deals with technical debt is an excellent way to find new and different practices that improve the situation. In fact, the technical debt metaphor itself is a good way to get the team focused on following better practices.

Not all technical debt is created equal. There are minor design flaws that have little impact and there are major design flaws that require huge amounts of rework. For example, if localization is an ignored requirement, retrofitting tens of thousands of lines of code late in the project can be an expensive and error-prone effort.

It is useful to recognize that there are two distinct types of technical debt:

- Design debt: This can result from prudent decision-making, underappreciated requirements, inadequate architecture, or hurried development. Often the design limitations will eventually need to be addressed.

- Code debt: This can result from demanding circumstances, temporary simplification, imprudence, or hastiness that finds its way into the source code. Often the code should be reworked and improved.

Take some time to review the designs that you and your team create. Do the designs have flaws that need to be dealt with? What new and different practices should your team adopt to prevent or remediate these inadequate designs? Select better practices that ensure design debt is not accumulating without intention and that design debt is paid off before the consequences are disastrous.

Review the source code that you or your team is writing. Does the code need to be reworked or improved? What new and different practices should your team adopt to write better code? Select better practices that help the team to produce better code.

Practice 2-1 Monitor and Track Technical Debt to Uncover Inadvertent or Reckless Flaws

____________________

2 For more information see http://c2.com/cgi/wiki?TechnicalDebt.

A specific practice that helps deal with technical debt is to capture the technical debt as it accumulates. This raises awareness and goes a long way toward preventing inadvertent and reckless debt.3 In addition, the team should use the information to make plans to pay down the technical debt as the project proceeds. With this information the team can take a principled stand against proceeding under a heavy burden of technical debt that is causing delay and jeopardizing the quality of the software. Monitoring and analyzing technical debt is an excellent way to find and adopt better ways of delivering software.

Defect Tracking System

In general, the defect tracking system provides feedback from testers and customers that indicates that there are problems. This is a rich source for improvements to the overall development process. Examining the defect tracking system often reveals a number of common problems that imply deficient practices:

- Requirements that are poorly defined or understood

- Designs that are inadequate or incomplete

- Modules that are error-prone and need to be redesigned or rewritten

- Source code that is difficult to understand and maintain

- Defect reports that are incomplete or unclear

- Customer issues that are hard to reproduce because of missing detail

Practice 2-2 Review and Classify Defects in the Tracking System to Find Better Practices

It is not uncommon to find clusters of defects in the tracking system that relate to the same feature, module, or code file. These defects are the result of something not having been done properly. Ideas and support for new and different practices come from assembling those defects and making the case for a better practice. For example, if a large number of defects is caused by unclear or incomplete requirements then better requirements analysis and more design work are indicated. Improving the understanding and thoroughness of requirements goes a long way toward eliminating this source of defects.
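As a minimal sketch of how such clusters might be surfaced, assume the defects have been exported from the tracking system into simple objects. The `Defect` class, its `Module` and `Cause` properties, and the `DefectReview.FindClusters` helper are hypothetical names used only for illustration; they are not part of any particular tracking product.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape of a defect exported from the tracking system.
public class Defect
{
    public int Id { get; set; }
    public string Module { get; set; } = string.Empty;
    public string Cause { get; set; } = string.Empty;
}

public static class DefectReview
{
    // Groups defects by module and returns the modules that have at
    // least 'threshold' defects, largest cluster first.
    public static List<KeyValuePair<string, int>> FindClusters(
        IEnumerable<Defect> defects, int threshold)
    {
        if (defects == null) throw new ArgumentNullException(nameof(defects));

        return defects
            .GroupBy(d => d.Module)
            .Where(g => g.Count() >= threshold)
            .OrderByDescending(g => g.Count())
            .Select(g => new KeyValuePair<string, int>(g.Key, g.Count()))
            .ToList();
    }
}
```

Grouping by `Cause` instead of `Module` would give the same kind of view for causes such as unclear requirements. Either way, the counts are only a starting point for making the case for a better practice.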

In some cases the issues entered in the defect tracking system are the problem. They may lack steps to reproduce the issue. In other cases, the customer cannot provide adequate information and the developers are left guessing what caused the issue. Better practices, such as having the testers write complete and clear defect entries, improve the situation. Better system logging and debugging information is another way to improve the situation.

____________________

3 Classify the nature of the technical debt. See http://martinfowler.com/bliki/TechnicalDebtQuadrant.html.

Retrospective Analysis

A retrospective meeting is a discussion held at the end of an Agile sprint or project iteration. At the end of a project, a retrospective of the entire project is also held. The meeting often covers what worked well and what did not work well. A major goal of these meetings is to find out what could be improved and how to include those improvements in future iterations or projects. One technique for facilitating these meetings is the 4L's Technique.4 This technique concentrates on four topics and the related questions:

- Liked: What did we like about this iteration?

- Learned: What did we learn during this iteration?

- Lacked: What did we need but not have during this iteration?

- Longed for: What did we wish we had during this iteration?

Practice 2-3 Conduct Retrospective Analysis to Identify New and Different Practices

Knowing what was liked and what was learned helps identify new and different practices that worked during the iteration. The things that the team liked are practices worth continuing. The things the team learned usually suggest better practices that the team implicitly or explicitly discovered. Sometimes what is learned is that some practices did not work. Be sure to discontinue those practices. Knowing what was lacking or longed for also suggests practices. The team should work with the retrospective discussion and analysis to define better practices that can apply to future iterations.

Prospective Analysis

Prospective analysis engages the team to imagine and discuss the potential for problems, errors, or failures. This work is done in advance of any significant requirements, design, or testing work. The goal is to think through the possible failure modes and the severity of their consequences. Prospective analysis includes activities such as the following:

- Evaluating requirements to minimize the likelihood of errors

- Recognizing design characteristics that contribute to defects

- Designing system tests that detect and isolate failures

- Identifying, tracking, and managing potential risks throughout development

Practice 2-4 Perform Prospective Analysis to Foresee Potential Problems and Mitigate Them

____________________

4 The 4L's Technique: http://agilemaniac.com/tag/4ls/.

Risk identification and risk management are forms of prospective analysis commonly practiced by project managers. The same type of analysis is appropriate for technical development topics like requirements, design, and testing. This analysis engages the imagination and creative skills of the people involved. Better practices are suggested by anticipating problems and by the effort to prevent them from occurring.

Application Lifecycle Management

The traditional waterfall model of software development consists of distinct and separate phases. This approach has shown itself to be too rigid and too formal. Software applications have characteristics that make them more flexible and more powerful than a purpose-built hardware solution. If, instead of software, the team of engineers is building an airplane, then a waterfall approach is warranted. With hardware development, the extremely disruptive costs of late changes imply the need for the distinct, formal, and separate phases of the waterfall model. The virtue of software is that it is flexible and adaptable, and so, the waterfall model wrecks this responsiveness to change and detracts from software's primary virtue.

Note The choice of life cycle, flexible or rigid, depends on the maturity and criticality of the application domain. Developing software to control a nuclear power plant should follow a waterfall methodology. The laws of physics are not going to change, and control software is a critical component. This domain has a strict set of physical circumstances and regulations that perfectly fits the waterfall methodology. The requirements of a social media site are difficult to formally specify because they are driven by a changing marketplace. An on-the-fly adaptation is needed, such as agile software development, to make sure the team delivers an application that serves the evolving social media landscape.

Let's consider the analogy of hiring a private jet to fly from London to Miami. The passengers have an objective to fly to Miami safely, efficiently, and in comfort. Once hired, the charter company works with the passengers' representative to discuss cost, schedule, and expectations to book the charter. Once booked, the pilot and crew prepare the airplane by planning and performing activities like fueling the plane, filing a flight plan, and stocking the plane with blankets, beverages, and meals. Once in the air, the pilot and crew make adjustments based on new information and changing realities. If the weather is bad, the airplane can fly around it or above it. If the passengers are tired and want to sleep, the cabin is made dark and quiet. The pilot and crew have some trouble abiding fussy and selective passengers, yet they make every effort to provide reasonable accommodations. With a different breed of erratic and demanding passengers, the pilot and crew are in for trouble. These are the high-maintenance passengers who demand that the plane turn around to pick up something they forgot in the limousine but are unwilling to accept any delay. Worse yet, there are passengers who demand an in-flight change of destination from Miami to Rio de Janeiro. Very few charter companies are organized to satisfy the fickle demands of rock stars, world leaders, and eccentric billionaires. Similarly, software applications should be developed in a way that is responsive to relevant adjustments and adapts quickly to new information and changing realities. At the same time, the changes cannot be chaotic, impulsive, whimsical, or unrealistic.

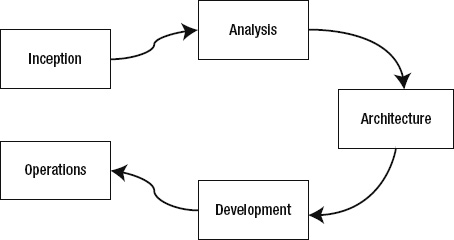

The application lifecycle is a broad set of connected activities that happen throughout the software's lifetime. For the purposes of this discussion, the broadest phases of application lifecycle management (ALM) include:

- Inception: Form the vision, objectives, and core requirements.

- Analysis: Establish the core feature sets and desired behavior for the system.

- Architecture: Create the high-level design and system architecture.

- Development: Detail the functionality, low-level design, code, deploy, and test the system.

- Operations: Monitor the post-delivery system, discover issues, resolve issues, and address system improvements.

These five phases are illustrated in Figure 2-1 (see footnote 5). Do not think of these phases as discrete and separate. This diagram is a conceptual model that shows how the application moves from an idea and vision, to common understanding and primary functionality, through strategy and approach, toward tactics and implementation, and finally into rollout and usage. The activities of each phase do adjust, inform, and change the conclusions and decisions of the previous phase, but they should not render the prior phases entirely pointless and irrelevant. A proper project Inception phase lays the groundwork for an effective Analysis phase. A proper Analysis phase lays the groundwork for an effective Architecture phase. The Development phase proceeds to develop and deploy an effective system that goes into operation. What was accomplished during the Development phase is on the whole a proper solution that is utilized during the Operations phase.

Figure 2-1. The application lifecycle management phases

A great many projects that turn into disasters have fundamental mistakes and misunderstandings rooted in the Inception, Analysis, and Architecture phases. These three phases are important areas to look for new and different practices for overall application development improvement. It is good to enhance the architecture during the Development phase, but there is something wrong when the entire architecture is found to be wrong or inadequate. It is good to adapt to unforeseeable requirement changes during the Development phase, but there is something wrong when a vital, core feature set is overlooked or intentionally played down during the Analysis phase.

____________________

5 Adapted from Grady Booch, Object Solutions: Managing the Object-Oriented Project (Menlo Park, CA: Addison-Wesley, 1996).

Practice 2-5 Look for Systemic Problems and Ways to Improve the Application Lifecycle

Within the Development phase, the activities and disciplines cover many topics from change management to configuration management to release management. For many .NET developers, the important day-to-day activities are in the Development phase. When looking for new and different .NET practices, focus on key principles and the fundamentals of development. All the activities and disciplines connect to the following seven significant aspects of development:

- Requirements

- Design

- Coding

- Testing

- Deployment

- Maintenance

- Project Management

Consider Agile software development: the approach harmonizes and aligns these seven significant aspects of development. It promotes things like collaboration, adaptability, and teamwork across these seven aspects of the Development phase and throughout the application lifecycle.

The Visual Studio product team recognizes the importance of ALM, and Microsoft is doing more to develop tools that support integrating team members, development activities, and operations. In fact, the Microsoft Visual Studio roadmap is centered on the part of application lifecycle management that focuses on the Development and Operations phases.6 Microsoft calls its effort to bring better practices to the application lifecycle Visual Studio vNext. ALM is an important .NET practice area that is showing increasing relevance as teams and organizations continue to find ways to improve.

Patterns and Guidance

Today there are a lot of .NET developers who have excellent experience, good judgment, and common sense. Thankfully, they are writing books and posting to their blogs. Microsoft is clearly interested in having its best and brightest communicate through resources like the Microsoft Developer Network (MSDN). Microsoft has a patterns and practices group that is disseminating guidance, reusable components, and reference applications. A great number of sources offer rich material that points to better .NET practices.

____________________

6 For more information see http://www.microsoft.com/visualstudio/en-us/roadmap.

The goal of this section is to raise your awareness of the places to look and the topics to investigate to round out your .NET practice areas. The section starts with general sources that offer guideline-oriented information, moves on to important patterns, and provides tables with some specific tools.

Framework Design Guidelines

There is a single book that provides so much information on good and bad .NET development practices that it is a must-read book. That book is Framework Design Guidelines: Conventions, Idioms, and Patterns for Reusable .NET Libraries (2nd Edition) by Krzysztof Cwalina and Brad Abrams. The book strives to teach developers the best practices for designing reusable libraries for the Microsoft .NET Framework. However, this book is relevant to anyone developing applications that ought to comply with the .NET Framework best practices. It is very helpful to developers who are new to the .NET Framework and to those unfamiliar with the implications of designing and building frameworks.

Practice 2-6 Discover the Thinking Behind the .NET Framework in the Book Framework Design Guidelines

The guidelines in Framework Design Guidelines present many recommendations, with uncomplicated designations, like the following:

- Do: Something you should always (with rare exceptions) do

- Consider: Something you should generally do, with some justifiable exceptions

- Do not: Something you should almost never do

- Avoid: Something that is generally not a good idea to do

Additionally, a great deal of what is described in Framework Design Guidelines is available online at MSDN, under the topic of “Design Guidelines for Developing Class Libraries.” You will also find additional information on MSDN blog sites of the authors, Krzysztof Cwalina and Brad Abrams.7

Microsoft PnP Group

The Microsoft patterns and practices (PnP) group provides guidance, tools, code, and great information in many forms, including the following:

- Guides: Books that offer recommendations on how to design and develop applications using various Microsoft tools and technologies.

- Enterprise Library: A reusable set of components and core functionality that helps manage important crosscutting concerns, such as caching, encryption, exception handling, logging, and validation.

- Videos, symposiums, and hands-on labs: Many structured and unstructured ways to learn Microsoft technologies, guidance, and the Enterprise Library.

- Reference applications: The Enterprise Library download includes source code and sample applications that demonstrate proper usage.

Enterprise Library (EntLib) includes extremely thorough documentation and source code. It is a very valuable resource for learning better architectural, design, and coding practices.

Practice 2-7 Learn from the Offerings of the Microsoft Patterns and Practices Group

Presentation Layer Design Patterns

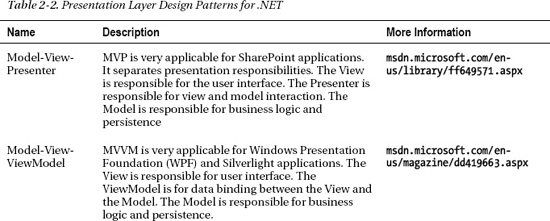

Whether you are building browser-hosted or Windows desktop applications, the presentation layer of the application is often a source of changing requirements and new features. The reason is that this is the surface of the application that the end-user sees and interacts with. It is the human-machine interface.

Developers have long struggled to implement systems that enable user interface changes to be made relatively quickly without negatively impacting other parts of the system. The goal of the Presentation Layer design pattern is to more loosely couple the system, in such a way that the domain model and business logic are not impacted by user interface changes. A great deal of important work has been done to bring better patterns to .NET user interface software. Investigating and adopting these design patterns offers an excellent opportunity to bring better .NET practices to your software development. Table 2-2 provides a list of presentation layer design patterns worth investigating further.

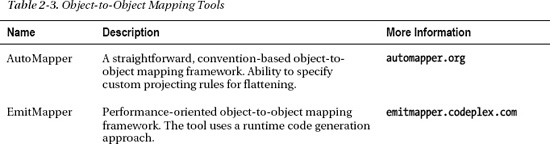

Object-to-Object Mapping

An object-to-object mapping framework is responsible for mapping data from one data object to another. For example, a Windows Communication Foundation (WCF) service might need to map from database entities to data contracts to retrieve data from the database. The traditional way of mapping between data objects is coding up converters that copy the data between the objects. For data-intensive applications, a lot of time and tedious effort is spent writing code to map to and from data objects. There are now a number of generic mapping frameworks available for .NET development that virtually eliminate this effort. Some of the features to look for include

- Simple property mapping

- Complex type mapping

- Bidirectional mapping

- Implicit and explicit mapping

- Recursive and collection mapping

There are many object-to-object mapping tools available. Table 2-3 provides a list of a few object-to-object mapping tools that are worth evaluating.

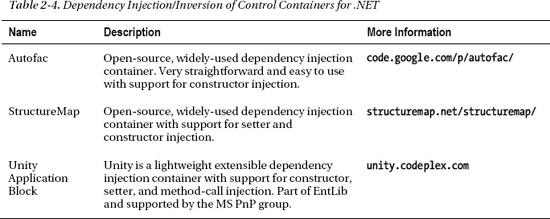
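To make the cost of the traditional approach concrete, here is a minimal sketch of a hand-written converter of the kind a mapping framework is meant to replace. The `CustomerEntity`, `CustomerContract`, and `CustomerConverter` types are hypothetical and exist only for illustration; a real entity and data contract would typically have many more properties, each needing its own line of copying code.

```csharp
using System;

// Hypothetical database entity.
public class CustomerEntity
{
    public int CustomerId { get; set; }
    public string FirstName { get; set; } = string.Empty;
    public string LastName { get; set; } = string.Empty;
    public DateTime CreatedUtc { get; set; }
}

// Hypothetical data contract returned by a service.
public class CustomerContract
{
    public int Id { get; set; }
    public string FullName { get; set; } = string.Empty;
}

// Hand-written converter: every property is copied explicitly, and a
// second method would be needed to map in the other direction.
public static class CustomerConverter
{
    public static CustomerContract ToContract(CustomerEntity entity)
    {
        if (entity == null) throw new ArgumentNullException(nameof(entity));

        return new CustomerContract
        {
            Id = entity.CustomerId,
            FullName = entity.FirstName + " " + entity.LastName
        };
    }
}
```

Multiply this by every entity and every direction of mapping and the appeal of a generic mapping framework becomes clear. When evaluating a tool, a useful exercise is to see how much of a converter like this one it can replace through convention and how much still needs explicit configuration.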

Dependency Injection

The Factory Method pattern is an excellent way to move the logic needed to construct an object out of the class that uses the object. The class calls a factory method that encapsulates any complex processes, restricted resources, or details required to construct the object. What if the Factory pattern could be even easier? The class could define what it needs as constructor arguments, each declared as a specific interface type. Then the class would expect the code that is instantiating the object to pass in instances of classes that implement those interfaces. In other words, the class does not instantiate its external dependencies. The class expects any code that needs the class to instantiate and supply the external dependencies. This is the concept behind dependency injection (DI), specifically constructor injection. This is useful in unit testing, as it is easy to inject a fake implementation of an external dependency into the class-under-test.
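A minimal sketch of constructor injection follows. The `IRateProvider` interface, the `PaymentCalculator` class, and the `FakeRateProvider` class are hypothetical names chosen for illustration; no IoC container is involved, and the dependency is simply passed in by hand.

```csharp
using System;

// Hypothetical external dependency, expressed as an interface.
public interface IRateProvider
{
    decimal GetAnnualRate(string productCode);
}

// The class states what it needs in its constructor and never
// instantiates the dependency itself (constructor injection).
public class PaymentCalculator
{
    private readonly IRateProvider _rateProvider;

    public PaymentCalculator(IRateProvider rateProvider)
    {
        if (rateProvider == null)
            throw new ArgumentNullException(nameof(rateProvider));

        _rateProvider = rateProvider;
    }

    public decimal ComputeAnnualInterest(string productCode, decimal principal)
    {
        return principal * _rateProvider.GetAnnualRate(productCode);
    }
}

// A fake implementation that a unit test can inject in place of a real
// provider (for example, one that calls a database or a service).
public class FakeRateProvider : IRateProvider
{
    public decimal GetAnnualRate(string productCode)
    {
        return 0.05m;
    }
}
```

A test can then construct the class-under-test with `new PaymentCalculator(new FakeRateProvider())` and check that `ComputeAnnualInterest("AUTO", 1000m)` equals `50m`, without touching any real rate source.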

Some of the benefits of DI include

- Improved testability: Providing fake implementations, stubs and mocks, for unit testing in isolation and interaction testing.

- Configuration flexibility: Providing alternative implementations through configuration.

- Lifetime management: Creating dependent objects is centralized and managed.

- Dependencies revealed: With constructor injection a class's dependencies are explicitly stated as arguments to the constructor.

Note Dependency injection does introduce abstraction and indirection that might be unnecessary or confusing to the uninitiated. Take the time to establish DI in a clear and appropriate way.

There are different forms of DI: constructor, setter, and interface injection. The concept of inversion of control (IoC) is closely associated with DI because it is a common way to implement tools that provide DI facilities. IoC commonly refers to frameworks, or “containers”, while DI refers to the strategy. There are a great many IoC containers available for .NET development.8 Table 2-4 provides a list of just a few that are worth evaluating.

____________________

8 For more information see http://elegantcode.com/2009/01/07/ioc-libraries-compared/.

Research and Development

Since 1991 Microsoft Research has performed ground-breaking research in computer science as well as in software and hardware development.9 The Kinect product is a sensational example of the results of their work. There are many other projects with names like CHESS, Code Contracts, Cuzz, Doloto, Gadgeteer, Gargoyle, Pex, and Moles. Information on their work is available at the Microsoft Research and DevLabs websites.10 Some of the research is targeted toward finding solutions to very specific problems while other work has broader applicability. Some of the results have not yet made it out of the lab while other work is now part of the .NET Framework. For the developer looking to apply new and innovative approaches to .NET development, this research work is a great source of information and new .NET practices.

Practice 2-8 Stay on Top of the Innovative Work of Microsoft Research

In this section, the focus is on two specific .NET practice areas that have emerged from their research and development. There are many others and there will surely be more in the future. The purpose of this section is only to give you a taste of the kind of work and to show you how learning about research and development reveals new and different .NET practice areas.

____________________

9 For more information see http://research.microsoft.com.

10 For more information see http://msdn.microsoft.com/en-us/devlabs/.

Automated Test Generation

Writing test code is not easy, but it is very important. Chapter 8 of this book is dedicated to the topic of unit testing and writing effective test code. The challenges in writing test code come from the dilemmas that many projects face:

- There is a lot of untested code and little time to write test code.

- There are missing test cases and scenarios and few good ways to find them.

- Some developers write thorough test code and some do not.

- There is little time to write test code now, and problems that testing could have found waste time later.

One goal of automated test generation is to address these kinds of dilemmas by creating a tool set that automates the process of discovering, planning, and writing test code. Some of these tools take a template approach, where the tool examines the source code or assemblies and uses a template to generate the corresponding test code.11 Another approach focuses on “exploring” the code and generating test code through this exploration.

Practice 2-9 Investigate Automated Test Generation As an Emerging .NET Practice Area

A Microsoft Research project called Pex, which is short for parameterized exploration, takes an exploration approach to generating test code.12 Pex interoperates with another tool known as Moles. It is beyond the scope of this book to delve into the details of Pex and Moles; however, Appendix A provides resources for further investigation. The important idea is that Pex and Moles provide two important capabilities:

- Generate automated test code

- Perform exploratory testing to uncover test cases that reveal improper or unexpected behavior

These two tools, Pex and Moles, are brought together as part of the Visual Studio Power Tools. MSDN subscribers can download Pex for commercial use. Pex works with Visual Studio 2008 or 2010 Professional or higher. The sample code is provided as a high-level look at the code that Pex and Moles produce and to show why these tools are a .NET practice area worth investigating.13

____________________

11I have used the NUnit Test Generator tool from Kellerman Software to generate literally hundreds of useful test method stubs to help write tests against legacy source code. For more information see http://www.kellermansoftware.com/p-30-nunit-test-generator.aspx.

12For more information see http://research.microsoft.com/en-us/projects/pex/downloads.aspx.

13Chapter 8 focuses on current automated testing practices while this section is about the emerging practice of automated test generation. An argument could be made that Pex and Moles belong in Chapter 8. Think of this as a preview of one of the tools and technologies yet to come.

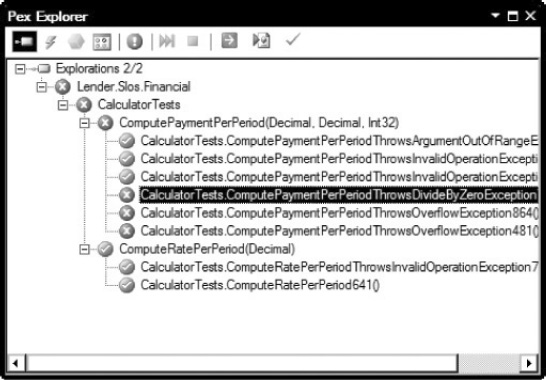

Running Pex to create parameterized unit tests adds a new project named Tests.Pex.Lender.Slos.Financial, which contains the test code that Pex generated. Once generated, the next thing is to select Run Pex Exploration from the project's context menu.

The results of the Pex exploration include three failing test cases; the output is in the Pex Explorer window, as shown in Figure 2-2. One of the test cases is failing because the ComputePaymentPerPeriod method is throwing a divide-by-zero exception. The other two are caused by an overflow exception in that method.

____________________

14For Pex documentation and tutorials see http://research.microsoft.com/en-us/projects/pex/documentation.aspx.

Figure 2-2. Output shown in the Pex Explorer window

These three test cases are failing because the method-under-test is throwing exceptions that are not expected. The code is almost certainly not working as intended, which is the case in this example. There is code missing from ComputePaymentPerPeriod and Pex has revealed it: the method argument ratePerPeriod is not guarded against invalid values with if-then-throw code.

By investigating, debugging, and analyzing the Pex tests, five new test cases, as shown in Listing 2-1, are added to the test code in the Tests.Unit.Lender.Slos.Financial project. With this, the Pex test code has done its job and revealed the unit testing oversight. The Pex-generated code might or might not remain a project of the solution, depending on your situation. As an individual developer you could use Pex and Moles to enhance your unit testing, or as a team leader, you could use Pex and Moles to review the test code of others. The new Pex project could also remain a part of the solution and be pushed to the code repository as a permanent set of automated tests.

In the end, the code in the method-under-test is improved with new if-then-throw statements so that all of these five test cases pass.
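The following is only a sketch of what such an if-then-throw guard might look like. The body of ComputePaymentPerPeriod is not shown in this section, so the lower bound on ratePerPeriod, the treatment of the rate as a percentage per period, and the annuity formula are all assumptions made for illustration; the bound is a placeholder chosen only so that the five rates in Listing 2-1 are rejected.

```csharp
using System;

public static class Calculator
{
    // Assumption: placeholder lower bound, not the book's actual validation rule.
    private const decimal MinimumRatePerPeriod = 1.0m;

    public static decimal ComputePaymentPerPeriod(
        decimal principal,
        decimal ratePerPeriod,
        int termInPeriods)
    {
        // The if-then-throw guard: reject invalid rates before any arithmetic runs,
        // so callers get a clear argument exception rather than a divide-by-zero
        // or overflow from deeper in the calculation.
        if (ratePerPeriod < MinimumRatePerPeriod)
        {
            throw new ArgumentOutOfRangeException(
                "ratePerPeriod",
                ratePerPeriod,
                "The rate per period is below the supported minimum.");
        }

        // Assumption: standard annuity payment formula, with the rate
        // expressed as a percentage per period.
        decimal rate = ratePerPeriod / 100m;
        decimal growth = 1m;
        for (int period = 0; period < termInPeriods; period++)
        {
            growth *= 1m + rate;
        }

        return principal * rate * growth / (growth - 1m);
    }
}
```

Without the guard, a rate of zero in this sketch makes the divisor zero, which is the kind of unexpected exception that the exploration surfaced. A fuller version would guard the other arguments and an upper bound on the rate as well.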

Listing 2-1. Pex and Moles: Discovered Test Cases

[TestCase(7499, 0.0, 113, 72.16)]
[TestCase(7499, 0.0173, 113, 72.16)]
[TestCase(7499, 0.7919, 113, 72.16)]
[TestCase(7499, -0.0173, 113, 72.16)]
[TestCase(7499, -0.7919, 113, 72.16)]
public void ComputePayment_WithInvalidRatePerPeriod_ExpectArgumentOutOfRangeException(
    decimal principal,
    decimal ratePerPeriod,
    int termInPeriods,
    decimal expectedPaymentPerPeriod)
{
    // Arrange
    // Nothing to set up; the inputs arrive as test case arguments.
    // expectedPaymentPerPeriod is unused because the call is expected to throw.

    // Act
    // Wrap the call in a delegate so that NUnit can catch the exception.
    TestDelegate act = () => Calculator.ComputePaymentPerPeriod(
        principal,
        ratePerPeriod,
        termInPeriods);

    // Assert
    Assert.Throws<ArgumentOutOfRangeException>(act);
}

Through this example, it is very clear that Pex and Moles have the power to improve software. They revealed five test cases where invalid values of the argument ratePerPeriod were not being tested. The .NET practice area of automated test generation is emerging as a significant way to develop better test code. Microsoft Research has other projects that you can find within the general topic of Automated Test Generation.15

Code Contracts

Code Contracts is a spin-off from the Spec# project at Microsoft Research.16 Code Contracts is available on DevLabs and is also a part of the .NET Framework in .NET 4.0, which is covered in Chapter 7. Code contracts are very relevant to code correctness and verification.17 The work is related to the programming concept of design-by-contract that focuses on

- Preconditions: Conditions that the method's caller is required to make sure are true when calling the method

- Postconditions: Conditions that the method's implementer ensures are true at all normal exit points of the method

- Object Invariants: A set of conditions that must hold true for an object to be in a valid state

The Contract class in the System.Diagnostics.Contracts namespace provides methods to state preconditions, postconditions, and invariants. Code Contracts is then supported by two tools, the Static Checker and the Rewriter, which turn the Contract method calls into compile-time and runtime checks.
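As a brief illustration of the three kinds of contract, consider the sketch below. The `Account` class is hypothetical and exists only for this example; the calls are the Contract methods in the System.Diagnostics.Contracts namespace. Note that these calls are conditional on the CONTRACTS_FULL compilation symbol, so without the Code Contracts tools installed and enabled they compile away and nothing is checked.

```csharp
using System.Diagnostics.Contracts;

// Hypothetical class used only to illustrate preconditions,
// postconditions, and object invariants.
public class Account
{
    private decimal balance;

    public Account(decimal openingBalance)
    {
        // Precondition: the caller must supply a non-negative opening balance.
        Contract.Requires(openingBalance >= 0m);
        balance = openingBalance;
    }

    public decimal Balance
    {
        get { return balance; }
    }

    public decimal Withdraw(decimal amount)
    {
        // Preconditions: the caller is responsible for making these true.
        Contract.Requires(amount > 0m);
        Contract.Requires(amount <= Balance);

        // Postcondition: the implementer guarantees this at every normal exit.
        Contract.Ensures(Contract.Result<decimal>() == Contract.OldValue(Balance) - amount);

        balance -= amount;
        return balance;
    }

    // Object invariant: must hold whenever the object is visible to callers.
    [ContractInvariantMethod]
    private void ObjectInvariant()
    {
        Contract.Invariant(balance >= 0m);
    }
}
```

The contracts state in code what would otherwise live in comments or in the developer's head, which is what lets a static checker reason about callers and lets a rewriter inject the runtime checks.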

Practice 2-10 Consider Code Contracts to Improve Testability and Software Verification

____________________

15For more information see http://research.microsoft.com/en-us/projects/atg/.

16For more information see http://research.microsoft.com/en-us/projects/specsharp/.

17For a very comprehensive treatment of Code Contracts, see this 14-part series of blog postings: http://devjourney.com/blog/code-contracts-part-1-introduction/.

The primary advantages of using Code Contracts are improved testability, a static checker that verifies contracts at compile-time, and the facility to generate runtime checking using the contracts. Code Contracts is a very powerful .NET practice area and should take off as the new features of .NET 4.0 are more widely employed.

Microsoft Security Development Lifecycle

The Microsoft Security Development Lifecycle (SDL) is a secure application development program developed and instituted at Microsoft. Microsoft has made its approach to developing secure applications available to all developers, and the SDL information and tools are free. The SDL includes a lot of helpful information related to security practices, guidelines, and technologies. Everything is grouped into seven phases:

- Training

- Requirements

- Design

- Implementation

- Verification

- Release

- Response

In Microsoft's internal software development, the SDL techniques resulted in software that is more secure. When a product followed the SDL, the number of security defects was reduced by approximately 50 to 60 percent.18

Practice 2-11 Appreciate Secure Application Development and the Microsoft Security Development Lifecycle

The Microsoft SDL process lists many practice areas. For example, within the Design phase the SDL practice of Threat Modeling is highlighted. Threat modeling is a structured approach to identifying, evaluating, and mitigating risks to system security. This .NET practice area offers sensible ways to anticipate attacks and reveal vulnerabilities. Threat modeling helps you integrate security more fully into your development efforts.

The Verification phase points to the practice area of Fuzz Testing, commonly known as fuzzing. The idea behind fuzzing is straightforward:

- Create malformed or invalid input data.

- Force the application under test to use that data.

- See if the application crashes, hangs, leaks memory, or otherwise behaves badly.

____________________

18Source: Michael Howard, A Look Inside the Security Development Lifecycle at Microsoft available at http://msdn.microsoft.com/en-us/magazine/cc163705.aspx.

Fuzzing finds bugs and security vulnerabilities by trying to exploit problems that exist in the application.19 If there are potential security issues in an application, bad guys will exploit them to impact you and your end-users through denial of service attacks or worse. Fuzzing is a .NET practice area that helps find and fix problems proactively.
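The three steps of fuzzing can be sketched in a few lines of code. Everything here is hypothetical: `RateParser` stands in for the application under test and contains a deliberate defect, and `Fuzzer` is a toy harness, not one of the SDL tools. It only detects unexpected exceptions; hangs and memory leaks need other instrumentation.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical stand-in for the application under test.
public static class RateParser
{
    public static decimal Parse(string text)
    {
        if (text == null) throw new ArgumentNullException("text");

        decimal value;
        if (!decimal.TryParse(text, NumberStyles.Number, CultureInfo.InvariantCulture, out value))
        {
            // Rejecting bad input with a FormatException is the intended behavior.
            throw new FormatException("The text is not a number.");
        }

        // Deliberate defect: a value of zero is not rejected before dividing.
        return 100m / value;
    }
}

// Hypothetical toy fuzzer.
public static class Fuzzer
{
    private const string Alphabet = "0123456789.-+, eE";

    public static List<string> Run(int iterations, int seed)
    {
        var random = new Random(seed);
        var failures = new List<string>();

        for (int i = 0; i < iterations; i++)
        {
            // Step 1: create malformed or invalid input data.
            var chars = new char[random.Next(0, 6)];
            for (int j = 0; j < chars.Length; j++)
            {
                chars[j] = Alphabet[random.Next(Alphabet.Length)];
            }
            string input = new string(chars);

            try
            {
                // Step 2: force the code under test to use that data.
                RateParser.Parse(input);
            }
            catch (FormatException)
            {
                // Expected: the parser rejected the input cleanly.
            }
            catch (Exception ex)
            {
                // Step 3: anything else is behaving badly; record it.
                failures.Add("\"" + input + "\" -> " + ex.GetType().Name);
            }
        }

        return failures;
    }
}
```

Running `Fuzzer.Run` with a few thousand iterations is very likely to record inputs such as "0" that trigger a DivideByZeroException, which a developer can then turn into a permanent test case and a proper guard.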

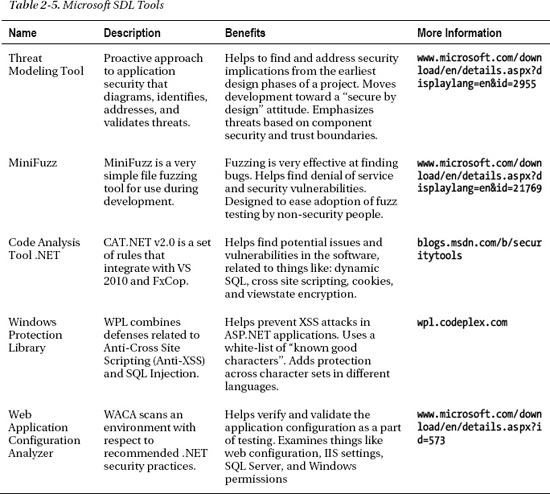

It is important to know how to protect your applications from Cross Site Scripting (XSS), SQL Injection, and other attacks. A list of some of the key tools that the Microsoft SDL offers or is developing is presented in Table 2-5. These tools help in the development of more secure applications, which is an important .NET practice area.

____________________

19For more information see http://blogs.msdn.com/b/sdl/archive/2010/07/07/writing-fuzzable-code.aspx.

Summary

In this chapter you learned about where you might find better .NET practices. Every day, new and different practices are being developed and adopted in areas like secure application development and Microsoft Research. The expertise and experience of the PnP group comes forth in guide books, the Enterprise Library, and the very thorough documentation and reference applications they offer.

Most importantly, you learned that your team and organization have internal sources of better .NET practices. The retrospectives, technical debt, and defect tracking system are rich sources of information that can be turned into better practices.

In the next chapter, you will learn the mantra of achieving desired results. Positive outcomes are the true aim of better practices and the yardstick with which they are measured.