CHAPTER 13

Aversions and Biases

This chapter is about the tendencies of many people to keep better practices from being adopted. As human beings, we see the world, not as it is, but as we perceive it to be. If our eyes and mind could not deceive us then optical illusions would not work.1 To explain the success of optical illusions, there are two basic theories:

- Physiological Illusions: Effects that are based on the manner by which things are sensed, which influences or changes our perceptions.

- Cognitive Illusions: Effects that are based on the manner by which things are judged, thought about, or remembered, which influences or changes our perceptions.

Although physiological illusions are interesting, they are not a topic we will cover in this chapter. It is unlikely that someone is opposing a better practice because they are literally seeing stars. The more relevant topic relates to the mental processes that influence and impact the adoption of better practices. More specifically, there are two ways cognitive perception often thwarts new or different practices:

- Aversion: Reluctance of an individual or group to accept, acknowledge, or adopt a new or different practice as a potentially better practice.

- Bias: Tendency or inclination of an individual or group to hold a particular view of a new or different practice to the detriment of its potential.

In the case of an aversion, the developer or team is not interested in the practice. In the case of a bias, the developer or team does not hold a fair or complete understanding of the practice. Some would argue that an aversion is simply a very strong bias. However, the distinction is important in that people are usually more willing to talk about practices that they are biased against. If there is an unwillingness to consider a change in practice then it is an aversion. If it is ambivalence, skepticism, uneasiness, or worry then there is likely a bias that needs to be overcome. It is important to know how to approach either an aversion or a bias.

Nobody wants to have food they cannot stand shoved down their throat, even if they are told it is “healthy.” People often have an aversion to new or different practices for some reason; ultimately the reason may not turn out to be a good one, but it is still a reason. With the exception of exigent circumstances, such as a life and death situation, the worst way to deal with an aversion is to force the better practice upon the individual or group. In almost all cases, it is best to start by listening. Assume there are good reasons that you do not know about. Focus on finding those reasons. Not surprisingly, many good practices are made better by information held by those who oppose the change in practice. Often they cannot articulate their concerns, and so they have an aversion. You can benefit from knowing what they know or what they are reluctant to tell you. Do not expect to convince them to support the change, but you should fully convince them that you understand and appreciate their concerns.

_____________________

1 This site has a lot of great optical illusions: http://www.123opticalillusions.com/.

In the case of a bias, the way to deal with the bias depends on the strength and nature of the bias. If it is a mild confusion then a brief explanation may be enough to eliminate the bias. Sometimes a little training is all that is needed. Other times you need to make a strong case in a group presentation. The goal is to make a persuasive case by cultivating a change in knowledge, skill, or attitude.

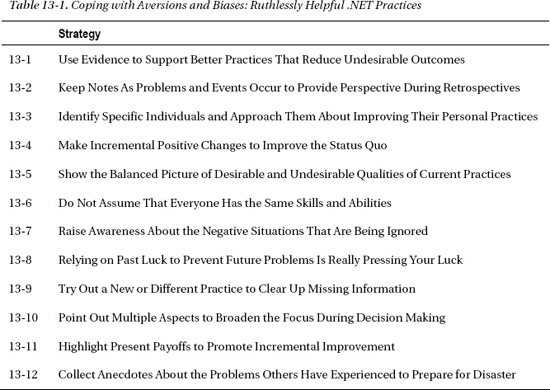

In the broadest sense, this chapter describes cognitive biases. These are observable behaviors that are studied in both cognitive science and social psychology. Experiments have shown that the information that is actually taken in by a person depends on that person's assumptions, aversions, biases, and preconceptions.2 In other words, people cannot always see the evidence that is right in front of them. People tend to perceive what they expect to perceive. This is a bias that impacts people evaluating evidence. Researchers find biases in decision-making, thinking, interacting, and remembering. There are many, many cognitive biases and this chapter cannot provide practices to help you cope with every one of them. Instead, this chapter introduces a dozen aversions and biases and provides practices to help cope with those twelve, which are listed in Table 13-1. The hope is that by understanding how cognitive bias impacts changes in practice, you can learn to identify, cope with, and manage those biases.

_____________________

2 This fascinating book covers many cognitive biases: Richards J Heuer, Psychology of Intelligence Analysis (Washington, DC: Center for the Study of Intelligence, Central Intelligence Agency, 1999).

Group-Serving Bias

There is a natural tendency for people to take credit for successes and distance themselves from failures. For the individual this is called a self-serving bias and for a group it is group-serving bias.3 A development team would like to believe that what the team is doing is leading to success and that the problems and issues are caused by situations or circumstances beyond the control or influence of the team. This bias can inhibit the team from adopting better practices because they would have to first acknowledge that they bear some responsibility for the issues. The message they are resistant to hear is, “We could have done things better.”

For example, a team of developers writes a lot of code under severe schedule pressure, and so there is little or no unit testing before the system is released to the quality assurance (QA) testers. The developers are happy to take credit for their productivity and extraordinary effort. However, the QA testers soon find that the system is not stable and that important functionality is incomplete or incorrect. The system is not working as intended. In this example, the evidence clearly shows that the software was deployed for QA to test before it was ready. At this point, the developers could acknowledge that more unit and integration testing on their part would have found these issues before QA testing began.

If the entire project understood and accepted that the developers were delivering an unstable and incomplete system then the responsibility is shared by the entire project team. If, on the other hand, the developers ignored or misrepresented the situation then the developers must bear the responsibility for releasing the system to QA before it was ready. The reality is often somewhere between these two extremes. The better practice is to not release the software to QA until the developers are convinced that the software is stable enough to test and that there are no significant showstopper issues.

Practice 13-1 Use Evidence to Support Better Practices That Reduce Undesirable Outcomes

There is often clear evidence that mistakes were or are being made and that things could be done better. Better practices are premised on the idea that mistakes can be avoided and that there are better ways to do things. Use that evidence to point to the better practices. In this section's example, the developers could have insisted that they needed time to determine if the system was ready for QA testing. They could have established a set of unit and integration tests. They could have worked with QA to establish the minimum and essential criteria that determine whether the software is ready to be tested. The important practice here is to avoid the self-serving bias and look to the evidence that a better set of practices can lead to better outcomes.

Rosy Retrospection

It is nice to look back on an issue, mistake, or event and believe that it was not as bad as it seemed. People are biased to have a rosy memory and regard the past as being less negative or more positive than it really was.4 When the problem came up, the situation may have been dire, but now that events are seen in retrospect, the problem is minimized. It is understandable that for unpredictable and uncontrollable events this helps people overcome the trauma of the event. Few people want to remember just how bad things were. However, if the event was preventable, this bias is unhelpful. The message is, “In retrospect, the problem was not that bad, so we can get through it if it happens again.”

_____________________

3 For more information see http://en.wikipedia.org/wiki/Self-serving_bias.

4 For more information see http://en.wikipedia.org/wiki/Rosy_retrospection.

This tendency blocks many better practices from being adopted. Continuous integration is a practice that helps to avoid the long and difficult late-integration cycles that many development projects face. Too many developers forget how catastrophic late integration is. Instead of clearly remembering the difficulties involved, developers can rationalize the situation during a retrospective meeting. Late integration is described as an unavoidable obstacle that was overcome and will be overcome in the next iteration or a future project.

Practice 13-2 Keep Notes As Problems and Events Occur to Provide Perspective during Retrospectives

Jot down short notes on the pain-points and problems that are occurring as they are occurring. These notes help during retrospective meetings because they recall the real and serious concerns that underlie the issue. They help make the problems clearer and get people to focus on avoiding the preventable problems of the past by adopting better practices.

Group-Individual Appraisal

In some organizations, a manager scolds an entire department for the action or inaction of one or two individuals. The classic example is an e-mail that reprimands everyone in the organization for not submitting their timesheets on time. Those who submit their timesheets on time ignore the e-mail because it does not apply to them. Ironically, the many who do not turn in their timesheets on time also ignore the e-mail. Individuals are likely to think that nobody in the department is turning in their timesheet on time, and so assume their behavior is the norm. Individuals in the department ultimately attribute the poor appraisal to the entire department, not to themselves.

In the realm of software development, a team leader can gather the team and explain that the defect rate is unacceptably high. The team leader hopes that the one or two developers who are writing buggy code get the message and improve. This rarely works because those individual developers do not see themselves as responsible for the team's high defect rate.

Practice 13-3 Identify Specific Individuals and Approach Them About Improving Their Personal Practices

In this example, it would be better if the team leader talked to the individual developer directly about the unacceptably high defect rate. That developer needs to know that their individual performance is a problem for the team. Letting the developer know that their personal practices are expected to improve offers an opportunity to teach better practices.

Status Quo and System Justification

People become comfortable with the way things are. They like the status quo. Alternative ways of doing things are disparaged.5 The existing state of affairs is familiar and a source of predictability. People have developed habits and have come to know what to do and how to do it. There is a bias toward justifying, defending, and supporting the status quo. Any disruption to the status quo is opposed, sometimes blocked. Some people cannot rationally discuss a new or different practice simply because it represents a change to the way things have always been done. The message is, “Do not introduce change because any change is disruptive and bad.”

Some development environments have become entrenched in their practices and processes. One example is manual deployments. Perhaps a developer copies the files to the target server and changes the configuration settings. All the evidence shows that the manual deployments are error prone because the manual steps are regularly misapplied. Since some developers are careful and some are not, there is a lot of unevenness in the quality and reliability of the deployments. However, this is the way deployments have always been done. Nobody wants to adopt an automated deployment solution, in large part, because it changes the status quo.

Practice 13-4 Make Incremental Positive Changes to Improve the Status Quo

People are afraid that if the status quo is destroyed they will be lost. A better message is one that focuses on bringing forth a slightly better status quo. The new status quo ought to keep all the benefits of the old status quo and introduce a few incremental improvements. In the case of the manual deployments, there could be a command file that copies the files to the target server. The developer still sees that the files go to the proper folders. That command file could also run an MSBuild script that sets all the configuration settings. Again, the developer reviews the values to double-check that the script works properly. The message is that only the tedious and error-prone parts of the task are improved without reducing the developer's involvement. The results from these incrementally better practices are more consistent and reliable deployments.
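The incremental step described above can be sketched in a few lines. This is only an illustration, written in Python rather than as the command file and MSBuild script the text mentions; the function names, the `app.config.txt` file name, and the `key=value` settings format are all hypothetical. The point it tries to show is that the script takes over the copying and the configuration while still returning a log of every action for the developer to review.

```python
import shutil
import tempfile
from pathlib import Path


def plan_deployment(source_dir: Path, target_dir: Path) -> list[tuple[Path, Path]]:
    """List every (source, destination) pair without copying anything."""
    return [
        (src, target_dir / src.relative_to(source_dir))
        for src in sorted(source_dir.rglob("*"))
        if src.is_file()
    ]


def deploy(source_dir: Path, target_dir: Path, settings: dict[str, str],
           config_name: str = "app.config.txt") -> list[str]:
    """Copy the files, write the settings, and return a log to review."""
    target_dir.mkdir(parents=True, exist_ok=True)
    log = []
    for src, dest in plan_deployment(source_dir, target_dir):
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dest)
        log.append(f"copied {src.relative_to(source_dir).as_posix()}")
    # Hypothetical settings format: one sorted key=value pair per line.
    config_lines = [f"{key}={value}" for key, value in sorted(settings.items())]
    (target_dir / config_name).write_text("\n".join(config_lines) + "\n",
                                          encoding="utf-8")
    log.extend(f"set {line}" for line in config_lines)
    return log


if __name__ == "__main__":
    # Demonstration against throwaway folders instead of a real server.
    with tempfile.TemporaryDirectory() as tmp:
        build = Path(tmp) / "build"
        (build / "bin").mkdir(parents=True)
        (build / "bin" / "app.dll").write_text("binary placeholder")
        server = Path(tmp) / "server"
        for line in deploy(build, server, {"Environment": "QA"}):
            print(line)
```

Calling `plan_deployment` on its own lets a cautious developer see where each file would go before anything is copied, which keeps the developer involved in the same way the manual process did.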

Illusory Superiority

Many people like to think that the way they are doing things is just as good as, if not better than, some other way. In other words, there is an overestimation of the desirable qualities and an underestimation of the undesirable qualities, referred to as illusory superiority.6 The message is, “The practice that we follow is superior to another new or different practice.”

An example of this can be seen with regard to automated code analysis. Some team leaders feel that group code reviews are the only appropriate form of code analysis. To be sure, there are many desirable benefits of reviewing the source code as a group. Those benefits are undeniable. However, team code reviews do have undesirable qualities, as well. For one, they take up a significant amount of time. For another, they often do not occur frequently enough or cover enough of the source code. Most significantly, they can get bogged down in topics like coding standards and guidelines that the automated code analysis tools readily identify. In other words, the group covers matters that should already be settled and ends up wasting time remediating preventable problems.

_____________________

5 For more information see http://en.wikipedia.org/wiki/System_justification.

6 For more information see http://en.wikipedia.org/wiki/Illusory_superiority.

The goal is to always keep things in perspective. Current practices should not be justified by ignoring the undesirable qualities of that practice. With manual code reviews, the practice can be enhanced by automated code analysis. For example, prior to the team getting together to review the code, the developer is expected to meet all the coding standards and guidelines that the automated code analysis tool is configured to find. Yes, exceptions may be warranted, and they should be brought to the attention of the group. In this way, the code review does not focus on the mundane and settled issues, but remains focused on the quality of the solution and the implementation of the design. The better practice is to recognize that undesirable qualities of current practices can be improved by new and different practices.

Dunning-Kruger Effect

A person with a lot of experience and expertise can make the assumption that others have the same understanding and skills that they have. This incorrect calibration of what others know is part of the Dunning-Kruger effect.7 Experts can be biased to believe that if they know something then everyone else ought to know it as well. There is a mistaken assumption that their specialized knowledge is common knowledge. This can make them a weak advocate for better practices when faced with opposition or hesitation from others. Their bias leads them to believe that their detractors, who are assumed to be equally informed, have good reasons to oppose them. In many cases, this is simply not true.

For example, a developer who has been unit testing code for ten years has acquired a lot of experience. If they read widely and keep on unit testing then they bring the experience and expertise of others. This aptitude, skill, and knowledge justifies their strongly-held position that unit testing is a beneficial practice. Another less experienced developer, who has limited experience unit testing, might view the practice as unproductive and unhelpful. The experienced advocate wrongly assumes that his or her colleague is equally skilled, and therefore, does not promote the practice of unit testing. An opportunity to benefit from the experience and expertise of the more senior developer is lost.

Practice 13-6 Do Not Assume That Everyone Has the Same Skills and Abilities

Start with the premise that everyone has different experiences, skills, and abilities. Assume that, knowing what you know, they would be likely to support the better practices you advocate. This approach suggests building a proof-of-concept, having a trial period, running a pilot project, or designing a training exercise. The goal is to get everyone closer together on the evidence as to whether the practice is better or not better. The most important thing is to focus on knowledge transfer and better practices through experience and know-how.

_____________________

7 For more information see http://en.wikipedia.org/wiki/Dunning%E2%80%93Kruger_effect.

Ostrich Effect

All too often, people ignore an obviously negative situation instead of dealing with it straight on. Like the proverbial ostrich, they bury their head in the sand to avoid the dire circumstances that surround them.8 The implications of facing the situation may be too painful to deal with and sometimes they hope that by ignoring the situation it will improve on its own. Others may believe that by not recognizing the negative situation they cannot be held accountable for not resolving it. The message is, “If I do not see the problems, I do not have to deal with the problems.”

An example of the ostrich effect in software development is security and threat assessment. Software vulnerabilities are a growing problem; however, all too many developers are not involved enough in dealing with security and minimizing vulnerabilities. Developing systems that are “secure by design” requires that those developers who design and build the software are actively assessing the security of the software. Ignoring security and not worrying about vulnerabilities and threats cannot lead to secure designs. No aspect of the software is improved by ignoring the potential or existing problems that lie within that aspect of software development.

Practice 13-7 Raise Awareness About the Negative Situations That Are Being Ignored

The security development lifecycle is all about thinking through the security implications of decisions at every step of development. One example is the practice of threat modeling. Through this practice the design is considered from a threat vulnerability perspective so that potential negative situations are revealed. With the information that comes from threat modeling, designs can then be improved. In general, directly confronting current and potential negative situations is one of the most effective ways to prevent problems and adopt better practices.

Gambler's Fallacy

To the person who plays the lottery with the same numbers every day there is often the mistaken assumption that their odds improve each time their numbers are not chosen as the winning numbers. The biased belief that past events influence future probabilities is the gambler's fallacy.9 The odds of winning are always the same and are not influenced by past events. Similarly, past luck does not influence future luck. When it comes to resisting new and different practices that prevent problems there can be some of the gambler in all of us. The message is, “We have avoided any problems so far, so let's assume we will continue to avoid problems.”
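The claim that the odds do not improve after a losing streak can be checked by brute force. The sketch below is illustrative only: the function name is hypothetical, and it assumes every draw is independent with the same win probability strictly between 0 and 1. It enumerates every possible history, keeps only the histories that begin with the given number of losses, and computes the exact chance of a win on the next draw.

```python
from fractions import Fraction
from itertools import product


def chance_of_win_after_losses(p_win: Fraction, losses: int) -> Fraction:
    """P(win on the next draw | the previous `losses` draws all lost)."""
    outcomes = {"W": p_win, "L": 1 - p_win}
    matching = Fraction(0)  # probability of: `losses` losses, then anything
    winning = Fraction(0)   # probability of: `losses` losses, then a win
    # Enumerates 2 ** (losses + 1) histories, so keep `losses` small.
    for sequence in product("WL", repeat=losses + 1):
        if any(draw == "W" for draw in sequence[:losses]):
            continue  # keep only histories that start with a losing streak
        probability = Fraction(1)
        for draw in sequence:
            probability *= outcomes[draw]
        matching += probability
        if sequence[-1] == "W":
            winning += probability
    return winning / matching


if __name__ == "__main__":
    odds = Fraction(1, 1000)  # assumed odds, chosen only for illustration
    for streak in (0, 3, 8):
        print(streak, chance_of_win_after_losses(odds, streak))
```

Whatever the length of the losing streak, the function returns the same value it was given as `p_win`, which is the point of the paragraph above: the history cancels out of the calculation.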

This can be seen in software development when important practices are ignored only because problems have not yet occurred. For example, the uninitiated developer may not like version control. They prefer to operate on their own development environment free from the constraints of the version control system. They do not regularly check in their code or get the latest code from the other developers. They rely on the fact that, since they have always been able to manage things this way, they can continue to operate in this way. When their luck runs out and the problems come from late integration, lost changes, or worse problems, it is their unwise decision not to follow best practices that is to blame.

_____________________

8 For more information see http://en.wikipedia.org/wiki/Ostrich_effect.

9 For more information see http://en.wikipedia.org/wiki/Gambler%27s_fallacy.

Direct and unambiguous communication is very important to adopting a better practice. It must be made clear that not following a practice that prevents problems is unwise and unacceptable. Any past luck developers have had while not following the practice needs to be quickly forgotten. The project's gamblers need to stop gambling to ensure that better practices are consistently followed.

Ambiguity Effect

When there is ambiguity or missing information there is a tendency to avoid a direction, practice, or option. It may be a better practice; however, simply because there is not enough information, there is a bias against the potentially better practice.10 People are uncomfortable with ambiguity, but that should not mean a new or different practice is to be avoided. It means the practice needs to be understood, investigated, and appreciated. Once the uncertainty is cleared up then it can be recognized as a helpful practice or discarded as an unhelpful practice.

There is a lot of ambiguity that surrounds unit testing. Some developers avoid unit testing simply because they have more questions than answers. They avoid the practice because they do not know where to start or how to proceed. The same is true for other new and different practices that offer a lot of promise but present a lot of uncertainty as well.

Practice 13-9 Try Out a New or Different Practice to Clear Up Missing Information

Often a little experience or a short tutorial on a better practice can fill in the missing information that is holding back adoption. Other times the team needs to try out the practice for a short period of time just to clear up some vagueness and doubt. This is especially true when their reluctance relates to feasibility and practicality. In the case of unit testing, many developers have found that unit testing is helpful and improves their overall productivity. This is true once they have experienced the benefits of problem prevention and early defect resolution. It is the simple act of trying out a potentially better practice that fills in the missing pieces and convinces the team to adopt the practice.

Focusing Effect

There is a tendency, called the focusing effect, for people to put too much emphasis on one aspect of a problem or event during decision making.11 Often there are many contributing factors that influence things; however, many people search for or focus on one in particular. Since there is a singular focus, this bias can lead to a situation where the important impacts of the other aspects are ignored.

When investigating a significant problem, the focusing effect bias can lead people to search for just one root cause. In actual fact, there may be many contributing factors to the problem. Each of the contributing factors created the conditions or caused the actions that led to the problem. The problem-solving effort ought to lead to more than one recommended change; however, only one aspect is seriously considered. Instead of a set of better practices being selected, only one is selected. Sometimes, no change in practice is recommended because the one aspect that was investigated is an unavoidable aspect. It is better to review all the contributing factors of a problem because a better practice can change the conditions and prevent the problem.

_____________________

10 For more information see http://en.wikipedia.org/wiki/Ambiguity_effect.

11 For more information see http://en.wikipedia.org/wiki/Focusing_effect.

It is usually better to assume that there are multiple reasons that a problem or event occurred. There are usually multiple conditions that existed at the time the problem occurred. There are multiple actions that happened or failed to happen that contributed to the problem. Expand the focus to multiple causes as a way to reveal many more new and different practices that can improve the situation.

Hyperbolic Discounting

Risk and reward are not always considered in a rational way. Add in the element of time delay and the tendency is to favor the immediate reward, even though the delayed reward will be greater. This is the cognitive bias that underlies hyperbolic discounting.12 Given two options that have the same risk, many people prefer a smaller reward that they can realize today rather than wait for a larger reward that comes later on. A payoff today is enjoyed today, but a future payoff requires the person to wait to enjoy it.
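One commonly used way to model this bias is the hyperbolic form V = A / (1 + kD), where A is the reward, D is the delay, and k controls how steeply delayed rewards lose their appeal. The sketch below applies that formula; the function name, the value of `k`, and the reward amounts are assumptions picked for illustration, not figures from this chapter.

```python
def discounted_value(reward: float, delay_days: float, k: float = 0.05) -> float:
    """Perceived present value under the model V = A / (1 + k * D)."""
    if delay_days < 0 or k < 0:
        raise ValueError("delay_days and k must be non-negative")
    return reward / (1 + k * delay_days)


if __name__ == "__main__":
    # A small reward today against a larger reward in 30 days.
    print(discounted_value(50, 0), discounted_value(100, 30))
    # The same pair of rewards, both pushed a year into the future.
    print(discounted_value(50, 365), discounted_value(100, 395))
```

With these assumed numbers the smaller reward is preferred when it is available today, yet the larger reward is preferred when both are a year away. That reversal is characteristic of the hyperbolic model, and it suggests why a practice with a modest payoff today can be easier to promote than one with a larger payoff later.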

This bias applies to better practices through the idea of incremental improvement. In software development, better practices are sometimes not adopted because there are several options, and no one option is a clear favorite. Some take a long time to show improvement and require significant effort to get started, but offer huge potential benefits. Others can show immediate results, but the potential benefits are modest. In these cases, the practice that shows the quickest results could be the one to emphasize. The relatively quick rewards that come from the payoff are very gratifying and support further incremental improvement. The decision-makers appreciate the immediate reward of supporting the change.

Practice 13-11 Highlight Present Payoffs to Promote Incremental Improvement

Take time to consider the many better practices that can be implemented. Some are likely to produce immediate or near-term improvements. Those are the practices that have early payoffs and should be promoted. Adopting better practices is an initiative that is helped by incremental, not radical, changes. As each better practice is put into place the rewards of the previously selected practices help keep the initiative active.

_____________________

12 For more information see http://en.wikipedia.org/wiki/Hyperbolic_discounting.

Normalcy Bias

Too often people do not plan for a problem or event simply because it has never happened to them before. Since they have not had the experience, they are biased against the need to plan for the problem. This is the normalcy bias.13 Most people plan for eventualities that are expected over the normal course of events. On the other hand, there are unexpected and disastrous events that are out of the ordinary, which many people do not prepare for. Some people refuse to plan for a disaster only because they have not experienced the disaster before.

Today, many companies are experiencing problems related to cyber attacks and hacking. However, some development groups refuse to plan or prepare for these attacks and other threats. Unlike the ostrich effect, the danger is not present, and so it is hard to point to a specific problem that is happening or has happened. Today things seem normal and calm, but there is a disaster waiting to happen. The better practice is opposed simply because the problem has never happened to them before.

Practice 13-12 Collect Anecdotes About the Problems Others Have Experienced to Prepare for Disaster

Have you read an article that describes how another organization had to cope with a denial of service attack? The stories range from annoying to very ugly.14 In the news recently, a major corporation sent e-mails to all their customers advising them that their personal information, including credit card information, was compromised. Consider the impact to your organization if it had to let all of its users know that their personal data is now in the hands of an intruder. The horrible experiences of others can be a powerful motivator that counteracts the normalcy bias. Collect the anecdotes and experiences of others who have not followed best practices and use that information to support a change in practices.

Summary

In this chapter you learned about the influence that cognitive biases have upon following or changing better practices. Since software development is significantly impacted by individuals interacting, it stands to reason that how an individual or group perceives and thinks about a new and different practice is very important. Now that you have read about the gambler's fallacy, the Dunning-Kruger effect, and the group-serving bias it should be clear that rational argument alone is frequently insufficient to advance a change in practice. You may need to understand the aversion. You may need to counteract a bias.

_____________________

13 For more information see http://en.wikipedia.org/wiki/Normalcy_bias.

14 Phil Haack describes his experiences at http://haacked.com/archive/2008/08/22/dealing-with-denial-of-service-attacks.aspx.