
Overview of Software Testing

It’s now time to take a look at how test and quality assurance teams can use application lifecycle management ideas to improve their process.

First, we will look at the core elements of traditional software testing: test planning, test design, manual test execution, and bug tracking.

When we know what to test and how to do it, we can start looking at automating our tests.

Finally, when we have automated all or parts of our testing effort, we need a way to integrate the automated tests into our daily work.

But before we dive into the tooling, let’s take a look at some ideas to help us work efficiently with testing in our projects.

Agile Testing

Agile projects are challenging. With a common mindset in which we embrace change and want to work incrementally and iteratively, we have good conditions to deliver what our customer asks for on time.

To get testing to work in an agile environment we need to rethink the testing approach we use. Working with incremental development typically means we need to do lots of regression testing to make sure the features we have developed and tested still continue to work as the product evolves. Iterative development with short cycles often means we must have an efficient test process or else we will spend lots of time in the cycle preparing for testing rather than actually running the tests.

We can solve these problems by carefully designing our tests. This helps us maintain only the tests that actually add value to the product. As the product evolves through increments, so should the tests, and we can choose to add only relevant tests to our regression test suite. To make the testing more efficient, we should automate the tests and include them in our continuous integration scheme to get the most value from them.

We will now look at ideas to help us design our tests, and in the coming chapters we will look at how we can improve our testing process to support testing in an agile context.

Defining Tests

To define tests we need to think about what we want to achieve with the tests. Are we testing requirement coverage? Are we testing to make sure the software performs according to our service level agreement? Are we testing new code or re-testing working software? These and other aspects affect the way we think about tests.

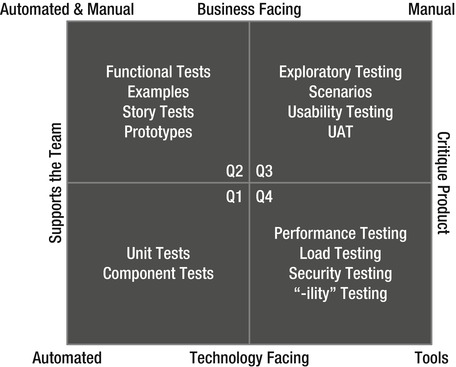

Brian Marick created the model shown in Figure 20-1, which is excellent for reasoning about what kind of tests we should create, when, and for what purpose. Let's take a look at the model and how it can help us define our tests in a suitable way.

Figure 20-1. Agile testing quadrants help define our tests

Q1 – Unit and Component Tests

Unit and component tests are automated tests written to help the team develop software effectively. With good suites of unit and component tests, we have a safety net that lets us develop software incrementally in short iterations without breaking existing functionality. The Q1 tests are also invaluable when refactoring code. With good test coverage, a developer should feel confident making a change without knowing every dependency. The tests should tell us if we did something wrong!
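To make the idea of a Q1 safety net concrete, here is a minimal sketch using Python's standard `unittest` module. The `expense_total` function and its rules (amounts in cents, negative amounts rejected) are hypothetical and exist only for illustration. The chapter's own tooling is Visual Studio, so treat this as a picture of the shape of such tests, not of the tooling.

```python
import unittest


# Hypothetical production code: sums the line items of an expense report.
# Amounts are in cents to avoid floating-point rounding issues.
def expense_total(amounts_in_cents):
    if any(amount < 0 for amount in amounts_in_cents):
        raise ValueError("expense amounts cannot be negative")
    return sum(amounts_in_cents)


class ExpenseTotalTests(unittest.TestCase):
    """Q1-style unit tests: fast, automated, and cheap to run on every change."""

    def test_sums_all_line_items(self):
        self.assertEqual(expense_total([1250, 899, 100]), 2249)

    def test_empty_report_totals_zero(self):
        self.assertEqual(expense_total([]), 0)

    def test_rejects_negative_amounts(self):
        with self.assertRaises(ValueError):
            expense_total([500, -1])
```

Saved in a file such as `test_expenses.py` (a hypothetical name), the suite runs with `python -m unittest`. If a later refactoring changes `expense_total` in a way that breaks these expectations, the failing test points directly at the regression.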

Q2 – Functional Tests

Functional tests are mainly our traditional scripted system tests in different flavors. It is hard to avoid running these tests manually at first, but we should look for ways to automate them as we learn more about our product and how it needs to be tested. Many functional tests can be automated, which frees the manual tester to focus on early testing.

Q3 – Exploratory Testing

Exploratory testing is a form of software testing in which the individual tester designs and runs tests in a freer form. Instead of following detailed test scripts, the tester explores the system under test based on the user stories. As the tester learns how the system behaves, the tester can optimize the testing work and focus more on testing than on documenting the test process.

We should leave this category of tests as manual tests. The focus should be on catching bugs that fall through the net of automated tests. A key motivation for automated testing is to let us do more exploratory and usability testing, because these tests validate how the end user feels when using the product.

We will look more at exploratory testing in Chapter 21 when we look at how we can plan and test using the Microsoft Test Manager product.

Q4 – Capability Testing

Lastly, we have the capability tests. These tests are run against the behavior of the system, covering non-functional requirements such as performance and security. They are generally automated and run using special-purpose tools, such as load test frameworks and security analyzers.

Acceptance Criteria

Acceptance criteria are to testing what user stories are to product owners. Acceptance criteria sharpen the definition of a user story or requirement. We can use acceptance criteria to define what needs to be fulfilled for a product owner to approve a user story.

Chapter 6 looked at the agile planning process and how product management can use user stories to define the product.

With acceptance criteria we have yet another technique to help us refine the stories.

A user story can be stated as simply as:

- As an Employee I want to have an efficient way to manage my expenses.

The conversation around this statement between the product owner and the development team can raise questions such as:

- Who can submit expense reports?

- What states can an expense report be in?

- When is it possible to change or remove an expense report?

- What data is required in an expense report to register it correctly?

- Where are the expense reports stored? For how long?

We use this information to formulate acceptance criteria. Take for instance the question “what states can an expense report be in?” From this we can formulate acceptance criteria such as the following:

- An expense report has the following state model:
  - New when created
  - Pending after being submitted for approval
  - Approved
  - Rejected
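Acceptance criteria like this state model translate naturally into executable checks. The following Python sketch uses a hypothetical `ExpenseReport` class. The transitions New → Pending → Approved/Rejected are inferred from the criteria, and treating Approved and Rejected as final states is an assumption, since the criteria themselves leave that question open.

```python
from enum import Enum


class ExpenseState(Enum):
    NEW = "New"
    PENDING = "Pending"
    APPROVED = "Approved"
    REJECTED = "Rejected"


# Transitions implied by the acceptance criteria. Treating Approved and
# Rejected as final is an ASSUMPTION: whether a rejected report can be
# changed is still an open question for the product owner.
ALLOWED_TRANSITIONS = {
    ExpenseState.NEW: {ExpenseState.PENDING},
    ExpenseState.PENDING: {ExpenseState.APPROVED, ExpenseState.REJECTED},
    ExpenseState.APPROVED: set(),
    ExpenseState.REJECTED: set(),
}


class ExpenseReport:
    """Hypothetical domain class used only to illustrate the criteria."""

    def __init__(self):
        self.state = ExpenseState.NEW  # "New when created"

    def _move_to(self, target):
        if target not in ALLOWED_TRANSITIONS[self.state]:
            raise ValueError(f"cannot move from {self.state.value} to {target.value}")
        self.state = target

    def submit(self):
        self._move_to(ExpenseState.PENDING)  # "Pending after being submitted"

    def approve(self):
        self._move_to(ExpenseState.APPROVED)

    def reject(self):
        self._move_to(ExpenseState.REJECTED)


# One check per acceptance criterion (pytest-style test functions).
def test_new_report_is_new():
    assert ExpenseReport().state is ExpenseState.NEW


def test_submitted_report_is_pending():
    report = ExpenseReport()
    report.submit()
    assert report.state is ExpenseState.PENDING


def test_pending_report_can_be_approved_or_rejected():
    approved, rejected = ExpenseReport(), ExpenseReport()
    approved.submit()
    approved.approve()
    rejected.submit()
    rejected.reject()
    assert approved.state is ExpenseState.APPROVED
    assert rejected.state is ExpenseState.REJECTED


def test_unsubmitted_report_cannot_be_approved():
    report = ExpenseReport()
    try:
        report.approve()
    except ValueError:
        return
    raise AssertionError("approving a New report should fail")
```

Keeping the transitions in a single table means that when the product owner answers an open question (for example, allowing a rejected report to be resubmitted), the change is one entry in `ALLOWED_TRANSITIONS` plus a new check.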

This exercise then leads to more questions for the product owner; for instance, should an employee be able to change an expense report that has been rejected? So having this type of conversation not only helps us know what to test but also helps define the product.

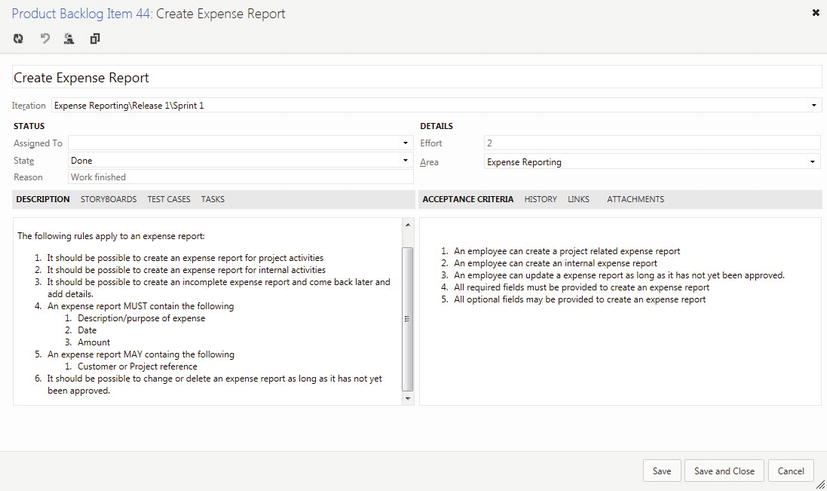

When working with TFS, we can capture all the important information from this process in the product backlog item (PBI) work item. The PBI gives good traceability from the user story (requirement) to its acceptance criteria. Figure 20-2 shows an example of how the Visual Studio Scrum template shows this information side by side.

Figure 20-2. A product backlog item with acceptance criteria

Planning

Test planning typically involves creating a test plan. The test plan captures the requirements for a period of testing and includes information about aspects of the quality assurance process, including:

- Scope of testing

- Schedule

- Resources

- Test deliverables

- Release criteria

- Approvals

The IEEE 829-2008 standard can be used as a reference when creating the test plan documentation structure for a project. We can create this as an overview document with links to the details, either in TFS or in other documents.

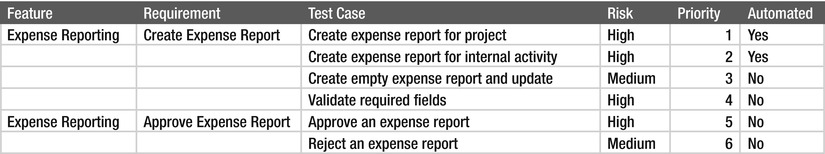

Test Specification and Test Matrix

A big part of a test plan is the list of tests to run during the project. This list is often referred to as the test design specification. The test specification contains the details about which features to test, test cases, grouping of tests into categories, their priorities, and so on. Most of this can be captured in TFS by using product backlog items and test cases, but to get a good overview we should work with a test matrix to summarize the test cases. The test matrix helps us find relevant tests when a requirement is changed, find all tests of a particular priority, or get a list of all automated tests.

The table in Figure 20-3 shows how to define a test matrix. In Chapter 21 we come back to this matrix and look at how we can map this typical table into the test plan in Microsoft Test Manager and use TFS to store our test plans and test cases and at the same time get the metrics automatically calculated for us.

Figure 20-3. Basic test matrix to track test cases with priority and status
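A test matrix is essentially a table of test cases with a few columns to filter on. The Python sketch below models one to show the three queries mentioned above (tests affected by a changed requirement, tests of a given priority, and automated tests) plus a simple pass-rate metric. The column set, the IDs, and the `pass_rate` definition are illustrative assumptions. In the chapter's setup, TFS and Microsoft Test Manager store this data and calculate the metrics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MatrixRow:
    # Illustrative columns only; a real matrix may track more
    # (owner, last run date, test suite, and so on).
    test_id: str
    title: str
    requirement_id: str
    priority: int      # 1 = highest
    automated: bool
    status: str        # "Passed", "Failed", or "Not run"


class TestMatrix:
    def __init__(self, rows):
        self.rows = list(rows)

    def for_requirement(self, requirement_id):
        """Tests to revisit when a requirement changes."""
        return [r for r in self.rows if r.requirement_id == requirement_id]

    def with_priority(self, priority):
        return [r for r in self.rows if r.priority == priority]

    def automated(self):
        return [r for r in self.rows if r.automated]

    def pass_rate(self):
        """Share of executed tests that passed, or None if nothing has run."""
        executed = [r for r in self.rows if r.status != "Not run"]
        if not executed:
            return None
        return sum(r.status == "Passed" for r in executed) / len(executed)


# Hypothetical sample data.
matrix = TestMatrix([
    MatrixRow("TC-1", "Create expense report", "PBI-10", 1, True, "Passed"),
    MatrixRow("TC-2", "Submit report for approval", "PBI-10", 1, False, "Failed"),
    MatrixRow("TC-3", "Export expense history", "PBI-12", 3, True, "Not run"),
])

print([r.test_id for r in matrix.for_requirement("PBI-10")])  # ['TC-1', 'TC-2']
print([r.test_id for r in matrix.automated()])                # ['TC-1', 'TC-3']
print(matrix.pass_rate())                                     # 0.5
```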

Evolving Tests

As part of the agile process, we need to deal with incremental and iterative development of test assets. As the product goes through the specification-design-implementation-release cycle, the test cases need to adapt to this flow as well. Initially we know very little about a new feature, and we typically need to run tests against all acceptance criteria defined for the requirement. When a feature has been completed, we should be confident that it has been tested according to the test cases and that it works as expected. After that, we only need to run tests to validate changes in the requirement, which means we must have a process for knowing which tests to run.

Another side of the agile story is how to speed up the testing process to keep up with short iterations. If we follow the preceding ideas, we have techniques for knowing which tests to run. But running all tests manually will probably not be feasible, so we need to rethink how we design these test cases.

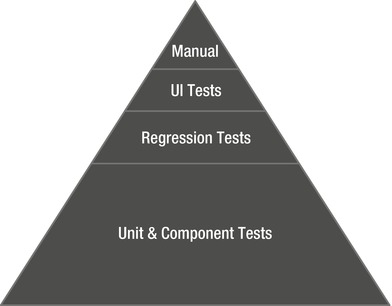

One way to think about how we can structure our test base is as a pyramid. Figure 20-4 shows how the types of tests from the testing quadrants can be proportioned in our specific case.

Figure 20-4. Proportions of types of tests

Typically, unit and component tests make up the biggest part because they are the cheapest to implement and maintain. But these tests do not test the system as a whole, so we need to add regression tests that run end to end as well. Some of the regression tests should be implemented as user interface tests to really simulate how an end user would use the system, but UI tests are more complex to design and maintain, and it is often not practical to have more than a small set of them. Most of these tests can and should be automated to give us an efficient way to keep up with changes in the product.

Strategy for Automated Testing

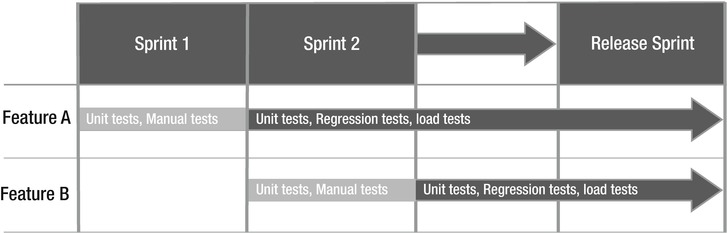

Figure 20-5 shows an approach for evolving tests that we have found practical to use as a model.

Figure 20-5. Strategy for evolving automated tests

The principle here is that when we start with a new requirement we know very little about how the feature behaves and how it needs to be tested. So in order to test it, we typically run manual tests against all acceptance criteria.

When we have verified the new feature and know that it works, we can look at the tests used to achieve this. Based on this knowledge we can do two things: select which test cases to keep as regression tests, and select a set of test cases to implement as automated acceptance tests.

In coming sprints we then run all automated tests as well as the manual regression tests, which should give us confidence in the quality of the product and help us avoid regressions as the software evolves.
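The strategy can be expressed as simple bookkeeping on each test case: after manual verification, the team marks some tests to keep as regression tests and some to automate, and the next sprint's run list follows from those flags. This Python sketch uses hypothetical names (`EvolvingTest`, `review_after_verification`, `next_sprint_run_list`). Choosing which tests to keep or automate remains a team judgment that the code only records.

```python
from dataclasses import dataclass


@dataclass
class EvolvingTest:
    name: str
    automated: bool = False
    regression: bool = False


def review_after_verification(tests, keep_as_regression, to_automate):
    """Record the team's decisions once a new feature has been verified manually.

    keep_as_regression and to_automate are sets of test names picked by the team.
    """
    for test in tests:
        if test.name in keep_as_regression:
            test.regression = True
        if test.name in to_automate:
            test.automated = True


def next_sprint_run_list(tests):
    """All automated tests, plus the manual tests kept for regression."""
    automated = [t.name for t in tests if t.automated]
    manual_regression = [t.name for t in tests if t.regression and not t.automated]
    return automated, manual_regression


# Hypothetical tests written against the expense report acceptance criteria.
tests = [EvolvingTest(n) for n in ("create", "submit", "approve", "reject")]
review_after_verification(tests, keep_as_regression={"reject"}, to_automate={"create", "submit"})
print(next_sprint_run_list(tests))  # (['create', 'submit'], ['reject'])
```

Note that "approve" ends up in neither list: once the feature is verified, not every manual test needs to be carried forward, which keeps the regression effort in proportion to the value it adds.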

If we look at the automated regression tests, we often find that they represent realistic end-user scenarios. Wouldn't it be great if we could take those scenarios and use them for performance testing as well? Well, it turns out we can! By using the load testing capabilities in Visual Studio, we can plug the regression tests into performance test suites. We can also start running the performance tests early in the development process, because the team can have all the tools needed installed and ready for use.
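The idea of reusing a regression scenario under load can be sketched without any load-testing product: run the same scenario from several concurrent "virtual users" and collect timings. This is a plain Python illustration built on `concurrent.futures`, not the Visual Studio load test API. `submit_expense_scenario` is a hypothetical stand-in that only sleeps briefly instead of driving a real system.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def submit_expense_scenario():
    """Hypothetical end-to-end regression scenario.

    A real one would drive the system under test; this stand-in just
    simulates a little work so the sketch runs on its own.
    """
    time.sleep(0.01)
    return True


def run_as_load(scenario, virtual_users, iterations_per_user):
    """Run an existing scenario concurrently and summarize the timings."""

    def one_user(user_index):
        samples = []
        for _ in range(iterations_per_user):
            start = time.perf_counter()
            ok = scenario()
            samples.append((ok, time.perf_counter() - start))
        return samples

    with ThreadPoolExecutor(max_workers=virtual_users) as pool:
        per_user = pool.map(one_user, range(virtual_users))
        results = [sample for samples in per_user for sample in samples]

    durations = [duration for _, duration in results]
    return {
        "runs": len(results),
        "passed": sum(1 for ok, _ in results if ok),
        "mean_seconds": mean(durations),
        "max_seconds": max(durations),
    }


if __name__ == "__main__":
    print(run_as_load(submit_expense_scenario, virtual_users=5, iterations_per_user=4))
```

Because the scenario is passed in as a parameter, the same function that verifies behavior in the regression suite is measured under load unchanged. A real load test would also ramp users up gradually and report percentiles, which this sketch leaves out.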

Platform Support for Testing Practices

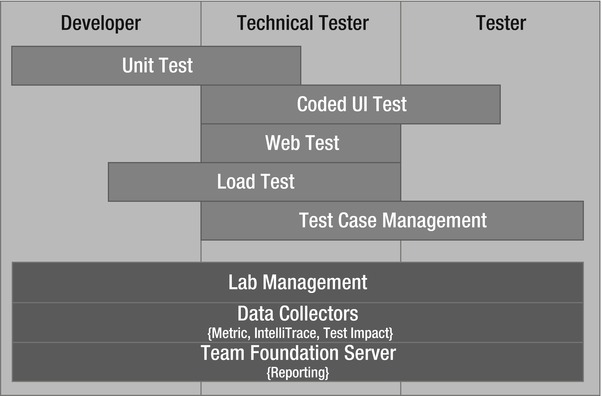

Finally, let's take a look at how the concepts we have covered fit together. Figure 20-6 illustrates how we added testing to the shared ALM platform. The traditional tester focuses on test case management (planning, designing, and running manual tests). The technical tester works on automated tests, building on the initial work from manual testing. Developers create unit tests as part of the development process. Regardless of who does what, we now have a platform for testing, and all of it is available to us as part of the Visual Studio platform!

Figure 20-6. Overview of Microsoft Test Manager and Team Foundation Server

Summary

In this chapter we looked at some of the key concepts in agile testing and how they change the way we test in an agile project. Agile testing requires us to start testing early because we aim to deliver working software in every sprint. This means we need to define tests in parallel with feature development and run the tests again as the software evolves. Acceptance criteria are a powerful technique for sharpening the definition of user stories while putting the foundation for testing the stories in place. To manage this process, we need a solid model for tracking the test cases so that we know when to re-run tests to avoid regressions in our software.

In the following chapter we will look at how we can apply these principles to our testing process using Visual Studio 2012. First we will create the test assets and run manual tests and then we will look at how we can automate the test cases using several different testing tools available in Visual Studio.