6.1. Data Push Essentials

Data pushing, in the context of a web application, means that the server sends data to a browser-based client without the client explicitly requesting it. There are ways to achieve this partially and fully, but before we explore them, let's look at where data pushing matters. Understanding the usage scenarios will help you choose the right alternative and weigh its potential upsides and downsides.

6.1.1. Data Push Usage Scenarios

Financial market data changes continuously as buyers and sellers present their bid and offer prices and trade. If you are building an investment management application, or simply an application that displays market data, you want to receive the latest data snapshot as soon as it's available. In such cases, server-initiated data updates are a good fit.

Online collaboration and chat applications benefit from instant, real-time updates; here, passing messages between the interacting clients is appropriate. Monitoring applications can be more effective if the monitored object sends a status message to the monitor at periodic intervals to report its current state. Online gaming applications need to relay each player's moves to all other participants instantly so that the game stays consistent and relevant.

In all these cases, and many more, data pushing underpins the application's usability and relevance. Next, you'll see the ways to push data.

6.1.2. The Alternatives

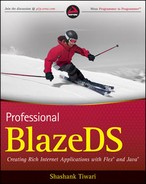

The simplest improvement on the regular request-response model that mimics data pushing is polling. Polling issues repeated request-response calls at a regular, predefined interval. These periodic calls are usually driven by a timer and run over long durations. Polling is resource-intensive and often inefficient, because many calls return no new data. To minimize this overhead, you can reduce the polling frequency. However, a lower frequency means longer intervals between requests and therefore higher latency. Striking a balance between reducing overhead and reducing latency can be difficult and usually requires iterative tuning. Figure 6-1 depicts polling in a diagram.
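The overhead/latency trade-off can be made concrete with a small deterministic simulation. This is a sketch under stated assumptions, not BlazeDS code: the class `PollingSimulation` and its `simulate` method are hypothetical, update times are whole seconds, and each poll delivers every update published since the previous poll.

```java
import java.util.List;

/** Hypothetical simulation of timer-driven polling (not a BlazeDS API). */
public class PollingSimulation {

    /** Summary of one simulated run. */
    public record Result(int totalPolls, int emptyPolls, double averageLatency) {}

    /**
     * Polls at interval, 2*interval, ... up to horizon (inclusive).
     * An update published at time u is delivered by the first poll at or after u.
     */
    public static Result simulate(List<Integer> updateTimes, int interval, int horizon) {
        int totalPolls = 0;
        int emptyPolls = 0;
        long latencySum = 0;
        int delivered = 0;
        int previousPoll = 0;
        for (int t = interval; t <= horizon; t += interval) {
            totalPolls++;
            int found = 0;
            for (int u : updateTimes) {
                // This poll picks up updates that arrived after the previous poll.
                if (u > previousPoll && u <= t) {
                    found++;
                    latencySum += t - u; // time the update sat on the server
                }
            }
            if (found == 0) {
                emptyPolls++; // a wasted round trip
            }
            delivered += found;
            previousPoll = t;
        }
        double avg = delivered == 0 ? 0.0 : (double) latencySum / delivered;
        return new Result(totalPolls, emptyPolls, avg);
    }

    public static void main(String[] args) {
        List<Integer> updates = List.of(3, 17, 18, 41); // sample update times in seconds
        for (int interval : new int[] {1, 5, 10}) {
            Result r = simulate(updates, interval, 60);
            System.out.printf("interval=%2ds polls=%2d empty=%2d avgLatency=%.2fs%n",
                    interval, r.totalPolls(), r.emptyPolls(), r.averageLatency());
        }
    }
}
```

For the sample update times in `main`, a 10-second interval costs 6 polls (3 of them empty) with an average latency of 5.25 s, while a 1-second interval cuts latency to 0 s but needs 60 polls, 56 of them empty.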

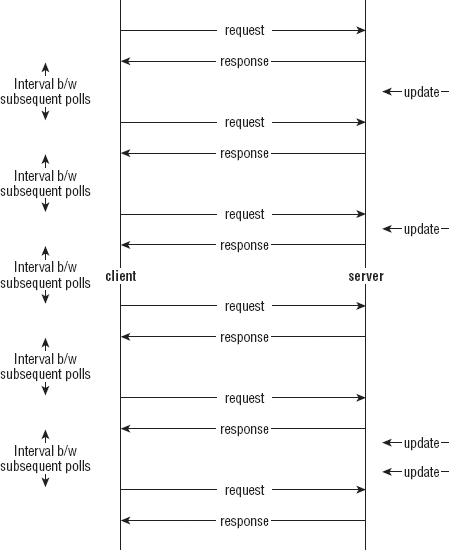

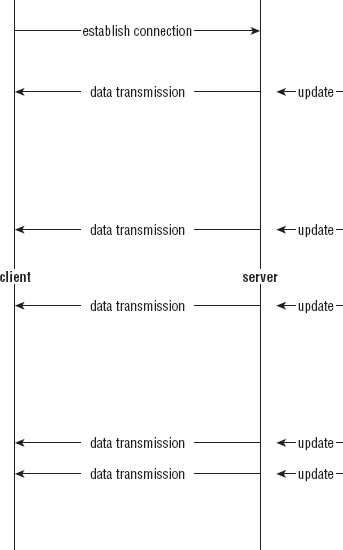

A typical application creates, reads, updates, and deletes data. These four actions are nicely captured in the acronym CRUD. When you poll, you repeat your read requests, but you make separate round trips to the server for create, update, and delete operations. Whenever you perform one of these CUD operations, you could also read data and check for updates. This simple enhancement to plain polling is called piggybacking. Because piggybacking gives you an additional read while you are on a trip to the server anyway, it makes sense to reset the polling timer every time such a piggyback trip occurs. That way, you genuinely avoid some extra trips. Figure 6-2 depicts piggybacking in a diagram.
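The effect of resetting the polling timer can be sketched by counting read-only poll trips with and without piggybacking. The class `PiggybackSimulation` is hypothetical; it assumes write (CUD) trip times are sorted and that every write trip also returns any pending updates.

```java
import java.util.List;

/** Hypothetical comparison of plain polling and piggybacking (not a BlazeDS API). */
public class PiggybackSimulation {

    /**
     * Counts read-only poll trips in (0, horizon].
     * If resetOnWrite is true, each CUD trip also reads updates and restarts the poll timer.
     * writeTimes must be sorted in ascending order.
     */
    public static int countPolls(List<Integer> writeTimes, int interval, int horizon, boolean resetOnWrite) {
        int polls = 0;
        int writeIndex = 0;
        int nextPoll = interval;
        while (nextPoll <= horizon) {
            // A CUD trip before the next scheduled poll carries a piggybacked read.
            if (resetOnWrite && writeIndex < writeTimes.size() && writeTimes.get(writeIndex) < nextPoll) {
                int writeTime = writeTimes.get(writeIndex++);
                nextPoll = writeTime + interval; // restart the timer from this trip
                continue;
            }
            polls++; // a trip made only to read
            nextPoll += interval;
        }
        return polls;
    }

    public static void main(String[] args) {
        List<Integer> writes = List.of(8, 25, 33); // sample CUD trip times in seconds
        System.out.println("plain polling: " + countPolls(writes, 10, 60, false) + " poll trips");
        System.out.println("piggybacking:  " + countPolls(writes, 10, 60, true) + " poll trips");
    }
}
```

With writes at 8, 25, and 33 seconds and a 10-second interval, plain polling makes 6 poll trips over 60 seconds, while piggybacking with timer resets needs only 3.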

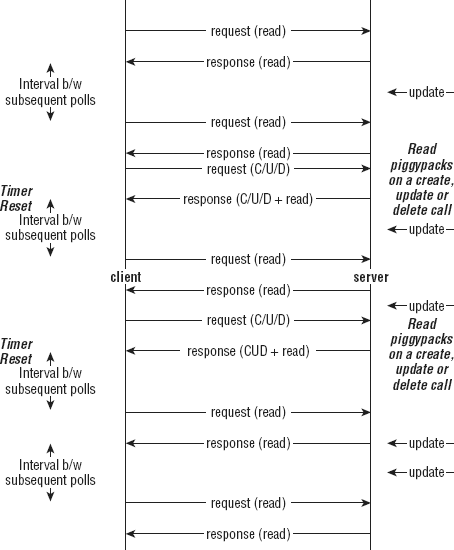

A further enhancement to polling is to wait at the server until an update arrives: make a request, and instead of returning immediately, have the server hold it until there is an update to send. This immediately suggests that resources remain blocked while the request waits. It also means that scaling up could be troublesome, because more concurrent waiting requests require more resources, possibly to the point of exhausting them. Nonetheless, we list this as a possible option. Not every application needs to scale, and sometimes waiting is better than going back and forth. This process of waiting until an update arrives is called long polling. Long polling can reduce latency, because an update is sent as soon as it is available rather than at the next polling interval. Figure 6-3 depicts long polling in a diagram.
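The blocking nature of long polling shows up clearly in plain Java. In this sketch, the hypothetical `LongPollServer` keeps a single `BlockingQueue` of updates (assuming one client), and `handlePoll` ties up the calling thread until an update arrives or the timeout expires. A real server would hold one such thread for every waiting request, which is the scaling problem described above.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

/** Hypothetical long-polling handler: each waiting request blocks a thread. */
public class LongPollServer {
    // Assumption: one queue for a single client; a real server needs one per client.
    private final BlockingQueue<String> updates = new LinkedBlockingQueue<>();

    /** Called by whatever produces data, such as a market feed. */
    public void publish(String update) {
        updates.offer(update);
    }

    /**
     * Handles one long-poll request: the calling thread waits until an update
     * arrives or the timeout expires. Returns null on timeout so the client can re-poll.
     */
    public String handlePoll(long timeoutMillis) throws InterruptedException {
        return updates.poll(timeoutMillis, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) throws Exception {
        LongPollServer server = new LongPollServer();
        Thread publisher = new Thread(() -> {
            try {
                Thread.sleep(200); // the update arrives about 200 ms later
                server.publish("quote-1");
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        publisher.start();
        long start = System.nanoTime();
        String update = server.handlePoll(5_000); // returns when the update arrives, not after 5 s
        long waitedMs = (System.nanoTime() - start) / 1_000_000;
        System.out.println("received " + update + " after ~" + waitedMs + " ms");
        publisher.join();
        System.out.println("timeout result: " + server.handlePoll(100)); // prints "timeout result: null"
    }
}
```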

Figure 6-1. Polling

Beyond long polling, opening a direct connection is another alternative. Direct, socket-like connections provide a pipe through which servers and clients can freely exchange messages. However, opening sockets on the Web can be challenging. Options such as XML sockets, binary sockets, RTMP (Real Time Messaging Protocol), or HTML5 WebSocket are promising. Figure 6-4 shows the direct connection option in a diagram.
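The following sketch shows the essence of a direct connection using plain TCP sockets from `java.net`: once the client connects, the server writes messages whenever it likes, and the client reads them off the same open connection without issuing further requests. It is only an analogy. It uses newline-delimited text rather than XML socket, RTMP, or WebSocket framing, and `SocketPushDemo` is a hypothetical name. Browsers cannot open raw TCP sockets like this, which is why the Flash and WebSocket options mentioned above matter.

```java
import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical demo: a server pushes lines over one open TCP connection. */
public class SocketPushDemo {

    public static List<String> run(List<String> messages) throws Exception {
        // Port 0 asks the OS for any free port on the loopback interface.
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress())) {
            Thread pusher = new Thread(() -> {
                try (Socket client = server.accept();
                     PrintWriter out = new PrintWriter(client.getOutputStream(), true, StandardCharsets.UTF_8)) {
                    for (String m : messages) {
                        out.println(m); // server-initiated: the client never requested this message
                    }
                } catch (Exception e) {
                    throw new RuntimeException(e);
                }
            });
            pusher.start();

            List<String> received = new ArrayList<>();
            try (Socket socket = new Socket(InetAddress.getLoopbackAddress(), server.getLocalPort());
                 BufferedReader in = new BufferedReader(
                         new InputStreamReader(socket.getInputStream(), StandardCharsets.UTF_8))) {
                String line;
                // The same connection carries every push until the server closes it.
                while ((line = in.readLine()) != null) {
                    received.add(line);
                }
            }
            pusher.join();
            return received;
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(run(List.of("tick-1", "tick-2", "tick-3")));
    }
}
```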

Finally, there is a hybrid option between long polling and direct connection. In this case, the connection stays open, as with sockets, but resources are not necessarily blocked. Multiple client connections share a pool of resources: connections waiting for updates are kept in a pending state, and their resources are released back to the pool. "Comet" has been widely discussed as such an option. Figure 6-5 attempts to show a Comet-style interaction in a diagram.
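The key difference from long polling is that a pending request does not hold a thread. The hypothetical `CometHub` below parks each pending request as a `CompletableFuture` and returns immediately; when an update arrives, `publish` completes every parked request. In a servlet container the parked object would typically be an asynchronous request context (for example, Servlet 3.0's `AsyncContext`) rather than a bare future; this sketch leaves out HTTP entirely.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CopyOnWriteArrayList;

/** Hypothetical Comet-style hub: waiting requests are parked, not blocked. */
public class CometHub {
    private final List<CompletableFuture<String>> pending = new CopyOnWriteArrayList<>();

    /** A client request arrives: park it and return at once, freeing the thread. */
    public CompletableFuture<String> subscribe() {
        CompletableFuture<String> response = new CompletableFuture<>();
        pending.add(response);
        return response;
    }

    public int pendingCount() {
        return pending.size();
    }

    /** An update arrives: complete every parked request and remove it from the pending list. */
    public int publish(String update) {
        int delivered = 0;
        for (CompletableFuture<String> response : pending) { // iterates over a snapshot
            if (response.complete(update)) {
                delivered++;
            }
            pending.remove(response);
        }
        return delivered;
    }

    public static void main(String[] args) {
        CometHub hub = new CometHub();
        List<CompletableFuture<String>> clients = new ArrayList<>();
        for (int i = 0; i < 1_000; i++) {
            clients.add(hub.subscribe());
        }
        System.out.println("parked: " + hub.pendingCount()); // prints "parked: 1000"
        int delivered = hub.publish("update-1");
        System.out.println("delivered: " + delivered + ", still parked: " + hub.pendingCount());
        System.out.println("first client got: " + clients.get(0).join()); // prints "first client got: update-1"
    }
}
```

Running `main` parks 1,000 requests without starting any extra threads, then delivers the update to all of them in one pass; each client would then re-subscribe to wait for the next update.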

Figure 6-2. Piggybacking

What is Comet?

Comet (or Reverse Ajax) is a style of communication between a web-based client and a server in which the server initiates data push to the client. Unlike the traditional request-response model, where the client originates every exchange, Comet-based communication involves server-initiated data transmission. Comet therefore improves on traditional polling for updates and scales better than long polling. Comet is still emerging, so although implementations exist from multiple vendors, including Apache, Webtide, and DWR, standards have yet to be established. A standard protocol, called Bayeux, and a common abstraction API, called Atmosphere, seem to be gaining traction and moving toward standardization. Comet emerged in the world of Ajax but has been adopted in other technologies as well; for example, it can be used with Flash Platform-based clients. You can read more about Comet in Alex Russell's seminal article on the topic, "Comet: Low Latency Data for the Browser," available online at http://alex.dojotoolkit.org/2006/03/comet-low-latency-data-for-the-browser/.

Figure 6-3. Long polling

Figure 6-4. Direct connection

Out of the box, BlazeDS supports polling and long polling, and it can be extended to work with Comet. LCDS (LiveCycle Data Services), the commercial alternative to BlazeDS, supports RTMP.

Figure 6-5. Comet-style interaction

XML and binary sockets can be opened to a BlazeDS server, but it is often difficult to open a socket in the reverse direction, from the server to the client, because clients usually lack unique network identifiers. You will learn the details of the available data push options, and of extensions to the standard BlazeDS data push functionality, later in this chapter.

Next, you will learn about messaging domains, an important concept for using real-time messaging effectively in BlazeDS.