Memory management is an essential ingredient of all but the most trivial programs. There are different classifications of memory: registers, the static data area (SDA), stacks, thread local storage (TLS), heaps, virtual memory, and file storage. Registers hold data that requires quick and efficient access. Critical system information, such as the instruction pointer and the stack pointer, is stored in dedicated registers. Static and global values are stored automatically in the SDA. Stacks are thread-specific and hold function-related information: local variables, parameters, return values, the instruction pointer of the calling function, and other function-related information are placed on the stack. There is a stack frame on the call stack for each outstanding function call. TLS is also thread-specific storage. The Win32 TLS table guarantees at least 64 slots (TLS_MINIMUM_AVAILABLE), each holding a pointer-sized value of thread-specific data. TLS slots frequently hold pointers to dynamically allocated memory that belongs to the thread. Heaps contain memory allocated at run time from the virtual memory of an application and are controlled by the heap manager. An application can have more than one heap. Large objects are commonly placed on the heap, whereas small objects are typically placed on the stack. Virtual memory is memory that developers manipulate directly at run time with transparent assistance from the environment. Virtual memory is ideal for collections of disparate-sized data stored in noncontiguous memory, such as a linked list.

Managed applications can directly manipulate the stack, managed heap, and TLS. Other forms of memory, such as registers and virtual memory, are largely unavailable except through interoperability. Local instances of value types and local references (but not the referenced objects) reside on the stack; these are generically called the locals. Referenced objects are found on the managed heap.

The lifetime of a local is defined by scope. When a local goes out of scope, it is removed from the stack. For example, the parameters of a function are removed from the stack when the function returns. The lifetime of an object on the managed heap is controlled by the Garbage Collector (GC), which is a part of the Common Language Runtime (CLR). The GC periodically performs garbage collection to remove unused objects.

The policies and best practices of the GC and garbage collection are the primary focus of this chapter. The memory-management tradition of C-based developers differs somewhat from that of other developers, particularly Microsoft Visual Basic and Java developers. The memory model employed in Visual Basic and Java is superficially similar to the managed environment, whereas the memory model of most C-based languages is quite different. These differences make this chapter especially important to developers with a C background.

Developers in C-based languages are accustomed to deterministic memory management, in which developers explicitly set the lifetime of an object. The malloc/free functions and the new/delete operators create and destroy objects and values that reside on a heap. Managing heap memory with these tools requires a level of programmer discipline that is hard to sustain consistently, which undermines robust code. Memory leaks and other problems are common in C and C++. These leaks can eventually destabilize an application or cause complete application failure. Instead of each developer individually struggling with these issues, the managed environment provides the GC, which is omnipresent and controls the lifetime of objects located on the managed heap.

The GC offers nondeterministic garbage collection. Developers explicitly allocate memory for objects, but the GC determines when garbage collection is performed to clean up unused objects from the managed heap. There is no delete operator in C#.

The new operator requests that an instance of a type (an object) be placed on the managed heap. When memory for the object is allocated at run time, a reference to that object is returned; as mentioned, a local reference is placed on the stack. The reference refers to the object on the managed heap, and the GC tracks every reference. This tracking lets the GC transparently manage the managed heap, including relocating objects and updating the references to them when necessary.

In .NET, the GC removes unused objects from the heap. When is an object unused? Reference counting is not normally performed in the managed environment. Reference counting was common to Component Object Model (COM) components: when the reference count reached zero, the COM component was considered no longer relevant and was removed from memory. This model had many problems. First, it required careful balancing of AddRef and Release calls, and a mismatch could cause memory leaks or exceptions. Second, reference counting was expensive. It was applied to both collectable and noncollectable components, and it was unnecessarily expensive to perform reference counting on objects that were never collected. Finally, programs incurred the overhead of reference counting even when the application was under no memory stress. For these reasons, reference counting was deservedly abandoned for a more efficient model that addresses the memory concerns of modern applications. When there is memory stress in the managed environment, garbage collection occurs and the GC builds an object graph. Objects not on the graph become candidates for collection and are removed from memory sometime in the future.

Unmanaged resources and memory can cause problems.

Some managed types are wrappers for unmanaged resources. A wrapper can have both a managed and an unmanaged memory footprint, which affects memory management and garbage collection. The underlying unmanaged resource of a managed object might consume copious amounts of unmanaged memory, which cannot be ignored. This can create a disparity between the amount of managed memory and the amount of native memory that an object uses. Developers can compensate for this disparity and avoid unpleasant surprises: you cannot hide an unmanaged elephant inside a managed closet.

A managed wrapper class for a bitmap is one example. The wrapper class is relatively small (a thimble), and the memory associated with the managed object is trivial. However, the bitmap is the elephant. A bitmap requires large amounts of memory, which cannot be ignored. If ignored, creating several managed bitmaps could cause your application to be trampled unexpectedly by a stampede of elephants.
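One way a wrapper can keep the GC from ignoring the elephant is to report its unmanaged footprint with GC.AddMemoryPressure and withdraw it with GC.RemoveMemoryPressure. The following minimal sketch, written as a top-level C# program, assumes a hypothetical 10 MB bitmap buffer. It also uses two hypothetical helpers, AllocateTracked and FreeTracked. A real wrapper class would typically add the pressure in its constructor and remove it in Dispose or its finalizer.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical size of the unmanaged "elephant" (for example, bitmap pixels).
const long BitmapBytes = 10 * 1024 * 1024;

// Allocate unmanaged memory and tell the GC about it, so that the small
// managed wrapper (the "thimble") is not treated as a cheap object.
static IntPtr AllocateTracked(long bytes)
{
    IntPtr buffer = Marshal.AllocHGlobal(new IntPtr(bytes));
    GC.AddMemoryPressure(bytes);
    return buffer;
}

// Free the unmanaged memory and remove exactly the pressure that was added.
static void FreeTracked(IntPtr buffer, long bytes)
{
    Marshal.FreeHGlobal(buffer);
    GC.RemoveMemoryPressure(bytes);
}

IntPtr pixels = AllocateTracked(BitmapBytes);
bool allocated = pixels != IntPtr.Zero;
FreeTracked(pixels, BitmapBytes);

Console.WriteLine($"Allocated and released {BitmapBytes} unmanaged bytes: {allocated}");
```

The calls must stay paired. Adding pressure without removing it leaves the GC believing that memory is still in use, which can cause collections that are needlessly frequent.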

Because the release of an unmanaged resource is tied to the lifetime of its managed wrapper, the resource can be held long after it is needed. This can delay the release of sensitive resources, which in turn can result in resource contention, poor responsiveness, or application failure. For example, the FileStream class is a wrapper for a native resource: a physical file. The FileStream instance, which is a managed component, is collected nondeterministically. Therefore, the time between when the file is no longer needed and when the managed component is collected could be substantial. During that time, the open file could block access to it from within and outside your application, even though the file is not being used. When the file is no longer needed, the ability to release it deterministically is imperative. You need the ability to say "Close the file now." The Disposable pattern provides this ability and helps developers manage unmanaged resources.
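The following minimal sketch shows the most common way to say "Close the file now" in C#: the using statement, which calls Dispose on the FileStream when the block exits. The temporary file name is hypothetical. The point is that a second, exclusive open succeeds immediately, without waiting for garbage collection to finalize the first stream.

```csharp
using System;
using System.IO;

// Hypothetical temporary file used only for this demonstration.
string path = Path.Combine(Path.GetTempPath(), $"dispose-demo-{Guid.NewGuid():N}.txt");

// The using statement calls Dispose at the end of the block, which closes
// the underlying file handle deterministically.
using (var stream = new FileStream(path, FileMode.Create, FileAccess.Write, FileShare.None))
{
    stream.WriteByte(42);
} // "Close the file now": Dispose runs here.

// The handle is already released, so an exclusive open succeeds at once.
bool reopened;
using (var again = new FileStream(path, FileMode.Open, FileAccess.Read, FileShare.None))
{
    reopened = again.ReadByte() == 42;
}

File.Delete(path);
Console.WriteLine($"Reopened exclusively after Dispose: {reopened}");
```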

Some unmanaged resources are discrete, meaning that only a limited number of them are available. When consumed in a managed application, discrete resources must be tracked to prevent overconsumption. Overconsumption of unmanaged resources can have an adverse effect on the managed application, including resource contention or an exception being raised. For example, suppose that a device can support three simultaneous connections. What happens when a fourth connection is requested? In the managed environment, you should be able to handle this scenario gracefully.
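One way to handle the fourth request gracefully is to guard the discrete resource with a counting semaphore. This is a sketch of a general technique, not a mechanism prescribed by this chapter. The device, its three-connection limit, and the TryConnect helper are hypothetical. SemaphoreSlim.Wait(TimeSpan.Zero) returns false instead of blocking, so the caller can refuse the extra connection rather than overwhelm the device.

```csharp
using System;
using System.Threading;

// Hypothetical device limit from the example: three simultaneous connections.
const int MaxConnections = 3;
var slots = new SemaphoreSlim(MaxConnections, MaxConnections);

// Try to take a connection slot without blocking; report the failure
// instead of letting the device reject the request or throw.
bool TryConnect(int id)
{
    if (slots.Wait(TimeSpan.Zero))
    {
        Console.WriteLine($"Connection {id} opened.");
        return true;
    }
    Console.WriteLine($"Connection {id} refused: limit of {MaxConnections} reached.");
    return false;
}

bool[] results = new bool[4];
for (int i = 0; i < results.Length; i++)
{
    results[i] = TryConnect(i + 1);
}

// Closing a connection returns its slot, so a later request can succeed.
slots.Release();
bool retried = TryConnect(5);
```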

The topics in this section highlight unmanaged resource management, which can affect proper memory management. For this reason, these topics are discussed throughout this chapter.

This section is an overview of garbage collection. A detailed explanation of the mechanics of garbage collection is presented in Chapter 16. Two assumptions guide the implementation of garbage collection in the managed environment. The first assumption is that objects are more likely to communicate with other objects of a similar size. The second assumption is that smaller objects are short-lived objects, whereas larger objects are long-lived objects. For these reasons, the GC attempts to organize objects based on size and age.

In the managed environment, garbage collection is nondeterministic. With the exception of the GC.Collect method, whose use is discouraged, developers cannot explicitly initiate garbage collection. The managed heap is segmented into Generations 0, 1, and 2. The initial sizes of the generations are about 256 kilobytes (KB), 2 megabytes (MB), and 10 MB, respectively. As the application executes, the GC fine-tunes the thresholds based on the pattern of memory allocations. Garbage collection is prompted when the threshold for Generation 0 is exceeded. At that time, objects that can be discarded are removed from memory. Objects that survive garbage collection are promoted from Generation 0 to 1. The generations then are compacted and references are updated. If Generation 0 and Generation 1 exceed their thresholds during garbage collection, both generations are collected. Surviving objects are promoted from Generation 1 to Generation 2 and from Generation 0 to Generation 1. In fewer instances, all three generations will exceed thresholds and require collection. The later generations typically contain larger objects, which live longer. Because the short-lived objects reside primarily on Generation 0, most garbage collection is focused in this generation. Generations allow garbage collection to implement a partial cleanup of the managed heap at a substantial performance benefit.
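The following sketch makes promotion visible. It uses GC.GetGeneration to report the generation of an object that stays referenced across forced collections. GC.Collect is called here for demonstration only, which matches the advice above. The exact generation numbers after each collection are decided by the GC, so the sketch prints them rather than promising specific values.

```csharp
using System;

// A freshly allocated small object normally starts in Generation 0.
var survivor = new object();
int before = GC.GetGeneration(survivor);

// Force collections (demonstration only). 'survivor' is still referenced,
// so it survives each collection and is typically promoted.
GC.Collect();
int afterOne = GC.GetGeneration(survivor);

GC.Collect();
int afterTwo = GC.GetGeneration(survivor);

Console.WriteLine($"Generation: {before} -> {afterOne} -> {afterTwo} (max {GC.MaxGeneration})");
Console.WriteLine($"Generation 0 collections so far: {GC.CollectionCount(0)}");

// Keep the object reachable until this point.
GC.KeepAlive(survivor);
```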

Objects of 85,000 bytes or larger (roughly 85 KB) are considered large, and they are treated differently from other objects. Large objects are placed on the large object heap, not in a generation. Large objects are generally long-lived. Placing large objects on the large object heap eliminates the need to promote these objects between generations, thereby conserving resources and reducing the number of overall collection cycles. The large object heap is collected with Generation 2, so large objects are collected only during a full garbage-collection cycle.
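The threshold can be observed with GC.GetGeneration. In this sketch, an array just below 85,000 bytes lands on the small object heap and starts young. An array at the threshold is placed on the large object heap, and the runtime reports it as belonging to the oldest generation. The array sizes are arbitrary choices on either side of the threshold. The reported numbers are printed rather than stated, because they are runtime details.

```csharp
using System;

// The large-object threshold is 85,000 bytes of object size, including
// the array header, which the text rounds to 85 KB.
byte[] nearLarge = new byte[80_000];  // below the threshold: small object heap
byte[] large = new byte[85_000];      // at or above the threshold: large object heap

int nearLargeGen = GC.GetGeneration(nearLarge);
int largeGen = GC.GetGeneration(large);

// Large objects are reported in the oldest generation because the large
// object heap is collected together with Generation 2.
Console.WriteLine($"80,000-byte array: generation {nearLargeGen}");
Console.WriteLine($"85,000-byte array: generation {largeGen} (max {GC.MaxGeneration})");
```

The same check can confirm the padding technique described later: a near-large buffer padded past the threshold should report the oldest generation immediately.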

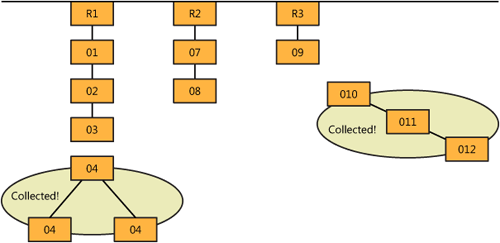

When garbage collection is performed, the GC builds a graph of live objects, called an object graph, to determine which objects are not rooted and can be discarded. First, the GC populates the object graph with root references. The root references of an application are global, static, and local references; local references include function variables and parameters. The GC then adds to the graph the objects reachable from a root reference. An object referenced by a field of a local is an example of an object reachable from a root reference, and that object could in turn reference other objects. The GC follows all the reachable objects to compose the branches of the object graph. Each object appears only once in the object graph, so circular references cannot cause endless traversal or related problems. Objects not in the graph are not rooted and are candidates for garbage collection.
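The reachability walk can be modeled in a few lines of C#. This is a simplified, hypothetical model, not the CLR's implementation. String names stand in for objects, a dictionary stands in for the managed heap, and the visited set plays the role of "each object appears only once." The names form, branch, leaf, leaf2, orphanA, and orphanB are illustrative only.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical heap: each object name maps to the names it references.
var heap = new Dictionary<string, string[]>
{
    ["form"] = new[] { "branch" },
    ["branch"] = new[] { "leaf" },
    ["leaf"] = new[] { "leaf2" },
    ["leaf2"] = Array.Empty<string>(),
    ["orphanA"] = new[] { "orphanB" },  // an unreachable cycle
    ["orphanB"] = new[] { "orphanA" },
};
string[] roots = { "form" };  // stands in for static, global, and local references

// Walk from the roots. graph.Add returns false for an object already in
// the graph, so each object is visited once and cycles terminate.
HashSet<string> Mark(IEnumerable<string> rootSet)
{
    var graph = new HashSet<string>();
    var pending = new Stack<string>(rootSet);
    while (pending.Count > 0)
    {
        string current = pending.Pop();
        if (!graph.Add(current)) continue;  // already in the graph
        foreach (string child in heap[current])
            pending.Push(child);
    }
    return graph;
}

HashSet<string> live = Mark(roots);

// Everything not in the object graph is a candidate for collection.
var candidates = new List<string>();
foreach (string name in heap.Keys)
    if (!live.Contains(name)) candidates.Add(name);

Console.WriteLine("Collection candidates: " + string.Join(", ", candidates));
```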

Objects that are not rooted can still hold references to other objects. In this circumstance, both the object and the objects that it references (unless they are rooted elsewhere) can be collected. (See Figure 17-1.)
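The sketch below contrasts this with the reference-counting problems described earlier. Two arrays that reference each other are left unrooted, so only a WeakReference (which does not root its target) observes them. Under reference counting, each array would keep the other's count above zero. Under reachability, the cycle is simply absent from the object graph. The helper name CreateUnrootedCycle is hypothetical. NoInlining keeps the arrays confined to the helper's stack frame, and GC.Collect is used only to make the result observable.

```csharp
using System;
using System.Runtime.CompilerServices;

// Build two objects that reference each other and return only a weak
// reference, so no root leads to either object after the call.
[MethodImpl(MethodImplOptions.NoInlining)]
static WeakReference CreateUnrootedCycle()
{
    var first = new object[1];
    var second = new object[1];
    first[0] = second;   // first -> second
    second[0] = first;   // second -> first
    return new WeakReference(first);
}

WeakReference cycle = CreateUnrootedCycle();

// Forcing a collection is for demonstration only.
GC.Collect();
GC.WaitForPendingFinalizers();
GC.Collect();

Console.WriteLine($"Cycle still alive: {cycle.IsAlive}");
```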

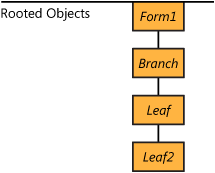

The Rooted application demonstrates how non-rooted objects are collected. In the application, the Branch class contains a Leaf field, and the Leaf class contains a Leaf2 field. In the Form1 class, an instance of the Branch class is defined as a field. When an instance of the Branch class is created, it is rooted through the Form1 instance, and the Leaf and Leaf2 instances are rooted through the Branch field. (See Figure 17-2.)

In the following code, a modal message box is displayed in the finalizer. This is done here for demonstration purposes only—it generally is considered bad practice:

public class Branch {

~Branch() {

MessageBox.Show("Branch destructor");

}

public Leaf e = new Leaf();

}

public class Leaf {

~Leaf() {

MessageBox.Show("Leaf destructor");

}

public Leaf2 e2 = new Leaf2();

}

public class Leaf2 {

~Leaf2() {

MessageBox.Show("Leaf2 destructor");

}

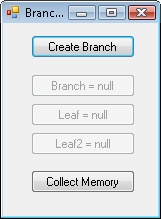

}The user interface of the Rooted application allows users to create the Branch, which includes Leaf instances. (See Figure 17-3.) You then can set the Branch or any specific Leaf to null, which interrupts the path to the root object. That nullified object is collectable. Objects that are rooted through the nullified object are also now collectable in this application. For example, if the Leaf instance is set to null, the Branch instance is not collectable; it is before the Leaf reference in the object graph. However, any Leaf2 object belonging to the Leaf is immediately a candidate for collection. After setting the Branch or Leaf objects to null, garbage collection can occur. If it doesn’t occur, click the Collect Memory button in the application to force garbage collection.
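Without the user interface, the same experiment can be approximated in a short console sketch. Plain arrays stand in for hypothetical Branch, Leaf, and Leaf2 instances, and a strong local variable plays the role of the Form1 field. WeakReference objects report whether the Leaf and Leaf2 survive after the path from the root is cut. The helper name BuildTree is hypothetical, and GC.Collect is used only to make the outcome observable.

```csharp
using System;
using System.Runtime.CompilerServices;

// branch -> leaf -> leaf2; only the branch is returned as a strong reference.
[MethodImpl(MethodImplOptions.NoInlining)]
static (object?[] Branch, WeakReference Leaf, WeakReference Leaf2) BuildTree()
{
    var leaf2 = new object();
    var leaf = new object[] { leaf2 };
    var branch = new object?[] { leaf };
    return (branch, new WeakReference(leaf), new WeakReference(leaf2));
}

var (branch, leafRef, leaf2Ref) = BuildTree();

// Equivalent of setting the Leaf field to null: the path from the root
// to the Leaf, and therefore to its Leaf2, is interrupted.
branch[0] = null;

GC.Collect();
GC.WaitForPendingFinalizers();
GC.Collect();

bool leafCollected = !leafRef.IsAlive;
bool leaf2Collected = !leaf2Ref.IsAlive;
Console.WriteLine($"Leaf collected: {leafCollected}, Leaf2 collected: {leaf2Collected}");

// The branch is still rooted by a local, so it survives.
GC.KeepAlive(branch);
```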

As mentioned, garbage collection occurs when the memory threshold of Generation 0 is exceeded. Other events, which are described in the following list, can prompt garbage collection:

- Frequent allocations can accelerate garbage collection cycles by stressing the memory generations.
- Garbage collection is conducted when the memory threshold of any generation is exceeded. The GC.AddMemoryPressure method artificially applies pressure to the managed heap to account for the memory footprint of an unmanaged resource.
- Garbage collection is triggered when the handle limit for an unmanaged resource is reached. The HandleCollector class sets limits for handles.
- Garbage collection can be forced with the GC.Collect method. This is not recommended because forcing garbage collection is expensive. Nonetheless, it is sometimes necessary.
- Garbage collection also occurs when overall system memory is low.
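The HandleCollector item can be illustrated with a brief sketch. The handle-type name "DeviceHandles" and the thresholds are hypothetical. In a real wrapper, Add and Remove would be called wherever the native handle is acquired and released. Add may trigger a garbage collection once the count passes the configured thresholds.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical handle type: collections may begin once 2 handles are live
// (initialThreshold) and must occur once 3 are live (maximumThreshold).
var collector = new HandleCollector("DeviceHandles", 2, 3);

// Report each unmanaged handle as it is created...
for (int i = 0; i < 3; i++)
{
    collector.Add();   // may trigger a garbage collection past the thresholds
}
int openHandles = collector.Count;

// ...and report each release, so that the count tracks the live handles.
for (int i = 0; i < 3; i++)
{
    collector.Remove();
}

Console.WriteLine($"Peak: {openHandles}, now: {collector.Count} " +
                  $"(thresholds {collector.InitialThreshold}/{collector.MaximumThreshold})");
```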

Certain suppositions are made for garbage collection in the managed environment. For example, small objects are generally short-lived. Coding contrary to these assumptions can be costly. Although it makes for an interesting theoretical experiment, this is not recommended for production applications. Defining a basket of short-lived but larger objects is an example of coding against assumptions of managed garbage collection, which would force frequent and full collections. Full collections are particularly expensive. Defining a collection of near-large objects—objects that are slightly less than 85 KB—is another example. These objects would apply immediate and significant memory pressure. Because they are probably long-lived, you have the overhead of eventually promoting the near-large objects to Generation 2. It would be more efficient to pad the near-large objects with a buffer, forcing them into large object status, in which the objects are directly placed onto the large object heap. You must remain cognizant of the underlying principle of garbage collection: Implement policies that enhance, rather than exacerbate, garbage collection in the managed environment.

One such policy is to limit boxing. Constant boxing of value types can trigger more frequent garbage collection. Boxing creates a copy of the value type on the managed heap. Most value types are small, and the resulting boxed object, which carries an object header and type pointer, is larger than the original value. This is yet another reason that boxing is inefficient and should be avoided when possible. Boxing is a particular problem with nongeneric collection types, such as ArrayList, which store their elements as object. The best solution is to use generic types, as described in Chapter 7.
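The cost of boxing in a nongeneric collection can be measured directly. This sketch assumes .NET Core 3.0 or later, where GC.GetAllocatedBytesForCurrentThread is available. It compares the managed bytes allocated while adding the same integers to an ArrayList and to a List&lt;int&gt;. Absolute byte counts depend on the platform, so they are printed rather than predicted. The element count and the MeasureAllocations helper are hypothetical choices for illustration.

```csharp
using System;
using System.Collections;
using System.Collections.Generic;

const int Count = 10_000;

// Managed bytes allocated on the current thread while running 'work'.
static long MeasureAllocations(Action work)
{
    long before = GC.GetAllocatedBytesForCurrentThread();
    work();
    return GC.GetAllocatedBytesForCurrentThread() - before;
}

// Capacity is preallocated so that growth of the backing arrays is not measured.
var nonGeneric = new ArrayList(Count);
var generic = new List<int>(Count);

// ArrayList stores object, so every int is boxed into a new heap object.
long boxedBytes = MeasureAllocations(() => { for (int i = 0; i < Count; i++) nonGeneric.Add(i); });

// List<int> stores the values inline in its int[]; no boxing occurs.
long genericBytes = MeasureAllocations(() => { for (int i = 0; i < Count; i++) generic.Add(i); });

Console.WriteLine($"ArrayList: {boxedBytes} bytes, List<int>: {genericBytes} bytes");
```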

Finalization is discussed in complete detail in the section titled "Finalizers" later in this chapter.

There are two flavors of garbage collection: Workstation GC is optimized for a workstation or single-processor system, whereas Server GC is fine-tuned for a server machine that has multiple processors. Workstation GC is the default; Server GC is never the default, even on a multiprocessor system. Server GC can be enabled in the application configuration file. If your application is hosted by a server application, that application might be preconfigured for Server GC, and your application then will execute with Server GC.

Workstation GC can have concurrent garbage collection enabled or disabled. With concurrent garbage collection, much of a full collection runs on a dedicated background thread while the application's threads continue to run, so the application can keep responding to user interface events during garbage collection. The alternative is non-concurrent garbage collection: when garbage collection is performed, other responsibilities, such as servicing the user interface, are deferred.

Workstation GC with concurrent garbage collection is the default. It is ideal for desktop applications, where the user interface must remain responsive: when garbage collection occurs, it does not monopolize the user interface. Concurrency applies only to full garbage collection, which involves the collection of Generation 2. When Generation 2 is collected, Generation 0 and Generation 1 also are collected. Partial garbage collection of Generation 0 or Generation 1 is quick, so its potential impact on the user interface is minimal and concurrent garbage collection is not merited.

The following are the garbage collection steps for Workstation GC with concurrent processing:

1. A thread performs an allocation that triggers garbage collection.
2. All managed threads are paused.
3. Garbage collection occurs. If garbage collection is completed, proceed to step 5.
4. Interrupt garbage collection. Resume threads for a short time to respond to user interface requests. Return to step 3.
5. Resume threads.

In Workstation GC with concurrent garbage collection, threads are suspended at a safe point. The CLR maintains a table of safe points for use during garbage collection. If a thread is not at a safe point, it is hijacked until a safe point is reached or the current function returns, and then the thread is suspended.

Workstation GC without concurrent garbage collection is selected when Server GC is chosen on a single-processor machine. With this option, priority is placed on garbage collection rather than on the user interface.

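Here are the garbage collection steps for Workstation GC without concurrent processing:

1. A thread performs an allocation that triggers garbage collection.
2. All managed threads are paused.
3. Garbage collection occurs.
4. Resume threads.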

Server GC is designed for multiprocessor machines that are commonly deployed as servers, such as Web, application, and database servers. In the server environment, the emphasis is on throughput rather than the user interface. Server GC is fine-tuned for scalability. For optimum scalability, garbage collection is not handled on a single thread: Server GC allocates a separate managed heap and garbage collection thread for every processor.

Here are the garbage collection steps with Server GC:

Concurrent garbage collection for Workstation GC can be enabled or disabled in an application configuration file. Because concurrent is the default, the configuration file is used primarily to disable concurrent garbage collection. This is set with the gcConcurrent element, as shown in the following code:

```xml
<configuration>
  <runtime>
    <gcConcurrent enabled="false"/>
  </runtime>
</configuration>
```

When needed, Server GC is also specified in the application configuration file. Remember that Server GC is never the default, even in a multiprocessor environment. Select Server GC with the gcServer element, as demonstrated in the following code:

```xml
<configuration>
  <runtime>
    <gcServer enabled="true"/>
  </runtime>
</configuration>
```
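To confirm which settings took effect, an application can query the GCSettings class at run time. This is a small diagnostic sketch. GCSettings.IsServerGC reports whether Server GC is active. According to the GCLatencyMode documentation, the Interactive latency mode indicates that concurrent collection is enabled, and Batch indicates that it is disabled.

```csharp
using System;
using System.Runtime;

// Report the garbage collector flavor the runtime actually selected,
// for example after editing gcServer or gcConcurrent in the configuration file.
bool isServer = GCSettings.IsServerGC;
GCLatencyMode mode = GCSettings.LatencyMode;  // Interactive: concurrent; Batch: non-concurrent

string flavor = isServer ? "Server GC" : "Workstation GC";
Console.WriteLine($"{flavor}, latency mode: {mode}, processors: {Environment.ProcessorCount}");
```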